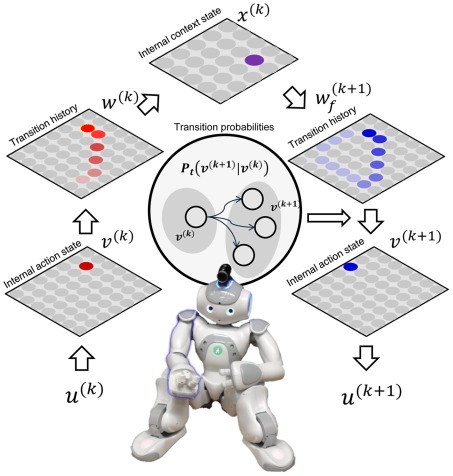

Figure 1.

Structure of the motor action learning and reproduction system. The current action feature vector u(k) is reduced to the internal action state v(k). From the recent history w(k) of internal action states (bright red marks the most recently activated neuron), we evaluate the most probable current action sequence state x(k). An action sequence state stores the probability of each action state within a sequence (in w(k + 1), the bright blue node marks a more likely action state). Combining this top-down probability with the immediate probability of the next action state (Pt) gives the posterior probabilities of the incoming action state v(k + 1). Through the first SOFM layer, this action state is mapped back to the action feature vector u(k + 1), and the robot generates the action that u(k + 1) represents.
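The inference loop in Figure 1 can be illustrated with a minimal sketch. It is not the authors' implementation and makes several assumptions that the caption does not state: the first SOFM layer is treated as a plain codebook (one weight vector per action state), so reducing u(k) to v(k) means picking the best-matching node; each sequence state x stores a vector of probabilities P(v | x); Pt is a first-order transition table Pt(v(k + 1) | v(k)); x(k) is chosen by a log-likelihood score over the recent history; and the posterior is taken as the normalized product of the top-down and bottom-up terms. All function and parameter names are hypothetical.

```python
import math


def best_matching_unit(u, codebook):
    """Reduce feature vector u to v(k): the index of the nearest SOFM node (squared Euclidean)."""
    return min(range(len(codebook)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(u, codebook[i])))


def most_probable_sequence(history, seq_prob, eps=1e-9):
    """Pick x(k): the sequence state whose P(v | x) best explains the recent history.

    Assumed scoring rule: sum of log P(v | x) over the action states in the history.
    """
    return max(range(len(seq_prob)),
               key=lambda x: sum(math.log(seq_prob[x][v] + eps) for v in history))


def posterior_next_state(v, x, seq_prob, trans_prob):
    """Posterior over v(k + 1): top-down P(v' | x) times bottom-up Pt(v' | v), normalized."""
    scores = [seq_prob[x][j] * trans_prob[v][j] for j in range(len(trans_prob[v]))]
    total = sum(scores)
    if total == 0:
        raise ValueError("no next action state is supported by both models")
    return [s / total for s in scores]


def step(u, history, codebook, seq_prob, trans_prob, window=3):
    """One cycle: u(k) -> v(k) -> x(k) -> posterior over v(k + 1) -> u(k + 1)."""
    v = best_matching_unit(u, codebook)
    recent = (history + [v])[-window:]              # w(k): the last few action states
    x = most_probable_sequence(recent, seq_prob)
    post = posterior_next_state(v, x, seq_prob, trans_prob)
    v_next = max(range(len(post)), key=post.__getitem__)
    u_next = list(codebook[v_next])                 # map the chosen state back to a feature vector
    return v, x, post, v_next, u_next


# Toy example: three action states in 2-D feature space, two sequence states.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
seq_prob = [[0.5, 0.4, 0.1],      # P(v | x = 0)
            [0.1, 0.2, 0.7]]      # P(v | x = 1)
trans_prob = [[0.1, 0.6, 0.3],    # Pt(v' | v = 0)
              [0.2, 0.1, 0.7],    # Pt(v' | v = 1)
              [0.5, 0.3, 0.2]]    # Pt(v' | v = 2)

v, x, post, v_next, u_next = step([0.9, 0.1], [0], codebook, seq_prob, trans_prob)
print(v, x, v_next, u_next)
```

In this toy run, u(k) = [0.9, 0.1] maps to action state 1, the history [0, 1] favors sequence state 0, and the product rule selects action state 0 as v(k + 1), whose codebook vector becomes u(k + 1). The product rule is only one way to combine the two probabilities; a weighted or learned combination would slot into `posterior_next_state` without changing the rest of the loop.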