Abstract

The perception of faces involves a large network of cortical areas of the human brain. While several studies tested this network recently, its relationship to the lateral occipital (LO) cortex known to be involved in visual object perception remains largely unknown. We used functional magnetic resonance imaging and dynamic causal modeling (DCM) to test the effective connectivity among the major areas of the face-processing core network and LO. Specifically, we tested how LO is connected to the fusiform face area (FFA) and occipital face area (OFA) and which area provides the major face/object input to the network. We found that LO is connected via significant bidirectional connections to both OFA and FFA, suggesting the existence of a triangular network. In addition, our results also suggest that face- and object-related stimulus inputs are not entirely segregated at these lower level stages of face-processing and enter the network via the LO. These results support the role of LO in face perception, at least at the level of face/non-face stimulus discrimination.

Keywords: dynamic causal modeling, face perception, effective connectivity, fusiform face area, lateral occipital cortex, occipital face area

Introduction

The neural processing of faces is a widely researched topic of cognitive science. Based on functional imaging studies, single-cell recordings, and neuropsychological research, it has been suggested that face-processing is performed by a distributed network involving several cortical areas of the mammalian brain (Haxby et al., 2000; Marotta et al., 2001; Rossion et al., 2003a; Avidan et al., 2005; Sorger et al., 2007). While the extent of this face-processing network is currently under intensive debate (Ishai, 2008; Wiggett and Downing, 2008), most researchers agree that there are numerous cortical areas activated by face stimuli. The most influential model of face perception, based on the original model of Bruce and Young (Bruce and Young, 1986; Young and Bruce, 2011), proposes a distinction between the representation of invariant and variant aspects of face perception in a relatively independent manner, separated into a “core” and an “extended” part (Haxby et al., 2000). The most important regions of the “core network” are areas of the occipital and the lateral fusiform gyri (FG). The areas of these two anatomical regions seem to be specialized for distinct tasks: while the occipital face area (OFA), located on the inferior occipital gyrus (IOG), seems to be involved in the structural processing of faces, the fusiform face area (FFA) processes faces in a higher-level manner, contributing for example to the processing of identity (Sergent et al., 1992; George et al., 1999; Ishai et al., 1999; Hoffman and Haxby, 2000; Rossion et al., 2003a,b; Rotshtein et al., 2005). In addition, the changeable aspects of faces, such as facial expressions, direction of eye-gaze, lip movements (Perrett et al., 1985, 1990), or lip-reading (Campbell, 2011), seem to be processed in the superior temporal sulcus (STS; Puce et al., 1998; Hoffman and Haxby, 2000; Winston et al., 2004).
The three above-mentioned areas (FFA, OFA, STS) form the so-called “core” of the perceptual system of face-processing (Haxby et al., 2000; Ishai et al., 2005). While basic information about faces is processed by this core system, complex information about others’ mood, level of interest, attractiveness, or direction of attention also adds information to face perception and is processed by an additional, so-called “extended” system (Haxby et al., 2000). This system contains brain regions with a large variety of cognitive functions related to the processing of changeable facial aspects (Haxby et al., 2000; Ishai et al., 2005) and includes areas such as the amygdala, the insula, the inferior frontal gyrus, and the orbitofrontal cortex (Haxby et al., 2000, 2002; Fairhall and Ishai, 2007; Ishai, 2008).

Interactions of the above-mentioned areas are modeled in the present study using methods that calculate the effective connectivity among cortical areas. Dynamic causal modeling (DCM) is a widely used method to explore effective connectivity among brain regions. It is a generic approach for modeling the mutual influence of different brain areas on each other, based on fMRI activity (Friston et al., 2003; Stephan et al., 2010), and for estimating the interconnection pattern of cortical areas. DCMs are generative models of neural responses, which provide “a posteriori” estimates of synaptic connections among neuronal populations (Friston et al., 2003, 2007). The existence of the distributed network for face-processing was first confirmed by functional connectivity analysis by Ishai (2008), who claimed that the central node of face-processing is the lateral FG, connected to lower-order areas of the IOG as well as to STS, amygdala, and frontal areas. The first attempt to reveal the face-processing network in the case of realistic dynamic facial expressions was made by Foley et al. (2012). They confirmed the role of IOG, STS, and FG areas and found that the connection strength between members of the core network (OFA and STS) and of the extended system (amygdala) is increased for processing affect-laden gestures. Several studies have further elaborated our understanding of the face-processing network and revealed a direct link between amygdala and FFA and the role of this connection in the perception of fearful faces (Morris et al., 1996; Marco et al., 2006; Herrington et al., 2011). Finally, the effect of higher cognitive functions on face perception was also modeled by testing the connections of the orbitofrontal cortex to the core network (Li et al., 2010). That study found that the orbitofrontal cortex has an effect on the OFA, which further modulates the information processing of the FFA.

While prior effective connectivity studies revealed the details of the face-processing network related to various aspects of face perception, they ignored the simple fact that faces can also be considered as visual objects. We know from a large body of experiments that visual objects are processed by a distributed cortical network, including early visual areas, occipito-temporal, and ventral–temporal cortices, largely overlapping with the face-processing network (Haxby et al., 1999, 2000, 2002; Kourtzi and Kanwisher, 2001; Ishai et al., 2005; Gobbini and Haxby, 2006, 2007; Haxby, 2006; Ishai, 2008). One of the major areas of visual object processing is the lateral occipital cortex (LOC), which can be divided into two parts: the anterior–ventral (PF/LOa) and the caudal–dorsal part (LO; Grill-Spector et al., 1999; Halgren et al., 1999). The LOC was first described by Malach et al. (1995), who measured increased activity for objects, including famous faces, when compared to scrambled objects (Malach et al., 1995; Grill-Spector et al., 1998a). Since then, the lateral occipital (LO) area has been considered primarily an object-selective area, which is nevertheless invariably found to have elevated activation for faces as well (Malach et al., 1995; Puce et al., 1995; Lerner et al., 2001), especially for inverted ones (Aguirre et al., 1999; Haxby et al., 1999; Epstein et al., 2005; Yovel and Kanwisher, 2005).

Thus, it is rather surprising that while several studies have dealt with the effective connectivity of face-processing areas, none of them considered the role of the LO in the network. In a previous fMRI study we found that LO has a crucial role in sensory competition for face stimuli (Nagy et al., 2011). The activity of LO was reduced by the simultaneous presentation of concurrent stimuli, and this response reduction, which reflects sensory competition among stimuli, was larger when the surrounding stimulus was a face than when it was a Fourier-phase randomized noise image. This result also supported the idea that LO may play a specific role in face perception. Therefore, here we explored explicitly, using methods of effective connectivity, how LO is linked to FFA and OFA, members of the proposed core network of face perception (Haxby et al., 1999, 2000, 2002; Ishai et al., 2005).

Materials and Methods

Subjects

Twenty-five healthy participants took part in the experiment (11 females; median age: 23 years, min.: 19 years, max.: 35 years). All of them had normal or corrected-to-normal vision (self-reported), and none had any neurological or psychological disease. Subjects provided their written informed consent in accordance with the protocols approved by the Ethical Committee of the University of Regensburg.

Stimuli

Gray-scale faces, non-sense objects, and Fourier-randomized versions of these stimuli were presented centrally. The randomized versions were created by an algorithm (Nasanen, 1999) that replaces the phase spectrum with random values (ranging from 0° to 360°), leaving the amplitude spectrum of the image intact while removing any shape information. Faces were full-front digital images of 20 young males and 20 young females. They were fit behind a round shape mask (3.5° diameter) eliminating the outer contours of the faces (see a sample image in Figure 1). Objects were non-sense, rendered objects (n = 40) having the same average size as the face mask. The luminance and contrast (i.e., the standard deviation of the luminance distribution) of the stimuli were equated by matching the luminance histograms (mean luminance: 18 cd/m²) using Photoshop. Stimuli were back-projected via an LCD video projector (JVC, DLA-G20, Yokohama, Japan, 72 Hz, 800 × 600 resolution) onto a translucent circular screen (approximately 30° diameter), placed inside the scanner bore at 63 cm from the observer. Stimulus presentation was controlled via E-prime software (Psychology Software Tools, Pittsburgh, PA, USA). Faces, objects, and Fourier noise images were presented in subsequent blocks of 20 s, interleaved with 20 s of blank periods (uniform gray background with a luminance of 18 cd/m²). Stimuli were presented in a random order for 300 ms each, followed by an ISI of 200 ms (2 Hz). Each block was repeated five times. Participants were asked to fixate continuously on a centrally presented fixation mark. These functional localizer runs were part of two other experiments on face perception, published elsewhere (Nagy et al., 2009, 2011).
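The phase-randomization step can be illustrated with a minimal Python sketch. It is not the published algorithm of Nasanen (1999); it is one common way to keep the amplitude spectrum and replace the phase spectrum, and the function name `phase_randomize` and the use of NumPy are assumptions made for illustration only.

```python
import numpy as np

def phase_randomize(image, rng):
    """Keep the amplitude spectrum of `image` but replace its phase spectrum.

    Hypothetical helper for illustration; `image` is a 2D gray-scale array.
    """
    amplitude = np.abs(np.fft.fft2(image))
    # The phase spectrum of a real-valued noise image is random but
    # conjugate-symmetric, so the inverse transform below is real-valued
    # up to floating-point error.
    noise = rng.random(image.shape)
    random_phase = np.angle(np.fft.fft2(noise))
    scrambled = np.fft.ifft2(amplitude * np.exp(1j * random_phase))
    return scrambled.real

# Usage with a synthetic array standing in for a stimulus image.
rng = np.random.default_rng(0)
stimulus = rng.random((64, 64))
control = phase_randomize(stimulus, rng)
```

Because only the phases change, the control image has the same amplitude spectrum as the stimulus while its shape information is destroyed.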

Figure 1.

Sample stimuli of the experiment. All images were gray-scale and matched in size, luminance, and contrast. The left panel shows a face; gender-specific features (such as hair, jewelry, etc.) were hidden behind an oval mask. The middle panel shows a sample non-sense geometric object, while the right panel shows the Fourier-phase randomized version of an object, used as a control stimulus.

Data acquisition and analysis

Imaging was performed using a 3-T MR head scanner (Siemens Allegra, Erlangen, Germany). For the functional series we continuously acquired images (29 slices, 10° tilted relative to axial, T2* weighted EPI sequence, TR = 2000 ms; TE = 30 ms; flip angle = 90°; 64 × 64 matrices; in-plane resolution: 3 mm × 3 mm; slice thickness: 3 mm). High-resolution sagittal T1-weighted images were acquired using a magnetization-prepared rapid gradient-echo sequence (MP-RAGE; TR = 2250 ms; TE = 2.6 ms; 1 mm isotropic voxel size) to obtain a 3D structural scan (for details, see Nagy et al., 2011).

Functional images were corrected for acquisition delay, realigned, normalized to the MNI space, resampled to 2 mm × 2 mm × 2 mm resolution, and spatially smoothed with a Gaussian kernel of 8 mm FWHM (SPM8, Wellcome Department of Imaging Neuroscience, London, UK; for details of data analysis, see Nagy et al., 2011).

VOI selection

First, volumes of interest (VOIs) were selected based on activity and anatomical constraints (including masking for relevant brain regions). Face-selective areas were defined as areas showing larger activation for faces than for Fourier noise images and objects. FFA was defined within the lateral fusiform gyrus and OFA within the IOG. LO was defined from the contrast objects > Fourier noise and face images, within the middle occipital gyrus. VOI selection was based on T-contrasts adjusted with an F-contrast (p < 0.005 uncorrected, with a minimum cluster size of 15 voxels). VOIs were spherical with a radius of 4 mm around the peak activation (for individual coordinates, see Table A1 in Appendix). The variance explained by the first eigenvariate of the BOLD signals was above 79% in all cases. Only right hemisphere areas were used in the current DCM analysis, as several studies point to the dominant role of this hemisphere in face perception (Michel et al., 1989; Sergent et al., 1992).
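As an illustration of what the first eigenvariate and its explained variance mean, the following Python sketch summarizes a time × voxels matrix by its first singular component. It is a simplified stand-in and not SPM's VOI routine (SPM applies its own scaling and adjustment); the function name `first_eigenvariate` and the synthetic data are assumptions.

```python
import numpy as np

def first_eigenvariate(Y):
    """Summarize a (time x voxels) array by its first singular component.

    Returns the summary time course and the fraction of variance it explains.
    Hypothetical helper; the scaling differs from SPM's implementation.
    """
    Yc = Y - Y.mean(axis=0)                      # remove each voxel's mean
    U, s, Vt = np.linalg.svd(Yc, full_matrices=False)
    explained = s[0] ** 2 / np.sum(s ** 2)       # share of total variance
    eigenvariate = U[:, 0] * s[0]
    # Singular vectors have arbitrary sign; align with the mean time course.
    if np.dot(eigenvariate, Yc.mean(axis=1)) < 0:
        eigenvariate = -eigenvariate
    return eigenvariate, explained

# Usage with synthetic data: 100 scans, 10 voxels sharing one signal.
rng = np.random.default_rng(0)
signal = np.sin(np.linspace(0, 6 * np.pi, 100))
voi = np.outer(signal, np.ones(10)) + 0.05 * rng.standard_normal((100, 10))
timecourse, fraction = first_eigenvariate(voi)
```

A high explained fraction indicates that the voxels in the sphere share one dominant time course, which is what the 79% criterion above reports.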

Effective connectivity analysis

Effective connectivity was tested by DCM-10, implemented in the SPM8 toolbox (Wellcome Department of Imaging Neuroscience, London, UK), running under Matlab R2008a (The MathWorks, Natick, MA, USA). DCM models are defined with endogenous connections, representing coupling between brain regions (matrix A), modulatory connections (matrix B), and driving inputs (matrix C). Here, in the A matrix we defined the connections between the face-selective regions (FFA and OFA) and LO. Images of faces and objects served as driving inputs (matrix C), and in this analysis step we did not apply any modulatory effects on the connections.
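To make the roles of the three matrices concrete, the sketch below integrates the bilinear neural state equation of DCM, dz/dt = (A + Σ_k u_k B_k) z + C u (Friston et al., 2003), for three regions. All coupling values, the time step, and the function name `simulate` are hypothetical choices for illustration; they are not estimates from this study, and the hemodynamic model that DCM places on top of the neural states is omitted.

```python
import numpy as np

regions = ["LO", "OFA", "FFA"]
inputs = ["faces", "objects"]

# A[i, j]: endogenous coupling from region j to region i (hypothetical values).
A = np.array([[-1.0,  0.2,  0.2],
              [ 0.4, -1.0,  0.2],
              [ 0.4,  0.4, -1.0]])
# B[k]: change in coupling caused by input k; all zero in the first analysis step.
B = np.zeros((len(inputs), 3, 3))
# C[i, k]: driving effect of input k on region i; here both inputs enter LO.
C = np.array([[1.0, 1.0],
              [0.0, 0.0],
              [0.0, 0.0]])

def simulate(A, B, C, u, dt=0.1):
    """Euler integration of dz/dt = (A + sum_k u_k B[k]) z + C u."""
    z = np.zeros(A.shape[0])
    trajectory = []
    for u_t in u:
        coupling = A + np.tensordot(u_t, B, axes=1)  # input-dependent coupling
        z = z + dt * (coupling @ z + C @ u_t)
        trajectory.append(z.copy())
    return np.array(trajectory)

# Usage: a 20 s face block sampled every 0.1 s (faces on, objects off).
face_block = np.tile([1.0, 0.0], (200, 1))
states = simulate(A, B, C, face_block)
```

Changing which row of C is non-zero moves the entry point of the stimulus, and non-zero entries in B[k] let input k strengthen or weaken a specific connection; these are the two properties varied across the models compared in this study.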

Model estimation aimed to maximize the negative free-energy estimates of the models (F) for a given dataset (Friston et al., 2003). This method ensures that the model fit uses the parameters in a parsimonious way (Ewbank et al., 2011). The estimated models were compared based on the model evidence p(y|m), which is the probability p of obtaining the observed data y given a particular model m (Friston et al., 2003; Stephan et al., 2009). In the present study we applied the negative free-energy approximation (variational free-energy) to the log evidence (MacKay, 2003; Friston et al., 2007). Bayesian Model Selection (BMS) was carried out using both random-effects (RFX) and fixed-effects (FFX) designs (Stephan et al., 2009). BMS RFX is more resistant to outliers than FFX and does not assume that the same model explains the data of every participant (Stephan et al., 2009). In other words, RFX is less sensitive to noise. In the RFX approach, one output of the analysis is the exceedance probability, which is the probability that one model is more likely than any other model in the model space to explain the measured data. The other output of the RFX analysis is the expected posterior probability, which reflects the probability that a model generated the observed data, allowing different distributions for different models. Both values decrease as the model space broadens (i.e., as the number of models increases); they are therefore relative measures, and models with shared features as well as implausible models may distort the output of the analysis. Therefore, in addition to the direct comparison of the 28 created models, we partitioned the model space into families having similar connectivity patterns, using the methods of Penny et al. (2010).
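The two RFX summaries can be sketched in Python under the assumption, following Stephan et al. (2009), that the posterior over model frequencies is a Dirichlet distribution. The Dirichlet parameters below are hypothetical (in practice they are estimated by SPM from the subject-wise log evidences), and the function name `rfx_summaries` is an assumption.

```python
import numpy as np

def rfx_summaries(alpha, n_samples=100_000, seed=0):
    """Expected posterior and exceedance probabilities from Dirichlet parameters.

    `alpha` holds one (hypothetical) Dirichlet parameter per model.
    """
    alpha = np.asarray(alpha, dtype=float)
    expected = alpha / alpha.sum()               # expected model frequencies
    rng = np.random.default_rng(seed)
    r = rng.dirichlet(alpha, size=n_samples)     # sampled model frequencies
    winners = r.argmax(axis=1)                   # most frequent model per sample
    exceedance = np.bincount(winners, minlength=alpha.size) / n_samples
    return expected, exceedance

# Usage with three hypothetical models.
expected, exceedance = rfx_summaries([2.0, 3.0, 20.0])
```

Both outputs sum to one across the models entered, which is why adding more models (or near-duplicate models) lowers the values of each individual model and motivates the family-level comparison.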

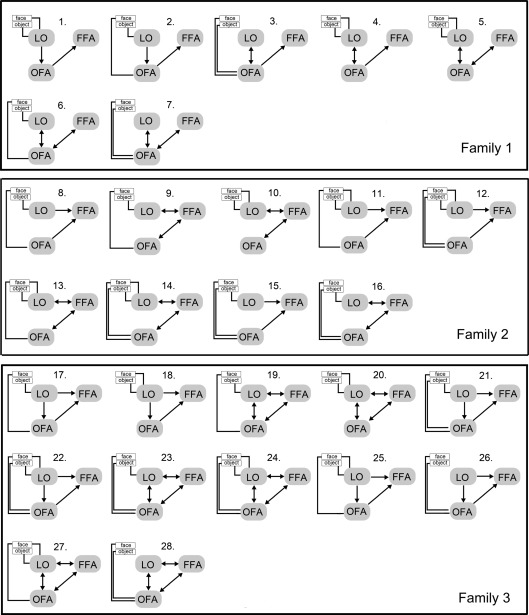

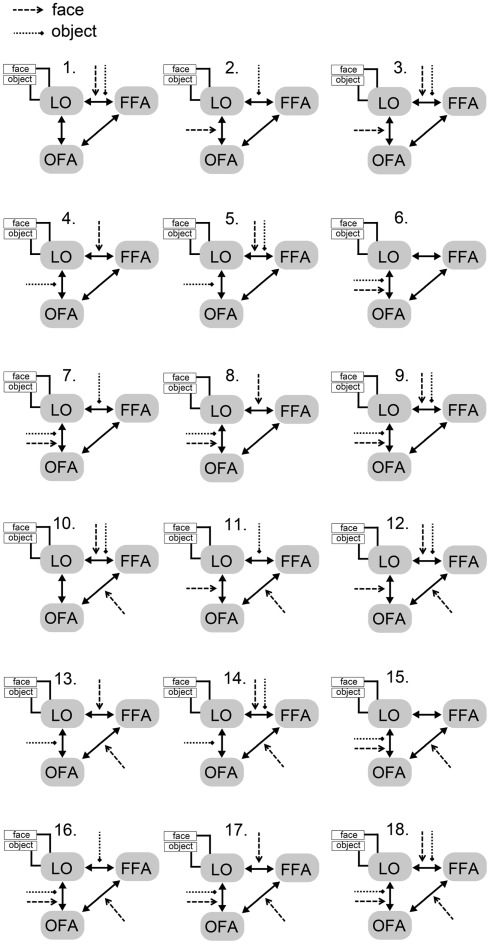

Since several previous DCM studies point to the close bidirectional connection between FFA and OFA (Ishai, 2008; Gschwind et al., 2012), in our analysis these two areas were always linked to each other, and LO was connected to them in every biologically plausible way. The 28 relevant models were divided into three model-families based on structural differences (Penny et al., 2010; Ewbank et al., 2011). Family 1 contains models with linear connections among the three areas, supposing that information flows from the LO to the FFA via the OFA. Family 2 contains models with a triangular structure where LO sends input directly to the FFA and the OFA is also directly linked to the FFA. Family 3 contains models in which the three areas are interlinked, supposing a circular flow of information (Figure 2). In order to limit the number of models in this step of the analysis, the inputs modulated solely the activity of their entry areas. The three families were compared by a random-effects BMS.

Figure 2.

The 28 analyzed models. Black lines mark the division between the three families, having different A matrix structures. For details, see Section “Materials and Methods.”

Second, the models from the winner family were elaborated further by creating every plausible model with modulatory connections, applying three constraints. First, both faces and objects modulate at least one inter-areal connection. Second, in the case of bidirectional links the modulatory inputs have an effect on both directions. Third, if faces provide a direct input into OFA, then we assume that they always modulate the OFA–FFA connection as well (see Table A2 in Appendix). These models were entered into a second family-wise random-effects BMS analysis. Finally, members of the winner sub-family were entered into a third BMS to find a single model with the highest exceedance probability.
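How such a constrained model space can be enumerated is sketched below in Python for one base model. The rule encoded here is inferred from the rows listed for Model 20 in Table A2 (objects and faces must each modulate at least one LO connection, while face modulation of OFA to FFA is free); it is an assumption made for illustration, not the authors' stated procedure, and the function name is hypothetical.

```python
from itertools import product

# Column order follows the Model 20 block of Table A2.
columns = [
    "LO to OFA (object)",
    "LO to OFA (face)",
    "LO to FFA (object)",
    "LO to FFA (face)",
    "OFA to FFA (face)",
]

def enumerate_model20_variants():
    """List binary modulation patterns satisfying the inferred constraints."""
    variants = []
    for ofa_ffa_face in (0, 1):
        for lo_ofa_obj, lo_ofa_face, lo_ffa_obj, lo_ffa_face in product((0, 1), repeat=4):
            objects_modulate = lo_ofa_obj or lo_ffa_obj
            faces_modulate = lo_ofa_face or lo_ffa_face
            if objects_modulate and faces_modulate:
                variants.append(
                    (lo_ofa_obj, lo_ofa_face, lo_ffa_obj, lo_ffa_face, ofa_ffa_face)
                )
    return variants

variants = enumerate_model20_variants()
for pattern in variants:
    print(dict(zip(columns, pattern)))
```

Under this inferred rule the enumeration yields the same number of variants as listed for Model 20 in Table A2, although in a different order.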

Results

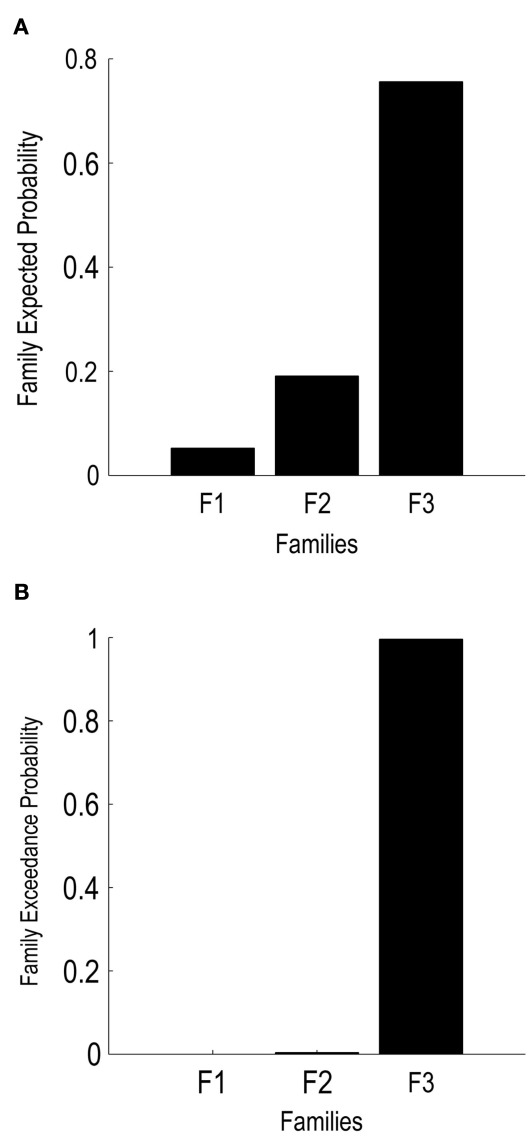

Bayesian model selection was used to decide which model family explains the measured data best. As our results show, the third family out-performed the other two, having an exceedance probability of 0.995 compared to the first family’s 0.00 and the second family’s 0.004 (Figures 3A,B). The winner model family (Family 3; Figure 2, bottom) contains 12 models, having connections between the LO and FFA, OFA and FFA, and LO and OFA as well, but differing in the directionality of the connections as well as in the place of input to the network.

Figure 3.

Results of BMS RFX on the level of families. (A) The expected probabilities of family-based comparison are shown, with the joint exceedance probabilities (B).

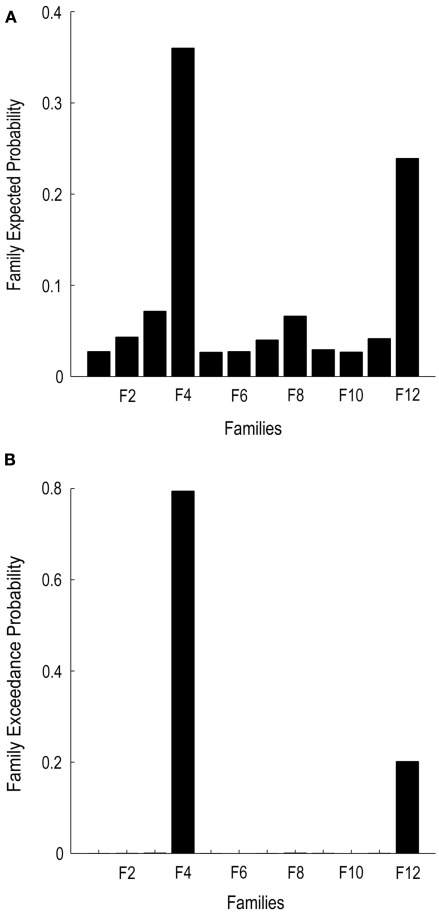

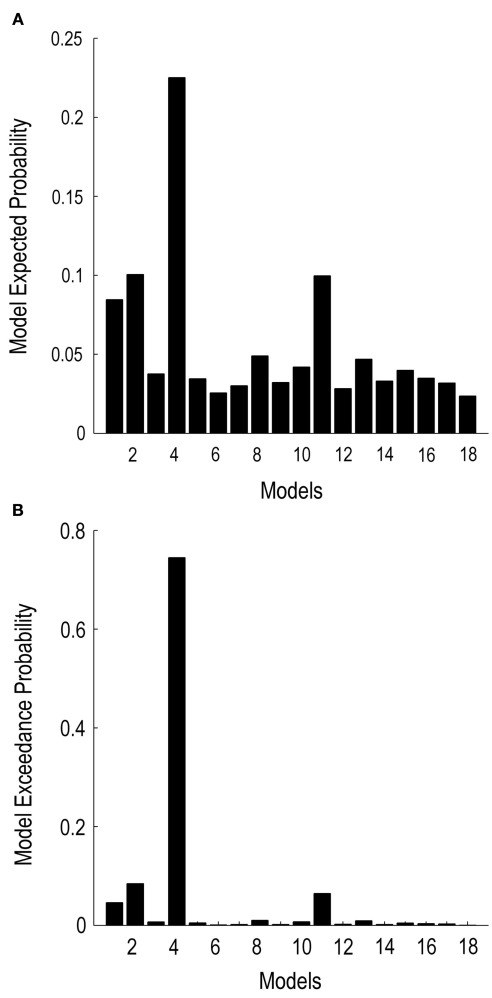

As a second step, all possible modulatory models were designed for the 12 models of Family 3 (see Table A2 in Appendix; Materials and Methods for details). This led to 122 models, which were entered into the family-wise random-effects BMS analysis, using 12 sub-families. As is visible from Figures 4A,B, model sub-family 4 out-performed the other sub-families with an exceedance probability of 0.79. As a third step, the models within the winner sub-family 4 [corresponding to the A matrix of Model 20 of the first BMS (Figure 2), but with different modulatory connections] were entered into a random-effects BMS. Figure 5 presents the 18 tested variations of Model 20. The model with the highest exceedance probability (p = 0.75) was model 4 (see Figure 6). This means that the winner model contains bidirectional connections between all areas and that, surprisingly, both face and object inputs enter the network via the LO. In addition, faces have a modulatory effect on the connection between LO and FFA, while objects modulate the connection between LO and OFA.

Figure 4.

Results of BMS RFX on the level of sub-families. (A) The expected probabilities of family-based comparison are shown, with the joint exceedance probabilities (B).

Figure 5.

Models of sub-family 4. All models have the same DCM.A structure (identical to that of model 20 in Family 3), while the DCM.B structure differs from model to model. Dashed arrows symbolize face modulatory effects, while dotted square-head arrows show object modulation.

Figure 6.

Results of BMS within sub-family 4. (A) The expected probabilities of family-based comparison are shown, with the joint exceedance probabilities (B).

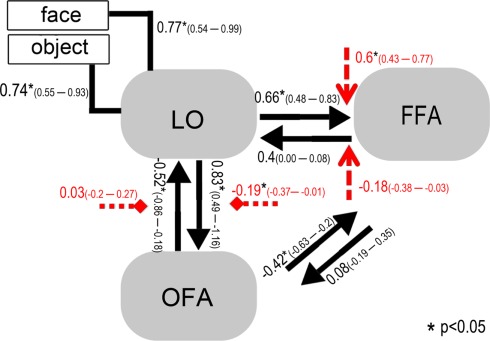

To analyze the parameter estimates of the winner model across the group of subjects, a random-effects approximation was used. All the subject-specific maximum a posteriori (MAP) estimates were entered into a one-sample t-test and tested against 0 (Stephan et al., 2010; Desseilles et al., 2011). The results marked with an asterisk in Figure 7 differed significantly from 0 (p < 0.05).
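The group-level test on one connection can be sketched in Python as follows. The MAP values are hypothetical placeholders rather than estimates from this study, and the function name `one_sample_t` is an assumption; only the t statistic and degrees of freedom are computed, using the standard library.

```python
import math
import statistics

def one_sample_t(values, mu0=0.0):
    """Return the t statistic and degrees of freedom for H0: mean == mu0."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)                # sample SD (n - 1 denominator)
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1

# Hypothetical MAP estimates of one connection for five subjects.
map_estimates = [0.1, 0.2, 0.3, 0.4, 0.5]
t_value, dof = one_sample_t(map_estimates)
```

With 25 subjects the test has 24 degrees of freedom, so a two-tailed p < 0.05 corresponds to an absolute t value above roughly 2.06.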

Figure 7.

The structure of the winner model. Simple lines signify the object and face input stimuli to the system (DCM.C). Black arrows show inter-regional connections (DCM.A) while the red arrows stand for the modulatory connections (DCM.B): face modulation is signified with dashed arrows, while object modulation is signified with square-head dashed arrows. Group-level averages of MAP estimates and 95% confidence intervals are illustrated. The averages were tested against 0 and significant results are signified with * if p < 0.05.

Discussion

The major results of the present effective connectivity study suggest that (a) LO is linked directly to the OFA–FFA face-processing system via bidirectional connections to both areas; (b) non-face and face inputs are intermixed at the level of occipito-temporal areas and enter the system via LO; (c) face input has a modulatory effect on the LO–FFA connection, while object input significantly modulates the LO–OFA connection.

The role of LO in object perception is well-known from previous studies (Malach et al., 1995; Grill-Spector et al., 1998a,b, 2000; Lerner et al., 2001, 2008). However, although previous studies usually found increased activity in LO for complex objects as well as for faces, the area is usually associated with objects, and relatively little importance is attributed to its role in face-processing. In the present study, effective connectivity analysis positioned the LO in the core network of face perception. The direct link between OFA and FFA has been demonstrated previously both functionally and anatomically (Gschwind et al., 2012). However, as there are currently no data available regarding the role of LO in this system, we linked it to the other two regions in several plausible ways.

The first random-effects BMS showed that in the winner model family LO is interconnected with both FFA and OFA and the connections are bidirectional. This suggests that LO may have a direct structural connection to FFA. By modeling all the possible modulatory effects we found that a sub-family of models won in which both object and face inputs enter the system via the LO, suggesting that LO plays a general and important role as an input region. Since previous functional connectivity studies all started the analysis of the face-processing network at the level of IOG, corresponding to OFA (Fairhall and Ishai, 2007; Ishai, 2008; Cohen Kadosh et al., 2011; Dima et al., 2011; Foley et al., 2012), it is not surprising that they overlooked the significant role of LO. However, faces are actually a distinct category of visual objects, suggesting that neurons sensitive to objects and shapes should be activated, at least to a certain degree, by faces as well. Indeed, single-cell studies of non-human primates suggest that the inferior-temporal cortex, the proposed homolog of human LO in the macaque brain (Denys et al., 2004; Sawamura et al., 2006), has neurons responsive to faces as well (Perrett et al., 1982, 1985; Desimone et al., 1984; Hasselmo et al., 1989; Young and Yamane, 1992; Sugase et al., 1999). The intimate connection of LO to OFA and FFA suggested by the present study could underline the fact that faces and objects are not processed entirely separately in the ventral visual pathway, a conclusion supported by recent functional imaging data as well (Rossion et al., 2012). The modulatory effect of face input on the LO–FFA connection suggests that LO must play a role in face-processing, most probably linked to the earlier, structural processing of faces, a task previously attributed mostly to OFA (Rotshtein et al., 2005; Fox et al., 2009).
Finally, the group-level parameters of the winner model show that the links among LO, OFA, and FFA are mainly hierarchical, and the feedback connections from FFA toward OFA and LO play a weaker role in the system, at least during the applied fixation task.

In conclusion, by modeling the effective connectivity between face-relevant areas and LO we suggest that LO plays a significant role in the processing of faces.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by the Deutsche Forschungsgemeinschaft (KO 3918/1-1) and the University of Regensburg. We would like to thank Ingo Keck and Michael Schmitgen for discussion.

Appendix

Table A1.

The MNI coordinates of the respective maximal activations within FFA, OFA, and LO of our subject sample.

| Subject | FFA x | FFA y | FFA z | OFA x | OFA y | OFA z | LO x | LO y | LO z |
|---|---|---|---|---|---|---|---|---|---|
| 1. | 42 | −58 | −14 | 36 | −74 | −18 | 54 | −70 | −2 |
| 2. | 48 | −48 | −26 | 48 | −76 | −18 | 50 | −74 | −8 |
| 3. | 40 | −58 | −20 | 38 | −86 | −8 | 40 | −84 | −8 |
| 4. | 48 | −48 | −22 | 44 | −76 | −16 | 54 | −70 | −2 |
| 5. | 48 | −50 | −20 | 46 | −74 | −10 | 52 | −68 | −4 |
| 6. | 38 | −50 | −22 | 38 | −72 | −16 | 44 | −76 | 4 |
| 7. | 40 | −48 | −22 | 38 | −86 | −18 | 56 | −68 | −8 |
| 8. | 42 | −42 | −24 | 38 | −86 | −18 | 56 | −68 | −8 |
| 9. | 46 | −58 | −18 | 48 | −76 | −12 | 56 | −70 | −4 |
| 10. | 40 | −62 | −20 | 44 | −84 | −8 | 44 | −82 | −10 |
| 11. | 40 | −56 | −24 | 42 | −84 | −12 | 48 | −80 | −6 |
| 12. | 44 | −52 | −20 | 40 | −76 | −8 | 46 | −82 | −2 |
| 13. | 48 | −62 | −28 | 36 | −84 | −12 | 50 | −80 | 4 |
| 14. | 38 | −54 | −22 | 46 | −82 | −10 | 40 | −78 | 2 |
| 15. | 42 | −64 | −18 | 56 | −66 | −2 | 54 | −68 | −2 |
| 16. | 52 | −52 | −30 | 46 | −76 | −20 | 46 | −80 | −4 |
| 17. | 44 | −50 | −16 | 40 | −80 | −10 | 50 | −72 | 2 |
| 18. | 46 | −60 | −14 | 44 | −78 | −12 | 52 | −76 | −2 |
| 19. | 48 | −58 | −20 | 42 | −82 | −14 | 40 | −80 | −4 |
| 20. | 40 | −50 | −24 | 36 | −74 | −18 | 44 | −82 | −10 |
| 21. | 40 | −54 | −22 | 44 | −74 | −14 | 54 | −74 | −6 |
| 22. | 40 | −58 | −16 | 40 | −78 | −40 | 50 | −76 | −2 |
| 23. | 46 | −52 | −18 | 42 | −74 | −12 | 48 | −80 | 0 |
| 24. | 42 | −44 | −26 | 44 | −70 | −16 | 34 | −78 | −2 |
| 25. | 48 | −48 | −24 | 46 | −74 | −10 | 52 | −70 | −4 |
| Mean | 43.6 | −53.44 | −21.2 | 42.48 | −77.68 | −14.08 | 48.56 | −75.44 | −3.44 |
| St. Dev. | 3.9 | 5.8 | 4.1 | 4.7 | 5.4 | 6.9 | 5.9 | 5.3 | 4.0 |

Table A2.

All possible modulatory models of Family 3.

| LO to FFA (object) | LO to OFA (object) | OFA to FFA (face) | ||||

| 17_1 | 0 | 1 | 1 | |||

| 17_2 | 1 | 0 | 1 | |||

| 17_3 | 1 | 1 | 1 | |||

| LO to OFA (object) | LO to OFA (face) | LO to FFA (object) | LO to FFA (face) | OFA to FFA (face) | ||

| 18_1 | 0 | 0 | 1 | 1 | 0 | |

| 18_2 | 0 | 1 | 1 | 0 | 0 | |

| 18_3 | 0 | 1 | 1 | 1 | 0 | |

| 18_4 | 1 | 0 | 0 | 1 | 0 | |

| 18_5 | 1 | 0 | 1 | 1 | 0 | |

| 18_6 | 1 | 1 | 0 | 0 | 0 | |

| 18_7 | 1 | 1 | 1 | 0 | 0 | |

| 18_8 | 1 | 1 | 0 | 1 | 0 | |

| 18_9 | 1 | 1 | 1 | 1 | 0 | |

| 18_10 | 0 | 0 | 1 | 1 | 1 | |

| 18_11 | 0 | 1 | 1 | 0 | 1 | |

| 18_12 | 0 | 1 | 1 | 1 | 1 | |

| 18_13 | 1 | 0 | 0 | 1 | 1 | |

| 18_14 | 1 | 0 | 1 | 1 | 1 | |

| 18_15 | 1 | 1 | 0 | 0 | 1 | |

| 18_16 | 1 | 1 | 1 | 0 | 1 | |

| 18_17 | 1 | 1 | 0 | 1 | 1 | |

| 18_18 | 1 | 1 | 1 | 1 | 1 | |

| OFA to FFA (face) | LO to OFA (face) | LO to OFA (object) | LO to FFA (object) | |||

| 19_1 | 1 | 0 | 0 | 1 | ||

| 19_2 | 1 | 0 | 1 | 1 | ||

| 19_3 | 1 | 1 | 0 | 1 | ||

| 19_4 | 1 | 1 | 1 | 1 | ||

| 19_5 | 1 | 0 | 0 | 1 | ||

| 19_6 | 1 | 0 | 1 | 1 | ||

| 19_7 | 1 | 1 | 0 | 1 | ||

| 19_8 | 1 | 1 | 1 | 1 | ||

| LO to OFA (object) | LO to OFA (face) | LO to FFA (object) | LO to FFA (face) | OFA to FFA (face) | ||

| 20_1 | 0 | 0 | 1 | 1 | 0 | |

| 20_2 | 0 | 1 | 1 | 0 | 0 | |

| 20_3 | 0 | 1 | 1 | 1 | 0 | |

| 20_4 | 1 | 0 | 0 | 1 | 0 | |

| 20_5 | 1 | 0 | 1 | 1 | 0 | |

| 20_6 | 1 | 1 | 0 | 0 | 0 | |

| 20_7 | 1 | 1 | 1 | 0 | 0 | |

| 20_8 | 1 | 1 | 0 | 1 | 0 | |

| 20_9 | 1 | 1 | 1 | 1 | 0 | |

| 20_10 | 0 | 0 | 1 | 1 | 1 | |

| 20_11 | 0 | 1 | 1 | 0 | 1 | |

| 20_12 | 0 | 1 | 1 | 1 | 1 | |

| 20_13 | 1 | 0 | 0 | 1 | 1 | |

| 20_14 | 1 | 0 | 1 | 1 | 1 | |

| 20_15 | 1 | 1 | 0 | 0 | 1 | |

| 20_16 | 1 | 1 | 1 | 0 | 1 | |

| 20_17 | 1 | 1 | 0 | 1 | 1 | |

| 20_18 | 1 | 1 | 1 | 1 | 1 | |

| LO to FFA (object) | LO to OFA (object) | OFA to FFA (face) | ||||

| 21_1 | 0 | 1 | 1 | |||

| 21_2 | 1 | 1 | 1 | |||

| 21_3 | 1 | 0 | 1 | |||

| OFA to FFA (face) | OFA to FFA (object) | LO to FFA (object) | LO to FFA (face) | |||

| 22_1 | 1 | 0 | 1 | 0 | ||

| 22_2 | 1 | 0 | 1 | 1 | ||

| 22_3 | 1 | 1 | 0 | 0 | ||

| 22_4 | 1 | 1 | 1 | 0 | ||

| 22_5 | 1 | 1 | 0 | 1 | ||

| 22_6 | 1 | 1 | 1 | 1 | ||

| OFA to FFA (face) | OFA to LO (object) | OFA to FFA (object) | LO to FFA (object) | |||

| 23_1 | 1 | 0 | 0 | 1 | ||

| 23_2 | 1 | 0 | 1 | 0 | ||

| 23_3 | 1 | 0 | 1 | 1 | ||

| 23_4 | 1 | 1 | 0 | 0 | ||

| 23_5 | 1 | 1 | 0 | 1 | ||

| 23_6 | 1 | 1 | 1 | 0 | ||

| 23_7 | 1 | 1 | 1 | 1 | ||

| OFA to FFA (face) | OFA to LO (face) | OFA to LO (object) | OFA to FFA (object) | LO to FFA (object) | LO to FFA (face) | |

| 24_1 | 1 | 0 | 0 | 0 | 1 | 0 |

| 24_2 | 1 | 0 | 0 | 0 | 1 | 1 |

| 24_3 | 1 | 0 | 0 | 1 | 0 | 0 |

| 24_4 | 1 | 0 | 0 | 1 | 1 | 0 |

| 24_5 | 1 | 0 | 0 | 1 | 0 | 1 |

| 24_6 | 1 | 0 | 0 | 1 | 1 | 1 |

| 24_7 | 1 | 1 | 0 | 0 | 1 | 0 |

| 24_8 | 1 | 1 | 0 | 0 | 1 | 1 |

| 24_9 | 1 | 1 | 0 | 1 | 0 | 0 |

| 24_10 | 1 | 1 | 0 | 1 | 1 | 0 |

| 24_11 | 1 | 1 | 0 | 1 | 0 | 1 |

| 24_12 | 1 | 1 | 0 | 1 | 1 | 1 |

| 24_13 | 1 | 0 | 1 | 0 | 0 | 0 |

| 24_14 | 1 | 0 | 1 | 0 | 1 | 0 |

| 24_15 | 1 | 0 | 1 | 0 | 0 | 1 |

| 24_16 | 1 | 0 | 1 | 0 | 1 | 1 |

| 24_17 | 1 | 0 | 1 | 1 | 0 | 0 |

| 24_18 | 1 | 0 | 1 | 1 | 1 | 0 |

| 24_19 | 1 | 0 | 1 | 1 | 0 | 1 |

| 24_20 | 1 | 0 | 1 | 1 | 1 | 1 |

| 24_21 | 1 | 1 | 1 | 0 | 0 | 0 |

| 24_22 | 1 | 1 | 1 | 0 | 1 | 0 |

| 24_23 | 1 | 1 | 1 | 0 | 0 | 1 |

| 24_24 | 1 | 1 | 1 | 0 | 1 | 1 |

| 24_25 | 1 | 1 | 1 | 1 | 0 | 0 |

| 24_26 | 1 | 1 | 1 | 1 | 1 | 0 |

| 24_27 | 1 | 1 | 1 | 1 | 0 | 1 |

| 24_28 | 1 | 1 | 1 | 1 | 1 | 1 |

| LO to OFA (face) | OFA to FFA (face) | LO to OFA (object) | LO to FFA (object) | LO to FFA (face) | ||

| 25_1 | 0 | 1 | 0 | 1 | 0 | |

| 25_2 | 0 | 1 | 0 | 1 | 1 | |

| 25_3 | 0 | 1 | 1 | 0 | 0 | |

| 25_4 | 0 | 1 | 1 | 1 | 0 | |

| 25_5 | 0 | 1 | 1 | 0 | 1 | |

| 25_6 | 0 | 1 | 1 | 1 | 1 | |

| 25_7 | 1 | 1 | 0 | 1 | 0 | |

| 25_8 | 1 | 1 | 0 | 1 | 1 | |

| 25_9 | 1 | 1 | 1 | 0 | 0 | |

| 25_10 | 1 | 1 | 1 | 1 | 0 | |

| 25_11 | 1 | 1 | 1 | 0 | 1 | |

| 25_12 | 1 | 1 | 1 | 1 | 1 | |

| OFA to FFA (object) | OFA to FFA (face) | |||||

| 26_1 | 1 | 1 | ||||

| OFA to FFA (face) | OFA to LO (face) | LO to OFA (object) | LO to FFA (object) | LO to FFA (face) | ||

| 27_1 | 1 | 0 | 0 | 1 | 0 | |

| 27_2 | 1 | 0 | 0 | 1 | 1 | |

| 27_3 | 1 | 1 | 0 | 1 | 0 | |

| 27_4 | 1 | 1 | 0 | 1 | 1 | |

| 27_5 | 1 | 0 | 1 | 0 | 0 | |

| 27_6 | 1 | 0 | 1 | 1 | 0 | |

| 27_7 | 1 | 0 | 1 | 0 | 1 | |

| 27_8 | 1 | 0 | 1 | 1 | 1 | |

| 27_9 | 1 | 1 | 1 | 0 | 0 | |

| 27_10 | 1 | 1 | 1 | 1 | 0 | |

| 27_11 | 1 | 1 | 1 | 0 | 1 | |

| 27_12 | 1 | 1 | 1 | 1 | 1 | |

| OFA to FFA (face) | OFA to FFA (object) | OFA to LO (object) | OFA to LO (face) | |||

| 28_1 | 1 | 0 | 1 | 0 | ||

| 28_2 | 1 | 0 | 1 | 1 | ||

| 28_3 | 1 | 1 | 0 | 0 | ||

| 28_4 | 1 | 1 | 1 | 0 | ||

| 28_5 | 1 | 1 | 0 | 1 | ||

| 28_6 | 1 | 1 | 1 | 1 |

The presence (1) or absence (0) of each modulatory effect is coded in binary; the names of the models correspond to those in Figure 2.

References

- Aguirre G. K., Singh R., D’Esposito M. (1999). Stimulus inversion and the responses of face and object-sensitive cortical areas. Neuroreport 10, 189–194. doi: 10.1097/00001756-199901180-00036

- Avidan G., Hasson U., Malach R., Behrmann M. (2005). Detailed exploration of face-related processing in congenital prosopagnosia: 2. Functional neuroimaging findings. J. Cogn. Neurosci. 17, 1150–1167. doi: 10.1162/0898929054475145

- Bruce V., Young A. (1986). Understanding face recognition. Br. J. Psychol. 77(Pt 3), 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

- Campbell R. (2011). Speechreading and the Bruce-Young model of face recognition: early findings and recent developments. Br. J. Psychol. 102, 704–710. doi: 10.1111/j.2044-8295.2011.02021.x

- Cohen Kadosh K., Cohen Kadosh R., Dick F., Johnson M. H. (2011). Developmental changes in effective connectivity in the emerging core face network. Cereb. Cortex 21, 1389–1394. doi: 10.1093/cercor/bhq215

- Denys K., Vanduffel W., Fize D., Nelissen K., Peuskens H., van Essen D., Orban G. A. (2004). The processing of visual shape in the cerebral cortex of human and nonhuman primates: a functional magnetic resonance imaging study. J. Neurosci. 24, 2551–2565 10.1523/JNEUROSCI.3569-03.2004

- Desimone R., Albright T. D., Gross C. G., Bruce C. J. (1984). Stimulus-selective properties of inferior temporal neurons in the macaque. J. Neurosci. 4, 2051–2062

- Desseilles M., Schwartz S., Dang-Vu T. T., Sterpenich V., Ansseau M., Maquet P., Phillips C. (2011). Depression alters “top-down” visual attention: a dynamic causal modeling comparison between depressed and healthy subjects. Neuroimage 54, 1662–1668 10.1016/j.neuroimage.2010.08.061

- Dima D., Stephan K. E., Roiser J. P., Friston K. J., Frangou S. (2011). Effective connectivity during processing of facial affect: evidence for multiple parallel pathways. J. Neurosci. 31, 14378–14385 10.1523/JNEUROSCI.2400-11.2011

- Epstein R. A., Higgins J. S., Parker W., Aguirre G. K., Cooperman S. (2005). Cortical correlates of face and scene inversion: a comparison. Neuropsychologia 44, 1145–1158 10.1016/j.neuropsychologia.2005.10.009

- Ewbank M. P., Lawson R. P., Henson R. N., Rowe J. B., Passamonti L., Calder A. J. (2011). Changes in “top-down” connectivity underlie repetition suppression in the ventral visual pathway. J. Neurosci. 31, 5635–5642 10.1523/JNEUROSCI.5013-10.2011

- Fairhall S. L., Ishai A. (2007). Effective connectivity within the distributed cortical network for face perception. Cereb. Cortex 17, 2400–2406 10.1093/cercor/bhl148

- Foley E., Rippon G., Thai N. J., Longe O., Senior C. (2012). Dynamic facial expressions evoke distinct activation in the face perception network: a connectivity analysis study. J. Cogn. Neurosci. 24, 507–520 10.1162/jocn_a_00120

- Fox C. J., Moon S. Y., Iaria G., Barton J. J. (2009). The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. Neuroimage 44, 569–580 10.1016/j.neuroimage.2008.09.011

- Friston K., Mattout J., Trujillo-Barreto N., Ashburner J., Penny W. (2007). Variational free energy and the Laplace approximation. Neuroimage 34, 220–234 10.1016/j.neuroimage.2006.08.035

- Friston K. J., Harrison L., Penny W. (2003). Dynamic causal modelling. Neuroimage 19, 1273–1302 10.1016/S1053-8119(03)00144-7

- George N., Dolan R. J., Fink G. R., Baylis G. C., Russell C., Driver J. (1999). Contrast polarity and face recognition in the human fusiform gyrus. Nat. Neurosci. 2, 574–580 10.1038/9230

- Gobbini M. I., Haxby J. V. (2006). Neural response to the visual familiarity of faces. Brain Res. Bull. 71, 76–82 10.1016/j.brainresbull.2006.08.003

- Gobbini M. I., Haxby J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41 10.1016/j.neuropsychologia.2006.04.015

- Grill-Spector K., Kushnir T., Edelman S., Avidan G., Itzchak Y., Malach R. (1999). Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron 24, 187–203 10.1016/S0896-6273(00)80832-6

- Grill-Spector K., Kushnir T., Edelman S., Itzchak Y., Malach R. (1998a). Cue-invariant activation in object-related areas of the human occipital lobe. Neuron 21, 191–202 10.1016/S0896-6273(00)80526-7

- Grill-Spector K., Kushnir T., Hendler T., Edelman S., Itzchak Y., Malach R. (1998b). A sequence of object-processing stages revealed by fMRI in the human occipital lobe. Hum. Brain Mapp. 6, 316–328

- Grill-Spector K., Kushnir T., Hendler T., Malach R. (2000). The dynamics of object-selective activation correlate with recognition performance in humans. Nat. Neurosci. 3, 837–843 10.1038/77754

- Gschwind M., Pourtois G., Schwartz S., Van De Ville D., Vuilleumier P. (2012). White-matter connectivity between face-responsive regions in the human brain. Cereb. Cortex. [Epub ahead of print].

- Halgren E., Dale A. M., Sereno M. I., Tootell R. B., Marinkovic K., Rosen B. R. (1999). Location of human face-selective cortex with respect to retinotopic areas. Hum. Brain Mapp. 7, 29–37

- Hasselmo M. E., Rolls E. T., Baylis G. C. (1989). The role of expression and identity in the face selective response of neurons in the temporal visual cortex of the monkey. Behav. Brain Res. 32, 203–218 10.1016/S0166-4328(89)80054-3

- Haxby J. V. (2006). Fine structure in representations of faces and objects. Nat. Neurosci. 9, 1084–1086 10.1038/nn0906-1084

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. (Regul. Ed.) 4, 223–233 10.1016/S1364-6613(99)01423-0

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51, 59–67 10.1016/S0006-3223(01)01330-0

- Haxby J. V., Ungerleider L. G., Clark V. P., Schouten J. L., Hoffman E. A., Martin A. (1999). The effect of face inversion on activity in human neural systems for face and object perception. Neuron 22, 189–199 10.1016/S0896-6273(00)80690-X

- Herrington J. D., Taylor J. M., Grupe D. W., Curby K. M., Schultz R. T. (2011). Bidirectional communication between amygdala and fusiform gyrus during facial recognition. Neuroimage 56, 2348–2355 10.1016/j.neuroimage.2011.03.072

- Hoffman E. A., Haxby J. V. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3, 80–84 10.1038/71152

- Ishai A. (2008). Let’s face it: it’s a cortical network. Neuroimage 40, 415–419 10.1016/j.neuroimage.2007.10.040

- Ishai A., Schmidt C. F., Boesiger P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93 10.1016/j.brainresbull.2005.05.027

- Ishai A., Ungerleider L. G., Martin A., Schouten J. L., Haxby J. V. (1999). Distributed representation of objects in the human ventral visual pathway. Proc. Natl. Acad. Sci. U.S.A. 96, 9379–9384 10.1073/pnas.96.16.9379

- Kourtzi Z., Kanwisher N. (2001). Representation of perceived object shape by the human lateral occipital complex. Science 293, 1506–1509 10.1126/science.1061133

- Lerner Y., Epshtein B., Ullman S., Malach R. (2008). Class information predicts activation by object fragments in human object areas. J. Cogn. Neurosci. 20, 1189–1206 10.1162/jocn.2008.20082

- Lerner Y., Hendler T., Ben-Bashat D., Harel M., Malach R. (2001). A hierarchical axis of object processing stages in the human visual cortex. Cereb. Cortex 11, 287–297 10.1093/cercor/11.4.287

- Li J., Liu J., Liang J., Zhang H., Zhao J., Rieth C. A., Huber D. E., Li W., Shi G., Ai L., Tian J., Lee K. (2010). Effective connectivities of cortical regions for top-down face processing: a dynamic causal modeling study. Brain Res. 1340, 40–51 10.1016/j.brainres.2010.04.044

- MacKay D. J. C. (2003). Information Theory, Inference, and Learning Algorithms. Cambridge: Cambridge University Press

- Malach R., Reppas J. B., Benson R. R., Kwong K. K., Jiang H., Kennedy W. A., Ledden P. J., Brady T. J., Rosen B. R., Tootell R. B. (1995). Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc. Natl. Acad. Sci. U.S.A. 92, 8135–8139 10.1073/pnas.92.18.8135

- Marco G., de Bonis M., Vrignaud P., Henry-Feugeas M. C., Peretti I. (2006). Changes in effective connectivity during incidental and intentional perception of fearful faces. Neuroimage 30, 1030–1037 10.1016/j.neuroimage.2005.10.001

- Marotta J. J., Genovese C. R., Behrmann M. (2001). A functional MRI study of face recognition in patients with prosopagnosia. Neuroreport 12, 1581–1587 10.1097/00001756-200106130-00014

- Michel F., Poncet M., Signoret J. L. (1989). Are the lesions responsible for prosopagnosia always bilateral? Rev. Neurol. (Paris) 145, 764–770

- Morris J. S., Frith C. D., Perrett D. I., Rowland D., Young A. W., Calder A. J., Dolan R. J. (1996). A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383, 812–815 10.1038/383389a0

- Nagy K., Greenlee M. W., Kovács G. (2011). Sensory competition in the face processing areas of the human brain. PLoS ONE 6, e24450. 10.1371/journal.pone.0024450

- Nagy K., Zimmer M., Greenlee M. W., Kovács G. (2009). The fMRI correlates of multi-face adaptation. Perception 38(ECVP Abstract Suppl.) 77

- Nasanen R. (1999). Spatial frequency bandwidth used in the recognition of facial images. Vision Res. 39, 3824–3833 10.1016/S0042-6989(99)00096-6

- Penny W. D., Stephan K. E., Daunizeau J., Rosa M. J., Friston K. J., Schofield T. M., Leff A. P. (2010). Comparing families of dynamic causal models. PLoS Comput. Biol. 6, e1000709. 10.1371/journal.pcbi.1000709

- Perrett D. I., Harries M. H., Mistlin A. J., Hietanen L. K., Bevan R. (1990). Social signals analyzed at the single cell level: someone’s looking at me, something touched me, something moved! Int. J. Comp. Psychol. 4, 25–55

- Perrett D. I., Rolls E. T., Caan W. (1982). Visual neurones responsive to faces in the monkey temporal cortex. Exp. Brain Res. 47, 329–342 10.1007/BF00239352

- Perrett D. I., Smith P. A., Potter D. D., Mistlin A. J., Head A. S., Milner A. D., Jeeves M. A. (1985). Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc. R. Soc. Lond. B Biol. Sci. 223, 293–317 10.1098/rspb.1985.0003

- Puce A., Allison T., Bentin S., Gore J. C., McCarthy G. (1998). Temporal cortex activation in humans viewing eye and mouth movements. J. Neurosci. 18, 2188–2199

- Puce A., Allison T., Gore J. C., McCarthy G. (1995). Face-sensitive regions in human extrastriate cortex studied by functional MRI. J. Neurophysiol. 74, 1192–1199

- Rossion B., Caldara R., Seghier M., Schuller A. M., Lazeyras F., Mayer E. (2003a). A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain 126(Pt 11), 2381–2395 10.1093/brain/awg241

- Rossion B., Schiltz C., Crommelinck M. (2003b). The functionally defined right occipital and fusiform “face areas” discriminate novel from visually familiar faces. Neuroimage 19, 877–883 10.1016/S1053-8119(03)00105-8

- Rossion B., Hanseeuw B., Dricot L. (2012). Defining face perception areas in the human brain: a large-scale factorial fMRI face localizer analysis. Brain Cogn. 79, 138–157 10.1016/j.bandc.2012.01.001

- Rotshtein P., Henson R. N. A., Treves A., Driver J., Dolan R. J. (2005). Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113 10.1038/nn1370

- Sawamura H., Orban G. A., Vogels R. (2006). Selectivity of neuronal adaptation does not match response selectivity: a single-cell study of the fMRI adaptation paradigm. Neuron 49, 307–318 10.1016/j.neuron.2005.11.028

- Sergent J., Ohta S., MacDonald B. (1992). Functional neuroanatomy of face and object processing. A positron emission tomography study. Brain 115(Pt 1), 15–36 10.1093/brain/115.1.15

- Sorger B., Goebel R., Schiltz C., Rossion B. (2007). Understanding the functional neuroanatomy of acquired prosopagnosia. Neuroimage 35, 836–852 10.1016/j.neuroimage.2006.09.051

- Stephan K. E., Penny W. D., Daunizeau J., Moran R. J., Friston K. J. (2009). Bayesian model selection for group studies. Neuroimage 46, 1004–1017 10.1016/j.neuroimage.2009.03.025

- Stephan K. E., Penny W. D., Moran R. J., den Ouden H. E., Daunizeau J., Friston K. J. (2010). Ten simple rules for dynamic causal modeling. Neuroimage 49, 3099–3109 10.1016/j.neuroimage.2009.11.015

- Sugase Y., Yamane S., Ueno S., Kawano K. (1999). Global and fine information coded by single neurons in the temporal visual cortex. Nature 400, 869–873 10.1038/23703

- Wiggett A. J., Downing P. E. (2008). The face network: overextended? (Comment on: “Let’s face it: it’s a cortical network” by Alumit Ishai). Neuroimage 40, 420–422 10.1016/j.neuroimage.2007.11.061

- Winston J. S., Henson R. N. A., Fine-Goulden M. R., Dolan R. J. (2004). fMRI-adaptation reveals dissociable neural representations of identity and expression in face perception. J. Neurophysiol. 92, 1830–1839 10.1152/jn.00155.2004

- Young A. W., Bruce V. (2011). Understanding person perception. Br. J. Psychol. 102, 959–974 10.1111/j.2044-8295.2011.02045.x

- Young M. P., Yamane S. (1992). Sparse population coding of faces in the inferotemporal cortex. Science 256, 1327–1331 10.1126/science.1598577

- Yovel G., Kanwisher N. (2005). The neural basis of the behavioral face-inversion effect. Curr. Biol. 15, 2256–2262 10.1016/j.cub.2005.10.072