Abstract

Differences in transduction and transmission latencies of visual, auditory and tactile events cause corresponding differences in simple reaction time. As reaction time is usually measured in unimodal blocks, it is unclear whether such latency differences also apply when observers monitor multiple sensory channels. We investigate this by comparing reaction time when attention is focussed on a single modality, and when attention is divided between multiple modalities. Results show that tactile reaction time is unaffected by dividing attention, whilst visual and auditory reaction times are significantly and asymmetrically increased. These findings show that tactile information is processed preferentially by the nervous system under conditions of divided attention, and suggest that tactile events may be processed pre-attentively.

Keywords: reaction time, attention, latency, vision, audition, touch

Introduction

From an ecological viewpoint, the time taken for an organism to react to an external event can be of great importance. For example, consider an animal navigating an unfamiliar, potentially hostile environment. The goal of survival clearly requires that any sudden external event is processed by the nervous system as rapidly as possible so that appropriate action may be taken. The physiology of the sensory processing mechanisms has a marked influence on the speed of this process. For example, the process of converting sound waves into neural signals at the cochlea takes approximately 40μs [1]. By comparison, transduction of tactile events by human mechanoreceptors takes approximately 2ms [2], and transduction of visual events at the retina approximately 50ms in photopic conditions [3]. The axonal distance travelled by a neural signal is also an important factor in sensory latency, with shorter distances typically corresponding to shorter latencies - for example, tactile latency increases linearly with increasing distance between the site of stimulation and somatosensory cortex [4]. In order to measure the perceptual time course of these transduction and transmission processes, a reaction time (the time taken for an observer to respond to a sensory event) task is typically employed.

As expected when considering the physiological factors outlined above, the majority of literature indicates that auditory simple reaction time (that measured in the absence of any cognitive demand of the observer) is shorter than its visual counterpart by approximately 40-50ms [5,6]. Reaction time to tactile stimuli (although subject to variation due to the bodily region(s) stimulated) is usually found to be intermediate between visual and auditory values when the stimuli are delivered to an arm or hand [7,8]. These intermodal differences in simple reaction time are usually attributed to the transduction and neural factors discussed above [9,10].

Such differences in sensory latency suggest that external events that activate the auditory system offer an ecological advantage in that they are available to the perceptual system more rapidly than visual or tactile events. However, simple reaction time data are traditionally obtained when attention is focused on a single modality. Such a task may therefore not be the best predictor of sensory latency in a dynamic, multisensory environment where survival depends on rapid detection of all sensory events, irrespective of which sensory system is stimulated. Simple reaction time measured whilst attention is divided between modalities thus provides a measure of sensory latency more applicable to a ‘real-world’ environment.

In this context, it is likely that tactile events are of the most urgent interest to an organism as, by definition, the cause of such an event is always in physical contact with the organism and is potentially an immediate threat. In comparison, distant events will stimulate the visual and/or auditory modalities first, allowing the organism more time to execute an appropriate response to such an event. Therefore, a clear ecological advantage would be conferred if tactile processing were quickest when attention is divided across the sensory modalities. However, it is also arguable that both visual and auditory cues are capable of conveying uniquely important sensory information. Visual sensory input provides the most accurate cues to spatial location under normal circumstances, whilst audition is the only sense able to signal the presence of a distal event occurring outside the visual field. We sought to investigate this issue by manipulating the spread of attention across the sensory modalities, with at least three possible outcomes being anticipated.

Firstly, it is possible that sensory latency increases (relative to baseline simple reaction time measured in unimodal blocks) in all of the modalities tested. If reaction time were to increase by the same amount in all three modalities, this would suggest shared attentional resources for vision, audition and touch in the context of simple reaction time. Previous work is consistent with this notion. For example, Post and Chapman [11] demonstrated that visual and tactile reaction time is slowed by 7-10% when observers are warned that either signal is equally likely compared to conditions where observers are cued to the modality of the upcoming trial. If this finding were to generalise to the other crossmodal stimulus combinations, we would expect that reaction time would increase by approximately the same proportion of unimodal simple reaction time in each modality in all combinations. This explanation would also suggest that the reaction time cost would be greatest when attention was divided between all three modalities (rather than two, as in [11]), due to the finite attentional resources available to observers [12].

A second possible outcome is that auditory and tactile simple reaction time increase significantly under conditions of divided attention in comparison to visual. This possibility is suggested by the existence of the so-called visual dominance effect [13]. In his original study, Colavita presented simultaneous audiovisual (AV) stimuli on a subset of trials and found that such trials were perceived as ‘vision alone’ trials, whilst the classic latency advantage of audition was no longer observed [13]. A recent renewal of interest in this phenomenon has demonstrated the robustness of the effect in the original audiovisual context (e.g., [14,15]), and analogous effects in the visuotactile (VT) domain [15,16]. Conversely, in the audiotactile (AT) domain neither modality appears to ‘dominate’ the other [15,17]. These findings are likely to reflect the fact that vision is the primary sense in primates, and/or that observers cannot equally divide their attention between vision and any other sense(s) due to an inherent bias toward vision. It has been suggested that such bias may be due to inherently less alerting properties of visual events compared to auditory and tactile events, which observers compensate for by unconsciously allocating disproportionate processing resources to vision [18]. Interestingly, these sensory dominance studies utilised a choice reaction time task, where observers had to respond differently according to which stimulus (or stimulus combination) they perceived on each trial. This extra cognitive demand means that choice reaction time values often bear no relation to the transduction and neural latency values already discussed. For example, visual and auditory choice reaction time have been shown to be indistinguishable [13]. It therefore remains to be seen whether visual input also ‘dominates’ in a simple reaction time paradigm when attention is divided between the modalities.

The third potential outcome is that the latency of tactile events is unaffected by division of attention across sensory modalities. Such an outcome would be consistent with previous suggestions that detection of tactile events requires minimal neural processing resources relative to visual or auditory events [19], and that tactile events are inherently alerting in nature [12]. This outcome would also suggest a degree of independence of attentional resources in the three sensory modalities, consistent with previous audiovisual data obtained using a discrimination (rather than reaction time) task [20].

Methods

Five trained adult observers, including the authors, took part in the study. All had normal or corrected-to-normal vision and no reported hearing or tactile impairments, and all participated on the basis of informed consent. The experiments were conducted in accordance with the Declaration of Helsinki and were approved by the local ethics committee.

Stimuli were a 10ms flash of an LED (subtending 1.05°, luminous intensity of 600cd/m²) positioned in front of observers, a 10ms square-wave windowed white noise burst delivered binaurally over headphones (70dB SPL), and a 10ms ‘tap’ delivered by an electrical solenoid to the left forefinger (operational noise from which was rendered inaudible to observers). All stimuli were grossly suprathreshold, although no specific attempt to match the stimuli was made as the dimension in which to match stimuli differing in sensory modality is currently unclear [21]. The onset timing of all stimuli was verified with a multiple storage oscilloscope. All stimuli were controlled by custom-written software run in MATLAB (MathWorks, U.S.A.) on a Dell PC.

A total of seven conditions were tested. In each of three unimodal conditions (visual, auditory and tactile), all trials within an experimental run were of the same modality; thus, the certainty of the stimulus modality on any trial was 100%. In each of four crossmodal conditions, the stimuli were combined within experimental runs with corresponding reductions in stimulus certainty. The stimulus combinations were audiovisual (AV), audiotactile (AT), visuotactile (VT), all with a stimulus certainty of 50% on each trial, and a combination of all three stimuli which had a stimulus certainty of 33% on each trial. In all of the conditions each trial comprised the presentation of a single stimulus. Because of this, each visual, auditory, or tactile trial remained physically identical in the uni- and crossmodal conditions despite manipulations in the spread of observers’ attention.
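The seven conditions and their stimulus certainties follow directly from taking every non-empty combination of the three modalities. The short Python sketch below illustrates that enumeration; the names `MODALITIES` and `build_conditions` are hypothetical and are not taken from the authors' MATLAB software.

```python
from itertools import combinations

# The three modalities tested in the study.
MODALITIES = ("visual", "auditory", "tactile")


def build_conditions(modalities=MODALITIES):
    """Return every non-empty modality combination with its stimulus certainty.

    Stimulus certainty is the probability that a given trial belongs to any
    one of the modalities in the run: 1 / (number of modalities in the run).
    """
    conditions = []
    for size in range(1, len(modalities) + 1):
        for subset in combinations(modalities, size):
            conditions.append({"modalities": subset, "certainty": 1.0 / size})
    return conditions


CONDITIONS = build_conditions()

for condition in CONDITIONS:
    label = "+".join(condition["modalities"])
    print(f"{label}: {condition['certainty']:.0%} stimulus certainty")
```

This yields three unimodal conditions at 100%, three bimodal conditions at 50%, and one trimodal condition at 33%, matching the design described above.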

Prior to each run, observers were instructed to focus their attention on the single modality to be tested (unimodal conditions), or to divide their attention equally between the modalities to be tested (crossmodal conditions). On each trial, observers had to respond (via a computer mouse) as soon as they detected the presence of any stimulus. No modality identification or response choice element was present in the task, so that the response was the same regardless of which event was detected. In the crossmodal conditions, the order of presentation of different modalities was determined randomly. Crucially, and in contrast to previous studies employing a similar methodology [7,11], observers were not cued as to the modality of the next trial. This step was taken to ensure that attention remained equally divided between the modalities at all times. Such cuing could also unconsciously bias observers’ attention toward the modality used to present the cue [22]. It could be argued that as well as attending to multiple sensory modalities, observers were also forced to attend to multiple spatial locations. However, this was considered desirable in the present study as such a situation is closer to that in a natural, unfamiliar environment, where an organism needs to monitor all sensory channels and multiple regions of external space.

Following the observers’ response, the next trial was initiated after a delay which varied randomly between 250 and 750ms. Each experimental run began with five ‘practice’ trials, which were excluded from analysis, followed by 40 trials in each of the modalities tested. Therefore, an experimental run in the unimodal conditions (100% stimulus certainty) featured 40 trials, and the crossmodal conditions a total of 80 (50% stimulus certainty) or 120 (33% stimulus certainty) trials. Each observer performed five experimental runs of each of the conditions, making a total of 200 trials for each modality in each of the conditions per observer. Reaction time values of less than 100ms were treated as anticipatory [23] and the trial was repeated.
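The run structure described above can be sketched in Python as follows. This is an illustration only, not the authors' implementation: the function names are hypothetical, and three details are assumptions not stated in the text, namely that the random order was produced by shuffling a balanced trial list, that practice trials were drawn at random from the modalities in the run, and that the inter-trial delay was uniformly distributed.

```python
import random

PRACTICE_TRIALS = 5            # excluded from analysis
TRIALS_PER_MODALITY = 40       # analysed trials per modality in each run
DELAY_RANGE_MS = (250, 750)    # delay between response and next trial
ANTICIPATION_CUTOFF_MS = 100   # faster responses are treated as anticipatory


def make_run(modalities, rng):
    """Return (practice, main) trial lists for one experimental run."""
    # Assumption: practice trials are sampled at random from the run's modalities.
    practice = [rng.choice(modalities) for _ in range(PRACTICE_TRIALS)]
    # Assumption: a balanced list (40 per modality) is shuffled into random order.
    main = [m for m in modalities for _ in range(TRIALS_PER_MODALITY)]
    rng.shuffle(main)
    return practice, main


def next_delay_ms(rng):
    """Draw the delay before the next trial (assumed uniform over the range)."""
    return rng.uniform(*DELAY_RANGE_MS)


def is_anticipatory(reaction_time_ms):
    """True if the response is too fast to be a reaction and the trial is repeated."""
    return reaction_time_ms < ANTICIPATION_CUTOFF_MS


rng = random.Random(1)  # fixed seed so the sketch is reproducible
practice, main = make_run(("visual", "auditory", "tactile"), rng)
print(len(practice), len(main))  # 5 practice trials, 120 analysed trials
```

Five such runs per condition give the 200 analysed trials per modality, per condition, per observer stated above.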

Results

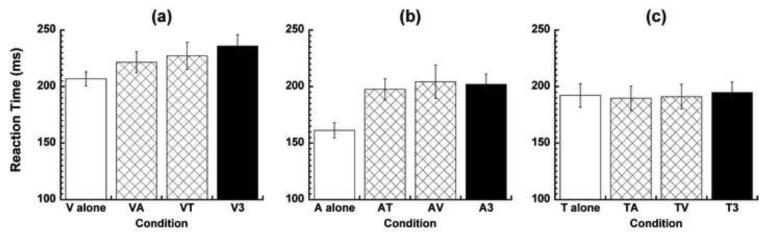

Mean reaction time values pooled across observers are displayed in Figure 1 (a-c, corresponding to visual, auditory and tactile reaction time respectively). Repeated-measures ANOVA on unimodal reaction time values (unfilled bars) found an expected and highly significant effect of sensory modality (F(2, 8) = 40.47, p < 0.001), reflecting the aforementioned differential transduction and neural latencies. For the visual modality (Figure 1a), the mean simple reaction time measured in unimodal blocks was 206.9ms, but this increased as attention was divided between multiple sensory modalities and stimulus certainty decreased (hatched and filled bars). A repeated-measures ANOVA confirmed that these differences in reaction time were significant (F(3, 12) = 13.50, p < 0.001). Post-hoc analysis corrected for multiple comparisons (Tukey’s HSD) showed that the unimodal visual condition differed from all other visual conditions (p < 0.05). Visual reaction times when attention was divided between vision and audition (shown in Figure 1a as ‘VA’) and when attention was divided between all three modalities (‘V3’; filled bar) also differed significantly from each other (p < 0.05). No other comparisons reached significance (p > 0.05).

Figure 1 (a-c).

Mean reaction time values (n = 5) in the visual (a), auditory (b) and tactile (c) modalities. Data measured in unimodal blocks (‘alone’) are represented by unfilled bars, in crossmodal blocks where attention is divided between two modalities by hatched bars, and in crossmodal blocks where attention is divided between all three modalities (‘3’) by filled bars. For the hatched bars, the letters indicate the modalities observers divided their attention between, with the first letter indicating the modality represented by that bar; e.g., the AV bar (Figure 1b) represents the mean auditory reaction time when observers’ attention was divided between vision and audition. Error bars represent one SEM either side of the parameter values.

For the auditory data (Figure 1b), the mean simple reaction time measured in unimodal blocks was 161.3ms. However, as with vision, reaction time increased when attention was divided across the sensory modalities (F(3, 12) = 8.27, p < 0.005). Post-hoc analysis revealed significant differences between the unimodal auditory condition and all crossmodal auditory conditions (p < 0.05), with no other comparisons reaching significance (p > 0.05).

For the tactile condition (Figure 1c), mean simple reaction time measured in unimodal blocks was 192.3ms. In contrast to the visual and auditory results, tactile reaction time appeared invariant across the unimodal and crossmodal conditions. Repeated-measures ANOVA confirmed that no significant differences in reaction time were apparent between any of the tactile conditions (F(3, 12) = 2.36, p > 0.05). In other words, division of attention between multiple sensory modalities does not affect the speeded detection of suprathreshold tactile events as measured by simple reaction time.

When attention was divided between all three sensory channels, reaction times differed significantly between the modalities (F(2, 8) = 17.06, p < 0.01). This effect arose due to differences between vision and both other modalities (p < 0.01), with no difference between auditory and tactile values (p > 0.05). Therefore, when attention is divided between the three modalities, auditory and tactile events are available to the nervous system at comparable latency.
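For readers unfamiliar with the one-way repeated-measures ANOVA used in the analyses above, the following Python sketch shows how the F ratio and its degrees of freedom are obtained from an observers × conditions table. It is a generic textbook computation, not the authors' analysis code; the function name is hypothetical and the example values are toy numbers, not data from this study. With five observers, four conditions give (3, 12) degrees of freedom and three conditions give (2, 8), as in the statistics reported here.

```python
def rm_anova_oneway(table):
    """One-way repeated-measures ANOVA.

    `table` is a list of rows, one row per observer, one column per condition.
    Returns (F, df_conditions, df_error).
    """
    n = len(table)       # number of observers
    k = len(table[0])    # number of conditions
    grand_mean = sum(sum(row) for row in table) / (n * k)

    condition_means = [sum(row[j] for row in table) / n for j in range(k)]
    observer_means = [sum(row) / k for row in table]

    ss_total = sum((x - grand_mean) ** 2 for row in table for x in row)
    ss_conditions = n * sum((m - grand_mean) ** 2 for m in condition_means)
    ss_observers = k * sum((m - grand_mean) ** 2 for m in observer_means)
    # Error term: variability left after removing condition and observer effects.
    ss_error = ss_total - ss_conditions - ss_observers

    df_conditions = k - 1
    df_error = (k - 1) * (n - 1)
    f_ratio = (ss_conditions / df_conditions) / (ss_error / df_error)
    return f_ratio, df_conditions, df_error


# Toy example (NOT study data): three observers, two conditions.
toy = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
f_ratio, df1, df2 = rm_anova_oneway(toy)
print(f"F({df1}, {df2}) = {f_ratio:.2f}")
```

Because observer means are removed from the error term, consistent within-observer changes can reach significance even when baseline reaction times differ widely between observers.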

The mean cost to reaction time of dividing attention between sensory modalities ranged from 14.7 to 29.1ms (7.1 to 14.1%) in the visual modality, and from 36.4 to 43.0ms (22.6 to 26.7%) in the auditory modality. Thus, auditory detection latency suffers disproportionately as attention is divided between the modalities.
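The percentage costs quoted above are the absolute costs expressed relative to the unimodal baseline for each modality (206.9ms for vision, 161.3ms for audition). A minimal Python sketch of that arithmetic, using only values reported in this section and a hypothetical helper name, is:

```python
# Unimodal (baseline) mean reaction times reported in the Results, in ms.
UNIMODAL_RT_MS = {"visual": 206.9, "auditory": 161.3}

# Smallest and largest mean cost of dividing attention, in ms.
COST_RANGE_MS = {"visual": (14.7, 29.1), "auditory": (36.4, 43.0)}


def percent_cost(cost_ms, baseline_ms):
    """Express a reaction time cost as a percentage of the unimodal baseline."""
    return 100.0 * cost_ms / baseline_ms


for modality, (low_ms, high_ms) in COST_RANGE_MS.items():
    baseline = UNIMODAL_RT_MS[modality]
    low_pct = percent_cost(low_ms, baseline)
    high_pct = percent_cost(high_ms, baseline)
    print(f"{modality}: {low_pct:.1f}% to {high_pct:.1f}% of {baseline}ms")
```

Expressing the cost proportionally makes the asymmetry clear: audition has the shortest baseline but incurs the largest absolute increase, so its relative cost is roughly two to three times that of vision.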

Discussion

In this study, we investigated the effects of dividing attention between sensory channels on the speeded detection of visual, auditory and tactile events. No significant differences in tactile reaction time were found in any conditions; however, visual and auditory reaction time were both slowed when observers were required to divide their attention between multiple sensory channels. Auditory latency was disproportionately slowed relative to visual.

Our results expand upon the large body of literature examining the visual dominance effect first described by Colavita [13]. Crucially, visual dominance studies employ a choice reaction time paradigm in which observers must identify the stimulus modality on each trial and choose their response accordingly. Measures of reaction time in such studies are more likely to reflect the time course of this decision process than simply the detection latency of sensory events. For example, no significant differences were found between visual, auditory and tactile choice reaction time (approximately 500ms in each modality) when attention was divided equally between these modalities [22]. The lack of response choice in the present study suggests that our data are more likely to reflect lower-level perceptual latencies in the visual, auditory and tactile systems under conditions of divided attention. The results of the present study suggest that tactile sensory input is regarded as most urgent by the nervous system and hence is immune to latency costs consequent to divided attention. The finding that manipulations of attention fail to modulate tactile processing latency implies that tactile events may be processed ‘pre-attentively’ in an arguably more low-level manner than their visual and auditory counterparts. The same attentional manipulations cause audition to suffer greater latency costs than vision, which may point toward a processing hierarchy whereby the nervous system prioritises detection of tactile over visual events, with auditory events perhaps receiving a relatively low priority.

As mentioned earlier, external events that induce tactile sensation afford little or no response time to potential threats. The results of the current study show that tactile perception is unique in maintaining fixed levels of sensory latency despite changes in attentional load – a finding with clear ecological benefits.

Conclusion

The speeded detection of unimodal tactile events is unaffected by dividing attention between multiple sensory channels and regions of external space, whilst modality-specific latency costs are introduced to vision and audition. These results are consistent with preferential processing of tactile events by the nervous system.

Acknowledgements

This work was supported by the Wellcome Trust, the Leverhulme Trust, and the College of Optometrists (U.K.).

REFERENCES

- [1] Corey DP, Hudspeth AJ. Response latency of vertebrate hair cells. Biophys J. 1979;26:499–506. doi: 10.1016/S0006-3495(79)85267-4.

- [2] Mizobuchi K, Kuwabara S, Toma S, Nakajima Y, Ogawara K, Hattori T. Single unit responses of human cutaneous mechanoreceptors to air-puff stimulation. Clin Neurophysiol. 2000;111:1577–1581. doi: 10.1016/s1388-2457(00)00368-0.

- [3] Schnapf JL, Kraft TW, Baylor DA. Spectral sensitivity of human cone photoreceptors. Nature. 1987;325:439–441. doi: 10.1038/325439a0.

- [4] Harrar V, Harris LR. Simultaneity constancy: detecting events with touch and vision. Exp Brain Res. 2005;166:465–473. doi: 10.1007/s00221-005-2386-7.

- [5] Rutschmann J, Link R. Perception of Temporal Order of Stimuli Differing in Sense Mode and Simple Reaction Time. Percept Mot Skills. 1964;18:345–352. doi: 10.2466/pms.1964.18.2.345.

- [6] Arrighi R, Alais D, Burr D. Neural latencies do not explain the auditory and audio-visual flash-lag effect. Vision Res. 2005;45:2917–2925. doi: 10.1016/j.visres.2004.09.020.

- [7] Boulter LR. Attention and reaction times to signals of uncertain modality. J Exp Psychol Hum Percept Perform. 1977;3:379–388. doi: 10.1037//0096-1523.3.3.379.

- [8] Todd JW. Reaction to multiple stimuli. The Science Press; New York: 1912.

- [9] Brebner JMT, Welford AT. Introduction: An Historical Background Sketch. In: Welford AT, editor. Reaction Times. Academic Press Inc.; London: 1980.

- [10] Elliott R. Simple visual and simple auditory reaction time: A comparison. Psychonomic Science. 1968;10:335–336.

- [11] Post LJ, Chapman CE. The Effects of Cross-Modal Manipulations of Attention on the Detection of Vibrotactile Stimuli in Humans. Somatosens Mot Res. 1991;8:149–157. doi: 10.3109/08990229109144739.

- [12] Posner MI. Chronometric Explorations of Mind. Erlbaum; Hillsdale, NJ: 1978.

- [13] Colavita FB. Human Sensory Dominance. Percept Psychophys. 1974;16:409–412.

- [14] Koppen C, Spence C. Seeing the light: exploring the Colavita visual dominance effect. Exp Brain Res. 2007;180:737–754. doi: 10.1007/s00221-007-0894-3.

- [15] Hecht D, Reiner M. Sensory dominance in combinations of audio, visual and haptic stimuli. Exp Brain Res. 2009;193:307–314. doi: 10.1007/s00221-008-1626-z.

- [16] Hartcher-O’Brien J, Gallace A, Krings B, Koppen C, Spence C. When vision ‘extinguishes’ touch in neurologically-normal people: extending the Colavita visual dominance effect. Exp Brain Res. 2008;186:643–658. doi: 10.1007/s00221-008-1272-5.

- [17] Occelli V, Hartcher-O’Brien J, Spence C, Zampini M. Is the Colavita effect an exclusively visual phenomenon? International Multisensory Research Forum; Hamburg. 2008.

- [18] Posner MI, Nissen MJ, Klein RM. Visual Dominance - Information-Processing Account of Its Origins and Significance. Psychol Rev. 1976;83:157–171.

- [19] Gregory RL. Origin of Eyes and Brains. Nature. 1967;213:369–372. doi: 10.1038/213369a0.

- [20] Alais D, Morrone C, Burr D. Separate attentional resources for vision and audition. Proceedings of the Royal Society B-Biological Sciences. 2006;273:1339–1345. doi: 10.1098/rspb.2005.3420.

- [21] Spence C, Shore DI, Klein RM. Multisensory prior entry. Journal of Experimental Psychology-General. 2001;130:799–832. doi: 10.1037//0096-3445.130.4.799.

- [22] Spence C, Nicholls MER, Driver J. The cost of expecting events in the wrong sensory modality. Percept Psychophys. 2001;63:330–336. doi: 10.3758/bf03194473.

- [23] Jeeves MA, Moes P. Interhemispheric transfer time differences related to aging and gender. Neuropsychologia. 1996;34:627–636. doi: 10.1016/0028-3932(95)00157-3.