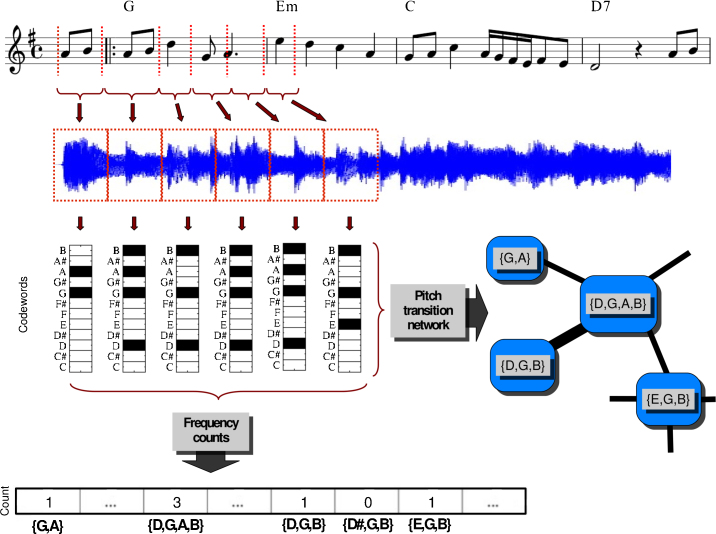

Figure 1. Schematic summary of the method, illustrated with pitch data.

The dataset contains beat-based music descriptions of the audio rendition of a musical piece or score (G, Em, and D7 above the staff denote chords). For pitch, these descriptions reflect the harmonic content of the piece<sup>15</sup> and encapsulate all notes sounding in a given time interval into a compact representation<sup>11,12</sup>, independently of their articulation. Specifically, they consist of the relative energies of the 12 pitch classes, where a pitch class is the set of all pitches that are a whole number of octaves apart (e.g. notes C1, C2, and C3 all collapse to pitch class C). All descriptions are encoded into music codewords, using a binary discretization in the case of pitch. Codewords are then used both for frequency counts and as the nodes of a complex network whose links represent transitions between consecutive codewords.
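The pitch pipeline can be illustrated with a minimal Python sketch. It rests on several assumptions that are not taken from the dataset itself: each beat is given as hypothetical `(midi_pitch, energy)` pairs rather than descriptors computed from audio, relative energies are obtained by dividing by the beat's maximum, and the binarization threshold of 0.5 is chosen purely for illustration. The helper names (`pitch_class_profile`, `binarize`, `codeword`, `analyze`) are likewise hypothetical. The sketch folds notes into 12 pitch classes, binarizes them, packs the bits into an integer codeword, and then counts codeword frequencies and transitions between consecutive codewords.

```python
from collections import Counter

NUM_PITCH_CLASSES = 12


def pitch_class_profile(notes):
    """Fold one beat's (midi_pitch, energy) pairs into 12 relative energies.

    Assumed input: MIDI note numbers, where octave-equivalent notes differ
    by multiples of 12 (e.g. C1 = 24, C2 = 36, C3 = 48 all map to class C = 0).
    """
    profile = [0.0] * NUM_PITCH_CLASSES
    for midi_pitch, energy in notes:
        profile[midi_pitch % NUM_PITCH_CLASSES] += energy
    peak = max(profile)
    if peak > 0:
        # Relative energies: normalize by the strongest pitch class (assumption).
        profile = [value / peak for value in profile]
    return profile


def binarize(profile, threshold=0.5):
    """Assumed binary discretization: 1 if the relative energy reaches the threshold."""
    return tuple(1 if value >= threshold else 0 for value in profile)


def codeword(bits):
    """Pack the 12 binary values into one integer (pitch class C is the most significant bit)."""
    word = 0
    for bit in bits:
        word = (word << 1) | bit
    return word


def analyze(beats):
    """Return the codeword sequence, codeword frequencies, and weighted transitions."""
    words = [codeword(binarize(pitch_class_profile(beat))) for beat in beats]
    counts = Counter(words)
    # Directed links between consecutive codewords, weighted by how often each occurs.
    transitions = Counter(zip(words, words[1:]))
    return words, counts, transitions


if __name__ == "__main__":
    g_major = [(67, 1.0), (71, 1.0), (74, 1.0)]            # G, B, D
    e_minor = [(64, 1.0), (67, 1.0), (71, 1.0)]            # E, G, B
    d_seventh = [(62, 1.0), (66, 1.0), (69, 1.0), (72, 1.0)]  # D, F#, A, C
    words, counts, transitions = analyze([g_major, g_major, e_minor, d_seventh, g_major])
    print(words)
    print(counts)
    print(transitions)
```

Packing the binary vector into an integer is only one convenient encoding; any injective mapping from the 2^12 possible binary vectors to labels would serve equally well as node identifiers for the transition network.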