Abstract

A rapid response to environmental threat is crucial for survival and requires appropriate allocation of attention toward its location. Visual search paradigms have provided evidence for the enhanced capture of attention by threatening faces. In two EEG experiments, we sought to determine whether the detection of threat requires complete faces or only the salient features underlying the facial expression. Using the N2pc component as an electrophysiological indicator of attentional selection, we investigated participants searching for either a complete discrepant schematic threatening or friendly face within an array of neutral faces, or single features (eyebrows and eyes vs. eyebrows) of threatening and friendly faces. Threatening faces were detected faster than friendly faces. Accordingly, threatening angry targets showed a more pronounced occipital N2pc between 200 and 300 ms than friendly facial targets. Moreover, threat-related configurations were detected more rapidly than friendly-related configurations when the configuration contained eyebrows and eyes. No differences were observed when only a single feature (eyebrows) had to be detected. Threat-related and friendly-related features did not differ in the N2pc in any configuration condition. Taken together, the findings provide direct electrophysiological support for rapid prioritized attention to facial threat, an advantage that seems not to be driven by low-level visual features.

Keywords: Threat detection advantage, Visual attention, Visual search, Face-in-the-crowd effect, Schematic faces, Low-level features, N2pc, ERP

Introduction

Focusing attention on potential threat cues in the environment is a fundamental mechanism that serves an evolutionary survival function. Evidence for such prioritized attention allocation comes from a variety of experimental studies using human fear conditioning (for review see Öhman and Mineka, 2001), perceptual encoding (Schupp et al., 2004), visual probe tasks (Armony and Dolan, 2002; Pourtois et al., 2004), attentional blink (Anderson and Phelps, 2001; Maratos et al., 2008) or visual search (for review see Öhman et al., 2009). This preferential processing of threat stimuli can be considered from an evolutionary perspective: Öhman and Mineka (2001) proposed a specialized fear module centered in the amygdala (LeDoux, 1996), which is activated by threat-related stimuli in order to promote automatic, fast activation of defense. Indeed, an enhanced contribution of the human amygdala was found during the processing of highly salient (threatening) stimuli, supporting the proposed model (e.g., Anderson and Phelps, 2001; Carlson, Reinke, & Habib, 2009).

The faster detection of threatening facial expressions relative to friendly faces in a crowd of distractor faces (a phenomenon labeled the “anger superiority effect”) has been demonstrated in a variety of visual search studies using either schematic faces (Öhman et al., 2001; Calvo et al., 2006; Fox et al., 2000; Juth et al., 2005; Tipples et al., 2002; Schubö et al., 2006) or real faces (Horstmann and Bauland, 2006; Fox and Damjanovic, 2006; Gilboa-Schechtman et al., 1999; Pinkham et al., 2010). Interestingly, results focusing on configural stimuli revealed that specific “key” features are relevant for the sustained capture of attention in visual search. Using simple low-level features, such as a downward-pointing “V” shape (eyebrows), which is similar to the geometric configuration of the schematic faces in angry expression (Öhman et al., 2001), Larson and colleagues (2007) found faster detection of downward-pointing “V”s, as well as of triangles with a downward-pointing vertex, compared to identical shapes pointing upward. This shape also activates structures implicated in the processing of emotionally significant and threatening stimuli, such as the amygdala, the subgenual anterior cingulate cortex (sACC), the fusiform gyrus, and visual sensory regions (Larson et al., 2009). Together with behavioral data showing that V-shaped figures were rated as more threatening (Lundqvist et al., 1999; Lundqvist et al., 2004) than shapes with the V-angle pointing upward, this finding from Larson et al. (2009) suggests that visual threat can be conveyed by a simple acontextual geometric configuration. However, other findings indicate that facilitated search for V-shapes occurs only when the V-shapes are integrated in the context of a face; that is, rapid attention allocation is evident only when the threatening eyebrow shape can be interpreted as an internal feature of a face (Tipples et al., 2002; Fox et al., 2000; Lundqvist and Öhman, 2005; Schubö et al., 2006; but see Watson et al., in press).

To gain more detailed insight into the temporal dynamics of attention capture by threat in visual search and into the relevance of low-level features, the present study used high-density event-related potentials (ERPs), measuring the N2pc component as an electrophysiological marker of spatially selective attention (Eimer and Kiss, 2008, 2010; Luck and Hillyard, 1994; Woodman and Luck, 1999). The N2pc is an enhanced negativity elicited between 180 and 300 ms and typically observed over posterior electrodes contralateral to the side of a visual search target that is presented among non-target items. This spatially selective attention effect can result from the bottom-up salience of the target or be modulated by top-down task sets (Luck and Hillyard, 1994; Eimer and Kiss, 2010). Unlike other attention-related ERP components, such as the P1 and N1, which are linked to early location-specific sensory gating mechanisms prior to target selection (e.g., Mangun & Hillyard, 1987), and the EDAN (early directing attention negativity; e.g., Harter et al., 1989), which reflects preparatory attention shifts to target locations, the N2pc is assumed to reflect the spatially selective attentional selection of targets among distractors in visual displays (e.g., Kiss, Van Velzen, & Eimer, 2008). It is still under debate, however, whether the N2pc reflects distractor suppression (e.g., Luck & Hillyard, 1994) or target feature enhancement (e.g., Mazza, Turatto, & Caramazza, 2009a, 2009b). Nevertheless, because of its lateralized nature and its functional interpretation as an electrophysiological measure of the selective attentional processing of targets, the N2pc component is well suited to investigate the allocation of attention to friendly and threatening targets in visual space, such as in the “classical” visual search paradigm with friendly and threatening face targets among neutral face distractors (e.g., Öhman et al., 2001). Recent ERP studies demonstrated that highly salient threat cues can modulate the N2pc even when attention is focused on another visually demanding monitoring task, providing neural evidence for fast attention allocation toward threatening faces (Eimer & Kiss, 2007; Holmes et al., 2009).

The aim of the present study was to investigate both behavioral and electrophysiological measures of attention capture to determine the temporal course of attention allocation to threatening and friendly facial expressions during visual search. In line with the anger superiority effect found in earlier studies (Öhman et al., 2009), we expected a stronger attentional bias for threatening than for friendly target faces among neutral distractor faces. Consistent with evolutionary models of threat perception (Öhman et al., 2000), threatening faces should capture attention more efficiently than friendly faces, resulting in shorter visual search latencies and an enhanced N2pc component.

To further investigate the role of simple configurational threat (Larson et al., 2007, 2009) in visual search, we conducted a second study presenting a V-shaped eyebrow and eye configuration and a V-shaped eyebrow configuration alone, which have been discussed as critical features of threatening faces that humans have evolved to detect efficiently (Fox and Damjanovic, 2006; Lundqvist et al., 1999, 2004; Tipples et al., 2002). We hypothesized that even simple V-shaped eyebrow configurations are recognized more rapidly than shapes with the V-angle pointing upward, leading to shorter search times and more rapid attentional capture, as revealed by the N2pc.

Material and methods

Experiment 1

Participants

Twenty-one healthy students from the University of Greifswald (19 female, 2 male; mean age: 21.4 years, range: 18–31 years; all right-handed) participated in Experiment 1. All had normal or corrected-to-normal vision. All participants provided informed written consent for the protocol approved by the Review Board of the University of Greifswald and received financial compensation for participation.

Stimulus Materials and Procedure

The facial stimuli were schematic pictures portraying threatening, friendly, and neutral facial expressions (Lundqvist et al., 1999, 2004; Öhman et al., 2001). The faces were drawn in black against a white background; the outline of the face and the nose were drawn with 1-pixel lines, and the eyebrows, eyes, and mouth were drawn with 2-pixel lines. The stimulus displays were arrays of six schematic faces arranged in a circle around the fixation cross (0.27° width × 0.27° height). The distance from the fixation cross at the center of the display to the center (nose) of each of the six faces was 3.44°. The individual faces were 1.37° width × 1.68° height. Half of the stimulus arrays (n=240) were composed of faces that all showed neutral expressions. In the other half of the arrays, one of the faces had either a friendly (n=120) or threatening (n=120) emotional expression. Each type of target occurred 20 times at each of the six positions in the matrix. Target positions were randomized over trials. Examples of the stimulus arrays are shown in Figure 1. The matrices were presented on a 20” computer monitor (1024 × 768, 60 Hz) located 1.5 m in front of the viewer.
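The visual angles above can be translated into approximate on-screen sizes at the stated viewing distance of 1.5 m. The following is a minimal sketch of that arithmetic, not part of the original procedure; the function names are illustrative, and it assumes that sizes are centered on the line of sight while eccentricity is measured from fixation.

```python
import math

VIEWING_DISTANCE_CM = 150.0  # 1.5 m, as stated in the text


def centered_extent_cm(angle_deg: float, distance_cm: float) -> float:
    """Physical size of an object centered on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def eccentricity_cm(angle_deg: float, distance_cm: float) -> float:
    """Physical offset from fixation for a given eccentricity angle."""
    return distance_cm * math.tan(math.radians(angle_deg))


# Angles reported in the Stimulus Materials section (degrees of visual angle).
sizes_cm = {
    "fixation cross": centered_extent_cm(0.27, VIEWING_DISTANCE_CM),
    "face width": centered_extent_cm(1.37, VIEWING_DISTANCE_CM),
    "face height": centered_extent_cm(1.68, VIEWING_DISTANCE_CM),
    "fixation to nose": eccentricity_cm(3.44, VIEWING_DISTANCE_CM),
}

for name, size in sizes_cm.items():
    print(f"{name}: {size:.2f} cm")
```

Under these assumptions each face spans roughly 3.6 cm × 4.4 cm on the screen, about 9 cm from fixation.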

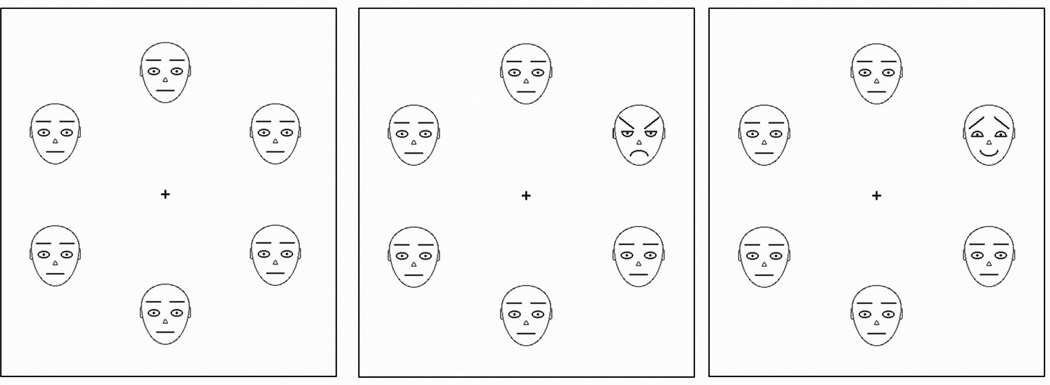

Figure 1.

Schematic facial arrays used as stimuli in the visual search task in Experiment 1. Search arrays contained either only neutral faces (left), a threatening face target among neutral distractor faces (middle) or a friendly face target among neutral faces (right). Each type of target face appeared 20 times at each of the six positions.

Participants were seated in a reclining chair in a sound-attenuated, dimly lit room. After the electrodes had been attached, participants were instructed to attentively watch the displays presented on the monitor and to detect a discrepant face in the presented arrays as quickly and accurately as possible. Using a keyboard, participants pressed a “yes” key when a discrepant target was present in the stimulus array and a “no” key when it was absent. Before the task began, all participants practiced the visual search task in a series of 6 trials with target-present and target-absent displays.

A trial was initiated by the appearance of a 500 ms fixation cross preceding each onset of the stimulus arrays in order to encourage the participants to keep their gaze focused on the central fixation location throughout the experiment. There was a variable inter-trial interval (ITI) of 1500, 2000, or 2500 ms. The arrays were presented in random order for each participant with the constraint that no array with a target (either angry or happy) was presented on more than four consecutive trials. A trial was terminated by the participants’ response. Before starting the task, subjects were instructed to avoid eye blinks and excessive body movements during ERP measurement.
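The trial structure described above (240 neutral arrays, 20 targets per expression and position, a variable ITI, and at most four consecutive target trials) can be sketched as a constrained randomization. The text does not say how the authors generated their sequences, so the sequential-draw approach below is an assumption, as are all function and field names.

```python
import random

ITI_MS = (1500, 2000, 2500)               # inter-trial intervals from the text
EXPRESSIONS = ("threatening", "friendly")


def build_trial_sequence(n_neutral=240, n_per_cell=20, n_positions=6,
                         max_target_run=4, seed=None):
    """Randomly order trials so that no more than `max_target_run`
    target-present arrays occur in a row (hypothetical helper)."""
    rng = random.Random(seed)
    targets = [(expr, pos) for expr in EXPRESSIONS
               for pos in range(n_positions) for _ in range(n_per_cell)]
    n_total = n_neutral + len(targets)
    while True:  # restart in the rare case the draw reaches a dead end
        pool = targets[:]
        rng.shuffle(pool)
        neutral_left, run, seq = n_neutral, 0, []
        while len(seq) < n_total:
            can_target = bool(pool) and run < max_target_run
            can_neutral = neutral_left > 0
            if not (can_target or can_neutral):
                break  # only targets remain but the run limit is reached
            if can_target and can_neutral:
                # draw in proportion to the number of trials left in each pool
                pick_target = rng.random() < len(pool) / (len(pool) + neutral_left)
            else:
                pick_target = can_target
            if pick_target:
                expr, pos = pool.pop()
                run += 1
            else:
                expr, pos = "neutral", None
                neutral_left -= 1
                run = 0
            seq.append({"expression": expr, "position": pos,
                        "iti_ms": rng.choice(ITI_MS)})
        if len(seq) == n_total:
            return seq


trials = build_trial_sequence(seed=0)
```

A sequential draw of this kind does not sample uniformly from all valid orders, but it always respects the run-length constraint and the cell counts.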

Apparatus and data analysis

EEG signals were recorded continuously from 256 electrodes using an Electrical Geodesics system and digitized at a rate of 250 Hz, using the vertex sensor (Cz) as recording reference. Scalp impedance for each sensor was kept below 30 kΩ, as recommended by the manufacturer's guidelines. All channels were bandpass filtered online from 0.1 to 100 Hz. Off-line analyses were performed using EMEGS (Junghöfer and Peyk, 2004) and included low-pass filtering at 40 Hz, artifact detection, sensor interpolation, baseline correction, and conversion to an average reference (Junghöfer et al., 2000). Stimulus-synchronized epochs were extracted from 100 ms before to 800 ms after picture onset and baseline corrected (100 ms prior to stimulus onset).
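The epoching, baseline correction, and average-reference steps can be illustrated with a short NumPy sketch. This is not the EMEGS implementation used by the authors; the function names, array layout, and synthetic data are assumptions, and filtering, artifact detection, and sensor interpolation are omitted.

```python
import numpy as np

FS_HZ = 250.0  # sampling rate from the text


def extract_epochs(eeg, onsets, fs=FS_HZ, tmin=-0.1, tmax=0.8):
    """Cut epochs from continuous data and subtract the pre-stimulus mean.

    eeg    : array (n_channels, n_samples), continuous recording
    onsets : stimulus onset sample indices
    Returns an array (n_epochs, n_channels, n_times).
    """
    pre = int(round(-tmin * fs))   # 25 samples = 100 ms at 250 Hz
    post = int(round(tmax * fs))   # 200 samples = 800 ms at 250 Hz
    epochs = np.stack([eeg[:, o - pre:o + post] for o in onsets])
    baseline = epochs[:, :, :pre].mean(axis=2, keepdims=True)
    return epochs - baseline


def to_average_reference(epochs):
    """Re-reference each time point to the mean over all channels.

    Assumes the original reference channel (here Cz) is included in the
    channel axis as a row of zeros before this step.
    """
    return epochs - epochs.mean(axis=1, keepdims=True)


# Synthetic stand-in data: 8 channels, 20 s of noise, three stimulus onsets.
rng = np.random.default_rng(0)
eeg = rng.normal(size=(8, 5000))
epochs = to_average_reference(extract_epochs(eeg, onsets=[500, 1500, 2500]))
```

Because both steps are linear, the pre-stimulus mean of every channel remains zero after re-referencing.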

The lateralized N2pc component was calculated by averaging the electrocortical activity ipsilateral and contralateral to the target. The ipsilateral waveform was computed as the average of the left-sided electrode cluster when the target was presented on the left and the right-sided electrode cluster when the target was presented on the right. The contralateral waveform was defined as the average of the left-sided electrode cluster for right-sided targets and the right-sided electrode cluster for left-sided targets. Averages were based on correctly answered trials only. Overall, approximately 30 % of the trials were rejected because of artifacts and outliers in reaction times. These rejected trials were equally distributed across all target categories. Finally, separate averages were computed for each condition, including facial expression (threatening vs. friendly), emotional target location (left vs. right relative to fixation) and electrode site (left vs. right cluster). For target location, the upper left and lower left faces were merged into a single left location condition (n=40 for each facial expression), whereas the upper right and lower right faces were averaged for the right location condition (n=40 for each facial expression). Face targets presented at the top or bottom positions did not enter the analyses. The sensor clusters and time window representative of the N2pc component were determined on the basis of visual inspection of individual subject waveforms and in line with prior research (Eimer and Kiss, 2007). Analyses focused on lateral occipital electrodes in the time range between 220 and 270 ms, where the N2pc component was maximal. The N2pc was scored as the mean ERP amplitude across the sensors showing maximal amplitudes, namely the EGI sensors 95, 96, 105, 106, 107, 114, 115 (left), and 159, 160, 168, 169, 170, 177, 178 (right) (Figure 2). Data were entered into a repeated measures ANOVA including the factors facial expression (threatening vs. friendly), emotional target location (left vs. right relative to fixation) and electrode site (left vs. right cluster). Because the N2pc is a lateralized component, the occurrence of a reliable N2pc is indicated by a significant interaction between target location and electrode site. Correspondingly, effects of emotional target type (threatening vs. friendly) on the N2pc are reflected by a three-way interaction of facial expression with target location and electrode site.
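The contralateral and ipsilateral averaging, and its link to the ANOVA interactions, can be sketched as follows. Because every factor has two levels, each effect has one degree of freedom, so its F(1, n−1) equals the squared one-sample t statistic of the corresponding contrast score. The array layout, function names, and synthetic amplitudes are illustrative assumptions, not the authors' data or code.

```python
import numpy as np


def n2pc_from_cells(cells):
    """Contralateral-minus-ipsilateral amplitude per subject and expression.

    cells : array (n_subjects, 2 expressions, 2 target sides, 2 clusters),
            mean amplitude in the 220-270 ms window; sides and clusters
            are both ordered (left, right).
    """
    cells = np.asarray(cells, dtype=float)
    # contralateral: left target at right cluster, right target at left cluster
    contra = (cells[:, :, 0, 1] + cells[:, :, 1, 0]) / 2.0
    # ipsilateral: left target at left cluster, right target at right cluster
    ipsi = (cells[:, :, 0, 0] + cells[:, :, 1, 1]) / 2.0
    return contra - ipsi


def f_from_contrast(scores):
    """F(1, n-1) of a one-df within-subject effect, computed as t squared."""
    scores = np.asarray(scores, dtype=float)
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(scores.size))
    return float(t ** 2)


# Synthetic amplitudes for 21 hypothetical subjects (not the reported data).
rng = np.random.default_rng(0)
cells = rng.normal(0.0, 0.2, size=(21, 2, 2, 2))
cells[:, 0, 0, 1] -= 1.0   # threatening, contralateral cells
cells[:, 0, 1, 0] -= 1.0
cells[:, 1, 0, 1] -= 0.5   # friendly, contralateral cells
cells[:, 1, 1, 0] -= 0.5

n2pc = n2pc_from_cells(cells)                         # (subjects, expressions)
f_n2pc = f_from_contrast(n2pc.mean(axis=1))           # location x electrode site
f_emotion = f_from_contrast(n2pc[:, 0] - n2pc[:, 1])  # three-way interaction
```

Read this way, the location × electrode site interaction tests whether the N2pc differs from zero, and the three-way interaction tests whether it differs between threatening and friendly targets.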

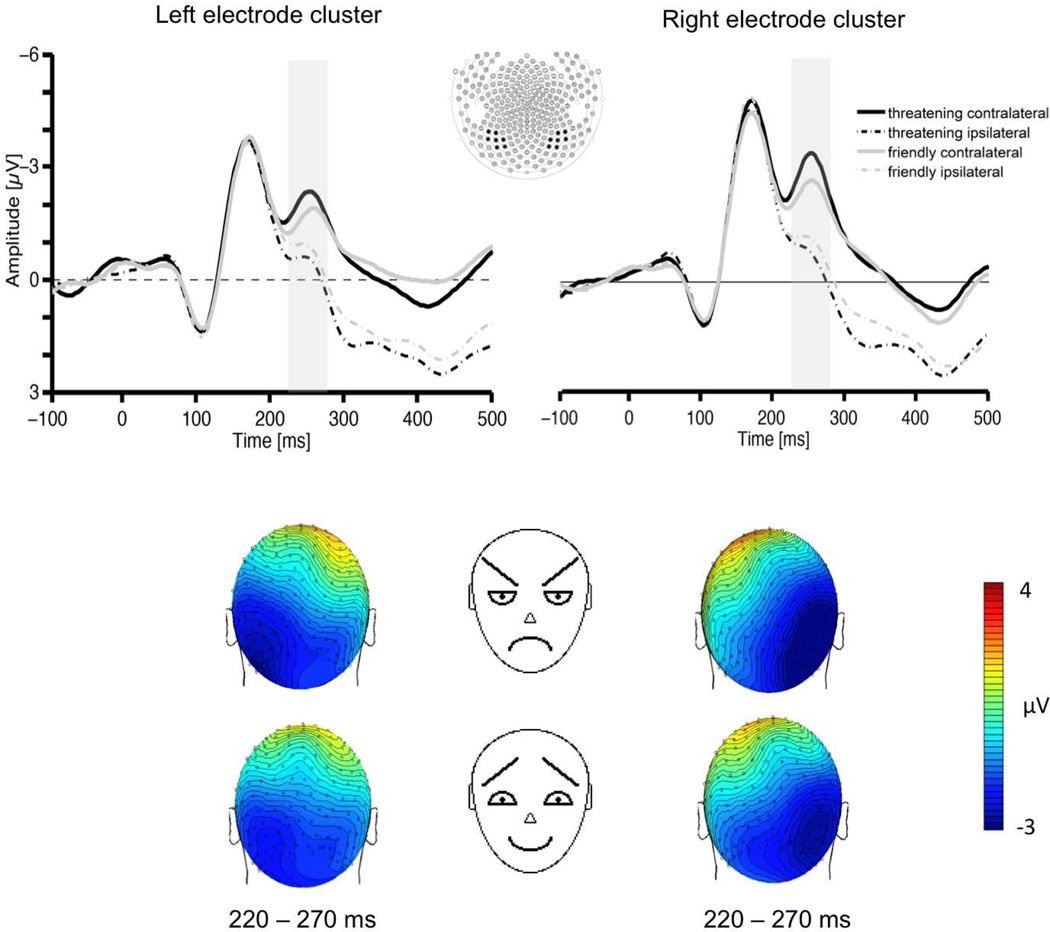

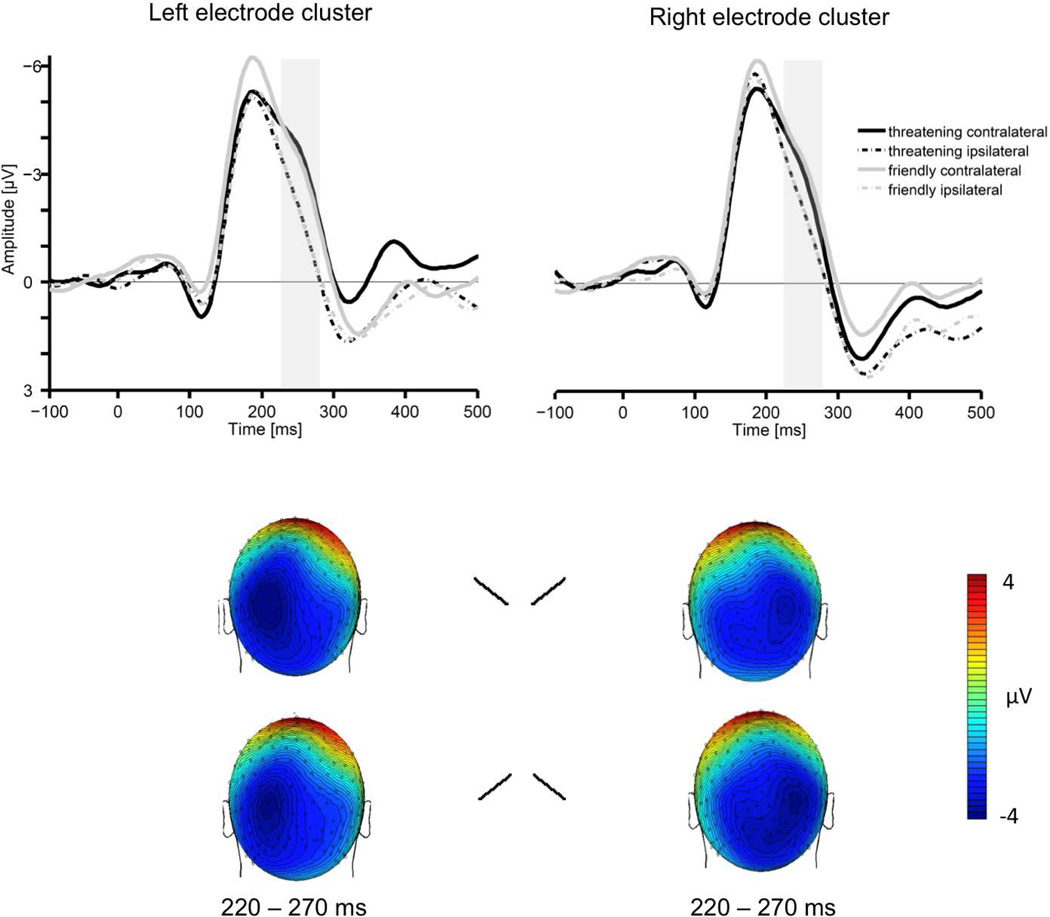

Figure 2.

The upper section shows grand averaged ERPs elicited in the 500 ms interval after target onset in response to threatening (black lines) and friendly (grey lines) target faces contralateral (solid lines) and ipsilateral (dashed lines) to the visual hemifield where the face was presented. The shaded area represents the time window (220–270 ms) used in the analyses. ERPs are averaged across electrodes within posterior clusters used in the analyses (middle section). The lower section shows the topographical voltage maps (back views) for threatening and friendly face targets presented on the right side (left maps) and left side (right maps), showing the distribution of N2pc negativity in the time interval 220–270 ms.

For behavioral data, reaction times (RT) and accuracy rates (AR) were analyzed. Accuracy was calculated as the percentage of targets correctly detected for each facial expression and each side of the array. Mean reaction times were calculated from correct trials only, for each condition and each participant. Behavioral data were submitted to repeated measures ANOVAs with the factors facial expression (threatening vs. friendly) and emotional target location (left vs. right). For effects involving repeated measures, the Greenhouse-Geisser procedure was used to correct for violations of sphericity.

Finally, a correlation analysis was performed to determine possible relations between the behavioral and N2pc data. The reaction times of the correct responses to a target (absolute values) were correlated with the mean amplitudes of the N2pc component. Significant positive correlations between the reaction times and the N2pc amplitude would indicate that subjects with shorter target-related reaction times show larger N2pc amplitudes.
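The correlation analysis can be sketched as a Pearson correlation across participants, together with the t statistic used to test it. Since the N2pc is a negativity, a positive r means that shorter reaction times go with more negative (larger) N2pc amplitudes. The values below are made up for illustration, and the helper names are assumptions.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson product-moment correlation between two 1-D samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


def t_from_r(r, n):
    """t statistic with n - 2 degrees of freedom for a correlation r."""
    return float(r * np.sqrt((n - 2) / (1.0 - r ** 2)))


# Hypothetical per-participant values (not the reported data).
rt_ms = [560, 600, 640, 680, 720]
n2pc_uv = [-2.2, -1.9, -1.2, -1.0, -0.4]

r = pearson_r(rt_ms, n2pc_uv)  # positive: faster responders, larger N2pc
```

As a plausibility check, and assuming a correlation computed over 21 participants, an r of .44 corresponds to t(19) ≈ 2.14.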

RESULTS

Experiment 1

Behavioral data

Overall, participants responded correctly on 99 % of the trials (hits and correct rejections). Reaction times more than 3 SDs above each participant’s mean (see Holmes et al., 2008) were excluded in order to reduce the influence of outliers (1.5 % of data). Participants detected threatening and friendly target faces with similar accuracy, F(1,20) < 1, for the main effect of target expression. A main effect of location revealed that the accuracy rate was lower for targets presented on the left location side relative to targets presented on the right location side, F(1,20) = 6.56, p < .05.
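The reaction-time trimming rule (values more than 3 SDs above each participant's mean) can be sketched as below. Whether the authors used the sample or population SD is not stated, so the sample SD used here is an assumption, as are the function name and example data.

```python
import numpy as np


def exclude_slow_outliers(rts, n_sd=3.0):
    """Drop RTs more than `n_sd` sample SDs above one participant's mean.

    Returns the retained RTs and the boolean mask of retained trials.
    """
    rts = np.asarray(rts, dtype=float)
    cutoff = rts.mean() + n_sd * rts.std(ddof=1)
    keep = rts <= cutoff
    return rts[keep], keep


# Hypothetical RTs (ms) for one participant: 50 typical trials and one lapse.
example_rts = [580.0, 620.0] * 25 + [3000.0]
kept_rts, kept_mask = exclude_slow_outliers(example_rts)
```

The rule is applied per participant, so the cutoff adapts to each person's overall speed and variability.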

The mean RT data are shown in Table 1. As expected, threatening targets were detected faster than friendly targets, as revealed by a main effect of facial expression for mean reaction times, F(1,20) = 48.47, p < .001. An interaction between location and facial expression indicated that reaction times were shorter for threatening faces when presented on the right compared to the left side, F(1,20) = 7.81, p < .05.

Table 1.

Mean reaction times to targets (in milliseconds) and N2pc amplitude (in microvolts) across the two experiments.

| | Experiment 1 | | Experiment 2 | | | |
|---|---|---|---|---|---|---|
| | Complete face | | Eyes and eyebrows | | Eyebrows | |
| | Threatening | Friendly | Threatening | Friendly | Threatening | Friendly |
| RT data (ms) | 614 (115) | 667 (118) | 590 (97) | 618 (119) | 565 (93) | 562 (84) |
| ERP data: N2pc (µV) | −1.88 (1.38) | −1.04 (0.96) | −1.58 (1.45) | −1.31 (0.94) | −1.16 (1.27) | −1.18 (1.77) |

Numbers in parentheses indicate SD.

ERP data

Figure 2 shows the grand average ERPs obtained over the left and right occipital sensor clusters contralateral and ipsilateral to the emotional target location for targets containing a threatening face and for targets containing a friendly face. As can be seen, an N2pc component was elicited starting about 220 ms poststimulus over lateral occipital scalp sites. The overall analysis revealed an interaction between electrode site and target position, F(1,20) = 36.43, p < .001, indicating the presence of the N2pc. Importantly, there was an interaction between the factors emotional target, target position and electrode site, F(1,20) = 17.84, p < .001, showing that the visual search for threatening schematic faces induced a larger N2pc amplitude than the search for friendly schematic faces (see upper panel of Figure 2). This effect is also illustrated in the bottom panel of Figure 2, showing the scalp distribution of the N2pc.

Correlations between behavioral measures and N2pc amplitude

The magnitude of the reaction time was correlated with the amplitude of the N2pc for both emotional facial expressions (r= .44, p< .05), indicating that shorter reaction times are associated with larger N2pc amplitudes.

Discussion

Threatening faces were detected faster than friendly faces, replicating previous studies (e.g., Lundqvist and Öhman, 2005; Öhman et al., 2001; Öhman et al., 2009). Neural responses showed a clear correspondence to these behavioral data. Extending earlier findings of larger N2pc amplitudes to threatening compared to neutral facial expressions (Eimer & Kiss, 2007), the current ERP data showed that the N2pc was also larger to threatening faces than to friendly faces, which are likewise emotionally salient. This is consistent with the idea that threatening stimuli specifically capture more selective attention in an automatic fashion, suggesting that the evolutionarily relevant defense system can be easily primed by these stimuli (Öhman and Mineka, 2001).

Experiment 2

The second experiment was designed to test whether simple features of a threatening face would be sufficient to evoke increased spatial attention as indexed by the N2pc. For this second visual search study, we reduced the schematic faces from Experiment 1 either to a configuration of eyebrows and eyes or to V-shaped or Λ-shaped eyebrows alone. If the N2pc is enhanced for targets containing threat-related features relative to friendly-related features, the “anger superiority effect” would be a simple feature superiority effect. In contrast, if different facial features do not affect the N2pc, this would suggest that the prioritized capture of attention by threat is restricted to the perceptual “context” of a face. Tipples et al. (2002) reported data concordant with the latter possibility. In the same vein, Lundqvist and coworkers reported that single features in isolation did affect emotional ratings (Lundqvist et al., 2004), but that a facial context was required for single threatening features to be detected more rapidly in a visual search setting (Lundqvist and Öhman, 2005).

Material and Methods

Participants

A separate sample of twenty-one healthy female students from the University of Greifswald (mean age: 20.2 years, range: 18–29 years; all right-handed) participated in Experiment 2. All had normal or corrected-to-normal vision.

Stimulus Materials, Procedure, ERP data acquisition and Analysis

The methods were identical to Experiment 1, except that in Experiment 2 the stimulus arrays consisted of threat-related, friendly-related, and neutral features of the schematic faces presented in Experiment 1. As simple “low-level feature cues” with facial context, we presented the eyebrows and eyes, and compared them with the eyebrows alone (see Figure 3), in two consecutive experimental blocks separated by a 5 min pause. Block order was counterbalanced across participants. Participants were told to detect as accurately and quickly as possible an inconsistent singleton among the crowd of five identical distractors in the stimulus array.

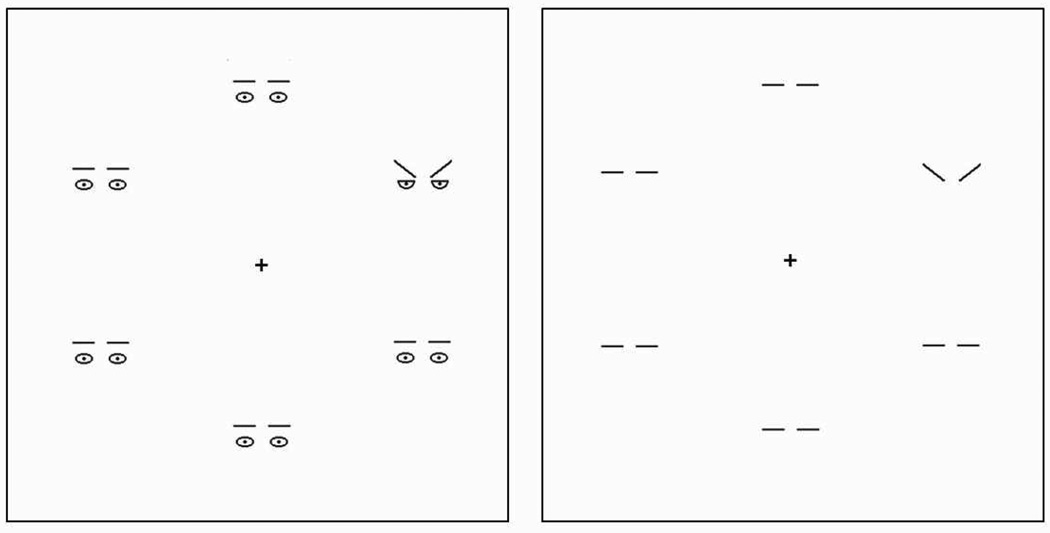

Figure 3.

Illustration of face feature stimuli used in Experiment 2. Search arrays consisted of threatening-, friendly- and neutral related features of the schematic faces. Search arrays contained feature configurations (eyebrows and eyes) of irrelevant neutral faces, of threatening face targets (left search array) or friendly face targets. The right search array shows the same trial containing V-shaped, Λ-shaped, and horizontal eyebrows. Procedure was identical to Experiment 1 with the participants’ task to find an inconsistent pattern in the matrix.

EEG recording and analysis procedures were identical to Experiment 1. The overall ANOVAs, with the factors feature (threat-related vs. friendly-related), position (left vs. right of fixation) and electrode site (left vs. right cluster), were calculated separately for each experimental block. Greenhouse-Geisser corrections for nonsphericity were applied where appropriate. Correlational analyses were carried out using the same variables as described for Experiment 1.

Behavioral data

The overall accuracy rates (hit rate and correct rejection rate) did not differ significantly between the two feature conditions, likely because of a ceiling effect (eyebrows and eyes vs. eyebrows alone, both 98.8 %), F(1,20) < 1. Again, trials with outliers were discarded (2 % of the data in the “eyebrow and eye”-feature condition; 1.7 % of the data in the “eyebrow alone”-feature condition).

For the “eyebrow and eye”-feature condition, accuracy was higher for threatening than for friendly configurations, F(1,20) = 6.16, p < .05. Again, targets presented on the right side were detected more accurately than targets presented on the left side, F(1,20) = 6.39, p < .05. Target type did not interact with location side, F(1,20) = 2.33, p = .143. For RTs, participants were faster in identifying threat-related targets than friendly-related targets, F(1,20) = 7.85, p < .05. A main effect of location indicated that responses to right-sided targets were faster than responses to left-sided targets, F(1,20) = 39.23, p < .001.

For the “eyebrow alone”-feature condition, accuracy did not differ between threatening and friendly features, F(1,20) < 1. Accuracy was higher for feature targets on the right side relative to the left side, F(1,20) = 14.40, p < .001. For RTs, no differences were observed between threat-related V-shaped and friendly-related Λ-shaped targets, F(1,20) < 1. A main effect of target location indicated faster reaction times for targets on the right side than for targets on the left side, F(1,20) = 49.12, p < .001. The mean error rate (miss rate) was below 3 %. A main effect of target location showed that fewer errors were made for the right target location relative to the left target location, F(1,20) = 14.40, p < .001, indicating the absence of a speed-accuracy trade-off. Error rates did not differ between threatening and friendly features, F(1,20) < 1.

ERP data

ERPs elicited over occipital electrode clusters contralateral and ipsilateral to the target are presented in Figure 4 for the “eyes and eyebrows”-feature condition and in Figure 5 for the “eyebrows alone”-feature condition.

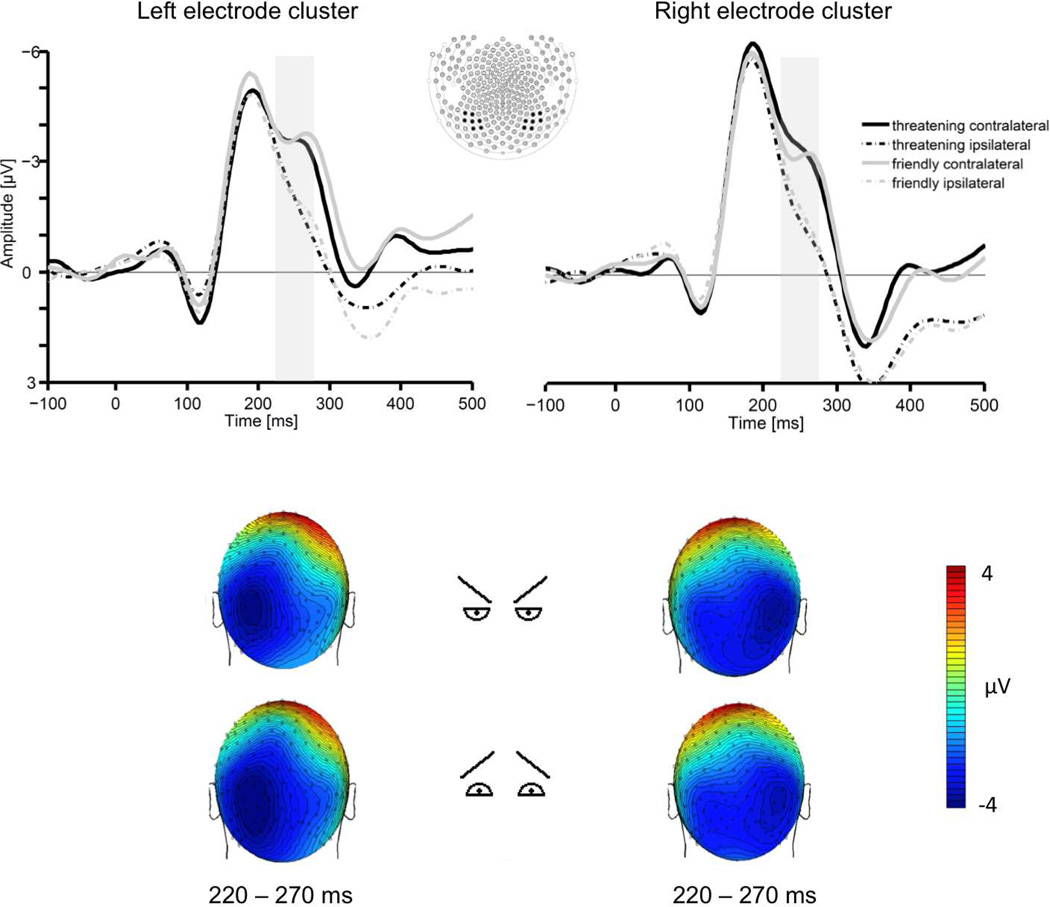

Figure 4.

The upper section shows grand averaged ERPs elicited in the 500 ms interval after target onset in response to threatening-related (black lines) and friendly-related (grey lines) configuration (eyes and eyebrows) stimuli contralateral (solid lines) and ipsilateral (dashed lines) to the visual hemifield where the configuration stimulus was presented. The shaded area represents the time window (220–270 ms) used in the analyses. ERPs are averaged across electrodes within posterior clusters used in the analyses. The lower section shows the topographical voltage maps (back views) for threatening and friendly – related configuration targets presented on the right side (left maps) and left side (right maps), showing the distribution of N2pc negativity in the time interval 220–270 ms.

Figure 5.

The upper section shows grand averaged ERPs elicited in the 500 ms interval after target onset in response to threatening (black lines) and friendly (grey lines) eyebrow stimuli contralateral (solid lines) and ipsilateral (dashed lines) to the visual hemifield where the eyebrow stimulus was presented. The shaded area represents the time window (220–270 ms) used in the analyses. ERPs are averaged across electrodes within posterior clusters used in the analyses. The lower section shows the topographical voltage maps (back views) for threatening and friendly eyebrow targets presented on the right side (left maps) and left side (right maps), showing the distribution of N2pc negativity in the time interval 220–270 ms.

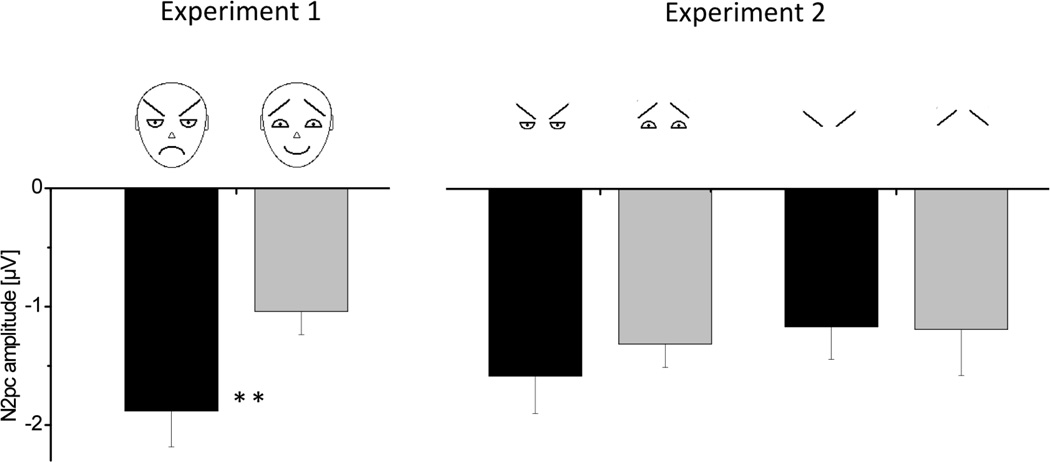

A reliable N2pc was observed over occipital scalp electrodes in the time range of 220–270 ms (see Figure 4), reflected by a significant interaction of target position and electrode site, F(1,20) = 55.60, p < .001. Although the N2pc amplitude was numerically larger for threat-related features containing eyes and eyebrows of the schematic faces (M= −1.58; SD= 1.45) than for friendly eyes and eyebrows (M= −1.31, SD= 0.94), this difference was not statistically significant, F(1,20) < 1 (see Figure 6 for a comparison of the N2pc across all experiments). Moreover, no other main or interaction effects of the factors feature, target position and electrode site were significant, all F(1,20)< 1.

Figure 6.

Magnitude of the N2pc amplitude (contralateral minus ipsilateral) across all experiments and experimental conditions. Asterisks indicate significant differences (p< .001).

For the “eyebrow alone”-feature condition, targets also elicited a robust N2pc over lateral occipital electrodes (see Figure 5), statistically confirmed by a significant interaction between target location and electrode site, F(1,20)= 31.47, p< .001. In this condition, mean N2pc amplitudes for V-shaped configurations (M= −1.16; SD= 1.27) were almost identical to those elicited by Λ-shaped target configurations (M= −1.18; SD= 1.77), with no statistical differences (see again Figure 6 for a comparison across all experiments). Again, no other significant main or interaction effects were observed for the factors feature, position and electrode site, all F(1,20)< 1.

Correlations between behavioral measures and N2pc amplitude

No significant correlations were observed between the reaction times and the N2pc amplitude for the “eye and eyebrow”- and “eyebrow alone” condition.

Discussion

Experiment 2 tested whether simple low-level configurations of threatening faces, such as eyes and eyebrows, are sufficient to capture attention in visual search. When eyes and eyebrows were present, threat-related features were detected faster than friendly-related features. When the eyes were absent, no differences in reaction times were observed between V-shaped and Λ-shaped eyebrows. Interestingly, in contrast to the behavioral data, no emotion effects were detected in the brain potentials. Configurations containing eyes and eyebrows of the schematic threatening faces evoked numerically greater N2pc amplitudes than friendly-related configurations, but these differences were not statistically significant. When only eyebrows were used as configurations, no differences between threatening and friendly configurations were observed in either the behavioral or the ERP data.

GENERAL DISCUSSION

In the present study, we used high-density ERPs to delineate the brain dynamics associated with the search for threatening and friendly face targets in a crowd of neutral background expressions. We found that threatening facial expressions were detected faster than friendly expressions, as in previous studies (e.g., Öhman et al., 2001; for review see Öhman et al., 2009). Moreover, the detection of face targets was accompanied by N2pc components in response to both threatening and friendly faces. In line with the anger superiority effect shown in the behavioral data, the N2pc amplitudes to threatening face targets were enhanced relative to friendly face targets, indicating enhanced automatic attention capture by this highly salient threatening material.1

The current results extend previous visual search findings of facilitated detection of threatening stimuli by delineating the neural responses during the detection of schematic threat. The present results are consistent with recent dot probe studies showing an emotion effect in the N2pc component toward natural threatening faces relative to neutral faces in healthy (Holmes et al., 2009) and highly anxious individuals (Fox et al., 2008). The current visual search study also extends recent work using threatening vs. neutral natural faces (Eimer and Kiss, 2007) and threatening vs. friendly faces (Feldmann-Wüstefeld et al., 2011) by demonstrating that attention capture is enhanced for threatening relative to other emotional but non-threatening friendly face targets2. These findings are in line with the proposed specific functional and evolutionary role of the fear system, which requires fast and accurate encoding of the threatening environment to promote defensive responses (Öhman et al., 2000; Öhman et al., 2001; Schupp et al., 2004).

The neural networks underlying facilitated search for threatening targets in the environment are less clear. Brain imaging and EEG studies (Lang et al., 1998; Vuilleumier et al., 2001; Sabatinelli et al., 2005, 2007; Schupp et al., 2003; Pourtois et al., 2004; Keil et al., 2009) have shown that emotional visual stimuli produce greater activations in cortical visual areas. It is suggested that re-entrant feedback loops from limbic regions (amygdala) to visual cortex lead to amplified perceptual processing (Emery and Amaral, 2000). Similar attention-related modulations of cortical sensory areas have been shown: activity in the extrastriate cortex and the amygdala was highly correlated during the processing of angry relative to neutral faces (Morris et al., 1998; Pessoa et al., 2002; Sabatinelli et al., 2005), and this modulation was abolished in patients with amygdala lesions (Vuilleumier et al., 2004). Furthermore, emotional inputs from limbic regions (such as the amygdala) can also bias critical spatial attention networks that involve fronto-parietal brain systems (Armony and Dolan, 2002; Mohanty et al., 2009). Since the N2pc reflects activity in circuits that involve parietal and temporo-occipital pathways (Hopf et al., 2000), the larger N2pc for threatening faces may reflect the integration of spatial and motivational threat information, following the modulation of spatial attention networks and visual areas by the amygdala, to facilitate the rapid detection of aversive stimuli (Mohanty et al., 2009).

To examine whether single isolated or configurational features are sufficient to activate the defense networks and produce the threat superiority effect, we reduced the entire schematic face to the eye region, which is proposed to be the most salient key feature for conveying potential social threat (Aronoff et al., 1988; Fox and Damjanovic, 2006; Lundqvist et al., 1999, 2004; Tipples et al., 2002; Whalen et al., 2004). We presented either the eyes and eyebrows together as a feature configuration or the eyebrows only.

The N2pc did not differ between features of threatening and friendly facial expressions, suggesting no enhanced attention capture for threat-related features. Interestingly, in contrast to the ERP findings, reaction times for threat-related targets were significantly shorter than for friendly-related targets when the targets contained eyes and eyebrows. The N2pc amplitude was numerically larger for threat-related than for friendly-related targets, but this difference was not statistically significant. A possible explanation for this discrepancy could be that the shorter reaction times in the “eyes and eyebrows” condition are facilitated by motor priming (e.g., Dehaene et al., 1998). Thus, subliminal motor activation might already be engaged before selective attention effects can be detected over the visual cortex. A possible neural candidate might be the amygdala and its efferent projections, which are critically involved in affective priming processes (for review, Hamm et al., 2003) in which defensive reflexes are automatically primed during confrontation with unpleasant stimuli. As mentioned above, the enlarged N2pc amplitude might reflect a later sensory process as an outcome of the integration of limbic inputs in parietal and temporo-occipital pathways.

The absence of a feature-based anger superiority effect is also in contrast with recent results showing that simple geometric stimuli, similar to the threat-related stimuli of the current study, can guide prioritized spatial attention (Larson et al., 2007, 2009). As an explanation, the authors argued that the stimuli used in the experiment had a more threatening shape than the eyebrow configuration of the schematic faces. However, other findings indicate that it is important that single features, such as the eyebrow frown, are interpreted as a facial component (Fox et al., 2000; Lundqvist and Öhman, 2005; Tipples et al., 2002; Schubö et al., 2006). In all of these studies, a detection advantage was observed for threatening stimuli only when the stimuli contained the gestalt of a face or features that were rated as “facelike”. Thus, the rapid detection of threat-related features of schematic faces may be restricted to the context “face” or the perceptual gestalt of a face, and is not driven solely by low-level perceptual factors. Another possibility could be that not the eyebrow region but the eyes alone are the critical region that conveys potential threat. Recent findings indicate that natural eye partitions alone can lead to a search advantage for threatening facial expressions in visual search (Fox and Damjanovic, 2006) and that single visual features related to the face, such as fearful eye whites, can modulate human amygdala activation (Whalen et al., 2004). Future ERP work is needed to test whether eye partitions alone are sufficient cues to attract attention.

The present studies are not without limitations. Because we did not vary the matrix size, we cannot determine whether the anger superiority effect is a “pop-out” phenomenon or reflects serial search. Moreover, although evidence suggests that the eyebrow frown is evaluated as more threatening, and consequently is detected more quickly than “friendly” features, we did not measure the perceived subjective intensity. In addition, ratings of whether features are “facelike” or “nonfacelike” (Tipples et al., 2002) would help to support the current ERP findings, which demonstrate enhanced attention capture for whole schematic facial expressions. Furthermore, because we studied primarily women in the current experiments, we cannot rule out that the present results are influenced by gender. Future studies are needed to investigate whether the current findings can be replicated in men. Finally, to increase the ecological validity of the current results, future ERP work should investigate the brain dynamics of whole natural faces as well as specific natural facial features in isolation.

Research Highlights.

The detection of schematic facial threat is facilitated in visual search

Enhanced attention allocation to schematic threat is reflected in the N2pc component

The rapid prioritizing of facial threat as reflected in the N2pc is not driven by single features

ACKNOWLEDGMENTS

This research was supported by a grant from the NIMH, P50MH72850-05. We are grateful to Tina Kunze and Janine Wirkner for their assistance in data collection.

Footnotes

As an alternative to the present view of enhanced target selection for threatening relative to friendly faces, recent evidence from Galfano and colleagues (2010) suggests that the N2pc can also be interpreted as reflecting a reorienting of attention to a target. Using a gaze cueing paradigm, the authors showed that the N2pc is larger in incongruent trials (vs. congruent trials), in which participants were misdirected by gaze away from the target location. In the same vein, even previously attended target locations can inhibit the return of attention, which in turn causes a reorienting of attention to a target (McDonald et al., 2009).

Although the main focus of the current paper was on the N2pc component, additional analyses were performed for the P1 (90–120 ms) and N1 (150–190 ms) components. Critically, neither the main effect of emotional target nor the target position × electrode site interaction or the emotional target × target position × electrode site interaction was significant for the complete faces in Experiment 1 or the specific feature configurations in Experiment 2. The results indicate that the P1/N1 complex is not involved in target selection but rather represents sensory gating mechanisms in early visual processing prior to the selection of specific target stimuli.

Contributor Information

Mathias Weymar, Email: mweymar@ufl.edu.

Andreas Löw, Email: andreas.loew@uni-greifswald.de.

Arne Öhman, Email: arne.ohman@ki.se.

Alfons O. Hamm, Email: hamm@uni-greifswald.de.

References

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–309. doi: 10.1038/35077083.

- Armony JL, Dolan RJ. Modulation of spatial attention by fear-conditioned stimuli: an event-related fMRI study. Neuropsychologia. 2002;40:817–826. doi: 10.1016/s0028-3932(01)00178-6.

- Aronoff J, Barclay AM, Stevenson LA. The recognition of threatening facial stimuli. J. Pers. Soc. Psych. 1988;54:647–655. doi: 10.1037//0022-3514.54.4.647.

- Calvo MG, Avero P, Lundqvist D. Facilitated detection of angry faces: Initial orienting and processing efficiency. Cogn. Emot. 2006;20:785–811.

- Carlson JM, Reinke KS, Habib R. A left amygdala mediated network for rapid orienting to masked fearful faces. Neuropsychologia. 2009;47:1386–1389. doi: 10.1016/j.neuropsychologia.2009.01.026.

- Dehaene S, Naccache L, Le Clec HG, Koechlin E, Mueller M, Dehaene-Lambertz G, van de Moortele PF, Le Bihan D. Imaging unconscious semantic priming. Nature. 1998;395:597–600. doi: 10.1038/26967.

- Eimer M, Kiss M. Attentional capture by task-irrelevant fearful faces is revealed by the N2pc component. Biol. Psychol. 2007;74:108–112. doi: 10.1016/j.biopsycho.2006.06.008.

- Eimer M, Kiss M. Involuntary attentional capture is determined by task set: Evidence from event-related brain potentials. J. Cogn. Neurosci. 2008;20:1423–1433. doi: 10.1162/jocn.2008.20099.

- Eimer M, Kiss M. Top-down search strategies determine attentional capture in visual search: Behavioral and electrophysiological evidence. Atten. Percept. Psychophys. 2010;72:951–962. doi: 10.3758/APP.72.4.951.

- Emery NJ, Amaral DG. The role of the amygdala in primate social cognition. In: Lane R, Nadel L, editors. Cognitive neuroscience of emotion. New York: Oxford University Press; 2000. pp. 156–191.

- Feldmann-Wüstefeld T, Schmidt-Daffy M, Schubö A. Neural evidence for the threat detection advantage: Differential attention allocation to angry and happy faces. Psychophysiology. 2011;48:697–707. doi: 10.1111/j.1469-8986.2010.01130.x.

- Fox E, Damjanovic L. The eyes are sufficient to produce a threat superiority effect. Emotion. 2006;6:534–539. doi: 10.1037/1528-3542.6.3.534.

- Fox E, Derakshan N, Shoker L. Trait anxiety modulates the electrophysiological indices of rapid spatial orienting towards angry faces. NeuroReport. 2008;19:259–263. doi: 10.1097/WNR.0b013e3282f53d2a.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K. Facial expressions of emotion: Are angry faces detected more efficiently? Cogn. Emot. 2000;14:61–92. doi: 10.1080/026999300378996.

- Galfano G, Sarlo M, Sassi F, Munafò M, Fuentes LJ, Umiltà CA. Reorienting of spatial attention in gaze cuing is reflected in N2pc. Social Neuroscience. 2010:1–13. doi: 10.1080/17470919.2010.515722. iFirst.

- Gilboa-Schechtman E, Foa EB, Amir N. Attentional biases for facial expressions in social phobia: The face-in-the-crowd paradigm. Cogn. Emot. 1999;13:305–318.

- Hamm AO, Schupp HT, Weike AI. Motivational organization of emotions: Autonomic changes, cortical responses, and reflex modulation. In: Davidson RJ, Scherer K, Goldsmith HH, editors. Handbook of Affective Sciences. Oxford: Oxford University Press; 2003. pp. 188–211.

- Harter MR, Miller SL, Price NJ, LaLonde ME, Keyes AL. Neural processes involved in directing attention. Journal of Cognitive Neuroscience. 1989;1:223–237. doi: 10.1162/jocn.1989.1.3.223.

- Holmes A, Bradley BP, Kragh Nielsen M, Mogg K. Attentional selectivity for emotional faces: Evidence from human electrophysiology. Psychophysiology. 2009;46:62–68. doi: 10.1111/j.1469-8986.2008.00750.x.

- Hopf JM, Luck SJ, Girelli M, Hagner T, Mangun GR, Scheich H, Heinze HJ. Neural sources of focused attention in visual search. Cereb. Cortex. 2000;10:1233–1241. doi: 10.1093/cercor/10.12.1233.

- Horstmann G, Bauland A. Search asymmetries with real faces: Testing the anger-superiority effect. Emotion. 2006;6:193–207. doi: 10.1037/1528-3542.6.2.193.

- Junghöfer M, Peyk P. Analysis of electrical potentials and magnetic fields of the brain. Matlab Select. 2004;2:24–28. EMEGS software is freely available at http://www.emegs.org.

- Junghöfer M, Elbert T, Tucker D, Rockstroh B. Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37:523–532.

- Juth P, Lundqvist D, Karlsson A, Öhman A. Looking for foes and friends: Perceptual and emotional factors when finding a face in the crowd. Emotion. 2005;5:379–395. doi: 10.1037/1528-3542.5.4.379.

- Kiss M, Van Velzen J, Eimer M. The N2pc component and its links to attention shifts and spatially selective visual processing. Psychophysiology. 2008;45:240–249. doi: 10.1111/j.1469-8986.2007.00611.x.

- Keil A, Sabatinelli D, Ding M, Lang PJ, Ihssen N, Heim S. Re-entrant projections modulate visual cortex in affective perception: Evidence from Granger Causality Analysis. Hum. Brain Mapp. 2009;30:532–540. doi: 10.1002/hbm.20521.

- Lang PJ, Bradley MM, Fitzsimmons JR, Cuthbert BN, Scott JD, Moulder B, Nangia V. Emotional arousal and activation of the visual cortex: An fMRI analysis. Psychophysiology. 1998;35:199–210.

- Larson CL, Aronoff J, Stearns JJ. The shape of threat: Simple geometric forms evoke rapid and sustained capture of attention. Emotion. 2007;7:526–534. doi: 10.1037/1528-3542.7.3.526.

- Larson CL, Aronoff J, Sarinopoulos IC, Zhu DC. Recognizing threat: A simple geometric shape activates neural circuitry for threat detection. J. Cogn. Neurosci. 2009;21:1523–1535. doi: 10.1162/jocn.2009.21111.

- LeDoux JE. The Emotional Brain. New York: Simon and Schuster; 1996.

- Luck SJ, Hillyard SA. Spatial filtering during visual search: Evidence from human electrophysiology. J. Exp. Psychol. Hum. Percept. Perform. 1994;20:1000–1014. doi: 10.1037//0096-1523.20.5.1000.

- Lundqvist D, Esteves F, Öhman A. The face of wrath: Critical features for conveying facial threat. Cogn. Emot. 1999;13:691–711. doi: 10.1080/02699930244000453.

- Lundqvist D, Esteves F, Öhman A. The Face of wrath: The role of features and configurations in conveying facial threat. Cogn. Emot. 2004;18:161–182. doi: 10.1080/02699930244000453.

- Lundqvist D, Öhman A. Emotion regulates attention: The relationship between facial configuration, facial emotion, and visual attention. Visual Cogn. 2005;12:51–84.

- Mangun GR, Hillyard SA. The spatial allocation of visual attention as indexed by event-related brain potentials. Hum Factors. 1987;29:195–211. doi: 10.1177/001872088702900207.

- Maratos FA, Mogg K, Bradley BP. Identification of angry faces in the attentional blink. Cogn. Emot. 2008;22:1340–1352. doi: 10.1080/02699930701774218.

- Mazza V, Turatto M, Caramazza A. Attention selection, distractor suppression and N2pc. Cortex. 2009a;45:879–890. doi: 10.1016/j.cortex.2008.10.009.

- Mazza V, Turatto M, Caramazza A. An electrophysiological assessment of distractor suppression in visual search tasks. Psychophysiology. 2009b;46:771–775. doi: 10.1111/j.1469-8986.2009.00814.x.

- McDonald JJ, Hickey C, Green JJ, Whitman JC. Inhibition of return in the covert deployment of attention: Evidence from human electrophysiology. Journal of Cognitive Neuroscience. 2009;21:725–733. doi: 10.1162/jocn.2009.21042.

- Mohanty A, Egner T, Monti JM, Mesulam MM. Search for a Threatening Target Triggers Limbic Guidance of Spatial Attention. J. Neurosci. 2009;29:10563–10572. doi: 10.1523/JNEUROSCI.1170-09.2009.

- Morris JS, Öhman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393:467–470. doi: 10.1038/30976.

- Öhman A, Mineka S. Fear, phobias, and preparedness: Toward an evolved module of fear and fear learning. Psychol. Rev. 2001;108:483–522. doi: 10.1037/0033-295x.108.3.483.

- Öhman A, Flykt A, Lundqvist D. Unconscious emotion: evolutionary perspectives, psychophysiological data, and neuropsychological mechanisms. In: Lane R, Nadel L, editors. Cognitive neuroscience of emotion. New York: Oxford University Press; 2000. pp. 296–327.

- Öhman A, Juth J, Lundqvist D. Finding the face in a crowd: Relationships between redundancy, target emotion, and target gender. Cogn. Emot. 2009;24:1216–1228.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 2001;80:381–396. doi: 10.1037/0022-3514.80.3.381.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proc. Natl. Acad. Sci. U.S.A. 2002;99:11458–11463. doi: 10.1073/pnas.172403899.

- Pinkham AE, Griffin M, Baron R, Sasson NJ, Gur RC. The face in the crowd effect: Anger superiority when using real faces and multiple identities. Emotion. 2010;10:141–146. doi: 10.1037/a0017387.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological Correlates of Rapid Spatial Orienting Towards Fearful Faces. Cereb. Cortex. 2004;14:619–633. doi: 10.1093/cercor/bhh023.

- Sabatinelli D, Bradley MM, Fitzsimmons JR, Lang PJ. Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. NeuroImage. 2005;24:1265–1270. doi: 10.1016/j.neuroimage.2004.12.015.

- Sabatinelli D, Lang PJ, Keil A, Bradley MM. Emotional perception: correlation of functional MRI and event-related potentials. Cereb. Cortex. 2007;17:1085–1091. doi: 10.1093/cercor/bhl017.

- Schubö A, Gendolla GHE, Meinecke C, Abele AE. Detecting emotional faces and features in a visual search paradigm: are faces special? Emotion. 2006;6:246–256. doi: 10.1037/1528-3542.6.2.246.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. Emotional facilitation of sensory processing in the visual cortex. Psychol. Sci. 2003;14:7–13. doi: 10.1111/1467-9280.01411.

- Schupp HT, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO. The facilitated processing of threatening faces: An ERP analysis. Emotion. 2004;4:189–200. doi: 10.1037/1528-3542.4.2.189.

- Tipples J, Atkinson AP, Young AW. The eyebrow frown: A salient social signal. Emotion. 2002;2:288–296. doi: 10.1037/1528-3542.2.3.288.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat. Neurosci. 2004;7:1271–1278. doi: 10.1038/nn1341.

- Watson DG, Blagrove E, Selwood S. Emotional triangles: A test of emotion-based attentional capture by geometric shapes. Cogn Emot. doi: 10.1080/02699931.2010.525861. in press.

- Whalen PJ, Kagan J, Cook RG, Davis C, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Woodman GF, Luck SJ. Electrophysiological measurement of rapid shifts of attention during visual search. Nature. 1999;400:867–869. doi: 10.1038/23698.