Abstract

This paper presents a projection regression model (PRM) to assess the relationship between a multivariate phenotype and a set of covariates, such as a genetic marker, age and gender. In the existing literature, a standard statistical approach to this problem is to fit a multivariate linear model to the multivariate phenotype and then use Hotelling’s T2 to test hypotheses of interest. An alternative approach is to fit a simple linear model and test hypotheses for each individual phenotype and then correct for multiplicity. However, even when the dimension of the multivariate phenotype is relatively small, say 5, such standard approaches can suffer from low statistical power in detecting the association between the multivariate phenotype and the covariates. The PRM generalizes a statistical method based on the principal component of heritability for association analysis in genetic studies of complex multivariate phenotypes. The key components of the PRM include an estimation procedure for extracting several principal directions of multivariate phenotypes relating to covariates and a test procedure based on a wild-bootstrap method for testing the association between the weighted multivariate phenotype and explanatory variables. Simulation studies and an imaging genetic dataset are used to examine the finite sample performance of the PRM.

Keywords: imaging genetics, multivariate phenotype, projection regression model, single nucleotide polymorphism, wild bootstrap

1 Introduction

Many studies have collected multivariate phenotypes in order to investigate their relationship with explanatory variables of interest. For example, multivariate imaging phenotypes have been widely collected to characterize brain structures and their functions [Knickmeyer et al., 2008, Lenroot]. Such multivariate imaging phenotypes include diffusion tensors, deformation tensors of deformation fields, the hemodynamic response function of functional magnetic resonance images, and the spherical harmonic boundary description of subcortical structures, among many others [Basser et al., 1994, Zhu et al., 2007, Styner et al., 2004, Friston, 2007, Huettel et al., 2004, Taylor and Worsley, 2008, Worsley et al., 2004]. Statistical analysis of these multivariate imaging phenotypes with explanatory variables can lead to a better understanding of the progression of neuropsychiatric and neurodegenerative diseases and of normal brain development and aging [Chung et al., 2010, Styner et al., 2003, 2004, Friston, 2007, Huettel et al., 2004, Taylor and Worsley, 2008, Worsley et al., 2004].

There are four commonly used methods to delineate the association between multivariate phenotypes and covariates. A standard statistical approach to this problem is to fit a multivariate linear model (MLM) to the multivariate phenotype and then use Hotelling’s T2 to test hypotheses of interest [Chung et al., 2010, Taylor and Worsley, 2008, Worsley et al., 2004]. Since the MLM involves estimating the covariance matrix of all individual phenotypes, it is limited to the case in which the dimension of the multivariate phenotype is small relative to the sample size. An alternative approach is to fit a marginal linear model and calculate a test statistic for each component of the multivariate phenotype, and then to combine all tests with their associated p–values to test an overall hypothesis across all individual phenotypes [Heller et al., 2007, Lazar et al., 2002]. However, this method ignores the potential correlation among the individual phenotypes. A third approach is to directly reduce the dimension of the multivariate phenotype by using dimension reduction techniques, such as principal component analysis (PCA), and then to fit an MLM to the reduced multivariate phenotype and covariates [Formisano et al., 2008, Teipel et al., 2007, Rowe and Hoffmann, 2006, Kherif et al., 2002]. This method does not properly account for the variation of covariates and their association with the individual phenotypes. Finally, partial least squares regression (PLSR) finds a linear regression model by projecting the multivariate phenotype and the explanatory variables to a new and smaller space [Chun and Keles, 2010, Krishnan et al., 2011]. This method focuses on prediction and classification, rather than on investigating the association between the multivariate phenotype and the covariates of interest.

There is a large body of research on establishing the association between multivariate phenotypes and genotypes (e.g., single nucleotide polymorphisms (SNPs)) in genome-wide association studies [Chun and Keles, 2010, Klei et al., 2008, Mukhopadhyay et al., 2010, Yang et al., 2010, Roeder et al., 2005, Yu et al., 2010, Xu et al., 2003, Ding et al., 2009, Zhu and Zhang, 2009]. Similar statistical methods for multivariate phenotypes have been extensively developed and examined for the association between multivariate phenotypes and SNPs. For instance, in simulation studies, Zhu and Zhang [2009] demonstrated that testing all components of the multivariate phenotype simultaneously performs better than testing each phenotype individually in various models for family-based association studies. As pointed out by Klei et al. [2008] and many others, testing each phenotype individually requires a substantial penalty for controlling multiplicity. An alternative approach is to create a single ‘pseudo’ phenotype, which is a weighted sum of all individual phenotypes from the same subject, and then carry out a univariate analysis [Amos et al., 1990, Amos and Laing, 1993, Ott and Rabinowitz, 1999, Lange et al., 2004, Klei et al., 2008]. The optimal weighted sum of individual phenotypes is based on the principal component of heritability (PCH) [Ott and Rabinowitz, 1999, Lange et al., 2004, Klei et al., 2008]. The idea of the PCH is to project the multivariate phenotype from a high dimensional space to a low dimensional space, while accounting for the association between the multivariate phenotype and genotype [Klei et al., 2008]. It has been shown that the PCH has relatively higher power, but it may require additional computational time to estimate the appropriate weights [Klei et al., 2008].

The aim of this paper is to develop a new statistical framework, called the projection regression model (PRM), which overcomes the limitations mentioned above. The PRM includes simultaneous selection, estimation, and testing in a general regression setting. We develop an estimation procedure for estimating the optimal weights of the multivariate response in the PRM, while properly accounting for the space of explanatory variables. In particular, the PRM can accommodate the case in which the sample size is smaller than the dimension of the multivariate phenotype. We also propose a test procedure based on a wild-bootstrap method, which leads to a single p–value to test for the association between the projected weighted multivariate phenotype and the covariates of interest, such as genetic markers. This test procedure controls the overall type I error, while avoiding the use of an inefficient sample splitting method [Mukhopadhyay et al., 2010, Yang et al., 2010]. Simulation studies are carried out to compare the PRM with several commonly used methods for the multivariate phenotype in terms of both the type I and II error rates.

Section 2 of this paper introduces the PRM and its associated estimation and testing procedures. In Section 3, we conduct simulation studies with a known ground truth to examine the finite sample performance of the PRM and several other statistical methods, and then illustrate an application of the PRM to an imaging genetic data set. We present concluding remarks in Section 4.

2 Methods

2.1 Projection Regression Model

Suppose that we observe a q × 1 multivariate phenotype yi = (yi1, …, yiq)T and a p × 1 vector of covariates of interest xi = (xi1, …, xip)T for i = 1, …, N. We consider a commonly used MLM as follows:

$$Y = XB + E, \tag{1}$$

where Y is an N × q matrix formed by the q × 1 multivariate phenotype of each subject in each row, X is an N × p matrix consisting of the p × 1 vector of covariates of each subject in each row, and B = (βjl) is a p × q matrix, in which βjl represents the effect of the j–th covariate on the l–th response. Moreover, E is an N × q matrix representing the random errors, and $e_i^T$ is the i–th row of E, where $e_i$ has zero mean and covariance matrix VR. Assuming that xi and ei are independent, the covariance of yi is given by

$$\mathrm{Cov}(y_i) = V_Q + V_R, \qquad V_Q = B^T \mathrm{Cov}(x_i) B, \tag{2}$$

where VQ represents the variation coming from the covariates of interest.

Most scientific questions require the comparison across two (or more) diagnostic groups and the association of the genetic marker for each component of yi. Such questions can often be formulated as linear hypotheses of B as follows:

$$H_0: CB = B_0 \quad \text{versus} \quad H_1: CB \neq B_0, \tag{3}$$

where C is an r × p matrix of full row rank and B0 is an r × q matrix of constants.

We consider a projection of yi via a q × k weight matrix W and create a k × 1 projection vector WTyi such that k << q. Then, we propose a projection regression model (PRM) given by

$$W^T y_i = \beta_w^T x_i + \varepsilon_i, \tag{4}$$

where βw is a p × k regression coefficient matrix and εi is the random vector with Cov(εi) = Σi. The PRM (4) is a heteroscedastic multivariate linear model. When k = 1 and Σi = Σ for all i, PRM reduces to the pseudo-phenotype model considered in [Amos et al., 1990, Amos and Laing, 1993, Ott and Rabinowitz, 1999, Lange et al., 2004, Klei et al., 2008]. A direct connection between models (1) and (4) is that model (1) can be rewritten as

$$W^T y_i = (BW)^T x_i + W^T e_i. \tag{5}$$

Therefore, if W in (4) were known, then one would directly perform an appropriate hypothesis test to address specific research hypotheses as follows:

$$H_{0W}: C\beta_w = b_0 \quad \text{versus} \quad H_{1W}: C\beta_w \neq b_0, \tag{6}$$

where b0 is an r × k matrix of constants. Based on model (5), the null hypothesis of (6) can be written as Cβw = CBW = B0W = b0.
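The relation βw = BW that links models (1) and (4) can be checked numerically. The following is a minimal sketch under assumed settings: the sample sizes, the random seed and the arbitrary weight matrix `W` are illustrative choices and are not taken from this paper.

```python
import numpy as np

rng = np.random.default_rng(0)
N, p, q, k = 200, 3, 6, 2             # illustrative sizes (assumed)

X = rng.normal(size=(N, p))           # N x p covariate matrix
B = rng.normal(size=(p, q))           # p x q coefficient matrix of model (1)
E = rng.normal(size=(N, q))           # N x q random errors
Y = X @ B + E                         # multivariate linear model (1)

W = rng.normal(size=(q, k))           # arbitrary q x k weight matrix

# Ordinary least squares for the full model and for the projected model
B_hat = np.linalg.lstsq(X, Y, rcond=None)[0]            # p x q
beta_w_hat = np.linalg.lstsq(X, Y @ W, rcond=None)[0]   # p x k

# Regressing the projected phenotype on X gives the same estimate as B_hat @ W
projection_identity_holds = np.allclose(beta_w_hat, B_hat @ W)
```

Because least squares is linear in the response, the identity holds exactly for the estimates as well as for the population coefficients, which is why a hypothesis on CB translates directly into one on Cβw once W is fixed.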

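Let C1 be a (p − r) × p matrix such that

$$\operatorname{rank}\begin{pmatrix} C \\ C_1 \end{pmatrix} = p. \tag{7}$$

Let $D = (C^T, C_1^T)^T$ be a p × p matrix and $\tilde x_i = D^{-T} x_i = (\tilde x_{i1}^T, \tilde x_{i2}^T)^T$ be a p × 1 vector, where $\tilde x_{i1}$ and $\tilde x_{i2}$ are, respectively, the r × 1 and (p − r) × 1 subvectors of $\tilde x_i$. We define $\tilde\beta_w$ to be $D\beta_w$ or $DBW$. We consider $\tilde B = DB = (\tilde B_1^T, \tilde B_2^T)^T$, where $\tilde B_1 = CB$ and $\tilde B_2 = C_1 B$ are, respectively, the first r rows and the last p − r rows of $\tilde B$. Therefore, model (5) can be rewritten as

$$W^T y_i = W^T \tilde B_1^T \tilde x_{i1} + W^T \tilde B_2^T \tilde x_{i2} + W^T e_i. \tag{8}$$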

The next issue is to determine an optimal q × k matrix W under a suitable criterion. In PCH [Ott and Rabinowitz, 1999, Lange et al., 2004, Klei et al., 2008], the heritability ratio is defined by

$$h(w) = \frac{w^T V_Q w}{w^T (V_Q + V_R) w}. \tag{9}$$

The heritability ratio characterizes the ratio of the variation from the genetic biomarkers xi to the total variation of responses yi. Maximizing h(w) leads to the optimal W.

Instead of directly using the heritability ratio h(w), we consider a generalized ‘heritability’ ratio H(w) for a given q × 1 vector w as follows:

$$H(w) = \frac{w^T (\tilde B_1 - B_0)^T \mathrm{Cov}(\tilde x_{i1}) (\tilde B_1 - B_0) w}{w^T V_R w}. \tag{10}$$

The H(w) can be interpreted as the ratio of the variance of $w^T(\tilde B_1 - B_0)^T \tilde x_{i1}$ relative to that of wTei under the null hypothesis. We require that the optimal W enhances the power of detecting the association between WTyi and xi for the null hypothesis (6). Thus, we need to find a W to project the data into a space containing the most information on the null hypothesis of (3). Let ΣX = Cov(x). It can be shown that H(w) reduces to

$$H(w) = \frac{w^T (CB - B_0)^T (D^{-T} \Sigma_X D^{-1})_{(1,1)} (CB - B_0) w}{w^T V_R w}, \tag{11}$$

where (D−TΣXD−1)(1,1) is the upper r × r submatrix of D−TΣXD−1. When C = [Ir 0], H(w) reduces to the ratio of $w^T (CB - B_0)^T (\Sigma_X)_{(1,1)} (CB - B_0) w$ to wTVRw, in which (ΣX)(1,1) is the upper r × r submatrix of ΣX.

When VR is positive definite, maximizing (11) is equivalent to maximizing

$$\frac{\tilde w^T V_{C,X} \tilde w}{\tilde w^T \tilde w}, \tag{12}$$

where L is the lower triangular matrix obtained from the Cholesky decomposition VR = LLT, $\tilde w = L^T w$, and $V_{C,X} = L^{-1} (CB - B_0)^T (D^{-T} \Sigma_X D^{-1})_{(1,1)} (CB - B_0) L^{-T}$. Let v be the eigenvector corresponding to the largest eigenvalue of the matrix VC,X; then (12) is maximized when $\tilde w$ equals v. Hence, (11) is maximized when w equals L−Tv. If q is relatively small compared to N, based on (11), we take the q × k matrix W in (4) by choosing the sparse eigenvectors associated with the k largest eigenvalues of VC,X using PCA. However, when q is relatively large compared to N, calculating L−T and the eigenvectors of VC,X can be challenging, which makes the estimated optimal weight matrix W very unstable.
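The Cholesky-plus-eigenvector argument above can be illustrated with a small numerical sketch. The code below maximizes a generic ratio w'Mw / w'VRw for a positive semidefinite "signal" matrix M and a positive definite VR. The function names, the random test matrices and the use of dense (non-sparse) eigenvectors are assumptions made for illustration only.

```python
import numpy as np

def optimal_weights(M, V_R, k=1):
    """Return q x k weights maximizing w' M w / w' V_R w (V_R positive definite)."""
    L = np.linalg.cholesky(V_R)                  # V_R = L L^T, L lower triangular
    L_inv = np.linalg.inv(L)
    V = L_inv @ M @ L_inv.T                      # whitened signal matrix
    eigvals, eigvecs = np.linalg.eigh((V + V.T) / 2)
    order = np.argsort(eigvals)[::-1][:k]        # indices of the k largest eigenvalues
    W = L_inv.T @ eigvecs[:, order]              # back-transform: w = L^{-T} v
    return W, eigvals[order]

def ratio(w, M, V_R):
    """Generalized Rayleigh quotient w' M w / w' V_R w."""
    return float(w @ M @ w) / float(w @ V_R @ w)

rng = np.random.default_rng(1)
q = 5
A = rng.normal(size=(q, q))
V_R = A @ A.T + q * np.eye(q)                    # positive definite by construction
b = rng.normal(size=(2, q))
M = b.T @ b                                      # rank-2 positive semidefinite matrix

W, top = optimal_weights(M, V_R, k=1)
best = ratio(W[:, 0], M, V_R)                    # equals the largest eigenvalue
random_ratios = [ratio(rng.normal(size=q), M, V_R) for _ in range(200)]
```

In this sketch the attained ratio coincides with the leading eigenvalue of the whitened matrix, and no randomly drawn direction exceeds it.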

2.2 Estimation procedure for optimal weights

We develop an estimation procedure for estimating the optimal weights. This procedure consists of three major steps: (i) a pre-screening process for eliminating ‘unrelated’ measures; (ii) a shrinkage procedure for approximating VC,X and VR; and (iii) a sparse principal component analysis (SPCA) procedure for calculating the eigenvalue-eigenvector pairs of VC,X. Each step is implemented as follows.

The pre-screening procedure ranks individual phenotypes according to marginal utility and eliminates ‘unrelated’ phenotypes when q is large relative to N, say q ≥ N/3. This procedure mimics various screening methods, such as sure independence screening (SIS), for discarding covariates in high-dimensional linear models [Fan and Lv, 2010]. In Step 1, we fit q marginal linear regression models to individual phenotypes and the covariates of interest. In Step 2, we calculate the corresponding Wald-type test statistics under the same null hypothesis (6), and the respective p-values from a chi-square distribution with r degrees of freedom for each individual phenotype. In Step 3, after ordering the q p-values from the smallest to the largest, we select only the q* phenotypes with the smallest p-values, where q* = [q/log(q)] + 1 if q ≤ N and q* = [N/log(N)] + 1 if q > N, and [x] represents the largest integer smaller than x. Thus, we set the weights for the unselected individual phenotypes to be zero, or equivalently, we consider a reduced response vector, denoted as $y_i^*$.
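The three screening steps can be sketched as follows for the special case of a single tested covariate (r = 1), where the chi-square p-value with one degree of freedom has a closed form through the complementary error function. The function names, the simulated data, the use of the floor function for [x] and the cap of q* at q are assumptions of this sketch rather than details given in the paper.

```python
import math
import numpy as np

def n_selected(q, N):
    """q* = [q/log(q)] + 1 if q <= N, else [N/log(N)] + 1 (capped at q; requires q >= 2)."""
    m = q if q <= N else N
    return min(q, int(math.floor(m / math.log(m))) + 1)

def prescreen(Y, X, j):
    """Rank phenotypes by the marginal Wald test of covariate column j (r = 1)."""
    N, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    B_hat = XtX_inv @ X.T @ Y                       # column l is the l-th marginal fit
    resid = Y - X @ B_hat
    sigma2 = (resid ** 2).sum(axis=0) / (N - p)     # residual variance per phenotype
    wald = B_hat[j] ** 2 / (sigma2 * XtX_inv[j, j])
    # Survival function of a chi-square with 1 df: P(chi2 > t) = erfc(sqrt(t / 2))
    pvals = np.array([math.erfc(math.sqrt(t / 2.0)) for t in wald])
    keep = np.sort(np.argsort(pvals)[: n_selected(Y.shape[1], N)])
    return keep, pvals

rng = np.random.default_rng(2)
N, q = 150, 60
snp = rng.binomial(2, 0.5, size=N).astype(float)
X = np.column_stack([np.ones(N), snp, rng.normal(size=N)])
B = np.zeros((3, q))
B[1, :3] = 1.0                                      # only the first 3 phenotypes carry signal
Y = X @ B + rng.normal(size=(N, q))

keep, pvals = prescreen(Y, X, j=1)                  # indices of retained phenotypes
```

For r > 1 the same ranking would use a chi-square distribution with r degrees of freedom, which needs a statistics library or a series expansion that is omitted here.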

The shrinkage procedure is to approximate VC,X and VR as follows. In Step 1, we refit the multivariate linear regression in (1) with the selected individual phenotypes in as responses conditional on X. Let B* be the regression parameter matrix for the selected individual phenotypes. We estimate B* by its least squares estimator, denoted by , which equals . In Step 2, we estimate Cov(X) by using its empirical estimator, denoted by , and then approximate by . In Step 3, we calculate a shrinkage estimate of VR by following [Ledoit and Wolf, 2004]. Let CE be the sample covariance matrix of , μE = q−1tr(CE) and , in which . Finally, we approximate VR and VC,X by using and , respectively. We use mainly due to its computational efficiency and relatively nice properties [Ledoit and Wolf, 2004].
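For readers unfamiliar with the estimator of Ledoit and Wolf [2004], the sketch below shrinks a sample covariance matrix toward a scaled identity, with `mu` playing the role of μE = q−1tr(CE). It follows the general form of that estimator as commonly stated; the function name, the 1/n scaling of the sample covariance and the simulated residual matrix are assumptions of this sketch and are not specified in this paper.

```python
import numpy as np

def ledoit_wolf_shrinkage(R):
    """Shrink the sample covariance of the rows of R toward mu * I."""
    n, q = R.shape
    R = R - R.mean(axis=0)
    S = R.T @ R / n                                   # sample covariance (1/n scaling)
    mu = np.trace(S) / q                              # average eigenvalue of S
    d2 = np.sum((S - mu * np.eye(q)) ** 2) / q        # dispersion of S around mu * I
    # Average squared distance between the rank-one terms r_i r_i^T and S
    b2_bar = sum(np.sum((np.outer(r, r) - S) ** 2) for r in R) / (q * n ** 2)
    b2 = min(b2_bar, d2)
    rho = b2 / d2 if d2 > 0 else 1.0                  # shrinkage intensity in [0, 1]
    return rho * mu * np.eye(q) + (1.0 - rho) * S, rho

rng = np.random.default_rng(3)
n, q = 20, 40                                         # more phenotypes than subjects
R = rng.normal(size=(n, q))                           # stand-in for regression residuals
V_hat, rho = ledoit_wolf_shrinkage(R)

R_centered = R - R.mean(axis=0)
S = R_centered.T @ R_centered / n                     # singular because q > n
```

The shrunken matrix stays positive definite even though the raw sample covariance is singular when q exceeds n, which is what makes the Cholesky factor in the weight calculation computable.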

The SPCA procedure is to estimate the sparse eigenvectors and eigenvalues of V̂R,S, by following Zou et al. [2006] as follows. The key idea of this SPCA process is to transform the eigenvalue-eigenvector problem into an elastic net problem [Zou et al., 2006], which can be solved efficiently. We include the key steps here for completeness. In Step 1, we choose a value of k so that the proportion of variance explained is greater than a certain threshold, such as 80%, to truncate the eigenvalues. Then, we calculate the loadings of the first k ordinary principal components of V̂R,S, denoted as α. In Step 2, given a fixed α, we solve the following naive elastic net problem: for j = 1, …, k,

| (13) |

where |·|1 denotes the L1 norm. Moreover, λ1,j and λ2,j are tuning parameters and selected simultaneously by using a BIC-type selection criterion [Leng and Wang, 2009]. We calculate the BIC-type criterion given by

| (14) |

where df(λ1,j, λ2,j) is the number of nonzero coefficients in . In Step 3, for each fixed , we calculate the singular value decomposition of , and then we update αj = UVT for j = 1, …, k. In Step 4, we repeat steps 2-3, until γ converges. In Step 5, we normalize γ, and then set for j = 1, …, k. The optimal weight wj is estimated by using for j = 1, …, k and W = [w1, …, wk].
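Step 1 of the SPCA procedure, the choice of k from the proportion of variance explained, can be sketched as follows. Only this truncation step is shown; the elastic net iterations of Steps 2 to 5 are not reproduced. The function name and the toy covariance matrix are assumptions, and the sketch uses "at least" the threshold, which may differ from a strict inequality at exact ties.

```python
import numpy as np

def choose_k(V, threshold=0.80):
    """Smallest k whose leading eigenvalues explain at least `threshold` of the variance."""
    eigvals = np.linalg.eigvalsh((V + V.T) / 2)[::-1]    # eigenvalues in descending order
    eigvals = np.clip(eigvals, 0.0, None)                # guard against tiny negative values
    explained = np.cumsum(eigvals) / eigvals.sum()       # cumulative proportion explained
    k = int(np.searchsorted(explained, threshold) + 1)   # first index reaching the threshold
    return k, explained

V = np.diag([6.0, 3.0, 1.0])          # toy matrix: proportions 0.6, 0.9, 1.0
k80, explained = choose_k(V, threshold=0.80)
k50, _ = choose_k(V, threshold=0.50)
```

With this toy matrix the first component explains 60% of the variance and the first two explain 90%, so an 80% threshold keeps two components.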

Finally, to further reduce the dimension of the pre-screened Y*, we apply the SPCA procedure repeatedly to estimate W by selecting ‘related’ individual phenotypes suggested by the estimated weight matrix W obtained from the previous iteration. Specifically, we eliminate the responses corresponding to the zero rows in the sparse weight matrix W obtained from the SPCA procedure in order to reduce the screened response vector Y* to an even smaller dimension. Subsequently, we rerun the shrinkage and SPCA procedures on the new Y* to calculate the new weight matrix W. This weight estimation process is iterated until W converges. Our simulation studies show that in most cases, the process converges in only two iterations.

2.3 Test Procedure for Testing Hypotheses

We develop several statistics for testing H0W against H1W for the PRM (4) as follows. Given the estimated weight matrix W, we can calculate the ordinary least squares estimate of βw, given by $\hat\beta_w = (X^T X)^{-1} X^T Y W$. Subsequently, to calculate a statistic for testing H0W against H1W, we calculate a k × k matrix, denoted by TN, as follows:

| (15) |

where is a consistent estimate of the covariance matrix of given by

| (16) |

Moreover, and where is the restricted least squares (RLS) estimate of β under H0, and is given by

| (17) |

When k = 1, TN is a Wald-type (or Hotelling’s T2) test statistic. When k > 1, we define three test statistics based on the functionals of TN as follows:

$$W_N = \det(T_N), \qquad Tr_N = \mathrm{trace}(T_N), \qquad Roy_N = \max\{\mathrm{eig}(T_N)\}, \tag{18}$$

where det, trace, and eig denote the determinant, trace and eigenvalues of a symmetric matrix, respectively. When k = 1, all these statistics reduce to TN. For simplicity, we focus on TrN throughout the paper.
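A short sketch of how scalar statistics can be derived from a symmetric k × k matrix TN is given below. The determinant and trace follow the text directly; taking the largest eigenvalue as the third functional is an assumption of this sketch, as are the function name and the example matrices.

```python
import numpy as np

def summary_statistics(T):
    """Scalar functionals of a symmetric k x k matrix T."""
    T = np.atleast_2d(np.asarray(T, dtype=float))
    eig = np.linalg.eigvalsh((T + T.T) / 2)        # eigenvalues in ascending order
    return {
        "det": float(np.linalg.det(T)),
        "trace": float(np.trace(T)),
        "max_eig": float(eig[-1]),                 # largest eigenvalue (assumed functional)
    }

stats_k1 = summary_statistics([[3.5]])                     # k = 1
stats_k2 = summary_statistics([[2.0, 0.0], [0.0, 3.0]])    # k = 2
```

When k = 1 the three values coincide with TN itself, matching the remark that all of these statistics reduce to TN in that case.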

We present a wild bootstrap method to improve the finite sample performance of the test statistic TrN in (18) in testing the null hypothesis H0. First, we fit model (1) under the null hypothesis (3) and calculate the estimated multivariate regression coefficients under (3), denoted by $\hat B^{(0)}$, with corresponding residuals $\hat e_i = y_i - \hat B^{(0)T} x_i$ for i = 1, …, N. Then, we generate G bootstrap samples as follows:

$$y_i^{(g)} = \hat B^{(0)T} x_i + \eta_i^{(g)} \hat e_i, \qquad i = 1, \ldots, N, \quad g = 1, \ldots, G, \tag{19}$$

where $\eta_i^{(g)}$ are independently and identically distributed as a distribution d, in which d is chosen as

| (20) |

For each generated wild-bootstrap sample, we repeat the estimation procedure for estimating the optimal weights and the calculation of the test statistic, denoted by $Tr_N^{(g)}$. Subsequently, the p-value of TrN is computed as $G^{-1} \sum_{g=1}^{G} 1(Tr_N^{(g)} \geq Tr_N)$, where 1(·) is an indicator function.
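The resampling logic can be sketched in a simplified setting. The code below tests whether the coefficients of selected columns of X are zero, fits the model without those columns to obtain null residuals, and multiplies each subject's residual vector by a random sign. Several choices are assumptions of this sketch and not the paper's specification: the Rademacher (±1 with probability 1/2) multiplier distribution, the helper names, and the stand-in statistic `coef_norm_stat`, which replaces the full weight re-estimation and TrN calculation.

```python
import numpy as np

def coef_norm_stat(Y, X, tested=(1,)):
    """Stand-in statistic: squared norm of the OLS coefficients of the tested columns."""
    B_hat = np.linalg.lstsq(X, Y, rcond=None)[0]
    return float(np.sum(B_hat[list(tested)] ** 2))

def wild_bootstrap_pvalue(Y, X, tested, statistic, G=200, seed=0):
    """Wild-bootstrap p-value for H0: coefficients of the `tested` columns of X are zero."""
    rng = np.random.default_rng(seed)
    N = X.shape[0]
    nuisance = [j for j in range(X.shape[1]) if j not in tested]
    X0 = X[:, nuisance]
    B0_hat = np.linalg.lstsq(X0, Y, rcond=None)[0]    # fit under the null hypothesis
    fitted0 = X0 @ B0_hat
    resid0 = Y - fitted0                              # residuals under the null
    t_obs = statistic(Y, X)
    t_boot = np.empty(G)
    for g in range(G):
        eta = rng.choice([-1.0, 1.0], size=(N, 1))    # one random sign per subject (assumed)
        t_boot[g] = statistic(fitted0 + eta * resid0, X)
    return float(np.mean(t_boot >= t_obs))            # proportion at least as extreme

rng = np.random.default_rng(4)
N = 100
g_snp = rng.binomial(2, 0.3, size=N).astype(float)
X = np.column_stack([np.ones(N), g_snp, rng.normal(size=N)])
noise = rng.normal(size=(N, 3))
Y_alt = 2.0 * g_snp[:, None] + noise                  # strong SNP effect on all 3 phenotypes
Y_null = noise                                        # no SNP effect

p_alt = wild_bootstrap_pvalue(Y_alt, X, tested=(1,), statistic=coef_norm_stat)
p_null = wild_bootstrap_pvalue(Y_null, X, tested=(1,), statistic=coef_norm_stat)
```

Using one multiplier per subject keeps the correlation among that subject's phenotypes intact in every bootstrap sample, while the sign flips break any association with the tested covariate.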

2.4 Summary

We summarize the key steps of the PRM as follows:

Step (i). Fit q marginal linear regression models with the univariate dependent variable as each single phenotype and the independent variables as the covariates of interest.

Step (ii). Calculate q Wald-type test statistics under the same null hypothesis (6) and their corresponding p-values.

Step (iii). Select the responses with the smallest p-values and establish the shrunken response space Y*.

Step (iv). Apply SPCA to estimate the weight W based on Y*.

Step (v). Project Y to WTY and regress WTY on X.

Step (vi). Calculate the Wald-type test statistic TrN;

Step (vii). Generate G bootstrap samples and repeat Steps (i) to (vi) for each bootstrap sample;

Step (viii). Approximate the p-value of TrN.

3 Results

3.1 Simulation Studies

We carried out two scenarios of simulation studies to examine the finite-sample performance of the PRM. The simulation studies were designed to establish the association between a relatively high-dimensional phenotype and a commonly used genetic marker (e.g., SNP), while adjusting for age and other environmental factors. The first scenario focuses on the case in which q is smaller than the sample size N. The second scenario focuses on the case in which q is comparable to the sample size N.

We set q and then simulated the multivariate phenotype according to model (1). The random errors were simulated from a multivariate normal distribution with mean 0 and covariance matrix with diagonal elements equal to 1. For the off-diagonal elements in the covariance matrix, we categorized each component of the multivariate phenotype into three categories: high correlation (0.6), medium correlation (0.3), and very low correlation (0.1) with the corresponding number of components (1, 1, q – 2) in each category. Specifically, we set the correlation between the first and second random errors as 0.6, those between the first random error and all others to be 0.3, and others to be 0.1. In the covariate matrix, we included a SNP, a diagnostic status as a binary variable with probability 0.5, and 3 additional continuous covariates. We simulated the additive SNP effect under different minor allele frequencies (MAFs). We simulated the three additional continuous covariates from a multivariate normal distribution with mean 0, standard deviation 1, and equal correlation 0.3. Our hypothesis of interest is to test the SNP effect on the multivariate phenotype. We set the number of the repetitions to be 150 and the number of wild bootstrap samples to be 250.
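The data-generating design described above can be sketched as follows. The correlation structure and covariate distributions follow the text; the intercept column, the binomial (Hardy-Weinberg) draw for the additive SNP coding, the effect sizes in `B` and the function names are assumptions added for illustration.

```python
import numpy as np

def error_covariance(q):
    """Unit variances; corr(1,2) = 0.6, corr(1,j) = 0.3 for j > 2, all other pairs 0.1."""
    S = np.full((q, q), 0.1)
    S[0, :] = S[:, 0] = 0.3
    S[0, 1] = S[1, 0] = 0.6
    np.fill_diagonal(S, 1.0)
    return S

def simulate_covariates(N, maf, rng):
    """Intercept, additive SNP, binary diagnostic status and 3 correlated covariates."""
    snp = rng.binomial(2, maf, size=N).astype(float)     # 0/1/2 minor alleles (HWE assumed)
    status = rng.binomial(1, 0.5, size=N).astype(float)  # diagnostic status, probability 0.5
    C = np.full((3, 3), 0.3)
    np.fill_diagonal(C, 1.0)                             # unit variance, correlation 0.3
    cont = rng.multivariate_normal(np.zeros(3), C, size=N)
    return np.column_stack([np.ones(N), snp, status, cont])

rng = np.random.default_rng(5)
N, q, maf = 150, 5, 0.5
S = error_covariance(q)
X = simulate_covariates(N, maf, rng)                     # N x 6 design matrix
B = np.zeros((X.shape[1], q))
B[1, :] = 0.15                                           # illustrative SNP effects (assumed)
E = rng.multivariate_normal(np.zeros(q), S, size=N)
Y = X @ B + E                                            # model (1)
```

The error covariance built this way is positive definite for every q, so the multivariate normal draw is well defined at all the dimensions considered in the two scenarios.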

3.1.1 Scenario I

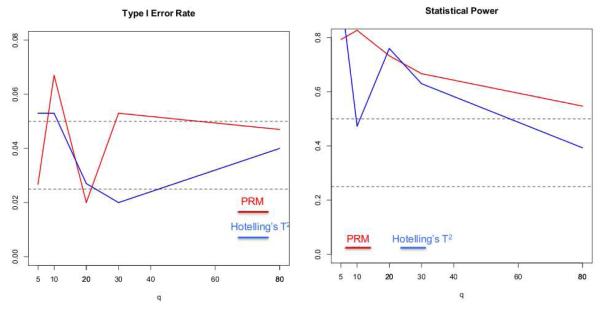

In the first scenario, we set the sample size N to be 150 and the MAF to be 0.5. The values of q were chosen to be 5, 10, 20, 30, 80 and 100, respectively. The first five individual phenotypes were associated with the SNP, with coefficients independently generated from a normal distribution with mean 0.15 and variance 0.05, and the 5th phenotype was also associated with disease status with a regression coefficient of 0.5. We applied both the PRM and Hotelling’s T2 test to each simulated dataset in order to examine the type I and II error rates at the 5% significance level. Inspecting Figure 1 reveals that the type I errors are well controlled for both methods. Moreover, as q increases, the power in detecting the SNP effect decreases faster for Hotelling’s T2 test than for the PRM.

Fig. 1.

The comparison results of the PRM and Hotelling’s T2 test based on N = 150 and MAF=0.5: the type I error (the left panel) and power (the right panel). The upper and middle dashed lines in the left panel correspond to 0.05 and 0.025, respectively; and the upper and middle dashed lines in the right panel represent 0.5 and 0.25, respectively.

3.1.2 Scenario II

In the second scenario, we set q to be 50, 100, 150 and 200, respectively, and the sample size N to be 150, 200, 250 and 300, respectively. We generated the additive SNP effect under 6 different MAFs, which are 0.05, 0.1, 0.2, 0.3, 0.4 and 0.5, respectively. We considered two scenarios of the SNP effect. In the first scenario, only the first individual phenotype is associated with the SNP effect with regression coefficient being 0.5 and the second individual phenotype is associated with the disease status effect with regression coefficient being 0.5. Other individual phenotypes are not associated with any covariate. The second scenario is that the first 10 individual phenotypes are associated with the SNP. We generated the corresponding regression coefficients independently from a normal distribution with mean 0.5 and standard deviation 0.15. Moreover, we set the regression coefficient for the diagnosis status to be 0.5 for the 10th individual phenotype and all other regression coefficients to be zero.

We applied the PRM to the simulated data sets and compared it with two other methods, a component-wise method (CWM) and principal components regression (PCR), using a 5% significance level. The CWM fits a single linear regression to each individual phenotype with the same set of covariates and uses the false discovery rate (FDR) to test the additive SNP effect. The PCR extracts the first three principal components of the multivariate phenotype by using PCA and then fits a multivariate linear model to the extracted principal components with the same set of covariates. Hotelling’s T2 test is not considered here since it is invalid for q > N.
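One common way to control the FDR across the q component-wise tests is the Benjamini-Hochberg step-up procedure, sketched below. The text does not state which FDR procedure was used, so this choice, the function name, the example p-values and the rule of declaring an overall SNP effect when at least one phenotype is rejected are all assumptions of this sketch.

```python
import numpy as np

def benjamini_hochberg(pvals, alpha=0.05):
    """Boolean rejection mask from the Benjamini-Hochberg step-up procedure."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)                                  # indices sorted by p-value
    passed = p[order] <= alpha * np.arange(1, m + 1) / m   # compare p_(i) with i * alpha / m
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        last = np.max(np.nonzero(passed)[0])               # largest rank meeting its bound
        reject[order[: last + 1]] = True                   # reject everything up to that rank
    return reject

reject_a = benjamini_hochberg([0.01, 0.04, 0.03, 0.5])
reject_b = benjamini_hochberg([0.01, 0.02, 0.03, 0.5])
cwm_detects_effect = bool(reject_a.any())                  # overall decision (assumed rule)
```

In the first example only the smallest p-value meets its bound, so a single phenotype is rejected; in the second, the three smallest p-values all meet their bounds and are rejected together.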

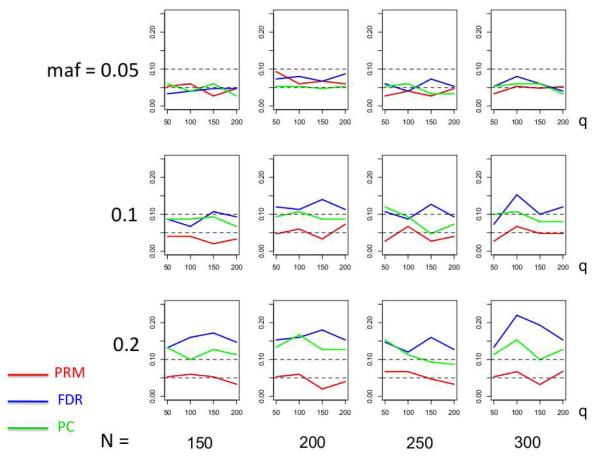

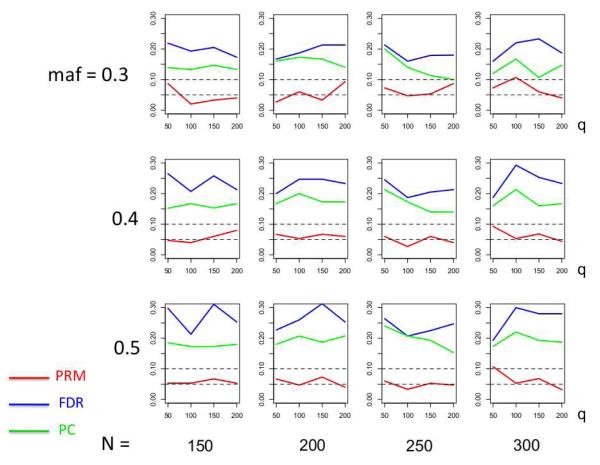

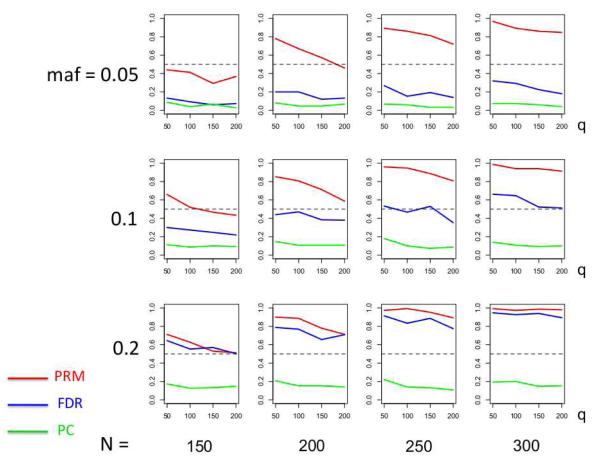

We observe that the type I error rates are well controlled and more stable for the PRM than for the CWM and PCR methods (Figures 2 and 3). When the SNP effect is sparse, the power of the PRM is generally higher than that of the CWM, particularly for SNPs with small MAF, and is uniformly higher than that of the PCR (Figures 4 and 5). As expected, increasing either the sample size N or the MAF enhances the statistical power in detecting the SNP effect, whereas increasing the number of responses q reduces it. When the SNP affects more phenotypes, the PRM is still comparable to the CWM and better than the PCR when the MAF is small (Figures 6 and 7). With increasing MAF, all three methods perform equally well.

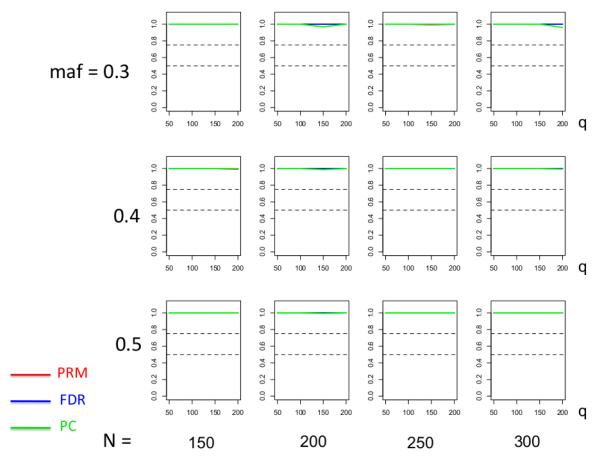

Fig. 2.

The type I error comparison results of the PRM, CWM, and PCR methods based on different sample sizes (150, 200, 250 and 300) and different minor allele frequencies (0.05, 0.1 and 0.2). The horizontal axis of each plot is the number of phenotypes q and the vertical axis is the type I error rate. The upper and middle dashed lines are 0.1 and 0.05, respectively.

Fig. 3.

The type I error comparison results of the PRM, CWM, and PCR methods based on different sample sizes (150, 200, 250 and 300) and different minor allele frequencies (0.3, 0.4 and 0.5). The horizontal axis of each plot is the number of phenotypes q and the vertical axis is the type I error rate. The upper and middle dashed lines are 0.1 and 0.05, respectively.

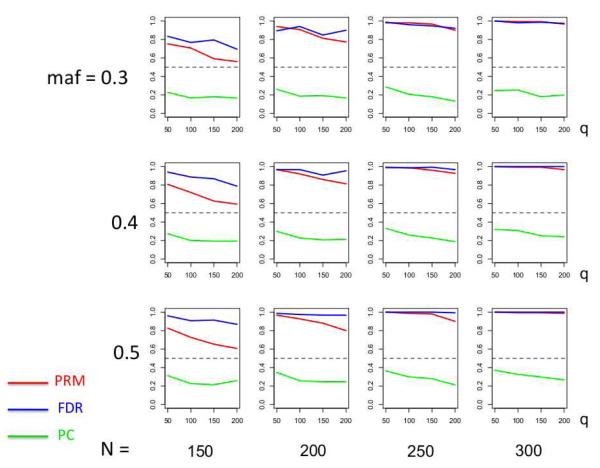

Fig. 4.

The power comparison results of the PRM, CWM, and PCR methods for the first scenario of sparse SNP effect based on different sample sizes (150, 200, 250 and 300) and different minor allele frequencies (0.05, 0.1 and 0.2). The horizontal axis of each plot is the number of phenotypes q and the vertical axis is the power. The dashed line represents a power of 50%.

Fig. 5.

The power comparison results of the PRM, CWM, and PCR methods for the first scenario of sparse SNP effect based on different sample sizes (150, 200, 250 and 300) and different minor allele frequencies (0.3, 0.4 and 0.5). The horizontal axis of each plot is the number of phenotypes q and the vertical axis is the power. The dashed line represents a power of 50%.

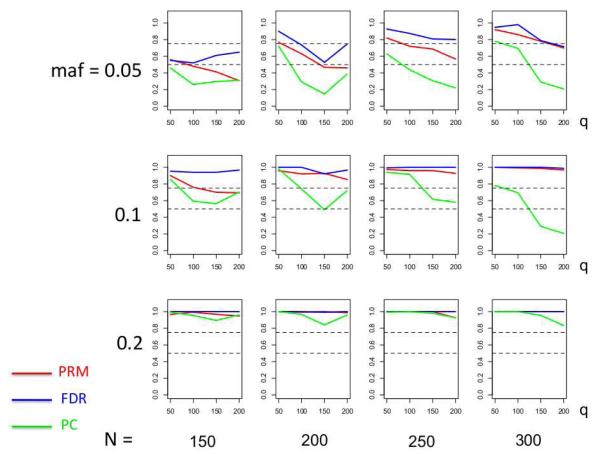

Fig. 6.

The power comparison results of the PRM, CWM, and PCR methods for the second scenario of multiple SNP effects based on different sample sizes (150, 200, 250 and 300) and different minor allele frequencies (0.05, 0.1 and 0.2). The horizontal axis of each plot is the number of phenotypes q and the vertical axis is the power. The upper and lower dashed lines represent the powers of 75% and 50%, respectively.

Fig. 7.

The power comparison results of the PRM, CWM, and PCR methods for the second scenario of multiple SNP effects based on different sample sizes (150, 200, 250 and 300) and different minor allele frequencies (0.3, 0.4 and 0.5). The horizontal axis of each plot is the number of phenotypes q and the vertical axis is the power. The upper and lower dashed lines represent the powers of 75% and 50%, respectively.

3.2 A neonatal study

The data set is from a neonatal study to assess the impact of common SNPs in putative psychiatric genes on early age brain development. The study recruited 237 pregnant women in their second trimester, who were free from abnormalities on fetal ultrasounds and major medical illness. Each subject had a single visit with a T1-weighted magnetic resonance image (MRI) and an assessment of demographic and genetic information. The MRI images were collected with a Siemens head-only 3T scanner using a 3D spoiled gradient (FLASH TR/TE/Flip Angle 15/7msec/25) with spatial resolution 1 × 1 × 1 mm3 voxel size. There are 47 regions of interest defined from the T1-weighted images by non-linear warping of a parcellation atlas template [Gilmore et al., 2007, Knickmeyer et al., 2008]. The demographic information includes gender, gestational age at birth in days, age after birth in days and intracranial volume (ICV) of the infants. There are 128 male and 109 female infants with average gestational age 264.0 (SD ±18.91), age after birth in days of 30.2 (SD ±17.80) and ICV 481799.9 (SD ±61528.96). Moreover, 9 genetic variants expressed in SNPs from 6 genes were collected and genotyped by Genome Quebec using Sequenom iPLEX Gold Genotyping Technology.

We applied the PRM to the multivariate phenotype consisting of the volumes of 47 regions of interest (ROIs), with covariates of interest including gender, gestational age, age after birth, ICV and the 9 SNPs with an additive effect. Each hypothesis tests a single SNP effect, while adjusting for other covariates including demographic information and other SNPs. We list the 9 SNPs with their corresponding genes and respective p-values in Table 1.

Table 1.

Selected SNPs with the corresponding genes and results for testing a single SNP effect while adjusting for demographic information and other SNPs

| Gene | Abbreviation | SNP | P-value |
|---|---|---|---|
| Catechol-O-methyltransferase | COMT | rs4680 | 0.88 |
| Disrupted-in-schizophrenia-1 | DISC1 | rs821616 | 0.75 |
| Disrupted-in-schizophrenia-1 | DISC1 | rs6675281 | 0.016 |
| Neuregulin 1 | NRG1 | rs35753505 | 0.0136 |
| Neuregulin 1 | NRG1 | rs6994992 | 0.51 |
| Estrogen Receptor Alpha | ESR1 | rs9340799 | 0.44 |
| Estrogen Receptor Alpha | ESR1 | rs2234693 | 0.57 |
| Brain-derived Neurotrophic Factor | BDNF | rs6265 | 0.60 |
| Glutamate Decarboxylase 1 | GAD1 (GAD67) | rs2270335 | 0.39 |

The results show that the SNPs rs6675281 and rs35753505 have a significant impact on early age brain development with p-values of 0.016 and 0.0136, respectively. This agrees with the existing literature. Specifically, DISC1 is known to be associated with mental illness, such as schizophrenia and bipolar disorder, and NRG1 is known to relate to brain tissue volume [Mata et al., 2009].

We also applied the PCR and CWM methods to the same data set with the same set of covariates for comparison. In the PCR application, the first three principal components of the 47 ROIs, which explain 74.4% of the variation, are regressed on the same group of covariates of interest and the same null hypotheses were tested for each SNP by Hotelling’s T2 test at the 0.05 significance level. None of the 9 SNPs were found to be significant for brain volume development. The details of the test results are given in the supplementary document. When analyzing the same data set by CWM with multiple comparisons adjusted by FDR, none of the 9 SNPs are detected to be significant for the 47 ROIs at the same testing level.

4 Discussion

We have developed the PRM, which provides a more effective analysis of the association between multivariate phenotypes and covariates of interest. The proposed methodology is demonstrated in a study investigating the impact of candidate SNPs on early age brain development. The PRM identified two previously reported SNPs, neither of which was detected by either the CWM or the PCR. This is consistent with the results of the simulation studies, which show that, compared to the two other methods, the PRM tends to have higher power for detecting the association between high dimensional phenotypes and the covariates of interest, with better type I error control. Hence we expect that this statistical tool will assist scientists in exploring new findings with more effective and reliable statistical results in high dimensional data settings. Future work includes establishing the asymptotic properties of the PRM under mild conditions, considering ultra-high dimensional phenotypes and genomic data, as well as extending the PRM to longitudinal and familial studies. User-friendly software to implement the PRM will be available to the public for non-profit purposes on our group website: http://www.bios.unc.edu/research/bias/software.html.

Supplementary Material

Acknowledgement

We thank the Editor and two referees for valuable suggestions, which helped to improve our presentation greatly. All authors have no conflict of interest to declare.

References

- Amos CI, Elston RC, Bonney GE, Keats BJB, Berenson GS. A multivariate method for detecting genetic linkage, with application to a pedigree with an adverse lipoprotein phenotype. Am. J. Hum. Genet. 1990;47:247–254.

- Amos CI, Laing AE. A comparison of univariate and multivariate tests for genetic linkage. Genetic Epidemiology. 1993;84:303–310. doi: 10.1002/gepi.1370100657.

- Basser PJ, Mattiello J, LeBihan D. MR diffusion tensor spectroscopy and imaging. Biophysical Journal. 1994;66:259–267. doi: 10.1016/S0006-3495(94)80775-1.

- Chun H, Keles S. Sparse partial least squares regression for simultaneous dimension reduction and variable selection. J. Roy. Statist. Soc. Ser. B. 2010;72:3–25. doi: 10.1111/j.1467-9868.2009.00723.x.

- Chung MK, Worsley KJ, Nacewicz BM, Dalton KM, Davidson RJ. General multivariate linear modeling of surface shapes using surfstat. NeuroImage. 2010;53:491–505. doi: 10.1016/j.neuroimage.2010.06.032.

- Ding X, Lange C, Xu X, Laird N. New powerful approaches for family-based association tests with longitudinal measurements. Annals of Human Genetics. 2009;73:74–83. doi: 10.1111/j.1469-1809.2008.00481.x.

- Fan J, Lv J. A selective overview of variable selection in high dimensional feature space (invited review article). Statistica Sinica. 2010;20:101–148.

- Formisano E, Martino FD, Valente G. Multivariate analysis of fmri time series: classification and regression of brain responses using machine learning. Magnetic Resonance Imaging. 2008;26:921–934. doi: 10.1016/j.mri.2008.01.052.

- Friston KJ. Statistical Parametric Mapping: the Analysis of Functional Brain Images. Academic Press; London: 2007.

- Gilmore JH, Lin W, Prastawa M, Looney CB, Vetsa YSK, Knickmeyer RC, Evans DD, Smith JK, Hamer RM, Lieberman J, Gerig G. Regional gray matter growth, sexual dimorphism, and cerebral asymmetry in the neonatal brain. Journal of Neuroscience. 2007;27:1255–1260. doi: 10.1523/JNEUROSCI.3339-06.2007.

- Heller R, Golland Y, Malach R, Benjaminia Y. Conjunction group analysis: an alternative to mixed/random effect analysis. Neuroimage. 2007;37:1178–1185. doi: 10.1016/j.neuroimage.2007.05.051. [DOI] [PubMed] [Google Scholar]

- Huettel SA, Song AW, McCarthy G. Functional Magnetic Resonance Imaging. Sinauer Associates, Inc; London: 2004. [Google Scholar]

- Kherif F, Poline JB, Flandin G, Benali H, Simon O, Dehaene S, Worsley K. Multivariate model specification for fmri data. Neuroimage. 2002;16:1068–1083. doi: 10.1006/nimg.2002.1094. [DOI] [PubMed] [Google Scholar]

- Klei L, Luca D, Devlin B, Roeder K. Pleiotropy and principle components of heritability combine to increase power for association. Genetic Epidemiology. 2008;32:9–19. doi: 10.1002/gepi.20257. [DOI] [PubMed] [Google Scholar]

- Knickmeyer RC, Gouttard S, Kang C, Evans D, Wilber K, Smith J, Hamer R, Lin W, Gerig G, Gilmore J. A structural mri study of human brain development from birth to 2 years. J Neurosci. 2008;28:12176–12182. doi: 10.1523/JNEUROSCI.3479-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krishnan A, Williams LJ, McIntosh AR, Abdi H. Partial least squares (pls) methods for neuroimaging: a tutorial and review. NeuroImage. 2011;56:455–475. doi: 10.1016/j.neuroimage.2010.07.034. [DOI] [PubMed] [Google Scholar]

- Lange C, van Steen K, Andrew T, Lyon H, DeMeo DL, Raby B, Murphy A, Silverman EK, MacGregor A, Weiss ST, Laird NM. A family-based association test for repeatedly measured quantitative traits adjusting for unknown environmental and/or polygenic effects. Stat Appl Genet Mol Biol. 2004;3:1–17. doi: 10.2202/1544-6115.1067. [DOI] [PubMed] [Google Scholar]

- Lazar N, Luna B, Sweeney J, Eddy W. Combiningbrains: a survey of methods for statistical pooling of information. NeuroImage. 2002;16:538–550. doi: 10.1006/nimg.2002.1107. [DOI] [PubMed] [Google Scholar]

- Ledoit O, Wolf M. A well-conditioned estimator for large-dimensional covariance matrices. Journal of Multivariate Analysis. 2004;88:365–411. [Google Scholar]

- Leng C, Wang H. On general adaptive sparse principal component analysis. Journal of Computational and Graphical Statistics. 2009;18:201–215. [Google Scholar]

- Lenroot R, Giedd J. Brain development in children and adolescents: insights from anatomical magnetic resonance imaging. Neurosci Biobehav Rev. 2006;30:718–729. doi: 10.1016/j.neubiorev.2006.06.001. [DOI] [PubMed] [Google Scholar]

- Mata I, Perez-Iglesias R, Roiz-Santianez R, Tordesillas-Gutierrez D, Gonzalez-Mandly A, Vazquez-Barquero JL, Crespo-Facorro BA. Neuregulin 1 variant is associated with increased lateral ventricle volume in patients with first-episode schizophrenia. Biological Psychiatry. 2009;65:535–540. doi: 10.1016/j.biopsych.2008.10.020. [DOI] [PubMed] [Google Scholar]

- Mukhopadhyay I, Feingold E, Weeks DE, Thalamuthu A. Association tests using kernelbased measures of multi-locus genotype similarity between individuals. Genetic Epidemiology. 2010;34:213–221. doi: 10.1002/gepi.20451. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ott J, Rabinowitz D. A principle-components approach based on heritability for combining phenotype information. Hum Heredity. 1999;49:106–111. doi: 10.1159/000022854. [DOI] [PubMed] [Google Scholar]

- Roeder K, Bacanu SA, Sonpar V, Zhang X, Devlin B. Analysis of single-locus tests to detect gene/disease associations. Genetic Epidemiology. 2005;28:207–219. doi: 10.1002/gepi.20050. [DOI] [PubMed] [Google Scholar]

- Rowe D, Ho mann R. Multivariate statistical analysis in fmri. IEEE Eng Med Biol Med. 2006;25:60–64. doi: 10.1109/memb.2006.1607670. [DOI] [PubMed] [Google Scholar]

- Styner M, Gerig G, Lieberman J, Jones D, Weinberger D. Statistical shape analysis of neuroanatomical structures based on medial models. Medical Image Analysis. 2003;3:207–220. doi: 10.1016/s1361-8415(02)00110-x. [DOI] [PubMed] [Google Scholar]

- Styner M, Lieberman J, Pantazis D, Gerig G. Boundary and medial shape analysis of the hippocampus in schizophrenia. Medical Image Analysis. 2004;4:197–203. doi: 10.1016/j.media.2004.06.004. [DOI] [PubMed] [Google Scholar]

- Taylor J, Worsley K. Random fields of multivariate test statistics, with applications to shape analysis. Annals of Statistics. 2008;36:1–27. [Google Scholar]

- Teipel SJ, Born C, Ewers M, Bokde ALW, Reiser MF, Möller HJ, Hampel H. Multivariate deformation-based analysis of brain atrophy to predict alzheimers disease in mild cognitive impairment. NeuroImage. 2007;38:13–24. doi: 10.1016/j.neuroimage.2007.07.008. [DOI] [PubMed] [Google Scholar]

- Worsley KJ, Taylor JE, Tomaiuolo F, Lerch J. Unified univariate and multivariate random field theory. NeuroImage. 2004;23:189–195. doi: 10.1016/j.neuroimage.2004.07.026. [DOI] [PubMed] [Google Scholar]

- Xu D, Mori S, Shen D, van Zijl P, Davatzikos C. Spatial normalization of diffusion tensor fields. Magnetic Resonance in Medicine. 2003;50:175–182. doi: 10.1002/mrm.10489. [DOI] [PubMed] [Google Scholar]

- Yang Q, Wu H, Guo C, Fox CS. Analyze multivariate phenotypes in genetic association studies by combining univariate association tests. Genetic Epidemiology. 2010;34:444–454. doi: 10.1002/gepi.20497. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yu K, Wheeler W, Li Q, Bergen AW, Caporaso N, Chatterjee N, Chen J. A partially linear tree-based regression model for multivariate outcomes. Biometrics. 2010;66:89–96. doi: 10.1111/j.1541-0420.2009.01235.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhu HT, Zhang HP, Ibrahim JG, Peterson BG. Statistical analysis of diffusion tensors in diffusion-weighted magnetic resonance image data (with discussion) Journal of the American Statistical Association. 2007;102:1085–1102. [Google Scholar]

- Zhu W, Zhang HP. Why do we test multiple traits in genetic association studies? Journal of the Korean Statistical Society. 2009;38:1–10. doi: 10.1016/j.jkss.2008.10.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zou H, Hastie T, Tibshirani R. Sparse principal component analysis. Journal of Computational and Graphical Statistics. 2006;15:262–286. [Google Scholar]
