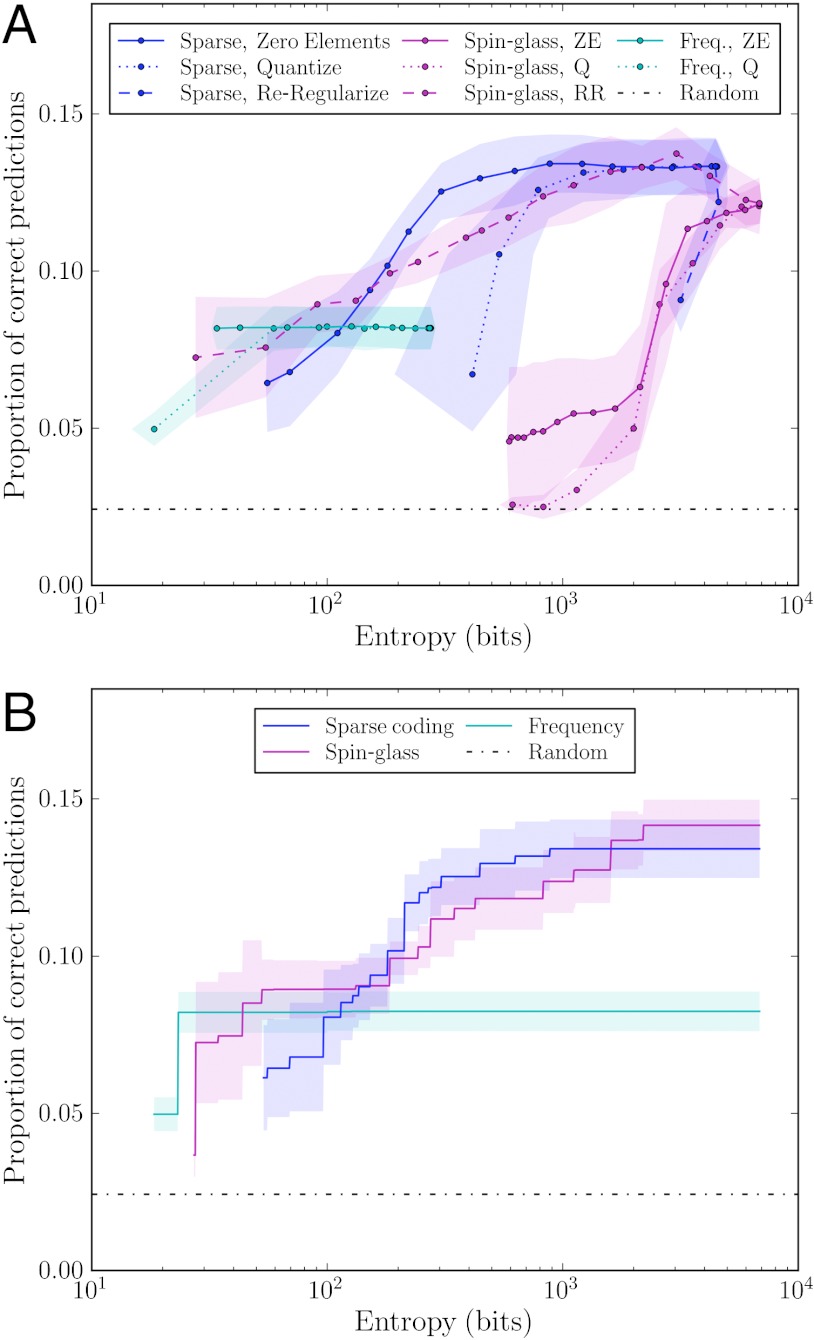

Fig. 4.

Predictive power versus entropy of memory storage. (A) Smaller entropy is achieved in each model in three different ways: (dotted lines) by quantizing each model’s parameters (the elements of $h_i$, $J_{ij}$ ($j \geq i$), and $B_{ij}$, for frequency, spin-glass, and sparse coding, respectively), mapping them to a restricted set of allowed values to simulate diminished precision; (dashed lines) by regularizing and refitting the model (in the case of spin-glass, reducing the size of the model by limiting it to fit only individuals who appear in high-covariance pairs, and in the case of sparse coding, increasing $\lambda$ to reduce the number of basis vectors); (solid lines) by zeroing the smallest stored elements. Sparse coding is quite robust to zeroing elements, performing as well as spin-glass when it is regularized and refitted. Shaded regions connect error bars that represent ± one standard deviation in both entropy and performance. (B) The Pareto front for each model, defined as the maximum average performance achievable using less than a given amount of memory (including trials not depicted in A; SI Text). Shaded regions represent ± one standard deviation in performance for the best average performer.
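The quantizing and zeroing manipulations in (A) and the Pareto front in (B) can be illustrated with a minimal Python sketch. It is not the authors' code. The function names, the evenly spaced quantization levels, and the reading of "smallest" as smallest in magnitude are assumptions made for illustration, and the front is computed as the best performance at or below each entropy value rather than strictly below it.

```python
import numpy as np


def quantize(params, n_levels):
    """Map each parameter to the nearest of n_levels allowed values.

    Assumption: allowed values are evenly spaced between the smallest and
    largest parameter; the caption does not specify the actual scheme.
    """
    params = np.asarray(params, dtype=float)
    levels = np.linspace(params.min(), params.max(), n_levels)
    nearest = np.abs(params[..., None] - levels).argmin(axis=-1)
    return levels[nearest]


def zero_smallest(params, n_zero):
    """Return a copy with the n_zero smallest-magnitude entries set to zero.

    Assumption: "smallest" is read as smallest in absolute value.
    """
    params = np.array(params, dtype=float)  # np.array copies the input
    if n_zero > 0:
        order = np.argsort(np.abs(params), axis=None)
        params.flat[order[:n_zero]] = 0.0
    return params


def pareto_front(entropy, performance):
    """Best average performance achievable at or below each entropy value.

    Trials are sorted by entropy, then a running maximum of performance is
    taken. Returns the sorted entropies and the running maximum.
    """
    entropy = np.asarray(entropy, dtype=float)
    performance = np.asarray(performance, dtype=float)
    order = np.argsort(entropy, kind="stable")
    return entropy[order], np.maximum.accumulate(performance[order])


if __name__ == "__main__":
    # Hypothetical parameter values and trial results, for illustration only.
    print(quantize([0.0, 0.1, 0.4, 0.9, 1.0], n_levels=3))
    print(zero_smallest([3.0, -0.1, 0.5, -2.0], n_zero=2))
    print(pareto_front([3.0, 1.0, 2.0], [0.5, 0.6, 0.4]))
```

The running maximum makes the front non-decreasing in memory: a trial that uses more entropy but performs worse than a cheaper trial does not lower the curve.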