Abstract

Researchers sometimes mistakenly accuse their peers of misconduct. It is important to distinguish between misconduct and honest error or a difference of scientific opinion to prevent unnecessary and time-consuming misconduct proceedings, protect scientists from harm, and avoid deterring researchers from using novel methods or proposing controversial hypotheses. While it is obvious to many researchers that misconduct is different from a scientific disagreement or an inadvertent mistake in methods, in analysis, or in the interpretation of data, applying this distinction to real cases is sometimes not easy. Because the line between misconduct and honest error or a scientific dispute is often unclear, research organizations and institutions should distinguish misconduct from honest error and scientific disagreement in their policies and practices. These distinctions should also be explained during educational sessions on the responsible conduct of research and in the mentoring process. When researchers wrongfully accuse their peers of misconduct, it is important to help them understand the distinction between misconduct and honest error and differences of scientific judgment or opinion, pinpoint the source of disagreement, and identify the relevant scientific norms. They can be encouraged to settle the dispute through collegial discussion and dialogue, rather than a misconduct allegation.

Keywords: research misconduct, honest error, scientific disagreement, ethics

Most definitions of misconduct in research distinguish between misconduct and honest error and scientific disagreement (Shamoo and Resnik, 2009). For example, the U.S. government defines misconduct as “fabrication, falsification, or plagiarism in proposing, performing, or reviewing research, or in reporting research results” and also notes “Research misconduct does not include honest error or differences of opinion” (Office of Science and Technology Policy, 2000). The Wellcome Trust, a private philanthropic research organization based in the U.K., states that misconduct does not include “honest error or honest differences in the design, execution, interpretation or judgement in evaluating research methods or results” (Wellcome Trust, 2005). Likewise, the Natural Sciences and Engineering Research Council of Canada, which funds government-sponsored Canadian research, recognizes that good science may still involve “conflicting data or valid differences in experimental design or in interpretation or judgment of information” (Natural Sciences and Engineering Research Council of Canada, 2006). In an influential report on research integrity, the National Academy of Sciences stated that misconduct definitions should discourage “the possibility that a misconduct complaint could be lodged against scientists based solely on their use of novel or unorthodox research methods” (National Academy of Sciences, 2002).

It is important to distinguish between misconduct and honest error or scientific disagreement for three reasons. First, a misconduct allegation is a serious charge, with potentially dire consequences for one’s career. A finding of misconduct can lead to loss of funding or employment and to legal action, such as a fine or imprisonment. Even a person who is cleared of misconduct may suffer from psychological distress or a damaged reputation (Shamoo and Resnik, 2009). Misconduct allegations should be reserved for serious ethical transgressions, not honest errors or scientific disputes about methods, data, assumptions, or theories. Second, misconduct proceedings are time-consuming and costly. Valuable resources should not be wasted pursuing a misconduct charge over what is essentially an inadvertent mistake or a scientific dispute. Third, if research policies do not clearly distinguish between misconduct and scientific disagreement, then scientists may fear that they could be accused of misconduct for making a mistake, using novel methods or techniques, or proposing controversial theories or hypotheses. The fear of an unwarranted misconduct charge could have a chilling effect on scientific innovation and progress.

While it may seem obvious that misconduct is different from honest error or a scientific disagreement, applying this distinction to real cases is not always easy. To understand the difference between misconduct and an honest mistake or a scientific dispute, it is useful to locate the notion of misconduct in a broader conceptual framework. Misconduct takes place against the background of norms for scientific practice, or, to borrow a phrase from Thomas Kuhn, within a normal science tradition (Kuhn, 1962). Misconduct is first and foremost an intentional (or deliberate) deviation from accepted norms of scientific behavior (Shamoo and Resnik, 2009). Deviations that are unintended (or accidental) are regarded as honest error, not misconduct. Unintended deviations are still an important ethical concern, because they may harm the scientific community, human or animal subjects, public health, or society. Scientific competence is itself considered to be ethically important. However, deviations due to error are not considered misconduct because they are not deliberate. They result from negligence, not willful (or malicious) misbehavior (Shamoo and Resnik, 2009).

Not all deliberate violations of scientific norms are regarded as misconduct, however; only those that significantly threaten the integrity of the research enterprise, such as data fabrication, falsification, or plagiarism (FFP), are considered misconduct. Although many funding agencies and universities limit the definition of misconduct to FFP, some include other misbehaviors, such as interfering with a misconduct investigation or serious breaches of rules for conducting research with human participants or animals (Resnik, 2003).
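The two-step framework described above (first ask whether a deviation from norms was intentional, then ask whether it falls within the institution's definition of misconduct) can be sketched as a small classifier. This is a hypothetical illustration only: the `Deviation` record, the `classify` function, and the label strings are invented here, and the hard part in real cases, judging intent, is simply supplied as an input.

```python
from dataclasses import dataclass

# Narrow definition used by many funders: fabrication, falsification, plagiarism.
FFP = frozenset({"fabrication", "falsification", "plagiarism"})


@dataclass(frozen=True)
class Deviation:
    """Hypothetical record of an action that departs from accepted research norms."""
    category: str      # what the act would amount to if deliberate, e.g. "falsification"
    intentional: bool  # the (often difficult) judgment about intent, taken as given here


def classify(dev: Deviation, extra: frozenset = frozenset()) -> str:
    """Return a coarse label following the intent-then-seriousness framework.

    `extra` holds any additional categories an institution counts as misconduct
    (for example, interfering with a misconduct investigation).
    """
    if not dev.intentional:
        # Accidental deviations may still matter ethically, but they are not misconduct.
        return "honest error"
    if dev.category in FFP or dev.category in extra:
        # Deliberate deviations covered by the institution's misconduct definition.
        return "misconduct"
    # Deliberate, but outside the institution's definition of misconduct.
    return "deliberate deviation, not misconduct"
```

The `extra` parameter reflects the point that definitions vary: the same deliberate act can be labeled misconduct under one institution's policy and not under another's.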

It can often be difficult to distinguish between misconduct and honest error because one must evaluate a person’s intentions (or motives) to decide whether a certain action of concern constitutes misconduct. Misconduct is marked by intended deception. Sometimes a person’s intentions are obvious. For example, if a researcher copies and republishes another scientist’s entire paper without permission or attribution, this would be an open-and-shut case of plagiarism. If a researcher systematically excludes data that undermines his hypothesis without good reason, this would clearly be a case of falsification.

Sometimes, however, a researcher’s intentions are not obvious. For example, suppose a researcher uses an inappropriate statistical method that affects his overall results by increasing the degree of support for the hypothesis. If the researcher had used the most appropriate method, the data would have provided much less support for the hypothesis. Is this a case of honest error or misconduct? This may not be an easy question to answer, because it requires one to get inside the researcher’s head and explain his behavior. If the most plausible explanation of his behavior is that it is unintended, then we may regard it as honest error. For example, if a reviewer points out a problem with the statistical methods when the paper is submitted for publication, and the researcher corrects the mistake and thanks the reviewer, then this would seem to be an honest mistake. However, if two graduate students point out the statistical error, and the researcher, who also happens to have deep knowledge of statistics, refuses to acknowledge it and proceeds to present the paper at a meeting, this would appear to be deliberate misbehavior and could be construed as misconduct.

Distinguishing between misconduct and scientific disagreement can be difficult because scientific disagreement involves a dispute about the norms within one’s discipline or their application to a particular study. What appears to one party to be a violation of accepted norms (misconduct) may really be a dispute concerning those norms. In some cases, a person who uses unorthodox or controversial methods may be inappropriately accused of misconduct. To determine whether a contested action reflects misconduct or a scientific disagreement, one must have a clear understanding of the relevant norms and how they apply to the particular situation. Most scientific disciplines have norms for designing experiments and for collecting, recording, analyzing, interpreting, and reporting data. Sometimes these norms are codified, but often they are not. Although omitting data is part of the definition of falsification, most disciplines have norms for excluding outliers from an analysis. The difference between legitimate exclusion of outliers and falsification hinges on disciplinary norms (Shamoo and Resnik, 2009). Exclusion of outliers that falls within these norms is not falsification. When disputants can agree upon the relevant research norms, or at least on reasonable boundaries surrounding those norms, it is not difficult to distinguish between legitimate exclusion of outliers and falsification.
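As an illustration of what a codified outlier norm might look like, the sketch below applies a rule fixed in advance and returns the excluded values so they can be reported alongside the results. Tukey's fences (values more than k times the interquartile range beyond the first or third quartile, with k = 1.5 by convention) are assumed here purely as an example; the article does not endorse this rule, and many fields use other criteria. The function name `tukey_exclusions` is hypothetical.

```python
import statistics


def tukey_exclusions(values, k=1.5):
    """Split values into (kept, excluded) using Tukey's fences.

    A value is excluded if it lies below Q1 - k*IQR or above Q3 + k*IQR.
    Quartiles come from statistics.quantiles with its default ("exclusive") method.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if low <= v <= high]
    excluded = [v for v in values if v < low or v > high]
    return kept, excluded


# Hypothetical measurements with one extreme value.
kept, excluded = tukey_exclusions([10, 11, 12, 12, 13, 14, 50])
print("kept:", kept, "excluded:", excluded)
```

The design point is procedural rather than statistical: because the rule and its threshold are set before the results are known, and the excluded values are returned rather than silently dropped, the exclusion can be checked against disciplinary norms by others.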

Scientists do not always agree about research norms, however. When disagreement occurs, actions may be inappropriately viewed as research misconduct. A recent example of this occurred when David O’Neill accused his former supervisor, New York University (NYU) immunologist Nina Bhardwaj, of research misconduct related to a statistical analysis used in a cancer vaccine trial. According to O’Neill, the analysis was deceptive because it was chosen after the data had been collected in order to favor Bhardwaj’s dendritic cell method over other approaches. O’Neill made this accusation in a wrongful dismissal lawsuit against Bhardwaj and NYU after he was fired for unprofessional behavior. O’Neill claimed that he was fired in retaliation for making a misconduct allegation and not for unprofessional behavior (Morris, 2010). An NYU inquiry committee found that Bhardwaj did not commit misconduct and that the allegation reflected a difference of scientific opinion (Abramson, 2011). In this situation, both scientists agreed that it is unethical to pick a statistical approach after the data from a clinical trial have been collected in order to favor a particular hypothesis, but they disagreed about the application of this norm to this particular study. A more appropriate way to resolve this disagreement would have been an honest and open discussion about the selection of the statistical analysis used in the study, rather than a misconduct allegation. If that had not been possible, informal arbitration mediated by colleagues could have been effective.

Other disputes involve disagreements about the norms themselves. One of the key issues in clinical trial methodology is whether to use an intent-to-treat or an on-treatment approach for reporting the findings of a study. According to the intent-to-treat approach, data from all participants who are randomized to the different groups in the trial should be reported, including those who drop out of the study or are withdrawn for not following study requirements (Fisher et al., 1990). The rationale for this approach is that it controls for biases related to study withdrawal and non-compliance. If a participant withdraws or fails to comply with study requirements because of worsening disease or the development of intolerable adverse effects, then it is important to include these data in the final analysis, so that the risks of the treatment can be properly understood (Gupta, 2011). The on-treatment approach, which reports data only for those participants who complete the study, may underreport treatment risks. However, many researchers believe that the on-treatment approach is preferable to the intent-to-treat approach in some cases, because it provides a better understanding of the effectiveness of a treatment, not just its risks. Including data from participants who withdrew from a study due to non-compliance may underestimate the efficacy of a treatment, because they might have had a better outcome if they had complied (Gupta, 2011).
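The difference between the two reporting approaches can be made concrete with a toy calculation. The sketch below uses entirely made-up data (ten participants per arm, two of whom withdraw from the treatment arm after an adverse event); the `Participant` record and `event_rate` function are hypothetical names introduced for illustration. It shows only the risk side of the argument: dropping non-completers can hide events that occurred among people who left the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Participant:
    """One randomized participant in a hypothetical two-arm trial."""
    arm: str         # "treatment" or "placebo"
    completed: bool  # True if the participant finished the study as planned
    event: bool      # True if the participant had the outcome of interest (e.g., a serious adverse event)


def event_rate(participants, arm, on_treatment=False):
    """Proportion of participants in `arm` who had the event.

    Intent-to-treat (default): everyone randomized to the arm is counted.
    On-treatment: only participants who completed the study are counted.
    """
    group = [p for p in participants
             if p.arm == arm and (p.completed or not on_treatment)]
    if not group:
        raise ValueError(f"no participants to analyze in arm {arm!r}")
    return sum(p.event for p in group) / len(group)


# Made-up data: two treatment participants withdraw after an adverse event.
trial = (
    [Participant("treatment", completed=False, event=True)] * 2
    + [Participant("treatment", completed=True, event=True)]
    + [Participant("treatment", completed=True, event=False)] * 7
    + [Participant("placebo", completed=False, event=False)]
    + [Participant("placebo", completed=True, event=True)]
    + [Participant("placebo", completed=True, event=False)] * 8
)

for arm in ("treatment", "placebo"):
    itt = event_rate(trial, arm)
    ot = event_rate(trial, arm, on_treatment=True)
    print(f"{arm}: intent-to-treat {itt:.3f}, on-treatment {ot:.3f}")
```

With these invented numbers, the treatment arm's event rate is 0.300 under intent-to-treat but 0.125 on-treatment, while the placebo arm's is 0.100 and about 0.111, so the on-treatment view makes the treatment look safer than placebo even though the intent-to-treat view does not.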

An ethical dispute concerning the use of the on-treatment approach emerged when documents pertaining to litigation against Merck over rofecoxib (Vioxx) became available. Internal company memos indicated that Merck had results from both on-treatment and intent-to-treat analyses of clinical trials on the safety and efficacy of Vioxx to treat Alzheimer’s disease or cognitive impairment (Psaty and Kronmal, 2008). In 2001, Merck submitted data from the on-treatment analysis to the Food and Drug Administration (FDA), but not data from the intent-to-treat analysis. Merck concluded that rofecoxib was well tolerated, based on the on-treatment analysis, even though the company’s internal memos indicated that an intent-to-treat analysis showed that rofecoxib significantly increased total mortality. Using the on-treatment approach, there were 29 deaths among rofecoxib users, as compared to placebo, but using an intent-to-treat approach, there were 34 deaths among rofecoxib patients, compared to 12 for placebo. Merck did not disclose the intent-to-treat results to the FDA or the public for two years (Psaty and Kronmal, 2008). In an editorial published in the same issue of the journal in which this discrepancy was reported, DeAngelis and Fontanarosa argued that Merck misrepresented data and deliberately misled the FDA and the public about the safety of rofecoxib by reporting results from the on-treatment analysis but not the intent-to-treat analysis (DeAngelis and Fontanarosa, 2008).

While we agree with DeAngelis and Fontanarosa that Merck’s actions were misleading and ethically questionable, there is no proof at this point that they constituted data falsification or any other type of research misconduct, provided that the researchers stated the analysis used and their rationale when reporting the results to the FDA. If, however, the researchers intentionally withheld data that affected the outcome of the research, and they could not adequately justify why they withheld the data, this would constitute misconduct. Until more is known about Merck’s research on rofecoxib, launching a misconduct inquiry against investigators associated with this research is probably not the optimal way to handle this situation. The best solution would be to carefully examine the aims, design, and procedures of the clinical trials to determine which statistical methodology is most appropriate.

Many other fields have honest differences of opinion about research norms. Ecologists are accustomed to working with “messy” datasets with significant biological variation. While they agree that statistical analysis is necessary, they often disagree passionately about how to frame the null hypothesis, which statistical analysis is most appropriate, whether to use Bayesian or standard approaches, which data should be shown in the publication, and how data should be displayed (Garamszegi et al., 2009). These differences of opinion can be fierce because certain statistical manipulations can change the stated conclusions of a study. Many molecular biologists do not use statistical analyses to test for significant differences in gene expression studies involving real-time polymerase chain reaction (PCR), even though the tools are available (Yuan et al., 2006). Historically, the norm among some molecular biologists has been that statistical analyses are often unnecessary because of the large apparent differences among sample means. However, certain assays, such as real-time PCR, are so sensitive to experimental conditions that statistical analyses become very powerful tools (Yuan et al., 2008). Disputes about the proper use of statistics in ecology or molecular biology should be treated as scientific disagreements, not as cases of possible misconduct.
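To show what a basic statistical test on real-time PCR data might involve, the sketch below computes Welch's two-sample t statistic and its Welch–Satterthwaite degrees of freedom on ΔCt values (Ct of a target gene minus Ct of a reference gene). This is one simple option chosen for illustration, not necessarily the method recommended by Yuan et al.; the replicate values are invented, and `welch_t` is a hypothetical helper. A p-value would then come from a t distribution with `df` degrees of freedom (for example, via `scipy.stats.t.sf`), which is omitted to keep the block dependency-free.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    ma, mb = statistics.mean(a), statistics.mean(b)
    # Sample variances (n - 1 denominator); each group needs at least two values.
    va, vb = statistics.variance(a), statistics.variance(b)
    sa, sb = va / len(a), vb / len(b)  # squared standard errors of each mean
    t = (ma - mb) / math.sqrt(sa + sb)
    df = (sa + sb) ** 2 / (sa ** 2 / (len(a) - 1) + sb ** 2 / (len(b) - 1))
    return t, df


# Invented ΔCt values, three biological replicates per condition.
control = [5.0, 5.2, 4.8]
treated = [3.0, 3.4, 2.6]
t, df = welch_t(control, treated)
print(f"t = {t:.2f}, df = {df:.2f}")
```

Welch's version is used here because it does not assume equal variances in the two groups, which is a common concern when replicate variability differs between conditions.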

The most serious disputes occur when scientists disagree about the fundamental assumptions or concepts underlying their discipline. When this occurs, scientists may disagree about many research methods and norms. These types of disputes may occur when a field is changing rapidly, undergoing a scientific revolution, or fractured into different camps. In these situations, there may be no dominant paradigm accepted by most members of the discipline and no normal science tradition (Kuhn, 1962). For example, in the early 20th century, the advent of quantum mechanics utterly transformed physics. Before 1900, most physicists subscribed to the Newtonian idea that all physical systems operate according to deterministic laws in which outcomes can be predicted with certainty if one knows enough about the initial conditions. Quantum physicists, such as Niels Bohr, Max Planck, and Werner Heisenberg, rejected this idea, based on their interpretation of experiments designed to test whether electromagnetic radiation acts as a particle or a wave. They proposed that physical systems at the atomic scale operate according to probabilistic laws, and that outcomes cannot be predicted with certainty. Albert Einstein abhorred the idea of quantum indeterminacy, and he spent many years trying to prove that this phenomenon is an artifact of experiments and their interpretations, and that the world is fundamentally deterministic (Gamow, 1985). Suppose that Einstein had expressed his displeasure through an allegation of misconduct, arguing that quantum indeterminacy is a fraud perpetrated by certain physicists. This would have been an inappropriate way of resolving a scientific disagreement. The better path, which he followed, was to try to convince his peers, by means of evidence and arguments, that quantum indeterminacy rests on a mistake.

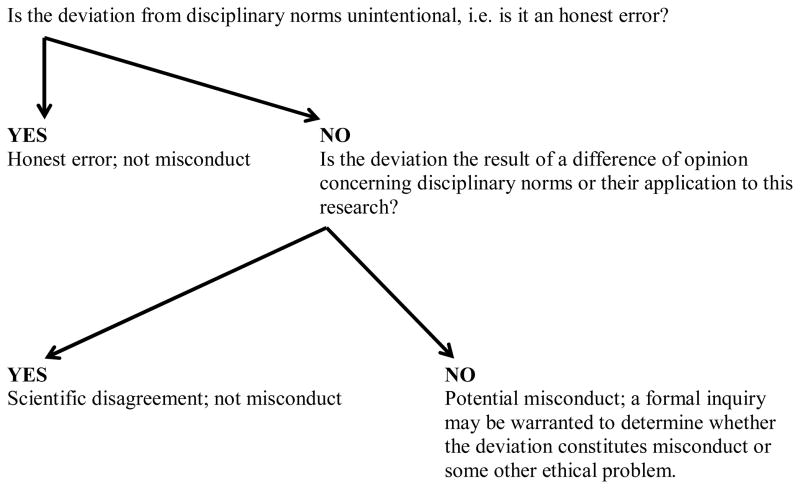

Because the line between misconduct and honest error or a scientific dispute is often unclear, research organizations and institutions should distinguish between misconduct and scientific disagreement in their policies and practices. The distinction should also be explained during educational sessions on the responsible conduct of research and during mentoring. We have provided a decision tree to aid in education, discussion, and policy development (see Figure 1). When researchers wrongfully accuse their peers of misconduct, it is important to educate them about the distinction between misconduct and honest error and differences of scientific judgment or opinion, pinpoint the sources of disagreement, and identify the relevant scientific norms. They can be encouraged to settle the dispute through collegial discussion and dialogue, rather than a misconduct allegation.

Figure 1. Misconduct vs. Honest Error and Scientific Disagreement Decision-Tree.

Acknowledgments

This article is the work product of an employee or group of employees of the National Institute of Environmental Health Sciences (NIEHS), National Institutes of Health (NIH). However, the statements, opinions or conclusions contained therein do not necessarily represent the statements, opinions or conclusions of NIEHS, NIH or the United States government. Thanks to Jennifer Hinds for help in document preparation.

Footnotes

The authors have no conflicts of interest to disclose.

References

- Abramson SB. Differing opinion, not misconduct. Nature. 2011;470:465. doi: 10.1038/470465a.

- DeAngelis CD, Fontanarosa PB. Impugning the integrity of medical science: the adverse effects of industry influence. JAMA. 2008;299:1833–1835. doi: 10.1001/jama.299.15.1833.

- Fisher LD, et al. In: Statistical Issues in Drug Research and Development. Peace KE, editor. New York: Marcel Dekker; 1990. pp. 331–350.

- Gamow G. Thirty Years that Shook Physics: The Story of Quantum Theory. New York: Dover; 1985.

- Garamszegi LZ, et al. Changing philosophies and tools for statistical inferences in behavioral ecology. Behav Ecol. 2009;20:1363–1375.

- Gupta SK. Intention-to-treat concept: a review. Perspect Clin Res. 2011;2:109–112. doi: 10.4103/2229-3485.83221.

- Kuhn T. The Structure of Scientific Revolutions. Chicago: Univ. of Chicago Press; 1962.

- Morris E. Statistics spark dismissal suit. Nature. 2010;467:260. doi: 10.1038/467260a.

- National Academy of Sciences. Responsible Science: Ensuring the Integrity of the Research Process. Vol. 1. Washington, DC: National Academy Press; 2002. p. 27.

- Natural Sciences and Engineering Research Council of Canada. Tri-Council Policy Statement: Integrity in Research and Scholarship. 2006 Available at: http://www.nserc-crsng.gc.ca/NSERC-CRSNG/policies-politiques/tpsintegrity-picintegritie_eng.asp.

- Office of Science and Technology Policy. Federal Research Misconduct Policy. Federal Register. 2000;65(235):76262.

- Psaty BM, Kronmal RA. Reporting mortality findings in trials of rofecoxib for Alzheimer disease or cognitive impairment: a case study based on documents from rofecoxib litigation. JAMA. 2008;299:1813–1817. doi: 10.1001/jama.299.15.1813.

- Resnik DB. From Baltimore to Bell Labs: reflections on two decades of debate about scientific misconduct. Account Res. 2003;10:123–125. doi: 10.1080/08989620300508.

- Shamoo AE, Resnik DB. Responsible Conduct of Research. 2nd ed. New York: Oxford Univ. Press; 2009.

- Wellcome Trust. Statement on the Handling of Allegations of Research Misconduct. 2005 Available at: http://www.wellcome.ac.uk/About-us/Policy/Policy-and-position-statements/WTD002756.htm.

- Yuan JS, Reed A, Chen F, Stewart CN Jr. Statistical analysis of real-time PCR data. BMC Bioinformatics. 2006;7:85. doi: 10.1186/1471-2105-7-85.

- Yuan JS, Wang D, Stewart CN Jr. Statistical methods for efficiency adjusted real-time PCR analysis. Biotechnol J. 2008;3:112–123. doi: 10.1002/biot.200700169.