Abstract

Objective. To describe and assess a course review process designed to enhance course quality.

Design. A course review process led by the curriculum and assessment committees was designed for all required courses in the doctor of pharmacy (PharmD) program at a school of pharmacy. A rubric was used by the review team to address 5 areas: course layout and integration, learning outcomes, assessment, resources and materials, and learner interaction.

Assessment. One hundred percent of targeted courses, or 97% of all required courses, were reviewed from January to August 2010 (n=30). Approximately 3.5 recommendations per course were made, resulting in improvement in course evaluation items related to learning outcomes. Ninety-five percent of reviewers and 85% of course directors agreed that the process was objective and that the course review process was important.

Conclusion. The course review process was objective and effective in improving course quality. Future work will explore the effectiveness of an integrated, continual course review process in improving the quality of pharmacy education.

Keywords: course review, curriculum, assessment, instructional methods, course evaluations

INTRODUCTION

Quality assurance is defined as the systematic monitoring and evaluation of a project to ensure that standards of quality are being met. Quality in higher education is multifaceted and complex, but ultimately, the quality of an education program should be measured in terms of what students know, understand, and can do at the end of the curriculum. Thus, quality monitoring should focus on improvement and enhancement of student learning. Two components critical to achieving this objective are how course outcomes are identified and the teaching and learning strategies used to achieve them.1

One of the principal mechanisms for ensuring the quality of learning and teaching is peer review of teaching and evaluation of the curriculum, including the instructional methods.2,3 There are published guidelines regarding peer observation of classroom teaching, but the evaluation of the curriculum and related teaching, learning, and assessment practices is less well defined. Horsburgh1 explored factors that impact student learning through a quality assurance process and found that the most important were the curriculum, the instructors, how the teachers taught and facilitated learning, and the assessment practices used. Curricular evaluations and course reviews, often driven by accreditation expectations, tend to be isolated events that are not well integrated into institutional processes for accountability and often fail to improve teaching and learning. Ideally, the course review process needs to be efficient, effective, and economical.4

Quality assurance methods, for example Six Sigma, stress the importance of developing a factual understanding of the current quality status of a program, locating sources of problems, establishing a process map, measuring the process, and collecting data to serve as a baseline. A course review process should identify the quality of individual courses and locate areas in each course and potentially more global areas for improvement. This process should focus on foundational aspects of teaching, learning, and assessment, such as presence of appropriate learning objectives; degree of learning-centered activities; assessment methods consistent with learning objectives; and course goals. The course review process should also examine consistency in coordination and in appropriate course policies and content.

A culture of evidence-based practice within higher education is emerging, the effects of which have trickled down to pharmacy education, particularly in the development of processes to review curricula. Within the past 2 years, the number of articles describing quality improvement processes within pharmacy education has increased. Stevenson and colleagues5 developed a course review process to identify the strengths of and areas for improvement in advanced pharmacy practice experiences. The described process included areas of teaching and learning, assessment, and content. General areas for improvement included efforts to identify quality training sites and preceptors, consistent use of syllabi templates by preceptors, and appropriate use of the approved standardized evaluation form. A course review process was developed by Peterson and colleagues6 to review lecture-based courses within a pharmacy curriculum. These authors examined issues similar to those of the current study (ie, policies, content, integration, skills, course management) but also included a review of teaching skills for each instructor. Finally, a model for curricular quality improvement, which addressed many areas of assessment and resources, was described for a new school of pharmacy.7

This manuscript outlines a course review process that identified areas for improvement in courses, formed part of a process map moving forward, and provided baseline data. The goal was to make the course review process efficient (ie, requiring a short period of time), effective (ie, identifying areas for improvement in courses), and economical (ie, minimizing the need for human and financial resources), resulting in enhanced quality of pharmacy education at a research-intensive university. This goal presents unique challenges because of the multiple competing time commitments at research-intensive universities. Because of these competing priorities, educational initiatives can become undervalued by faculty.8

DESIGN

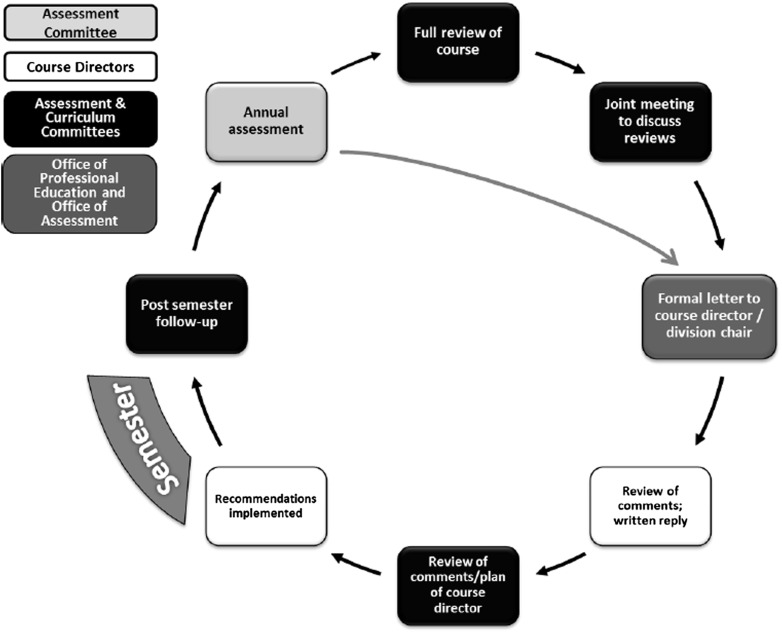

The course review was initiated in January 2010 by the School of Pharmacy at The University of North Carolina at Chapel Hill as part of both a quality assurance process and the self-study process for the Accreditation Council for Pharmacy Education reaccreditation visit. The most recent comprehensive course review was completed between 2000 and 2004, prior to the previous self-study process. The process was initiated by the curriculum committee and included 5 parts: self-reflection by the course director; review of the course by a course review team; review of the team’s findings and recommendations; review of the team’s recommendations by course directors; and retrospective analysis (Figure 1). The process was based on principles outlined by Venkatraman.9 This research was deemed exempt by the university’s institutional review board.

Figure 1.

Schematic of a course review process used in a doctor of pharmacy program.

Course directors completed a self-reflection document and a 52-item teaching goals inventory covering 6 topic areas.10 Course directors were given approximately 3 weeks to complete these items. Review teams were expected to complete the reviews in 8 to 10 weeks. Each course was assigned a minimum of 2 faculty reviewers, the majority of whom served on the curriculum or assessment committee. Each course was also assigned a clinical faculty member to ensure that the course was appropriately focused on pharmacy practice. The review and reports were based on a rubric developed by the committees to ensure that teams were using the same criteria. The rubric addressed 5 areas: course layout and integration, learning outcomes, assessment of learning, resources and materials, and learner interaction. The available data used for the review process included the course director’s self-reflection and teaching goals inventory, information about the course available through the course learning management system (Blackboard Inc., Washington, DC) and curricular mapping software (Atlas, Rubicon International, Portland, OR), end-of-semester course evaluations from the previous course offering, and the rubric completed by current students. Review teams were asked to work collaboratively using the rubric to develop recommendations. All review teams received a guidance document that included a listing of resources available (eg, Blackboard, course evaluations), examples of appropriately structured learning outcomes, a sample report, and a timeline.

The self-reflection document asked course directors to comment on various aspects of the course, including the primary learning goals and objectives, rationale for the course design, how the course aligned with curricular outcomes, efforts made to ensure consistency within the course, efforts to align content between courses, and any perceived learning deficits. Course directors also provided information on student performance, their satisfaction with student performance, and estimates on the percentage of class time spent on various learning activities (eg, lecture, discussion). Finally, they were asked to note strengths and weaknesses of the course.

Students were asked to participate in the process, with approximately 4 students completing the rubric for each course. These students included at least 2 who had recently completed the course and typically 2 who had completed the course at least 1 year prior. For example, a first-year course was reviewed by 2 first-year students, 1 second-year student, and 1 third-year student. The more senior-level students were asked to focus on how the information and skills developed from that course carried forward to later coursework. When possible, students worked collaboratively to complete the rubric.

After the initial course reviews were completed, the committees met once jointly to discuss all the reports before sending them to course directors and their respective division chairs. The reports to course directors summarized the positives of the course and, in most cases, recommended the top 1 to 3 changes that would have the greatest impact on the course. To help close the loop, the committees asked the course directors to respond in writing to the committees’ recommendations when they submitted their syllabi for that semester. After the semester, the committees again reviewed the course directors’ responses, along with the most recent course evaluations and any other appropriate material (eg, slides, syllabus). Based on the new information, the review teams determined whether recommended course changes had been made and whether further changes or follow-up were warranted.

The overall process was assessed using 2 methods: the ability of the process to identify areas for improvement and an attitudinal survey of the course review team and course directors. Course evaluations were used pre- and post-course review as 1 indirect metric of the impact of the course review process.

EVALUATION AND ASSESSMENT

Beginning in mid- to late January and continuing through mid-August 2010, 30 core courses were reviewed, including 5 pharmaceutical care laboratories and 6 courses related to the professional experience program. The courses of the professional experience program are not experiential training but rather a series of half-credit courses that facilitate student professional development and preparation for full participation in the experiential component of the curriculum. Thirty-seven faculty members served as course reviewers, with 51% of reviewers serving on the curriculum or assessment committee. Thirty percent of the reviewers were off-campus faculty members, and the remaining 70% were campus-based.

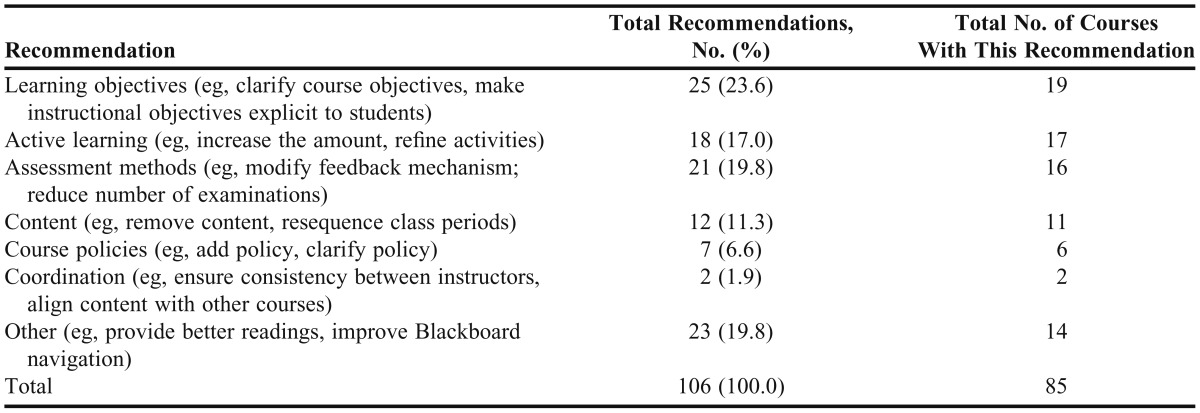

Self-reflection statements and teaching goals inventories were completed for all but 2 courses (course directors were unavailable to provide this information because they were no longer employed by the school). Each course received recommendations for improvement, for a total of 106 recommendations (3.5 recommendations per course). Sixty-four recommendations (60% of all recommendations and approximately 2.1 recommendations per course) were made on teaching (ie, learning objectives and active learning) and assessment methods. These areas for improvement were recommended in 28 courses (90% of reviewed courses). A breakdown of the recommendations can be found in Table 1. Statistical methods were chosen based on the distribution of the data, with significance set at p<0.05. The teaching goals inventory was analyzed with a 1-way ANOVA and Tukey’s post-hoc test. The course evaluations used a 4-point Likert-type scale ranging from 1 (strongly disagree) to 4 (strongly agree), and changes in course evaluations were analyzed with a Mann-Whitney U test. Changes in skill development were analyzed with a chi-square test.
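The summary figures in the preceding paragraph can be recomputed from the reported counts. The short sketch below is illustrative only: the counts are those stated in the text, and the variable names are assumptions introduced for the example.

```python
# Counts as reported in the text; variable names are illustrative.
courses_reviewed = 30
total_recommendations = 106
teaching_assessment_recommendations = 64

# Recommendations per reviewed course.
per_course = total_recommendations / courses_reviewed
# Share of all recommendations that concerned teaching and assessment.
share = teaching_assessment_recommendations / total_recommendations
# Teaching and assessment recommendations per reviewed course.
teaching_per_course = teaching_assessment_recommendations / courses_reviewed

print(f"{per_course:.1f} recommendations per course")
print(f"{share:.0%} of recommendations on teaching and assessment")
print(f"{teaching_per_course:.1f} teaching/assessment recommendations per course")
```

Run as written, this reproduces the rounded values given above (3.5, 60%, and 2.1).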

Table 1.

Categories and Frequency of Recommendations From the Course Review

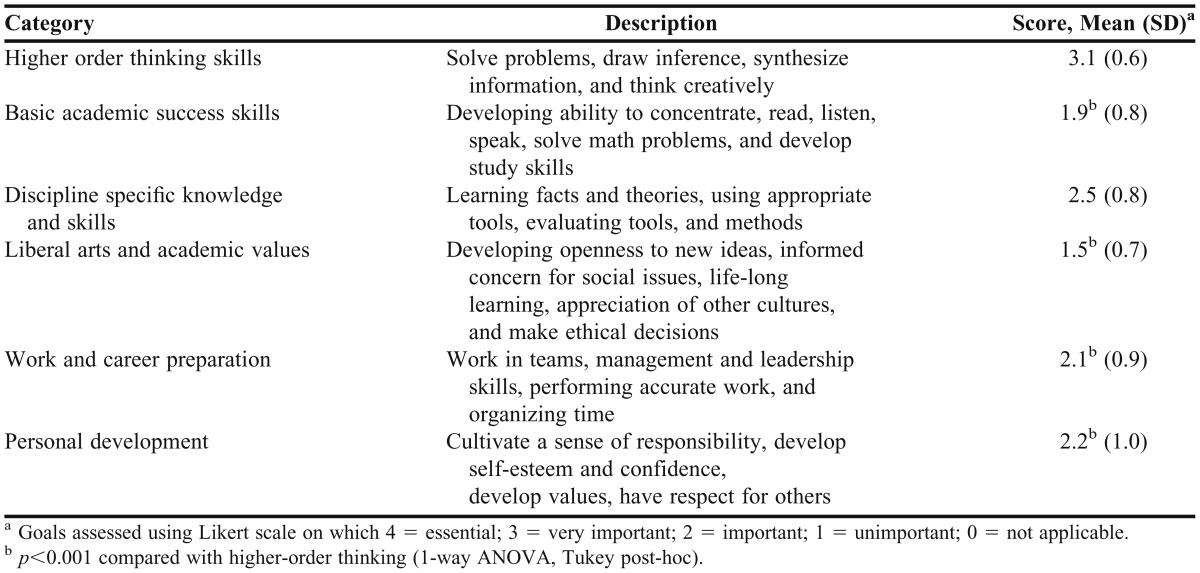

Teaching goals related to higher-order thinking were of significantly greater importance to course directors than all other areas, with the exception of discipline-specific knowledge and skills (p<0.001) (Table 2). Upon examining individual questions within the teaching goals inventory, the top 5 scores related to increasing the ability to apply principles and generalizations to new problems (3.4), developing problem-solving skills (3.4), learning concepts and theories related to the subject (3.4), developing the ability to synthesize and integrate information (3.3), and learning terms and facts on a subject (3.2).

Table 2.

Assessment of Goals Desired in a Course From the Teaching Goals Inventory

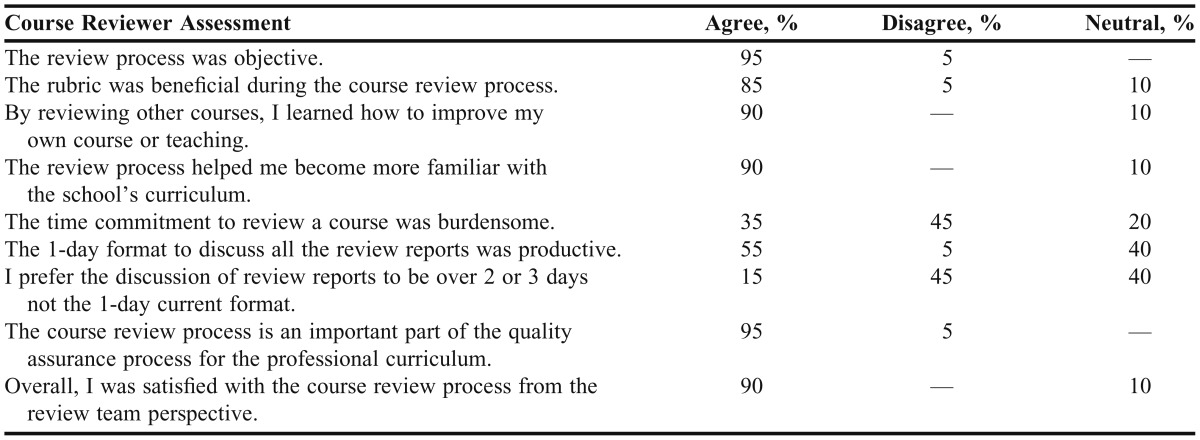

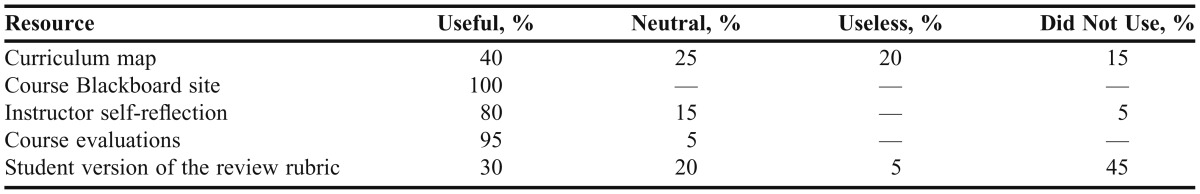

An attitudinal survey was completed by 20 review team members (54%) and 13 course directors (52%). Course reviewers viewed the review process favorably and considered it an important part of quality assurance (Table 3). The median time reported by course reviewers to complete the review was 5 hours (range, 2 to 10 hours). Review team members also were asked which resources were valuable (Table 4). All reviewers felt that the information on Blackboard (eg, syllabus, slide sets, problem sets) was a useful source of information and that course evaluations were the second most useful resource. When review team members were asked what other materials would have been helpful, 1 team member suggested that being able to observe the course would have been useful. Other comments by team members were related to the available material (eg, not used or useful). When asked what could be done to improve the process, review team members emphasized the necessity of closing the loop, allowing more time between the review and the beginning of the semester so that changes could be implemented, and taking more complete minutes of review team meetings to capture the discussion.

Table 3.

Results From the Attitudinal Survey of Course Reviewers (n=20)

Table 4.

Review Team Members’ Assessments of Useful Resources (n=20)

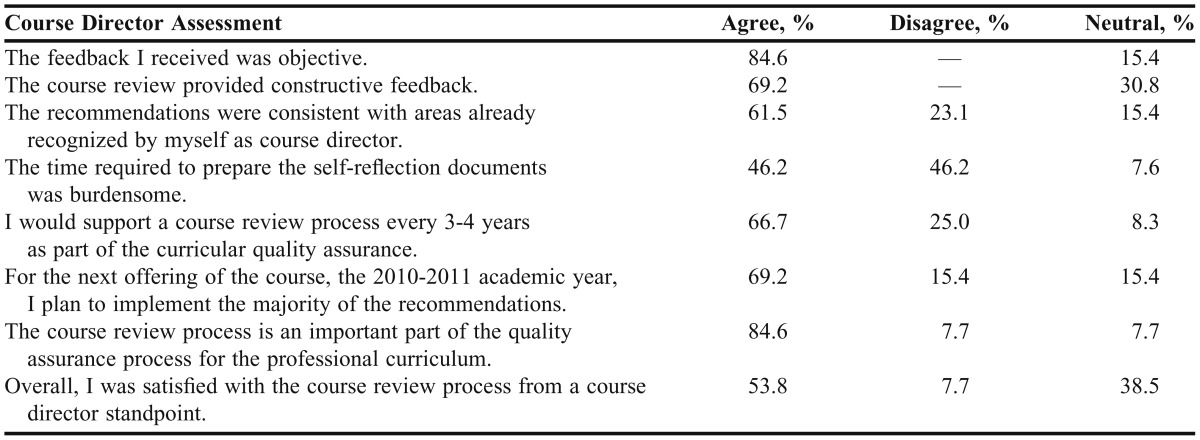

Course directors were satisfied with the process and believed it was an important part of quality assurance (Table 5). The course directors felt that the feedback was objective and constructive and indicated they would implement the majority of changes for the next offering of the course. They reported a median time of 2 hours (range, <1 hour to 17 hours) to complete the self-reflection documents.

Table 5.

Results From the Attitudinal Survey of Course Directors (n=13)

When course directors were asked what barriers exist to the implementation of change, time emerged as a theme (3 of 8 comments). Other comments addressed barriers related to disagreement with the recommendations. When course directors were asked about areas for improvement, 2 of the 4 comments were related to the benefit of more face-to-face interaction with review teams; 1 comment addressed the idea of academic freedom and the belief that faculty members should be trusted on how to deliver their courses, and another suggested that “exemplar” examples from other courses be provided to course directors.

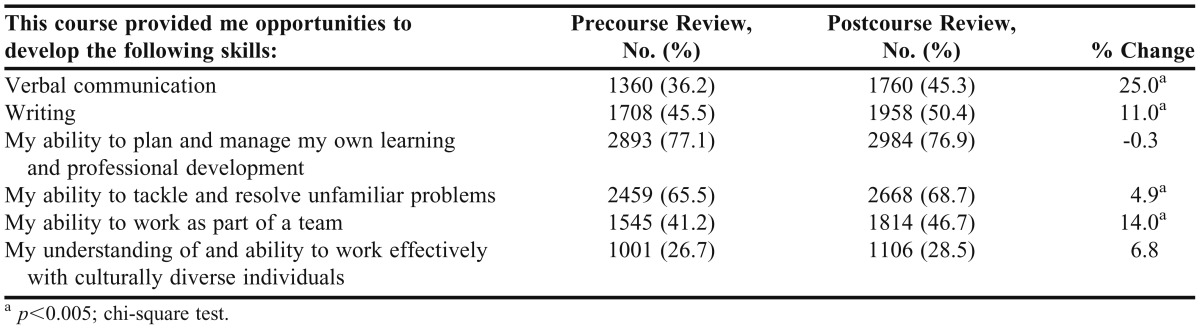

Examining course evaluations before the course review process and immediately after the process was an indirect measure of immediate impact. The one significant change noted was an increase (3.5 to 3.7) in student perception that course content was clearly related to the stated learning outcomes (p<0.001), which could be considered a moderate effect size (Cohen’s d = 0.74). On end-of-semester course evaluations, students were asked whether courses offered opportunities to develop various skills related to communication, working in teams, planning their own work, and addressing unfamiliar problems (Table 6). There were some increases in opportunities for skill development after the course review process and implementation of recommendations.
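For readers less familiar with the effect size reported above, Cohen’s d is commonly defined as the difference between two group means divided by their pooled standard deviation. The following is a minimal sketch of that standard calculation, not the analysis code used in this study; the function name and the sample ratings are hypothetical and were chosen only to make the arithmetic easy to follow.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(before, after):
    """Cohen's d using the pooled sample standard deviation."""
    n1, n2 = len(before), len(after)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(before) + (n2 - 1) * variance(after)) / (n1 + n2 - 2)
    return (mean(after) - mean(before)) / sqrt(pooled_var)


# Hypothetical ratings (not data from this study).
before = [1, 2, 3]
after = [2, 3, 4]
print(cohens_d(before, after))  # 1.0
```

Under the same definition, and taking the rounded means at face value, a change from 3.5 to 3.7 with d = 0.74 would correspond to a pooled standard deviation of roughly 0.27 (0.2/0.74).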

Table 6.

Student Assessment of Various Skills Developed

DISCUSSION

The course review process appears to have met its goals of establishing an efficient, effective, and economical approach to reviewing courses. It was efficient in that 30 courses were reviewed in a 6-month period. By identifying areas for improvement in the core areas of instruction, assessment of learning, content, and course administration, it was an effective process. The largest area of focus for the review teams’ recommendations was in instructional and assessment methods, with the largest number of recommendations centered on clarifying learning objectives. The second-most cited recommendation was to increase active learning. Examination of the end-of-semester course evaluations following the course review process revealed a potential improvement in ratings and a trend toward more opportunities for students to develop skills in written and verbal communication and teamwork. Because of changes prior to the course review process, historical data were not available. Unfortunately, without a historical trend analysis of course evaluations, it is difficult to firmly conclude whether changes in course evaluations are attributable to a specific intervention or to the variability associated with independent samples over time. Finally, the described process was considered economical on a per-individual basis (5 hours per review team member, 2 hours per course director), even though the cumulative time could be viewed as resource intensive. Ideally, a follow-up course review would be better able to assess changes, but an intense review of courses every year is considered neither economical nor practical. Subsequent to this study, procedures such as evaluating written responses from the course directors and a more systematic yearly review of course evaluations were implemented to assess course changes (Figure 1).

Examination of the teaching goals inventory indicated that higher-order thinking skills are greatly valued by course directors; however, there was no association between those goals and teaching methods that promote those skills (data not shown). Self-reflection by the course directors indicates that the lecture method remains the predominant method of instruction (approximately 50% of class time), despite research showing that the lecture method is not the best approach for developing “thinking skills.”11 The disconnect between desiring higher-order teaching goals and using instructional methods that do not facilitate these skills may be common among instructors and may be a function of interindividual variability in how cognitive levels of Bloom’s Taxonomy are interpreted.12 Within our institution, faculty members are attempting to reduce the number of lectures in the curriculum.

The course review process is dependent on faculty time, and its usefulness will ultimately depend on the outcomes. The challenge of time exists for any institution, especially at research-intensive universities because of the multimodal mission of scholarship, service, practice, and teaching. Based on survey data, faculty members believe the process is worthwhile only if there is “closing of the loop,” meaning that the curriculum and assessment committees ensure that recommendations are satisfactorily addressed by the course directors. As institutions refine their methods of assessment, this process can be streamlined, although it would still require adequate resources. Interim assessments of courses would need to occur, especially for courses requiring significant revisions. In the current case, interim assessments occur at the end of the semester once course evaluations are closed. An additional issue is that there is no single standard or metric to measure course effectiveness. Course evaluations and student success based on grades are important metrics, as are the instructional methods and instructional alignment (ie, course objectives align with course assessments and instructional methods); however, interpretations of these assessments are not always straightforward. For example, it is often recommended that course evaluations be examined on a relative scale (ie, compared with similar courses) rather than an absolute scale.13

Within the quality assurance literature, there are several goals related to instructional methodology and course review.2 The first is to continue to make student learning an institutional priority. This was accomplished locally by making the course review process and its findings public, which helped demonstrate that student learning is a priority. The second goal in achieving quality enhancement is to facilitate discussions within the college or school about teaching. This goal is being accomplished locally through various faculty development efforts, which include the formation of a school-supported center focused on faculty development. Academic quality is often mistakenly thought to occur automatically if institutions of higher learning simply recruit the best faculty members and students and then leave them alone.2 Course reviews provide a foundation for individuals to share accountability with respect to improved teaching. This course review process also can provide information on best practices, while the faculty development center can help guide faculty members through an improvement process.

The barriers and challenges found in this study are consistent with reports in the literature. One barrier is determining who is responsible for initiating change. In this case, the leadership came from the standing curriculum and assessment committees. Faculty resistance to change can be a second barrier, as faculty members often view themselves as having autonomy and academic freedom and may not like being asked to rethink their teaching styles.14 While the current process was viewed positively by most faculty members, there was some indication that certain faculty members viewed themselves as autonomous. One barrier not addressed was the ability of the process to cause curricular change. Kohn believes that much of quality assurance implementation in education fails to address the fundamental questions about learning and, more specifically, whether the curriculum is engaging in the relevant learning processes.15 The associated risk is that there could be too much documentation of processes, which can consume time and effort. Finally, as pointed out by those involved in the course review, quality assurance requires establishing a strong feedback loop, with evaluation being a continuous process rather than being left until the end of the program of study.

There are several limitations to our process and its evaluation. First, assessment may not be viewed as a priority among faculty members. As indicated by faculty members in this report, closing the loop and feedback are important aspects. Rather than making judgments on the course or instructors, the goal was to design a process that is formative and developmental. Hopefully, by eliminating the judgment aspects, the process will facilitate a cultural change in assessment. A related issue is the differentiation between the perception of time and actual time. If the assessment process is viewed as a barrier, the perception of time spent would be augmented. However, having individual faculty members spend 5 hours to review a course (the median time reported here) or 2 hours to self-reflect (the median time reported here) may not be as burdensome as perceived, especially as the time was spread over several weeks. Additionally, it is challenging to measure the effect of the course review process appropriately because multiple factors could be involved (eg, course review, faculty development, personal initiative) and because it is difficult to assess outcomes. An attempt was made to use course directors’ comments about the review and the course evaluations as indirect markers for change.

CONCLUSION

This manuscript describes a course review process intended to serve as a standing quality assurance and quality improvement process. This course review process is a framework that adopts quality assurance principles to reach the core processes in pharmacy education and student learning. The process was able to identify areas for improvement within individual courses. One of the priorities moving forward is a strong feedback loop to ensure that course review team recommendations are addressed. Continual improvements will be made during interim reviews to ensure that the process becomes increasingly efficient and effective.

ACKNOWLEDGEMENTS

The authors thank Kim Deloatch and Mary Roth McClurg for their assistance in developing the course review process and their input during the preparation of the manuscript.

REFERENCES

- 1. Horsburgh M. Course approval process. Qual Assur Higher Educ. 2000;8(2):96–99.

- 2. Dill DD. Is there an academic audit in your future? reforming quality assurance in US higher education. Change. 2000;32(4):34–41.

- 3. Massy WF. Energizing Quality Work: Higher Education Quality Evaluation in Sweden and Denmark. Project 6, Quality and Productivity in Higher Education. National Center for Postsecondary Improvement SCA; 1999.

- 4. Moreland N, Horsburgh R. Auditing: a tool for institutional development. Vocational Aspect Educ. 1992;44(1):29–42.

- 5. Stevenson TL, Hornsby LB, Phillippe HM, Kelley K, McDonough S. A quality improvement course review of advanced pharmacy practice experiences. Am J Pharm Educ. 2011;75(6):Article 116. doi: 10.5688/ajpe756116.

- 6. Peterson SL, Wittstrom KM, Smith MJ. A course assessment process for curricular quality improvement. Am J Pharm Educ. 2011;75(8):Article 157. doi: 10.5688/ajpe758157.

- 7. Ried LD. A model for curricular quality assessment and improvement. Am J Pharm Educ. 2011;75(10):Article 196. doi: 10.5688/ajpe7510196.

- 8. Elen J, Lindblom-Ylanne S, Clement M. Faculty development in research-intensive universities: the role of academics' conceptions on the relationship between research and teaching. Intl J Acad Develop. 2007;12(2):123–139.

- 9. Venkatraman S. A framework for implementing TQM in higher education programs. Qual Assur Educ: Intl Perspective. 2007;15(1):92–112.

- 10. Angelo TA, Cross KP. Classroom assessment techniques: a handbook for college teachers. 2nd ed. San Francisco: Jossey-Bass Publishers; 1993.

- 11. Bligh DA. What's the Use of Lectures? 1st ed. San Francisco: Jossey-Bass Publishers; 2000.

- 12. Nasstrom G. Interpretation of standards with Bloom's revised taxonomy: a comparison of teachers and assessment experts. Intl J Res & Method Educ. 2009;32(1):39–51.

- 13. Arreola RA. Developing a Comprehensive Faculty Evaluation System: A Guide to Designing, Building, and Operating Large-Scale Faculty Evaluation Systems. 3rd ed. Bolton, Mass: Anker Pub. Co; 2007.

- 14. Blankstein AM. Why TQM can't work–and a school where it did. Educ Digest. 1996;62(1):27.

- 15. Kohn A. Turning learning into a business: concerns about total quality. Educ Leadership. 1993;51(1):58.