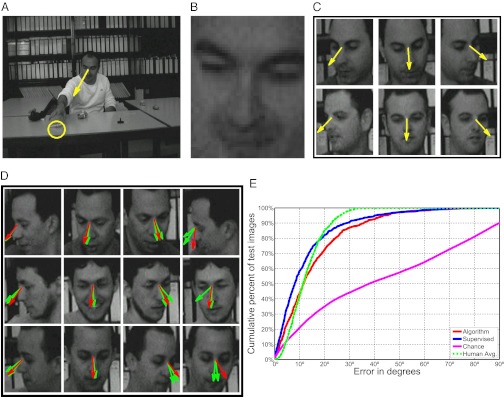

Fig. 3.

Gaze direction, recovered within the image plane. Upper: Training. (A) Detected mover event. The yellow circle marks the location of the event, which provides the teaching signal for gaze direction (yellow arrow). (B) Face image region used for gaze learning. (C) Examples of face images with automatically estimated gaze direction, used for training. Lower: Results. (D) Predicted direction of gaze: results of the algorithm (red arrows) and of two human observers (green arrows). (E) Performance of gaze detectors: detector trained on mover events (red), detector trained with full manual supervision (blue), chance level (magenta), and human performance (dashed green). Chance level was computed using all possible draws from the distribution of gaze directions in the test images. Human performance is the average over the two human observers. Abscissa: maximum error in degrees; ordinate: cumulative percent of images.
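The curves in panel E can be read as cumulative error distributions: for each maximum error on the abscissa, the ordinate gives the percent of test images whose angular error does not exceed it. The following is a minimal sketch of how such curves, and a chance curve, might be computed. It is not the authors' code. The function names are hypothetical, angles are assumed to be in-plane directions in degrees, and "all possible draws" is interpreted here as pairing every test-set gaze direction (as a guess) with every true direction, self-pairs included.

```python
import numpy as np

def angular_error_deg(pred_deg, true_deg):
    """Smallest absolute angle between two in-plane directions, in [0, 180]."""
    diff = np.abs(np.asarray(pred_deg, dtype=float)
                  - np.asarray(true_deg, dtype=float)) % 360.0
    return np.minimum(diff, 360.0 - diff)

def cumulative_percent(errors_deg, thresholds_deg):
    """Percent of errors that are <= each threshold (one value per threshold)."""
    errors = np.asarray(errors_deg, dtype=float).ravel()
    thresholds = np.asarray(thresholds_deg, dtype=float)
    return 100.0 * (errors[None, :] <= thresholds[:, None]).mean(axis=1)

def chance_curve(true_deg, thresholds_deg):
    """Chance curve under the assumption stated above: every test direction
    is used as a guess for every true direction (all pairs)."""
    true = np.asarray(true_deg, dtype=float)
    errors = angular_error_deg(true[:, None], true[None, :])
    return cumulative_percent(errors, thresholds_deg)

# Toy data, for illustration only (not taken from the paper).
true_dirs = [0, 90, 180, 270]
pred_dirs = [10, 80, 200, 270]
detector = cumulative_percent(angular_error_deg(pred_dirs, true_dirs), [0, 10, 20])
chance = chance_curve(true_dirs, [0, 90, 180])
```

Averaging the per-observer curves produced by `cumulative_percent` would give a human-performance curve of the kind described in the caption, again under the assumptions above.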