Figure 2.

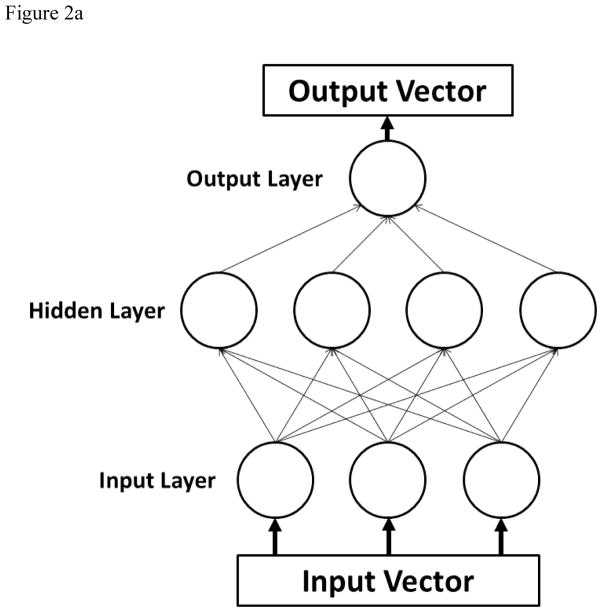

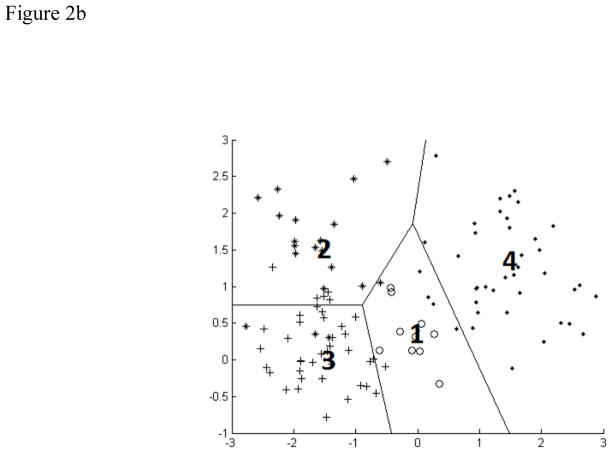

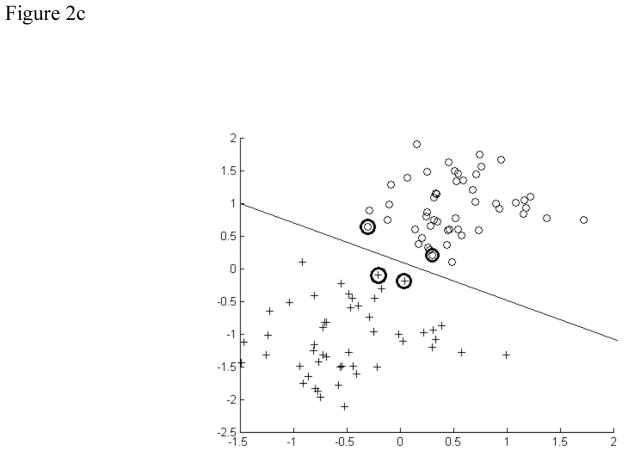

A) Schematic of a multilayer perceptron neural network. Each parameter of interest in the input vector has a corresponding node in the input layer. The number of nodes in the hidden layer was varied during the experiment. The output nodes correspond to the possible classifications of the data, which were normal and disordered in this study. B) Schematic of a learning vector quantization neural network. The four codebook vectors (1–4) represent average positions of the four possible classes of data, and the region established for each class is outlined. In our study, there were only two classes. C) Schematic of a support vector machine. Support vectors on the periphery of the data clusters (circled) define the separating hyperplane, which is placed to maximize the margin between the classes.
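The role of the codebook vectors in panel B can be illustrated with a minimal sketch of nearest-codebook classification and a single LVQ1-style update. This is an illustration under stated assumptions, not the implementation used in the study: the two-dimensional codebook values, the learning rate, and the function names `nearest_codebook`, `classify`, and `lvq1_update` are all hypothetical.

```python
import math

def nearest_codebook(x, codebooks):
    """Return the index of the codebook vector closest to x (Euclidean distance)."""
    return min(range(len(codebooks)), key=lambda i: math.dist(x, codebooks[i][0]))

def classify(x, codebooks):
    """Assign x the class label of its nearest codebook vector."""
    return codebooks[nearest_codebook(x, codebooks)][1]

def lvq1_update(x, label, codebooks, lr=0.1):
    """One LVQ1 step: move the nearest codebook vector toward x if its class
    matches the label, away from x otherwise. Returns the updated index."""
    i = nearest_codebook(x, codebooks)
    vec, cls = codebooks[i]
    sign = 1.0 if cls == label else -1.0
    codebooks[i] = ([v + sign * lr * (xi - v) for v, xi in zip(vec, x)], cls)
    return i

# Hypothetical two-class codebook (values chosen only for illustration).
codebooks = [([0.0, 0.0], "normal"), ([1.0, 1.0], "disordered")]

print(classify([0.1, 0.2], codebooks))
print(classify([0.9, 0.8], codebooks))
```

Each sample is assigned to the class whose codebook vector lies closest, which is what produces the outlined regions in the schematic; training repeatedly applies the update step so that the codebook vectors settle near the centers of their classes.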