The title of this editorial is not new. For example, it was used nearly a decade ago for an article in the BMJ's Statistics Notes series.1 Altman and Bland considered the dangers of misinterpreting differences that do not reach significance, criticising use of the term “negative” to describe studies that had not found statistically significant differences. Such studies may not have been large enough to exclude important differences. To leave the impression that they have proved that no effect or no difference exists is misleading.

As an example, a randomised trial of behavioural and specific sexually transmitted infection interventions for reducing transmission of HIV-1 was published in the Lancet.2 The incidence rate ratios for the outcome of HIV-1 infection were 0.94 (95% confidence interval 0.60 to 1.45) and 1.00 (0.63 to 1.58) for two intervention groups compared with control. In the abstract, the interpretation is: “The interventions we used were insufficient to reduce HIV-1 incidence...” But, looking again at the confidence intervals, the results in both treatment arms are compatible with a wide range of effects, from a reduction of about 40% in incidence of HIV-1 to an increase of about 50%. A summary that gives the impression that this study has shown these interventions to be incapable of reducing HIV-1 incidence is therefore misleading. What might be the implications for people at risk of HIV-1 infection? It could be that an intervention that does in fact protect against infection is not widely used. It could also be that an intervention that actually harms people by increasing HIV-1 infection is viewed as an intervention which has “no effect.” The truth of these situations can be established only by collecting more evidence, and statements implying that an intervention has no effect might actually discourage further studies by giving the impression that the question has been answered.
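The arithmetic behind this reading of the confidence intervals can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the trial's analysis: the function names `percent_change` and `describe_interval` are hypothetical, and the only inputs are the interval limits quoted above.

```python
def percent_change(rate_ratio: float) -> float:
    """Convert a rate ratio into a percentage change relative to control."""
    return (rate_ratio - 1.0) * 100.0


def describe_interval(lower: float, upper: float) -> str:
    """Word a confidence interval as the range of effects it is compatible with."""

    def phrase(percent: int) -> str:
        if percent < 0:
            return f"a {abs(percent)}% decrease"
        if percent > 0:
            return f"a {percent}% increase"
        return "no change"

    low = round(percent_change(lower))
    high = round(percent_change(upper))
    return f"compatible with anything from {phrase(low)} to {phrase(high)}"


# 95% confidence limits for the two intervention arms, as quoted in the text.
for label, lower, upper in [("arm A", 0.60, 1.45), ("arm B", 0.63, 1.58)]:
    print(label, "is", describe_interval(lower, upper))
```

Neither interval rules out a substantial benefit or a substantial harm, which is why a summary of "insufficient to reduce incidence" overstates what was found.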

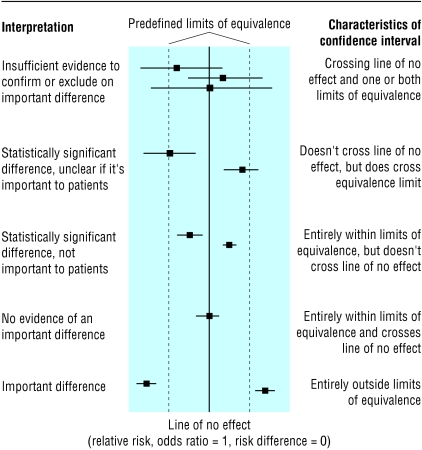

When is it reasonable to claim that a study has proved that no effect or no difference exists? The correct answer is “never,” because some uncertainty will always exist. However, we need to have some rules for deciding when we are fairly sure that we have excluded an important benefit or harm. This implies that some threshold must be decided, in advance, for what size of effect is clinically important in that situation. This concept is not new and is used in designing equivalence studies, which set out to show whether one intervention is as good as another.3 Thresholds, often called limits of equivalence, are set between which an effect is designated as being too small to be important. Outcomes of, for example, studies of effectiveness can then be related to these thresholds. This is shown in the figure, where the confidence interval from a study is interpreted in the context of predefined limits of equivalence.

Figure 1. Relation between confidence interval, line of no effect, and thresholds for important differences (adapted from Armitage, Berry, and Matthews4)
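The idea of relating a confidence interval to predefined limits of equivalence can be sketched as follows. This is a minimal illustration under stated assumptions: the function `interpret_interval`, its category labels, and the limits of 0.80 and 1.25 are all hypothetical and chosen only for the example. They are not taken from the figure or from any of the studies cited, and real limits would have to be agreed in advance for the clinical question at hand.

```python
def interpret_interval(ci_lower: float, ci_upper: float,
                       benefit_limit: float, harm_limit: float) -> str:
    """Relate a confidence interval for a ratio to predefined limits of equivalence.

    Ratios below 1 favour the intervention. Ratios below benefit_limit count as
    an important benefit and ratios above harm_limit as an important harm.
    """
    if not benefit_limit < 1.0 < harm_limit:
        raise ValueError("limits must lie either side of the line of no effect")
    if ci_lower > ci_upper:
        raise ValueError("ci_lower must not exceed ci_upper")

    if ci_upper < benefit_limit:
        return "important benefit"
    if ci_lower > harm_limit:
        return "important harm"
    if benefit_limit <= ci_lower and ci_upper <= harm_limit:
        return "no important difference"
    # The interval crosses at least one limit, so an important effect
    # in that direction has not been excluded.
    return "inconclusive"


# Hypothetical limits of equivalence, for illustration only.
BENEFIT_LIMIT, HARM_LIMIT = 0.80, 1.25

print(interpret_interval(0.60, 1.45, BENEFIT_LIMIT, HARM_LIMIT))
print(interpret_interval(0.95, 1.05, BENEFIT_LIMIT, HARM_LIMIT))
```

Under these assumed limits, an interval of 0.60 to 1.45 is classed as inconclusive, whereas a much narrower interval lying wholly between the limits would support a claim of no important difference.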

Of course, setting such thresholds is not straightforward. How big a reduction in the incidence of HIV-1 infection is important? How large an increase in incidence is important? Who should decide? How different should the thresholds be for different groups of patients and different outcomes? These are difficult questions, and although we may not be able to find easy answers to them, we can at least be more explicit in reporting what we have found in our research. Wording such as “our results are compatible with a decrease of this much or an increase of this much” would be more informative.

What can we do to help ensure that in another decade we will be closer to heeding the advice of Altman and Bland? Firstly, considering results of a particular study in the context of all available research which considers the same question can increase statistical power, reduce uncertainty, and thus reduce the confusing reporting of underpowered studies. Such an approach might have clarified the implications of a recent study of passive smoking published in the BMJ.5 Secondly, researchers need to be precise in their interpretation and language and avoid the temptation to save words by reducing the summary of the study to such an extent that the correct meaning is lost. Thirdly, journals need to be willing to publish uncertain results and thus reduce the pressure on researchers to report their results as definitive.6 We need to create a culture that is comfortable with estimating and discussing uncertainty.
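The first suggestion above, that combining a study with other research on the same question reduces uncertainty, can be illustrated with a simple fixed effect, inverse variance pooling of rate ratios on the log scale. This is a sketch under several assumptions: the two input studies are hypothetical and identical, standard errors are recovered from the reported 95% limits, and the function names are invented for the example. It is not an analysis of any study cited here, and a real synthesis would need a systematic search and an assessment of heterogeneity.

```python
import math

Z_95 = 1.96  # normal quantile for a two sided 95% confidence interval


def log_se_from_ci(lower: float, upper: float) -> float:
    """Approximate the standard error of a log ratio from its 95% limits."""
    return (math.log(upper) - math.log(lower)) / (2 * Z_95)


def pool_fixed_effect(studies):
    """Pool (estimate, lower, upper) ratios with inverse variance weights.

    Returns the pooled ratio and its 95% confidence limits.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for estimate, lower, upper in studies:
        weight = 1.0 / log_se_from_ci(lower, upper) ** 2
        total_weight += weight
        weighted_sum += weight * math.log(estimate)

    pooled_log = weighted_sum / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return (
        math.exp(pooled_log),
        math.exp(pooled_log - Z_95 * pooled_se),
        math.exp(pooled_log + Z_95 * pooled_se),
    )


# Two HYPOTHETICAL studies with the same estimate and interval.
hypothetical_studies = [(0.94, 0.60, 1.45), (0.94, 0.60, 1.45)]
estimate, lower, upper = pool_fixed_effect(hypothetical_studies)
print(f"pooled ratio {estimate:.2f} ({lower:.2f} to {upper:.2f})")
```

The pooled estimate is unchanged, but its interval is narrower than that of either hypothetical study alone, which is the sense in which considering all available evidence reduces uncertainty.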

I thank Iain Chalmers and Mike Clarke for comments on draft versions.

Competing interests: None declared.

References

- 1. Altman DG, Bland JM. Absence of evidence is not evidence of absence. BMJ 1995;311:485.

- 2. Kamali A, Quigley M, Nakiyingi J, Kinsman J, Kengeya-Kayondo J, Gopal R, et al. Syndromic management of sexually-transmitted infections and behaviour change interventions on transmission of HIV-1 in rural Uganda: a community randomised trial. Lancet 2003;361:645-52.

- 3. Greene WL, Concato J, Feinstein AR. Claims of equivalence in medical research: are they supported by the evidence? Ann Intern Med 2000;132:715-22.

- 4. Armitage P, Berry G, Matthews JNS. Statistical methods in medical research. 4th ed. Oxford: Blackwell Science, 2002.

- 5. Enstrom JE, Kabat GC. Environmental tobacco smoke and tobacco related mortality in a prospective study of Californians, 1960-98. BMJ 2003;326:1057-60.

- 6. Alderson P, Roberts I. Should journals publish systematic reviews that find no evidence to guide practice? Examples from injury research. BMJ 2000;320:376-7.