Abstract

Background

Stochastic biochemical reaction networks are commonly modelled by the chemical master equation, and can be simulated as first order linear differential equations through a finite state projection. Due to the very high state space dimension of these equations, numerical simulations are computationally expensive. This is a particular problem for analysis tasks requiring repeated simulations for different parameter values. Such tasks are computationally expensive to the point of infeasibility with the chemical master equation.

Results

In this article, we apply parametric model order reduction techniques in order to construct accurate low-dimensional parametric models of the chemical master equation. These surrogate models can be used in various parametric analysis task such as identifiability analysis, parameter estimation, or sensitivity analysis. As biological examples, we consider two models for gene regulation networks, a bistable switch and a network displaying stochastic oscillations.

Conclusions

The results show that the parametric model reduction yields efficient models of stochastic biochemical reaction networks, and that these models can be useful for systems biology applications involving parametric analysis problems such as parameter exploration, optimization, estimation or sensitivity analysis.

Keywords: Stochastic biochemical network, Model reduction, Reduced basis, Genetic regulatory network, Computational efficiency, Parameter estimation

Background

The chemical master equation (CME) is the most basic mathematical description of stochastic biomolecular reaction networks [1,2]. The CME is a generally infinite-dimensional linear differential equation. It characterizes the temporal development of the probabilities that the network is in any of its possible configurations, where the different configurations are characterized by the molecular copy numbers of the network’s chemical species.

Due to its infinite dimension, the CME is usually not directly solvable, not even with numerical methods. A recent breakthrough in the numerical treatment of the CME was the establishment of the finite state projection (FSP) method by Munsky and Khammash [3]. They showed that it is possible to compute a good approximation to the real solution by projecting the CME to a suitable finite subdomain of the network’s state space, and solving the resulting finite-dimensional linear differential equation on that domain. Nevertheless, the FSP approach still yields very high-dimensional models which are computationally expensive to simulate, even for small biochemical networks. The efficient simulation of the CME is an area of active research, and recently other simulation methods have been developed that can also be used for larger networks [4,5].

Despite this progress, the direct simulation of the CME remains a computational bottleneck for common model analysis tasks in systems biology. It is especially problematic for tasks which require the repeated simulation of the model using different parameter values, for example identifiability analysis, parameter estimation, or model sensitivity analysis. Thereby, while a single or a few evaluations of a CME model with the FSP or other approaches may still be computationally feasible, the necessity of many repeated simulations will quickly render higher-level analysis tasks infeasible.

Mathematical methods that approximate the behaviour of a high-dimensional original model through a low-dimensional reduced model are a common way to deal with complex models. Especially for linear differential equations, model order reduction is a well established field and several methods to compute reduced order models are available [6]. Note that the step of generating a reduced model is usually computationally more expensive than a single or even a few simulations of the original high-dimensional model. But the simulation of the resulting reduced models is frequently orders of magnitude faster than the solution of the original model. So, model reduction is worth the effort if many repeated simulations are to be expected. Unfortunately, for analysis tasks which require the repeated model simulation with different parameters, classical model reduction methods are not helpful. With these methods, the reduced model depends on specific parameter values in the original model, and the reduction needs to be redone for different parameter values. Thus, for the mentioned analysis tasks, the model reduction process would have to be repeated for each new parameter value, and no gain in computational efficiency would typically be possible. While classical model reduction techniques have been applied to the CME in the past [7], they are not so suitable for parametric analysis tasks.

Fortunately, model reduction methods where parameters from the original model are retained as adjustable parameters also in the reduced model are now being developed. These methods allow to compute a reduced model which uses the same parameters as the original model, and where the reduced model can directly be simulated with any choice of parameter values [8-11].

The purpose of this paper is to introduce the application of these parametric model reduction methods to finite-state approximations of the chemical master equation, and to show possible usage scenarios of such an approach. The structure is as follows. In the following section, we introduce some background and notation concerning the modelling of chemical reaction networks and parametric model order reduction. We also show how the parametric model order reduction methods can in fact be applied to the CME. Afterwards, we apply the reduction technique on two reaction network models and corresponding parametric analysis tasks.

Methods

We start with some preparatory background on the chemical master equation (CME) and parametric model order reduction. This serves in particular to fix the notation used throughout the remainder of the article. Then the application of parametric model order reduction to the CME is introduced.

The chemical master equation

The structure of a biochemical reaction network is characterized completely by the list of involved species, denoted as X1X2…,Xn, and the list of reactions, denoted as

| (1) |

where m is the number of reactions in the network, and the factors and are the stoichiometric coefficients of the reactant and product species, respectively [12]. The net change in the amount of species i occuring through reaction j is given by

| (2) |

Reversible reactions can always be written in the form (1) by splitting the forward and reverse path into two separate irreversible reactions.

For a stochastic network model, the variables of interest are the probabilities that the network is in any of the possible states which are characterized by the molecular copy numbers of the individual species X1,X2…,Xn. We denote the molecular copy number of Xi by . Then, the state variables of the stochastic model are given by the real numbers

| (3) |

for , i = 1,…,n. As a short-hand notation for (3), we write p(t,x), with .

The transitions from one state to another are determined by chemical reactions according to (1). The changes in the molecule numbers are described by the stoichiometric reaction vectors

| (4) |

To avoid needlessly complicated cases, we assume vj ≠ vk for j ≠ k.

The probabilities of the network being in any of the possible states x evolve over time, and their evolution is governed by the chemical master equation (CME) as derived by [1]. From a given molecular state x, one can compute the propensity νj that reaction j takes place according to the law of mass action as

| (5) |

where is the vector of reaction rate constants, which are model parameters depending on the physical properties of the molecules involved in the reactions. The propensities are related to the probability that reaction j will occur in a short time interval of length dt when the system is in state x:

| (6) |

Taking the possible transitions and the corresponding reaction propensities together yields the chemical master equation (CME), a linear differential equation where the variables are the probabilities that the system is in each of the possible molecular states x:

| (7) |

for . The CME (7) is subject to an initial condition p(t0,x) = p0(x) for .

Despite being linear, the CME is hard to solve numerically. This is due to the problem that the state space is for most systems infinite-dimensional, since all possible states of the reaction network (1) must in general be considered. Instead of directly solving the CME (7), a number of alternative approaches to study the stochastic dynamics of biochemical reaction networks have been suggested. The most common approach is to generate a simulated realization of the stochastic process described by the reaction network (1), using for example the Gillespie algorithm [13]. In this approach, the probabilities p(tx) for the possible system states are obtained from many simulated realizations. However, since this requires a large number of realizations, it is computationally expensive.

As a more direct approach, Munsky and Khammash [3] have proposed the finite state projection (FSP), where the CME is solved on a finite subset of the state space. Here, this subset is denoted by Ω, and is defined as

| (8) |

where the x(i) are the system states for which the probabilities are computed in the projected model. The underlying assumption is that the probabilities for other states will be very low on the time scale of interest—otherwise the FSP may not yield good approximations to the solution of the CME. In particular we assume the time interval of interest to be given by [0,T for final time T > 0. The probabilities for the states x(i) in Ωare written in the vector P(t) approximating p(xt) at the finite number of states Ω:

| (9) |

The equation to be solved with the FSP approximation is

| (10) |

where is the matrix of state transition propensities, and is a vector of initial probabilities for the states in Ω. The elements of the matrix A(θ) are computed as

| (11) |

We will frequently omit the parameter dependence of the solution (and other parametric quantities). Hence the solution P(t), as abbreviation of P(tθ), of (10) is an approximation to the solution p(tx) of the orginal CME on the domain Ω. Munsky and Khammash [3] have also derived an upper bound on the error between the solution P(t) computed via the FSP, and the solution of the original CME p(tx) on Ω.

Here, we consider in addition an output vector defined by

| (12) |

with . Examples for relevant outputs are the probability that the molecular copy numbers are in a certain domain , which is achieved by the row vector output matrix C defined by Ci = 1 if , otherwise Ci = 0, with p = 1, or the expected molecular copy numbers, given by

| (13) |

i.e. C = (x(1),…,x(d)) with p = n.

The basic motivation for the model reduction presented here is that we are interested in parametric analysis of the model, where the model (10) has to be solved many times with different values for the parameters θ. Due to the typical high dimensions of the matrix A(θ), already a single simulation is computationally expensive, and analysis tasks requiring many repeated simulations are often computationally infeasible. Thus, the primary goal is to derive a reduced model which is rapidly solvable and provides an approximation to the output y(t), potentially without any consideration of the original state vector P(t).

Order reduction of parametric models

Model order reduction of parametric problems is a very active research field in systems theory, engineering and applied mathematics. We refer to [8,10,11] and references therein for more information on the topic.

Here, we apply the reduction technique for parametric problems presented in [9] adopted to our notation. It is based on two biorthogonal global projection matrices with r ≪ d and WT V = Id, where r is the dimension of the reduced model. The matrix V is assumed to span a space that approximates the system state variation for all parameters and times. The construction of such matrices will be detailed in the next subsection.

The gain of computational efficiency in repeated simulations comes from a separation of the simulation task into a computationally expensive “offline” phase and a computationally cheap “online” phase. In the offline phase, suitable projection matrices V and W are computed without fixing specific parameter values. With the projection matrices, a reduced model with the same free parameters as the original model is computed. In the online phase, the reduced model is simulated with the actually chosen parameter values, which is typically several orders of magnitude faster than the simulation of the original model. For analysis tasks with repeated simulations, only the online phase has to be repeated for different choices of the parameter values, yielding an overall gain in computational efficiency.

Decomposition in parametric and non-parametric part

The reduction technique assumes a separable parameter dependence of the full system matrices and the initial condition. This means, we assume that there exist a suitable small constant , parameter independent components and parameter dependent scalar coefficient functions for q = 1,…,QA such that

| (14) |

and similarly for the system matrix C and initial condition P0. We assume that stems from some domain of admissible parameters. In the next step, the reduced component matrices and initial conditions are determined by

| (15) |

for q = 1,…,QA. The resulting quantities , , and are r-dimensional vectors or matrices and independent of the high dimension d. The basis computation and the computation of these reduced system components is performed once and parameter-independently in the offline-phase. Then, in the online-phase, for any new parameter θthe reduced system matrices and the initial condition are assembled by

| (16) |

and similarly for Pr0(θ) and Cr(θ). The low dimensional reduced system that remains to be solved is

| (17) |

From the reduced state Pr(t), an approximate state for the full system can be reconstructed at any desired time by . Also the difference between the approximated output and the output y(t) of the original model can be bounded by so called error estimators. A-posteriori error bounds for the reduced systems as considered here are given in [9].

Basis generation

Different methods for the computation of the projection bases V and W exist. In systems theory, methods like balanced truncation, Hankel-norm approximation or moment matching are applied, that approximate the input-output behaviour of a linear time-invariant system [6]. The resulting reduced models can be applied for varying input signals. Extensions to parametric problems exist, e.g. [8,11]. As we do not have varying inputs in the problem studied here, we consider snapshot-based approaches to be more suitable. This means, the projection bases are constructed by solution snapshots, i.e. special solutions computed for selected parameter values.

The generation of projection matrices V and W must be done in such a way, that they are globally well approximating the system states over the parameter and time domain. A possible way to achieve this is the POD-Greedy algorithm, which has been introduced in [14] and is meanwhile a standard procedure in reduced basis methods [15,16]. The algorithm makes use of a repeated proper orthogonal decomposition (POD) of trajectories , which for our purposes can be defined as

| (18) |

Intuitively, is a state space vector representing the single dominant mode that minimizes the squared mean projection error. Computationally, this minimization task is solved by a reformulation as a suitable eigenvalue problem. Consider the correlation matrix . Then, is an eigenvector corresponding to the largest eigenvalue λmax of C, i.e., . For additional theoretical and computational details on POD we refer to [17,18]. We further require a finite subset of parameters , that are used in the basis generation process. As error indicator Δ(θV) we use the projection error of the full system trajectory on the reduced space spanned by the orthonormal columns of V , i.e.

| (19) |

The POD-Greedy procedure which is given in the pseudo-code below, starts with an arbitrary orthonormal initial basis and performs an incremental basis extension. The algorithm repeatedly identifies the currently worst resolved parameter (a), orthogonalizes the corresponding full trajectory with the current reduced space (b), computes a POD of the error trajectory (c), and inserts the dominant mode into the basis (d).

function V = POD-Greedy

1. N := N0

2. while

(a)

(b)

(c) vN + 1 := POD(E)

(d) VN + 1 := [VN,vN + 1]

(e) N := N + 1

3. end while

Note that the algorithm is implemented such that the simulation of the full model, yielding P(t,θ) in (19), is only performed once for each θin the training set .

For concluding the basis generation, we set W := V. This satisfies the biorthogonality condition WT V = Id, as V has orthonormal columns by construction. In practice the time-integrals in (18) are realized by a finite sampling of the time interval.

A theoretical underpinning for the POD-Greedy algorithm has recently been provided by the analysis of convergence rates [19]. This is based on the approximation-theoretical notion of the Kolmogorov n-width of a given set , which quantifies how well the set can be approximated by arbitrary N-dimensional linear subspaces of . The convergence statement for the case of exponential convergence then can be summarized as follows: If the set of solutions is compact and has an exponentially decaying Kolmogorov n-width for some Ma α > 0 and all , then the error sequence generated by the POD-Greedy procedure (cf. the definition in Step 2. in the pseudo code) also decays with an exponential rate, with suitable constants βcC > 0 depending on M,a,α. Thus, if the set of solutions can be approximated by linear subspaces with an exponentially decaying error term, then the POD-Greedy algorithm will in fact find an approximation with an exponentially decaying error term, though possibly with suboptimal parameters in the error bound.

Extensions of the POD-Greedy algorithm exist, e.g. allowing more than one mode per extension step, performing adaptive parameter and time-interval partitioning, or enabling training-set adaptation [15,16,20].

Reduced models of the parametrized chemical master equation

In this section, we describe how to apply the reduction method for parametrized models presented in the previous section to FSP models for the chemical master equation.

As discussed in the previous section, the first step in the proposed reduction method is a decomposition of the d-dimensional system matrix A(θ) as in (14). Such a decomposition is possible for the case of mass action reaction propensities, as defined in (5), or generalized mass action, as recently suggested for the chemical master equation [21]. In this case, the length of the parameter vector θ is equal to the number of reactions m, and we decompose A(θ) into m terms as

| (20) |

Hence, concerning the notation given before, we have QA = m components A[q] and coefficient functions . Each matrix A[q] in this decomposition comes from just the transition propensities corresponding to reaction q, and is defined by

| (21) |

More generally, such a decomposition is also possible if reaction rate propensities can be decomposed into the product of two terms, with the first term depending on parameters only, and the second term on molecule numbers only. This case is for example encountered when the temperature-dependance of the reaction rate constant is relevant, and the temperature T is a variable parameter in the Arrhenius equation . Since the output matrix C and the initial condition P0 are usually not depending on parameters in this framework, a decomposition of C and P0 is not considered.

The situation is more difficult for reaction propensities involving for example rational terms with parameters in the denominator. The denominator parameters can not be included in the reduced order model by the decomposition outlined in (20) and (21). If variations in these parameters are however not relevant to the planned analysis, then they can be set to their nominal value, and the decomposition can directly be done as described above. Alternatively, approximation steps can be performed, such as Taylor series expansion or empirical interpolation [22], that generate an approximating parameter-separable expansion.

Results

In this section, we present the study of two example networks with the proposed model reduction method. With these examples, the applicability of the reduced modeling approach especially for analysis tasks requiring repeated simulations with different parameter values is illustrated. The first network is a bistable genetic toggle switch, where cells may switch randomly between two states, based on the model in [23]. For this network, the problem of parameter estimation with a reduced model is studied. The second network is a second-order genetic oscillator, based on [24], where we perform a sensitivity analysis over a wide parameter range.

Parameter estimation in a genetic toggle switch model

Network description

The genetic toggle switch considered here is an ovarian follicle switch model from [23]. It is a system of two genes which activate each other. The switch is modelled as a reaction network with two species X1, X2, representing the gene products. The network reactions are specified in Table 1, and the network parameters in Table 2.

Table 1.

The follicle switch model

| Reaction | Stoichiometry vj | Propensity νj |

|---|---|---|

| Production of X1 |

(1,0)T |

|

| Degradation of X1 |

(−1,0)T |

u1x1 |

| Production of X2 |

(0,1)T |

|

| Degradation of X2 | (0,−1)T | u2x2 |

List of reactions and reaction propensity functions for the follicle switch model [23].

Table 2.

Parameters for the follicle switch model

| k1 | V1 | M1 | u1 | V2 | M2 | u2 | |

|---|---|---|---|---|---|---|---|

| 4 | 75 | 25 | 75 | 25 |

Parameter values for the follicle switch model in Table 1.

In [23], this network was shown to describe a bistable switch with two probability peaks, one close to x(off) = (0,0)T and the other close to .

In the study [23], only the lower probability peak was of interest. Here, we are interested in the transition of the system from x(off) to x(on). Therefore, the system is truncated to a rectangle such that , yielding a good approximation in the finite state projection to the infinite-dimensional chemical master equation.

The next step is to apply the decomposition of the matrix A(θ) as described in the methods section. Note that A(θ) for the switch network contains rational terms with the parameters M1 and M2. Considering these two parameters as fixed quantities, the truncated CME for the follicle switch can be written as

| (22) |

where A[i], i = 1,…,5 are of dimension 1512 × 1512 = 22801 × 22801.

As initial condition we choose a probability distributed over some lower states

| (23) |

For the parametric model reduction, we consider only variations in the parameters u1 and u2. These influence both the steady state level of gene activity in the on-state as well as the switching kinetics and are thus of high biological significance in the model. Hence we set as the parametric domain . As final time we choose T = 107 which corresponds to a time range of approximately 19 years, i.e. about three times the half-life time of the off-state estimated in [23].

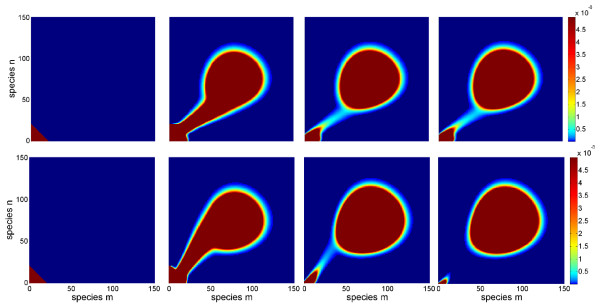

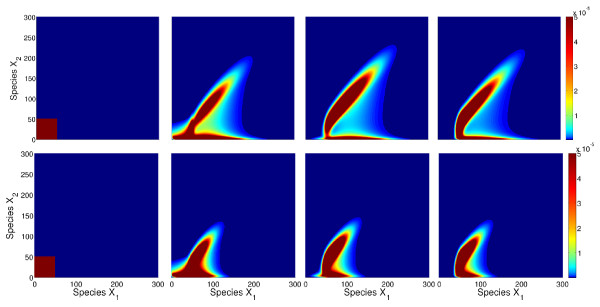

Some state plots from the simulation of the full model are shown in Figure 1. These snapshots clearly show the transition of the switch from the off-state with low values for x1 and x2 to the on state with high values. The parameter influence is mainly reflected in the speed of the transition: for the parameter vector (u1, u2) = (0.005, 0.02) in the lower row, most of the probability is already arranged around the on-state at the end of the simulation time. In contrast, for the parameter vector (u1, u2) = (0.05, 0.005) in the upper row, a significant portion of the probability is still located around the off-state at this time point. Also, the transition paths are different: in the first case, the values for x2 are lower than the values for x1during the transition, while in the second case, this relation is reversed.

Figure 1.

Illustration of solution snapshots of the switch model. Illustration of some solution snapshots P(t) of the switch model (22) for parameter vector (u1,u2) = (0.05,0.005) (upper row) and (u1,u2) = (0.005,0.02) (lower row) at times t = 0, 2·105, 5 · 106, and 1 · 107from left to right.

As typical simulation time for a single trajectory of the full system, we obtain 98.2 seconds on a IBM Lenovo 2.53 GHz Dual Core Laptop.

Basis generation

We generated a reduced basis with the POD-Greedy algorithm, where the training set was chosen as the vertices of a mesh with 92 logarithmically equidistant parameter values over the parameter domain . We set as target accuracy. We use the projection error as error measure, hence precompute the 81 trajectories for construction of the reduced basis. As initial basis we set N0 = 1 and using the parameter independent initial condition.

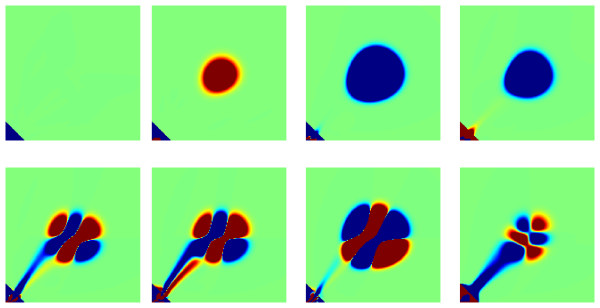

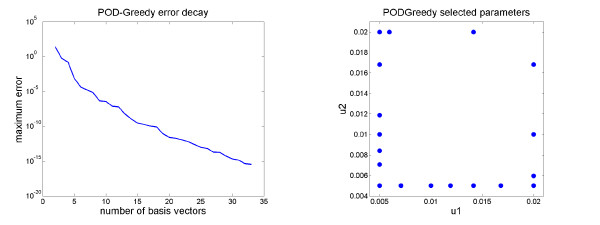

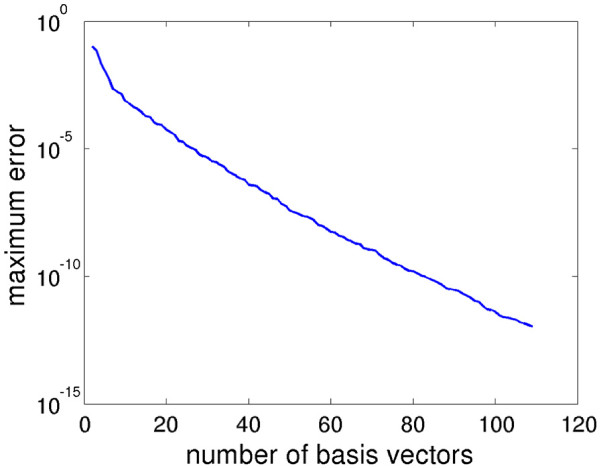

The POD-Greedy algorithm produces a basis of 33 vectors and the overall computation of the reduced basis takes 7.9 hours, the dominating computation time being spent in the error evaluations and POD computations. Some of the resulting orthonormal basis vectors are illustrated in Figure 2. The error decay curve and the selected parameters in the parameter domain are illustrated in Figure 3. We nicely observe an exponential error decay, which indicates a parametric smoothness of the solution manifold, cf. the convergence rate statement given before for the POD-Greedy algorithm. The selected parameters seem to be located at the boundary of the parameter domain, indicating that the model behaviour in between can well be interpolated from the model behaviours along the boundary of the parameter domain.

Figure 2.

Basis vectors for the switch model. Illustration of the first eight basis vectors for the switch model generated by the POD-Greedy algorithm.

Figure 3.

Results of the POD-Greedy algorithm for the switch model. Illustration of the error decay during the POD-Greedy algorithm (left) applied to the switch model and the selected parameters (right) being a small subset of the 81 training parameter points.

The final reduced model of dimension 33 can then be simulated in 0.135 seconds, corresponding to a computational speedup factor of more than 700.

Parameter estimation

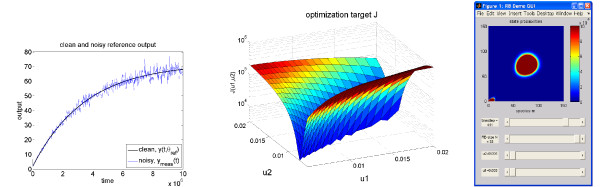

We exemplify a possible application of the reduced order model in parameter estimation, where we assume that a distorted output y(t) as the expected values E[x1] is available from population-averaged measurements. The task is to estimate the parameter values u1 and u2 from such a noisy measurement.

The reference parameter is , and, for the purpose of this example, the measured output is produced by simulating the original model with the reference parameter values and adding 5% relative random white noise n(t) sampled from a standard normal distribution, ymeas(t) := y(t,θref)(1 + 0.05n(t)). An illustration of the reference output y(t,θref) and the noisy signal ymeas(t) is given in the left of Figure 4.

Figure 4.

Parametric analysis for the reduced switch model. Application of parametric reduced models for parametric analysis: Illustration of the clean and noisy signals y(t,θref) and ymeas(t), respectively (left), the optimization target J(θ) over the parameter domain (middle), interactive parameter exploration by a graphical user interface (right).

We want to recover the values of the parameters u1 and u2 based on fitting the reduced parametric model’s output to the measured output ymeas(t). As is commonly done in parameter estimation, we formulate a least squares cost function as

| (24) |

and estimate the parameters by

| (25) |

In such an optimization problem, typically many forward simulations are required for adjusting to the measurement. This is a particular beneficial scenario for reduced order models, as these simulations can be computed rapidly.

In order to gain a deeper insight into the optimization problem (25), we plot the values of the error functional J(θ) over the parameter domain (middle of Figure 4). Using the reduced model, the computation of the required 212 = 441 trajectories is realized in less than one minute. This would be a significant computational effort when using a non-reduced model.

From the cost function plot, we observe a narrow area of parameters which seem to produce a similar output as the reference parameter θref. This shows that the two model parameters are not simultaneously identifiable from the considered output, and indicates that there may exist a functional dependence between the parameters u1 and u2 such that the model yields similar outputs y(t).

Assuming a functional dependence of u1and u2we now consider the 1-dimensional optimization problem along the line u2 = u2,ref = 0.01. We would like to recover u1from the optimization problem. The corresponding value of the cost function is J(θref) = 3330.68, indicating a significant contribution of the noise. This restricted optimization problem is well conditioned and the optimization with a standard active set algorithm by MATLAB’s command fmincon yields the estimated parameter θest := (u1,est,0.01) with u1,est = 0.0100204, using 27 evaluations of the cost function. This accounts to a relative error in the u1value of 0.204%, hence excellent recovery. We refrain from plotting the recovered output as it is visually indiscriminable from the output in the left of Figure 4. Interestingly, the optimization target value J(θest) = 3329.56 implies J(θest) < J(θref), which may stem from a slight approximation error in the reduced model or from the effects of the measurement noise.

The right plot in Figure 4 illustrates another application of reduced parametric models: We incorporated the model in an interactive graphical user interface in RBmatlab, a matlab package for model order reduction, available for download at http://www.morepas.org. This allows interactive parameter variations and instantaneous simulation response.

Sensitivity analysis in a stochastic oscillator

Network description

The second case study is built on a genetic oscillator model showing stochastic resonance, which was presented in [24]. The oscillator is based on a negative feedback loop between two genes with one gene having positive autoregulation. The oscillator is modelled as a reaction network with two species X1, X2, representing the gene products. The network reactions are specified in Table 4, with parameters as in Table 3. In the original model in [24], the dynamics were described as stochastic differential equation for the amounts of X1 and X2, coming from a Langevin approximation to the stochastic dynamics [12]. For the framework used in this paper, the dynamics have to be described directly by the underlying CME. To achieve this, we introduce the parameter s which maps the dimensionless state variables from [24] to actual molecule numbers as required for the CME. Thus, s is also a measure for the network’s noise level: the higher s, the larger the molecule number that is considered, and the smaller the noise level will be.

Table 4.

Parameters for the oscillator model

| k1 | k2 | k3 | k4 | k5 | k6 | k7 | s | |

|---|---|---|---|---|---|---|---|---|

| 0.2 | 6.5 | 10 |

Parameter values for the oscillator model from Table 3.

Table 3.

The oscillator model

| Reaction | Stoichiometry vj | Propensity νj |

|---|---|---|

| Production of X1 |

(1,0)T |

|

| Degradation of X1 |

(−1,0)T |

k3x1 |

| Production of X2 |

(0,1)T |

|

| Degradation of X2 | (0,−1)T | k7x2 |

List of reactions and reaction propensity functions for the oscillator model adopted from [24].

The network model in Table 4 shows oscillations only in a stochastic description. The deterministic model has a unique asymptotically stable equilibrium point, but in a stochastic model, fluctuations may push the molecular numbers beyond a certain threshold, inducing a dynamical response along a slow manifold, which corresponds to one oscillatory period [24]. Depending on the noise level, such responses will be initiated more or less often, corresponding to a more or less regular oscillatory pattern.

The system is truncated to the rectangle , which contains the relevant system states for the parameter ranges of interest.

Similarly as in the switch example, the reaction propensity expressions contain rational terms in the parameters s, k2, and k6. These three cannot be decomposed directly, so we do the decomposition described in the methods section for the other five parameters only. With this decomposition, the truncated CME for the genetic oscillator can be written as

| (26) |

where A[i], i = 1,…,5 are of dimension 3012 × 3012 = 90601 × 90601. The initial condition for (26) is chosen as a uniform distribution over the rectangle {0,…,50} × {0,…,50}:

| (27) |

The time scale of interest for the model in (26) is for 0 ≤ t ≤ T = 6. At the end of the interval, the probability distribution seems to approach a steady state.

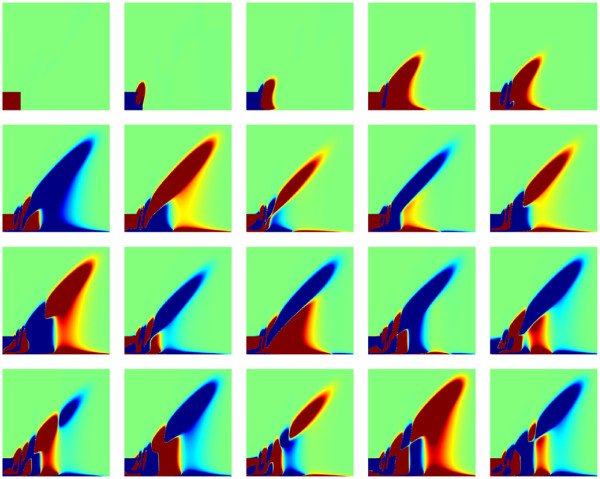

Some state plots are given in Figure 5. One observes a significant effect of the parameter k4on the amplitude of the oscillations. The simulation time for the detailed model was in average 7.3 minutes on a Dell desktop computer with 3.2 GHz dual-core Intel 4 processor and 1 GB RAM, without including the computation time for the construction of the state transition matrix A(θ).

Figure 5.

Illustration of solution snapshots of the oscillator model. Illustration of some solution snapshots P(t) of the oscillator CME model for parameter values k4 = 15 (upper row) and k4 = 30 (lower row) at times t = 0, 0.2, 0.6, 6.0 from left to right.

Basis generation

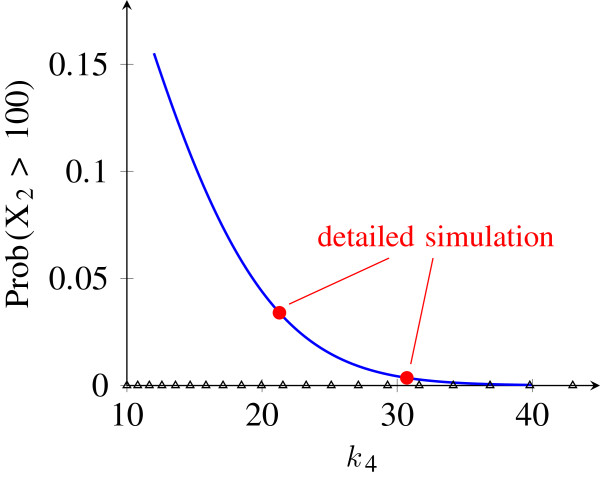

For the basis generation, the parameter k4 was assumed to vary within the interval [10, 100]. A reduced basis with the POD-Greedy algorithm was computed from a training set of 30 logarithmically equidistant parameters over the parameter domain (Figure 6). As in the switch example, the target accuracy was chosen as , and the initial basis was chosen from the initial condition V1 := P0.

Figure 6.

Parametric analysis results for the oscillator model. Sensitivity analysis of oscillation amplitude over a parameter interval. Blue line shows oscillatory amplitude over the parameter k4 predicted from the reduced model. Red dots are validation results from a simulation of the original model. Triangles on the parameter axis indicate parameter values which were used in the construction of the reduced basis.

The POD-Greedy algorithm produces a basis of 109 vectors, with an overall computation time of 16.5 hours on the hardware as in the previous subsection. The first 20 basis vectors are shown in Figure 7. It is apparent that several of the basis vectors are directly included in order to reproduce the different amplitudes of oscillations that will occur under variations of the parameter k4. The error decay curve is shown in Figure 8, displaying an exponential error decay as also observed for the switch example.

Figure 7.

Basis vectors for the oscillator model. First 20 basis vectors for the oscillator model.

Figure 8.

Results of the POD-Greedy algorithm for the oscillator model. Error decay curve for the oscillator model.

With the reduced basis , we can construct a reduced parametric model for the CME of the oscillator as

| (28) |

with and . Note that since only k4 has been varied in the reduction process, the other parameters are no longer present as parameters in the reduced model, but just take their nominal values. While the same basis V could be used to construct another reduced model where all parameters are retained, it is unlikely that this other model will be a good approximation of the original one for varying values of the other parameters.

Sensitivity analysis of the oscillation amplitude

As an application of the reduced order parametric model obtained in the previous section, we study the variations of oscillatory amplitude over a parameter range. Specifically, we consider 200 equally spaced values for the parameter k4 in the interval [12, 40] and compute the probability that the amount of X2 is larger than 100:

| (29) |

with T = 6 the final time of the simulation. The results are shown in Figure 6 and show a clear decay of oscillatory amplitude for increasing values of k4. Due to the significant time savings from the reduced model, this sensitivity curve can be computed with a high resolution.

To evaluate the quality of the reduced model, we also computed the probability (29) using the original model (26) at two points within the considered interval for the parameter k4. As shown in Figure 6, the results from the original model are in perfect agreement with the predictions from the reduced model at these points. Since the points at which the original model was evaluated in this experiment were not part of the training set (shown as triangles on the parameter axis in Figure 6), this shows that it is in fact possible to extrapolate the reduced model to parameter values that were not used to construct the basis.

Conclusions

In this paper, we have introduced the application of parametric model reduction methods to finite-state approximations of the chemical master equation. We have also presented two case studies where these methods are applied to CME models of different networks in order to make parametric analysis tasks computationally efficient. By this, it has become clear that parametric model reduction methods are a very useful tool for the analysis of stochastic biochemical reaction network described by the CME.

Especially analysis tasks where many repeated simulations of a network with different parameter values are required can profit significantly from parametric model reduction. This includes for example sensitivity analysis or parameter optimization tasks such as identifiability analysis or estimation. Moreover, the significant speedup of the simulation for the reduced model allows an interactive exploration of the network’s dynamics within the parameter space within a suitable graphical user interface.

This contribution is just a first step in the application of parametric model reduction methods to the CME. One particularly important aspect that we have not discussed here is the computation of error estimates for certifying that the simulation output of the reduced model is within some tolerance of the corresponding simulation output of the original model. To maintain computational efficiency, the error estimation should be done without actually simulating the original model. Error estimation methods have been developed for parametric model reduction of generic models [9], but tighter estimates could likely be obtained by taking into account the special structure of the CME models. Recent work for example refined the previous generic error bounds for stable models [25].

Competing interests

Both authors declare that they have no competing interests.

Authors contributions

SW and BH conceived of the study, performed the study, and wrote the manuscript. Both authors read and approved the final manuscript.

Contributor Information

Steffen Waldherr, Email: waldherr@ist.uni-stuttgart.de.

Bernard Haasdonk, Email: haasdonk@mathematik.uni-stuttgart.de.

Acknowledgements

We thank Wolfgang Halter for programming support in the oscillator case study. The authors would like to thank the German Research Foundation (DFG) for financial support of the project within the Cluster of Excellence in Simulation Technology at the University of Stuttgart. BH also acknowledges the Baden-Württemberg Stiftung gGmbH for funding. This work was also supported by the German Research Foundation (DFG) within the funding programme Open Access Publishing.

References

- Gillespie DT. A rigorous derivation of the chemical master equation. Physica A: Statist Theor Phys. 1992;188(1-3):404–425. doi: 10.1016/0378-4371(92)90283-V. [ http://www.sciencedirect.com/science/article/B6TVG-46FX396-7N/2/a0537c1efc0f5c330fa05b5e4ae61b98] [DOI] [Google Scholar]

- van Kampen NG. Stochastic Processes in Physics and Chemistry. Amsterdam, The Netherlands: North-Holland; 1981. [Google Scholar]

- Munsky B, Khammash M. The finite state projection algorithm for the solution of the chemical master equation. J Chem Phys. 2006;124(4):044104. doi: 10.1063/1.2145882. [ http://dx.doi.org/10.1063/1.2145882] [DOI] [PubMed] [Google Scholar]

- Jahnke T, Huisinga W. A Dynamical Low-Rank Approach to the Chemical Master Equation. Bull Math Biol. 2008;70:2283–2302. doi: 10.1007/s11538-008-9346-x. [DOI] [PubMed] [Google Scholar]

- Hegland M, Hellander A, Lötstedt P. Sparse grids and hybrid methods for the chemical master equation. BIT Numerical Mathematics. 2008;48:265–283. doi: 10.1007/s10543-008-0174-z. [DOI] [Google Scholar]

- Antoulas AC. Approximation of Large-Scale Dynamical Systems. Philadelphia, USA: SIAM; 2005. [Google Scholar]

- Munsky B, Khammash M. The Finite State Projection Approach for the Analysis of Stochastic Noise in Gene Networks. Automatic Control, IEEE Transactions on. 2008;53(Special Issue):201–214. [Google Scholar]

- Baur U, Benner P. Parametrische Modellreduktion mit dünnen Gittern. GMA-Fachausschuss 1.30, Modellbildung, Identifizierung und Simulation in der Automatisierungstechnik, Salzburg ISBN 978-3-9502451-3-4. 2008. pp. 262–271.

- Haasdonk B, Ohlberger M. Efficient Reduced Models and A-Posteriori Error Estimation for Parametrized Dynamical Systems by Offline/Online Decomposition. MCMDS, Mathematical and Computer Modelling of Dynamical Systems. 2011;17(2):145–161. doi: 10.1080/13873954.2010.514703. [DOI] [Google Scholar]

- Daniel L, Siong O, Chay L, Lee K, White J. Multi-parameter moment-matching model-reduction approach for generating geometrically parameterized interconnect performance models. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems. 2004;23(5):678–693. doi: 10.1109/TCAD.2004.826583. [DOI] [Google Scholar]

- Moosmann C, Rudnyi E, Greiner A, Korvink J, Hornung M. Parameter Preserving Model Order Reduction of a Flow Meter. Technical Proceedings of Nanotech 2005. 2005.

- Higham DJ. Modeling and Simulating Chemical Reactions. SIAM Rev. 2008;50(2):347–368. doi: 10.1137/060666457. [ http://link.aip.org/link/?SIR/50/347/1] [DOI] [Google Scholar]

- Gillespie DT. Exact stochastic simulation of coupled chemical reactions. J Phys Chem. 1977;81(25):2340–2361. doi: 10.1021/j100540a008. [ http://pubs.acs.org/cgibin/abstract.cgi/jpchax/1977/81/i25/f-pdf/f_j100540a008.pdf] [DOI] [Google Scholar]

- Haasdonk B, Ohlberger M. Reduced Basis Method for Finite Volume Approximations of Parametrized Linear Evolution Equations. M2AN, Math Model Numer Anal. 2008;42(2):277–302. doi: 10.1051/m2an:2008001. [DOI] [Google Scholar]

- Eftang JL, Knezevic DJ, Patera AT. An hp Certified Reduced Basis Method for Parametrized Parabolic Partial Differential Equations. MCMDS, Mathematical and Computer Modelling of Dynamical Systems. 2011;17(4):395–422. doi: 10.1080/13873954.2011.547670. [DOI] [Google Scholar]

- Knezevic D, Patera A. A Certified Reduced Basis Method for the Fokker-Planck Equation of Dilute Polymeric Fluids: FENE Dumbbells in Extensional Flow. SIAM Journal of Scientific Computing. 2010;32(2):793–817. doi: 10.1137/090759239. [DOI] [Google Scholar]

- Volkwein S. Model Reduction using Proper Orthogonal Decomposition. 2011. [ http://www.uni-graz.at/imawww/volkwein/publist.html]. [Lecture Notes, University of Constance]

- Jolliffe IT. Principal Component Analysis. New York, USA: Springer-Verlag; 2002. [Google Scholar]

- Haasdonk B. Convergence Rates of the POD-Greedy Method. 2011. Simtech preprint 2011-23, University of Stuttgart, Germany.

- Haasdonk B, Dihlmann M, Ohlberger M. A Training Set and Multiple Bases Generation Approach for Parametrized Model Reduction Based on Adaptive Grids in Parameter Space. MCMDS, Mathematical and Computer Modelling of Dynamical Systems. 2011;17(4):423–442. doi: 10.1080/13873954.2011.547674. [DOI] [Google Scholar]

- Wu J, Vidakovic B, Voit EO. Constructing stochastic models from deterministic process equations by propensity adjustment. BMC Syst Biol. 2011;5:187. doi: 10.1186/1752-0509-5-187. [ http://dx.doi.org/10.1186/1752-0509-5-187] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrault M, Maday Y, Nguyen N, Patera A. An ’empirical interpolation’ method: application to efficient reduced-basis discretization of partial differential equations. C R Math Acad Sci Paris Series I. 2004;339:667–672. doi: 10.1016/j.crma.2004.08.006. [DOI] [Google Scholar]

- Waldherr S, Wu J, Allgöwer F. Bridging time scales in cellular decision making with a stochastic bistable switch. BMC Syst Biol. 2010;4:108. doi: 10.1186/1752-0509-4-108. [ http://www.biomedcentral.com/1752-0509/4/108] [DOI] [PMC free article] [PubMed] [Google Scholar]

- El-Samad H, Khammash M. Proc. of the 45th Conf. Dec. Contr. (CDC) San Diego, USA; 2006. Coherence resonance: a mechanism for noise induced stable oscillations in gene regulatory networks; pp. 2382–2387. [Google Scholar]

- Hasenauer J, Löhning M, Khammash M, Allgöwer F. Dynamical optimization using reduced order models: A method to guarantee performance. 2012. [Journal of Process Control, Online Publication before print]