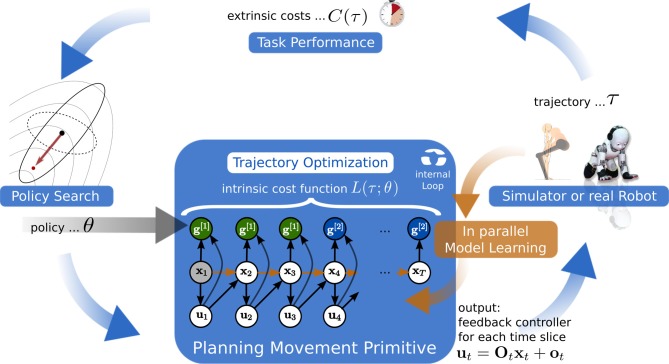

Figure 2.

We decompose motor skill learning into two learning problems. At the higher level, we learn the parameters θ of an intrinsic cost function L(τ;θ) using policy search (model-free RL). Given θ, the probabilistic planner at the lower level uses the intrinsic cost function L(τ;θ) and the learned dynamics model to estimate a linear feedback controller for each time step (model-based RL). The feedback controller is then executed on the simulated or the real robot, and the extrinsic cost C(τ) is evaluated. Based on this evidence, the policy search method computes a new parameter vector. Simultaneously, we collect samples of the system dynamics while executing the movement primitive. These samples are used to improve the learned dynamics model that the planner relies on.
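The two-level loop described in the caption can be sketched in a few lines. This is a toy illustration under strong assumptions, not the method used in the paper: the "robot" is a hypothetical scalar linear system, a finite-horizon LQR recursion stands in for the probabilistic planner, a cross-entropy-style update stands in for the policy search method, and θ is a single target position inside a quadratic intrinsic cost. All function names and constants are made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
A_TRUE, B_TRUE = 0.9, 0.5   # hypothetical "real robot": x' = a*x + b*u + noise
T = 20                      # time steps per episode

def plan(a, b, r=0.1):
    """Lower level (model-based): one feedback gain per time step from a
    scalar Riccati recursion on the learned model (a, b). The intrinsic cost
    is assumed to be sum_t (x_t - theta)^2 + r * u_t^2; the constant drift
    caused by theta != 0 is ignored, so this is only an approximate planner."""
    K, P = np.zeros(T), 1.0
    for t in reversed(range(T)):
        K[t] = a * b * P / (r + b * b * P)
        P = 1.0 + a * P * (a - b * K[t])
    return K

def execute(K, theta, x0=0.0):
    """Run u_t = -K[t] * (x_t - theta) on the 'real' system, log transitions."""
    x, data = x0, []
    for t in range(T):
        u = -K[t] * (x - theta)
        x_next = A_TRUE * x + B_TRUE * u + 0.01 * rng.standard_normal()
        data.append((x, u, x_next))
        x = x_next
    return x, data

def extrinsic_cost(x_final, goal=1.0):
    """C(tau): squared distance of the final state from the task goal."""
    return (x_final - goal) ** 2

def fit_model(data):
    """Least-squares estimate of (a, b) from all transitions seen so far."""
    D = np.array(data)
    coef, *_ = np.linalg.lstsq(D[:, :2], D[:, 2], rcond=None)
    return coef[0], coef[1]

def learn(iterations=15, samples=10):
    a, b = 1.0, 1.0        # initial guess for the dynamics model
    mu, sigma = 0.0, 1.0   # Gaussian search distribution over theta
    data = []
    for _ in range(iterations):
        thetas = mu + sigma * rng.standard_normal(samples)
        costs = []
        for theta in thetas:
            K = plan(a, b)                        # model-based planning
            x_final, transitions = execute(K, theta)
            costs.append(extrinsic_cost(x_final)) # evidence for policy search
            data.extend(transitions)              # samples of the dynamics
        # Upper level (model-free): refit the search distribution to the
        # better half of the sampled parameters.
        elite = thetas[np.argsort(costs)[: samples // 2]]
        mu, sigma = elite.mean(), elite.std() + 1e-3
        a, b = fit_model(data)                    # improve the learned model
    return mu, (a, b)
```

Calling `learn()` returns the mean of the search distribution over θ together with the fitted model parameters. In this toy setting the planner leaves a steady-state offset, so the learned θ need not coincide with the extrinsic goal, which is one way to see why the intrinsic cost parameters are worth learning separately from the task cost.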