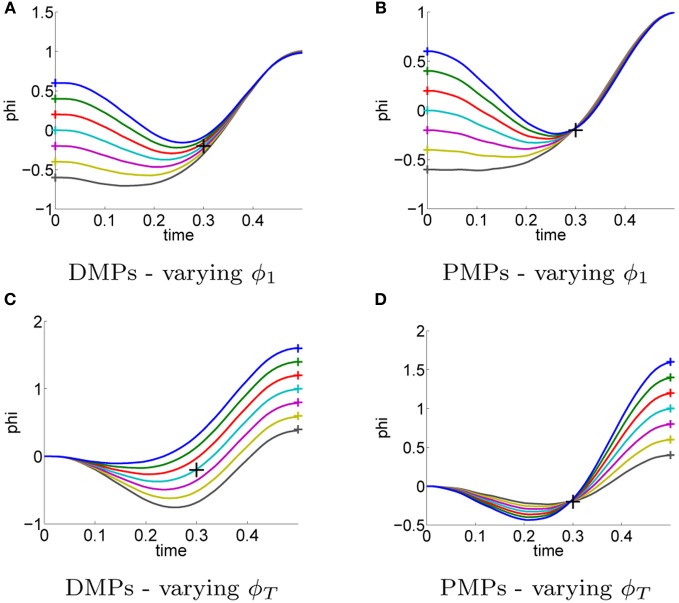

Figure 5.

For the previous task illustrated in Figure 3, the movement was learned for a single initial state ϕ1 = 0 and a single target state ϕT = 1, both of which were assumed as prior knowledge. In this experiment, we evaluated how well the learned policies generalize to different initial states ϕ1 ∈ {−0.6, −0.4, −0.2, 0, 0.2, 0.4, 0.6} (A–B) and to different target states ϕT ∈ {1.5, 1.25, 1, 0.75, 0.5} (C–D). The same parameters θ were used throughout, i.e., they were not re-learned. The mean trajectories are shown. (A,C) The DMPs are not aware of task-relevant features and hence no longer pass through the via-point. (B,D) The PMPs adapt to varying initial or target states with only small effects on passing through the learned via-point.
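Why the DMPs in panels A and C miss the via-point can be illustrated with a minimal sketch. It assumes the common discrete DMP formulation, in which a spring-damper transformation system is driven by a phase-gated forcing term scaled by (g − y0). Here y plays the role of ϕ. The weights, gains, basis-width heuristic and via-point time t_v = 0.5 are hypothetical stand-ins for the learned parameters θ, not values from this experiment. Because the DMP has no representation of the via-point, changing the goal g moves the position reached at t_v along with it.

```python
import math


def dmp_rollout(weights, y0, goal, tau=1.0, dt=0.001,
                alpha_z=25.0, beta_z=6.25, alpha_x=4.0):
    """Euler-integrate a 1-D discrete DMP with fixed weights; return positions.

    Assumed formulation (a sketch, not the paper's implementation):
      tau*dz = alpha_z*(beta_z*(g - y) - z) + f(x)
      tau*dy = z
      tau*dx = -alpha_x*x
    """
    n = len(weights)  # assumes n >= 2 basis functions
    # Gaussian basis centres spread along the decaying phase x in (0, 1].
    centers = [math.exp(-alpha_x * i / (n - 1)) for i in range(n)]
    # Width heuristic (assumption): narrower kernels at small x,
    # where the centres are packed more tightly.
    widths = [n ** 1.5 / c / alpha_x for c in centers]
    y, z, x = y0, 0.0, 1.0
    traj = [y]
    for _ in range(int(round(tau / dt))):
        psi = [math.exp(-h * (x - c) ** 2) for h, c in zip(widths, centers)]
        # Forcing term: normalised weighted basis, gated by x, scaled by (g - y0).
        f = sum(p * w for p, w in zip(psi, weights)) / sum(psi) * x * (goal - y0)
        dz = (alpha_z * (beta_z * (goal - y) - z) + f) / tau
        dy = z / tau
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        traj.append(y)
    return traj


if __name__ == "__main__":
    # Hypothetical stand-in for the fixed, previously learned parameters theta.
    theta = [0.0, 100.0, 300.0, 200.0, 50.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    via_idx = 500  # hypothetical via-point time t_v = 0.5 (dt = 0.001)
    via = dmp_rollout(theta, 0.0, 1.0)[via_idx]  # value passed in the training setup
    for goal in (1.5, 1.25, 1.0, 0.75, 0.5):
        y_v = dmp_rollout(theta, 0.0, goal)[via_idx]
        print(f"goal={goal:5.2f}  y(t_v)={y_v:.3f}  offset={y_v - via:+.3f}")
```

With y0 = 0 the whole rollout scales linearly with g, so the value at t_v is g times its training value and only coincides with the via-point for g = 1. A planning-based representation such as the PMPs instead keeps the via-point as an explicit task feature.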