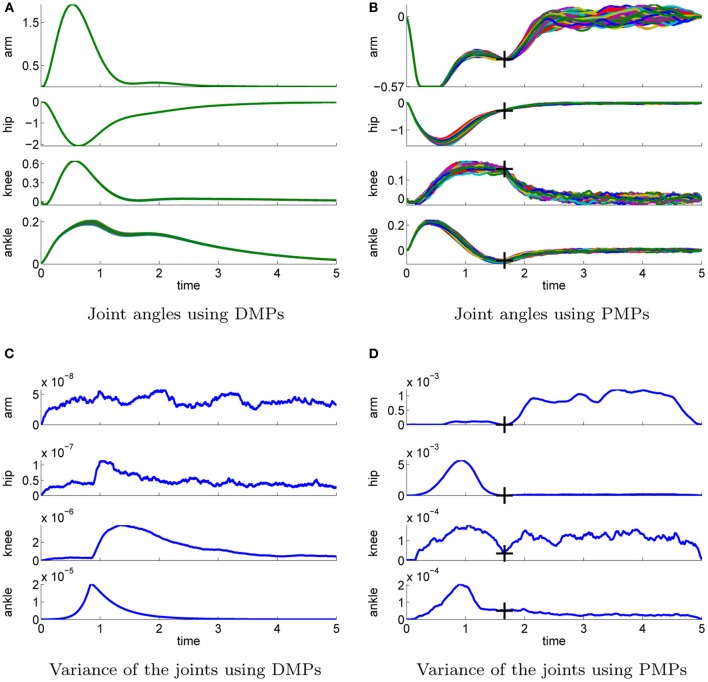

Figure 7.

This figure illustrates the best learned policies for the 4-link balancing task using DMPs (left column) and PMPs (right column). Shown are the joint angle trajectories (A–B) and the variance of these trajectories (C–D). The applied controls are illustrated in Figure 8. For each approach, we evaluated 100 roll-outs using the same parameter setting θ. The controls were perturbed by zero-mean Gaussian noise with σ = 10 Ns. PMPs can exploit the power of stochastic optimal control, so the system is controlled only when necessary; see the arm joint trajectories in (B). The learned via-points are marked by crosses in (B) and (C). For DMPs, the variance of the joint trajectories (C) is determined by the learned gains of the inverse dynamics controller. Because these gains are constant, the variance cannot be adapted during the movement and is smaller than in (D). The best available DMP policy achieved a cost of 568, whereas the best PMP policy achieved a cost of 307.
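The evaluation protocol described in the caption (repeated roll-outs with a fixed parameter setting, zero-mean Gaussian noise added to the controls, and the per-time-step variance of the resulting trajectories) can be sketched as follows. This is only a minimal illustration under stated assumptions: the 1-D point mass, the PD policy, the gains, the time step, and the names `rollout_variance`, `pd_policy`, and `point_mass_step` are hypothetical stand-ins, not the 4-link model or the DMP/PMP controllers used in the experiments.

```python
import numpy as np

def rollout_variance(policy, step, x0, n_rollouts=100, n_steps=50,
                     sigma=10.0, seed=0):
    """Run noisy roll-outs of a fixed policy; return mean and variance per time step."""
    rng = np.random.default_rng(seed)
    trajs = np.empty((n_rollouts, n_steps + 1, x0.size))
    for r in range(n_rollouts):
        x = x0.copy()
        trajs[r, 0] = x
        for t in range(n_steps):
            u = policy(x, t)
            # Perturb the control with zero-mean Gaussian noise.
            u_noisy = u + rng.normal(0.0, sigma, size=u.shape)
            x = step(x, u_noisy)
            trajs[r, t + 1] = x
    # Statistics are taken across roll-outs, separately for each time step.
    return trajs.mean(axis=0), trajs.var(axis=0)

# Hypothetical stand-in system: unit point mass with state [position, velocity].
DT = 0.01

def pd_policy(x, t, kp=100.0, kd=20.0):
    # Constant-gain PD controller driving the state to the origin (assumed gains).
    return np.array([-kp * x[0] - kd * x[1]])

def point_mass_step(x, u):
    # Semi-implicit Euler integration of a unit mass.
    qd = x[1] + DT * u[0]
    q = x[0] + DT * qd
    return np.array([q, qd])

x0 = np.array([0.1, 0.0])
mean, var = rollout_variance(pd_policy, point_mass_step, x0)
```

With constant gains, as in this stand-in, the trajectory variance follows from the noise level and the fixed feedback alone, which mirrors the remark about panel (C).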