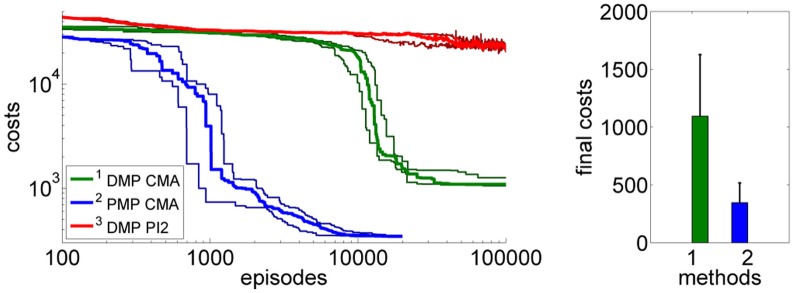

Figure 9.

Learning performance of the two movement representations, DMPs and PMPs, on the 4-link balancing task. Shown are mean values and standard deviations over 20 runs after CMA policy search. The controls (torques) are perturbed by zero-mean Gaussian noise with σ = 10 Nm. The PMPs are able to extract a characteristic feature of this task, namely a specific posture during the bending movement, shown in Figure 7B. With the proposed Planning Movement Primitives, good policies were found at least one order of magnitude faster than with the trajectory-based DMP approach. The quality of the best-found policy was also considerably better for the PMP approach (993 ± 449 for the DMPs and 451 ± 212 for the PMPs). For the DMP approach we additionally evaluated PI² for policy search, which did not find solutions as good as those found by CMA policy search.
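
As an illustration of how such statistics could be produced, the following minimal sketch perturbs a torque vector with zero-mean Gaussian noise (σ = 10 Nm) and computes the per-episode mean and standard deviation over 20 runs. The function names, the assumption that noise is drawn independently for each joint, the use of the sample standard deviation, and the placeholder learning curves are all hypothetical; they are not taken from the original experiments.

```python
import random
import statistics

SIGMA_NM = 10.0  # noise standard deviation stated in the caption
N_RUNS = 20      # number of runs stated in the caption


def perturb_torques(torques, rng, sigma=SIGMA_NM):
    """Add zero-mean Gaussian noise to each joint torque.

    Assumption: noise is independent across joints.
    """
    return [u + rng.gauss(0.0, sigma) for u in torques]


def summarize_runs(curves):
    """Return per-episode means and sample standard deviations.

    curves: list of runs, each a list of per-episode values of equal length.
    Assumption: sample (n - 1) standard deviation; the source does not say.
    """
    per_episode = list(zip(*curves))
    means = [statistics.mean(values) for values in per_episode]
    stds = [statistics.stdev(values) for values in per_episode]
    return means, stds


rng = random.Random(0)

# One torque per link of the 4-link model.
noisy = perturb_torques([0.0, 0.0, 0.0, 0.0], rng)

# Hypothetical placeholder curves, NOT data from the paper.
curves = [
    [1000.0 / (episode + 1) + rng.gauss(0.0, 5.0) for episode in range(5)]
    for _ in range(N_RUNS)
]
means, stds = summarize_runs(curves)
```

Plotting `means` with an error band of width `stds` per episode would give a curve of the kind shown in the figure, one per movement representation.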