Abstract

The current research aimed at specifying the activation time course of different types of semantic information during object conceptual processing and the effect of context on this time course. We distinguished between thematic and functional knowledge and the specificity of functional similarity. Two experiments were conducted with healthy older adults using eye tracking in a word-to-picture matching task. The time course of gaze fixations was used to assess activation of distractor objects during the identification of manipulable artifact targets (e.g., broom). Distractors were (a) thematically related (e.g., dustpan), (b) related by a specific function (e.g., vacuum cleaner), or (c) related by a general function (e.g., sponge). Growth curve analyses were used to assess competition effects when target words were presented in isolation (Experiment 1) and embedded in contextual sentences of different generality levels (Experiment 2). In the absence of context, there was earlier and shorter lasting activation of thematically related as compared to functionally related objects. The time course difference was more pronounced for general functions than specific functions. When contexts were provided, functional similarities that were congruent with context generality level increased in salience with earlier activation of those objects. Context had little impact on thematic activation time course. These data demonstrate that processing a single manipulable artifact concept implicitly activates thematic and functional knowledge with different time courses and that context speeds activation of context-congruent functional similarity.

Keywords: thematic knowledge, functional knowledge, manipulable object concepts, context, eye tracking

A core aim in work on semantic processing is to determine what type of semantic information is activated when accessing a concept and how this information is activated. Different semantic relationships may be highly and equally relevant for the same object. The challenge for researchers, then, is to assess whether the relationships are differentially processed, for example, whether one type of information is activated more quickly than another, and what factors influence the relative activation speed of the different types of semantic information.

The kinds of semantic information most studied include perceptual and functional features. Commonality in perceptual and functional features between two objects would determine the intensity of the semantic relation between them. In many studies, degree of feature overlap predicts magnitude of semantic priming and semantic competition effects (e.g., Cree, McRae, & McNorgan, 1999; Mirman & Magnuson, 2009; Vigliocco, Vinson, Lewis, & Garrett, 2004), confirming the psychological relevance of feature similarity relationships during semantic processing. Beyond feature overlap, other semantic relationships that may be relevant relate to event representations (Bonthoux & Kalénine, 2007; Estes, Golonka, & Jones, 2011; McRae, Hare, Elman, & Ferretti, 2005; Nelson, 1983, 1985), specifically, the thematic roles fulfilled by objects in an event. For example, knife and steak are semantically related because knife is typically used upon steak in the eating steak event, although they do not share any obvious important features (see Estes et al., 2011, for definition and differentiation).

Consistent with the importance of thematic relationships for semantic processing, priming between thematically related word pairs (e.g., key–door) has been demonstrated in a number of studies (Estes & Jones, 2009; Hare, Jones, Thomson, Kelly, & McRae, 2009; Mirman & Graziano, 2011; Moss, Ostrin, Tyler, & Marslen-Wilson, 1995). Knowledge about objects’ thematic relations can also be recruited on-line during sentence comprehension and constrain expectations for upcoming words (Kamide, Altmann, & Haywood, 2003; Kukona, Fang, Aicher, Chen, & Magnuson, 2011; Matsuki et al., 2011). Thematic relations can also influence explicit categorization choices. For example, when adults are given a choice between two pictures and asked to “choose the one that goes with” a target object, they tend to group object pictures thematically (Lin & Murphy, 2001).

Taken together, prior research suggests that object semantic processing is dependent on the activation of multiple types of information, in particular, information about feature similarity and information about thematic roles in events. There is even evidence that individuals differ in how they weight feature-based versus event-based relationships across tasks (Mirman & Graziano, 2011). However, these studies have not directly contrasted the processing of feature similarity relationships and thematic relationships for the same objects. Thus, a key aim of the present study was to delineate and characterize the activation of both feature similarity and thematic relationships during semantic processing of the same objects.

Such a distinction may be particularly important for understanding the semantic processing of manipulable artifact concepts (i.e., manipulable constructed objects). In contrast with natural object concepts, functional features tend to be more salient than perceptual features for artifact concepts (Cree & McRae, 2003; Farah & McClelland, 1991; Garrard, Lambon Ralph, Hodges, & Patterson, 2001; Ventura, Morais, Brito-Mendes, & Kolinsky, 2005; Vinson & Vigliocco, 2008; Warrington & Shallice, 1984). This suggests that feature similarity relationships between artifacts strongly rely on functional feature overlap (hereafter, functional similarity). Moreover, compared to nonmanipulable artifacts, thematic knowledge for manipulable artifacts (e.g., screwdriver–screw) would involve additional action information about object direct use. To illustrate, it has been shown that the automatic allocation of spatial attention typically observed when manipulable objects are presented (Handy, Grafton, Shroff, Ketay, & Gazzaniga, 2003) is particularly obvious for thematically related objects when they are displayed in a way that is congruent with action (e.g., bottle and corkscrew in action-compatible position) but less evident for familiar object associations that are not action related (e.g., spoon and fork; Riddoch, Humphreys, Edwards, Baker, & Willson, 2003). Moreover, recent neuroimaging and lesion analysis data (Kalénine et al., 2009; Schwartz et al., 2011) have shown that thematic relationships rely on regions of the temporo-parietal cortex associated with action knowledge, particularly for manipulable artifacts (Kalénine et al., 2009). Thus, for manipulable object concepts, feature similarity processing would be importantly based on function information, and thematic relationships processing would involve action information. However, it is still unclear whether function and action processing relies on distinct cognitive and neural mechanisms (Borghi, 2005; Boronat et al., 2005; Gerlach, Law, & Paulson, 2002; Kellenbach, Brett, & Patterson, 2003). Thus, evidence supporting the hypothesis that functional similarity and thematic relationship processing can be dissociated for manipulable artifact concepts might also inform about processing differences between function and action knowledge.

One study compared the identification speed of relationships based on feature similarity, particularly functional similarity (e.g., bowl–fork, hammer–pliers) and thematic relationships (e.g., bowl–toast, hammer–nail) for the same manipulable artifact targets in an explicit forced-choice task (Kalénine et al., 2009). These relationships were equivalent in terms of overall semantic relatedness. On each trial, participants had to choose, between two pictures, the one that went with the target picture. Results showed faster identification of thematic relationships compared to functional similarity relationships, suggesting different time courses of activation for the two types of information. However, these findings do not inform on whether these two types of semantic information would be differentially activated when the task does not require explicit identification of semantic relations. In addition, this study did not take into account the fact that a single manipulable artifact may have several relevant functional relationships with different objects.

Indeed, a given manipulable object (e.g., screw) may have multiple functional features that are more or less specific to exemplars of its category (e.g., used for holding things together, used for carpentry) as evidenced by property generation studies (e.g., McRae et al., 2005). Thus, the same object may share multiple functional similarity relationships at different levels of generality with various sets of objects. Using concept and property examples from McRae et al. (2005), screw is functionally similar to clamp and hammer if the function considered is “used for carpentry,” but screw is only similar to clamp if the function considered is “used for holding things together.” To what extent the activation of each of these multiple levels of functional similarity differs from the activation of thematic knowledge (e.g., “used with screwdriver”) is an open question.

The diversity of relevant semantic relationships for a given object raises the question of the flexibility of functional similarity and thematic knowledge activation. Context and goals may be important factors that impact semantic processing (Barsalou, 1991, 2003). In the above example, the functional similarity relationship between screwdriver and pliers may be processed more rapidly in the context of carpentry than in the context of tightening. There is very little known about the effect of context on thematic and functional similarity processing. A few studies suggest that a congruent context can increase the perceived functional similarity between objects (Jones & Love, 2007; Ross & Murphy, 1999; Wisniewski & Bassok, 1999). For example, Ross and Murphy (1999) showed that providing a contextual cue (e.g., breakfast foods) increased the judged similarity between objects (e.g., bacon and eggs) but did not distinguish between thematic and functional relations. Thus, the effect of contextual clues on implicit processing of thematic and functional similarity relationships has not been dissociated. Moreover, it remains uncertain whether contexts with different levels of generality could differentially affect general and specific functional similarity activation time courses.

In the light of these findings, the goals of the present study were to (a) compare the time course of activation of thematic relationships, specific functional similarity relationships, and general functional similarity relationships¹ of equal overall semantic relatedness for the same manipulable artifact targets in a task that does not require explicit identification of semantic relations and (b) evaluate the influence of contexts of different levels of generality on the activation time course of these three semantic relationships. Following Kalénine et al. (2009), we hypothesized that there would be earlier activation of thematic relationships compared to functional relationships. We further wanted to test whether a different temporal pattern of activation would be observed for specific and general functional similarity relationships, a distinction that has never been tested. Moreover, we assumed that context would influence the time course of activation of these relations. Since objects functionally similar at the specific level are also functionally similar at the general level, we expected general contexts (i.e., contexts that specify a goal compatible with a general function of the object) to speed the processing of general and specific functional similarity relationships. In contrast, objects functionally similar only at the general level do not share more specific functions. Thus, specific contexts (i.e., contexts that specify a goal compatible with a specific function of the object) would speed the processing of specific functional similarity relationships, but not general functional similarity relationships. The extent to which such contexts would affect the activation time course of thematic relationships was of secondary interest. On the one hand, thematically related objects (e.g., screwdriver–screw), although not functionally similar, can easily participate in both specific and general contexts (e.g., tightening, carpentry job). Thus, one could assume that both general and specific contexts would speed implicit processing of thematic relationships. On the other hand, if thematic and functional similarity processes are dissociable and the context emphasizes a given function, one may expect context to speed functional similarity processing, but not thematic processing.

These hypotheses were tested using eye tracking in the visual world paradigm. Eye tracking is a highly sensitive method that allows the collection of implicit and fine-grained measures of cognitive processes and was therefore perfectly suited for the effects we wanted to assess. Contrary to explicit categorization tasks, eye tracking made it possible to compare processing of different semantic relationships without requiring the identification of those relations, which, we believe, offers a more naturalistic assessment of semantic processing. Eye tracking further provides finer time course information than priming paradigms and is closer to ideal for detecting the potentially subtle effects from our manipulations of function and context levels.

In the visual world paradigm, a set of pictures with experimentally controlled relationships is presented to a participant, and eye movements are recorded while the participant locates the target given an auditory prompt. A key feature of the visual world paradigm is that, prior to target identification, distractor pictures that are related to the target in some way compete for attention and are fixated more compared to unrelated distractor pictures. This pattern is referred to as a competition effect, and the related distractors are thus considered competitors. For example, when participants hear the target word key and are presented with a four-picture display including the target object (key), a semantic competitor (lock), and two unrelated distractors (deer, apple), they look more to the lock than to the unrelated distractors before clicking on the key. This pattern reflects the activation of the information shared by the target and related distractors (keys are used on locks) when identifying the target word (key). Using this paradigm, several studies have demonstrated automatic activation of semantic (Huettig & Altmann, 2005; Huettig & Hartsuiker, 2008; Mirman & Graziano, 2011; Mirman & Magnuson, 2009; Yee, Huffstetler, & Thompson-Schill, 2011; Yee & Sedivy, 2006), phonological (Allopenna, Magnuson, & Tanenhaus, 1998), visual (Dahan & Tanenhaus, 2005; Huettig & Hartsuiker, 2008), and motor (Lee, Middleton, Mirman, Kalénine, & Buxbaum, in press; Myung, Blumstein, & Sedivy, 2006) information in response to target words. The visual world paradigm has the major advantage of informing on both the magnitude and the temporal dynamics of semantic activation (Allopenna et al., 1998; Mirman & Magnuson, 2009). The amount of extra fixations on the competitor compared to unrelated objects (i.e., the size of the competition effect) reflects semantic activation magnitude, typically related to the overall semantic relatedness between target and competitor. The time course of extra fixations on the competitor compared to unrelated objects (i.e., the shape of the competition effect) reveals the precise temporal dynamics of semantic activation and may highlight different cognitive processes.

In the present study, a manipulable artifact target noun (e.g., broom) was presented in three conditions: with a thematic competitor (e.g., dustpan) in the thematic condition, with a competitor with a similar specific function (vacuum cleaner, cleaning the floor) in the specific function condition, and with a competitor with a similar general function (sponge, cleaning the house) in the general function condition. The overall semantic relatedness between target and competitor was equivalent between conditions. Fixations over time on the different object pictures (target, competitor, unrelated objects) were recorded in each of the three conditions before target identification in the absence of context (target words presented in isolation: Experiment 1) and in the presence of contexts of different generality (target words embedded in sentences: Experiment 2). In Experiment 1, we directly compared the time course of competition effects between the three conditions. In Experiment 2, we contrasted the effect of specific and general contexts on the competition effect time course in each condition. The specific predictions regarding each experiment are detailed in the following sections.

Experiment 1

Experiment 1 was designed to examine competition effects between objects that share (a) a thematic, (b) a specific function, or (c) a general function relationship during object identification and to compare the time course of those effects. On the basis of previous studies showing semantic competition based on functional and thematic relations (Mirman & Graziano, 2011; Yee et al., 2011), we expected the three types of conceptual relationships to elicit competition effects during target word processing. Moreover, we expected these competition effects to be of similar magnitude, since we controlled the overall semantic relatedness between conditions (overall semantic relatedness norms are shown in Appendix B and below). However, since Kalénine et al. (2009) found faster identification of thematic relations compared to functional relations for manipulable artifact targets, we expected thematic knowledge to be activated earlier than functional knowledge from target nouns. Thus, we predicted that in the absence of context, competition effects would be overall earlier and more transient in the thematic than in the function conditions. We also aimed at testing whether time course differences between thematic and functional similarity processing would be as pronounced in the specific function and general function conditions.

Participants

Participants were 16 older adults² recruited from a large database of potential research subjects in the Philadelphia, PA, area maintained by the Moss Rehabilitation Research Institute. All subjects gave informed consent to participate in accordance with the Institutional Review Board guidelines of the Einstein Healthcare Network and were paid for their participation. Participants completed the Mini-Mental State Examination (MMSE; Folstein, Folstein, & McHugh, 1975) prior to the experiment. Initial inclusion criteria were set to a minimum score of 27/30 on the MMSE, normal or corrected-to-normal vision, and normal hearing. One participant was excluded because he had difficulties hearing the verbal stimuli. The final sample included 15 subjects (10 females, 5 males), with a mean age of 65 (SD = 6.5 years), a mean education level of 14 years (SD = 2 years), and a mean score of 29.5 (SD = 0.6) on the MMSE.

Materials and Methods

Stimuli

The picture stimuli were 96 color photographs of objects selected from a normative study (see Appendixes A and B), including 16 reference object pictures, 48 semantically related pictures (16 thematic, 16 specific function, 16 general function), and 32 unrelated pictures. An additional set of 139 pictures was also used for practice and filler trials. A complete list of the items is provided in Appendix A. The norms collected on these materials are summarized below and detailed in Appendix B.

Sixteen critical pictures of artifacts were selected (i.e., the reference object pictures). For each reference picture, three semantically related pictures and two unrelated pictures were designated. The type of semantic relationship was manipulated in three conditions. In the thematic condition, the competitor could be used to act upon/with the reference object (e.g., broom–dustpan). In the specific function condition, the competitor and the reference object were functionally similar at a more specific level (cleaning the floor). In the general function condition, the competitor and the reference object were functionally similar at a more general level (cleaning the house). Unrelated pictures were neither semantically nor phonologically related to the reference object.

All 96 critical pictures had at least 90% name agreement (see Name Agreement in Appendix B). Visual similarity between the reference objects and their corresponding related and unrelated objects was low and equivalent between conditions. Manipulation similarity was slightly higher in the specific function relationship condition compared to other conditions (see Visual and Manipulation Similarity in Appendix B). Consequently, manipulation similarity ratings were used as a covariate in the analysis of gaze data to be reported.

The normative ratings (see Type of Semantic Relatedness in Appendix B) confirmed that related objects in the thematic condition were consistently used to act with/upon each other. In the same way, related objects in the specific function and general function conditions were judged highly functionally similar at the specific and general levels, respectively. Moreover, related objects in the specific function condition were judged functionally similar at both the specific and general levels, while objects in the general function condition were functionally similar at the general level only, which ensured that the specific functions used (clean the floor) were subfunctions of the general ones (clean the house).

A corpus-based semantic similarity measure (COALS) was used to assess overall semantic relatedness (see Overall Semantic Relatedness in Appendix B). Related object noun pairs were more semantically similar than unrelated pairs. More importantly, overall semantic relatedness between the reference object noun and the related object nouns did not significantly differ between conditions. Thus, any difference in the pattern of gaze data between the three conditions could not be attributed to differences in the degree or amount of overall semantic relatedness but rather to differences in the type of semantic relatedness.

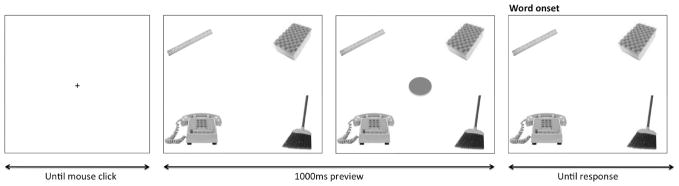

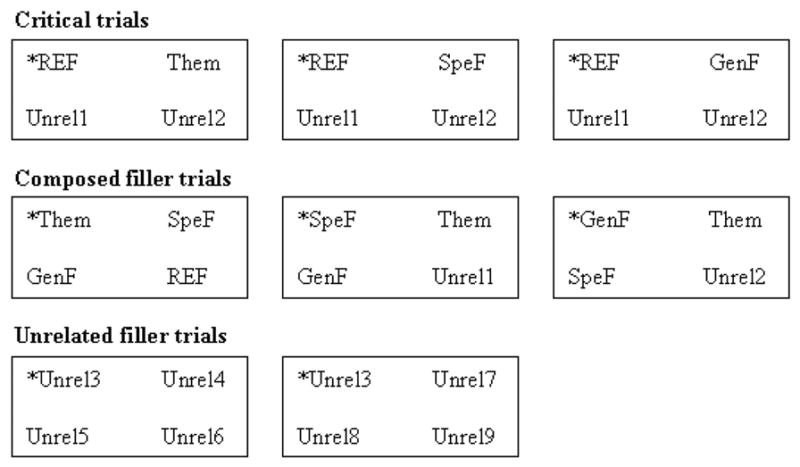

Eight 4-picture displays were derived for each reference object (see Figure 1). Three displays were used for critical trials, one in each semantic relationship condition. Three other displays were used for composed filler trials, and two served as unrelated filler trials.

Figure 1.

Illustration of the eight different four-picture displays designed for each reference object. The asterisk indicates the target picture for each display. The position of the pictures in the display was randomized but is standardized here for simplicity. Critical and composed filler trials involved the reference object (REF), the semantically related pictures in the thematic, specific function, and general function relationship conditions (Them, SpeF, and GenF, respectively) and two unrelated pictures (Unrel1 and Unrel2). Unrelated filler trials involved different unrelated pictures (Unrel3–Unrel9).

On critical trials, the reference object (e.g., broom) was always the actual target, one object was related to the target (i.e., the competitor), and the last two objects were semantically and phonologically unrelated to both the target and the competitor. The competitor was thematically related to the target in the thematic displays (e.g., dustpan), shared a specific function with the target in the specific function displays (e.g., vacuum cleaner), or shared a general function in the general function displays (e.g., sponge).

Since each target was repeated for each display type, composed filler trials were added to allow the related objects to be targets so that participants would not be able to guess which object was the target based on prior exposure. On those trials, the pictures used for critical trials were rearranged, and one of the related pictures became the target (see Figure 1). Unrelated filler trials involved novel pictures unrelated to each other, one of them being presented twice as the target. All of the critical pictures were repeated four times. Overall, pictures could be presented four times, two times, or one time. Pictures could be presented as targets between zero and three times. Overall, there were 16 × 8 = 128 trials, including 48 critical trials: 16 thematic displays, 16 specific function displays, and 16 general function displays. Ten practice trials were also designed on the same model.

The audio stimuli corresponded to the names of the 16 reference objects and 80 noncritical target objects. Average duration was 650 ms for the reference object nouns (SD = 80 ms). They were recorded by a female native speaker of American English using Audacity open source software for recording and editing sounds. Sounds were digitized at 22 kHz and their amplitude normalized.

Apparatus

Gaze position and duration were recorded using an EyeLink 1000 desktop eyetracker at 250 Hz. Stimulus presentation and response recording were conducted by E-Prime software (Psychology Software Tools, Pittsburgh, PA).

Procedure

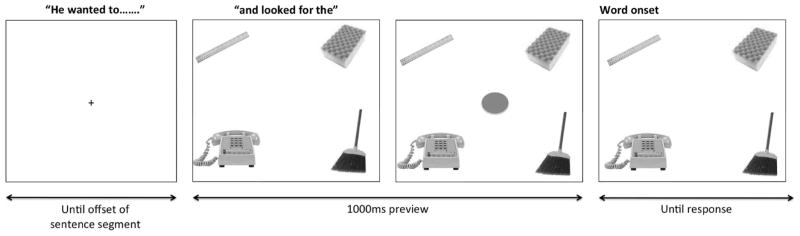

Participants were seated with their eyes approximately 27 in. from a 17-in. screen with resolution set to 1,024 × 768 pixels. They were asked to use their left hand to respond.³ To ensure that each trial began with the participant fixating the neutral central location, participants clicked on a central fixation cross to begin each trial. On each trial, participants saw four images; each image was presented near one of the screen corners. Images had a maximum size of 200 × 200 pixels and were scaled such that at least one dimension was 200 pixels. Therefore, each picture subtended about 3.5° of visual angle. The position of the four pictures was randomized. The display was presented for a 1-s preview to allow for initial fixations driven by random factors or visual salience rather than word processing. Two hundred and fifty milliseconds before the offset of the preview, a red circle appeared in the center of the screen to drive attention back to the neutral central location. Then, participants heard the target word through speakers and had to click on the image that corresponded to the target word (see Figure 2). Eye movements were recorded starting from when the display appeared on the screen and ending when the participant clicked on the target picture. The same procedure was followed for the 10 practice trials and the 128 test trials.

Figure 2.

Procedure used in each trial of Experiment 1. The display presents the target object (e.g., broom), a semantic competitor (e.g., sponge), and two unrelated objects (e.g., phone and ruler). Target words were delivered after a 1,000-ms preview of the display.

Experimental design

In this first experiment, we analyzed the proportion of fixation on a given object (dependent variable) as a function of the following independent variables: time (continuous variable); object relatedness, that is, the type of object in the display (two levels: competitor vs. unrelated); and display type, that is, the type of semantic relationship present in the display (three levels: thematic vs. specific function vs. general function).

Data analysis

Four areas of interest (AOIs) associated with the four object pictures were defined in the display. Each AOI corresponded to a 400 × 300 pixel quadrant situated in one of the four corners of the computer screen. Accordingly, fixations that fell into one of these AOIs were considered object fixations, while fixations that fell outside all of the AOIs were nonobject fixations. Note that overall, participants fixated the objects 88% of the time, confirming that they were performing the task correctly. At any moment on a single trial, a participant can either fixate an object or not; thus, fixation proportion of each AOI can be either 0 or 1 at any point in time. For each trial of each participant, we computed the proportion of time spent fixating each AOI for each 50-ms time bin. Critical trial data were averaged over items and participants to obtain a time course estimate of the fixations on the target, competitor, and unrelated objects. Data from filler trials were not analyzed. The proportion of fixations on the two unrelated objects was averaged.
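The binning step described above can be sketched as follows. This is a minimal illustration, not the authors' actual pipeline: the fixation record format `(start_ms, end_ms, aoi)`, the AOI labels, and the function names are assumptions introduced for the example. Only the 50-ms bin width and the averaging of the two unrelated objects come from the text.

```python
BIN_MS = 50  # bin width stated in the text


def bin_fixation_proportions(fixations, trial_end_ms, aois, bin_ms=BIN_MS):
    """Return {aoi: [proportion of each time bin spent fixating that AOI]}.

    fixations: list of (start_ms, end_ms, aoi) tuples (hypothetical format);
    aoi is None, or any label not listed in `aois`, for nonobject fixations.
    """
    n_bins = -(-trial_end_ms // bin_ms)  # ceiling division
    props = {aoi: [0.0] * n_bins for aoi in aois}
    for start, end, aoi in fixations:
        if aoi not in props:
            continue  # nonobject fixation: contributes to no AOI
        first = start // bin_ms
        last = min((end - 1) // bin_ms, n_bins - 1)
        for b in range(first, last + 1):
            bin_start, bin_end = b * bin_ms, (b + 1) * bin_ms
            overlap = min(end, bin_end) - max(start, bin_start)
            props[aoi][b] += overlap / bin_ms
    return props


def average_unrelated(unrelated1, unrelated2):
    """Average the time courses of the two unrelated objects, bin by bin."""
    return [(a + b) / 2 for a, b in zip(unrelated1, unrelated2)]
```

A fixation that straddles a bin boundary contributes to each bin in proportion to the time it spends there, so a per-bin value can fall between 0 and 1 even though gaze is on a single AOI at any instant.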

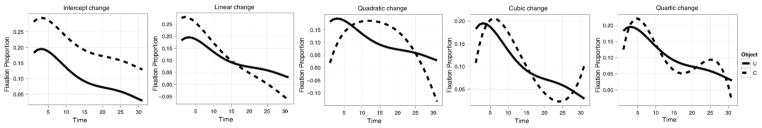

Growth curve analysis (GCA) with orthogonal polynomials was used to quantify differences in the fixation time course for semantically related pictures relative to unrelated pictures in the thematic, specific function, and general function displays during target identification (see Mirman, Dixon, & Magnuson, 2008, for a detailed description of the principles and advantages of the approach). Briefly, GCA uses hierarchically related submodels to capture the data pattern. The first submodel, called Level 1, captures the effect of time on fixation proportions using fourth-order orthogonal polynomials. The intercept term reflects the averaged height of the curve, the linear term reflects the angle of the curve, the quadratic term reflects the central inflexion of the curve, and the cubic and quartic terms reflect the inflexions at the extremities of the curve (see Figure 3). As detailed in Mirman et al. (2008) and visible on the figure, differences in competition magnitude are obvious on the intercept term. When competition magnitude is held constant, differences in competition temporal dynamics impact the other terms. The extent to which the competition is centered versus spread over the whole processing time course is captured by the quadratic term. The extent to which the competition is compressed early versus late in processing can be captured by the linear, cubic, and quartic terms.

Figure 3.

Illustration of independent intercept, linear, quadratic, cubic, and quartic differences on fixation proportion time course on competitor (C) and unrelated (U) objects.
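To make the Level 1 time terms concrete, the sketch below builds linear, quadratic, cubic, and quartic predictors that are mutually orthogonal and orthogonal to the intercept. It is an illustrative construction using Gram-Schmidt orthogonalization of centered time powers; the function names and the choice of 16 time bins in the usage note are assumptions, and the source does not state which software generated the polynomials.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def orthogonal_time_terms(n_bins, order=4):
    """Return `order` orthonormal polynomial time vectors over n_bins bins.

    Index 0 is the linear term, 1 the quadratic, 2 the cubic, 3 the quartic.
    Requires n_bins > order.
    """
    t = [i - (n_bins - 1) / 2 for i in range(n_bins)]  # centered time
    basis = [[1.0] * n_bins]  # constant term, used only for orthogonalization
    for degree in range(1, order + 1):
        vec = [x ** degree for x in t]
        # Gram-Schmidt: remove the projection onto every lower-order term.
        for prev in basis:
            coef = dot(vec, prev) / dot(prev, prev)
            vec = [v - coef * p for v, p in zip(vec, prev)]
        norm = dot(vec, vec) ** 0.5
        basis.append([v / norm for v in vec])
    return basis[1:]  # drop the constant; the model intercept plays that role
```

For example, `orthogonal_time_terms(16)` would give four predictors for a window of sixteen 50-ms bins. Because the terms are uncorrelated, the estimate for one term (say, the quadratic) can be interpreted without reference to the others, which is the property the paragraph above relies on.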

The Level 2 submodels capture the effects of experimental manipulations: object relatedness (competitor vs. unrelated), display type (thematic vs. specific function vs. general function), and the Object Relatedness × Display Type interaction. In addition, Level 2 submodels capture overall differences between participants or items with analogous submodels used in the by-subject and the by-item analyses. Since the manipulation similarity ratings collected in the normative study indicated that manipulation similarity may be particularly high for some item pairs, manipulation similarity scores were also introduced in the by-item analysis to disentangle effects of semantic similarity from effects of manipulation similarity.

Models were fit using maximum-likelihood estimation and compared using the −2LL deviance statistic (minus 2 times the log-likelihood). The change in deviance between nested models is distributed as chi-square with k degrees of freedom corresponding to the k parameters added. Such factor-level comparisons were used to evaluate the overall effects of factors (i.e., Object Relatedness, Display Type, and Object Relatedness × Display Type), and tests on individual parameter estimates were used to evaluate specific condition differences on individual orthogonal time terms.
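The model comparison logic can be illustrated with a small sketch. Given the log-likelihoods of a reduced model and of a fuller model that adds k parameters, the change in deviance is referred to a chi-square distribution with k degrees of freedom. The function names are hypothetical, and the chi-square tail probability is computed with a standard series for the incomplete gamma function so that the example needs only the standard library; a statistics package would normally be used instead.

```python
import math


def chi2_sf(x, k):
    """Upper-tail probability of a chi-square variable with k degrees of freedom.

    Uses the series expansion of the regularized lower incomplete gamma
    function; adequate for moderate x, not tuned for extreme tails.
    """
    if x <= 0:
        return 1.0
    a, z = k / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 1
    while abs(term) > 1e-15 * abs(total):
        term *= z / (a + n)
        total += term
        n += 1
    p_lower = total * math.exp(-z + a * math.log(z) - math.lgamma(a))
    return max(0.0, 1.0 - p_lower)


def deviance_test(loglik_reduced, loglik_full, k_added):
    """Return (change in -2LL, p value) for two nested models."""
    delta = -2.0 * (loglik_reduced - loglik_full)
    return delta, chi2_sf(delta, k_added)
```

As a usage illustration with made-up log-likelihoods, `deviance_test(-1001.0, -1000.0, 2)` compares a model with a reduced model after adding two parameters; a small p value would indicate that the added factor improves the fit.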

Overall, we predicted a competition effect for each display type, such that competitors would be fixated more than unrelated objects (effect of object relatedness). More crucially, we examined whether competition effect shape differed between display types. Therefore, after verifying the significance of the overall Object Relatedness × Display Type interaction, we performed paired comparisons on competition effect estimates between display types. Significant differences were not expected on the intercept term (overall competition effect magnitude). However, we predicted that competition would be shifted earlier for thematic compared to function displays and expected differences on the linear and/or cubic and/or quartic terms between thematic and function displays. We also tested the existence of competition time course differences between specific and general function displays.

Results

Participants were highly accurate in identifying the target object among distractors in all three conditions, performing at 98.5%, 98%, and 99.5% correct in the thematic, specific function, and general function displays, respectively, F(2, 28) = 1.09, p = .35. Mean mouse click reaction times from display onset were about 3,000 ms (M = 3,137, SD = 326) irrespective of display type (F < 1).

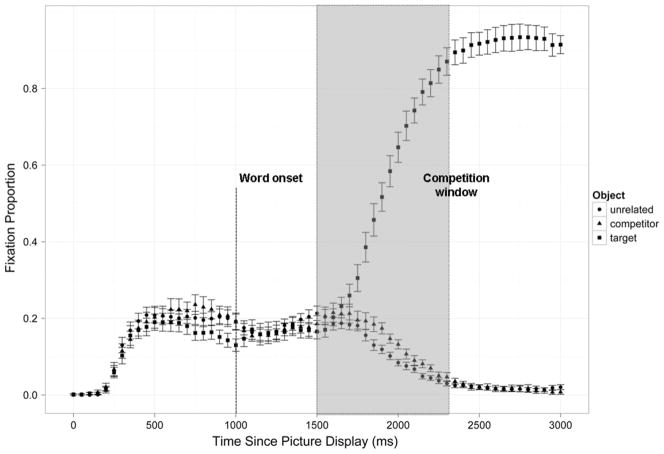

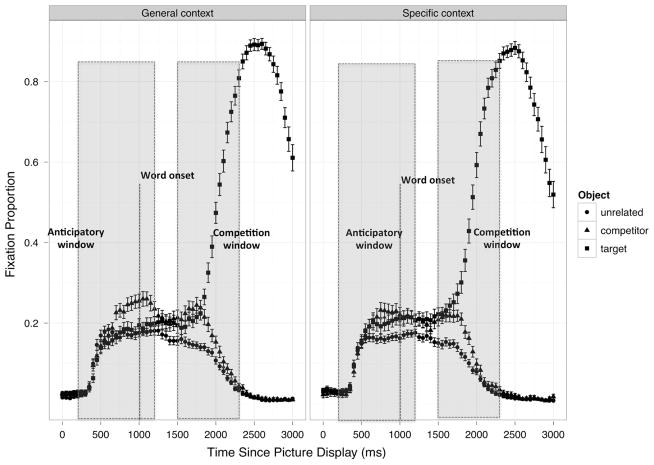

Gaze data were collected from the onset of each trial (i.e., the presentation of the four-picture display) to the end of the trial (i.e., the mouse click). No trial had to be excluded because of a lack of gaze data (track loss or off-screen fixations). Each trial received between two and 27 fixations (M = 9.5, SD = 2.8). Trials where participants made an incorrect response or the reaction time was more than three standard deviations from the participant’s condition mean (3% of the trials) were excluded from the fixation analysis. Figure 4 shows the averaged time course of fixations to the target, competitor, and unrelated objects from the presentation of the display.

Figure 4.

Mean fixation proportion (points) and standard errors (error bars) to the target, competitor, and unrelated objects as a function of time since the presentation of the picture display. The statistical analysis was computed on the data from the competition window (500–1,300 ms after word onset).

The statistical analysis was restricted to competition effects driven by linguistic input. Accordingly, we compared fixation proportion between competitors and unrelated distractors from 500 ms after word onset until 1,300 ms after word onset. This analysis window was chosen because it starts slightly before target fixation proportions begin to rise above distractor fixations (i.e., when fixations start to be driven by target word processing) and ends when the competition has been resolved and target fixation proportions have reached their ceiling.
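As an illustration of how fixation proportions within this window could be computed, the sketch below counts, for each time bin, the proportion of trials in which a given type of object was being fixated. The window limits come from the text. The 50-ms bin size, the fixation record format `(start_ms, end_ms, role)` with times relative to word onset, and the function name `fixation_proportions` are assumptions made for the example.

```python
from collections import defaultdict

WINDOW_START_MS = 500   # from the text
WINDOW_END_MS = 1300    # from the text
BIN_MS = 50             # assumption: bin size is not given in this section

def fixation_proportions(trials, roles=("competitor", "unrelated")):
    """Proportion of trials fixating each role at the start of each time bin.

    trials: list of trials, each a list of (start_ms, end_ms, role) fixations
    with times relative to target word onset (hypothetical format).
    """
    bin_starts = list(range(WINDOW_START_MS, WINDOW_END_MS, BIN_MS))
    props = {role: [] for role in roles}
    for t in bin_starts:
        counts = defaultdict(int)
        for fixations in trials:
            for start, end, role in fixations:
                if start <= t < end:   # fixation in progress at time t
                    counts[role] += 1
                    break              # at most one fixation per time point
        for role in roles:
            props[role].append(counts[role] / len(trials))
    return bin_starts, props

# Two made-up trials for illustration only.
example_trials = [
    [(0, 600, "target"), (600, 1000, "competitor"), (1000, 1500, "target")],
    [(0, 700, "unrelated"), (700, 1500, "target")],
]
bins, proportions = fixation_proportions(example_trials)
```

Whether looks to the two unrelated pictures are averaged or pooled is not specified in this section; the sketch simply pools every fixation labeled `"unrelated"`.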

Effects of object relatedness and display type

In the by-subject analysis, overall there was no effect of display type, χ2(10) = 14.87, p = .13, but an effect of object relatedness, χ2(5) = 46.38, p < .0001, reflecting more fixations to competitors than unrelated distractors. This effect was statistically significant for each of the three display types, general function: χ2(5) = 80.52, p < .0001; specific function: χ2(5) = 14.46, p < .05; and thematic: χ2(5) = 59.54, p < .0001. There was also a statistically significant Object Relatedness × Display Type interaction, χ2(10) = 70.44, p < .0001, indicating differences in the time course of competition across the three types of competitors (see Table 1, model fit, and Figure 5).

Table 1.

Results of the Object Relatedness × Display Type Interaction Model

| Term | Model fit | | | Thematic–general function | | | Specific function–general function | | | Thematic–specific function | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | LL | χ2 | p | Estimate | t | p | Estimate | t | p | Estimate | t | p |
| Intercept | 2,205 | 0.27 | .87 | 0.004 | 0.25 | .80 | 0.011 | 0.67 | .50 | −0.007 | −0.45 | .65 |
| Linear | 2,205 | 1.16 | .56 | −0.066 | −0.92 | .35 | −0.062 | −0.88 | .38 | −0.003 | 0.04 | .96 |
| Quadratic | 2,213 | 16.32 | <.001 | 0.047 | 1.78 | .08 | 0.106 | 3.98 | <.001 | −0.059 | −2.14 | <.05 |
| Cubic | 2,232 | 38.30 | <.001 | 0.156 | 5.83 | <.001 | 0.040 | 1.50 | .13 | 0.116 | 4.19 | <.001 |
| Quartic | 2,240 | 14.38 | <.001 | −0.075 | −2.83 | <.01 | −0.092 | −3.45 | <.01 | 0.016 | 0.60 | .55 |

Note. The table provides the test of the overall interaction (model fit) as well as the test of the specific comparisons between thematic and general function (left), specific function and general function (middle), and thematic and specific function (right) displays for each term of the model (intercept, linear, quadratic, cubic, and quartic). Results in boldface are presented in the text. LL = log-likelihood.

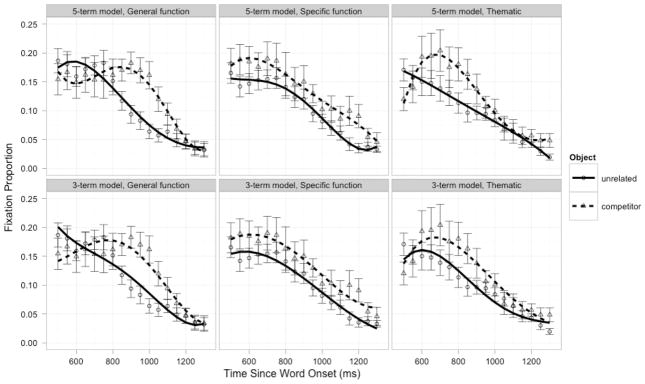

Figure 5.

Model fit (lines) of the fixation data (points = means; error bars = standard errors) from the competition time window for the general function (left), specific function (middle), and thematic (right) displays. The top panel presents the results of the full Object Relatedness × Display Type interaction model (including cubic and quartic terms). These high-order terms capture the earlier/short-lasting competition effect in the thematic display (right). The bottom panel presents the results of the interaction model without the cubic and quartic terms.

Very similar results were found in the by-item analysis that controlled for manipulation similarity between the distractor and the target object. Not surprisingly, manipulation similarity significantly improved the model fit, χ2(5) = 15.83, p < .01. Critically, the Object Relatedness × Display Type interaction still significantly improved the model fit after manipulation similarity ratings were included in the model, χ2(5) = 60.76, p < .0001. Thus, manipulation similarity differences between target and distractors across display types cannot account for competition effect time course variations. Note that visual similarity was not introduced in the model since normative data did not show any difference in object visual similarity between display types.

Direct comparison of object relatedness among display types

Significance tests on the individual parameter estimates (see Table 1) revealed that there was no difference in overall amount of competition between the display types (effects on the intercept term were not significant, all ps ≥ .5). As expected, competition effect time course in the thematic displays differed from the other displays on the higher order terms (cubic and quartic terms for thematic–general function, Table 1, left panel; cubic term for thematic–specific function, Table 1, right panel). Differences on the cubic and quartic terms captured the fact that, compared to the other display types, the competition effect in the thematic displays is concentrated in the earlier part of the time window, despite a similar magnitude.

To illustrate this, Figure 5 shows how the model (lines) would fit the raw fixation data (points) with (top) and without (bottom) these higher order terms. The thematic displays (see Figure 5, right panel) showed an earlier and more transient competition effect, and removing the higher order terms made the competition effect smaller early in the time course and larger late in the time course and, therefore, similar to the competition effects in the specific function (see Figure 5, middle panel) and general function (see Figure 5, left panel) displays.

Interestingly, the specific function displays significantly differed from the other two display types on the quadratic term (estimate = 0.106, p < .01, and estimate = −0.059, p < .05, Table 1, middle and right panels), indicating that competition with specific function competitors is indeed longer lasting than competition with thematic competitors but starts earlier than competition with general function competitors, leading to a relatively flat competition time course (see Figure 5, middle panel). This reveals that the difference between thematic and function similarity processing time courses is less pronounced for specific function displays than for general function displays.

The same pattern of results was highlighted in the by-item analysis after controlling for manipulation similarity: Thematic displays could be mostly differentiated from function displays on the higher order terms, and the two function displays differed significantly from each other on the quadratic term (all ps < .05).

Discussion

Experiment 1 revealed two main findings. First, during object identification, concepts that share a thematic relationship, a specific function, or a general function with the target concept were activated, as evidenced by a greater tendency to fixate on such distractor objects than unrelated objects. Second, activation time course differed across the three types of relations.

The existence of a competition effect in all display types indicates that in a word-to-picture matching task, thematic, specific functional, and general functional information is implicitly activated. The importance of both thematic and functional information for object concepts has been highlighted in several studies investigating object semantic structure using explicit tasks (Cree & McRae, 2003; Jones & Love, 2007; Lin & Murphy, 2001; Wisniewski & Bassok, 1999). Moreover, implicit semantic priming has been demonstrated between objects that share functional features as well as between thematically related objects (tools–instruments relationships; Hare et al., 2009; Moss et al., 1995). The current findings replicate and extend those results by showing that, while this information is not needed to complete the task (word-to-picture matching), a single object can activate various functional and thematic features.

The data also demonstrate, for the first time, that thematic and functional similarity relationships differ in their incidental activation time course. One possibility is that the earlier and more transient activation of thematic knowledge, visible in significant differences on the cubic and quartic terms, reflects the close connection between thematic knowledge and action experience. In the specific case of manipulable artifacts, thematic knowledge would make reference to action-related information about how the objects are used together (e.g., the seen and/or experienced gesture associated with sweeping the floor with a broom and dustpan). Action knowledge shows greater reliance on regions of the visuo-motor system than functional knowledge (Boronat et al., 2005; Kellenbach, Brett, & Patterson, 2003), which may translate into quicker activation of action-related information from visual stimuli. Differences in activation time course of thematic and function competitors may reflect differences in how action and function information is represented. These data suggest that the implicit activation of action and function knowledge during semantic processing of manipulable artifacts may rely on partially distinct processes.

It is worth noting that time course differences in the fixation data are not likely to be related to differences in overall relatedness between thematic and function conditions. First, target identification speed (reaction time) did not differ as a function of the type of semantic competitor present in the display. Second, according to semantic norms provided earlier (COALS), the thematic and function conditions were similar in terms of overall semantic relatedness between target and competitor object nouns. Third, the overall amount of extra fixation on competitors compared to unrelated objects did not differ between display types. Thus, it seems reasonable to argue that the temporal dynamics of competition effects between thematically and functionally related objects reflect qualitative rather than quantitative differences in semantic relatedness between objects. That is, the difference is in the type of semantic relatedness, not the overall amount of semantic relatedness.

Furthermore, the specific function competitors exhibited an intermediate pattern—relatively extended competition that started early in the time window like the thematic competitors and continued late like the general function competitors. This is the first demonstration to distinguish—in an implicit task—thematic knowledge and different levels of functional similarity. Using the fine-grained temporal resolution provided by eye-tracking measures, we showed that thematic activation is actually temporally less distinct from specific function compared to general function activation, although specific and general functional relationships are typically characterized by the same semantic determinant (i.e., functional feature similarity). This pattern suggests that processing objects sharing a specific function may involve processing of both object thematic relations and functional similarities, causing a mixture of earlier and later activation. This interpretation follows from the idea that thematic knowledge and feature similarity are both at play in semantic processing, but in a graded way, and may have different weights depending on the semantic relationship considered. This general hypothesis has already been advanced to explain children’s categorization behaviors (Blaye & Bonthoux, 2001) and neuropsychological dissociations between abstract and concrete words (Crutch & Warrington, 2010). The present findings bring new fine-grained time course evidence to this issue in the domain of object implicit semantic processing.

In Experiment 1, we examined competition between thematically related objects and objects related by a specific or a general function. We found competition driven by faster and more transient activation of thematic knowledge and slower rising and longer lasting implicit activation of functional knowledge, this difference being more pronounced for general functions. These findings suggest that in the absence of context, thematic, specific function, and general function relationships show different implicit activation time courses. An open question is whether these temporal dynamics can be modified by contexts that present an action goal compatible with either a general or a specific function of the object. We tested this hypothesis in Experiment 2.

Experiment 2

The objective of Experiment 2 was to assess whether context could shape the competition effects observed in Experiment 1. Previous work suggests that a congruent context can increase the perceived similarity between objects in explicit judgment and categorization tasks (e.g., Jones & Love, 2007; Ross & Murphy, 1999; Wisniewski & Bassok, 1999). However, it is unclear whether greater perceived similarity between two objects reflects greater activation of thematic and/or functional knowledge when processing the two objects or how it might influence the time course of activation and competition. Specifically, context influence on implicit semantic processing of distinct thematic and functional relationships has not been tested. Thus, Experiment 2 aimed at evaluating whether implicit processing of thematic and functional similarity relationships would be speeded by congruent contexts. Moreover, as different functional similarity relationships can be relevant for the same object depending on the level of generality of the function considered, we specifically assessed whether functional activation would show greater acceleration when the level of generality of context matches the level of generality of the functional relationship.

In Experiment 2, the target nouns (e.g., broom) were preceded by contextual sentences that described a situation where the goal of the actor is oriented toward a general function (general context; e.g., “he wanted to clean the house and looked for the”) or a specific function (specific context; “he wanted to clean the floor and looked for the”). Consistent with the definition of general and specific function relationships (see Type of Semantic Relatedness Norms in Appendix B), sentence-noun plausibility ratings (see Contextual Sentence-Object Plausibility in Appendix B) indicated that general function competitors (e.g., sponge) were judged highly plausible after general contexts, but not after specific contexts. In contrast, specific function competitors (e.g., vacuum cleaner) were judged highly plausible after both specific and general contexts. Thematic competitors (e.g., dustpan) were judged moderately plausible after both the specific and general contexts.

Since objects that are designated functionally similar at the general level in the stimulus set are not functionally similar at the specific level, we predicted that general contexts, but not specific contexts, would facilitate processing of general function competitors and that general function competition effects would be earlier and more transient after general than specific contexts. In contrast, as objects sharing a specific function also share the more general one, both contexts would facilitate processing of specific function competitors, and competition effect time course in the specific function condition was not expected to be sensitive to the level of generality of context.

We did not have strong a priori predictions for the impact of context generality on thematic competition effect time course. Although it certainly is an interesting topic for further research, it was not a primary focus of Experiment 2. Thematic displays were kept to evaluate context effects on thematic competition regardless of generality level, in comparison with Experiment 1. Since thematic competitors were judged congruent with both contexts, both contexts may facilitate thematic processing. Alternatively, one may expect that contexts presenting different object functions as goals would facilitate functional similarity, but not thematic processing, and that thematic competition time course would not be influenced by context at all.

Participants

Seventeen older adults recruited from the Moss Rehabilitation Research Institute database who did not take part in Experiment 1 participated in Experiment 2. All subjects gave informed consent to participate in accordance with the guidelines of the Institutional Review Board of the Einstein Healthcare Network and were paid for their participation. Participants completed the MMSE prior to the experiment. Initial inclusion criteria were set to a minimum score of 27/30 on the MMSE, normal or corrected-to-normal vision, and normal hearing. Two participants were excluded on the basis of these criteria (MMSE score < 27). The final sample included 15 subjects, 12 females and three males, with a mean age of 58 (SD = 8.5 years), a mean education level of 16.5 years (SD = 4.5 years), and a mean score of 28.9 (SD = 1.10) on the MMSE.

Material and Methods

Stimuli

Experiment 2 used the same critical picture stimuli as in Experiment 1. For each critical target, the three critical displays (thematic, specific function, general function) were repeated twice, once with the general context and once with the specific context, leading to six critical trials for each of the 16 items (96 total).

The composed filler displays used in Experiment 1 were also repeated twice, either with the same context (general OR specific) or once with each context (general AND specific) in a randomized way. Unrelated filler displays were replaced by additional composed filler displays that involved the same pictures as the ones used on critical trials but with different contextual sentences and using the unrelated filler objects as targets. This was done because the contextual sentences ruled out unrelated pictures as possible targets, so unrelated filler trials were not needed. To make sure that participants could not rule out some objects simply because they were never presented as the target, additional composed filler trials were created where the unrelated objects were presented with new congruent contextual sentences (e.g., “he wanted to take a picture and looked for the sheep”). Three extra composed filler displays were designed for each item set, each of them being repeated only once with a congruent context.

There were 96 critical trials (2 contexts × 3 display types × 16 items), 144 filler trials, and 10 practice trials. Overall, all of the pictures were repeated 10 times. The probability that a given object would be the target on a given trial varied between 0.1 and 0.6 (six presentations for critical targets, two or three for related distractors, and one or two for unrelated distractors).
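The trial counts and target probabilities above follow from simple arithmetic, sketched below. All counts are taken from the text. Reading the target probability as the number of presentations as target divided by the 10 presentations of each picture is an interpretation of the parenthetical, and the variable names are illustrative.

```python
from fractions import Fraction

# Counts stated in the text.
N_CONTEXTS, N_DISPLAY_TYPES, N_ITEMS = 2, 3, 16
N_FILLER = 144
PRESENTATIONS_PER_PICTURE = 10

critical_per_item = N_CONTEXTS * N_DISPLAY_TYPES   # six critical trials per item
critical_trials = critical_per_item * N_ITEMS      # 96 critical trials
experimental_trials = critical_trials + N_FILLER   # critical plus filler trials

# Number of times each kind of object served as the target (from the text).
times_as_target = {
    "critical target": (6,),
    "related distractor": (2, 3),
    "unrelated distractor": (1, 2),
}

# Assumed reading: probability = presentations as target / total presentations.
target_probability = {
    kind: tuple(Fraction(n, PRESENTATIONS_PER_PICTURE) for n in counts)
    for kind, counts in times_as_target.items()
}
lowest = min(p for probs in target_probability.values() for p in probs)
highest = max(p for probs in target_probability.values() for p in probs)
```

Under this reading, the lowest and highest values correspond to the 0.1 and 0.6 bounds reported above.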

The audio stimuli corresponded to the names of the 80 object pictures (most of them used in Experiment 1) and the contextual sentences. The contextual sentences were composed of two segments, a meaningful segment of variable duration describing the goal of the actor (e.g., “he wanted to clean the house”) and a segment that did not provide any relevant information for the task, that is, “and looked for the,” with a fixed duration of 1,000 ms. They were recorded by the same female native speaker of American English using Audacity open source software for recording and editing sounds. Sounds were digitized at 22 kHz and their amplitude normalized. Gaze position and duration were recorded using an EyeLink 1000 desktop eyetracker.

Procedure

Experiment 2 followed the same procedure as Experiment 1, except for the presentation of the contextual sentence. On each trial, the meaningful segment of the sentence was delivered before the presentation of the four-picture display while only the initial fixation cross was present on the screen. The display appeared at the time the task-irrelevant segment of the sentence (“and looked for the”) was delivered (see Figure 6). After a 1-s preview period corresponding to the offset of the second part of the sentence, participants heard the target word and had to click on the image that corresponded to the last word of the sentence. The instructions specified that they had to listen carefully to the whole sentence because the information at the beginning of the sentence would help them to find the correct picture quickly.

Figure 6.

Procedure used in each trial of Experiment 2. The display presents the target object (e.g., broom), a semantic competitor (e.g., sponge), and two unrelated objects (e.g., phone and ruler). Target words were delivered after a 1,000-ms preview of the display. The contextual sentence was presented before the onset of the display.

Experimental design

The proportion of fixations on a given object (dependent variable) was analyzed as a function of the following independent variables: time (continuous variable); object relatedness, that is, the type of object in the display (two levels: competitor vs. unrelated); display type, that is, the type of semantic relationship present in the display (three levels: thematic vs. specific function vs. general function); and context (two levels: general vs. specific).

Data analysis

The same GCA approach used in Experiment 1 was used in Experiment 2. The specific goal of Experiment 2 was to assess the effect of context on the Object Relatedness × Display Type interaction observed in Experiment 1, so the most critical term of the model was the three-way Object Relatedness × Display Type × Context interaction. After verifying the overall effect of adding this interaction term to the model, we directly compared the competition effects between the general and specific contexts for the three display type conditions.

Unlike Experiment 1, relevant linguistic information was provided on two occasions: The context was provided immediately before the appearance of the four-picture display, and the target word was provided 1 s after the display. In addition to the competition effects related to the identification of the target object after word onset, we expected the meaningful segment of the contextual sentence to drive anticipatory looks to context-relevant objects (including the competitor) in the display before hearing the target word. Such anticipatory effects have previously been described in studies investigating eye movements toward visual stimuli during sentence processing (Altmann & Kamide, 1999, 2009; Kamide, Scheepers, & Altmann, 2003; Kukona et al., 2011). Thus, the competitor was expected to receive more looks than unrelated pictures after but also before target word onset.

Accordingly, we tested the existence of an Object Relatedness × Display Type × Context interaction both during the display preview (after presentation of the context and before presentation of the target word: anticipatory window) and after target word onset (competition window). As in Experiment 1, only competitor and unrelated objects were considered in the analysis of anticipatory and competition effects. In addition, the goal of Experiment 2 was to test the effect of context on the three patterns of competition effects highlighted in Experiment 1. Therefore, planned comparisons were restricted to comparison of competition effect curves between the two contexts in each display type separately (general function, specific function, thematic). We expected competition effects to be earlier rising and more transient after general context than specific context in general function displays, but not in specific function and thematic displays.

In Experiment 2, we hypothesized that contextual influences on competitor fixation time course would reflect sensitivity of semantic information activation to the real-world events conveyed by contextual sentences. To disentangle sentence-noun plausibility from event activation impact on semantic processing time course, sentence-noun plausibility ratings were introduced as a covariate in the by-item analysis of fixation data of the anticipatory time window (before word onset) and competition time window (after word onset).

Moreover, it was important to assess anticipatory and competition effects separately. Competition effect time course during target word identification was assumed to reflect the dynamics of semantic information activation from object nouns. However, competitor fixation time course during target identification may be at least partially related to the extent to which these competitors have been anticipated before target word onset. In other words, variations in the competition effects after target word onset could derive from differential anticipatory effects, rather than (or in addition to) target word processing. To control for this possibility, the amount of anticipatory fixation on the different objects was also introduced in the by-item analysis of the competition window fixation data (after word onset).

Results

Participants were highly accurate in identifying the target object among distractors in all conditions (M = 99%, SD = 0.3%), with no significant differences between display types or between contexts and no interaction between context and display type. Mean mouse click reaction time from display onset was 2,937 ms (SD = 285). There was an effect of display type on reaction times, F(2, 28) = 4.73, p < .05, with longer reaction times for the specific function displays than for the other two (p < .05), as well as an effect of context, F(1, 14) = 9.2, p < .01, with shorter reaction times in the specific than in the general context. However, there was no interaction between context and display type, F(2, 28) < 1.

Gaze data were collected on each trial from the onset of the trial (i.e., the onset of the contextual sentence) to the end of the trial (i.e., the mouse click), even though fixations before the appearance of the picture display were not informative. No trial had to be excluded because of a lack of gaze data (track loss or off-screen fixations). Each trial received between three and 35 fixations (M = 13.4, SD = 3.5). Trials where participants made an incorrect response or the reaction time was more than three standard deviations from the participant’s condition mean (1% of the trials) were excluded from the fixation analysis.
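The exclusion rule can be sketched as follows for the trials of one participant in one condition. The function name `keep_trial_mask` is hypothetical, and two details are assumptions not stated in the text: the use of the sample standard deviation, and the computation of the mean and standard deviation over all trials of the cell, including incorrect ones.

```python
from statistics import mean, stdev

def keep_trial_mask(reaction_times, correct, n_sd=3.0):
    """Flag trials to keep for one participant in one condition.

    A trial is kept if the response was correct and its reaction time lies
    within n_sd standard deviations of the cell mean.
    """
    cell_mean = mean(reaction_times)
    cell_sd = stdev(reaction_times)  # assumption: sample standard deviation
    return [
        ok and abs(rt - cell_mean) <= n_sd * cell_sd
        for rt, ok in zip(reaction_times, correct)
    ]

# Made-up reaction times (ms): fifteen typical trials and one very slow trial.
example_rts = [3000] * 15 + [9000]
example_correct = [True] * 16
example_mask = keep_trial_mask(example_rts, example_correct)
```

With few trials per cell, a single extreme value inflates the standard deviation it is compared against, so a three-standard-deviation criterion can only flag a trial when the cell contains enough observations.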

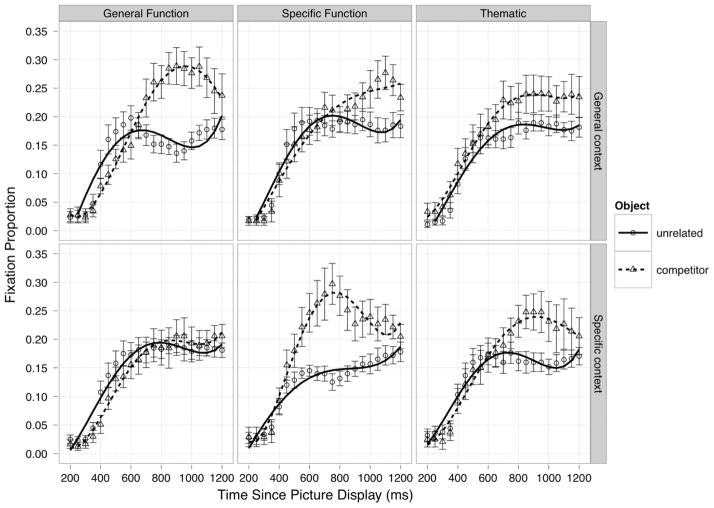

Figure 7 shows the averaged time course of fixations to the target, competitor, and unrelated objects from the presentation of the display after hearing either general contextual sentences or specific contextual sentences. Separate statistical analyses were computed on the two different portions of the gaze data. Fixation proportions to the different objects were compared during the anticipatory time window before any target-driven fixation could be made (200–1,200 ms after the presentation of the display) and during the competition time window following target word onset (500–1,300 ms after word onset, as in Experiment 1, corresponding to 1,500–2,300 ms after the presentation of the display).

Figure 7.

Mean fixation proportion (points) and standard errors (error bars) to the target, competitor, and unrelated objects as a function of time since the presentation of the picture display after hearing the general context (left) or the specific context (right). The statistical analysis was computed on the data from the anticipatory window (200–1,200 ms after display onset) and on the data from the competition window (500–1,300 ms after word onset).

Anticipatory effects

Effects of object relatedness, display type, and context

In the by-subject analysis, there was an anticipatory effect of object relatedness—competitor versus unrelated, χ2(5) = 123.33, p < .0001—reflecting that there were more fixations to competitors than unrelated objects, which replicates and extends previous findings of anticipatory fixations (e.g., Altmann & Kamide, 1999). More critically, there was a statistically significant Object Relatedness × Display Type × Context interaction, χ2(10) = 103.59, p < .0001. The interaction reached significance on the intercept, χ2(2) = 18.91, p < .001, confirming differences in the amount of anticipatory looks to competitor versus unrelated objects between the six experimental situations (see Table 2 and Figure 8).

Table 2.

Results of the Object Relatedness × Display Type × Context Interaction Model on the Fixation Data From the Anticipatory Window

| Term | Model fit | | | Specific vs. general context: competitor–unrelated | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | | General function | | | Specific function | | | Thematic | | |
| | LL | χ2 | p | Estimate | t | p | Estimate | t | p | Estimate | t | p |
| Intercept | 4,923 | 18.91 | <.001 | −0.051 | −3.21 | <.01 | 0.048 | 2.73 | <.01 | −0.009 | −0.65 | .52 |
| Linear | 4,926 | 4.82 | .09 | −0.164 | −2.57 | <.01 | −0.050 | −0.67 | .50 | 0.077 | 0.84 | .40 |
| Quadratic | 4,948 | 44.19 | <.0001 | 0.050 | 1.66 | .10 | −0.23 | −7.54 | <.001 | −0.026 | −0.83 | .41 |
| Cubic | 4,962 | 27.63 | <.0001 | 0.16 | 5.20 | <.001 | 0.001 | 0.04 | .97 | −0.064 | −2.02 | <.05 |
| Quartic | 4,967 | 10.42 | <.01 | 0.022 | 0.73 | .46 | 0.113 | 3.69 | <.001 | −0.025 | −0.78 | .43 |

Note. The table provides the test of the overall interaction (model fit) as well as the test of the specific comparisons between the two contexts for the general function (left), specific function (middle), and thematic (right) displays for each term of the model (intercept, linear, quadratic, cubic, and quartic). Results in boldface are presented in the text. LL = log-likelihood.

Figure 8.

Model fit (lines) of the fixation data (points = means; error bars = standard errors) from the anticipatory time window after hearing the general (top) and specific (bottom) contexts for the general function (left), specific function (middle), and thematic (right) displays.

In the by-item analysis, adding sentence-noun plausibility did not significantly improve the model fit of the data, χ2(5) = 4.21, p = .52, but adding the Object Relatedness × Display Type × Context interaction did, χ2(10) = 101.06, p < .0001. Thus, the differential influence of context on anticipatory fixations was not due to variations in sentence-noun plausibility.

Direct comparison of context effects on anticipation in each display type

In the by-subject analysis, the comparison of the pattern of fixations between the two contexts in each display type (general function, specific function, thematic) revealed important differential effects of general versus specific contexts across the two function displays (see Figure 8). There were more looks to competitor objects in the general function displays when participants heard general contextual sentences in comparison to specific contextual sentences (intercept: estimate = −0.051, t = −3.21, p < .01; see Table 2, left panel). This anticipatory pattern reversed in the specific function displays: There were more looks to competitor objects when participants heard specific contextual sentences in comparison to general contextual sentences (intercept: estimate = 0.048, t = 2.73, p < .01; see Table 2, middle panel). Finally, there was no effect of context on the overall amount of anticipatory fixations in the thematic displays (see Table 2, right panel). The same pattern of results was observed in the by-item analysis (all ps < .05).

Overall, the influence of context was clearly visible in the amount of anticipatory fixations to competitors (intercept). It was also noticeable in the shape of the anticipatory fixation curves with differences on the linear term (general function displays), on the quadratic term (specific function displays), and on the cubic and quartic terms (all display types), which are not detailed here but are presented in Table 2.
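The separation between overall amount (intercept) and curve shape (linear through quartic terms) can be illustrated with a minimal sketch. It assumes orthogonal polynomial time terms, as is common in growth curve analysis, and a hypothetical window of 17 time bins. The function name and the bin count are illustrative assumptions, not details taken from the analysis reported here.

```python
def orthogonal_polynomials(n_bins, order=4):
    """Return `order` orthonormal polynomial time terms over n_bins time bins.

    Built by Gram-Schmidt on powers of a centred bin index, so each term is
    orthogonal to the constant (intercept) and to all lower-order terms.
    """
    if n_bins <= order:
        raise ValueError("need more time bins than polynomial terms")
    t = [i - (n_bins - 1) / 2.0 for i in range(n_bins)]  # centred time index
    basis = [[1.0] * n_bins]  # constant column, used only for orthogonalising
    for k in range(1, order + 1):
        col = [x ** k for x in t]
        # Remove the part of this power already explained by earlier columns.
        for b in basis:
            coef = sum(c * v for c, v in zip(col, b)) / sum(v * v for v in b)
            col = [c - coef * v for c, v in zip(col, b)]
        norm = sum(c * c for c in col) ** 0.5
        basis.append([c / norm for c in col])
    return basis[1:]  # linear, quadratic, cubic, quartic


# Hypothetical window of 17 bins (an assumption for illustration only).
terms = orthogonal_polynomials(17)
linear, quadratic, cubic, quartic = terms
```

Because every term sums to zero across the window, a difference on the linear or higher terms reflects a change in the shape of the fixation curve rather than in its average height, which is carried by the intercept.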

Competition effects

Effects of object relatedness, display type, and context

In the by-subject analysis following word onset, competition effect differences across competitor types were modulated by context, Object Relatedness × Display Type × Context interaction: χ2(10) = 31.53, p < .001. This three-way interaction did not reach significance on the intercept term, suggesting differences in the time course of fixations rather than on overall fixation proportions. Specifically, the effect was strongest on the cubic term, χ2(2) = 24.29, p < .0001, suggesting differences at the extremities of the curves (see Table 3 and Figure 9).

Table 3.

Results of the Object Relatedness × Display Type × Context Interaction Model on the Fixation Data From the Competition Window

Estimate, t, and p columns under each display type report the specific vs. general context comparison (competitor–unrelated).

| Term | Model fit: LL | Model fit: χ2 | Model fit: p | General function: Estimate | General function: t | General function: p | Specific function: Estimate | Specific function: t | Specific function: p | Thematic: Estimate | Thematic: t | Thematic: p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intercept | 4,527 | 2.85 | .24 | −0.012 | −0.62 | .53 | −0.022 | −1.37 | .17 | 0.008 | 0.34 | .73 |
| Linear | 4,528 | 1.73 | .42 | 0.064 | 0.84 | .40 | −0.073 | −1.07 | .28 | −0.010 | −0.13 | .90 |
| Quadratic | 4,529 | 2.37 | .30 | 0.051 | 2.10 | <.05 | 0.031 | 1.21 | .22 | −0.003 | −0.12 | .90 |
| Cubic | 4,541 | 24.29 | <.0001 | −0.070 | −2.91 | <.01 | 0.096 | 3.73 | <.001 | −0.034 | −1.34 | .18 |
| Quartic | 4,541 | 0.28 | .87 | 0.001 | 0.06 | .95 | 0.020 | 0.77 | .44 | 0.013 | 0.53 | .59 |

Note. The table provides the test of the overall interaction (model fit) as well as the test of the specific comparisons between the two contexts for the general function (left), specific function (middle), and thematic (right) displays for each term of the model (intercept, linear, quadratic, cubic, and quartic). Results in boldface are presented in the text. LL = log-likelihood.
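As a reading aid for the model fit columns of Table 3, the following sketch recomputes p values from the reported χ2 statistics. It assumes that each term was tested with 2 degrees of freedom (as stated in the text for the cubic term) and that terms were added to the model sequentially. Both are assumptions, and the helper names are illustrative.

```python
import math


def chi2_sf_df2(x):
    """Upper-tail p value of a chi-square statistic with 2 degrees of freedom.

    For df = 2 the survival function has the closed form exp(-x / 2).
    """
    return math.exp(-x / 2.0)


def lr_statistic(ll_reduced, ll_full):
    """Likelihood-ratio statistic from the log-likelihoods of nested models."""
    return 2.0 * (ll_full - ll_reduced)


# Chi-square values reported per term in Table 3 (df = 2 assumed for each).
reported = {"intercept": 2.85, "linear": 1.73, "quadratic": 2.37,
            "cubic": 24.29, "quartic": 0.28}
p_values = {term: chi2_sf_df2(x) for term, x in reported.items()}

# Under sequential addition, the per-term statistics should sum to roughly
# the overall 10-df interaction test reported in the text (31.53).
total = sum(reported.values())

# LL values in Table 3 are rounded to integers, so this only approximates
# the reported cubic statistic of 24.29.
approx_cubic = lr_statistic(4529, 4541)
```

With these assumptions the per-term statistics sum to about 31.52, in line with the overall interaction test, and the jump in log-likelihood between the quadratic and cubic rows gives a statistic of about 24, close to the reported value.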

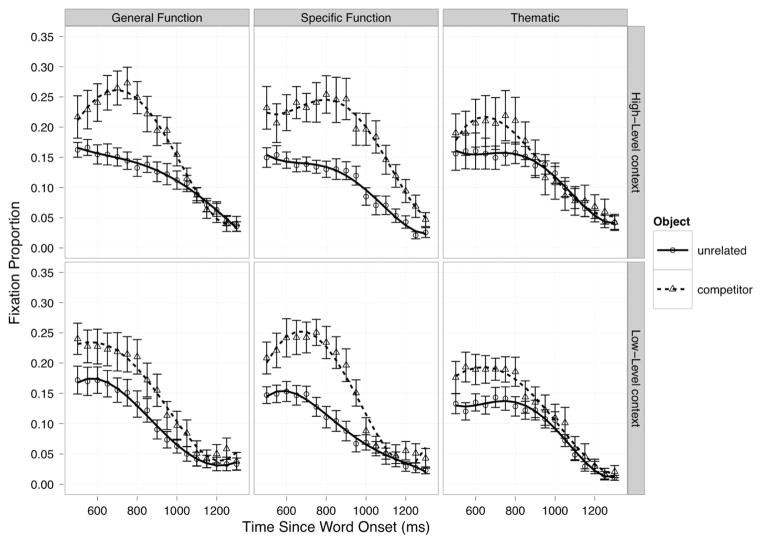

Figure 9.

Model fit (lines) of the fixation data (points = means; error bars = standard errors) from the competition time window after hearing the general (top) and specific (bottom) contexts for the general function (left), specific function (middle), and thematic (right) displays.

In the by-item analysis, model fit of fixation data from the competition time window was improved after adding anticipatory fixations, χ2(5) = 124.69, p < .0001, and sentence-noun plausibility ratings, χ2(5) = 37.76, p < .0001, to the model. Critically, after these covariates were taken into account, the Object Relatedness × Display Type × Context interaction still significantly improved the model fit, χ2(10) = 37.71, p < .0005.

Direct comparison of context effect on competition in each display type

Paired comparisons between the two contexts for each display type (general function, specific function, thematic) indicated that the contexts had opposite effects on competition effect curves in the general function and specific function displays. The competition effect was shifted earlier after general compared to specific context in general function displays, as reflected by significant differences on the quadratic term (specific–general context: estimate = 0.051, t = 2.10, p < .05) and cubic term (specific–general context: estimate = −0.070, t = −2.91, p < .01; see Table 3, left panel). In contrast, the competition effect was compressed earlier after specific compared to general context in specific function displays, as reflected by a significant difference in the opposite direction on the cubic term (specific–general context: estimate = 0.096, t = 3.73, p < .001; see Table 3, middle panel). That is, the effects of context on the cubic term were in opposite directions (negative for the general function displays and positive for the specific function displays), indicating that the contexts had opposite effects on activation of general and specific function relations. Competition effect curves in the thematic displays did not significantly differ between the two contexts (see Table 3, right panel).

The same pattern of results emerged in the by-item analysis, controlling for anticipatory fixations and sentence-noun plausibility (all ps < .05). Thus, competition effect time course variations between contexts observed during target word identification cannot be explained by these factors. We return to the possible accounts of the contextual effects observed in the Discussion section of this experiment.

Comparison between Experiments 1 and 2

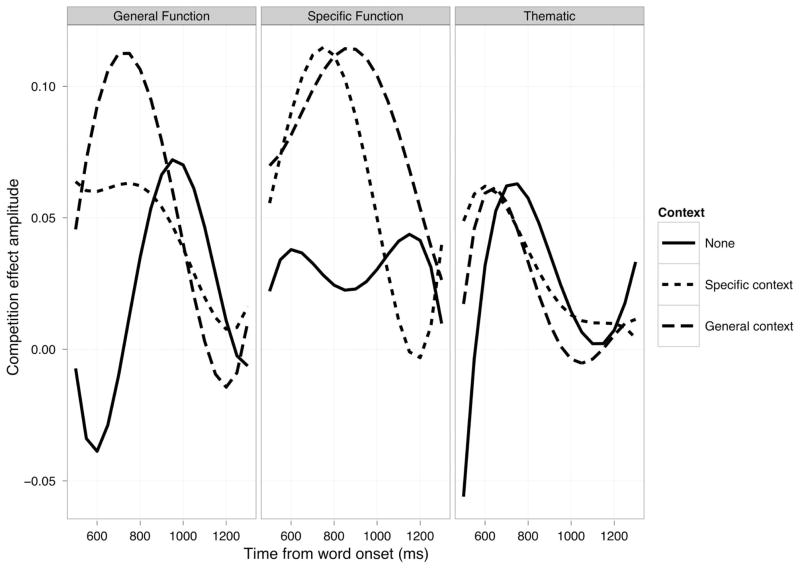

Figure 10 provides an illustration of the competition effects (competitor–unrelated) for the competition time window (500–1,300 ms after target word onset) for each of the three display types (general function, specific function, thematic) and experiments (Experiment 1: no context; Experiment 2: general context, specific context).

Figure 10.

Competitor–unrelated raw proportion of fixations (i.e., competition effect amplitude) for the general function (left), specific function (middle), and thematic (right) displays when the target word was presented in the absence of context (Experiment 1) and with general context and with specific context (Experiment 2). Note that at target word onset + 500 ms (competition window), participants had already received linguistic information in Experiment 2, but not in Experiment 1.
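The competition effect amplitude plotted in Figure 10 is a simple difference score, which the following minimal sketch makes explicit. The fixation proportions and bin times below are made-up toy values chosen for illustration. They are not data from either experiment, and the function name is hypothetical.

```python
def competition_effect(competitor, unrelated):
    """Per-bin difference in fixation proportion (competitor minus unrelated)."""
    if len(competitor) != len(unrelated):
        raise ValueError("curves must cover the same time bins")
    return [c - u for c, u in zip(competitor, unrelated)]


# Made-up fixation proportions for five time bins (NOT data from the study).
bins_ms = [500, 700, 900, 1100, 1300]
competitor = [0.10, 0.16, 0.14, 0.09, 0.06]
unrelated = [0.10, 0.11, 0.10, 0.08, 0.06]

effect = competition_effect(competitor, unrelated)

# Time bin at which the toy competition effect is largest.
peak_bin = bins_ms[max(range(len(effect)), key=effect.__getitem__)]
```

In this toy example the difference peaks in the second bin and returns to zero by the end of the window, which is the kind of early and transient profile described in the text.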

For general function competitors (see Figure 10, left panel), the presentation of the context increased overall fixation proportion for competitors compared to unrelated objects, particularly when the context was general. Furthermore, the context tended to modulate the shape of the fixation time course toward earlier shifted and more transient competition effects. A similar pattern emerged for specific function competitors (see Figure 10, middle panel): The tendency to fixate competitors compared to unrelated objects was increased in the presence of context, and context’s biggest influence was on the fixation curve inflexion points. That is, when the target word was presented in isolation, the competition was spread over the whole time window, but the effect was brought closer to word onset in the presence of context. This tendency was even more pronounced after specific contexts, where the competition effect was most compressed toward word onset. Finally, the context did not substantially affect the competition effects observed in the thematic displays (see Figure 10, right panel).

Discussion

The results from Experiment 2 revealed that context modulated temporal activation dynamics of different types of semantic information during object identification. Critically, the context differentially affected object fixations in the specific function and general function displays. Semantic competitors were more anticipated than unrelated objects when the level of their functional relationship was congruent with the level of generality of the context sentence, that is, specific context for the specific function displays and general context for the general function displays (significant differences on the intercept term). Similarly, the results observed during the competition window demonstrated earlier and shorter lasting competition effects when the level of the functional similarity between target and competitor matched the level of generality of the contextual sentence (significant differences on the cubic term). We now turn to a discussion of various accounts of the obtained contextual effects.

Source of contextual effects

First, one might argue that sheer lexical form associations between individual words can explain the pattern of contextual effects observed. For example, vacuum cleaner and floor are read or heard together in sentences more often than vacuum cleaner and house. Thus, vacuum cleaner competes with broom earlier after hearing “he wanted to clean the floor” than “he wanted to clean the house.” However, a recent study using the visual world paradigm elegantly demonstrated that sheer lexical form associations do not induce competition effects in this kind of paradigm (Yee, Overton, & Thompson-Schill, 2009). In their experiment, Yee et al. (2009) reported competition effects between semantically related objects (e.g., ham–eggs) but not between mere lexical form associations (e.g., iceberg–lettuce). This supports the contention that competition effects between semantically related words (that are typically also lexically associated) cannot be reduced to effects of lexical form associations. Thus, it is unlikely that the contextual effects on competitor fixations obtained here were due to lexical form associations between individual words of the sentence and object nouns.

A second possibility is that contextual sentence effects on competitor fixations were observed because of semantic priming between individual words. If so, sentence comprehension should be sensitive to semantic relationship strength between individual words. Glenberg and Robertson (2000) have shown that this is not the case using sentences describing novel events. Participants’ discrimination between sentences describing plausible or implausible novel events such as “Mike was freezing . . . . he bought a newspaper/matchbox to cover his face” was related to participants’ envisioning judgments of the event described in the sentence (i.e., covering face with newspaper is easier to imagine than covering face with matchbox) but not to strength of semantic relations between individual words (e.g., face is equally related to newspaper and matchbox). Even more closely related to the procedure used in the present study, Kukona et al. (2011) nicely demonstrated that during sentence comprehension (e.g., “Toby arrests the . . . .”), fixations following verb presentation increase overall for semantically related objects (e.g., crook, policeman) compared to unrelated objects, but to a greater extent for a sentence-appropriate patient (e.g., crook). These findings indicate that contextual sentence effects on object fixations are not reducible to lexico-semantic priming between individual words.

A third explanation would be that earlier competitor fixations after word onset in the presence of a given context reflect not earlier activation of target object semantic properties that are relevant for the event described in the sentence but a greater contextual sentence-competitor noun plausibility. For example, when hearing broom, there would be earlier looks to sponge when the contextual sentence was “he wanted to clean the house and looked for the . . . .” compared to “he wanted to clean the floor and looked for the . . . .” because sponge is more plausible after “he wanted to clean the house and looked for the . . . .” However, the pattern of contextual effects observed was still significant after controlling for sentence-noun plausibility differences. Even more compelling, the ratings showed that general function competitors are less plausible after general than specific contextual sentences but that specific function competitors are equally plausible after the two contextual sentences. The pattern of gaze data observed before and after word onset does not show the asymmetry obtained in the ratings. In both time windows, there was a symmetric influence of context: greater and earlier fixations to general function competitors after general contextual sentences and greater and earlier fixations to specific function competitors after specific contextual sentences. We return to the discrepancy between explicit and implicit results in the General Discussion.

Finally, earlier competitor fixations after word onset following a given contextual sentence could be due to greater competitor anticipation before knowing which object would be the target. Greater competitor anticipation would be reflected by greater fixations on this object before the target word is heard (i.e., increased anticipatory fixations). Although anticipatory fixations have been related to differences in object noun plausibility given the contextual sentence (Altmann & Kamide, 1999), sentence-noun plausibility ratings did not predict anticipatory fixations in our experiment. This might be explained by the fact that target nouns were immediately preceded by a 1-s noninformative clause (“and looked for the”) common to all contextual sentences. Eye movements, but not explicit ratings, might be sensitive to the 1-s delay between meaningful clause (e.g., “he wanted to clean the floor”) and object noun (e.g., “broom”) presentation. Regardless, amount of anticipatory fixations did not entirely account for competition effect time course differences after word onset.

Taken together, these results argue that, in Experiment 2, contextual sentences activated event representations, which modulated the time course of activation of different semantic relationships.

Context-independent activation of thematic knowledge