Abstract

A picture can be a powerful communication tool. However, creating pictures to illustrate patient instructions can be a costly and time-consuming task. Building on our prior research in this area, we developed a computer application that automatically converts text to pictures using natural language processing and computer graphics techniques. After iterative testing, the automated illustration system was evaluated using 49 previously unseen cardiology discharge instructions. The completeness of the system-generated illustrations was assessed by three raters using a three-level scale. On average, the raters judged about 66 percent of the text to be correctly represented in the pictographs, and overall inter-rater agreement was about 73 percent. Since illustration in this context is intended to enhance rather than replace text, these results support the feasibility of automated illustration.

Introduction

Prior research has shown that complementing patient instructions with illustrations has the potential to enhance the recall and comprehension of those instructions1–3. Sometimes, a picture is worth a thousand words.

The effective use of pictures is complicated by the fact that, in most cases, their interpretation cannot rely on pre-established conventions, as verbal communication can. A picture can mean many different things depending on the context and on the cultural background of the intended audience. Presently, no comprehensive, high-quality library of illustrations exists in the health domain. Manually creating and testing illustrations is costly and time-consuming, and it requires graphic skills that clinical and research teams usually lack.

In this paper, we describe a project to facilitate the use of pictures in patient instructions through the development of a computer application that automatically converts text to pictures using natural language processing (NLP) and computer graphics techniques. Research on this kind of automatic conversion is sparse and, to our knowledge, has not previously been attempted in the health care domain.

Background

Patient Instructions

Patient instructions, oral or written, are an essential tool for patients to manage their own care. In inpatient settings, discharge instructions are routinely provided and orally explained by nurses or physicians. In outpatient settings, written after-visit summaries containing instructions are becoming more common.

A major problem arises when patients do not understand and cannot remember the instructions4, 5. Past studies have shown that more than half of patients do not fully understand the content of their instructions6, and that patients forget half of what the doctor told them within 5 minutes after the consultation7. Sanderson et al. conducted telephone interviews with patients with acute myocardial infarction (AMI) and found that fewer than half of them could remember their diagnosis and the associated risk factors8. Crane studied patient comprehension in the emergency department discharge setting and reported that only 60% of instructions were recognized correctly9.

The problem is partly attributable to the low health literacy of the patient population. The Institute of Medicine (IOM) reports that almost half of Americans have an inadequate or poor level of health literacy10. This presents a significant barrier to patients’ ability to understand medical information and manage their own health.

The prevalence of poor health literacy is difficult to change in the short term. What can be changed is the way instructions are given to patients, and more specifically the content of the materials, so as to improve readability. Adding illustrations, for example, is one way to enhance comprehension6, 11, 12.

Use of Illustrations in Patient Information and Education Materials

In health communication research, pictographs have been shown to improve patient comprehension and recall. Research has indicated that illustrations especially improve the recall of conceptual knowledge and problem-solving information by individuals who have little expertise in the subject matter13. Thus, pictographs are particularly helpful for conveying medical information to a lay audience. A study by Kools et al. showed that adding pictures to two existing textual instructions for asthma devices (an inhaler chamber and a peak flow meter) significantly improved both patient recall and performance of the instructions14. In another study, Morrow et al. reported that subjects answered questions regarding dose and time information more quickly and more accurately when icons were present in the medication instructions15. Similarly, Kripalani et al. designed an illustrated medication schedule using pill images and icons; nearly all patients in the trial considered the illustrated schedule helpful for managing their medications16.

However, not all illustrations lead to better comprehension and recall17. Hwang et al. showed that adding icons to medication instructions did not improve patient comprehension18. A study by Mayer and Gallini demonstrated that only a particular category of illustrations (parts and steps) improved recall of conceptual information, and not of nonconceptual information19. This highlights the need to develop effective, high-quality pictographs.

Automated Illustration

Most of the above-mentioned studies used manually generated pictographs. Manually creating pictographs, however, is neither a sustainable nor a generalizable solution. We are not aware of any prior automated illustration work in the biomedical domain.

In computer science research, related efforts include image retrieval, text-to-picture, and text-to-scene studies. Delgado et al. proposed an image retrieval method to illustrate news stories20. The Story Picturing Engine is another image retrieval system; it searches and ranks images from a database21. These two systems focus on selecting the most relevant images but do not compose new ones. The Word2Image system by Li et al. is a variation on image retrieval22: it can generate a summarized image composed of relevant images according to semantic topics.

In the past several years, more sophisticated text-to-picture synthesis systems have been developed. Zhu et al. proposed a text-to-picture system that creates collages of pictures from narrative text23. A text-to-picture algorithm proposed by Goldberg et al. further illustrated the relations with a collage of pictures24. UzZaman et al. proposed multimodal summarization that includes pictures in the summaries25. With recent advances in 3D graphics, several studies introduced text-to-scene techniques to generate 3D scenes from text. The CarSim system proposed by Johansson et al. applied text-to-3D-scene conversion to the illustration of traffic accidents26, 27. WordsEye is an advanced language-based 3D scene generation system with an emphasis on spatial relations28. None of these systems were formally evaluated. In comparison with these systems, our automated illustration system conducts more in-depth text analysis at the syntactic and semantic levels, although it employs only 2D graphics.

Prior Work

As a pilot study, we developed a set of pictographs through a participatory design process and used them to enhance two mock-up discharge instructions29. In a test with 13 subjects, the pictograph enhancement resulted in statistically significantly better recall rates (p < 0.001). This suggests that the recall of discharge instructions can be improved by supplementing them with pictographs. We also assessed the recognition levels of 20 pictographs developed in our research laboratory against 20 open-source pictographs developed by the Hablamos Juntos initiative, using an asymmetrical pictograph–text label-linking test30. Thirty-seven subjects completed 719 test items. Our analysis showed that the participants recognized the pictographs developed in-house significantly better than those included in the study as a baseline (p < 0.001).

Assessing how easy a given pictograph is to interpret requires a recognition study in which subjects are asked to guess the meaning of each pictograph shown to them. The major limitation of recognition studies, however, is that their findings cannot be generalized to other pictographs: every new or revised pictograph requires a new recognition study. This is an important constraint in projects that rely on extensive pictograph libraries (like the one we are currently conducting). To work around this limitation, we developed a comprehensive taxonomy of representation strategies based on a systematic analysis of 846 health-related pictographs collected from several journal articles and websites31. Each representation strategy taxon is currently being tested to assess the recognition levels associated with it. For example, if we know that a representation strategy based on a container-contained semantic association yields a recognition level of approximately 80%, we can predict that a picture of a wine bottle indicating the concept “alcohol” or a picture of a milk carton indicating the concept “milk” would likely be correctly interpreted by about eight in every ten people.

Finally, we have also developed and tested a manual text-to-pictograph conversion application to support the creation of illustrated patient education materials32. The system is composed of three modules: a graphic lexicon, a graphic syntax, and a computer application that enables its user to assemble specific concepts (atomic pictograms) into sentences (composite pictograms). We tested the completeness of 25 pictographs created using the application against an analogous set of pictographs developed by professional graphic designers (our gold standard). The two pictogram sets (N = 50) underwent a recognition test. We found no significant difference in recognition rates between the two sets. Further, 49 of the 50 pictograms scored above the 67% minimum recognition rate set by the International Organization for Standardization.

Methods

The Glyph System

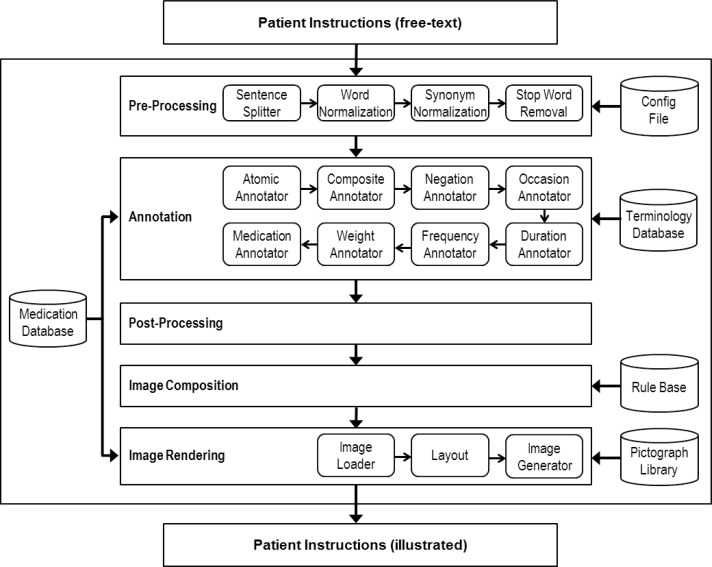

We developed an automated illustration system to enhance patient instructions. The system architecture is shown in Figure 1. The system follows a pipeline design where data flows through a sequence of processing modules and stages. The five main processing stages are: pre-processing, annotation, post-processing, image composition, and image rendering. The first three stages involve text processing. The pre-processing stage can be customized using a configuration file to accommodate different types of text input. The annotation stage makes use of a Terminology Database and a Medication Database. In the image composition stage, the system determines how individual images should be combined using a rule base. Finally, in the image rendering stage, the system generates an illustration for the text using pictures from the Pictograph Library. Medication images are generated based on the information from the Medication Database.

Figure 1.

Glyph’s system architecture.
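The stage order described above can be sketched as a chain of functions that each take and return a shared document object. This is a minimal illustration only: the `Document` fields, the stage function names, and the trivial stage bodies below are hypothetical placeholders, not Glyph's actual modules. The point is that each stage consumes only what the previous stage produced.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Document:
    """Hypothetical state object handed from one stage to the next."""
    text: str
    tokens: List[str] = field(default_factory=list)
    instruction: str = ""
    image: str = ""
    trace: List[str] = field(default_factory=list)


# Hypothetical placeholder stages; the real modules are described in the sections below.
def pre_process(doc: Document) -> Document:
    doc.tokens = doc.text.lower().rstrip(".").split()
    return doc


def annotate(doc: Document) -> Document:
    return doc  # would tag tokens using the Terminology and Medication Databases


def post_process(doc: Document) -> Document:
    return doc  # would drop tokens that no annotator recognized


def compose(doc: Document) -> Document:
    # Would match tagged tokens against the ordered Rule Base; here every token is sequenced.
    doc.instruction = "sequence_" + "_".join(f"[{t}]" for t in doc.tokens)
    return doc


def render(doc: Document) -> Document:
    doc.image = f"<image for {doc.instruction}>"  # would draw from the Pictograph Library
    return doc


PIPELINE: List[Tuple[str, Callable[[Document], Document]]] = [
    ("pre-processing", pre_process),
    ("annotation", annotate),
    ("post-processing", post_process),
    ("image composition", compose),
    ("image rendering", render),
]


def run_pipeline(text: str) -> Document:
    """Run the five stages in order, recording which stages have run."""
    doc = Document(text)
    for name, stage in PIPELINE:
        doc = stage(doc)
        doc.trace.append(name)
    return doc
```

For example, `run_pipeline("Take aspirin.").instruction` yields `"sequence_[take]_[aspirin]"`. Keeping each stage a plain function is one way to let a configuration file swap in a different pre-processor for a different type of text input.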

Pictograph Library

We have developed a pictograph library, which currently contains more than 600 pictograph images produced by a professional graphic designer on our research team. The images are stored as files, while their metadata (i.e., information about the images) are stored in the Terminology Database.

Terminology Database

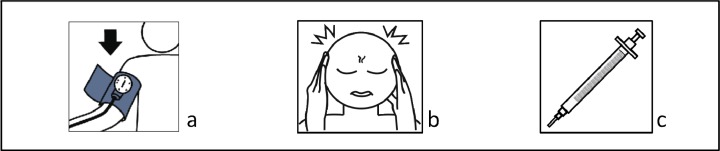

Each pictograph in the Pictograph Library has text labels and is classified semantically and graphically. The labels and classifications are stored in the Terminology Database. Each pictograph has a preferred name and, in most cases, several synonyms. For example, the pictograph in Figure 2a has the preferred name “low blood pressure” and synonyms such as “blood pressure low” and “decreasing blood pressure”. To support image composition and allow more sophisticated composition rules, images are classified according to the 15 UMLS semantic groups33. For example, Figure 2b (headache) is classified as a disorder and Figure 2c (syringe) is classified as a device.

Figure 2.

(a) Low blood pressure; (b) headache; (c) syringe.

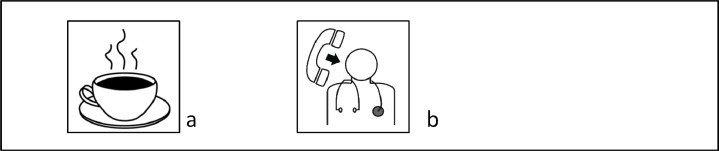

In addition, pictograph images are classified as either atomic or composite images. Atomic images represent a single concept (e.g. “coffee”) (Figure 3a). Composite images represent multiple concepts or a phrase (e.g. “call your doctor”) (Figure 3b).

Figure 3.

(a) Coffee; (b) call your doctor.
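A Terminology Database entry, as described above, combines a preferred name, synonyms, a UMLS semantic group, and the atomic/composite distinction. The sketch below models one such record and an index from every label to its image, so that an annotator can find a pictograph by its preferred name or any synonym. All field names and file names are hypothetical, and so are the group and atomic/composite assignments beyond what the text states (headache as a disorder, syringe as a device, "call your doctor" as composite).

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Pictograph:
    image_file: str            # hypothetical file name in the Pictograph Library
    preferred_name: str
    synonyms: Tuple[str, ...]
    semantic_group: str        # one of the 15 UMLS semantic groups
    kind: str                  # "atomic" (single concept) or "composite" (phrase)


def build_label_index(entries: List[Pictograph]) -> Dict[str, Pictograph]:
    """Map each preferred name and synonym (lower-cased) to its pictograph."""
    index: Dict[str, Pictograph] = {}
    for entry in entries:
        for label in (entry.preferred_name, *entry.synonyms):
            index[label.lower()] = entry
    return index


def lookup(index: Dict[str, Pictograph], phrase: str) -> Optional[Pictograph]:
    return index.get(phrase.lower().strip())


# Hypothetical entries modeled on Figures 2 and 3.
LIBRARY = [
    Pictograph("low_blood_pressure.png", "low blood pressure",
               ("blood pressure low", "decreasing blood pressure"), "Disorders", "atomic"),
    Pictograph("headache.png", "headache", (), "Disorders", "atomic"),
    Pictograph("syringe.png", "syringe", (), "Devices", "atomic"),
    Pictograph("coffee.png", "coffee", (), "Objects", "atomic"),
    Pictograph("call_your_doctor.png", "call your doctor", (),
               "Activities & Behaviors", "composite"),
]
INDEX = build_label_index(LIBRARY)
```

With this index, `lookup(INDEX, "Decreasing blood pressure")` returns the same record as the preferred name, so synonyms need no separate image files.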

Medication Database

To recognize and illustrate medications, we created a Medication Database based on information downloaded from DailyMed. The DailyMed website34 provides labeling information on more than 34,000 medications submitted to the Food and Drug Administration (FDA). It contains information such as brand names, generic names, ingredients, strength, routes, forms, shapes, colors, sizes, and imprints. The labeling information (e.g., name and ingredient) supports the Medication Annotator in the Annotation stage. The description information (e.g., shape and color) is used in the Image Rendering stage to create medication images.

Pre-Processing

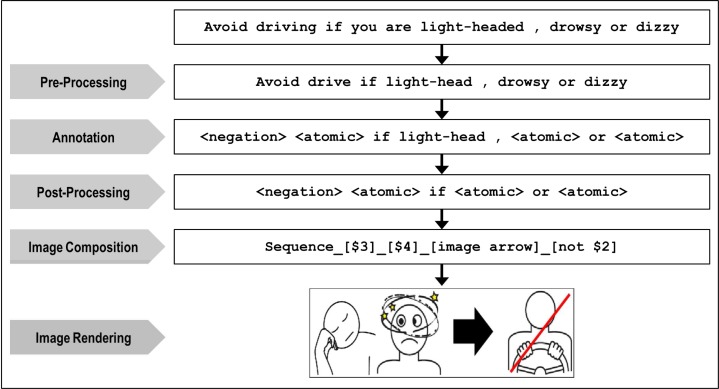

The input to the Glyph system is free text, which the system processes and annotates in three stages. The first stage, pre-processing, uses four text-processing modules. The Sentence Splitter module breaks the text into sentences. The Word Normalization module converts words into a normalized form using the Lexical Variants Generation (LVG) normalization functionality35. The Synonym Normalization module maps certain terms to a set of pre-established terms (e.g., mapping “prior to” to “before” and “while” to “when”). This module is not a general-purpose concept mapper but a specialized normalization tool that reduces the number of terms (especially conjunctions) that the image composition rules must consider. The Stop Word Removal module removes words that should not be included in the picture (e.g., articles, conjunctions, and certain adverbs and adjectives). An example of the pre-processing result is shown in Figure 4.

Figure 4.

Demonstration of system walkthrough.
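The four pre-processing modules can be sketched as successive string transformations. This is a simplified stand-in under stated assumptions: Glyph uses LVG for word normalization, which the sketch replaces with plain lower-casing, and the synonym and stop-word tables below are hypothetical examples built from the terms mentioned in the text, not Glyph's actual lists.

```python
import re
from typing import List

# Hypothetical tables; Glyph's actual mappings and stop-word list are not published here.
SYNONYMS = {"prior to": "before", "while": "when"}
STOP_WORDS = {"a", "an", "the", "very"}


def split_sentences(text: str) -> List[str]:
    """Naive Sentence Splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def normalize_words(sentence: str) -> str:
    """Stand-in for LVG normalization: lower-case and drop final punctuation."""
    return sentence.lower().rstrip(".!?")


def normalize_synonyms(sentence: str) -> str:
    """Replace each listed phrase, on word boundaries, with its pre-established term."""
    for phrase, target in SYNONYMS.items():
        sentence = re.sub(rf"\b{re.escape(phrase)}\b", target, sentence)
    return sentence


def remove_stop_words(sentence: str) -> List[str]:
    return [w for w in sentence.split() if w not in STOP_WORDS]


def pre_process(text: str) -> List[List[str]]:
    """Apply the four modules in order; one token list per sentence."""
    return [remove_stop_words(normalize_synonyms(normalize_words(s)))
            for s in split_sentences(text)]
```

For instance, `pre_process("Take the pill prior to breakfast. Rest while you wait.")` gives `[["take", "pill", "before", "breakfast"], ["rest", "when", "you", "wait"]]`: the synonym step leaves the rules fewer connectives to handle, and the stop-word step drops the article.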

Annotation

The Annotation stage involves a set of concept-extraction modules that locate text strings and annotate them with predefined categories such as negation, weight, and medication. These modules were developed to meet the specific needs of an automated text-to-picture conversion system. The Atomic Annotator looks up strings that match the labels of atomic images in the Pictograph Library. The Composite Annotator looks up strings that match the labels of composite images. Table 1 shows the full list of annotators and their target entities. An example of the annotation result is shown in Figure 4.

Table 1.

Annotators and target concepts.

| Module | Target Concepts | Examples |
|---|---|---|
| Atomic Annotator | Phrases matching the labels of atomic images | Add salt, take, eat, drink, drive. |
| Composite Annotator | Phrases matching the labels of composite images | Take with water, talk to your doctor. |
| Negation Annotator | Phrases that negate subsequent noun or verb phrases | No, do not, don’t, avoid, stop. |
| Duration Annotator | Length of time | Three hours, 3 weeks, a month. |
| Frequency Annotator | Frequency | Twice daily, 3 times a week. |
| Occasion Annotator | Occasion (particular time/instance of an event) | In the morning, at bedtime, at night. |
| Weight Annotator | Weight | Three pounds, 3 lbs, 5 kg. |
| Medication Annotator | Phrases matching drug label information such as brand name, strength, unit, and dose form | Prozac 20 mg, cycloserine 250 mg. |
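Several annotators in Table 1 target fairly regular phrases, so a pattern-based sketch conveys the idea. The regular expressions below are hypothetical and cover little more than the table's examples; the `DRUG_NAMES` tuple stands in for lookups against the Medication Database, and the rule that earlier patterns win when matches overlap is our own simplification, chosen so that "a week" inside "3 times a week" is not also tagged as a duration.

```python
import re
from typing import List, Tuple

# Hypothetical stand-in for names drawn from the Medication Database.
DRUG_NAMES = ("prozac", "cycloserine")
NUM = r"(?:\d+|one|two|three|four|five|a)"

# Hypothetical patterns. Order matters: earlier patterns win when matches overlap.
PATTERNS = [
    ("medication", rf"\b(?:{'|'.join(DRUG_NAMES)}) \d+ mg\b"),
    ("negation", r"\b(?:do not|don't|no|avoid|stop)\b"),
    ("frequency", rf"\b(?:twice daily|{NUM} times? (?:a|per) (?:day|week|month))\b"),
    ("duration", rf"\b{NUM} (?:minutes?|hours?|days?|weeks?|months?)\b"),
    ("weight", rf"\b{NUM} (?:pounds?|lbs|kg)\b"),
    ("occasion", r"\b(?:in the morning|at bedtime|at night)\b"),
]


def annotate(text: str) -> List[Tuple[str, str]]:
    """Return (category, phrase) pairs in text order, without overlapping spans."""
    taken: List[Tuple[int, int]] = []
    found: List[Tuple[int, str, str]] = []
    for category, pattern in PATTERNS:
        for m in re.finditer(pattern, text, flags=re.IGNORECASE):
            if any(m.start() < end and start < m.end() for start, end in taken):
                continue  # already covered by a higher-precedence annotation
            taken.append((m.start(), m.end()))
            found.append((m.start(), category, m.group(0)))
    return [(category, phrase) for _, category, phrase in sorted(found)]
```

For example, `annotate("Do not drive for 3 weeks. Weigh yourself in the morning; call if you gain 3 lbs.")` tags "Do not" as negation, "3 weeks" as duration, "in the morning" as occasion, and "3 lbs" as weight. The Atomic and Composite Annotators would instead look phrases up in the Terminology Database.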

Post-Processing

Following the Annotation stage, the text may contain terms that no annotator recognized, such as words that cannot be illustrated, misspelled words, or special characters. In the Post-processing stage, the system eliminates these terms to increase the chances of matching the tagged sentences against a standardized rule set. An example of the post-processing result is shown in Figure 4.
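Post-processing can be sketched as a filter over annotated tokens. One assumption is needed to stay consistent with the composition rules in the Image Composition section, which contain literal connectives such as "if", "or", and "and": the sketch keeps those connectives, marked as literals, while discarding every other untagged token. The token format and the `RULE_LITERALS` set are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical: connectives assumed to appear literally in composition rules.
RULE_LITERALS = {"if", "or", "and", ","}


def post_process(annotated: List[Tuple[str, Optional[str]]]) -> List[Tuple[str, str]]:
    """Keep tagged tokens and rule connectives; drop everything else."""
    kept: List[Tuple[str, str]] = []
    for text, tag in annotated:
        if tag is not None:
            kept.append((text, tag))
        elif text.lower() in RULE_LITERALS:
            kept.append((text.lower(), "literal"))
    return kept
```

Given `[("do not", "negation"), ("lift heavy objects", "composite"), ("if", None), ("you", None), ("feel dizzy", "composite"), ("or", None), ("tierd", None), ("#", None)]`, the filter drops the unillustratable "you", the misspelled "tierd", and the stray "#", leaving a compact sequence that a rule can match in full.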

Image Composition

The Image Composition stage looks for grammar patterns in the tagged sentences to determine how the individual pictures can be combined into composite pictures. The grammar rules were developed through a linguistic analysis of the University of Utah Cardiology Service’s discharge instructions and are stored in a Rule Base. The rules in the Rule Base are ordered: when more than one rule can be triggered, the higher-ranked rule is processed first. Each rule contains two parts: the grammar pattern and the graphic composition instruction. For example:

<negation> <composite> if <composite> or <composite> --> sequence_[$3]_[$4]_[image arrow]_[not $2]

An example of how this rule is applied is shown in Figure 4. Sometimes a rule applies only to images associated with a few semantic groups. For example, the following rule instructs the system on how to combine three images of the semantic groups “Concepts & Ideas”, “Objects”, or “Organizations”:

<atomic ci obj org>, <atomic ci obj org> and <atomic ci obj org> --> create_composite_[$1]_[$2]_[$3]
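The two rules above can be read as a pattern over tagged tokens plus a template. The sketch below implements one plausible reading, under assumptions the text does not spell out: `$n` refers to the n-th tag slot in the pattern (literal words such as "if" and "or" are not counted), a pattern must cover the whole tagged sentence, lower rank numbers mean higher priority, and the first matching rule wins. The token format, with short semantic-group codes such as `obj`, is hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple

Token = Tuple[str, str, str]  # hypothetical: (text, tag, semantic-group code or "")


@dataclass
class Rule:
    rank: int           # assumed: lower number = higher priority
    pattern: List[str]  # "<tag>", "<tag grp grp ...>", or a literal word
    template: str       # composition instruction with $1, $2, ... slots


def match(rule: Rule, tokens: List[Token]) -> Optional[List[str]]:
    """Return the texts bound to the tag slots, or None if the rule does not apply."""
    if len(rule.pattern) != len(tokens):
        return None  # assumed: a pattern must cover the whole sentence
    bound: List[str] = []
    for element, (text, tag, group) in zip(rule.pattern, tokens):
        if element.startswith("<") and element.endswith(">"):
            name, *groups = element[1:-1].split()
            if tag != name or (groups and group not in groups):
                return None
            bound.append(text)
        elif element != text:
            return None  # a literal word in the pattern must match exactly
    return bound


def compose(rules: List[Rule], tokens: List[Token]) -> Optional[str]:
    """Apply the highest-ranked matching rule, filling $n from the bound texts."""
    for rule in sorted(rules, key=lambda r: r.rank):
        bound = match(rule, tokens)
        if bound is not None:
            return re.sub(r"\$(\d+)", lambda m: bound[int(m.group(1)) - 1], rule.template)
    return None


RULES = [
    Rule(1, ["<negation>", "<composite>", "if", "<composite>", "or", "<composite>"],
         "sequence_[$3]_[$4]_[image arrow]_[not $2]"),
    Rule(2, ["<atomic ci obj org>", ",", "<atomic ci obj org>", "and", "<atomic ci obj org>"],
         "create_composite_[$1]_[$2]_[$3]"),
]
```

Under this reading, a tagged sentence such as "do not / lift heavy objects / if / feel dizzy / or / feel tired" becomes `sequence_[feel dizzy]_[feel tired]_[image arrow]_[not lift heavy objects]`: the conditions are drawn first, then an arrow, then the negated action. The semantic-group filter in the second rule rejects, for example, a disorder image in any of its three slots.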

Image Rendering

The Image Rendering stage parses the graphic instructions generated by the rule engine and renders the images for the corresponding text. Source images are either retrieved from the Pictograph Library through the Image Loader or dynamically generated by the Image Generator for medication images.

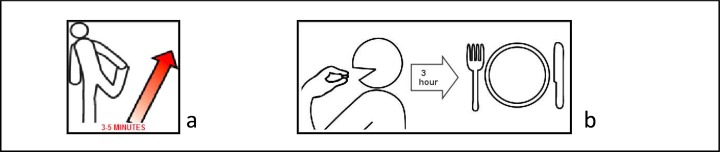

Aside from retrieving existing atomic and composite images, the Image Loader module may also superimpose frequency, duration, and weight details taken from the text. Two examples are shown in Figure 5.

Figure 5.

(a) Warm-up for three to five minutes; (b) Take three hours before meals.

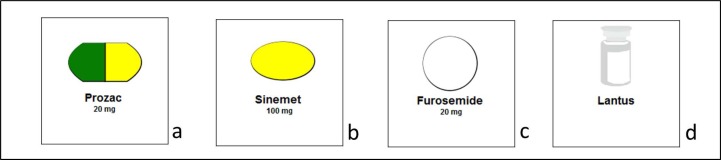

The Image Generator creates medication images based on the information in the Medication Database. It draws pill or capsule shapes (round, oblong, oval, square, rectangle, diamond, triangle, capsule, etc.) and fills the shapes with the appropriate colors (white, off-white, clear, black, gray, brown, tan, etc.). For medications not in pill form, the container (ampule, bag, bottle, inhalant, jar, spray, tube, vial, etc.) is illustrated. Examples of dynamically generated medication illustrations are shown in Figure 6.

Figure 6.

Examples of medication illustrations – (a) Prozac, (b) Sinemet, (c) Furosemide and (d) Lantus.
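The Image Generator's task, turning a label description into a drawing, can be sketched as a mapping from form, shape, and color fields to vector primitives. The sketch below emits SVG; the output format, the `MedicationLabel` record, the color table, the geometry, and the few shapes it handles are all assumptions (the text does not say how Glyph draws its images), and unsupported shapes fall back to a plain square.

```python
from dataclasses import dataclass

PILL_FORMS = {"tablet", "capsule"}

# Hypothetical color table; unlisted colors fall back to light gray.
COLORS = {"white": "#ffffff", "off-white": "#f5f5dc", "black": "#000000",
          "gray": "#808080", "brown": "#8b4513", "tan": "#d2b48c"}


@dataclass
class MedicationLabel:  # hypothetical subset of the Medication Database fields
    name: str
    form: str   # dose form, e.g. "tablet", "capsule", "vial"
    shape: str  # e.g. "round", "oblong", "oval", "capsule"
    color: str  # e.g. "white", "brown"


def medication_svg(med: MedicationLabel, size: int = 200) -> str:
    """Draw a pill in its listed shape and color, or a labeled container otherwise."""
    fill = COLORS.get(med.color, "#d3d3d3")
    s = size
    if med.form not in PILL_FORMS:
        # Non-pill forms: a simple container outline labeled with the form name.
        body = (f'<rect x="{s * 0.3}" y="{s * 0.15}" width="{s * 0.4}" height="{s * 0.7}" '
                f'fill="{fill}" stroke="black"/>'
                f'<text x="{s / 2}" y="{s * 0.55}" text-anchor="middle">{med.form}</text>')
    elif med.shape == "round":
        body = f'<circle cx="{s / 2}" cy="{s / 2}" r="{s * 0.3}" fill="{fill}" stroke="black"/>'
    elif med.shape in ("oblong", "oval", "capsule"):
        # A rectangle with rounded ends approximates elongated pills.
        body = (f'<rect x="{s * 0.15}" y="{s * 0.35}" width="{s * 0.7}" height="{s * 0.3}" '
                f'rx="{s * 0.15}" fill="{fill}" stroke="black"/>')
    else:
        body = (f'<rect x="{s * 0.25}" y="{s * 0.25}" width="{s * 0.5}" height="{s * 0.5}" '
                f'fill="{fill}" stroke="black"/>')
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{s}" height="{s}">'
            f'<title>{med.name}</title>{body}</svg>')
```

A round white tablet yields an SVG circle filled `#ffffff`, while a vial yields a labeled container outline. Generating images from the label fields, rather than storing one picture per product, is what lets a single generator cover tens of thousands of DailyMed entries.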

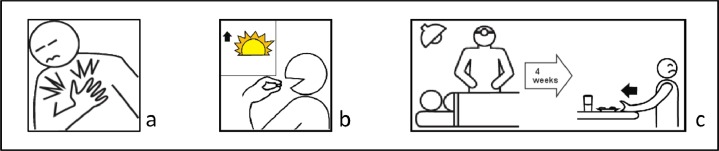

The Layout module produces the final images using three types of layouts: basic, overlay, and sequence. The basic layout is a simple 200x200 pixel square rendering a single atomic (Figure 7a) or composite image. The overlay layout superimposes one image over another, or places a subordinate image in the upper left corner of a main image (Figure 7b). In the sequence layout, multiple images are displayed side by side (Figure 7c).

Figure 7.

(a) Example of basic layout (chest pain); (b) example of overlay layout (Take in the morning); (c) example of sequence layout (You may not have much of an appetite for up to 4 weeks after your surgery).
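The three layouts can be expressed as placement lists on a canvas. In this sketch only the 200x200 tile size comes from the text; the 40% scale of the subordinate image in the overlay layout, the left-to-right arrangement of the sequence layout, and the `(image, x, y, width, height)` placement format are assumptions.

```python
from typing import List, Tuple

TILE = 200  # basic layout: a 200x200 pixel square

Placement = Tuple[str, int, int, int, int]        # (image, x, y, width, height)
Layout = Tuple[Tuple[int, int], List[Placement]]  # ((canvas width, height), placements)


def basic(image: str) -> Layout:
    """A single atomic or composite image filling one tile."""
    return (TILE, TILE), [(image, 0, 0, TILE, TILE)]


def overlay(main: str, subordinate: str, scale: float = 0.4) -> Layout:
    """Main image fills the tile; a scaled subordinate image (assumed 40%) sits top-left."""
    side = round(TILE * scale)
    return (TILE, TILE), [(main, 0, 0, TILE, TILE), (subordinate, 0, 0, side, side)]


def sequence(images: List[str]) -> Layout:
    """One tile per image, assumed to run left to right."""
    return (TILE * len(images), TILE), [(img, i * TILE, 0, TILE, TILE)
                                        for i, img in enumerate(images)]
```

Placements are listed in drawing order, so in the overlay layout the subordinate image (for example, a morning icon over a "take" pictograph) is painted on top of the main image.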

Iterative Testing

We collected a large number of discharge instructions from the University of Utah Cardiovascular Center to iteratively test the system. A total of 2000 unique instructions were obtained, along with approximately 100 sets of instructions (each containing 10 to 50 distinct instructions) from the Web. In each iteration of the testing process, the Glyph system was applied to a batch of 50 to 100 randomly selected instructions, and the development team then reviewed the resulting illustrations. Based on the review, the system was refined and re-tested on the same batch before the team proceeded to the next batch and iteration. To date, over 300 instructions have undergone team review.

Preliminary Evaluation

We conducted a preliminary evaluation of Glyph’s output by using it to illustrate 49 patient instructions that were not part of the original instruction sets used to iteratively test the application. Both the evaluation and iterative testing data sets contain discharge instructions intended for cardiology patients. The evaluation set was produced by combining the instructions contained in ten different discharge instruction templates (e.g., CHF, ablation, thoracotomy) obtained from the University of Utah Cardiology Service. After performing a content analysis to combine overlapping instructions and eliminate redundancy, 49 instructions remained.

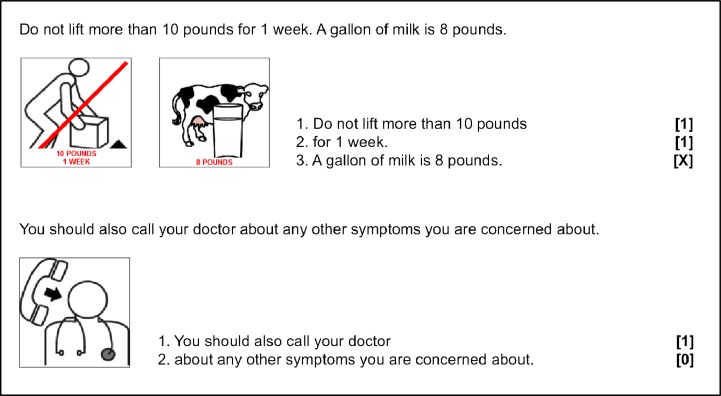

The 49 instructions were broken down into 160 smaller semantic units to facilitate coding. For example, the instruction “Weigh yourself every morning, on the same scale. Wear the same clothes each time” was divided into four parts: (1) weigh yourself; (2) every morning; (3) on the same scale; and (4) wear the same clothes each time. This was done to facilitate the rating process and increase its precision.

One registered nurse, one graphic designer, and one software engineer were asked to rate the pictographs according to the following scheme:

[1] For parts of the instruction that were correctly represented in the corresponding pictograph.

[0] For parts of the instruction that were not represented in the corresponding pictograph.

[X] For parts of the instruction that were wrongly or only partially represented in the corresponding pictograph.

Results

Glyph was able to generate illustrations for all 49 instructions used in the preliminary evaluation. However, the completeness of the generated pictographs varied considerably. The ratings for the pictographs are shown in Table 2. Inter-rater agreement was 81.9% between raters 1 and 2, 83.1% between raters 1 and 3, and 80.0% between raters 2 and 3. Overall inter-rater agreement was 73.1%. Examples of ratings on which all three raters agreed are provided in Figure 8.

Table 2.

Pictograph completeness rating.

| Rating | Rater 1 | Rater 2 | Rater 3 | Average |
|---|---|---|---|---|
| Correctly represented [1] | 103 (64.4%) | 103 (64.4%) | 112 (70.0%) | 106 (66.2%) |
| Not represented [0] | 34 (21.2%) | 31 (19.4%) | 32 (20.0%) | 32.3 (20.2%) |
| Wrongly or partially represented [X] | 23 (14.4%) | 26 (16.2%) | 16 (10.0%) | 21.7 (13.5%) |

Figure 8.

Examples of ratings with complete agreement among the three raters.
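The percentages in Table 2 and the agreement figures reported above are simple proportions over the 160 semantic units. The sketch below shows how they can be computed. Two points are assumptions: "overall" agreement is read as the share of units on which all three raters gave the same code, and the short rating lists in the usage note are made-up toy data, not the study's ratings. With Python's `round()`, which resolves exact ties such as 66.25 to the even digit, the computed percentages match those printed in Table 2.

```python
from typing import Dict, List, Sequence, Tuple


def percent(count: float, total: int) -> float:
    """Percentage to one decimal; round() sends exact ties (e.g. 66.25) to the even digit."""
    return round(100 * count / total, 1)


def pairwise_agreement(a: Sequence[str], b: Sequence[str]) -> float:
    """Share of units on which two raters gave the same code."""
    return percent(sum(x == y for x, y in zip(a, b)), len(a))


def all_agree(*raters: Sequence[str]) -> float:
    """Share of units on which every rater gave the same code (assumed reading of 'overall')."""
    return percent(sum(len(set(unit)) == 1 for unit in zip(*raters)), len(raters[0]))


def table_percentages(counts: Dict[str, Tuple[int, ...]], units: int = 160) -> Dict[str, List[float]]:
    """Per-rater percentages plus the percentage of the average count."""
    rows: Dict[str, List[float]] = {}
    for code, per_rater in counts.items():
        average = sum(per_rater) / len(per_rater)
        rows[code] = [percent(c, units) for c in per_rater] + [percent(average, units)]
    return rows


# Counts from Table 2 (160 semantic units per rater).
TABLE_2 = {"1": (103, 103, 112), "0": (34, 31, 32), "X": (23, 26, 16)}
```

With toy ratings `["1", "1", "0", "X"]`, `["1", "0", "0", "X"]`, and `["1", "1", "0", "1"]`, the first two raters agree on 75.0% of units, while all three agree on only 50.0%. Requiring unanimity is stricter than any pairwise comparison, which is consistent with the overall figure (73.1%) being lower than each pairwise figure.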

Discussion

To the best of our knowledge, Glyph is the first application developed in the health care domain that is able to automatically enhance text with pictures. As discussed in the Background section, illustrations have been shown to enhance the recall and understanding of patient instructions. However, clinical teams seldom have the graphic skills and time required to produce customized illustrations for the written instructions given to patients. Glyph was developed to address that issue by allowing health care providers to create illustrations from free text easily and quickly. We intend to make both Glyph and its pictograph library freely available online in the future. We also intend to add the pictograph library to the database of the Open Access Collaborative (OAC) Consumer Health Vocabulary (CHV) initiative36, the only CHV in the UMLS.

Since the illustrations are intended to enhance rather than replace text, we consider the results of the automated illustration promising. On average, 66% of the text was rated as correctly illustrated. The illustrations reflect the content of the text but also contain some ambiguity. We described several related systems outside of the health domain in the Background section; because none of these systems were formally evaluated, we cannot compare their performance with Glyph’s.

The evaluation of the outcomes of automatic text-to-picture conversion is one of the most challenging parts of this study. There are no standard metrics to measure precisely how much of the text is actually transferred to the corresponding pictograph. For this study, we created our own coding system, but we acknowledge that, like any other type of qualitative analysis, it can be distorted by the raters’ subjectivity. We intend to increase the precision of our evaluation methods; however, we are aware that highly precise metrics are not possible for this type of study. Although there is evidence showing that pictographs can increase recall and comprehension, they are not highly reliable communication tools because they lack a code shared by all those involved in the communication process. Without a common code, the interpretation of the resulting pictographs relies heavily on the reader’s inferential skills to link the graphic representation to the intended message. That is, the reader’s background (e.g., familiarity with the graphic conventions used, such as arrows) greatly influences the interpretation of pictographs.

In our efforts to develop a set of rules to convert text into pictures, we realized that many patient instructions were ambiguous, redundant, or lacking in detail. For example, the instruction “Call your doctor if you gain 2 to 5 pounds overnight” is partially redundant, since the construction calls for a threshold, not a range: only the lower value (i.e., 2 pounds) is necessary for patients to follow the instruction properly. We are keeping track of the shortcomings identified in the wording of the original patient instructions and intend to share that information with the clinical teams so that the instruction templates can be further improved.

Our analyses indicate that certain concepts are poor candidates for illustration. Concepts that are unlikely to yield easy-to-interpret illustrations include concepts that do not have a clear visual counterpart (e.g., memory loss) or are overly generic (e.g., diet). Further, the types of concepts that can be effectively illustrated do not completely overlap with the concepts that, from a cognitive perspective, are likely to benefit from illustrations the most.

Even in a restricted domain (in our case, cardiology), instructions can often be worded, both syntactically and lexically, in many different ways. Thus, one of the greatest challenges in this project is anticipating all possible variations of the same instruction.

In the future, we plan to conduct more in-depth testing of Glyph’s output to verify whether illustrations produced in an automated manner yield the same increases in recall and comprehension as custom-made illustrations. The inter-rater agreement levels we have obtained so far point to the need for a more systematic method to assess picture completeness and quality.

Acknowledgments

This work was supported by NIH grant R01 LM07222. We would like to thank Lauren Argo, Erik-Dean Larsen, and Brent Hill for their contributions in graphic design and iterative testing.

References

1. Houts PS, Bachrach R, Witmer JT, Tringali CA, Bucher JA, Localio RA. Using pictographs to enhance recall of spoken medical instructions. Patient education and counseling. 1998;35(2):83–8. doi: 10.1016/s0738-3991(98)00065-2.

2. Houts PS, Witmer JT, Egeth HE, Loscalzo MJ, Zabora JR. Using pictographs to enhance recall of spoken medical instructions II. Patient education and counseling. 2001;43(3):231–42. doi: 10.1016/s0738-3991(00)00171-3.

3. Houts PS, Doak CC, Doak LG, Loscalzo MJ. The role of pictures in improving health communication: A review of research on attention, comprehension, recall, and adherence. Patient education and counseling. 2006;61(2):173–90. doi: 10.1016/j.pec.2005.05.004.

4. Spandorfer JM, Karras DJ, Hughes LA, Caputo C. Comprehension of discharge instructions by patients in an urban emergency department. Ann Emerg Med. 1995;25(1):71–4. doi: 10.1016/s0196-0644(95)70358-6. Epub 1995/01/01.

5. Jolly BT, Scott JL, Sanford SM. Simplification of emergency department discharge instructions improves patient comprehension. Ann Emerg Med. 1995;26(4):443–6. doi: 10.1016/s0196-0644(95)70112-5. Epub 1995/10/01.

6. Austin PE, Matlack R, 2nd, Dunn KA, Kesler C, Brown CK. Discharge instructions: do illustrations help our patients understand them? Ann Emerg Med. 1995;25(3):317–20. doi: 10.1016/s0196-0644(95)70286-5.

7. Kitching JB. Patient information leaflets--the state of the art. J R Soc Med. 1990;83(5):298–300. doi: 10.1177/014107689008300506.

8. Sanderson BK, Thompson J, Brown TM, Tucker MJ, Bittner V. Assessing Patient Recall of Discharge Instructions for Acute Myocardial Infarction. Journal for Healthcare Quality. 2009;31(6):25–34. doi: 10.1111/j.1945-1474.2009.00052.x.

9. Crane JA. Patient comprehension of doctor-patient communication on discharge from the emergency department. The Journal of emergency medicine. 1997;15(1):1–7. doi: 10.1016/s0736-4679(96)00261-2. Epub 1997/01/01.

10. Health literacy: a prescription to end confusion. Washington DC: Institute of Medicine (IOM); 2004.

11. Michielutte R, Bahnson J, Dignan MB, Schroeder EM. The use of illustrations and narrative text style to improve readability of a health education brochure. Journal of Cancer Education. 1992;7(3):251–60. doi: 10.1080/08858199209528176.

12. Mansoor LE, Dowse R. Effect of pictograms on readability of patient information materials. The Annals of pharmacotherapy. 2003;37(7–8):1003–9. doi: 10.1345/aph.1C449. Epub 2003/07/05.

13. Mayer RE, Gallini JK. When is an illustration worth ten thousand words? Journal of Educational Psychology. 1990;82(4):715–26.

14. Kools M, van de Wiel MW, Ruiter RA, Kok G. Pictures and text in instructions for medical devices: effects on recall and actual performance. Patient Educ Couns. 2006;64(1–3):104–11. doi: 10.1016/j.pec.2005.12.003. Epub 2006/02/14.

15. Morrow DG, Hier CM, Menard WE, Leirer VO. Icons improve older and younger adults’ comprehension of medication information. The journals of gerontology Series B, Psychological sciences and social sciences. 1998;53(4):P240–54. doi: 10.1093/geronb/53b.4.p240. Epub 1998/07/29.

16. Kripalani S, Robertson R, Love-Ghaffari MH, Henderson LE, Praska J, Strawder A, et al. Development of an illustrated medication schedule as a low-literacy patient education tool. Patient education and counseling. 2007;66(3):368–77. doi: 10.1016/j.pec.2007.01.020.

17. Lajoie SP, Nakamura C. Cambridge Handbook of Multimedia Learning. Cambridge University Press; 2005. Multimedia learning of cognitive skills; pp. 489–504.

18. Hwang SW, Tram CQ, Knarr N. The effect of illustrations on patient comprehension of medication instruction labels. BMC family practice. 2005;6(1):26. doi: 10.1186/1471-2296-6-26. Epub 2005/06/18.

19. Mayer RE, Gallini JK. When is an illustration worth ten thousand words? Journal of Educational Psychology. 1990;82(4):715–26.

20. Delgado D, Magalhaes J, Correia N. Automated Illustration of News Stories. Semantic Computing (ICSC), 2010 IEEE Fourth International Conference; 22–24 Sept. 2010; 2010. pp. 73–8.

21. Joshi D, Wang JZ, Li J. The Story Picturing Engine---a system for automatic text illustration. ACM Trans Multimedia Comput Commun Appl. 2006;2(1):68–89.

22. Li H, Tang J, Li G, Chua TS. Word2Image: towards visual interpreting of words. Proceedings of the 16th ACM international conference on Multimedia; Vancouver, British Columbia, Canada. ACM; 2008. pp. 813–6.

23. Zhu X, Goldberg AB, Eldawy M, Dyer CR, Strock B. A text-to-picture synthesis system for augmenting communication. Proceedings of the 22nd national conference on Artificial intelligence - Volume 2; Vancouver, British Columbia, Canada. AAAI Press; 2007. pp. 1590–5.

24. Goldberg AB, Rosin J, Zhu X, Dyer CR. Toward Text-to-Picture Synthesis. NIPS 2009.

25. UzZaman N, Bigham JP, Allen JF. Multimodal summarization of complex sentences. Proceedings of the 16th international conference on Intelligent user interfaces; Palo Alto, CA, USA. ACM; 2011. pp. 43–52.

26. Johansson R, Berglund A, Danielsson M, Nugues P. Automatic text-to-scene conversion in the traffic accident domain. Proceedings of the 19th international joint conference on Artificial intelligence; Edinburgh, Scotland. Morgan Kaufmann Publishers Inc; 2005. pp. 1073–8.

27. Egges A, Nugues P, Nijholt A. Generating a 3D Simulation of a Car Accident from a Formal Description: the CarSim System. In: Giagourta V, Strintzis MG, editors. Proceedings International Conference on Augmented, Virtual Environments and Three-dimensional Imaging (ICAV3D); Mykonos, Greece. CERTH; 2001. pp. 220–3.

28. Coyne B, Sproat R. WordsEye: an automatic text-to-scene conversion system. Proceedings of the 28th annual conference on Computer graphics and interactive techniques. ACM; 2001. pp. 487–96.

29. Zeng-Treitler Q, Kim H, Hunter M. Improving patient comprehension and recall of discharge instructions by supplementing free texts with pictographs. AMIA Annual Symposium proceedings / AMIA Symposium AMIA Symposium; 2008. pp. 849–53. Epub 2008/11/13.

30. Kim H, Nakamura C, Zeng-Treitler Q. Assessment of Pictographs Developed Through a Participatory Design Process Using an Online Survey Tool. J Med Internet Res. 2009;11(1):e5. doi: 10.2196/jmir.1129.

31. Nakamura C, Zeng-Treitler Q. A taxonomy of representation strategies in iconic communication. International Journal of Human-Computer Studies. 2012;70(8):535–51. doi: 10.1016/j.ijhcs.2012.02.009.

32. Nakamura C, Zeng Q. The Pictogram Builder: Development and Testing of a System to Help Clinicians Illustrate Patient Education Materials. World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2011; Honolulu, Hawaii, USA. AACE; 2011. pp. 324–30.

33. McCray AT, Burgun A, Bodenreider O. Aggregating UMLS semantic types for reducing conceptual complexity. Stud Health Technol Inform. 2001;84(Pt 1):216–20. Epub 2001/10/18.

34. FDA electronic drug labels to improve patient safety. J Pain Palliat Care Pharmacother. 2006;20(2):96–7. Epub 2006/06/23.

35. Browne AC, Divita G, Aronson AR, McCray AT. UMLS language and vocabulary tools. AMIA Annual Symposium proceedings / AMIA Symposium AMIA Symposium; 2003. p. 798. Epub 2004/01/20.

36. Keselman A, Smith CA, Divita G, Kim H, Browne AC, Leroy G, et al. Consumer health concepts that do not map to the UMLS: where do they fit? Journal of the American Medical Informatics Association : JAMIA. 2008;15(4):496–505. doi: 10.1197/jamia.M2599.