Abstract

The current project examined the effectiveness of a functional analysis skills training package for practitioners with advanced degrees working in an applied setting. The skills included correctly carrying out the functional analysis conditions outlined by Iwata, Dorsey, Slifer, Bauman, and Richman (1982/1994), interpreting multielement functional analysis graphs using the method described by Hagopian et al. (1997), determining next steps when functional analysis data are undifferentiated, and selecting function-based interventions once functional analysis data are conclusive. The performance of three participants was examined within a multiple baseline design across participants. Although performance varied, baseline skill levels were insufficient across participants and skill areas. All participants reached mastery within four to eight training sessions per skill and demonstrated the acquired skills during generalization trials. At a 3-month follow-up, some component skills required minimal retraining.

Keywords: functional analysis, staff training, treatment development, visual inspection

The term functional analysis (FA) was first used by Skinner (1953) to describe a process in which environmental variables are manipulated to demonstrate their effects on behavior. According to Skinner, these “causes of behavior” are “the external conditions of which behavior is a function” (p. 35). Skinner believed that an understanding of these cause-and-effect relations is the bedrock of the science of human behavior. In the ensuing decades, a number of researchers demonstrated the effects that presenting and removing specific environmental variables could have on behavior (e.g., Allen, Hart, Buell, Harris, & Wolf, 1964; Schaeffer, 1970). Carr (1977) reviewed evidence that a variety of contingencies could influence problem behavior, including positive reinforcement, negative reinforcement, and reinforcement produced by the behavior itself (i.e., automatic reinforcement). However, it was not until the landmark study by Iwata, Dorsey, Slifer, Bauman, and Richman (1982/1994) that a systematic experimental methodology was established for identifying a behavior's maintaining variables before selecting treatment. Iwata et al. provided a technological description of how to evaluate the effects of the contingencies outlined by Carr in comparison with a control condition. In addition, Iwata et al. demonstrated that participants' problem behavior increased and decreased predictably as specific contingencies were altered.

Since the publication of Iwata et al. (1982/1994) 30 years ago, research has shown that selecting interventions based on the results of an FA greatly improves treatment outcomes for problem behavior (e.g., Hastings & Noone, 2005; Pelios, Morren, Tesch, & Axelrod, 1999). Conversely, inaccurate interpretation of FA data can lead to the selection of ineffective interventions and, possibly, of interventions that exacerbate problem behavior (e.g., Iwata, Pace, Cowdery, & Miltenberger, 1994). Thus, using FAs to guide treatment selection is an important tool for behavior analysts working in applied settings.

Several studies have demonstrated that FA skills can be taught efficiently to professionals and nonprofessionals in a variety of settings. For example, Iwata et al. (2000) demonstrated that upper-level undergraduate students could respond accurately in simulated FA sessions after a training sequence in which they read summaries of the FA conditions, watched a videotape of simulated conditions, took a quiz, and practiced the role of a therapist conducting FA conditions. Responding below specified thresholds resulted in experimenter feedback. All 11 participants met the 95% accuracy criterion after approximately 2 hr of training. Hagopian et al. (1997) developed structured criteria for interpreting multielement FA graphs and found that graduate-level psychology students could apply the criteria accurately after 1–2 hr of training.

A host of subsequent studies demonstrated that teachers with little experience delivering behavior-analytic services can be trained to conduct FA sessions, in both simulated settings (Moore et al., 2002; Wallace, Doney, Mintz-Resudek, & Tarbox, 2004) and natural settings (Erbas, Tekin-Iftar, & Yucesoy, 2006). Phillips and Mudford (2008) demonstrated that residential caregivers could be taught to conduct FA sessions when assessing clients' behavior in clinical settings.

The studies reviewed thus far demonstrate that individuals can be trained to respond accurately during FA conditions and, in one case (Hagopian et al., 1997), to interpret FA graphs. Conducting FAs and applying their results, however, requires a larger set of component skills (Hanley, 2012; Hanley, Iwata, & McCord, 2003; Iwata & Dozier, 2008). If data collected during the FA are inconclusive, the practitioner must decide on the next course of action. Once one or more functions are identified, a function-based treatment must be selected. As part of an initiative at our agency, we developed and evaluated an FA training package that addresses these skills. The purpose of this article is to describe our training package and its outcomes for three staff members, in the hope that other practitioners will use this information to teach this set of FA skills in their organizations.

Method

Participants and Setting

Participants were three individuals who worked at a private day and residential school for children with autism and other developmental disabilities. Because the project required participation during a holiday, each participant received a $300 stipend. All participants held a master's degree and had recently been credentialed as Board Certified Behavior Analysts® (BCBA®). Individuals with advanced training were selected because FAs are complex and should be conducted by professionals with a strong foundation in the ethical and conceptual principles that characterize the discipline of applied behavior analysis. We viewed the current training program as an initial step toward disseminating the complex set of skills needed to conduct FAs independently.

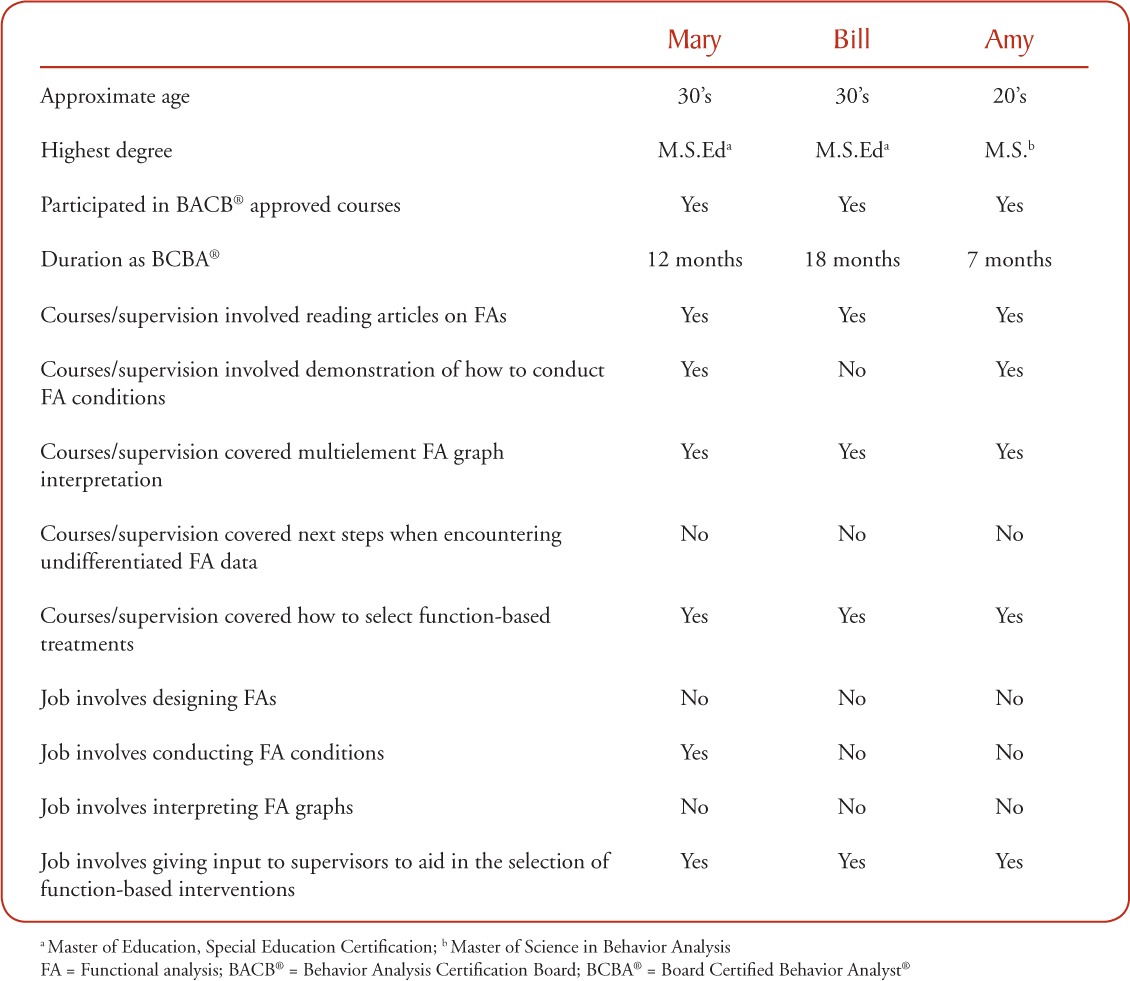

Table 1 displays the training background of each participant. This information was gathered using a questionnaire that participants completed after the maintenance phase. All participants had completed coursework related to the design and implementation of FAs and function-based treatments; however, only one participant, Mary, had any experience conducting an FA, and that experience consisted of a single FA conducted 4 years before the study.

Table 1.

Participant Characteristics

Response Measurement

We studied four component skill sets involved in performing FAs and using their results to inform treatment selection: (a) conducting FA sessions, (b) interpreting graphs, (c) responding to undifferentiated FA data, and (d) selecting interventions. Each skill was assessed with both a training set and a generalization set of materials.

Conducting FA sessions.

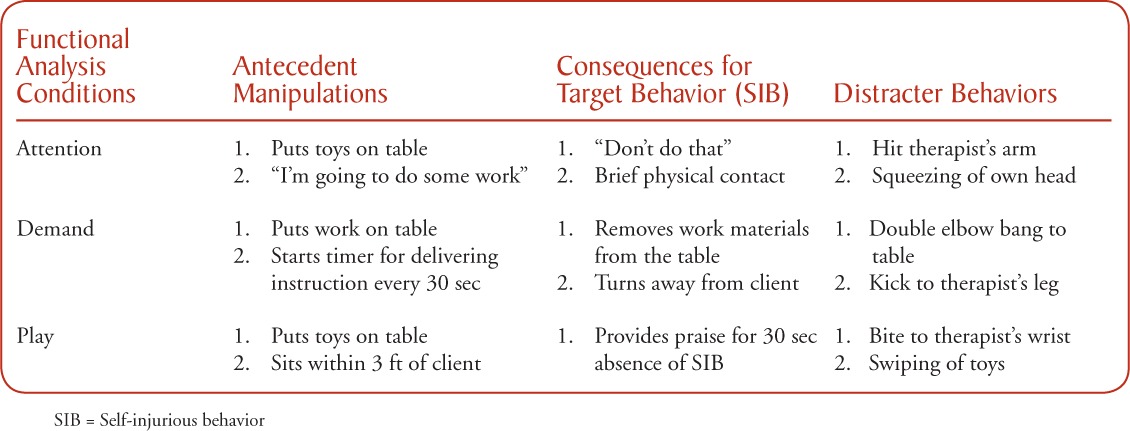

An accuracy checklist was used to measure correct responding during each FA condition (go to the supplemental materials webpage for a copy of the checklist: http://www.abainternational.org/Journals/bap_supplements.asp). Performance in each condition constituted a session, and three consecutive sessions (i.e., attention, demand, play) made up a session block. Examples of correct responses from the three FA conditions we trained appear in Table 2. A response was scored as accurate if the participant completed the appropriate antecedent manipulation within 3 s of its scheduled time, refrained from responding when appropriate (e.g., ignored a distracter behavior in the attention condition), or produced the correct response within 3 s of the experimenter exhibiting a particular behavior. The number of possible correct responses varied across conditions, from 34 in the play condition to 42 in the demand condition. Because a participant could respond correctly but also engage in behavior not relevant to a particular condition, errors of commission were measured using 10-s partial-interval recording throughout each 5-min session. An error of commission was defined as any participant behavior that was not prescribed for a given FA condition. For example, a participant might implement a prescribed step correctly (e.g., removing academic materials contingent on the target behavior in the demand condition) but also emit an additional, incorrect response (e.g., attending to the behavior by saying, “Don't do that”).
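The two session measures described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' scoring tool: it assumes a hypothetical input format in which each checklist item is recorded as True (correct) or False, and each error of commission is logged as an onset time in seconds. In the study, observers scored intervals directly from video; treating errors as instantaneous onsets is a simplification (an error spanning an interval boundary would score both intervals under partial-interval recording).

```python
from typing import List, Sequence

SESSION_S = 5 * 60    # each FA condition lasted 5 min
INTERVAL_S = 10       # 10-s partial-interval recording
N_INTERVALS = SESSION_S // INTERVAL_S  # 30 intervals per session


def percent_accuracy(checklist: Sequence[bool]) -> float:
    """Percentage of accuracy-checklist items scored correct (True)."""
    if not checklist:
        raise ValueError("checklist is empty")
    return 100.0 * sum(checklist) / len(checklist)


def scored_intervals(error_onsets_s: Sequence[float]) -> List[bool]:
    """Partial-interval record: an interval is scored if at least one
    error of commission began at any point within it (simplified: onsets only)."""
    scored = [False] * N_INTERVALS
    for t in error_onsets_s:
        if not 0 <= t < SESSION_S:
            raise ValueError(f"onset {t} s falls outside the session")
        scored[int(t // INTERVAL_S)] = True
    return scored


def percent_commission(error_onsets_s: Sequence[float]) -> float:
    """Percentage of 10-s intervals containing at least one commission error."""
    scored = scored_intervals(error_onsets_s)
    return 100.0 * sum(scored) / N_INTERVALS


# Hypothetical play-condition session: 33 of 34 checklist items correct,
# and commission errors beginning at 4 s, 7 s, and 125 s.
print(round(percent_accuracy([True] * 33 + [False]), 1))  # 97.1
print(round(percent_commission([4, 7, 125]), 1))          # 6.7
```

Note that the two errors at 4 s and 7 s fall in the same 10-s interval, so they count once; this is the defining property of partial-interval recording.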

Table 2.

Examples of Participant Accuracy Items for Functional Analysis Sessions

The mastery criterion was defined as participants achieving 95% accuracy or higher across all three conditions (attention, demand, play) for three consecutive session blocks (nine consecutive sessions). The criterion for errors of commission was less than 5% across conditions for three consecutive session blocks.
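The "three consecutive session blocks" rule can be expressed as a run-length check. The sketch below is an illustration only; it assumes that "across all three conditions" means every condition in a block must meet the criterion, and it treats the accuracy and commission criteria as separate checks because participants could meet them at different points. The data are hypothetical.

```python
from typing import Callable, Mapping, Optional, Sequence

CONDITIONS = ("attention", "demand", "play")


def block_index_at_mastery(blocks: Sequence[Mapping[str, float]],
                           meets: Callable[[float], bool],
                           run_length: int = 3) -> Optional[int]:
    """Return the 1-based index of the session block that completes the first
    run of `run_length` consecutive blocks in which every condition meets the
    criterion, or None if no such run occurs."""
    run = 0
    for index, block in enumerate(blocks, start=1):
        if all(meets(block[c]) for c in CONDITIONS):
            run += 1
            if run == run_length:
                return index
        else:
            run = 0  # a single failing block resets the count
    return None


def accuracy_mastery(blocks: Sequence[Mapping[str, float]]) -> Optional[int]:
    return block_index_at_mastery(blocks, lambda pct: pct >= 95.0)


def commission_mastery(blocks: Sequence[Mapping[str, float]]) -> Optional[int]:
    return block_index_at_mastery(blocks, lambda pct: pct < 5.0)


# Hypothetical percentage-accuracy data for four session blocks.
accuracy = [
    {"attention": 90.0, "demand": 96.0, "play": 100.0},   # attention below 95%
    {"attention": 100.0, "demand": 97.6, "play": 100.0},
    {"attention": 97.4, "demand": 100.0, "play": 97.1},
    {"attention": 100.0, "demand": 100.0, "play": 100.0},
]
print(accuracy_mastery(accuracy))  # 4
```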

Interpreting graphs.

Graph interpretation was scored as the percentage of graphs, out of a set of 12 per session, for which the participant named the correct function. Participants selected the correct function(s) from a multiple-choice bank of 12 options: (a) undifferentiated; (b) attention; (c) escape; (d) tangible; (e) automatic; (f) attention and escape; (g) attention and tangible; (h) tangible and escape; (i) escape and automatic; (j) automatic and attention; (k) automatic and tangible; and (l) attention, tangible, and escape. Each response was scored as either correct or incorrect; no partial credit was given. The mastery criterion for this phase was 95% accuracy or higher for three consecutive sessions.
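The all-or-nothing scoring can be sketched as follows, using the 12-option bank listed above. The answer key in the usage note is hypothetical. One consequence worth making explicit: with 12 graphs per set, a single error yields 91.7%, so the 95% criterion can be met only by a perfect score.

```python
import math
from typing import Sequence

# The 12-option choice bank, keyed by the letters participants wrote down.
CHOICE_BANK = {
    "a": "undifferentiated",
    "b": "attention",
    "c": "escape",
    "d": "tangible",
    "e": "automatic",
    "f": "attention and escape",
    "g": "attention and tangible",
    "h": "tangible and escape",
    "i": "escape and automatic",
    "j": "automatic and attention",
    "k": "automatic and tangible",
    "l": "attention, tangible, and escape",
}


def score_graph_set(answers: Sequence[str], key: Sequence[str]) -> float:
    """All-or-nothing scoring: percentage of graphs whose selected letter
    matches the answer key."""
    if len(answers) != len(key):
        raise ValueError("one answer is needed per graph")
    unknown = [a for a in answers if a not in CHOICE_BANK]
    if unknown:
        raise ValueError(f"not in the choice bank: {unknown}")
    correct = sum(a == k for a, k in zip(answers, key))
    return 100.0 * correct / len(key)


# Minimum number of correct graphs needed to reach 95% of a 12-graph set.
print(math.ceil(0.95 * 12))  # 12
```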

Responding to undifferentiated FA data.

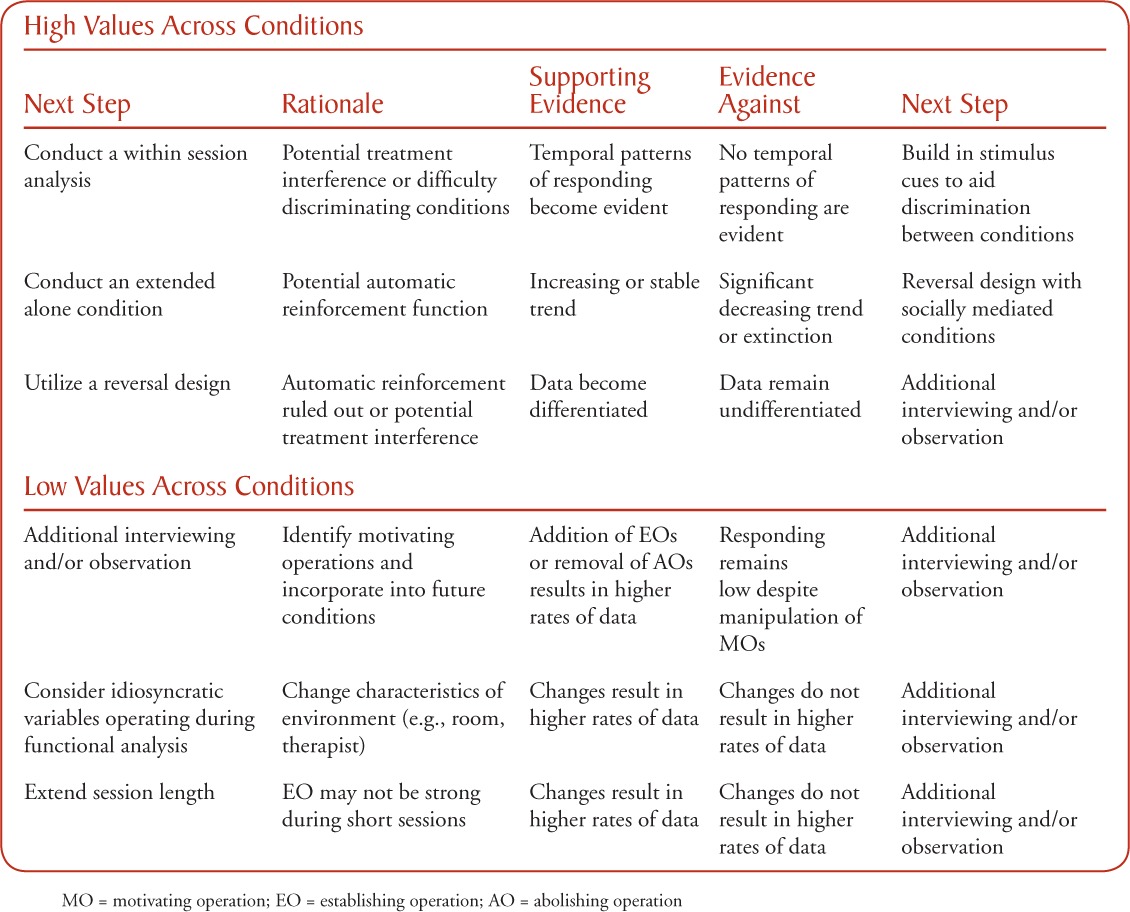

During this phase, participants inspected undifferentiated FA outcomes and described next steps in writing. Each response earned 1 point for each of the following elements: a description of a next step (see Table 3 for sample answers), a rationale for that next step, a description of the evidence that would support the participant's hypothesis about why the data were undifferentiated, a description of the evidence against that hypothesis, and a description of what to do if the next step did not lead to identification of a function. Table 3 contains a sample of next steps that were considered accurate; the list is not comprehensive, and any response that satisfied the criteria above received full credit. The maximum score per session was 10 points (5 points per graph, two graphs per set), and each score was converted to a percentage. The mastery criterion was 95% accuracy or higher for three consecutive sets of undifferentiated graphs.
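The five-element rubric can be represented as a simple checklist per graph. This sketch assumes each element is judged present or absent (whole points only, as described); the element labels are paraphrases, and the example responses are hypothetical. Because only whole points are awarded, a set of two graphs reaches 95% only with all 10 points.

```python
from typing import Mapping, Sequence

# The five scored elements of a response (1 point each); labels paraphrased.
ELEMENTS = (
    "next step",
    "rationale for the next step",
    "evidence supporting the hypothesis",
    "evidence against the hypothesis",
    "plan if no function is identified",
)


def score_response(present: Mapping[str, bool]) -> int:
    """Points (0 to 5) for one graph: 1 point per element the response contains."""
    missing = [e for e in ELEMENTS if e not in present]
    if missing:
        raise ValueError(f"unscored elements: {missing}")
    return sum(1 for e in ELEMENTS if present[e])


def score_set(responses: Sequence[Mapping[str, bool]]) -> float:
    """Percentage of available points across the graphs in one set."""
    if not responses:
        raise ValueError("no responses to score")
    max_points = len(ELEMENTS) * len(responses)
    return 100.0 * sum(score_response(r) for r in responses) / max_points


full = {e: True for e in ELEMENTS}
no_counterevidence = {**full, "evidence against the hypothesis": False}
print(score_set([full, no_counterevidence]))  # 90.0
```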

Table 3.

Examples of Acceptable Next Steps When Managing Undifferentiated Functional Analysis Data

Selecting interventions.

Each session of this phase of training involved written essay responses to three scenarios. The scenarios remained the same across sessions, and the maximum score per session was 15 points (5 points per scenario). Responses were scored using a rubric (go to the supplemental materials webpage for a copy of the rubric). The rubric contains sample responses that would receive credit, but not all accurate responses are listed. To illustrate the use of the rubric, consider a child whose food stealing is maintained by tangible reinforcers. A participant might describe a differential reinforcement of alternative behavior procedure in which requests for food are granted and attempts to steal food are blocked. This response would receive full credit because (a) a function-based treatment was selected to increase a functional alternative behavior and to decrease problem behavior and (b) the procedure was described using a principle of behavior (e.g., reinforcement) or its common name from the behavior-analytic literature (i.e., functional communication training). A percentage accuracy score was derived for each session by dividing the participant's total points across the three scenarios by 15 and multiplying the result by 100. Mastery was defined as at least 95% accuracy for three consecutive sessions.
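The percentage formula above, and the smallest total that satisfies the 95% criterion, can be computed as follows. The half-point step is an assumption, inferred from the 0.5-point example given in the interobserver agreement section; exact fractions are used so the threshold (95% of 15 = 14.25 points) is not affected by floating-point rounding.

```python
from fractions import Fraction
from typing import Sequence

MAX_PER_SCENARIO = 5
N_SCENARIOS = 3
MAX_POINTS = MAX_PER_SCENARIO * N_SCENARIOS  # 15


def percent_accuracy(scenario_points: Sequence[float]) -> float:
    """Total points across the three scenarios, divided by 15, times 100."""
    if len(scenario_points) != N_SCENARIOS:
        raise ValueError("expected one score per scenario")
    if any(not 0 <= p <= MAX_PER_SCENARIO for p in scenario_points):
        raise ValueError("each scenario is worth 0 to 5 points")
    return sum(scenario_points) / MAX_POINTS * 100


def minimum_passing_total(step: Fraction = Fraction(1, 2),
                          criterion: Fraction = Fraction(95, 100)) -> Fraction:
    """Smallest attainable total (a multiple of `step`, assumed) reaching the criterion."""
    needed = criterion * MAX_POINTS   # 57/4, i.e., 14.25 points for 95%
    steps = -(-needed // step)        # ceiling division on exact fractions
    return steps * step


print(round(percent_accuracy([5, 4.5, 5]), 1))  # 96.7
print(minimum_passing_total())                 # 29/2, i.e., 14.5 points
```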

Interobserver Agreement

A subset of at least 24% of baseline, training, and generalization sessions from each participant and training phase was randomly selected for review by a second, independent observer. Permanent products were assessed (video recordings for FA sessions, written responses for the other phases).

Trial-by-trial interobserver agreement (IOA) was assessed for accuracy, and interval-by-interval IOA for errors of commission, during 24% of FA sessions. For the accuracy checklist, an agreement was defined as both raters scoring a response as present, or both raters scoring it as omitted, for the same trial. For errors of commission, an agreement was defined as both raters scoring an error of commission as present, or both raters scoring it as absent, for the same interval. Across participants, mean IOA during FA sessions was 96% (session range, 81% to 100%) for accuracy and 99% (session range, 90% to 100%) for errors of commission.

Trial-by-trial IOA was computed for 31% of graph-interpretation sessions. Each graph in a set was treated as a trial, and IOA was calculated by dividing the number of agreements by 12 and multiplying by 100. Mean IOA across sessions was 100% for graph interpretation for all participants.

Trial-by-trial IOA was also calculated across participants for 36% of sessions in the responding to undifferentiated data condition and 33% of the selecting interventions sessions. Each step in the scoring rubrics was deemed a trial. An agreement was defined as both raters providing equivalent scores for each step in the rubric. For example, if one rater gave a score of 0.5 points for the first step in the selecting interventions scoring rubric, and the second rater awarded a score of 0.5, this was considered an agreement. Mean IOA across sessions was 99% (session range, 89% to 100%) for the undifferentiated FA data sessions and 93% (session range, 73% to 100%) for selecting interventions across all participants.
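All of the IOA measures described above reduce to the same per-trial comparison. The sketch below assumes the conventional formula, agreements / (agreements + disagreements) × 100; the article spells out the divisor only for the graph sets (12), where this formula gives the same result. The data in the usage lines are hypothetical.

```python
from typing import Sequence


def agreement_percent(rater_a: Sequence[object], rater_b: Sequence[object]) -> float:
    """Trial-by-trial (or interval-by-interval) IOA:
    agreements / (agreements + disagreements) x 100."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of trials")
    agreements = sum(a == b for a, b in zip(rater_a, rater_b))
    disagreements = len(rater_a) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Accuracy checklist: each item scored present (True) or omitted (False).
print(agreement_percent([True, True, False, True], [True, True, True, True]))  # 75.0
# Graph interpretation: 12 letters per set, so agreements are divided by 12.
print(round(agreement_percent(list("abcdefghijkl"), list("abcdefghijkb")), 1))  # 91.7
# Rubric steps: an agreement means identical point values (e.g., 0.5 and 0.5).
print(agreement_percent([1, 0.5, 0, 1], [1, 0.5, 0.5, 1]))  # 75.0
```

The same function serves interval-by-interval agreement for errors of commission if each rater's record is a list of scored/unscored intervals.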

Procedural Integrity

Procedural integrity was assessed during 24% of FA sessions across participants, phases, and training and generalization sets to determine whether the experimenter performed the scripted responses accurately. Sessions were scored from video recordings. The experimenter received credit for a response if it was performed within 3 s of its scheduled time on the script. Mean procedural integrity across sessions for this component of the study was 99.6% (session range, 94% to 100%), indicating that the experimenter performed target and distracter responses with high accuracy.
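The 3-s timing rule can be sketched as below. Two assumptions are labeled: the window is treated as symmetric (a response up to 3 s early or late counts), since the article does not say whether early responses earned credit, and observed times are assumed to be read from video to sub-second precision. The times in the usage line are hypothetical.

```python
from typing import Optional, Sequence


def integrity_percent(scheduled_s: Sequence[float],
                      observed_s: Sequence[Optional[float]],
                      window_s: float = 3.0) -> float:
    """Percentage of scripted responses performed within `window_s` of their
    scheduled time (symmetric window, assumed); None marks an omitted response."""
    if len(scheduled_s) != len(observed_s) or not scheduled_s:
        raise ValueError("need one observation per scheduled response")
    on_time = sum(
        1 for sched, obs in zip(scheduled_s, observed_s)
        if obs is not None and abs(obs - sched) <= window_s
    )
    return 100.0 * on_time / len(scheduled_s)


# Hypothetical excerpt of a script: four scheduled experimenter responses.
print(integrity_percent([10, 40, 70, 100], [11.5, 44, None, 100]))  # 50.0
```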

Procedural integrity was also assessed from video recordings for 33% of the initial 30-min training sessions and for 31% to 33% of the feedback sessions for each of the four training conditions, across participants. For initial training sessions, procedural integrity was calculated as the percentage of steps completed by the trainer (e.g., covering each of the Hagopian et al. [1997] rules, describing the essential elements of selecting function-based treatments). For feedback sessions, it was calculated by scoring whether the trainer completed essential components of the feedback process, such as praising correct responses, describing incorrect responses, and suggesting ways to improve incorrect responses. Procedural integrity was 100% for both initial training and feedback sessions.

Design and Procedures

The effects of training on FA component skills were assessed using a concurrent multiple-baseline design across participants. Each participant was in baseline for a predetermined, randomly assigned period of time. A participant was not required to master one skill set (e.g., interpreting graphs) prior to receiving instruction for another skill set (e.g., managing undifferentiated FA data). Given the resources required to conduct this study concurrently with individuals working in a school setting, the bulk of the project was completed during a week in which two-thirds of the students had a school vacation.
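A predetermined, randomly assigned baseline length can be implemented by fixing a set of distinct lengths in advance and shuffling them across participants. The sketch below is hypothetical: the article does not report the candidate lengths or the randomization procedure. Distinct lengths are required so that training begins at staggered points, which is what allows a multiple-baseline design to show experimental control.

```python
import random
from typing import Dict, Optional, Sequence


def assign_baseline_lengths(participants: Sequence[str],
                            lengths: Sequence[int],
                            seed: Optional[int] = None) -> Dict[str, int]:
    """Randomly pair each participant with one predetermined baseline length."""
    if len(participants) != len(lengths):
        raise ValueError("need exactly one baseline length per participant")
    if len(set(lengths)) != len(lengths):
        raise ValueError("lengths must differ so that training onsets are staggered")
    rng = random.Random(seed)
    shuffled = rng.sample(list(lengths), k=len(lengths))
    return dict(zip(participants, shuffled))


# Hypothetical lengths, in session blocks, fixed before the study begins.
print(assign_baseline_lengths(["Mary", "Bill", "Amy"], [3, 5, 7], seed=1))
```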

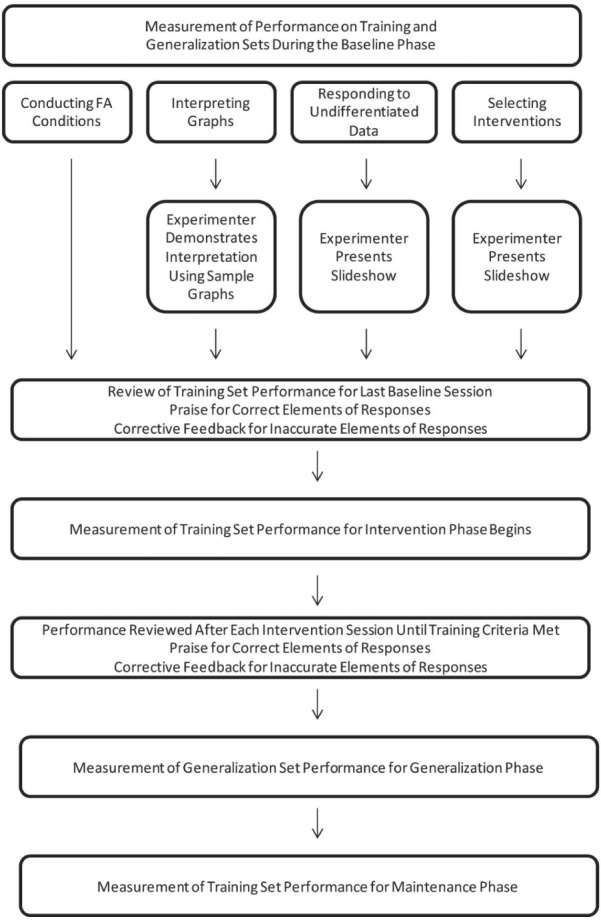

Figure 1 displays an outline of the training progression. We began by measuring the baseline performance level across FA skills with each participant. The training package for conducting FA sessions included an educational presentation, repeated rehearsal opportunities, and performance feedback. The educational presentation involved an oral review of the purpose of each test condition in relation to the control condition and description of a task analysis of the essential components of each FA condition. The trainee had access to a typed handout of each task analysis during the presentation. Training for interpreting graphs included the aforementioned components plus modeling (i.e., behavioral skills training; Miltenberger et al., 2004) of how to apply the method described by Hagopian et al. (1997). Finally, generalization probes were conducted prior to and following training, and maintenance checks were performed 3 months after training concluded.

Figure 1.

Sequence of response measurement and participant training during the study.

Each component skill (i.e., conducting FA sessions, interpreting graphs, responding to undifferentiated FA data, and selecting interventions) was measured using a training set and a generalization set. The second author conducted all graph-interpretation sessions in his office, and the lead author assessed the other skills in a separate office. All skills were assessed twice a day (once in the morning and once in the afternoon) with each participant. The order of assessments varied across participants depending on the availability of the experimenters.

Conducting FA Sessions

Baseline.

Participants were not provided with any instruction or preparatory materials during baseline session blocks. A session block included one session each of the attention, demand, and play conditions, conducted in a fixed order across blocks. On entering the training room, participants were directed to a desk that had been cleared of all materials except those that might be relevant to conducting an FA: academic materials (i.e., coins) for the demand condition, leisure materials (i.e., figurines) for the play and attention conditions, a book for the participant to read during the attention condition, and two stopwatches. Participants were directed to a piece of paper on the desk that read, “Below are some materials that you might use during an FA. We will be starting with the attention condition. Please conduct the condition in the traditional manner, as initially outlined by Iwata et al. (1982/1994). Each condition should last five minutes.” Another piece of paper located under the coins read, “Coin ID: Arrange the quarter, dime, and nickel in front of the student and state, ‘Point to the quarter.’” Finally, a piece of paper located under the figurines read, “Toys.”

The experimenter was seated at a nearby table and followed a standardized script for the three FA conditions. Six unique scripts were constructed (three for the training set, three for the generalization set) and each script contained nine target behaviors and eight distracter behaviors that the experimenter exhibited (go to http://www.abainternational.org/Journals/bap_supplements.asp for a sample script). Generalization set scripts included different target and distracter responses that occurred in a different temporal sequence than the training set scripts. A distracter response was similar to the target responses, but it was not specifically identified in the operational definition of the target behavior. For example, if target behaviors included self-injury except for knee banging, instances of knee banging were scheduled as distracter behaviors. The experimenter had the script discreetly in his view during each session so he knew when to exhibit certain behaviors.
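One way to represent and generate such scripts is shown below. This is a hypothetical sketch, not how the authors built their scripts (the article does not describe the construction process): it places nine target and eight distracter behaviors at distinct onset times on a 10-s grid within a 5-min session, so that no two events fall within each other's 3-s scoring windows, and uses a separate random draw for each script to produce a different temporal sequence.

```python
import random
from dataclasses import dataclass
from typing import List

SESSION_S = 300            # 5-min condition
N_TARGET, N_DISTRACTER = 9, 8


@dataclass(frozen=True)
class ScriptEvent:
    time_s: int            # when the experimenter emits the behavior
    kind: str              # "target" or "distracter"


def make_script(seed: int, slot_s: int = 10, margin_s: int = 10) -> List[ScriptEvent]:
    """Draw 17 distinct onset times on a grid of `slot_s` seconds (so no two
    events fall within each other's 3-s scoring windows) and randomly order
    the target and distracter behaviors across those times."""
    rng = random.Random(seed)
    slots = list(range(margin_s, SESSION_S - margin_s + 1, slot_s))
    n_events = N_TARGET + N_DISTRACTER
    if n_events > len(slots):
        raise ValueError("not enough time slots for all scripted events")
    times = sorted(rng.sample(slots, n_events))
    kinds = ["target"] * N_TARGET + ["distracter"] * N_DISTRACTER
    rng.shuffle(kinds)
    return [ScriptEvent(t, k) for t, k in zip(times, kinds)]


training_script = make_script(seed=1)
generalization_script = make_script(seed=2)  # separate random draw for the generalization set
```

The specific behaviors (e.g., which topographies serve as distracters) would still have to be written by hand for each script; only the timing and ordering are randomized here.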

Training.

Before teaching participants how to conduct FA sessions, the lead author interviewed each participant to determine whether they had done any recent studying in preparation for the project. All participants denied reviewing materials in preparation for the study but indicated that they had read the Iwata et al. (1982/1994) article during their graduate coursework. They also reported that during baseline they had attempted to conduct sessions in a manner consistent with its methodology.

Following this interview, the experimenter gave feedback on the participant's last baseline session block and provided instructions on implementing FA sessions accurately: discussing the critical elements of each condition, reviewing a task analysis for each condition, and reviewing the accuracy checklist with the participant, highlighting any errors of commission that had occurred. Instructions were reviewed and performance feedback was given in this manner after each session block. The experimenter also answered participant questions about their performance.

Interpreting Graphs

Baseline.

Participants were provided with 24 multielement FA graphs for interpretation (12 graphs in the training set, 12 graphs in the generalization set). Each graph contained a data path with 10 data points for each of the five FA conditions: (a) attention; (b) demand; (c) play; (d) tangible; and (e) alone. Initially, a set of 100 graphs was created by the second author using hypothetical data. This set was provided to the lead author to determine the function of the behavior based upon visual analysis of the data paths. A subset of graphs was selected based upon concordance between the lead author's analysis of function and the function determined using the Hagopian et al. (1997) criteria. The participant was asked to select the function(s) of behavior from the 12-option choice bank for each graph. Each function or combination of functions was represented a single time in each set of 12 graphs, but participants were not informed that this was the case. Participants were given a worksheet labeled with the numbers 1–12 followed by a blank field; they were asked to write in the correct choice from the bank (each choice represented by a letter). Participants were also told each answer could be used more than once.
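The selection step described above (keep only graphs on which visual analysis and the structured criteria agree, then represent each of the 12 options exactly once per set) can be sketched as follows. The input format, the random draw among concordant graphs, and the final shuffle are assumptions for illustration; the Hagopian et al. (1997) criteria themselves are not implemented here, and their output is simply passed in as a label.

```python
import random
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple

OPTIONS = "abcdefghijkl"   # letters of the 12-option choice bank


def build_graph_sets(graphs: Iterable[Tuple[str, str, str]],
                     n_sets: int = 2,
                     seed: Optional[int] = None) -> List[List[str]]:
    """graphs: (graph_id, visual_inspection_label, structured_criteria_label).
    Keeps only concordant graphs, then draws one graph per option for each set."""
    rng = random.Random(seed)
    pool: Dict[str, List[str]] = defaultdict(list)
    for graph_id, visual, criteria in graphs:
        if visual == criteria:                 # concordance requirement
            pool[visual].append(graph_id)
    sets: List[List[str]] = [[] for _ in range(n_sets)]
    for option in OPTIONS:
        if len(pool[option]) < n_sets:
            raise ValueError(f"too few concordant graphs for option {option!r}")
        # sample without replacement, so no graph appears in two sets
        for graph_set, graph_id in zip(sets, rng.sample(pool[option], n_sets)):
            graph_set.append(graph_id)
    for graph_set in sets:
        rng.shuffle(graph_set)                 # hide the one-per-option structure
    return sets
```

For this study, `n_sets=2` would yield the training and generalization sets of 12 graphs each.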

Training.

Following the last session of baseline, a 1-hr training session was conducted with each participant. During this initial training session, participants were given a worksheet summarizing the steps outlined by Hagopian et al. (1997; go to the supplemental materials webpage for a copy of the worksheet). Next, the second author modeled application of the structured criteria using a sample of FA graphs that were not included in the training and generalization sets. After the experimenter highlighted each of the Hagopian et al. rules (pp. 324–325) and presented a step-by-step worksheet related to those rules, participants were provided with the training set graphs and asked to select the correct function(s) for each graph using the multiple-choice bank. Immediately after completing the set of 12 graphs, the second author reviewed the participant's performance using the worksheet. Participants were not required to complete the worksheet for each graph, but feedback included praise for correct responding and step-by-step modeling with the worksheet when participants nominated an incorrect function. Participants were also permitted to ask questions about their performance.

Responding to Undifferentiated FA Data

Baseline.

Participants were presented with two sets (one training set, one generalization set) of two FA graphs. Each set contained one graph with low to zero rates of undifferentiated problem behavior and one graph with high rates of undifferentiated problem behavior. The training set included one graph in which problem behavior never occurred in any condition and one graph in which the rate of problem behavior was between 5 and 8 responses per min across conditions. The generalization set included one graph with problem behavior occurring at 0.5 to 1.5 responses per min across conditions (depicting low rates of behavior) and one graph with problem behavior occurring at 9 to 10 responses per min (depicting high rates of behavior). Participants were given a piece of paper that stated, “Please take a look at the graph below. These data from your extended multielement FA are undifferentiated. What should you do next?” Participants were given 30 min to provide a written response on a separate piece of paper for each graph.

Training.

Following the baseline phase, the lead author presented a 30-min computer slideshow to each participant. Part of the initial training material on high rates of undifferentiated FA data was adapted from Vollmer, Marcus, Ringdahl, and Roane (1995). Each strategy from Vollmer et al. was discussed in the context of actual FA data collected at the current agency, along with examples of when each strategy should be implemented. Strategies included gathering or revisiting indirect and descriptive assessment results as well as considering the arrangement of conditions. For example, if target behavior occurred at a high rate toward the end of one session and early in the next session, carryover between conditions might be suspected. By contrast, if behavior occurred at a high rate in the alone condition, the practitioner should consider conducting an extended alone condition.

For low or zero rates of undifferentiated FA data, the experimenter described additional steps that could be taken to clarify the function of behavior and the conditions under which each step might be chosen (Hanley, 2012; Hanley et al., 2003). For example, if a particular nontarget behavior, such as self-restraint, was observed during the FA, the participant could conduct a pairwise comparison in which access to self-restraint is provided noncontingently in a control condition and contingent on problem behavior in a test condition. Alternatively, if an initial FA yielded zero rates of target behavior, additional interviews with teachers or further direct observation were recommended. Each of these steps (see Table 3) was reviewed, and participants were able to ask questions during the presentation.

After the initial training session, the participant's training set essay responses were reviewed, praise was delivered for correct elements of a response, and corrective feedback was given for incorrect or omitted aspects of a response. This feedback process was conducted orally with the scoring rubric present so the participants could see which elements they responded to correctly and which components were incorrect or omitted. Participants were also permitted to ask questions about their performance. Following feedback, each participant's performance was measured in the same manner as baseline.

Selecting Interventions

Baseline.

Participants were given six unique scenarios to consider (three training set scenarios, three generalization set scenarios). Each set contained one scenario with a single-function behavior, and two scenarios with dual-function behaviors. One scenario, for example, read, “You have recently conducted a FA and determined that Allan's bolting behavior is maintained by escape from loud noises and tangible reinforcement (he runs to a play room with toys in it). In the space below, please describe an intervention or interventions you might select to address this behavior.” Participants were given 30 min to respond to each set of three scenarios with written responses. The scenarios were presented in the same order each session.

Training.

A 30-min training session was conducted individually with each participant. The first author used a computer slideshow to highlight the functions of behavior (attention, escape, tangible, and automatic reinforcement) and to describe function-based interventions. Participants were trained to write a description not only of how to reduce problem behavior but also of how they would teach functional alternative behaviors. Participants were also instructed to link their descriptions of procedures to principles of behavior or to use a common term from the behavior-analytic literature (e.g., functional communication training). In addition, the experimenter reviewed four sample vignettes (different from those in the training and generalization sets), and participants practiced selecting interventions for each vignette. Praise was given for correct aspects of their responses, and corrective feedback was given for incorrect aspects.

Immediately following the computer slideshow, the first author reviewed the participant's performance during his or her last baseline session, providing praise for accurate aspects of the responses and corrective feedback for inaccurate aspects. This review was conducted alongside the scoring rubric (go to the supplemental materials webpage for a copy of the rubric), and participants were also permitted to ask questions about their performance. Participants were then given the training set scenarios to complete, and no didactic materials were allowed while they responded. The same process followed each subsequent training session.

Generalization

After each participant met the mastery criterion, their performance was assessed using the generalization sets. The generalization phase concluded when the mastery criteria were met for one session (or one session block for the conducting FA sessions component of training). If performance did not meet the mastery criterion, feedback was provided in the same manner as in the training phase.

Maintenance

Three months after the conclusion of the generalization phase, participants' component skills were assessed using the training sets to measure the maintenance of treatment effects. If treatment gains were not maintained, performance feedback was given and skills were measured using the training set materials until the mastery criteria were met for one session (or one session block for conducting FA sessions). During the time period between the conclusion of the generalization phase and the onset of the maintenance phase, participants did not conduct FA conditions, interpret multielement FA graphs, or manage undifferentiated FA data. As part of their typical job responsibilities, however, the participants did give input to their supervisors regarding the selection of interventions.

Results

As a result of training, all participants met the mastery criterion within eight or fewer sessions per skill. The total training time for the four skills (i.e., conducting FA sessions, interpreting graphs, responding to undifferentiated FA data, and describing function-based treatments) ranged from 10.5 to 12.5 hr.

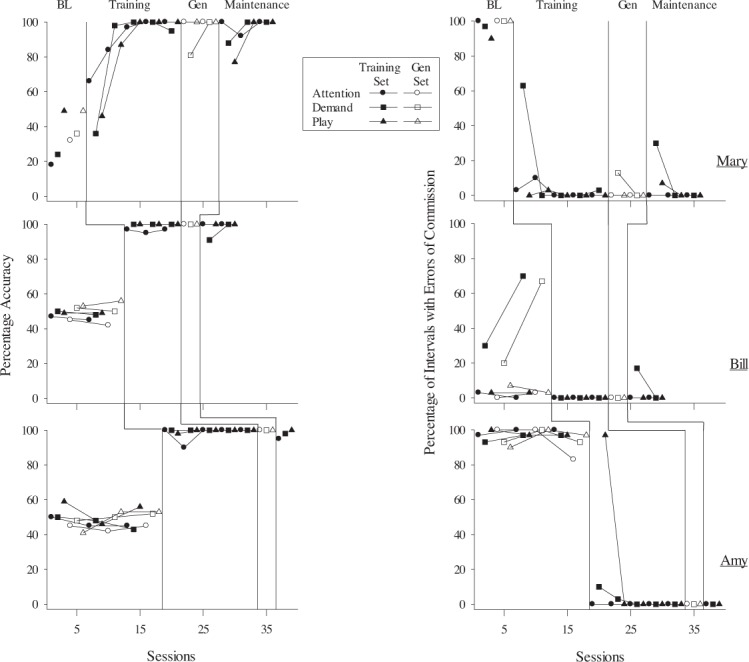

The first column in Figure 2 displays the percentage accuracy for each participant during simulated FA sessions. Baseline accuracy for all participants was low (60% or less) across sets. Following training, accuracy increased immediately, and mastery was achieved in five or fewer sessions for all participants. With one exception, participants' accuracy scores across conditions during the generalization phase were 100%. At the start of one demand condition session, Mary delivered attention contingent on the target behavior and then responded correctly for the remainder of the session. As a result, she scored 80% accuracy in that session, but her accuracy returned to 100% in the next session block.

Figure 2.

Percentage accuracy (column one) and percentage of 10-s partial intervals in which the participants made at least one error of commission (column two) across simulated functional analysis conditions.

The second column in Figure 2 displays the percentage of 10-s partial intervals in which errors of commission occurred during FA sessions. Baseline values for Mary and Amy were high (at least 80% of intervals) and stable across conditions. Bill made very few errors of commission during the attention and play conditions (range, 0% to 10% of intervals) but more in the demand condition (range, 20% to 70% of intervals). Each participant exhibited at least one idiosyncratic pattern of errors. For example, Mary built a barricade out of office chairs during the play condition, which she later stated was intended to eliminate any possibility that the simulated client would receive attention for the target behavior. In the demand condition, Bill did not allow escape following the target behavior; instead, he continued to deliver instructions every few seconds. During the attention condition, Amy tended to provide continuous noncontingent attention throughout the session. When the experimenter exhibited the target behavior, she immediately provided attention relevant to the target behavior but then returned to providing noncontingent attention. During the play condition, Amy presented toys but then began reading a book rather than orienting toward the experimenter. In addition, she responded to some distracter behaviors, such as picking up and replacing toys after the experimenter had swiped them off the table.

During the training phase, all three participants met the mastery criterion for errors of commission in five or fewer sessions. During the post-treatment generalization phase, Bill and Amy made no errors of commission; Mary made errors of commission in 10% of the intervals during the demand condition.
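For readers less familiar with partial-interval recording, the error-of-commission measure can be illustrated with a short calculation. The Python sketch below is hypothetical: the function name, the representation of errors as event times, and the 100-s example session are assumptions for illustration, not the scoring procedure or session length used in this study. An interval is scored once if at least one error occurs anywhere within it, regardless of how many errors occur.

```python
import math


def percent_intervals_with_error(error_times_s, session_length_s, interval_s=10.0):
    """Percentage of partial intervals containing at least one error of commission.

    error_times_s: times (in seconds from session start) at which errors occurred.
    The session is divided into consecutive, non-overlapping intervals of
    interval_s seconds; a final partial interval is counted as an interval.
    """
    n_intervals = math.ceil(session_length_s / interval_s)
    scored = set()  # indices of intervals with at least one error
    for t in error_times_s:
        if 0 <= t < session_length_s:
            scored.add(int(t // interval_s))
    return 100.0 * len(scored) / n_intervals


# Hypothetical 100-s session (ten 10-s intervals) with four errors;
# the errors at 3 s and 7 s fall in the same interval and count once.
print(percent_intervals_with_error([3, 7, 25, 91], 100))  # 30.0
```

Because each interval is counted at most once, the measure reflects how widely errors were distributed across a session rather than how many errors occurred.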

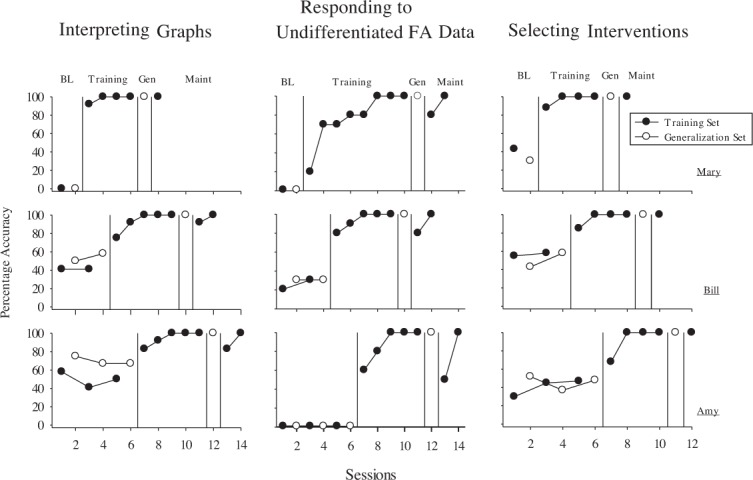

The first column in Figure 3 displays the percentage accuracy for each participant when interpreting multielement FA graphs. During baseline, Mary's performance was lowest, at 0% accuracy, whereas Amy's performance was highest, ranging from 25% to 75% accuracy across the training and generalization sets. During the intervention phase, performance improved quickly, and all participants met the mastery criteria in five or fewer sessions. During the generalization phase, all participants scored 100% accuracy on the generalization set of graphs. At the 3-month follow-up, percentage accuracy indicated that the skill was maintained across participants.

Figure 3.

Percentage accuracy for each set of multielement functional analysis graphs (column one). Twelve graphs were included in each set and 12 multiple-choice options were available for each graph. Column two displays each participant's percentage accuracy during open-ended written responses to graphs with undifferentiated FA data from an extended multielement functional analysis. The third column displays percentage accuracy during open-ended written responses to scenarios that prompted participants to describe function-based interventions based upon functional analysis results.

Figure 3 (second column) displays each participant's accuracy when responding to undifferentiated FA data. Baseline accuracy was 30% or less and stable across sets for all participants. Participants most often indicated that they would continue collecting data using the same conditions or that they would terminate the FA because it had not been useful in determining a function. In response to the undifferentiated FA data with low rates of problem behavior, Mary indicated that she would “stop the FA if there are zero rates, because there is no socially significant challenging behavior to address.” In response to the undifferentiated FA data with high rates, Bill stated that he would terminate the FA and “start with the least intrusive treatment strategy and move up to the higher levels of intrusive treatment as needed.” For both the low- and high-rate data, Amy indicated that she would “use other functional behavior assessment tools to determine the function of the behavior” because the FAs had not been fruitful.

During the post-treatment generalization phase, all participants achieved perfect scores on their essays describing how to manage the undifferentiated FA data depicted in the generalization set graphs. Scores declined at the 3-month follow-up, however: Mary and Bill each scored 80% accuracy, and Amy scored 50%. All participants achieved perfect scores in the next session.

Figure 3 (third column) shows each participant's accuracy scores on the written exercises measuring selection of function-based interventions. Baseline scores ranged from 35% to 60% across sets for all participants. During the intervention phase, participants met the mastery criterion after the educational presentation and four training sessions. Accuracy remained at 100% during the post-treatment generalization phase and the 3-month follow-up sessions.

Discussion

Previous research has demonstrated that staff members can be taught to conduct FA sessions in a relatively brief period of time (Iwata et al., 2000; Moore et al., 2002), and one previous study demonstrated methods for teaching interpretation of multielement FA graphs (Hagopian et al., 1997). However, designing function-based treatments involves a larger array of component skills. Although conducting FAs and using the results to guide treatment are cornerstone skills for behavior analysts, research on how to teach these skills to practitioners is limited. The current study replicates and extends previous studies by evaluating four essential skills relevant to developing function-based treatments: manipulating antecedents and consequences in three FA conditions, interpreting multielement FA graphs, responding to undifferentiated FA data, and selecting function-based treatments based upon FA results. Baseline measures indicated that all of our participants needed training in these skills. During the training phase, participants quickly acquired the skills, and the acquired skills generalized in most cases. Some skills (e.g., responding to undifferentiated FA data), however, had diminished by the 3-month follow-up. These results suggest that periodic retraining may be necessary.

Several limitations of our study warrant further discussion. For example, our participants had advanced training in behavior analysis and were compensated for their participation. These factors may have produced more robust findings than could be expected with trainees who do not receive additional compensation or who have less experience in the field of behavior analysis. In addition, our training and our definition of correct responses when conducting FA sessions were limited to the procedures outlined by Iwata et al. (1982/1994), which may limit generality given the many variations that have been described in the literature since that initial publication (e.g., Bloom, Iwata, Fritz, Roscoe, & Carreau, 2011; Najdowski, Wallace, Ellsworth, MacAleese, & Cleveland, 2008). We were interested, however, in developing a training model for individuals who are not well versed in FA methodologies and thus saw training on the original conditions as an appropriate starting point.

A limitation of the conducting FA sessions component of our study was that we did not include an alone or a tangible condition, although these conditions were included in the other components of our training. Moreover, we did not include training on the steps prescribed prior to conducting FA conditions, such as indirect or descriptive assessment methods. For example, practitioners should interview caregivers to determine demands likely to evoke problem behavior. On a related note, evaluation of performance with actual clients would be beneficial in practice or future research because skills acquired under analogue training conditions (e.g., simulated FA sessions with the experimenter) may not always generalize to applied situations.

With regard to interpreting graphs, the method outlined by Hagopian et al. (1997) was designed for multielement FAs with 10 data points per condition. Practitioners interested in using this method for training purposes, however, may need to apply the structured criteria to FA data sets with more or fewer than 10 data points per condition. Many of the rules can be applied regardless of the number of data points (e.g., “Is the alone condition the highest differentiated condition?”), but other rules require adjustment for data sets of different sizes (see the supplemental materials webpage for adjustment considerations). Given the complexity of the structured criteria method, future trainers and evaluators may consider alternative procedures for teaching practitioners to interpret functional analysis results.
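To make the adjustment issue concrete, the Python sketch below implements a single rule of the structured criteria, based on a reading of Hagopian et al. (1997) that should be checked against the original article: criterion lines are drawn one standard deviation above and below the mean of the control (play) condition, and a test condition is treated as differentiated when the number of its data points above the upper criterion line (UCL), minus the number below the lower criterion line (LCL), is at least half of its data points (5 of 10 in the original 10-point format). The use of the sample standard deviation, the scaling of the threshold to half the points for data sets of other sizes, the function names, and the example data are all assumptions. The sketch omits the method's other rules and is not a substitute for the published criteria.

```python
from statistics import mean, stdev


def criterion_lines(control):
    """Return (UCL, LCL): control-condition mean plus and minus one SD.

    Assumption: the sample standard deviation (n - 1) is used.
    """
    m = mean(control)
    sd = stdev(control)
    return m + sd, m - sd


def is_differentiated(test, control):
    """Hypothetical check of one differentiation rule for a test condition.

    Counts test points above the UCL, subtracts points below the LCL, and
    compares the result with half the number of test points. With 10 points
    this is a threshold of 5; scaling it for other lengths is an assumption.
    """
    ucl, lcl = criterion_lines(control)
    above = sum(1 for y in test if y > ucl)
    below = sum(1 for y in test if y < lcl)
    return above - below >= len(test) / 2


# Hypothetical responses per minute, 10 sessions per condition.
play = [0, 1, 0, 2, 1, 0, 1, 0, 2, 1]
attention = [3, 4, 2, 5, 4, 3, 1, 4, 5, 3]
demand = [0, 1, 0, 2, 0, 1, 0, 0, 1, 0]

print(is_differentiated(attention, play))  # True
print(is_differentiated(demand, play))     # False
```

In this example, 9 of the 10 attention points fall above the UCL, whereas the demand condition has 1 point above the UCL and 6 below the LCL. For data sets of other sizes, scaling the threshold to half the points is only one possible adjustment, and others may be more appropriate.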

For selecting interventions, our scoring system may be viewed as overly stringent because full credit required descriptions of both how to reduce problem behavior and how to increase a functional alternative behavior. Thus, a participant who described only an evidence-based antecedent manipulation to reduce problem behavior would have received only half credit. We would contend that a more complete treatment involves teaching the client to respond in a socially appropriate manner (e.g., in preparation for situations in which modifying the environment is not possible). That said, trainers teaching this skill set may wish to adjust the scoring procedures to reflect their own commitments in treating problem behavior.
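The two-component scoring described above can be expressed as a simple calculation. The Python sketch below uses a simplified, hypothetical rubric: it assumes that each scenario is worth one point, split equally between describing how to reduce problem behavior and describing how to increase a functional alternative behavior. The class and function names are illustrative, and the scoring procedures used in the study may include additional detail.

```python
from dataclasses import dataclass


@dataclass
class ScenarioResponse:
    """Hypothetical coding of one written response to a treatment scenario."""
    reduces_problem_behavior: bool          # e.g., an evidence-based antecedent manipulation
    increases_functional_alternative: bool  # e.g., teaching a socially appropriate response


def scenario_credit(response):
    """Half credit for each required component; full credit requires both."""
    credit = 0.0
    if response.reduces_problem_behavior:
        credit += 0.5
    if response.increases_functional_alternative:
        credit += 0.5
    return credit


def percentage_accuracy(responses):
    """Mean credit across scenarios, expressed as a percentage."""
    if not responses:
        raise ValueError("at least one response is required")
    return 100.0 * sum(scenario_credit(r) for r in responses) / len(responses)


responses = [
    ScenarioResponse(True, True),    # both components: full credit
    ScenarioResponse(True, False),   # antecedent manipulation only: half credit
    ScenarioResponse(False, False),  # neither component: no credit
    ScenarioResponse(True, True),
]
print(percentage_accuracy(responses))  # 62.5
```

A trainer who preferred to weight the two components differently would need to change only `scenario_credit`.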

Conducting an FA involves many more skills than were measured in this study. For example, practitioners must consider safety and other logistical issues before conducting an FA (e.g., where it will be conducted and who will implement the conditions). In addition, practitioners must define a target behavior, decide on a measurement system, select an experimental design, determine the order of FA conditions and how that order might influence interpretation of results, and graph the data. In summary, a host of complex skills underlie the development, administration, and interpretation of an FA. This study represents a preliminary step toward improving the independent completion of FA-related tasks by qualified staff members.

Footnotes

The authors thank Gregory P. Hanley for his valuable suggestions during the formulation of this project and Nicole Heal for her feedback during the preparation of this manuscript.

Action Editor: Amanda Karsten

References

- Allen K. A., Hart B., Buell J. S., Harris F. R., Wolf M. M. Effects of social reinforcement on isolate behavior of a nursery school child. Child Development. 1964;35:511–518. doi: 10.1111/j.1467-8624.1964.tb05188.x.

- Bloom S. E., Iwata B. A., Fritz J. N., Roscoe E. M., Carreau A. B. Classroom application of a trial-based functional analysis. Journal of Applied Behavior Analysis. 2011;44:19–31. doi: 10.1901/jaba.2011.44-19.

- Carr E. G. The motivation of self-injurious behavior: A review of some hypotheses. Psychological Bulletin. 1977;84:800–816.

- Erbas D., Tekin-Iftar E., Yucesoy S. Teaching special education teachers how to conduct functional analysis in natural settings. Education and Training in Developmental Disabilities. 2006;41:28–36.

- Hagopian L. P., Fisher W. W., Thompson R. H., Owen-DeSchryver J., Iwata B. A., Wacker D. P. Toward the development of structured criteria for interpretation of functional analysis data. Journal of Applied Behavior Analysis. 1997;30:313–326. doi: 10.1901/jaba.1997.30-313.

- Hanley G. P. Functional assessment of problem behavior: Dispelling myths, overcoming implementation obstacles, and developing new lore. Behavior Analysis in Practice. 2012;5:54–72. doi: 10.1007/BF03391818.

- Hanley G. P., Iwata B. A., McCord B. E. Functional analysis of problem behavior: A review. Journal of Applied Behavior Analysis. 2003;36:147–186. doi: 10.1901/jaba.2003.36-147.

- Hastings R. P., Noone S. J. Self-injurious behavior and functional analysis: Ethics and evidence. Education and Training in Developmental Disabilities. 2005;40:335–342.

- Iwata B. A., Dorsey M. F., Slifer K. J., Bauman K. E., Richman G. S. Toward a functional analysis of self-injury. Journal of Applied Behavior Analysis. 1994;27:197–209. doi: 10.1901/jaba.1994.27-197. (Reprinted from Analysis and Intervention in Developmental Disabilities, 2, 3–20, 1982.)

- Iwata B. A., Dozier C. L. Clinical application of functional analysis methodology. Behavior Analysis in Practice. 2008;1:3–9. doi: 10.1007/BF03391714.

- Iwata B. A., Pace G. M., Cowdery G. E., Miltenberger R. G. What makes extinction work: An analysis of procedural form and function. Journal of Applied Behavior Analysis. 1994;27:131–144. doi: 10.1901/jaba.1994.27-131.

- Iwata B. A., Wallace M. D., Kahng S., Lindberg J. S., Roscoe E. M., Conners J., Worsdell A. S. Skill acquisition in the implementation of functional analysis methodology. Journal of Applied Behavior Analysis. 2000;33:181–194. doi: 10.1901/jaba.2000.33-181.

- Miltenberger R. G., Flessner C., Gatheridge B., Johnson B., Satterlund M., Egemo K. Evaluation of behavioral skills training to prevent gun play in children. Journal of Applied Behavior Analysis. 2004;37:513–516. doi: 10.1901/jaba.2004.37-513.

- Moore J. W., Edwards R. P., Sterling-Turner H. E., Riley J., DuBard M., McGeorge A. Teacher acquisition of functional analysis methodology. Journal of Applied Behavior Analysis. 2002;35:73–77. doi: 10.1901/jaba.2002.35-73.

- Najdowski A. C., Wallace M. D., Ellsworth C. L., MacAleese A. N., Cleveland J. M. Functional analyses and treatment of precursor behavior. Journal of Applied Behavior Analysis. 2008;41:97–105. doi: 10.1901/jaba.2008.41-97.

- Pelios L., Morren J., Tesch D., Axelrod S. The impact of functional analysis methodology on treatment choice for self-injurious and aggressive behavior. Journal of Applied Behavior Analysis. 1999;32:185–195. doi: 10.1901/jaba.1999.32-185.

- Phillips K. J., Mudford O. C. Functional analysis skills training for residential caregivers. Behavioral Interventions. 2008;23:1–12.

- Schaeffer H. H. Self-injurious behavior: Shaping “head banging” in monkeys. Journal of Applied Behavior Analysis. 1970;3:111–116. doi: 10.1901/jaba.1970.3-111.

- Skinner B. F. Science and human behavior. New York: Macmillan; 1953.

- Vollmer T. R., Marcus B. A., Ringdahl J. E., Roane H. S. Progressing from brief assessments to extended functional analyses in the evaluation of aberrant behavior. Journal of Applied Behavior Analysis. 1995;28:561–576. doi: 10.1901/jaba.1995.28-561.

- Wallace M. D., Doney J. K., Mintz-Resudek C. M., Tarbox R. S. Training educators to implement functional analysis. Journal of Applied Behavior Analysis. 2004;37:89–92. doi: 10.1901/jaba.2004.37-89.