Abstract

Background. Oligonucleotide microarrays allow for high-throughput gene expression profiling assays. The technology relies on the fundamental assumption that observed hybridization signal intensities (HSIs) for each intended target, on average, correlate with their target's true concentration in the sample. However, systematic, nonbiological variation from several sources undermines this hypothesis. Background hybridization signal has been previously identified as one such important source, one manifestation of which appears in the form of spatial autocorrelation. Results. We propose an algorithm, pyn, for the elimination of spatial autocorrelation in HSIs, exploiting the duality of desirable mutual information shared by probes in a common probe set and undesirable mutual information shared by spatially proximate probes. We show that this correction procedure reduces spatial autocorrelation in HSIs; increases HSI reproducibility across replicate arrays; increases differentially expressed gene detection power; and performs better than previously published methods. Conclusions. The proposed algorithm increases both precision and accuracy, while requiring virtually no changes to users' current analysis pipelines: the correction consists merely of a transformation of raw HSIs (e.g., CEL files for Affymetrix arrays). A free, open-source implementation is provided as an R package, compatible with standard Bioconductor tools. The approach may also be tailored to other platform types and other sources of bias.

1. Background

Microarray technology, a fairly recent yet already well-established and extensively dissected method, allows for the simultaneous quantification of expression levels of entire genomes or subsets thereof [1]. In situ oligonucleotide arrays are by far the most popular type, representing at the time of writing 70% of all arrays deposited in the Gene Expression Omnibus (GEO), a public microarray database, in the last year; of these, 58% are Affymetrix GeneChips [2]. These are designed such that each gene is targeted by multiple perfectly complementary oligonucleotide probes at various locations along its sequence (forming a probe set); copies of each of these probes are covalently linked to a solid surface at a predetermined location on a grid; a labelled RNA sample is allowed to hybridize to each of these probes; and finally a hybridization signal intensity (HSI) is obtained for each probe [3]. The technology relies on the assumption that, on average, HSIs observed in a given probe set correlate with the true concentration of the given mRNA species in the biological sample, that is, the true expression level of the targeted gene. Variations on this architecture exist; for example, tiling arrays, are designed such that probes target contiguous regions of a genome, usually without regard for transcript annotations [4].

Because the objective of such experiments is generally to assess gene expression differences between one or more biological samples, separating biologically interesting variation from all other sources of obscuring variation is of utmost importance [5]; consequently, this has been a major focus of microarray research in the last decade. Whereas random error (i.e., noise) can be estimated via sample variance and cancelled out by some form of averaging, systematic errors introduce biases in the data that cannot be estimated without an independent source of information and cannot be explicitly corrected for without being estimated [6]. As has been shown repeatedly, there are several important sources of systematic errors—notably arising from RNA sample preparation [7]; probe-target binding efficiency [8], specificity [9], and spatial uniformity [10–14]; secondary structure in probes [15] and transcripts [16], and other thermodynamic properties [17] such as GC content [18]; scanner calibration [19]; and algorithmic processing of raw image data [20]—and underestimating their effect on analyses leads to tangible consequences [21].

An initial attempt to address nonuniform “background intensity” was incorporated directly in the design of the GeneChip platform: for each “perfect match” (PM) probe, there is a corresponding “mismatch” (MM) probe which features a single different base [3]. The intention was twofold: to correct for specificity biases, by assuming each PM/MM pair would share nonspecific hybridization signal, while only the PM probe would exhibit specific signal, and to correct for spatial biases, by making each PM/MM pair physically adjacent on the array. In practice, MM probes contain significant specific signal and do not share common nonspecific background with their respective PM probes; in fact, early studies found that approximately one-third of MM intensities are greater than their PM counterpart [22, 23], which is evidently incompatible with their stated purpose. In recent years, MM intensities have largely been ignored, and recent array designs by Affymetrix do not include them [24]. Current popular methods make no attempt to correct for either of these biases.

In order to make data from multiple arrays directly comparable, normalization methods such as locally weighted scatterplot smoothing (LOWESS or loess) [25–28] and Bolstad et al.'s quantile normalization algorithm [27] have been proposed and are currently widely regarded as essential preprocessing steps. The former modifies the HSIs such that a log HSI ratio versus mean log HSI plot becomes locally linear, while the latter forces the HSIs from each array in the experiment to follow the same distribution. It is important to note, then, that neither of these methods attempts to correct for any specific source of obscuring variation, but rather they make a general attempt to craft the raw data from separate arrays such that they become more directly comparable, inevitably discarding information in the process.

It has been noted that the choice of background correction methodology has a significant impact on downstream analysis accuracy [29], which implies that nonspecific hybridization and spatial nonuniformity should not be ignored. We focus here exclusively on the latter; the reader is referred to [30–32] for treatments of the former. A few methods addressing spatial biases in spotted cDNA arrays have been proposed. Dudoit et al. proposed the only such method to gain wide acceptance in the community, which consists of applying the loess-based intensity-dependent bias correction method individually within each print-tip group [25, 26, 33]. Colantuoni et al. proposed to subtract a 2D loess fit of intensities on the array surface [34], while Workman et al. similarly subtract an estimate of local bias based on a 2D weighted moving average with a Gaussian kernel [35], and Wilson et al. fit a loess curve on the MA plot as in Dudoit et al.'s study, but then adjust it by smoothing residuals spatially on the array [36]. Various other methods have also been published [37, 38]. Some methods have been proposed in the case of in situ oligonucleotide arrays as well, although none is commonly used. Reimers and Weinstein proposed to visualize spatial biases by computing the deviation of each probe intensity from a robust average of that probe's intensity across all arrays in the experiment and plotting these values on the array surface [10]; Suárez-Fariñas et al. used these to identify array regions to discard [11]. Upton and Lloyd propose to subtract the smallest intensity around each probe on the array surface [12]. Arteaga-Salas et al. essentially combine the ideas of Reimers and Weinstein and those of Upton and Lloyd to come up with an algorithm: subtract, from each probe intensity, the average local deviation from the average intensity across replicate arrays, that is, an estimate of locally induced, array-specific error [13]. Various other methods have also been published [14], some of which are applicable only to specific platforms, such as CGH arrays [39] and SNP arrays [40].

The currently advocated standard operating procedure with respect to the well-known issue of spatial bias in in situ oligonucleotide array data, when one is used at all, consists of performing quality control steps to identify arrays deemed to be beyond arbitrary acceptability thresholds, and discarding these while leaving others intact [10]. While this may appear to reduce noise on affected probes, it also silently increases noise globally by decreasing replication, and there is comparatively little information on any given gene to begin with [41]. Discarding array regions or even individual probes [10, 11, 42] may alleviate this issue somewhat, though this merely shifts the issue to a lower level. Several studies have concluded that most, if not all, arrays are affected by spatial bias, regardless of platform [14]. We believe that very few of these are likely to be truly unrecoverable; thus, we propose to correct all arrays without prejudice.

We posit that a priori information about sources of systematic variation, in the form of known relationships between probes, can be exploited to identify, quantify, visualize, and effectively correct probe-level systematic errors. Here, we present an algorithm, pyn, to correct for sources of obscuring variation dependent on spatial location of probes on the array. The algorithm works by leveraging the power of expected mutual information found in probe sets with that of unexpected mutual information found in spatially proximate probes.

2. Methods

2.1. Algorithm

Using notation inspired by [22], let Y ijn be the log2-transformed HSI of probe j = 1,…, J n belonging to probe set n = 1,…, N, on array i = 1,…, I. Error estimates for observed intensities can then be expressed as deviation from some given intensity estimator

| (1) |

and we propose the following estimator:

| (2) |

The probe residual, R ijn, is thus simply the deviation of each HSI from the mean observed in its probe set on the same array.

In justifying the use of spike-in and dilution datasets for assessing accuracy, Cope et al. assert that “to estimate bias in measurements, we need truth, in an absolute or relative form, or at least a different, more accurate measurement of the same samples” [6]. We propose two extensions to this view: first, a priori information about relationships between probes provides a form of relative truth; and second, this bias estimate is better used as a correction term than as an accuracy assessment.

Although a given probe residual merely quantifies one HSI's deviation from an estimate and thus contains contributions from many probe-specific biases (e.g., binding efficiency and specificity) and random noise, a sufficiently large pool of probe residuals with similar locations on the array provides a summary of the average bias induced by such a location. Noting that residuals (and accordingly means of residuals) share units and scale with HSIs, we thus simply propose to subtract this estimate of location-induced bias from each HSI to obtain corrected signals:

| (3) |

where

| (4) |

and kNN(jn) returns the k nearest neighbours of probe jn, physically on the array, in terms of Euclidian distance.

It is of theoretical interest to note that, as k increases, the computed estimates of local bias tend to approach a constant as they become less local and approach a global measure on the entire array; in the limiting case, where k → ∑n J n, the correction factor is almost exactly zero. Of practical interest is that this is also the expected behaviour in the case of an ideal array (lacking spatial bias) with a reasonable k.

The optimal choice of k, the only free parameter of our algorithm, is explored in the Results section. When a value is not given in the text, k = 20 is to be assumed.

2.2. Data

In this paper, three typical Affymetrix Human Genome U133A datasets are used to evaluate the proposed algorithm: two datasets obtained from the public microarray repository GEO [2] and a well-known benchmark dataset; these are referred to in the text as “GSE1400,” “GSE2189,” and “spike-in,” respectively. The GSE1400 experiment compares two samples, with three replicates each: RNA associated with membrane-bound polysomes and RNA associated with free polysomes [44]. The GSE2189 experiment assesses the impact of the chemotherapeutic drug motexafin gadolinium relative to an untreated control sample, at three time points, with three replicates for each of these six samples [45]. In the spike-in experiment, 42 transcripts are spiked in at 14 different concentrations, arranged in a Latin Square design across 14 arrays, such that each transcript is spiked in once at each concentration, with three replicates for each array [46]. For practical reasons, some results present data for only one array per dataset; in these cases, the arrays in question are GSM23121, GSM39803, and Expt1_R1, respectively.

2.3. Implementation

An implementation of the proposed spatial correction procedure is provided as part of an R package (“pyn”), with critical parts written in C++ for efficiency. An “AffyBatch” object (“batch”) can be replaced with a corrected version by running the following command within an R session:

> batch <- normalize. pyn (batch)

The procedure runs for approximately one second per array (Intel Core 2 Duo 3.33 GHz) and accepts any valid AffyBatch object, independent of array platform. The user can then proceed with his usual analysis pipeline, for example, “rma” and “limma.” The package also contains some utility functions for generating assessment figures similar to those found in this paper; users are referred to the package's internal documentation for instructions. The package is released under a BSD license and is available at http://lemieux.iric.ca/pyn/.

2.4. Alternative Methods

In this paper, “CPP” and “LPE” refer to the two algorithms proposed by Arteaga-Salas et al. in [13]; implementations provided by Dr. Arteaga-Salas were used as they are, with default parameters. “Upton-Lloyd” refers to the method proposed in [12]; as code was not made available by its authors, we used our own implementation, which is provided for convenience in the R package made available with this paper, as the function “upton.lloyd.2005.” We now briefly describe these three algorithms.

2.4.1. LPE

For each probe on each array in a batch, a value similar to our residual is computed, relating the deviation of the probe's intensity from its median intensity across all arrays. A “code” is then computed for each probe, which identifies the array where this value is the largest and whether it is positive or negative. Then, to determine whether a given location in a batch of arrays is affected by spatial bias, PM and MM probes in a 5 × 5 window are inspected; if an “unusual” number of these exhibit the same code, the location on the array identified by this code is flagged for correction. Finally, for each flagged location, a standardized average of the previously defined deviations within its window is subtracted.

2.4.2. CPP

Residuals are computed for each probe on each array as in LPE, but the authors require in this case for all arrays to be replicates of one another. Each PM intensity is corrected by subtracting its MM counterpart's residual and vice versa. Residuals are scaled before subtraction to address differences in PM and MM distributions.

2.4.3. Upton-Lloyd

From each intensity at a given location on an array, the smallest intensity found in a (2m + 1) × (2m + 1) window is centred on it is subtracted; if the result is negative, it is replaced with zero. The authors find that m = 1 works best.

3. Results

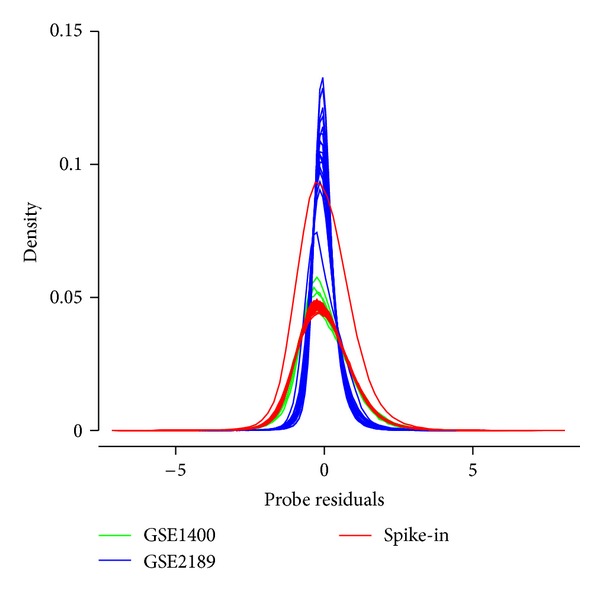

Figure 1 shows the empirical distribution of probe residuals in each of the 66 arrays found in the three datasets described in the Data subsection. In all cases, the residuals appear to follow an approximately normal distribution with sample mean near zero (|μ | <10−17). The parameters of this distribution vary from one array to another, based on their intensity distributions, which notably vary in offset (“background intensity”) and scale (“dynamic range”); however, arrays in a common “batch” usually share these parameters. Thus, identifying outliers in residual distributions within a batch may be useful in identifying arrays significantly affected by spatial bias.

Figure 1.

Empirical distributions of probe residuals R, as defined in the text, with one curve associated with each of the 66 arrays found in the three datasets. In each case, the mean residual is almost zero (|μ | <10−17).

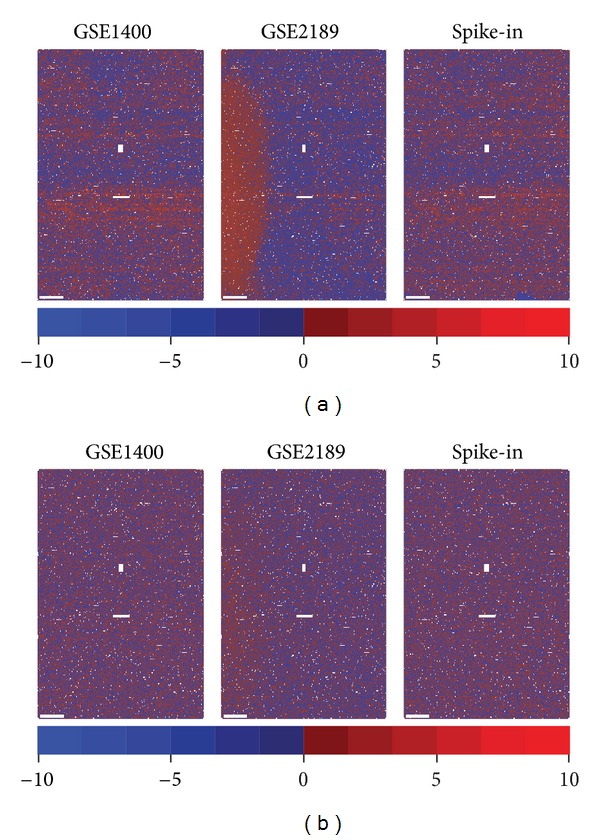

Under the assumption that probes are randomly located on the array, these residuals are expected to be randomly spatially distributed across the array; thus, any spatial patterns must be ascribable to some form of technical error. In practice, we frequently observe various manifestations of such patterns. Figure 2(a) plots the empirical spatial distribution of probe residuals for three arrays as heat maps, allowing for visual, qualitative assessment. GSE1400 is a typical case, in which the only noticeable regularities perceived are a few array-wide, horizontal stripes showing a greater density of either positive or negative residuals; GSE2189 presents a more problematic case, where a large region of the array (left side) exhibits a large cluster of positive residuals; finally, the spike-in dataset reveals similar stripes as in GSE1400, in addition to a small localized artefact showing exclusively negative residuals (bottom right).

Figure 2.

Empirical spatial distribution of probe residuals in original and corrected data. Mapping probe residuals back to their originating physical locations and displaying them as a heat map reveals a variety of spatial artefacts in (a) original data: horizontal stripes (all arrays), a large region of positive residuals (GSE2189, left), and a small region of negative residuals (spike-in, bottom right); (b) after correction (k = 20), all of these artefacts appear to have been eliminated or greatly attenuated. Non-PM locations (MM and control probes) are coloured in white.

The spatial patterns identified in Figure 2 are reminiscent of those previously identified by plotting each probe intensity's deviation from an arbitrary reference array [47], from the average of that probe's intensity in replicate arrays [10], in all arrays in the experiment [11, 13], or in all arrays found in GEO [14], and by plotting the residuals (or some variation thereof) of a probe-level model-based method [48, 49]. However, previous work has been limited to qualitative and subjective quality control purposes, with few exceptions to the best of the authors' knowledge [12, 13]. We propose to exploit these values in a background correction algorithm. Moreover, our definition of probe residuals, not based on other arrays, allows for the indiscriminate identification of both systematic (e.g., batch effects, scanner effects) and array-specific spatial biases (e.g., sample spatial nonuniformity, smudges, scratches).

Figure 2(b) shows the spatial distribution of probe residuals on the three arrays after correction by pyn. Qualitatively, all three types of artefacts previously identified appear to have been eliminated or at least severely reduced, thereby marginalizing spatial dependence between residuals.

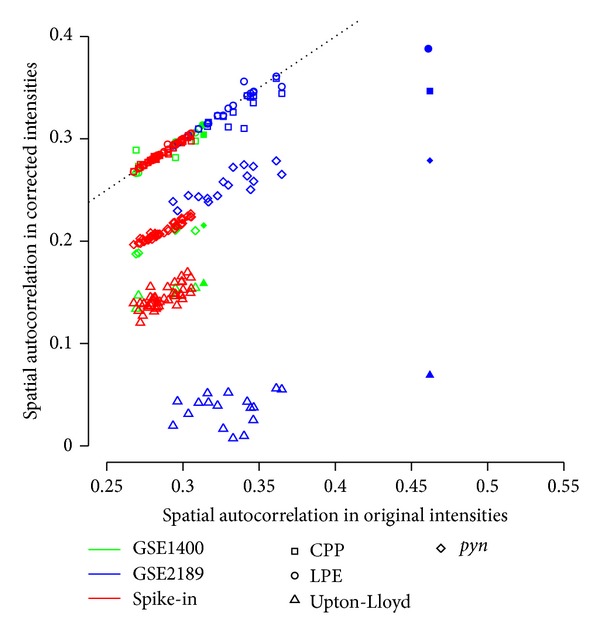

This “spatial dependence” can be defined quantitatively as spatial autocorrelation, the bidimensional extension to autocorrelation, which itself is a special case of correlation in which the two vectors under analysis u and v are such that u i = v i+1 for i = 1, …, | u|. This additional dimensionality and directionality leads to multiple alternative formulations of a quantitative metric: Geary's C [50] and Moran's I [51] are widely used in geostatistics, while Reimers and Weinstein [10] and Wilson et al. [36] have proposed metrics specifically for microarrays. Though we have implemented all four of these metrics, results are only shown for Reimers-Weinstein as all results were found to be comparable (data not shown). Figure 3 assesses the impact of correcting each of the 66 arrays in our study with the four methods described in the Methods section. CPP and LPE leave all arrays largely unchanged, with the exception of GSM38903 (the selected array from GSE2189, with large blob in Figure 2(a)); in all cases, Upton-Lloyd results in the lowest spatial autocorrelation (near zero), while pyn results in the second lowest.

Figure 3.

Quantitative effects of correction on spatial autocorrelation. Reimers-Weinstein spatial autocorrelation metric computed in data corrected by various methods (y-axis) and in original data (x-axis) for each array in each dataset, with the “selected” array from each dataset being emphasized by a solid bullet. The Reimers-Weinstein metric is a Pearson correlation coefficient computed between each intensity and the average intensity among its four neighbours on the array [10]. An unchanged metric lies on the dotted unit line, while a value below (above) this line indicates a decrease (increase) in spatial autocorrelation. Upton-Lloyd consistently results in the greatest decrease, followed by pyn, while CPP and LPE have no effect in all but one case.

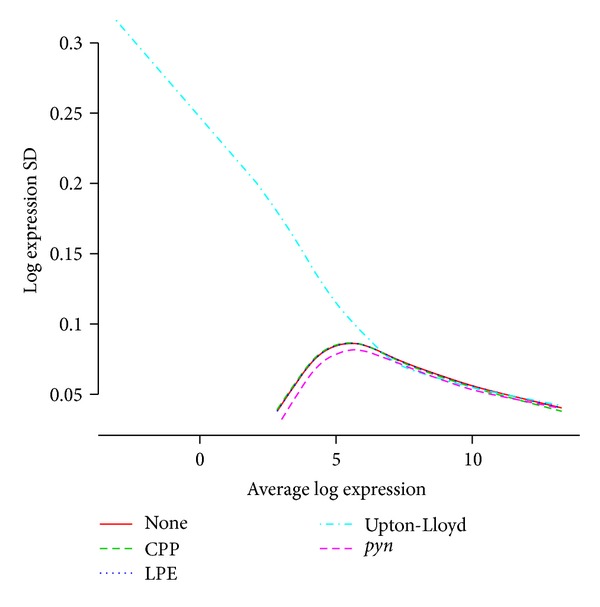

As effective correction of array-specific spatial biases should result in greater reproducibility, we evaluated the impact of each spatial correction method on variance across replicate arrays. Figure 4 shows the standard deviation across replicate arrays of gene expression values as obtained by RMA after pretreatment by each of the methods, as a function of mean log expression value. At low expression levels, pyn performs best, Upton-Lloyd critically inflates standard deviation, and CPP and LPE appear to have no effect whatsoever; at higher expression levels, all methods appear to have virtually no effect.

Figure 4.

Effect of correction on reproducibility across replicate arrays. Standard deviation of log expression index of probe sets across replicate arrays as a function of mean log expression, as computed in data obtained with RMA (all default parameters) after pretreatment with each of the spatial correction methods (or none). pyn performs best, resulting in an increase in reproducibility notably for low-expression genes, while Upton-Lloyd deteriorates data for low-expression genes, and CPP and LPE have virtually no effect.

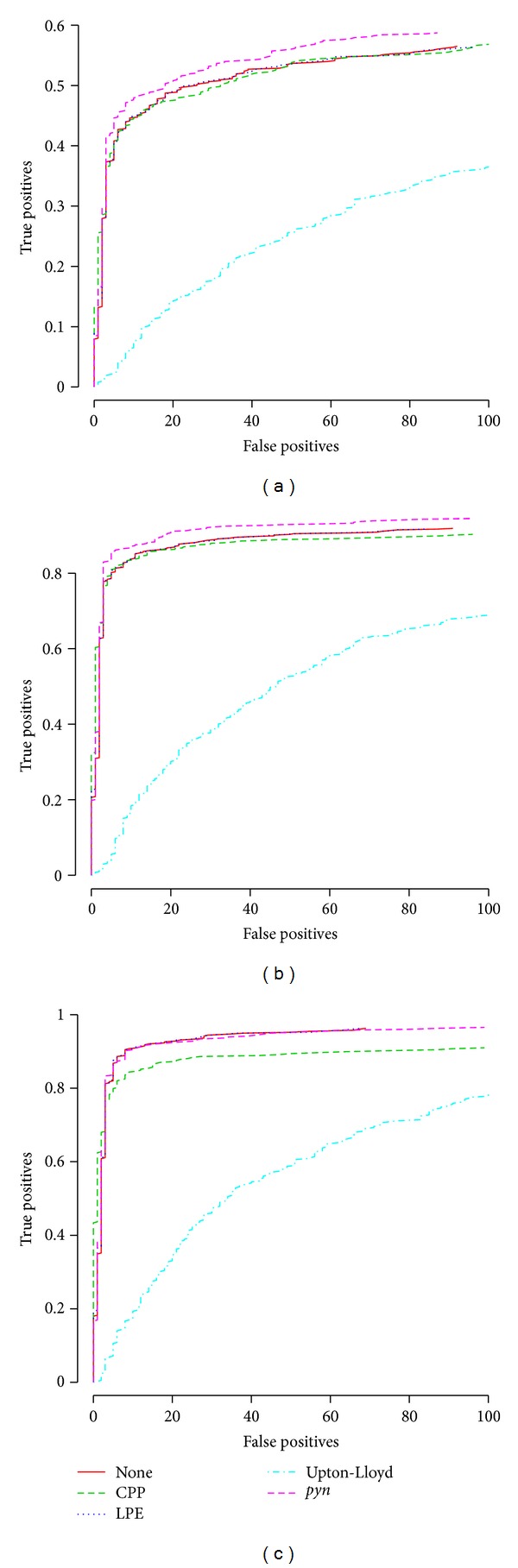

In order to assess the impact of the spatial correction methods on more tangible, biological results, we used the affycomp package [43] to assess differentially expressed gene (DEG) detection power in a dataset in which DEGs are “known.” The tool separates genes spiked in at low (Figure 5(a)), medium (Figure 5(b)), and high concentrations (Figure 5(c)); in each plot, the x-axis conveys 1 − specificity, while the y-axis conveys sensitivity (see [6, 43] for details). pyn significantly improves low- and medium-concentration DEG detection power, while Upton-Lloyd clearly deteriorates it, and CPP and LPE appear to have virtually no effect; at high concentrations, no method can improve DEG detection power, though Upton-Lloyd and CPP deteriorate it significantly.

Figure 5.

Effect of correction on DEG detection power. Receiver operating characteristic (ROC) curves generated by the R/bioconductor package affycomp, plotting sensitivity as a function of (1 – specificity), as computed in data obtained with RMA (all default parameters) after pretreatment with each of the spatial correction methods (or none) for (a) low-, (b) medium-, and (c) high-concentration spike-in genes. See [6, 43] for details. At low and medium concentrations, pyn performs the best; at all concentrations, Upton-Lloyd performs the worst by a large margin; CPP and LPE have virtually no effect, with the exception of CPP degrading results at high concentration.

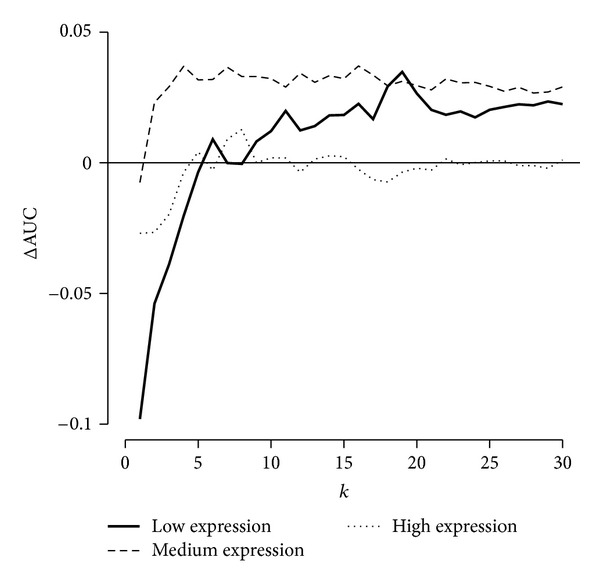

Finally, we assessed the effect of parameter k, the number of neighbouring residuals pooled in (3). ROC curves are generated before correction and after correction with varying values of k, and the area under the curve (AUC) is computed for each ROC curve. Figure 6 shows the difference between the AUC in corrected data and the AUC in original data (ΔAUC), as a function of k, separately for low-, medium-, and high-concentration spike-in genes. Small values of k produce less robust (noisy) estimates, as they are more susceptible to contain contributions from other sources of probe-specific, but not location-dependent, variations; thus, as expected, small values of k(<6) perform badly. Conversely, as k increases, estimates of local bias, that is, the 's, become increasingly robust; thus, low- and medium-concentration DEG detection power is increased significantly above this threshold, peaking around k = 20 then levelling off (not shown, convergence to ΔAUC = 0). Above the k = 6 threshold, high-concentration DEG detection power is unaffected. As explained in the Algorithm subsection, as k continues to increase, the correction gradually becomes a simple scaling of all intensities by a constant; thus, the curves in Figure 6 would approach zero as k continues to increase (data not shown).

Figure 6.

Effect of k on DEG detection power. Difference between area under the curve (AUC) of receiver operating characteristic (ROC) curves computed in corrected data and AUC computed in original data, as a function of the value used for k, separately for low-, medium, and high-concentration spike-in genes. pyn improves low- and medium-concentration DEG detection power with k > 5, with optimal performance at k = 19, and has virtually no effect on high-concentration DEG detection power above k = 5.

4. Discussion

Systematic, nonbiological variations have been long known to obscure microarray data. In the case of spotted arrays, array- and print-tip-dependent biases were the first to be considered. Array bias could trivially be visualized by inspecting box plots of a batch of arrays or using more sophisticated approaches such as RLE and NUSE plots [33]; correction of such biases usually consisted of subtracting each array's mean or median intensity to impose a common average on each array's HSI distribution [33]. Print-tip bias could similarly be visualized by inspecting intensity distributions for each print tip, plotted side by side; a proposed correction consisted of subtracting a loess fit of this plot for each array [25, 26]. Intensity-dependent bias can be visualized and corrected for in the same manner, in which case the plots are known as MA plots and the correction is that first proposed in [25].

This strategy of addressing known sources of bias individually has been somewhat abandoned in the case of in situ oligonucleotide arrays, perhaps based on the assumption that streamlined commercial manufacturing of such high-density arrays is much less prone to significant systematic errors. For example, background correction and normalization steps in two of the most popular analysis packages, RMA [22] and GCRMA [52], do not take into account known sources of bias, but rather make global, sweeping transformations of the data, via a convolutional model [53] and quantile normalization [27], respectively.

We posit, as initially asserted by Dudoit et al. in 2002, that correcting for known biases using a priori information is preferable to global, blind, generic normalization, and/or reliance on unverified modelling assumptions [26]. We have thus proposed such a scheme for correcting spatial bias in Affymetrix GeneChip data, which can readily be applied on other platforms using multiple probes per gene.

We have shown that this method reduces spatial autocorrelation in HSIs, reduces variance in gene expression measures across replicate arrays, improves DEG detection power, and performs better than previously published methods in terms of replicate variance reduction and DEG detection power increase. As for spatial autocorrelation reduction, we conclude that Upton-Lloyd removes “too much” due to its working directly on HSIs as opposed to pyn's working with residuals, which may avoid subtracting the “baseline” spatial autocorrelation inherent to each microarray platform.

Our analysis of parameter k, the number of neighbouring residuals pooled in (3), identified k = 6 as a minimum in order for correction to improve DEG detection power and k = 19 as the optimal value. As computational time is linearly proportional to k and values in the 12 ≤ k ≤ 30 range all result in comparable performance, we propose to compromise with k = 20, and this is the default in the provided implementation.

An assessment based on a spike-in benchmark dataset indicated that DEG detection power is increased for low- and medium-concentration genes and is insignificantly affected for high-concentration genes. However, it should be noted that, by its very design, this central affycomp assessment does not take into account methods' ability to increase detection power across replicate arrays, that is, to take array-specific effects and so-called “batch effects” into consideration: a ROC curve is computed for each possible pair of arrays within each of the three 14-array batches, and the 3 × C(14,2) = 3 × 91 = 273 curves are averaged to generate plots such as Figure 5 [6, 43]. As the great majority of microarray experiments feature more than one replicate array per sample, this setup is highly unrepresentative of real conditions. Additionally, it should be noted that the spike-in dataset is at the high end of the quality control spectrum; thus, if our correction method is able to improve biological results resulting from this data, it is to be expected that improvement will be even more significant for everyday datasets, such as GSE1400 and GSE2189.

Although the “random” spatial distribution of probes on the array surface was presented in this paper as a necessary assumption, this is an oversimplification and, thus, not strictly correct. In reality, the underlying assumption is that probe locations are independent of their targets, or—in more practical terms—of the locations of probes in the same probe set, such that any correlation between locations and residuals is always considered undesirable noise or bias; this can also be expressed as the assumption that probes are randomly spatially distributed on the array surface within each probe set. Thus, although recent studies have uncovered a significant dependence between probe locations and their sequences by observing spatial patterns when spatially mapping probe sequence GC content on the array [10, 14], our algorithm's assumptions are unaffected. This may also explain why, although Upton-Lloyd appears to reduce spatial autocorrelation further than our method, it performs much worse in replicate array variance and DEG detection power. In addition, these results imply that spatial bias correction procedures may unintentionally (likely only partially) correct sequence biases as well; conversely, it implies that sequence bias correction may somewhat correct spatial biases in the process as well. This does not appear to be problematic per se, but is likely worthy of further investigation in order to fully understand its implications.

Finally, the framework established herein provides opportunity for implementing further types of microarray data pretreatments: correction of a specific source of bias which can be expressed as the presence of undesirable mutual information shared by “neighbouring” probes in some given coordinate space (e.g., physical location on the array) or based on some given distance metric, as opposed to expected, desirable mutual information shared by some other sets of probes (e.g., Affymetrix “probe sets”). This approach (which we dub pyn: probe your neighbours) should be applicable to other biases such as probe composition as well as to other microarray platforms such as tiling arrays, and preliminary results indicate that this is indeed the case.

5. Conclusions

Oligonucleotide array data is invariably biased by a number of confounding factors, some of which can be effectively quantified and eliminated. We have proposed a method for correcting bias arising from a known source and show the efficacy of one case, namely, spatial bias in Affymetrix GeneChip data. An implementation is provided as a convenient R package, released under the BSD license, available at http://lemieux.iric.ca/pyn/.

Acknowledgments

The authors thank Dr. Jose Manuel Arteaga-Salas for providing implementations of CPP and LPE. This work was supported by the Canadian Institutes of Health Research (CTP-79843). IRIC is supported in part by the Canadian Centre of Excellence in Commercialization and Research; the Canada Foundation for Innovation; and the Fonds de Recherche en Santé du Québec.

References

- 1.Schena M, Shalon D, Davis RW, Brown PO. Quantitative monitoring of gene expression patterns with a complementary DNA microarray. Science. 1995;270(5235):467–470. doi: 10.1126/science.270.5235.467. [DOI] [PubMed] [Google Scholar]

- 2.Edgar R, Domrachev M, Lash AE. Gene Expression Omnibus: NCBI gene expression and hybridization array data repository. Nucleic Acids Research. 2002;30(1):207–210. doi: 10.1093/nar/30.1.207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Lockhart DJ, Dong H, Byrne MC, et al. Expression monitoring by hybridization to high-density oligonucleotide arrays. Nature Biotechnology. 1996;14(13):1675–1680. doi: 10.1038/nbt1296-1675. [DOI] [PubMed] [Google Scholar]

- 4.Selinger DW, Cheung KJ, Mei R, et al. RNA expression analysis using a 30 base pair resolution Escherichia coli genome array. Nature Biotechnology. 2000;18(12):1262–1268. doi: 10.1038/82367. [DOI] [PubMed] [Google Scholar]

- 5.Hartemink AJ, Gifford DK, Jaakkola TS, Young RA. Maximum likelihood estimation of optimal scaling factors for expression array normalization. Microarrays: Optical Technologies and Informatics. 2001;2(23):132–140. [Google Scholar]

- 6.Cope LM, Irizarry RA, Jaffee HA, Wu Z, Speed TP. A benchmark for Affymetrix GeneChip expression measures. Bioinformatics. 2004;20(3):323–331. doi: 10.1093/bioinformatics/btg410. [DOI] [PubMed] [Google Scholar]

- 7.Gentleman R. Bioinformatics and Computational Biology Solutions Using R and Bioconductor. New York, NY, USA: Springer Science and Business Media; 2005. [Google Scholar]

- 8.Naef F, Magnasco MO. Solving the riddle of the bright mismatches: labeling and effective binding in oligonucleotide arrays. Physical Review E. 2003;68(1, part 1) doi: 10.1103/PhysRevE.68.011906.011906 [DOI] [PubMed] [Google Scholar]

- 9.Wu Z, Irizarry RA. Stochastic models inspired by hybridization theory for short oligonucleotide arrays. Journal of Computational Biology. 2005;12(6):882–893. doi: 10.1089/cmb.2005.12.882. [DOI] [PubMed] [Google Scholar]

- 10.Reimers M, Weinstein JN. Quality assessment of microarrays: visualization of spatial artifacts and quantitation of regional biases. BMC Bioinformatics. 2005;6(article 166) doi: 10.1186/1471-2105-6-166. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Suárez-Fariñas M, Haider A, Wittkowski KM. "Harshlighting" small blemishes on microarrays. BMC Bioinformatics. 2005;6(article 65) doi: 10.1186/1471-2105-6-65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Upton GJG, Lloyd JC. Oligonucleotide arrays: information from replication and spatial structure. Bioinformatics. 2005;21(22):4162–4168. doi: 10.1093/bioinformatics/bti668. [DOI] [PubMed] [Google Scholar]

- 13.Arteaga-Salas JM, Harrison AP, Upton GJG. Reducing spatial flaws in oligonucleotide arrays by using neighborhood information. Statistical Applications in Genetics and Molecular Biology. 2008;7(1, article 29) doi: 10.2202/1544-6115.1383. [DOI] [PubMed] [Google Scholar]

- 14.Langdon WB, Upton GJ, da Silva Camargo R, Harrison AP. A survey of spatial defects in Homo Sapiens Affymetrix GeneChips. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2010;7(4):647–653. doi: 10.1109/TCBB.2008.108. [DOI] [PubMed] [Google Scholar]

- 15.Gharaibeh RZ, Fodor AA, Gibas CJ. Software note: using probe secondary structure information to enhance Affymetrix GeneChip background estimates. Computational Biology and Chemistry. 2007;31(2):92–98. doi: 10.1016/j.compbiolchem.2007.02.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Ratushna VG, Weller JW, Gibas CJ. Secondary structure in the target as a confounding factor in synthetic oligomer microarray design. BMC Genomics. 2005;6(article 31) doi: 10.1186/1471-2164-6-31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Wei H, Kuan PF, Tian S, et al. A study of the relationships between oligonucleotide properties and hybridization signal intensities from NimbleGen microarray datasets. Nucleic Acids Research. 2008;36(9):2926–2938. doi: 10.1093/nar/gkn133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Samanta MP, Tongprasit W, Sethi H, Chin C, Stolc V. Global identification of noncoding RNAs in Saccharomyces cerevisiae by modulating an essential RNA processing pathway. Proceedings of the National Academy of Sciences of the United States of America. 2006;103(11):4192–4197. doi: 10.1073/pnas.0507669103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Upton GJG, Sanchez-Graillet O, Rowsell J, et al. On the causes of outliers in Affymetrix GeneChip data. Briefings in Functional Genomics and Proteomics. 2009;8(3):199–212. doi: 10.1093/bfgp/elp027. [DOI] [PubMed] [Google Scholar]

- 20.Ahmed AA, Vias M, Iyer NG, Caldas C, Brenton JD. Microarray segmentation methods significantly influence data precision. Nucleic Acids Research. 2004;32(5, article e50) doi: 10.1093/nar/gnh047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Leek JT, Storey JD. Capturing heterogeneity in gene expression studies by surrogate variable analysis. PLoS Genetics. 2007;3(9):1724–1735. doi: 10.1371/journal.pgen.0030161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Irizarry RA, Hobbs B, Collin F, et al. Exploration, normalization, and summaries of high density oligonucleotide array probe level data. Biostatistics. 2003;4(2):249–264. doi: 10.1093/biostatistics/4.2.249. [DOI] [PubMed] [Google Scholar]

- 23.Naef F, Lim DA, Patil N, Magnasco M. DNA hybridization to mismatched templates: a chip study. Physical Review E. 2002;65(4, part 1) doi: 10.1103/PhysRevE.65.040902.040902 [DOI] [PubMed] [Google Scholar]

- 24.Affymetrix. GeneChip Gene 1.0 ST Array System. Santa Clara, Calif, USA, 2007.

- 25.Yang YH, Dudoit S, Luu P, Speed TP. Normalization for cDNA microarray data. Microarrays: Optical Technologies and Informatics. 2001;2(23):141–152. [Google Scholar]

- 26.Dudoit S, Yang YH, Callow MJ, Speed TP. Statistical methods for identifying differentially expressed genes in replicated cDNA microarray experiments. Statistica Sinica. 2002;12(1):111–139. [Google Scholar]

- 27.Bolstad BM, Irizarry RA, Astrand M, Speed TP. A comparison of normalization methods for high density oligonucleotide array data based on variance and bias. Bioinformatics. 2003;19(2):185–193. doi: 10.1093/bioinformatics/19.2.185. [DOI] [PubMed] [Google Scholar]

- 28.Berger JA, Hautaniemi S, Järvinen A, Edgren H, Mitra SK, Astola J. Optimized LOWESS normalization parameter selection for DNA microarray data. BMC Bioinformatics. 2004;5(article 194) doi: 10.1186/1471-2105-5-194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Ritchie ME, Silver J, Oshlack A, et al. A comparison of background correction methods for two-colour microarrays. Bioinformatics. 2007;23(20):2700–2707. doi: 10.1093/bioinformatics/btm412. [DOI] [PubMed] [Google Scholar]

- 30.Carter SL, Eklund AC, Mecham BH, Kohane IS, Szallasi Z. Redefinition of Affymetrix probe sets by sequence overlap with cDNA microarray probes reduces cross-platform inconsistencies in cancer-associated gene expression measurements. BMC Bioinformatics. 2005;6(article 107) doi: 10.1186/1471-2105-6-107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Wu C, Carta R, Zhang Sequence dependence of cross-hybridization on short oligo microarrays. Nucleic Acids Research. 2005;33(9):p. e84. doi: 10.1093/nar/gni082. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Binder H, Brücker J, Burden CJ. Nonspecific hybridization scaling of microarray expression estimates: a physicochemical approach for chip-to-chip normalization. Journal of Physical Chemistry B. 2009;113(9):2874–2895. doi: 10.1021/jp808118m. [DOI] [PubMed] [Google Scholar]

- 33.Yang YH, Dudoit S, Luu P, et al. Normalization for cDNA microarray data: a robust composite method addressing single and multiple slide systematic variation. Nucleic Acids Research. 2002;30(4):p. e15. doi: 10.1093/nar/30.4.e15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Workman C, Jensen LJ, Jarmer H, et al. A new non-linear normalization method for reducing variability in DNA microarray experiments. Genome Biology. 2002;3(9, research0048) doi: 10.1186/gb-2002-3-9-research0048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Colantuoni C, Henry G, Zeger S, Pevsner J. Local mean normalization of microarray element signal intensities across an array surface: quality control and correction of spatially systematic artifacts. BioTechniques. 2002;32(6):1316–1320. doi: 10.2144/02326mt02. [DOI] [PubMed] [Google Scholar]

- 36.Wilson DL, Buckley MJ, Helliwell CA, Wilson IW. New normalization methods for cDNA microarray data. Bioinformatics. 2003;19(11):1325–1332. doi: 10.1093/bioinformatics/btg146. [DOI] [PubMed] [Google Scholar]

- 37.Baird D, Johnstone P, Wilson T. Normalization of microarray data using a spatial mixed model analysis which includes splines. Bioinformatics. 2004;20(17):3196–3205. doi: 10.1093/bioinformatics/bth384. [DOI] [PubMed] [Google Scholar]

- 38.Tarca AL, Cooke JE, Mackay J. A robust neural networks approach for spatial and intensity-dependent normalization of cDNA microarray data. Bioinformatics. 2005;21(11):2674–2683. doi: 10.1093/bioinformatics/bti397. [DOI] [PubMed] [Google Scholar]

- 39.Neuvial P, Hupé P, Brito I, et al. Spatial normalization of array-CGH data. BMC Bioinformatics. 2006;7(article 264) doi: 10.1186/1471-2105-7-264. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Chai HS, Therneau TM, Bailey KR, Kocher JA. Spatial normalization improves the quality of genotype calling for Affymetrix SNP 6.0 arrays. BMC Bioinformatics. 2010;11(article 356) doi: 10.1186/1471-2105-11-356. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Arteaga-Salas JM, Zuzan H, Langdon WB, Upton GJG, Harrison AP. An overview of image-processing methods for affymetrix genechips. Briefings in Bioinformatics. 2008;9(1):25–33. doi: 10.1093/bib/bbm055. [DOI] [PubMed] [Google Scholar]

- 42.Stokes TH, Moffitt RA, Phan JH, Wang MD. Chip artifact CORRECTion (caCORRECT): a bioinformatics system for quality assurance of genomics and proteomics array data. Annals of Biomedical Engineering. 2007;35(6):1068–1080. doi: 10.1007/s10439-007-9313-y. [DOI] [PubMed] [Google Scholar]

- 43.Irizarry RA, Wu Z, Jaffee HA. Comparison of Affymetrix GeneChip expression measures. Bioinformatics. 2006;22(7):789–794. doi: 10.1093/bioinformatics/btk046. [DOI] [PubMed] [Google Scholar]

- 44.Stitziel NO, Mar BG, Liang J, Westbrook CA. Membrane-associated and secreted genes in breast cancer. Cancer Research. 2004;64(23):8682–8687. doi: 10.1158/0008-5472.CAN-04-1729. [DOI] [PubMed] [Google Scholar]

- 45.Magda D, Lecane P, Miller RA, et al. Motexafin gadolinium disrupts zinc metabolism in human cancer cell lines. Cancer Research. 2005;65(9):3837–3845. doi: 10.1158/0008-5472.CAN-04-4099. [DOI] [PubMed] [Google Scholar]

- 46.Latin Square Data for Expression Algorithm Assessment. http://www.affymetrix.com/support/technical/sample_data/datasets.affx.

- 47.Cheng C, Li LM. Sub-array normalization subject to differentiation. Nucleic Acids Research. 2005;33(17):5565–5573. doi: 10.1093/nar/gki844. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Li C, Wong WH. Model-based analysis of oligonucleotide arrays: expression index computation and outlier detection. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(1):31–36. doi: 10.1073/pnas.011404098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Bolstad B, Brettschneider J, Simpson K, Cope L, Irizarry R, Speed TP. Quality assessment of affymetrix GeneChip data. In: Gentleman R, Carey V, Huber W, Irizarry R, Dudoit S, editors. Bioinformatics and Computational Biology Using R and Bioconductor. Springer; 2005. [Google Scholar]

- 50.Geary RC. The contiguity ratio and statistical mapping. The Incorporated Statistician. 1954;5(3):115–146. [Google Scholar]

- 51.Moran PA. Notes on continuous stochastic phenomena. Biometrika. 1950;37(1-2):17–23. [PubMed] [Google Scholar]

- 52.Wu Z, Irizarry RA, Gentleman R, Martinez-Murillo F, Spencer F. A model-based background adjustment for oligonucleotide expression arrays. Journal of the American Statistical Association. 2004;99(468):909–917. [Google Scholar]

- 53.Bolstad BM. Low-level analysis of high-density oligonucleotide array data: background, normalization and summarization [Ph.D. thesis in biostatistics] University of California, Berkeley; 2004. [Google Scholar]