Abstract

Signed languages exploit iconicity (the transparent relationship between meaning and form) to a greater extent than spoken languages, where it is largely limited to onomatopoeia. In a picture–sign matching experiment measuring reaction times, the authors examined the potential advantage of iconicity both for 1st- and 2nd-language learners of American Sign Language (ASL). The results show that native ASL signers are faster to respond when a specific property iconically represented in a sign is made salient in the corresponding picture, thus providing evidence that a closer mapping between meaning and form can aid in lexical retrieval. While late 2nd-language learners appear to use iconicity as an aid to learning sign, they did not show the same facilitation effect as native ASL signers, suggesting that the task tapped into more automatic language processes. Overall, the findings suggest that completely arbitrary mappings between meaning and form may not be more advantageous in language and that, rather, arbitrariness may simply be an accident of modality.

Keywords: semantics, iconicity, sign language, word recognition, psycholinguistics

Across spoken languages, word forms do not typically bear any resemblance to their referents. For example, man's best friend can variously be called dog, Hund, or kutya in English, German, or Hungarian, respectively, with no relationship tying the sounds in the words to properties of an actual dog. Recently, the existence of arbitrariness in language has been argued to aid in language learning and to provide processing benefits. Specifically, it is claimed to be an important design feature because it allows for maximum discrimination between entries in a lexicon (Monaghan & Christiansen, 2006) and thus allows for larger lexica to develop (Gasser, 2004).

Although sign languages conform to the same grammatical constraints and linguistic principles found in spoken languages and are acquired along the same timeline (for reviews, see Emmorey, 2002; Sandler & Lillo-Martin, 2006), they make use of iconicity (the transparent relationship between meaning and form) to a much greater extent than spoken languages (Taub, 2001). In other words, signed languages do not appear to conform to the same principle of arbitrariness that spoken languages do, as iconicity is the opposite of arbitrariness (Meier, 2002; Sutton-Spence & Woll, 1999). A question central to our understanding of language, then, is why the relationship between meaning and spoken language phonology is arbitrary while the relationship between meaning and sign language phonology is often iconic.

Across all signed languages, the large proportion of signs that make use of iconicity suggests that the high degree of arbitrariness found in spoken languages may be an accident of modality (signed vs. spoken) rather than a basic principle of language. Under this view, the hands (signing) are better than the voice (speaking) at mapping meaning onto form, with arbitrariness prevailing in spoken languages simply because the real world does not map well onto speech. Additionally, signed languages appear better able than spoken languages to incorporate iconicity while still maintaining arbitrariness. Specifically, four phonological parameters contribute features to make up any one sign (hand shape, location, movement, and orientation), and while many signs are iconic, iconicity need not be encoded across all parameters. Frequently, therefore, some features of a sign are iconic, while others remain arbitrary. Thus, both arbitrariness and iconicity may be relevant aspects of language; however, arbitrariness may prevail in spoken languages simply because the real world does not map well onto speech and because arbitrariness and iconicity cannot coexist to the same extent in spoken languages.

Compatible with this view, iconicity is not exclusive to signed languages. Despite the dominance of arbitrary form–meaning relations, all spoken languages have a repertoire of words for which the relationship between form and meaning is not arbitrary. Consider the English onomatopoetic words moo, bow-wow, and ding-dong, in which the sound of the word iconically represents the meaning. While English has a fairly limited set of onomatopoetic words, some spoken languages such as Japanese and Korean have a much larger inventory (several thousand entries, including both common and very rare examples, are found in one Japanese dictionary of iconic expressions; Kakehi, Tamori, & Schourup, 1996). These words cover not only onomatopoeia but also sound–symbolism related to other sensory experiences, manner, and mental–emotional states.

Iconic mappings of various kinds have been shown to have an effect in such varied areas as reading tasks (words presented in a canonical spatial order are read more quickly than in a noncanonical order; Zwaan & Yaxley, 2003) and in syntactic constructions (the structure of language directly reflects some aspect of the structure of reality; see Haiman, 1980). Thus, all languages take advantage of iconicity and are not wholly arbitrary, but it is hard to imagine how spoken languages might incorporate iconicity to a greater degree, particularly at the lexical level. Signed languages, on the other hand, are produced in space and are perceived with the eyes, making them more adept at mapping properties of objects and events in the visual world onto phonological properties of the language.

If the extent of iconicity across languages is linked to language modality, the question then is whether or not iconicity plays a role in language processing. In principle, the presence of a more transparent link between meaning and form could be beneficial both in sign production and sign comprehension. For example, in sign production, a stronger link between semantic properties and iconic phonological properties could help signers avoid tip-of-the-tongue states (or tip of the fingers as it is called for signed languages; Thompson, Emmorey, & Gollan, 2005). In sign comprehension, iconic properties of the sign could more readily activate the corresponding conceptual properties of the referent, resulting in faster on-line processing.

Very little is known about the role of iconicity in adult language processing, although some recent research suggests its relevance. In one study using an off-line similarity judgment task, Vigliocco, Vinson, Woolfe, Dye, and Woll (2005) asked whether native British Sign Language (BSL) signers and English speakers differ in their judgments when grouping signs or words referring to tools, tool actions, and body actions according to meaning similarity. Importantly, in BSL, signs referring to tools (e.g., knife) and tool actions (e.g., to cut) share tool-use iconicity, making them more similar to each other than body actions (e.g., to hit). While English speakers in the study tended to group tool actions along with body actions (showing a preference for distinguishing actions from objects), BSL signers tended to group tools and tool actions together, as predicted on the basis of shared iconic properties of the signs. The results of this study suggest that BSL signers pay attention to iconicity as part of their language processing strategy. However, the off-line nature of the task does not allow for strong conclusions concerning the role of iconicity in automatic language processing, as participants could have explicitly based their judgments in part on iconicity, rather than on using iconicity in a more automatic way.

In another study examining on-line processing, Grote and Linz (2003) found that native signers of German Sign Language were faster at verifying object–property relationships for iconic properties of signs. For example, signers were presented with the German Sign Language sign EAGLE (iconic of an eagle's beak), immediately followed by either a picture of a beak (iconic condition), a wing (noniconic condition), or a necklace (an unrelated feature). German Sign Language signers were faster at determining that a feature was semantically related to a sign in the iconic condition (e.g., they showed faster reaction times [RTs] when presented with beak than wing). Importantly, no such difference was observed for German speakers in a version of the same task in which they were presented with the corresponding German translation. This study, however, can be criticized because the sign presented (e.g., EAGLE) was often the same as, or at least very similar to, the sign for the iconically matched picture (e.g., beak), whereas there was no such phonological relationship with the noniconic condition (e.g., EAGLE paired with a picture of a wing). Because of this, the faster RTs in the iconic condition could be the result of identity priming rather than a closer iconic mapping between object and feature.

Here, we look at whether native signers of American Sign Language (ASL) are faster at recognizing signs for objects when iconic properties are made salient in corresponding pictures. Additionally, given that there is evidence that late second-language (L2) learners make use of iconic properties of signs as a learning strategy, unlike early first-language (L1) learners (Campbell et al., 1992), we also tested late L2 learners in order to determine whether there is any difference in iconicity effects for L2 signers for whom iconic properties may have played a more important role in learning the language.

We addressed the following questions: Does a close form–meaning relationship as seen in iconic signs affect language processing? Is there any difference between L1 ASL signers and L2 signers? If iconicity is found in languages that allow for it, we reasoned it may be because it is useful in language processing and a desirable feature of language. In this case, the data should indicate better lexical access (as evidenced by greater accuracy and faster RTs in a sign comprehension task) when there is a tight link between an ASL sign and a real-world object, while we reasoned that no difference should be observed for English speakers doing the same tasks with English words. Such a difference might be magnified for L2 signers if greater awareness of iconicity during acquisition results in a greater role for iconicity in processing. Alternatively, if there is no effect of iconicity on language processing, the degree to which a sign and an object are linked should not matter.

Method

Participants

Fourteen Deaf1 ASL signers, 17 hearing proficient ASL signers, and 17 hearing nonsigners participated in the study. Five Deaf participants were exposed to ASL from birth, and 9 acquired ASL at an early age (M = 3.2; range = 18 months to 5 years old). Hearing signers began signing after age 16 (M = 20.6; range = 16–28) and had been signing for over 8 years (M = 17.3; range = 8–35). All were working as interpreters at the time of testing. Hearing nonsigners were monolingual English speakers with no previous knowledge of ASL other than the finger spelled alphabet.

Stimuli

Materials for the experiment were a set of ASL signs and English words referring to the same concrete objects. Signs (both experimental items and fillers) were normed for iconicity and familiarity using a 7-point scale in which 1 was completely arbitrary and 7 was completely iconic (13 Deaf, 14 hearing signers; norming participants did not take part in the experiment). Average ratings for Deaf and hearing signers were highly correlated (r = .837). Experimental signs (N = 50) were all rated highly iconic by both groups (3.7 or above, M = 5.1, SD = 1.82). Filler signs (N = 100) were a mix of iconic and arbitrary items (M = 2.72, SD = 1.75). Familiarity was matched across experimental items.

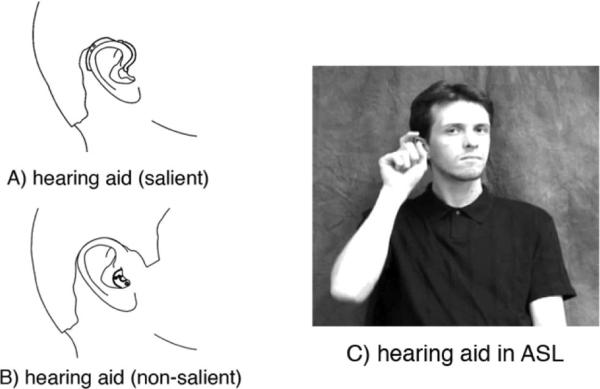

Each of the iconic experimental items (see Appendix A) was paired with two black and white line drawings, some taken from existing sources (Snodgrass & Vanderwart, 1980; Szekely et al., 2004) and others specifically prepared for the purpose (see Appendix B). In one picture, the iconic property of the sign was made salient (e.g., a picture of a hearing aid held behind the ear to match the ASL sign; see Figure 1); in the second, the iconic property was not made salient (e.g., a smaller hearing aid worn solely inside of the ear).

Figure 1.

An example of materials for the picture-matching task. Participants first saw either a picture in which the iconic property of the sign was made salient (as in A: a picture of a wrap-around hearing aid) or they saw a picture in which the iconic property was not made salient (as in B: a picture of a smaller hearing aid that sits in the ear alone). The picture (A or B) was followed by a sign video (as in C: the American Sign Language [ASL] sign for HEARING AID produced with the curved index finger and thumb tapping just behind the ear to represent a hearing aid) or by a video clip of an English speaker saying “hearing aid” (not pictured).

Procedure

Participants’ task was to decide whether a picture and an ASL sign refer to the same object. Participants first saw the picture followed by a sign video, and participants were instructed to respond yes or no (by pressing a computer key) if the picture and the sign referred to the same thing. As a control, nonsigning hearing English speakers carried out a similar task in which the same pictures were followed by a video of someone saying the English words. If iconicity facilitates language processing, we reasoned that signers should be faster at responding yes when the iconic property is salient in the picture than when it is not, while no such difference should be observed for English speakers responding to words. For experimental items, the picture and sign or word always matched, while filler items included both matching and mismatching pairs (always using different pictures and signs or words from the experimental items).

The experiment included two blocks, each containing 50 experimental items and 90 filler items2 (70 mismatching and 20 matching). No pictures were paired with the same sign or word in the second block as in the first. For experimental items, half (randomly determined) were paired with a salient picture in the first block and a nonsalient picture in the second. This order was reversed for half of the participants and for block (first vs. second), and was taken into account in the analysis. Order of presentation of items was randomized within a block for each participant.
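The counterbalancing of picture type across blocks can be sketched in a few lines of Python. This is only an illustration of the design as described, not the script used in the study: the helper `assign_salience`, the version labels "A" and "B", the placeholder item names, and the seeded shuffle are all assumptions introduced for the example.

```python
import random

def assign_salience(items, version, seed=0):
    """Return {block: {item: 'salient' | 'nonsalient'}} for one list version.

    Hypothetical helper: a random half of the items gets the salient picture
    in Block 1 and the nonsalient picture in Block 2; version "B" reverses
    that assignment, as for the other half of the participants.
    """
    rng = random.Random(seed)      # same seed, so both versions share one split
    shuffled = list(items)
    rng.shuffle(shuffled)
    first_half = set(shuffled[: len(shuffled) // 2])

    plan = {1: {}, 2: {}}
    for item in items:
        salient_first = (item in first_half) == (version == "A")
        plan[1][item] = "salient" if salient_first else "nonsalient"
        plan[2][item] = "nonsalient" if salient_first else "salient"
    return plan

# Placeholder names standing in for the 50 experimental signs.
items = [f"item{i:02d}" for i in range(50)]
plan_a = assign_salience(items, "A")
plan_b = assign_salience(items, "B")
```

Under these assumptions, every item appears once with each picture type per participant, and each picture type is seen in Block 1 by half of the participants.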

The experiment started with 10 practice items, followed by the actual experiment. Each trial began with a fixation cross (400 ms), followed by the picture display (500 ms), a blank screen (300 ms), and then the sign or word video. RTs were measured from the start of the video, and participants could respond during the video or during the blank screen that followed it, up to a 3,000-ms timeout. A 300-ms blank screen preceded the fixation cross for the next trial. We presented stimuli and collected data using E-Prime Version 1.1 (Schneider, Eschman, & Zuccolotto, 2002).

Results

After excluding those trials in which participants made incorrect responses, we averaged correct RTs by participants and by items for each of the experimental conditions (see Table 1).

Table 1.

Average Correct Response Latencies (in Milliseconds) as a Function of Participant Group, Picture Salience, and Block

| Group | Salient iconic property, Block 1 | Salient iconic property, Block 2 | Nonsalient iconic property, Block 1 | Nonsalient iconic property, Block 2 |
|---|---|---|---|---|
| Native American Sign Language | 952 (42) | 878 (39) | 983 (44) | 912 (36) |
| L2 American Sign Language | 920 (37) | 798 (33) | 923 (41) | 825 (31) |
| English monolingual | 795 (38) | 745 (36) | 779 (40) | 733 (33) |

Note. Standard errors by participants are in parentheses. L2 = second language.

We conducted separate 3 × 2 × 2 analyses of variance, using participants (F1) and items (F2) as random factors, on correct response latencies.3 These analyses tested the factorial combination of group (native ASL, L2 ASL, English monolingual; between participants and within items), picture salience (whether the iconic feature was made salient in the picture or not), and block (whether the sign or word appeared for the first or second time). The main effect of group was significant, F1(2, 45) = 5.528, p = .007, and F2(2, 98) = 39.010, p < .001; English monolinguals responded faster (763 ms) than either ASL group (native: 931 ms; L2: 867 ms; the two ASL groups did not differ significantly from each other). This unexpected difference in overall RTs between English speakers and ASL signers (both L1 and L2; i.e., signers were significantly slower than English-speaking controls) is likely due to the fact that signs take longer to produce than spoken words. (The mean length of the ASL video clips was 1,809 ms, while the English video clips averaged 1,074 ms.)

The main effect of picture salience was significant by participants but not by items, F1(1, 45) = 5.018, p = .030, and F2(1, 49) = 1.614, p = .210; this reflected a tendency toward faster responses for salient pictures overall. There was also a significant main effect of block, F1(1, 45) = 59.779, p < .001, and F2(1, 49) = 123.761, p < .001, such that participants were faster to respond to the second presentation of a sign or word.

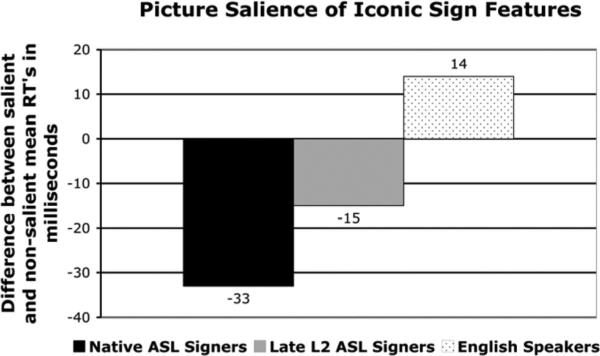

Crucially, these main effects were qualified by two significant interactions. First, group interacted with picture salience, F1(2, 45) = 6.954, p = .002, and F2(2, 98) = 3.642, p = .030. Analysis of simple interactions revealed a clear difference between native ASL signers and English monolinguals (Group × Picture Salience interaction comparing these two groups: F1[1, 29] = 15.301, p = .001, and F2[1, 49] = 6.021, p = .018). Native ASL signers were faster to respond to whether the sign matched the picture after seeing a salient picture than after a nonsalient picture, while English speakers had no response advantage for the same pictures (see Figure 2). Responses by L2 ASL signers patterned more like the L1 ASL signers (the Group × Picture Salience interaction between Deaf and L2 ASL signers was nonsignificant with both ps > .20). However, the same interaction between L2 ASL signers and monolingual English speakers missed significance by items, F1(1, 32) = 5.772, p = .022, and F2(1, 49) = 2.838, p = .098, making L2 ASL signers not significantly different from either native ASL signers or English-speaking nonsigners.

Figure 2.

Difference scores by participant group showing difference between average correct reaction times (RTs) for pictures highlighting salient features of a sign and pictures not highlighting salient features. ASL = American Sign Language; L2 = second language.
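As a rough check on the direction of these difference scores, they can be approximated from the cell means in Table 1. The sketch below is an assumption-laden illustration: it uses the rounded group means rather than participant-level data, so the values need not match Figure 2 exactly, and the names `TABLE_1` and `salience_effect` are introduced here for the example.

```python
# Cell means (ms) copied from Table 1: (salient, nonsalient) for each block.
TABLE_1 = {
    "Native ASL": {1: (952, 983), 2: (878, 912)},
    "L2 ASL": {1: (920, 923), 2: (798, 825)},
    "English monolingual": {1: (795, 779), 2: (745, 733)},
}

def salience_effect(cells):
    """Mean of (nonsalient - salient) across blocks; positive = salient faster."""
    diffs = [nonsalient - salient for salient, nonsalient in cells.values()]
    return sum(diffs) / len(diffs)

effects = {group: salience_effect(cells) for group, cells in TABLE_1.items()}
for group, effect in effects.items():
    print(f"{group}: {effect:+.1f} ms")
```

On these rounded means, the advantage for salient pictures is largest for native ASL signers, intermediate for L2 signers, and reversed in sign for English monolinguals, which is the ordering the interaction describes.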

There was also an interaction between group and block, F1(2, 45) = 3.568, p = .036, and F2(2, 98) = 10.760, p < .001. While all three groups responded more quickly to the second presentation of a sign or word, this tendency was more pronounced for L2 ASL participants than the other two groups; L2 ASL participants were, on average, 111 ms faster for the second block than for the first versus 72 ms for native ASL participants and 48 ms for English monolinguals (these latter two groups did not differ from each other). None of the remaining interactions were significant (all Fs < 1).
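The size of the repetition gain in each group can be approximated from Table 1 by averaging over picture salience within each block. This is a sketch under the assumption that the rounded cell means are a fair stand-in for the underlying data; values computed this way may differ by about a millisecond from those reported in the text. The names `TABLE_1` and `block_gain` are introduced for the example.

```python
# Cell means (ms) copied from Table 1: (salient, nonsalient) for each block.
TABLE_1 = {
    "Native ASL": {1: (952, 983), 2: (878, 912)},
    "L2 ASL": {1: (920, 923), 2: (798, 825)},
    "English monolingual": {1: (795, 779), 2: (745, 733)},
}

def block_gain(cells):
    """Block 1 mean minus Block 2 mean, averaging over picture salience."""
    block1 = sum(cells[1]) / len(cells[1])
    block2 = sum(cells[2]) / len(cells[2])
    return block1 - block2

gains = {group: block_gain(cells) for group, cells in TABLE_1.items()}
for group, gain in gains.items():
    print(f"{group}: {gain:.1f} ms faster in Block 2")
```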

It could be argued that the iconicity effect arises because of the relatively longer latencies for ASL participants compared with English-speaking participants, that is, because slower overall responding leaves signers more time in which iconicity can exert an influence. If so, then the magnitude of the iconicity effect should decrease when ASL participants are faster to respond. To test this hypothesis, we calculated the correlation between each participant's iconicity effect and their overall RT. Across all Deaf L1 participants, the correlation was not significant (r = .0524, p = .82), and when L1 and L2 ASL participants were analyzed together, the correlation remained nonsignificant (r = –.0577, p = .74). Therefore, the iconicity effect is not explained by the relatively greater time available to sign perceivers because of slower sign production times.
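The correlation check described above can be illustrated with a plain Pearson coefficient. The sketch below is not the authors' analysis: the per-participant values are invented purely to show the computation, and `pearson_r` is a hand-written helper rather than a call to any statistics package.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical per-participant values (ms); NOT data from the study.
overall_rt = [850, 900, 950, 1000, 1050]
iconicity_effect = [30, 40, 20, 45, 25]   # nonsalient RT minus salient RT

r = pearson_r(overall_rt, iconicity_effect)
print(f"r = {r:.3f}")
```

A coefficient near zero, as in this made-up example, is the pattern one would expect if slower participants do not show systematically larger iconicity effects.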

We also investigated whether the iconicity effect might have been influenced by prototypicality, either from prototypical features highlighted in the salient pictures or prototypical features expressed iconically in ASL signs. To assess the prototypicality of target sign properties, we turned to a database of semantic features (McRae, Cree, Seidenberg, & McNorgan, 2005). Highly prototypical properties were defined as those that are well represented in the semantic features (named by at least 40% of McRae et al.'s, 2005, English-speaking participants). For example, the iconic property of the sign ELEPHANT (its trunk) would be considered highly prototypical (77% of participants produced the feature trunk while listing important features of elephants), while the iconic property of the sign MOTORCYCLE (gripping–turning handlebar) would not be considered prototypical, as no features related to grasping or handlebars occur in the database. We classified all set items found in McRae et al.'s (2005) database (N = 30) into high or low prototypicality (high = 13, low = 17). An analysis of native ASL signers and English monolinguals, using prototypicality as a factor along with salience of picture and block, showed no main effect of prototypicality or significant interactions of prototypicality (the Group × Prototypicality and Picture Salience × Prototypicality interactions were nonsignificant with both ps > .9). Thus, Deaf ASL signers were not faster to respond to signs incorporating prototypical features, nor was either group significantly faster to respond to the salient picture when it highlighted more highly prototypical features.
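The classification rule for prototypicality can be stated compactly in code. This is a minimal sketch assuming each sign's iconic property has already been matched by hand to a feature in the norms; the dictionary layout and the function name `classify_prototypicality` are hypothetical, and only the two examples given in the text are included.

```python
THRESHOLD = 0.40  # proportion of norming participants, per the criterion in the text

def classify_prototypicality(feature_proportion):
    """Return 'high' if the iconic feature was listed by at least 40% of participants.

    `feature_proportion` is None when no matching feature exists in the norms.
    """
    if feature_proportion is not None and feature_proportion >= THRESHOLD:
        return "high"
    return "low"

# Values taken from the two examples in the text; the layout is hypothetical.
iconic_feature_norms = {
    "ELEPHANT": 0.77,     # trunk
    "MOTORCYCLE": None,   # no grasping or handlebar feature in the database
}
labels = {sign: classify_prototypicality(p) for sign, p in iconic_feature_norms.items()}
print(labels)
```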

Discussion

Overall, the data show a processing advantage for iconicity in native signers: Native signers were faster at the matching task when iconic properties of the sign were made salient in the picture, while this was not the case for nonsigning participants matching the same pictures to English words. This finding follows naturally if one assumes language is not random but rather an emergent property of natural influences both within and outside of the language itself. Specifically, the incorporation of iconicity appears to be a basic principle across signed languages, and the experimental results suggest that this principle may emerge because of the benefits derived. Moreover, the overall suggestion is that there is nothing inherently better in wholly arbitrary mappings between form and meaning (at least in terms of lexical access), and thus past assertions that the extent of arbitrariness in language likely depends on language modality are supported (Fischer, 1979; Meir, 2003).

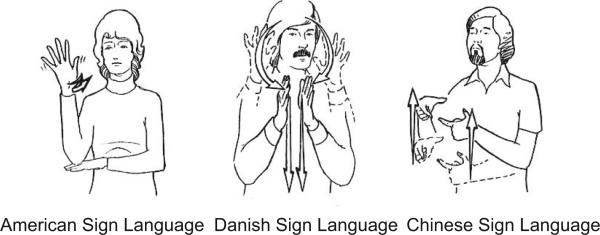

As described in the introduction, arbitrariness has been claimed to be necessary in order to allow for large lexica (maximizing the number of possible phonological contrasts allows for representation of far greater numbers of different words). However, this claim is based on a specific model in which there is an exact one-to-one mapping between meaning and form (Gasser, 2004): a situation very different from how iconicity is actually realized in sign forms. Specifically, iconicity in signed languages is created from the representation of only certain salient element(s) of real-world objects, actions, and so forth and there can be several choices of how to iconically represent any one concept. This is evidenced by the fact that the same concept is not always represented in the same way across sign languages (e.g., the ASL sign for LION iconically represents the mane, while in BSL LION is iconically represented with pouncing paws and claws). Further, while different signed languages often represent real-world objects, actions, and so forth by highlighting the same salient property, rarely is the exact phonological form of the sign the same. This was first discussed in Klima and Bellugi (1979), who provide the example of the sign TREE in ASL, Danish Sign Language, and Chinese Sign Language. All three of these sign languages represent the shape of a prototypical tree, but they are still all phonologically quite different from each other (see Figure 3). This example points out the many phonological choices available for the representation of any one iconic meaning.

Figure 3.

The sign for TREE in three different signed languages. From The Signs of Language (p. 21) by E. Klima & U. Bellugi, 1979, Cambridge, MA: Harvard University Press. Copyright 1979 by the President and Fellows of Harvard College. Reprinted with permission.

Further, as discussed above, iconicity may be represented in some aspects of the phonological representation of any one sign, while other features remain arbitrary. For example, the sign HEAR in ASL is produced by tapping the ear with an index finger. While the location of the ear is clearly iconic, the remaining features appear arbitrary (i.e., the pinky or index and middle fingers together could easily be used for the hand shape, and rubbing or flicking in place of the existing tapping movement). Because not all features of a sign need be iconic and because there are many ways to map salient features onto phonological features, as well as a choice of salient features that can be mapped, signed languages can incorporate a high degree of iconicity for individual form–meaning mappings while still maintaining arbitrariness both in some features of individual phonological forms, and at a more global level across signs that share meanings.

Perhaps the most surprising results were from proficient L2 signers. While all groups were faster in the second block than the first, L2 signers were much better at capitalizing on sign repetition, showing significantly more gain on RTs in Block 2 (110 ms) than the Deaf signers (72.5 ms) or the hearing nonsigners (48 ms). The L2 signers in this study all work as simultaneous interpreters, who are necessarily highly proficient in the L2 but who also appear to possess superior memory resources relative to other language users, resources likely needed specifically for the complex task of simultaneously interpreting between two languages (Christoffels, de Groot, & Kroll, 2006). The exact nature of these resources has been previously attributed to better working memory capacity (Christoffels et al., 2006; Padilla, Bajo, Cañas, & Padilla, 1995). However, Köpke and Nespoulous (2006) conducted a large-scale study using a battery of memory tasks and memory tasks coupled with articulatory suppression tasks (thought to tap into executive control) and found that while professional interpreters resisted articulatory suppression better than other groups, they did not perform differently from noninterpreters on working memory tasks. These results challenge the idea that working memory capacity increases in experts and suggest that perhaps experienced interpreters (the participants included in our study) develop skills that do not depend on working memory storage capacity.

In line with this finding, and directly relevant to our study, Bajo, Padilla, and Padilla (2000) found that simultaneous interpreters were faster to respond on atypical exemplars of categories in a semantic categorization task and were faster on nonwords in a lexical decision task than trainees learning to interpret, bilinguals without interpreting experience, and, importantly, monolinguals. Thus, our findings support a growing body of research in which simultaneous interpreters outperform other groups on certain tasks relevant to the skills they develop as interpreters. Crucially, in our study, the gain in RTs did not differ between the two conditions (salient and nonsalient pictures), and L2 signers were not significantly faster overall than Deaf signers. This effect therefore cannot be attributed to iconicity but might stem from L2 interpreters’ greater proficiency at accessing previously introduced information (such as already-seen signs).

Also surprising and relevant to our experimental questions, iconicity effects were no greater for L2 signers than for L1 signers. L2 signers did not show a significant difference from native Deaf signers; however, they also did not show a significant difference from the nonsigning group (thus straddling the fence between the two groups). Iconicity in L2 learning has been explained in terms of the conscious use of iconic cues as a memory aid, and we hypothesized that there might be an even greater effect of iconicity for late L2 learners of ASL, who appear to be more aware of iconic properties of a sign during the acquisition stage. The fact that this was not the case suggests that the experimental task was able to tap into more automatic on-line processes that are not affected by conscious learning strategies or awareness.

Developmental studies have suggested that children do not make use of iconic properties of signs during L1 acquisition (Bellugi & Klima, 1976; Emmorey, 2002; Taub, 2001) or during L1 acquisition of iconically motivated agreement (e.g., for signs such as GIVE that move from source to goal; Meier, 1982) and that most first signs are not iconic (Orlansky & Bonvillian, 1984).4 On the basis of this developmental work, one might predict that iconicity would not play a role in adult language processing. However, regardless of whether ASL signers make use of iconicity during L1 learning or not, they appear to have an awareness of iconicity, as evidenced by their ability to give iconicity ratings for signs in our study. Further, there was high agreement between native L1 signers and late L2 signers about which signs are iconic and which are not. Participants’ postexperiment iconicity ratings5 were very highly correlated across the groups: Pearson's r(172) = .842. This indicates that while L2 signers may have a greater awareness of iconic properties of signs during L2 acquisition, both groups end up similarly aware of iconic properties of signs.

Crucially, when considering the L1 acquisition literature, there is no reason to assume that the iconic relationship between form and meaning, which we showed to have processing benefits in adults, would necessarily aid in earlier acquisition of these forms. It is an open question whether or not similar effects of iconicity on processing might be found for children performing similar kinds of on-line tasks. Recently, iconicity effects were found for deaf children using Sign Language of the Netherlands (in a picture–sign matching task, responses were significantly faster for highly iconic signs than for less iconic signs; Ormel, 2008). Iconicity has further been claimed to play a role in other cognitive processes for children such as abstract categorization and the ability to recognize and describe the function of objects (Courtin, 1997; Markham & Justice, 2004). Thus, while iconicity may not play a role in language acquisition, children nonetheless appear able to take advantage of iconic aspects of signs for different conceptual tasks including lexical access, as we found with Deaf adults.

The finding that L2 interpreters do not show larger iconicity effects than L1 signers provides evidence that our task elicited automatic effects. But what automatic process is driving the iconicity effect? One view is that the iconicity effect arises from more direct mappings resulting from the nonarbitrary link between phonology and semantics. In lexical decision tasks, there is facilitation when participants are primed with like morphemes embedded in semantically unrelated words (see Drews, 1996, for review) or like phonoaesthemes (i.e., clusters of sounds representing specific meanings as in the gl- of glitter, glaze, and glare to encode the meaning of light; Bergen, 2004). Facilitation is greater than for phonologically or semantically related words alone, indicating a processing advantage for regularized, although arbitrary, form–meaning mappings. The iconicity effect may, in a similar fashion, be driven by regular form–meaning mappings. Under this analysis, our results would arise from general processing benefits of more regular mappings. One consideration then is whether or not there are neighborhoods of iconic features that tend to get mapped onto signs (e.g., round shapes as represented by a curved hand shape in the signs CUP, BINOCULARS, BRACELET, etc.) and whether the iconicity effect is driven by more dense–sparse neighborhoods of meaning. We leave these possibilities open to further research.

In conclusion, a facilitatory effect of sign iconicity suggests that iconic properties of a sign are more salient to signers than noniconic properties. One way to think about the overall results is in terms of current theories of embodied language in which word meanings are understood via mental simulations of past perception and action (Barsalou, 1999; Gallese & Lakoff, 2005). This more direct, less arbitrary connection between the real world and word meanings could be predicted to extend, where possible, into all areas of language (including form–meaning mappings). This would be particularly true for signed languages, which by their very nature are more directly anchored to the body. The once received but now changing view in language studies was that form and meaning are only arbitrarily linked and that imagistic information must be translated into abstract linguistic information when using language. Finding effects of iconicity in ASL supports a revision of this view under which arbitrariness may be only one of a number of competing principles that drive the shape of language. True arbitrariness, therefore, may be the result of language modality, rather than a stand-alone feature of language.

Acknowledgments

This work was supported by Economic and Social Research Council of Great Britain Grant RES-620-28-6001, the Deafness Cognition and Language Research Centre, and National Institutes of Health Grant R01 HD13249. This study would not have been possible without the generous support of Karen Emmorey and invaluable help from Clifton Langdon, Rachael Colvin, Franco Korpics, and Kyoshi Becker.

Appendix

Appendix A.

List of English Translations for Target Signs

| | | |
|---|---|---|
| Alligator | Devil | Pirate |
| Angel | Diaper | Penguin |
| Baby | Dresser | Perfume |
| Banana | Duck | Rabbit |
| Baseball | Elephant | Ring |
| Bicycle | Flag | Rooster |
| Bird | Gasoline | Snake |
| Boat | Hearing Aid | Spider |
| Brain | Hippo | Squirrel |
| Button | Ice Cream | Table |
| Camera | Key | Telephone |
| Candle | Letter | Turkey |
| Car | Lion | Watch |
| Cat | Mirror | Whale |
| City | Moose | Wolf |
| Clown | Motorcycle | |
| Cow | Owl | |

Appendix B.

Sample Picture Sets

| Sign | Salient picture | Nonsalient picture |
|---|---|---|
| BANANA (sign indicates peeling a banana) | | |
| BASKET (sign indicates round shape of basket) | | |
| CANDLE (sign indicates flame flickering on top of a long thin object) | | |
| HIPPO (sign indicates mouth and four long teeth of hippo) | | |

Footnotes

By convention, uppercase Deaf is used to indicate individuals who are deaf but who also use sign language and are members of the Deaf community, while lowercase deaf is used to represent audiological status.

The number of filler items per block does not match the number of filler signs described in the Stimuli section, because we presented some filler signs or words only in the second experimental block in order to prevent participants from being able to use decision strategies based on their responses in the first block.

We hypothesized that iconicity effects would be evident in both the accuracy rates and RTs of signers. However, participants across all groups were highly accurate on experimental items across all conditions (native ASL signers: 98.1%, L2 ASL signers: 96.9%, English monolinguals: 96.9%), and the same analyses conducted upon accuracy rates revealed no significant differences.

On the other hand, see the BSL norming study by Vinson, Cormier, Denmark, Schembri, and Vigliocco (2008), where deaf signers’ ratings of 300 lexical signs showed a significant relationship between rated iconicity and age of acquisition (r = –.463, p < .01): Earlier acquired signs in this sample did tend to be more iconic than later acquired signs.

Nine of the Deaf participants and 10 hearing L2 participants completed postexperiment norming for iconicity and familiarity using the same scales as the preexperiment norming participants.

References

- Bajo MT, Padilla F, Padilla P. Comprehension processes in simultaneous interpreting. In: Chesterman A, Gallardo San Salvador N, Gambier Y, editors. Translation in context. John Benjamins; Amsterdam: 2000. pp. 127–142.

- Barsalou LW. Perceptual symbol systems. Behavioral and Brain Sciences. 1999;22:577–609. doi: 10.1017/s0140525x99002149.

- Bellugi U, Klima E. Two faces of sign: Iconic and abstract. In: Harnad S, editor. The origins and evolution of language and speech. New York Academy of Sciences; New York: 1976. pp. 514–538.

- Bergen B. The psychological reality of phonaesthemes. Language. 2004;80:290–311.

- Campbell R, Martin P, White T. Forced choice recognition of sign in novice learners of British Sign Language. Applied Linguistics. 1992;13:185–201.

- Christoffels I, de Groot A, Kroll J. Memory and language skills in simultaneous interpreters: The role of expertise and language proficiency. Journal of Memory and Language. 2006;54:324–345.

- Courtin C. Does sign language provide deaf children with an abstraction advantage? Evidence from a categorization task. Journal of Deaf Studies and Deaf Education. 1997;2:161–170. doi: 10.1093/oxfordjournals.deafed.a014322.

- Drews E. Morphological priming. Language and Cognitive Processes. 1996;11:629–634.

- Emmorey K. Language, cognition, and the brain: Insights from sign language research. Erlbaum; Mahwah, NJ: 2002.

- Fischer S. Many a slip ‘twixt the hand and the lip: Applying linguistic theory to non-oral languages. In: Herbert R, editor. Metatheory: III. Application of linguistics in the human sciences. Michigan State University Press; East Lansing: 1979. pp. 45–75.

- Gallese V, Lakoff G. The brain's concepts: The role of the sensory-motor system in conceptual knowledge. Cognitive Neuropsychology. 2005;22:455–479. doi: 10.1080/02643290442000310.

- Gasser M. The origins of arbitrariness in language. In: Proceedings of the Cognitive Science Society Conference. Erlbaum; Hillsdale, NJ: 2004. pp. 434–439.

- Grote K, Linz E. The influence of sign language iconicity on semantic conceptualization. In: Müller WG, Fischer O, editors. From sign to signing. John Benjamins; Amsterdam: 2003. pp. 23–40.

- Haiman J. The iconicity of grammar: Isomorphism and motivation. Language. 1980;56:515–540.

- Kakehi H, Tamori I, Schourup L. Dictionary of iconic expressions in Japanese. Walter de Gruyter; Berlin: 1996.

- Klima E, Bellugi U. The signs of language. Harvard University Press; Cambridge, MA: 1979.

- Köpke B, Nespoulous J. Working memory performance in expert and novice interpreters. Interpreting. 2006;8:1–23.

- Markham P, Justice E. Sign language iconicity and its influence on the ability to describe the function of objects. Journal of Communication Disorders. 2004;37:535–546. doi: 10.1016/j.jcomdis.2004.03.008.

- McRae K, Cree GS, Seidenberg MS, McNorgan C. Semantic feature production norms for a large set of living and nonliving things. Behavioral Research Methods, Instruments, and Computers. 2005;37:547–559. doi: 10.3758/bf03192726.

- Meier RP. Icons, analogues, and morphemes: The acquisition of verb agreement in American Sign Language. Unpublished doctoral dissertation. University of California; San Diego: 1982.

- Meier RP. Why different, why the same? Explaining effects and non-effects of modality upon linguistic structure in sign and speech. In: Meier RP, Cormier K, Quinto-Pozos D, editors. Modality and structure in signed and spoken languages. Cambridge University Press; Cambridge, England: 2002. pp. 1–25.

- Meir I. Modality and grammaticalization: The emergence of a case marked pronoun in ISL. Journal of Linguistics. 2003;39(1):109–140.

- Monaghan P, Christiansen MH. Why form-meaning mappings are not entirely arbitrary in language. In: Proceedings of the 28th Annual Conference of the Cognitive Science Society. Erlbaum; Mahwah, NJ: 2006. pp. 1838–1843.

- Orlansky M, Bonvillian JD. The role of iconicity in early sign language acquisition. Journal of Speech and Hearing Disorders. 1984;49:287–292. doi: 10.1044/jshd.4903.287.

- Ormel E. Visual word recognition in bilingual deaf children. Unpublished doctoral dissertation. Radboud University; Nijmegen, the Netherlands: 2008.

- Padilla P, Bajo MT, Cañas JJ, Padilla F. Cognitive processes of memory in simultaneous interpretation. In: Tommola J, editor. Topics in interpreting research. University of Turku; Turku, Finland: 1995. pp. 61–72.

- Sandler W, Lillo-Martin D. Sign language and linguistic universals. Cambridge University Press; Cambridge, England: 2006.

- Schneider W, Eschman A, Zuccolotto A. E-Prime Reference Guide [Computer software and manual]. Psychology Software Tools, Inc.; Pittsburgh, PA: 2002.

- Snodgrass JG, Vanderwart M. A standardized set of 260 pictures: Norms for name agreement, image agreement, familiarity, and visual complexity. Journal of Experimental Psychology: Human Learning and Memory. 1980;6:174–215. doi: 10.1037//0278-7393.6.2.174.

- Sutton-Spence R, Woll B. The linguistics of British Sign Language: An introduction. Cambridge University Press; Cambridge, England: 1999.

- Szekely A, Jacobsen T, D'Amico S, Devescovi A, Andonova E, Herron D, et al. A new on-line resource for psycholinguistic studies. Journal of Memory and Language. 2004;51:247–250. doi: 10.1016/j.jml.2004.03.002.

- Taub SF. Language from the body: Iconicity and metaphor in American Sign Language. Cambridge University Press; Cambridge, England: 2001.

- Thompson RL, Emmorey K, Gollan TH. “Tip of the fingers” experiences by deaf signers: Insights into the organization of sign-based lexicon. Psychological Science. 2005;16:856–860. doi: 10.1111/j.1467-9280.2005.01626.x.

- Vigliocco G, Vinson DP, Woolfe T, Dye MW, Woll B. Words, signs and imagery: When the language makes the difference. Proceedings of the Royal Society of London, Series B: Biological Sciences. 2005;272:1859–1863. doi: 10.1098/rspb.2005.3169.

- Vinson DP, Cormier K, Denmark T, Schembri A, Vigliocco G. The British Sign Language (BSL) norms for age of acquisition, familiarity and iconicity. Behavior Research Methods. 2008;40:1079–1087. doi: 10.3758/BRM.40.4.1079.

- Zwaan RA, Yaxley RH. Spatial iconicity affects semantic relatedness judgments. Psychonomic Bulletin & Review. 2003;10:954–958. doi: 10.3758/bf03196557.