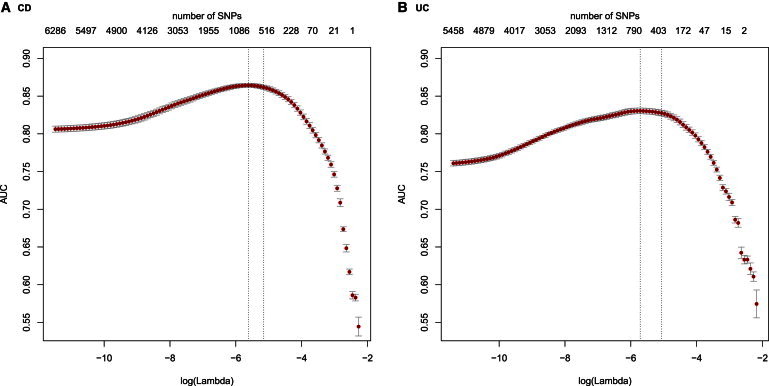

Figure 1.

Ten-Fold Cross-Validation for Model Selection and Training

SNPs that survived fold 1 preselection may still include noisy predictors. We used L1-penalized logistic regression to remove irrelevant SNPs while fitting a predictive model on fold 2 data. The larger the penalty parameter lambda, the more SNPs were removed. The numbers along the top of the plot give the number of SNPs that survived at each value of lambda shown along the x axis. We selected lambda by 10-fold cross-validation. Specifically, we calculated the average AUC for each value of lambda and took the largest lambda, which yields the most parsimonious model, such that the AUC is within 1 SE of the optimum (the two vertical dashed lines). The optimal 10-fold cross-validated AUCs on fold 2 data were 0.864 and 0.830 for (A) CD and (B) UC, respectively.
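The 1-SE selection rule described in the caption can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `select_lambda_one_se`, the input layout (one list of per-fold AUCs per candidate lambda), and the toy AUC values are all assumptions made for the example and are not taken from the study. The standard error is assumed to be the sample standard deviation of the per-fold AUCs divided by the square root of the number of folds.

```python
from math import sqrt
from statistics import mean, stdev


def select_lambda_one_se(lambdas, fold_aucs):
    """Return (lambda at the best mean AUC, largest lambda within 1 SE of it).

    lambdas   -- candidate penalty values
    fold_aucs -- fold_aucs[i] holds the per-fold AUCs obtained with lambdas[i]
    """
    # Mean cross-validated AUC and its standard error for each lambda.
    means = [mean(aucs) for aucs in fold_aucs]
    ses = [stdev(aucs) / sqrt(len(aucs)) for aucs in fold_aucs]

    # Index of the lambda with the highest mean AUC (the optimum).
    best = max(range(len(lambdas)), key=lambda i: means[i])

    # Any lambda whose mean AUC is within 1 SE of the optimum is acceptable.
    threshold = means[best] - ses[best]
    eligible = [lam for lam, m in zip(lambdas, means) if m >= threshold]

    # A larger L1 penalty keeps fewer predictors, so take the largest one.
    return lambdas[best], max(eligible)


# Toy values for illustration only (hypothetical, not study data).
lambdas = [0.001, 0.01, 0.1, 1.0]
fold_aucs = [
    [0.80, 0.84, 0.82, 0.86, 0.83],
    [0.85, 0.87, 0.84, 0.88, 0.86],
    [0.84, 0.86, 0.85, 0.87, 0.855],
    [0.70, 0.72, 0.71, 0.73, 0.69],
]
best_lam, chosen_lam = select_lambda_one_se(lambdas, fold_aucs)
```

With these toy inputs the mean AUC peaks at lambda = 0.01, but lambda = 0.1 is still within 1 SE of that peak, so the rule prefers the sparser model at 0.1.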