Abstract

Objective:

In this paper, we evaluate face, content and construct validity of the da Vinci Surgical Skills Simulator (dVSSS) across 3 surgical disciplines.

Methods:

In total, 48 participants from urology, gynecology and general surgery participated in the study as novices (0 robotic cases performed), intermediates (1–74) or experts (≥75). Each participant completed 9 tasks (peg board level 2, match board level 2, needle targeting, ring and rail level 2, dots and needles level 1, suture sponge level 2, energy dissection level 1, ring walk level 3 and tubes). The Mimic Technologies software scored each task from 0 (worst) to 100 (best) using several predetermined metrics. Face and content validity were evaluated by a questionnaire administered after task completion. The Wilcoxon test was used to perform pairwise comparisons.

Results:

The expert group comprised 6 attending surgeons. The intermediate group included 4 attending surgeons, 3 fellows and 5 residents. The novices included 1 attending surgeon, 1 fellow, 13 residents, 13 medical students and 2 research assistants. The median numbers of robotic cases performed by experts and intermediates were 250 and 9, respectively. The median overall realism score (face validity) was 8/10. Experts rated the usefulness of the simulator as a training tool for residents (content validity) as 8.5/10. For construct validity, experts outperformed novices in all 9 tasks (p < 0.05). Intermediates outperformed novices in 7 of 9 tasks (p < 0.05); there were no significant differences in the energy dissection and ring walk tasks. Finally, experts scored significantly better than intermediates in only 3 of 9 tasks (match board, dots and needles and energy dissection) (p < 0.05).

Conclusions:

This study confirms the face, content and construct validities of the dVSSS across urology, gynecology and general surgery. A larger sample size and more complex tasks are needed to further differentiate intermediates from experts.

Introduction

The American College of Surgeons has recommended simulation-based training for surgical trainees to increase proficiency and patient safety in light of recent restrictions to trainee work hours and increased concerns over patient safety.1 The first validated low-fidelity objective training and assessment tool for basic laparoscopic skills is the McGill Inanimate System for Training and Evaluation of Laparoscopic Skills (MISTELS), which has been adapted by the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) for the Fundamentals of Laparoscopic Surgery (FLS) program.2 Currently, FLS certification is required for candidates applying for certification from the American Board of Surgery. Furthermore, construct validity of MISTELS has been established for urology trainees and attending urologists.3 Recently, the American Urological Association’s Committee on Laparoscopy, Robotic and New Technology modified the original MISTELS/FLS program into a more urocentric curriculum, the Basic Laparoscopic Urologic Surgery (BLUS) skills curriculum, by replacing the endoloop and extra-corporeal knot tying exercises with a novel clip-applying task.4 The construct validity of BLUS has been recently demonstrated for the assessment of basic laparoscopic skills of urologists.4

With the introduction of the da Vinci (Intuitive Surgical, Inc., Sunnyvale, CA) robotic-assisted laparoscopic surgery, the need for training and assessment of more complex robotic-assisted laparoscopic skills has increased. In one study comparing novice robotic surgeons performing the intracorporeal suturing exercise of the FLS program using standard laparoscopy versus da Vinci robotic assistance, participants found standard laparoscopy to be more physically demanding and preferred the robotic platform (62 vs. 38%, p < 0.01) despite achieving significantly higher suturing scores with standard laparoscopy when compared with robotic assistance.5 Therefore, there is increasing demand from both patients and surgeons to incorporate this technology. The Ontario Health Technology Assessment found that despite high startup and maintenance costs, robotic-assisted laparoscopic hysterectomies and prostatectomies were associated with lower blood loss and hospitalization length.6 However, robotic-assisted laparoscopic surgery requires a unique set of skills that are not measured on the MISTELS, FLS or BLUS curricula.

Currently, there are three commercially available robotic platform simulators. The face and content validity of the Robotic Surgical Simulator (RoSS) (Simulated Surgical Systems; Williamsville, NY) have been demonstrated.7,8 The original software developed by Mimic Technologies for the second simulator, namely the da Vinci Trainer (dV-Trainer) (Mimic Technologies, Inc., Seattle, WA), has been recently adapted as a “back-pack” to be used with the Si console of the da Vinci robot, thus creating a third simulator, namely the da Vinci Surgical Skills Simulator (dVSSS) (Intuitive Surgical, Inc., Sunnyvale, CA). Although the construct validity of the dV-Trainer has been previously demonstrated, previous studies only included novices and experts, without a group of participants with an intermediate level of robotic-assisted laparoscopic surgical expertise.9–11 The advantage of the dVSSS over the dV-Trainer is that it uses the same platform as in the operating theatre, namely the Si console of the da Vinci robot. This means the trainee acquires skills using the same foot and finger clutch controls, as well as the endowrist manipulations that are used in the operating theatre. Although the dVSSS has been previously validated, these studies have shortcomings. Thus, the aim of this present pilot study was to confirm face, content and construct validity of the dVSSS using only 9 tasks with 3 levels of participants (novice, intermediate and expert) from 3 specialties of general surgery, gynecology and urology at a Canadian robotic-assisted laparoscopic surgery training centre.

Methods

After obtaining Institutional Research Ethics Board approval (Protocol 12-049), 48 participants were recruited across the specialties of urology, gynecology and general surgery between March 19 and April 17, 2012 (Table 1). This included attending surgeons, fellows (Post-graduate years [PGY] >5), residents (PGY 1–5) and medical students. Since previous studies on the learning curve for robotic-assisted laparoscopic radical prostatectomy (RALP) have shown that outcomes improve after 70 cases, experts were defined as those who had performed 75 or more robotic cases independently.12 Therefore, participants who had performed between 1 and 74 robotic cases were classified in the intermediate group, while participants without any previous robotic experience were included in the novice group (Table 1).
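The grouping rule can be sketched in a few lines of Python. This is an illustrative sketch only and not part of the study's analysis; the function name `classify_experience` is hypothetical, and the thresholds follow the case ranges reported in the abstract and Table 1 (0 cases, 1–74 cases, 75 or more cases).

```python
def classify_experience(robotic_cases: int) -> str:
    """Assign a participant to a study group from the number of robotic
    cases performed independently (hypothetical helper, for illustration).

    Thresholds assumed from the abstract: novice = 0, intermediate = 1-74,
    expert = 75 or more.
    """
    if robotic_cases < 0:
        raise ValueError("robotic_cases cannot be negative")
    if robotic_cases == 0:
        return "novice"
    if robotic_cases < 75:
        return "intermediate"
    return "expert"


# Example: the median case counts reported for each group.
for cases in (0, 9, 250):
    print(cases, classify_experience(cases))
```

The boundary case of exactly 75 cases falls in the expert group under these assumed thresholds.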

Table 1.

Participant demographics

| Group | Novice (n=30) | Intermediate (n=12) | Expert (n=6) |
|---|---|---|---|
| Position: | | | |
| • Attending | 1 | 4 | 6 |
| • Fellow | 1 | 3 | – |
| • Resident | 13 | 5 | – |
| • Medical student | 13 | – | – |
| • Research assistant | 2 | – | – |
| Specialty: | | | |
| • Urology | 9 | 7 | 3 |
| • Gynecology | 6 | 3 | 3 |
| • General surgery | 2 | 2 | – |
| Median age (range) (years) | 27.5 (2–56) | 34.5 (27–48) | 51 (37–53) |
| Median experience (range) (years) | | | |
| • Surgical | 0 (0–25) | 6 (3–17) | 19 (9–28) |
| • Laparoscopic | 0 (0–7) | 4 (0–12) | 11.5 (8–18) |
| • Robotic | 0 | 2 (0–4) | 4 (2–5) |
| Median robotic cases performed (range) | 0 | 9 (20–45) | 250 (75–390) |

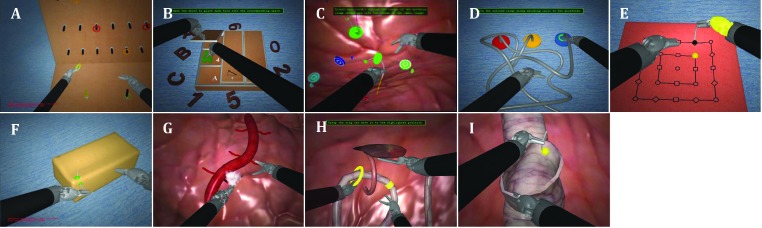

The dVSSS using Mimic software (Mimic Technologies, Inc., Seattle, WA) has been previously described.13–16 Each participant was provided with a manual to read. Novice group participants were provided one hands-on session for 10 minutes to teach them how to use the simulator’s clutches and finger controls. There was also a short video provided by the simulator that preceded each task, explaining the objectives and the difficulty of each task. Each participant completed 9 tasks (peg board level 2, match board level 2, needle targeting, ring and rail level 2, dots and needles level 1, suture sponge level 2, energy dissection level 1, ring walk level 3, and tubes) (Fig. 1). These tasks were chosen by a focus group of experts to represent the various technical skills required for robotic surgery, including endowrist manipulation, camera control, clutching, dissection, energy control, fourth arm control, needle control, as well as basic and advanced needle driving. For each participant, the Mimic simulator software calculated a total score for each task, ranging from 0 (worst) to 100 (best).

Fig. 1.

The 9 da Vinci Surgical Skills Simulator (dVSSS) exercises used were as follows: (A) Peg board level 2: to assess camera and endowrist manipulations when transferring rings from a pegboard onto 2 pegs on the floor. (B) Match board level 2: to assess clutching and endowrist manipulation when picking up objects and placing them into their corresponding places. The objective was to avoid excessive instrument strain. (C) Needle targeting: to assess endowrist manipulation and needle control when inserting needles through a pair of matching colored targets. (D) Ring and rail level 2: to assess camera control and clutching as well as endowrist manipulation when picking up 3 colored rings and guiding them along their matching colored railings. (E) Dots and needles level 1: to assess endowrist manipulation and needle control as well as needle driving technique when inserting a needle through several pairs of targets that have various spatial positions. (F) Suture sponge level 2: to assess endowrist manipulation, camera control, needle control as well as needle driving technique when inserting and extracting a needle through several pairs of targets on the edge of a sponge. (G) Energy dissection level 1: to assess endowrist manipulation and dissection as well as energy control when cauterizing and cutting small branching blood vessels. (H) Ring walk level 3: to assess endowrist manipulation, camera control, clutching and fourth arm control when guiding a ring along a curved vessel and retracting obstacles using the fourth arm. (I) Tubes: to assess endowrist manipulation, needle control and needle driving technique when performing a tubular anastomosis. 2013 Intuitive Surgical, Inc.

The score is based on a set of predetermined metrics including the following: time to complete exercise, economy of motion, instrument collision, excessive instrument force, master work space range, drops, instruments out of view, missed targets, misplaced energy, broken vessels and blood loss volume. Not all metrics were measured for all tasks. The first attempt score was used in the present study to avoid effects of practice. A detailed score report was obtained for each participant after the first attempt of the task.

Immediately after completing the 9 tasks, each participant was asked to complete a questionnaire (Appendix 1). This was used to evaluate face validity (how realistic is the simulator?) and content validity (how useful is the simulator as a training tool?). Overall percentile scores for each of the 9 tasks were compared among the 3 groups to determine construct validity.

Statistical analysis

Pairwise comparisons (novice vs. intermediate; intermediate vs. expert; novice vs. expert) of scores on the 9 tasks and 11 metrics were conducted using a 2-sided Wilcoxon test. Using Bonferroni adjustment for multiple comparisons, a p value less than 0.0167 (0.05/3) was considered as evidence against the null hypothesis (no difference between the two groups under comparison).
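As a worked illustration of the Bonferroni adjustment described above, the following Python sketch computes the adjusted threshold for 3 pairwise comparisons. The function names and the example p values are hypothetical; they are not taken from the study's analysis code or results.

```python
def bonferroni_threshold(alpha: float = 0.05, n_comparisons: int = 3) -> float:
    """Return the per-comparison significance threshold (alpha / m)."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return alpha / n_comparisons


def is_significant(p_value: float, alpha: float = 0.05,
                   n_comparisons: int = 3) -> bool:
    """True if p_value falls below the Bonferroni-adjusted threshold."""
    return p_value < bonferroni_threshold(alpha, n_comparisons)


# Hypothetical p values, for illustration only.
print(round(bonferroni_threshold(), 4))  # 0.0167
print(is_significant(0.01))              # True
print(is_significant(0.03))              # False
```

Under this adjustment, a p value between 0.0167 and 0.05 would not count as evidence against the null hypothesis, even though it falls below the unadjusted 0.05 level.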

Results

There were a total of 48 participants: 30 (62.5%) were novices, 6 (12.5%) were experts (all 6 experts were attending surgeons) and 12 (25%) were intermediates (Table 1). There were 19 participants from urology, 12 from gynecology (including 2 gynecology research assistants), 4 from general surgery and 13 medical students. The experts had a median of 11.5 years of laparoscopic experience, including 4 years of robotic-assisted laparoscopic experience. The median number of robotic-assisted laparoscopic cases was 9 and 250 for the intermediate and expert groups, respectively (Table 1).

In terms of face validity, all participants, including the expert group, highly rated (7–8 out of 10 on a Likert scale) the dVSSS for overall realistic representation, 3-dimensional graphics, instrument handling and precision (Table 2). In terms of content validity, all participants, including the expert group, agreed that the dVSSS was useful in assessing and training trainees in robotic skills (scores of 8–10 out of 10). Furthermore, participants, including the expert group, agreed that the dVSSS should be part of a residency training program with robotic training (median score of 10 out of 10) (Table 2). In addition, the expert group recommended 10 (4–30) hours of training for senior residents and 8 (5–20) hours of training for fellows on the dVSSS.

Table 2.

Face and content validity scores (medians and ranges)

| Face validity | All groups scores | Expert scores |
|---|---|---|
| • Realistic | 8 (4–10) | 7 (6–8) |
| • 3-dimensional graphics | 8 (4–10) | 7 (6–8) |
| • Instrument handling and ergonomics | 8 (6–10) | 8 (6–9) |
| • Precision of the virtual reality platform | 8 (5–10) | 7.5 (7–9) |
| Content validity | | |
| • Useful for training residents | 9 (6–10) | 8.5 (6–9) |
| • Useful for training fellows | 9 (5–10) | 8 (6–9) |
| • All novices to robotic surgery should have training on the da Vinci Skills Simulator prior to performing robotic surgery on patients | 10 (6–10) | 10 (8–10) |
| • Useful in assessing the resident’s skill to perform robotic surgery | 8 (3–10) | 8 (5–9) |
| • Useful in assessing the progression of the trainee’s robotic skills | 8 (2–10) | 8 (5–10) |
| • The da Vinci Skills Simulator should be included in all residency programs with robotic training | 10 (4–10) | 10 (4–10) |

Likert scale: 1 indicating definitely disagree and 10 indicating definitely agree.

For construct validity, experts outperformed novices in all 9 tasks (p < 0.05). Intermediates outperformed novices in 7 of 9 tasks (p < 0.05); there were no significant differences in the energy dissection level 1 and ring walk level 3 tasks. Finally, experts scored significantly better than intermediates in only 3 of 9 tasks (match board level 2, dots and needles and energy dissection level 1) (p < 0.05) (Table 3). When the different metrics were compared among the 3 groups, the novice group was significantly different from the intermediate and expert groups in time to complete exercise, economy of motion, instrument collision, excessive instrument force, and total task score (Table 4). Master workspace range was the only metric with significant differences between expert and intermediate groups and between expert and novice groups (Table 4). However, the scores for this metric between novice and intermediate groups were similar.

Table 3.

All 9 task scores stratified by group (median and range)

| Exercise | Novice (n=30) | Intermediate (n=12) | Expert (n=6) | Intermediate vs. novice (p value) | Expert vs. intermediate (p value) | Expert vs. novice (p value) |
|---|---|---|---|---|---|---|
| Peg board (Level 2) | 79 (31–98) | 91.5 (76–99) | 91.5 (85–99) | 0.01 | 0.71 | 0.01 |
| Match board (Level 2) | 64 (27–84) | 75.5 (40–81) | 85.5 (76–92) | 0.03 | 0.01 | <0.01 |
| Needle targeting | 71.5 (6–90) | 94 (71–100) | 94 (86–100) | <0.01 | 0.64 | <0.01 |
| Ring and rail (Level 2) | 40 (8–75) | 63 (32–91) | 71 (53–87) | 0.01 | 0.34 | <0.01 |
| Dots and needles (Level 1) | 57 (5–87) | 80 (28–97) | 93 (74–100) | 0.01 | 0.05 | <0.01 |
| Suture sponge (Level 2) | 58 (21–83) | 77 (53–97) | 86.5 (72–92) | <0.01 | 0.57 | <0.01 |
| Energy dissection (Level 1) | 76 (36–97) | 84 (61–97) | 96 (87–99) | 0.11 | 0.03 | <0.01 |
| Ring walk (Level 3) | 37.5 (0–78) | 53 (6–82) | 65.5 (39–88) | 0.15 | 0.19 | 0.02 |
| Tubes | 50 (28–79) | 67 (42–99) | 78 (53–83) | 0.01 | 0.19 | <0.01 |

Table 4.

Differences among groups in metrics measured

| Metric | Novice | Intermediate | Expert | Novice vs. intermediate (p value) | Expert vs. intermediate (p value) | Expert vs. novice (p value) |
|---|---|---|---|---|---|---|
| Time to complete exercise | 2801 (1400–5823) | 1795 (1258–3777) | 1334 (1122–2327) | <0.01 | 0.17 | <0.01 |
| Economy of motion | 3357 (2352–9851) | 2580 (1987–3546) | 2178 (2160–2959) | <0.01 | 0.53 | <0.01 |
| Instrument collisions | 56 (23–180) | 25 (12–80) | 25 (10–37) | <0.01 | 0.56 | <0.01 |
| Excessive instrument force | 77 (77–554) | 7 (1–164) | 3 (0–70) | <0.01 | 0.86 | 0.03 |
| Master workspace range | 87 (78–118) | 88 (76–101) | 74 (72–91) | 0.14 | 0.04 | <0.01 |
| Drop | 1 (0–23) | 1 (0–3) | 0 (0–1) | 0.25 | 0.63 | 0.16 |
| Instruments out of view | 24 (1–335) | 9 (2–107) | 5 (0–71) | 0.11 | 0.90 | 0.19 |
| Missed targets | 42 (17–193) | 24 (11–110) | 15 (13–44) | 0.03 | 0.41 | 0.01 |
| Misapplied energy time | 13 (3–73) | 10 (4–37) | 8 (3–22) | 0.37 | 0.39 | 0.11 |
| Broken vessels | 0 (0–2) | 0 (0–0) | 0 (0–0) | 0.22 | 1.00 | 0.34 |
| Blood loss volume | 27 (0–228) | 22 (0–256) | 0 (0–11) | 0.65 | 0.19 | 0.08 |
| Total task score | 520 (178–686) | 671 (460–799) | 766 (642–823) | <0.01 | 0.18 | <0.01 |

Discussion

Currently, the American Board of Surgery requires candidates to pass the FLS curriculum to ensure they have acquired basic laparoscopic skills during their training. However, there is no such standardized curriculum for assessing robotic surgical skills; with the ongoing growth of robotic surgery, we are likely to see such a curriculum in the near future. Currently, there are 3 robotic simulators commercially available (RoSS, dV-Trainer and dVSSS). The aim of our study was to confirm the validation of the dVSSS using only 9 tasks with 3 levels of participants (novice, intermediate and expert) from 3 specialties of general surgery, gynecology and urology at a Canadian robotic-assisted laparoscopic surgery training centre.

Out of the 48 study participants, 19 (40%) were from urology, 12 (25%) were from gynecology, 4 (8%) were from general surgery, and 13 (27%) were medical students. Previous studies had an overwhelming representation from urology.13–15 The only other dVSSS validation study that included general surgeons is the one by Finnegan and colleagues.16 Therefore, the current study included a participant cohort that is more representative of current robotic surgeons, including urologists, gynecologists and general surgeons. This is fitting given the recent expansion of robotic surgery within the fields of gynecology and general surgery. In 2 previous studies, the novice group consisted exclusively of medical students; in our study, the novice group included residents, fellows and an attending surgeon with no robotic experience in addition to medical students.13,14 Therefore, the novice group in the present study may have better represented surgical trainees who are novice robotic surgeons.

Various tasks and repetitions have been used. For example, Liss and colleagues used 3 tasks (peg board 1, peg board 2 and tubes) and Kelly and colleagues used 5 tasks (camera targeting 1, energy switching 1, threading rings 1, dots and needles 1 and ring and rail 1).14,15 On the other hand, Hung and colleagues used 10 tasks with 3 repetitions, while Finnegan and colleagues used 24 tasks.13,16 While more data are gathered by increasing the number of tasks and repetitions, this could create 2 issues. First, in their assessment study, Finnegan and colleagues found that fatigue and boredom occurred in cases in which it took up to 4 hours to perform the assessment.16 The second issue is the inadvertent effect of training while assessing the skill level of participants over multiple repetitions of the same task. Indeed, when Teishima and colleagues compared certified expert laparoscopic urologists with novice laparoscopic urologists, significant differences in their performance on the suture sponge task of the dV-Trainer were only seen at the second and third trials, while there were no significant differences between the two groups at the first and fourth attempts.17 This has several implications. First, even expert laparoscopic surgeons may need to become familiar with the robotic platform by performing a specific task. Second, some tasks may require a few repetitions to achieve proficiency. Therefore, for the present study, the number of tasks was limited to 9 to avoid unnecessary fatigue and a prolonged assessment period. Furthermore, the first attempt was used to avoid the effects of training.

In terms of face and content validities, all participants, including the expert group, highly rated the dVSSS as a realistic simulator useful for training residents in robotic skills (7–10 on a Likert scale of 1–10) (Table 2). This is similar to the findings of other studies.13–15 However, similar to other studies, the face and content validities of dVSSS were not compared to those of other robotic simulators, since the dVSSS was the only robotic simulator available at the study centre. While the advantage of dVSSS includes using the same robotic platform (console) used in the operating theatre, the same could also be a disadvantage since the console/simulator would only be available outside of operating theatre working hours. Therefore, the other simulators, such as the dV-Trainer and the RoSS, would be available during working hours for training. For the present study, the dVSSS was used only for assessment and training of robotic skills and thus it was not available for clinical cases.

The present study confirms the construct validity of the dVSSS in that experts outperformed novices in all 9 tasks (p < 0.05). Intermediates outperformed novices in 7 of 9 tasks (p < 0.05); there were no significant differences in the energy dissection level 1 and ring walk level 3 tasks. Finally, experts scored significantly better than intermediates in only 3 of 9 tasks (match board level 2, dots and needles and energy dissection level 1) (p < 0.05) (Table 3). Similarly, when metrics were compared among the 3 groups, there were significant results between novice and intermediate groups and between novice and expert groups (Table 4). This is similar to the other two dVSSS validation studies where there were no significant differences between expert robotic surgeons and intermediates (senior residents).14,15 Finnegan and colleagues showed significant differences between the expert and intermediate groups in only 6 out of 24 exercises tested (dots and needles level 2, energy dissection level 1, energy switching, suture sponge level 2, thread the rings, and tubes).16 The only dVSSS validation study to show significant differences between intermediates and experts was the one by Hung and colleagues.13 However, given that study participants performed 3 repetitions for each of the 10 tasks, this may have increased the sample size of the collected data despite introducing a confounding effect of practice.

The lack of significant differences between the intermediate and expert groups on most of the exercises (6 out of 9) is a limitation of the present study and of the dVSSS. Perhaps if the intermediates and experts were allowed to practice prior to collecting data, their scores could have been significantly different in more tasks. In addition, 3 trials rather than only 1 trial would have resulted in a narrower standard deviation and perhaps more significant differences between the intermediate and expert groups. In the present study, previous independent performance of 75 robotic cases was arbitrarily chosen as the cut-off between the intermediate and expert groups. Finnegan and colleagues showed that after 90 robotic cases, there was a plateau of the mean overall score on the dVSSS.16 Other studies have used cut-offs of 100 or 150 cases to define experts. Furthermore, in the present study, the maximum number of robotic cases performed was 390, whereas in other studies, the expert group contained participants with 800 to 1500 robotic cases. Another limitation of the present study is that the expert group was small (only 6 participants). It is expected that as robotic surgical experience advances, a larger number of expert participants with larger robotic case logs would be included in future studies. Furthermore, the future generation of robotic simulators needs to incorporate more complex robotic tasks, such as robotic surgeries, with anatomical variations to better distinguish experts from intermediates.

Conclusion

Notwithstanding the limitations of our present pilot study, it confirms the face, content and construct validities of the dVSSS across the 3 surgical disciplines of urology, gynecology and general surgery. A larger sample size and more complex tasks are needed to further differentiate intermediates from experts.

Appendix 1. Da Vinci Surgical Skill Simulator Study Questionnaire

| Item | Response |
|---|---|
| Name | |
| Age | |
| Department | |
| Position | Attending; Fellow; Resident —> Level R; Medical student; Nurse; Other |
| Years in practice | Surgical; Laparoscopic; Robotic |
| Previous robotic experiences | No. of cases performed at the console as primary surgeon; No. of cases in the last year; No. of cases performed at the console as assistant |
| Previous experience with laparoscopic simulators | YES / NO |
| Previous experience with Da Vinci Skill Simulator | YES / NO |
| Previous experience with other robotic simulators | YES / NO (Specify) |

From a scale of 1 to 10, answer the following question. (If the scale doesn’t apply to you, mark on “not competent to answer this question.”)

Response options for each item below: 1 (Definitely disagree), 2, 3, 4, 5, 6, 7, 8, 9, 10 (Definitely agree), or “Not competent to answer this question.”

- Is the skill simulator realistic?
- The 3D graphics are realistic.
- The instrument handling and ergonomics are realistic.
- The level of precision of the virtual reality platform in the Skill Simulator was realistic.
- The Skill Simulator is useful for training residents in robotic surgery.
- The Skill Simulator is useful for training fellows in robotic surgery.
- All novices to robotic surgery should have training on the Skill Simulator prior to performing robotic surgery on patients.
- The Skill Simulator is useful in assessing the resident’s skill to perform robotic surgery.
- The Skill Simulator is useful in assessing the progression of the trainee’s robotic skills.
- The Skill Simulator should be included in all residency programs with robotic training.
- As a resident or fellow, training on the Skill Simulator will improve your time on the console.
- Previous laparoscopic experience helps in obtaining skills on the robotic simulator.
- Experience with video games (e.g., PS, X-Box, etc) helps improve the performance with the robotic simulator.
- The work on the Skill Simulator helps you understand robotic surgery.
- The work on the Skill Simulator attracts you to do robotic surgery.

| Open question | Response |
|---|---|
| In your opinion, how many total hours should be spent on the Skill Simulator for the purpose of training prior to sitting on the surgical console? | Junior residents; Senior residents; Fellows |
| What would you improve in the Skill Simulator? | |

Footnotes

Competing interests: This study was supported by an unrestricted educational grant from Abbott Pharmaceuticals and Paladin Labs Inc., Canada. Dr. Xianming Tan of the Biostatistics Core Facility, McGill University Health Centre Research Institute was commissioned to perform the statistical analysis.

This paper has been peer-reviewed.

References

- 1. Healy GB. The college should be instrumental in adapting simulators to education. Bull Am Coll Surg. 2002;87:10–1.
- 2. Fried GM. FLS assessment of competency using simulated laparoscopic tasks. J Gastrointest Surg. 2008;12:210–2. doi: 10.1007/s11605-007-0355-0.
- 3. Dauster B, Steinberg AP, Vassiliou MC, et al. Validity of the MISTELS simulator for laparoscopy training in urology. J Endourol. 2005;19:541–5. doi: 10.1089/end.2005.19.541.
- 4. Sweet RM, Beach R, Sainfort F, et al. Introduction and validation of the American Urological Association Basic Laparoscopic Urologic Surgery skills curriculum. J Endourol. 2012;26:190–6. doi: 10.1089/end.2011.0414.
- 5. Stefanidis D, Hope WW, Scott DJ. Robotic suturing on the FLS model possesses construct validity, is less physically demanding, and is favored by more surgeons compared with laparoscopy. Surg Endosc. 2011;25:2141–6. doi: 10.1007/s00464-010-1512-1.
- 6. Medical Advisory Secretariat. Robotic-assisted minimally invasive surgery for gynecologic and urologic oncology: an evidence-based analysis. Ont Health Technol Assess Ser. 2010;10:1–118. http://www.health.gov.on.ca/english/providers/program/mas/tech/reviews/pdf/rev_robotic_surgery_20101220.pdf. Accessed June 26, 2013.
- 7. Seixas-Mikelus SA, Kesavadas T, Srimathveeravalli G, et al. Face validation of a novel robotic surgical simulator. Urology. 2010;76:357–60. doi: 10.1016/j.urology.2009.11.069.
- 8. Seixas-Mikelus SA, Stegemann AP, Kesavadas T, et al. Content validation of a novel robotic surgical simulator. BJU Int. 2011;107:1130–5. doi: 10.1111/j.1464-410X.2010.09694.x.
- 9. Lendvay TS, Casale P, Sweet R, et al. VR robotic surgery: randomized blinded study of the dV-Trainer robotic simulator. Stud Health Technol Inform. 2008;132:242–4.
- 10. Kenney PA, Wszolek MF, Gould JJ, et al. Face, content, and construct validity of dV-trainer, a novel virtual reality simulator for robotic surgery. Urology. 2009;73:1288–92. doi: 10.1016/j.urology.2008.12.044.
- 11. Sethi AS, Peine WJ, Mohammadi Y, et al. Validation of a novel virtual reality robotic simulator. J Endourol. 2009;23:503–8. doi: 10.1089/end.2008.0250.
- 12. Raman JD, Dong S, Levinson A, et al. Robotic radical prostatectomy: operative technique, outcomes, and learning curve. JSLS. 2007;11:1–7.
- 13. Hung AJ, Zehnder P, Patil MB, et al. Face, content and construct validity of a novel robotic surgery simulator. J Urol. 2011;186:1019–24. doi: 10.1016/j.juro.2011.04.064.
- 14. Kelly DC, Margules AC, Kundavaram CR, et al. Face, content, and construct validation of the Da Vinci Skills Simulator. Urology. 2012;79:1068–72. doi: 10.1016/j.urology.2012.01.028.
- 15. Liss MA, Abdelshehid C, Quach S, et al. Validation, correlation, and comparison of the da Vinci Trainer and the da Vinci Surgical Skills Simulator using the Mimic software for urologic robotic surgical education. J Endourol. 2012;26:1629–34. doi: 10.1089/end.2012.0328.
- 16. Finnegan KT, Meraney AM, Staff I, et al. da Vinci Skills Simulator construct validation study: correlation of prior robotic experience with overall score and time score simulator performance. Urology. 2012;80:330–5. doi: 10.1016/j.urology.2012.02.059.
- 17. Teishima J, Hattori M, Inoue S, et al. Impact of laparoscopic experience on the proficiency gain of urologic surgeons in robot-assisted surgery. J Endourol. 2012;26:1635–8. doi: 10.1089/end.2012.0308.