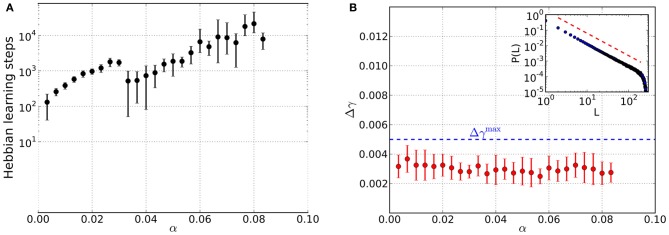

Figure 3.

Results from networks including Hebbian and homeostatic learning of synaptic weights, respectively, for different values of the load parameter α. For each value of α data is taken from 10 trials and error bars mark one standard deviation from the mean. (A) Total number of steps in Hebbian learning needed to converge to a state that is both critical and an associative memory of the stored patterns. The discontinuity near α≈ 0.03 appears to be due to the finite size of the basins of attraction: while for low loading ratios α a basin of attraction of several bits can be achieved, now only a single bit is corrected in the course of the learning which is faster achievable than before. (B) Average mean squared deviation Δγ from the best-fit power law. Since all data points lie below the threshold of Δγmax = 0.005 (blue dashed line), avalanche size distributions are critical over the whole range of α. An example avalanche size distribution P(L) in the converged state is illustrated in the inset (red dashed line indicates slope of the best-fit power law).