Abstract

Although positive and negative images enhance the visual processing of young adults, recent work suggests that a life-span shift in emotion processing goals may lead older adults to avoid negative images. To examine this tendency for older adults to regulate their intake of negative emotional information, the current study investigated age-related differences in the perceptual boost received by probes appearing over facial expressions of emotion. Visually-evoked event-related potentials (ERPs) were recorded from the scalp over cortical regions associated with visual processing as a probe appeared over facial expressions depicting anger, sadness, happiness, or no emotion. The activity of the visual system in response to each probe was operationalized in terms of the P1 component of the ERP evoked by the probe. For young adults, the visual system was more active (i.e., greater P1 amplitude) when the probes appeared over any of the emotional facial expressions. However, for older adults, the visual system displayed reduced activity when the probe appeared over angry facial expressions.

Keywords: Emotion, Aging, Visual Attention, ERP, Social Cognitive Neuroscience

Research examining the interaction between emotion and cognition demonstrates that emotional stimuli enhance visual processing, especially when the stimuli depict negative emotions like fear and anger (e.g., Compton, 2003; Smith, Cacioppo, Larsen, & Chartrand, 2003). For example, Phelps, Ling, and Carrasco (2006) presented fearful or neutral face cues at a central location and examined the effects of this face cue on contrast thresholds obtained from a subsequent discrimination task performed on peripherally presented stimuli. The presence of the fearful face enhanced contrast sensitivity compared to the neutral face. Even though the fearful face was presented centrally, visual processing of peripheral stimuli was enhanced. In another experiment, the fearful and neutral face cues were presented peripherally to involuntarily capture visuospatial attention. The fearful face enhanced contrast sensitivity above and beyond the effects of visuospatial attention alone. These results are consistent with the idea that emotion enhances early visual processing and that emotion can also enhance the effect of visuospatial attention on visual processing.

For the most part, samples in these studies have been limited to college-aged individuals. Recent life-span developmental research suggests that emotional priorities shift with age (Carstensen, Mikels, & Mather, 2006), and that the priority given to negative emotions wanes in older adulthood, giving way to a motivation to minimize the emotional ramifications of negative experiences (Carstensen, 2006). Accordingly, older adults report that they experience less negative affect and that they have more control over their emotions than do young adults (Carstensen, Pasupathi, Mayr, & Nesselroade, 2000; Lawton, 2001). Likewise, older adults are more effective than young adults at employing regulatory strategies to cope with negative emotions (Blanchard-Fields, 2007; Blanchard-Fields, Mienaltowski, & Seay, 2007). An intriguing possibility advanced by Mather and Carstensen (2003) is that this motivation for emotion regulation can influence even early processing of emotional stimuli and may result in a qualitative change in the relationship between emotion and visual processing in the latter half of life, such that older adults tend to shift visual processing resources away from negative images and toward positive ones.

Recent findings from studies utilizing a wide variety of methodological techniques provide converging evidence to support the proposal that the relationship between emotional valence and visual processing changes as individuals grow older. Using a dot-probe paradigm, Mather and Carstensen (2003) presented two facial expressions side by side, an emotional expression and a neutral expression. Their results indicated that older adults selectively devoted fewer visual processing resources to negative expressions compared to young adults. Moreover, eye-tracking research has shown that older adults are more likely than young adults to gradually avert their gaze away from negative facial expressions and towards positive expressions during a preferential looking task (for review, Murphy & Isaacowitz, 2008). Using fMRI, Mather and colleagues (2004) demonstrated that negative emotional scenes elicit less amygdala activation in older adults than in young adults. Overall, these findings suggest that older adults have a natural tendency to minimize their exposure to negative emotional input while maximizing their focus on positive emotional information.

The current study utilizes an ERP design to examine the impact that the emotional content of a background stimulus has on the ability of a subsequent focal stimulus to activate the visual system of young and older adults. Previous ERP research examined the influence that the emotional nature of a scene – positive, negative, or neutral – had on a commonly measured neural correlate of categorization novelty (Kisley, Wood, & Burrows, 2007) known as the late positive potential (LPP) in adults of all ages. This ERP component is measured over the parietal lobe at the mid-line of the scalp. Modulation of the LPP takes place when an infrequently presented stimulus (or “oddball”) appears amongst stimuli that are presented more frequently (Luck, 2005). For younger adults, the LPP was greatest when the oddball stimulus was an emotionally negative scene. However, the strength of the LPP for the negative stimuli declined linearly with age into late adulthood. Conversely, the strength of the LPP elicited by infrequently presented positive scenes did not change with age across adulthood. The authors interpret this finding as reflecting an age-related reduction in the importance placed on processing negative emotional stimuli. This supports Mather and Carstensen’s (2003) suggestion that the visual processing of emotional stimuli changes in older adulthood, as older adults appeared to suppress their processing of negative emotional stimuli. However, there was no evidence for a corresponding enhancement in the processing of positive stimuli. Moreover, the LPP component of the ERP is complex, with multiple neural generators (e.g., Bledowski, Prvulovic, Hoechstetter, Scherg, et al., 2004), so its bearing on the emotional enhancement of early visual processing is not straightforward.

In the present study, we employed an ERP paradigm to specifically examine the influence that the emotion found on a facial image had on the amount of early visual cortical activity evoked by a probe appearing over the facial image shortly after its initial onset. In this case, we were interested in the modulation of the P1 component of the ERP elicited by the probe when it appeared over an image depicting a neutral, happy, sad, or angry facial expression. The P1 is a component of the visually-evoked potential that peaks 60–150 ms post-stimulus and is maximal at posterior electrode sites. Increases in P1 amplitude are thought to reflect an increase in sensory gain in extrastriate visual areas (Hillyard, Vogel, & Luck, 1998), and P1 amplitude has been shown to be influenced by attention allocation in both young and older adults (Curran, Hills, Patterson, & Strauss, 2001). We investigated whether exposure to centrally-presented images of angry, happy, and sad faces would differentially modulate P1 amplitudes evoked by probes appearing over these faces, and whether this modulation differed for young and older adults. An increase in P1 amplitude elicited by the probe in the presence of an emotional face indicates that the emotional expression enhanced early visual processing. As mentioned previously, Phelps, Ling, and Carrasco (2006) have shown that a fearful face cue can enhance contrast sensitivity. Our design extends these results in several ways. First, we used happy, sad, and angry facial expressions to generalize the results beyond fearful faces. Second, we examined the ability of emotional facial expressions to differentially enhance early visual processing within young and older adult samples. Finally, we used an ERP technique to provide converging evidence that emotional expressions can enhance early visual processing, complementing the behavioral results obtained by Phelps, Ling, and Carrasco (2006).

Consistent with previous research, young adults were expected to display larger P1 amplitudes for probes appearing over all three types of emotional expressions relative to those appearing over neutral faces, with perhaps a greater enhancement for probes appearing over the angry and sad faces (i.e., negative emotional expressions) than for those appearing over the happy faces. Conversely, older adults were expected to display a different pattern of results. Specifically, relative to probes appearing over neutral faces, older adults were expected to display reduced P1 amplitude for probes appearing over the negative faces (i.e., angry and sad faces) and enhanced P1 amplitude for probes appearing over happy faces. Such an outcome would be consistent with prior research that demonstrates that young and older adults evince different patterns of visual processing when presented with emotional stimuli (Mather & Carstensen, 2005; Murphy & Isaacowitz, 2008). Given that previous ERP research (Kisley et al., 2007) failed to document differences in the strength of neurophysiological correlates associated with the categorization of positive and negative emotional stimuli, it was not clear whether positive facial expressions would lead to greater activation of the visual system of older adults than would negative expressions. In addition, because recent findings from eye-tracking research suggest that older adults may selectively avoid specific discrete negative emotions rather than all negative emotions (Isaacowitz, Wadlinger, Goren, & Wilson, 2006), we examined young and older adults’ probe P1 amplitudes separately for each expression used in this study.

Method

Participants

Data were collected from 31 adults: 16 young (50% women; age range = 18–31; M = 19.94; SD = 3.17) and 15 old (47% women; age range = 61–77; M = 69.53; SD = 4.14). Most of the participants were Caucasian (82.8%; African American = 8.6%; Asian = 5.7%; Other = 2.9%). On average, young and older adults reported having similar levels of education (i.e., some college). Young adults provided marginally higher ratings of overall subjective health than did older adults (1 = poor, 5 = excellent; young M = 4.13, SE = 0.20; old M = 3.53, SE = 0.22), t(33) = 1.97, p < .06, but young and older adults did not differ from one another in the extent to which health problems stood in their way of doing the things that they wanted to do in their daily lives (1 = not at all, 4 = a great deal; young M = 1.25, SE = 0.14; old M = 1.53, SE = 0.14). All participants gave written, informed consent before taking part in the experiment.

Stimuli and Procedure

Testing occurred under dim lighting in a sound-attenuating chamber. Participants sat in front of a computer monitor, with a viewing distance of approximately 57 cm. A chinrest was used to restrict head movement. E-Prime software (Psychology Software Tools, Pittsburgh, USA) was used to control the experiment and collect responses. Grayscale images of neutral, happy, angry, and sad expressions from 30 different young actors were used as stimuli. These stimuli were adapted from the MacBrain Face Stimulus Set (MacArthur Foundation Research Network on Early Experience and Brain Development, www.macbrain.org). These images included men and women, and they were ethnically diverse. In order to minimize stimulus-feature differences, only closed-mouth, medium-emotional intensity expressions were used. The images were equated for overall luminance and scaled to fit into a bounding box subtending 9.4°(w) × 12.1°(h) of visual angle.
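As a rough check on the stimulus geometry, the visual angles reported above can be converted to on-screen sizes for the stated viewing distance of approximately 57 cm. The sketch below assumes the standard relation size = 2 · d · tan(θ/2); the function name is illustrative and is not part of the original procedure.

```python
import math

def size_from_visual_angle(angle_deg, distance_cm=57.0):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

# Face bounding box reported in the text: 9.4 deg wide by 12.1 deg high.
face_w = size_from_visual_angle(9.4)
face_h = size_from_visual_angle(12.1)
print(f"face box: {face_w:.2f} cm x {face_h:.2f} cm")
```

At a viewing distance of 57 cm, 1° of visual angle corresponds to very nearly 1 cm on the display, so the sizes in centimeters are close to the angular values themselves.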

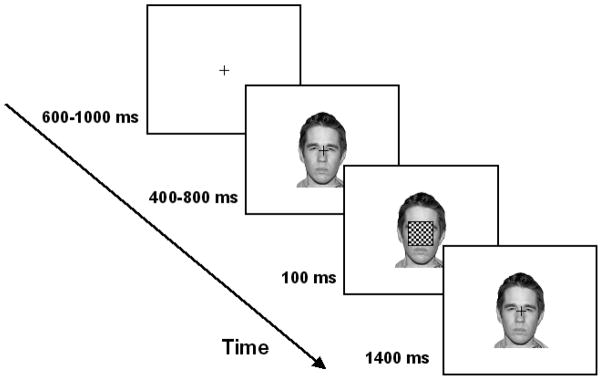

Participants completed a timed go/no-go task, as depicted in Figure 1. Trials began with a fixation cross (0.4°×0.4°; 0.3 cd/m²) positioned centrally on a white background (95.2 cd/m²). A facial image was presented, centered over the fixation point, after a random interval of 600–1000 ms (i.e., fixation period). The image remained on the display by itself for 400–800 ms (i.e., emotion manipulation period), after which a black-and-white checkerboard probe (5.7°×5.7°) flashed over the face for 100 ms. Participants were given a 1400 ms response interval to indicate the onset of this probe, and the participants were instructed to respond as quickly as possible. Both the fixation and emotion manipulation periods were randomly varied over a range of interval durations to eliminate systematic anticipatory effects in the response segments (Talsma & Woldorff, 2005). After a block of 44 practice trials, participants completed four blocks of 192 experimental trials (768 total; 192 trials per emotion). Of these, 10% were catch trials in which a probe never appeared and participants were instructed to withhold responses. Each face stimulus was randomly repeated eight times. Afterwards, all of the facial expressions displayed in the go/no-go task were once again presented to the participants in a forced-choice task that required them to indicate which one of the four emotions was expressed by each image.

Figure 1.

The sequence of events in a typical trial. Participants fixated on the center of the display (600–1000 ms) prior to the onset of a facial expression. Individual faces were presented for 400–800 ms, after which a black-and-white checkerboard probe flashed (100 ms) over the face. The participants’ task was to respond to the onset of the probe via key press. ERPs were time-locked to the onset of the probe.
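The trial structure described above can be sketched as a simple schedule generator. This is an illustration only, not the E-Prime script used in the study. It assumes that the jittered intervals were drawn uniformly in whole milliseconds, that emotions were balanced within each block, and that each trial was independently designated a catch trial with probability .10; none of these details are specified in the text.

```python
import random

EMOTIONS = ("neutral", "happy", "angry", "sad")

def make_block(rng, n_trials=192, catch_rate=0.10):
    """Return one block of trial dicts with jittered timing (all times in ms)."""
    # Assumption: emotions are balanced within a block (48 trials each).
    emotions = [e for e in EMOTIONS for _ in range(n_trials // len(EMOTIONS))]
    rng.shuffle(emotions)
    trials = []
    for emotion in emotions:
        trials.append({
            "emotion": emotion,
            "fixation_ms": rng.randint(600, 1000),  # fixation period
            "face_ms": rng.randint(400, 800),       # emotion manipulation period
            "probe_ms": 100,                        # checkerboard flash
            "response_window_ms": 1400,
            # Assumption: each trial is independently a catch trial (no probe).
            "catch": rng.random() < catch_rate,
        })
    return trials

rng = random.Random(0)  # fixed seed so the sketch is reproducible
schedule = [make_block(rng) for _ in range(4)]
n_total = sum(len(block) for block in schedule)
print(n_total)
```

Four blocks of 192 trials give the 768 experimental trials reported above, with 192 trials per emotion.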

Electrophysiology

Continuous EEG was recorded from 32 scalp electrodes positioned according to the extended 10–20 system (Nuwer, Comi, Emerson, Fuglsang-Frederiksen, et al., 1998). Additional bipolar leads were placed around the eyes to record the vertical and horizontal electrooculogram. Data from all electrodes were digitized at 512 Hz using an ActiveTwo biopotential measurement system (BioSemi, Amsterdam, Netherlands). Data from the scalp sites were re-referenced offline to the average of all scalp electrodes. The continuous EEG data were digitally filtered (bandpass 0.16–30 Hz) and segmented into 900 ms epochs with a 100 ms pre-stimulus baseline. Segmentation was tied to triggers sent to the data collection computer from the stimulus presentation computer using E-Prime software. The triggers time-locked each ERP segment to the onset of the checkerboard probe. Eye movements and blink artifacts were corrected in individual segments using the method of Gratton, Coles, and Donchin (1983). Segments were rejected from analysis if they contained activity exceeding ±100 μV in any EEG channel. P1 amplitude was quantified as the average of 11 data points centered on the most positive peak within a time-window of 60–150 ms, recorded at occipital-temporal electrodes P7 and P8, over the left and right hemispheres, respectively.
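The amplitude rejection criterion and the P1 measure described above can be illustrated with a short sketch. It is not the analysis software used in the study. It assumes that the measure is taken on a single-channel averaged waveform stored as a list of microvolt values beginning 100 ms before probe onset, that baseline correction subtracts the mean pre-stimulus voltage, and that window boundaries are rounded to the nearest sample; the function names are hypothetical.

```python
def p1_amplitude(epoch_uv, srate_hz=512.0, baseline_ms=100.0,
                 window_ms=(60.0, 150.0), n_points=11):
    """Mean of n_points samples centered on the most positive peak in window_ms.

    epoch_uv holds one channel's averaged ERP in microvolts, starting
    baseline_ms before probe onset.
    """
    n_base = int(round(baseline_ms * srate_hz / 1000.0))
    # Baseline-correct by subtracting the mean pre-stimulus voltage.
    base_mean = sum(epoch_uv[:n_base]) / n_base
    corrected = [v - base_mean for v in epoch_uv]
    # Convert the post-stimulus search window to sample indices.
    start = n_base + int(round(window_ms[0] * srate_hz / 1000.0))
    stop = n_base + int(round(window_ms[1] * srate_hz / 1000.0))
    peak = max(range(start, stop + 1), key=lambda i: corrected[i])
    half = n_points // 2
    segment = corrected[peak - half: peak + half + 1]
    return sum(segment) / len(segment)

def keep_segment(channels_uv, limit_uv=100.0):
    """Return False if any sample in any channel exceeds +/- limit_uv."""
    return all(abs(v) <= limit_uv for ch in channels_uv for v in ch)
```

At 512 Hz, 11 consecutive samples span roughly 20 ms, so the measure is a short mean amplitude around the peak rather than a single-sample peak value, which makes it less sensitive to high-frequency noise.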

Results

The study employed a 2 (age group: young, old) by 4 (emotion: neutral, happy, angry, sad) mixed-model design, with age group as a between-subjects factor and emotion as a within-subjects factor. Mixed-model analyses of variance (ANOVA) were conducted on probe reaction time (RT), response accuracy, and probe P1 amplitude, as well as on emotion recognition accuracy.

Emotion Recognition Accuracy

A 2 (age group) by 4 (emotion) mixed-model ANOVA conducted on emotion recognition accuracy revealed that main effects for age group, F(1,29) = 16.54 (p < .001, ηp² = .36), and emotion, F(3,87) = 17.29 (p < .001, ηp² = .37), were qualified by an emotion by age group interaction, F(3,87) = 8.50 (p < .001, ηp² = .23). The participants’ mean recognition accuracy is reported by emotion and age group in Table 1. Young adults correctly identified more sad faces than did older adults, t(29) = 4.77 (p < .001). However, both young and older adults correctly identified more neutral and happy than angry expressions, t(30) = 4.54 and t(30) = 4.67, respectively (ps < .001). Also, young adults correctly identified more sad faces than angry faces, t(15) = 2.14 (p = .05), and older adults identified more happy and neutral faces than sad faces, t(14) = 5.28 and t(14) = 5.59, respectively (ps < .001). Given the above effects, the remaining analyses reported were run a second time using emotion recognition accuracy as a covariate. Emotion recognition accuracy did not emerge as a significant covariate nor did it interact with age group, emotion, or electrode in the analyses reported herein.
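For readers who wish to check the effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom as ηp² = (F × df_effect) / (F × df_effect + df_error). The helper below is an illustrative sketch applied to the three F statistics reported above; the function name is not from the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F statistics reported for emotion recognition accuracy.
reported = {
    "age group": (16.54, 1, 29),
    "emotion": (17.29, 3, 87),
    "emotion x age group": (8.50, 3, 87),
}
for effect, (f, df1, df2) in reported.items():
    print(effect, round(partial_eta_squared(f, df1, df2), 2))
```

The recomputed values (.36, .37, and .23) agree with those reported in the text.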

Table 1.

Mean Emotion Recognition Accuracy

| Expression Type | Young Adults (n = 16) | Older Adults (n = 15) |
|---|---|---|
| Neutral | 97.3% (1.0%) | 95.9% (1.0%) |
| Angry | 88.9% (2.5%) | 86.0% (2.6%) |
| Happy | 97.3% (1.2%) | 97.5% (1.2%) |
| Sad | 94.9% (2.6%) | 77.1% (2.7%) |

Note: Standard errors are reported in parentheses after each mean.

Go/No-go Reaction Times and Accuracy

Young adults (M = 256 ms, SE = 12.3 ms) were faster than older adults (M = 330 ms, SE = 11.3 ms) at detecting probes, F(1,33) = 19.68 (p < .001, ηp² = .37).¹ However, young (M = 98.5%, SE = 0.8%) and older (M = 96.6%, SE = 0.7%) adults were equally accurate in their responses, and emotion had no influence on probe RT or accuracy.

Probe P1 Amplitude

Each participant’s average probe P1 amplitudes were based on approximately 145 EEG segments (SE = 14) per condition. A 2 (age group) by 4 (emotion) by 2 (electrode: P7 and P8) mixed-model ANOVA, in which electrode was included as a within-subjects factor, was conducted on probe P1 amplitude. This ANOVA yielded a significant age group by emotion two-way interaction, F(3,87) = 3.41 (p < .05, ηp² = .11), and a trend towards an age group by emotion by electrode three-way interaction, F(3,87) = 2.26 (p < .09, ηp² = .07). Separate 2 (age group) by 4 (emotion) mixed-model ANOVAs were conducted on P1 amplitude measured at each electrode in response to the onset of the probe. No significant main effects or interactions emerged at the P8 electrode, but a significant age group by emotion interaction emerged at the P7 electrode, F(3,87) = 4.68 (p < .01, ηp² = .14).

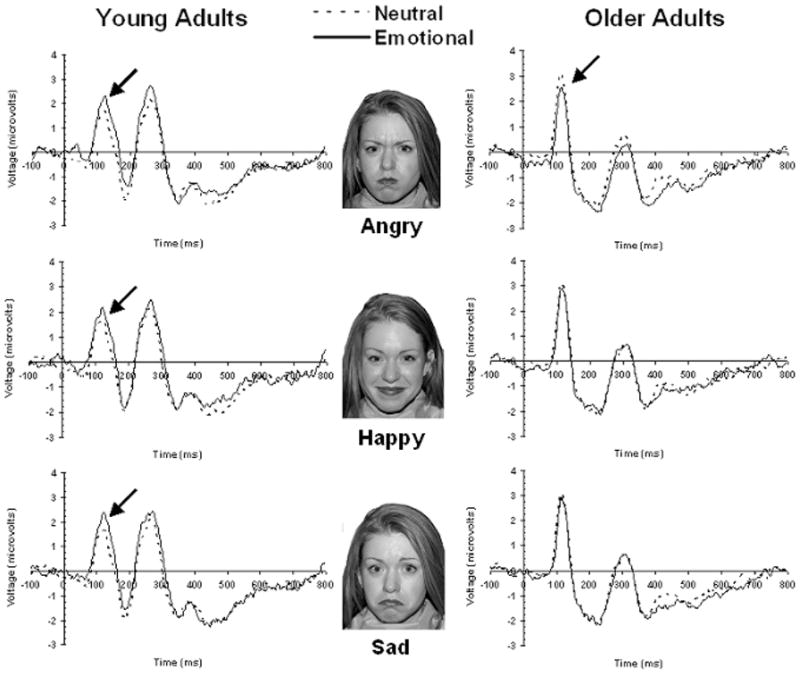

The age group by emotion interaction at the P7 electrode was further investigated using two different approaches. First, we examined the influence that emotion had on P1 amplitude separately for each age group to determine whether the within-subjects influence of emotion on P1 amplitude differed for young and older adults, as expected. Next, to be consistent with previous research examining age differences in the impact of emotion on visual processing, separate ANOVAs were conducted by emotion to compare young and older adults’ P1 amplitudes when probes appeared over an emotional face relative to when they appeared over a neutral face. Grand average ERPs for the P7 electrode are displayed in Figure 2 by age group and neutral-to-emotional comparison.

Figure 2.

Grand-average waveforms for visually-evoked potentials (VEP) recorded from electrode site P7 for young and older adults. Separate plots compare the VEP evoked by probes over emotional faces (solid lines) with the VEP evoked by probes over neutral faces (dashed lines). Arrows indicate significant differences in P1 amplitude between “emotional” and “neutral” conditions.

Examining the impact of emotion on P1 amplitude for each age group

Separate analyses of variance were conducted on young and older adults’ probe P1 amplitudes at the P7 electrode using emotion (4 levels) as a within-subjects factor. These analyses revealed a main effect of emotion for young adults, F(3,45) = 2.93 (p < .05, ηp² = .16), but not for older adults, F(3,42) = 2.09 (p = .11, ηp² = .13). Because we had made specific predictions a priori as to where emotional differences were expected to be observed for each age group, planned comparisons were conducted to compare the probe P1 amplitude of each emotional condition to that of the neutral condition at the P7 electrode separately for young and older adults. For young adults, P1 amplitude was significantly larger when probes appeared over angry faces (M = 3.44, SE = 0.84) and happy faces (M = 3.12, SE = 0.86) than when they appeared over neutral faces (M = 2.55, SE = 0.81), t(15) = 2.15 (p < .05) and t(15) = 2.94 (p < .05), respectively. Young adults also displayed marginally larger P1 amplitudes when probes appeared over sad faces (M = 2.98, SE = 0.74) than when they appeared over neutral faces, t(15) = 1.70 (p = .10). For older adults, P1 amplitudes were smaller when probes appeared over angry faces (M = 2.98, SE = 0.55) than when they appeared over neutral faces (M = 3.48, SE = 0.55), t(14) = 2.25 (p < .05), but no differences emerged when comparing P1 amplitude for probes appearing over happy faces (M = 3.30, SE = 0.63) or sad faces (M = 3.24, SE = 0.53) with probes appearing over neutral faces.

Examining age differences in emotion-to-neutral comparisons of P1 amplitude

Three separate 2 (emotion) by 2 (age group) mixed-model ANOVAs were conducted to directly compare how young and older adults’ P1 amplitudes differed when the probe appeared over emotional faces relative to when it appeared over neutral faces. Although all three ANOVAs failed to reveal main effects of emotion or age group, all three did reveal significant emotion by age group interactions: neutral versus angry, F(1,29) = 8.38 (p < .01, ηp² = .22); neutral versus happy, F(1,29) = 8.04 (p < .01, ηp² = .22); and neutral versus sad, F(1,29) = 4.91 (p < .05, ηp² = .15). For the neutral versus angry comparison, the emotion by age group interaction demonstrates that P1 amplitude was substantially greater when the probe appeared over angry faces than when it appeared over neutral faces for young adults, whereas the opposite was true for older adults. When comparing responses to probes over neutral faces to probes over happy or sad faces, older adults’ P1 amplitudes did not differ by condition, whereas young adults displayed greater P1 amplitudes when probes appeared over happy and sad faces.
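In a 2 (emotion) by 2 (age group) mixed design, the interaction test is equivalent to comparing the two groups on emotional-minus-neutral difference scores: the interaction F equals the squared pooled-variance t statistic for that comparison. The sketch below illustrates the equivalence. The difference scores are hypothetical placeholders, not data from this study, and the function name is illustrative.

```python
from statistics import mean, variance

def interaction_f(diff_young, diff_old):
    """F(1, n1 + n2 - 2) for a 2 x 2 mixed-design interaction.

    Inputs are per-participant difference scores (emotional minus neutral P1).
    The interaction F equals the squared pooled-variance t statistic comparing
    the two groups' mean difference scores.
    """
    n1, n2 = len(diff_young), len(diff_old)
    pooled = ((n1 - 1) * variance(diff_young)
              + (n2 - 1) * variance(diff_old)) / (n1 + n2 - 2)
    t = (mean(diff_young) - mean(diff_old)) / (pooled * (1 / n1 + 1 / n2)) ** 0.5
    return t * t, (1, n1 + n2 - 2)

# Hypothetical difference scores in microvolts, NOT data from the study.
young = [0.9, 1.2, 0.4, 1.0, 0.7]
old = [-0.6, -0.2, -0.9, -0.4, -0.5]
f_value, dfs = interaction_f(young, old)
print(round(f_value, 2), dfs)
```

With 16 young and 15 older adults, the error degrees of freedom would be 16 + 15 − 2 = 29, matching the F(1,29) statistics reported above.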

Summary of analyses

For young adults, probe P1 amplitude was significantly enhanced when probes appeared over happy and angry emotional expressions and only marginally enhanced when probes appeared over sad expressions. For older adults, happy facial expressions did not lead to enhanced probe P1 amplitude; however, probes appearing over angry emotional expressions elicited a reduced P1 amplitude (or less visual activity). Direct age group by emotion comparisons provided further evidence that young and older adults’ probe P1 amplitudes were differentially impacted by the emotional content of the facial expressions over which the probes appeared.

Discussion

These findings indicate that emotion in facial expressions differentially modulates the visual processing of young and older adults. Consistent with Phelps, Ling, & Carrasco (2006), the emotional content of the facial expressions enhanced the reactivity of the visual system of young adults when a probe was presented over these faces. Specifically, P1 amplitudes were larger for probes that appeared over emotional faces than for those that appeared over neutral faces. On the other hand, given that individuals are strongly motivated to regulate their exposure to negative stimuli as they progress in age (Carstensen, 2006), we expected that negative emotional expressions (i.e., angry and sad faces) would lead to a reduction in the reactivity of the visual system of older adults to the probes. Partially consistent with this prediction, older adults displayed a weaker P1 amplitude for probes appearing over angry faces than for those appearing over neutral faces. This suggests that the visual system of older adults was less reactive following the presentation of angry facial expressions. However, older adults’ visual systems were equally reactive to the probes appearing over happy and sad facial expressions relative to those appearing over neutral expressions.

One interesting finding that emerged from this study is that older adults did not show an emotional enhancement effect or even a negativity effect in their visual processing of emotional stimuli. A potential explanation for this follows from recent fMRI research which suggests that the neural circuitry underlying emotional stimulus processing becomes more frontally mediated with advancing age (St. Jacques, Dolcos, & Cabeza, 2010). As a result, older adults display lower rates of co-activation between the amygdala and posterior sensory regions of the cortex than do young adults. Although the amygdala enhances the visual processing of emotional stimuli in young adults (Vuilleumier, Richardson, Armony, Driver, & Dolan, 2004), in older adults, the up-regulation of sensory cortices by the amygdala may be suppressed by frontal processes (St. Jacques et al., 2010), potentially for emotion regulatory purposes (Ochsner & Gross, 2005). With respect to the current study, such frontal regulation of amygdalar input to perceptual regions of the brain could provide the motivational link that accounts for why young adults display an emotional P1 enhancement but older adults do not. Of course, the visual system’s reaction to the probe, as measured by the posterior-focused P1 ERP component, does not directly assess the amount of effort that older adults devote to emotion regulation. However, given that the P1 component is modulated by attention (Mangun & Hillyard, 1988, 1991), a reduction in the amplitude of this component may suggest that less attention is being directed toward a stimulus. Older adults’ P1 amplitudes reflected this flattened and reduced attentional response, especially to angry expressions.

In the current study, emotional facial expressions generally enhanced the reactivity of the young adults’ visual system to subsequently presented probes. Similarly, in previous ERP research, emotional oddball stimuli (i.e., emotional scenes) elicited greater cortical reactivity than did neutral stimuli for young adults (Kisley et al., 2007). The cortical reactivity of older adults, on the other hand, was not enhanced by emotional facial expressions in the current study (i.e., happy and sad were both equal to neutral). In fact, the visual system of older adults displayed less reactivity to probes that appeared over angry expressions than it did to those appearing over neutral faces. This is partially consistent with previous ERP research demonstrating an age-related dampening of the negativity bias that is normally seen in young adults (Kisley et al., 2007). It is also consistent with the aforementioned fMRI research which suggests that the older adults may use emotion regulation to suppress the sensory processing of negative emotional stimuli (St. Jacques et al., 2010). However, the findings from the current study do not directly address this point, as the study was designed to examine visual system activity as gauged by the P1 component of the visually-evoked potential elicited by the probes.

Unlike in previous research, there was no evidence to suggest that positive facial expressions (i.e., happy faces) enhanced visual processing in older adults. Sad facial expressions were equally ineffectual. One possible explanation for this is that the sad and happy expressions used in the current study were not intense enough to emotionally arouse older adults, but that the angry expressions were of adequate intensity to merit regulation by older participants. This interpretation is speculative because the current study manipulated the emotional valence of the stimuli and not their arousal level. However, this interpretation is consistent with previous research that demonstrates that older adults require greater contrast to recognize faces (Owsley, Sekuler, & Boldt, 1981) and that older adults may experience decrements in neural adaptation in brain regions associated with visual processing of faces (Gao, Xu, Zhang, Zhao, et al., 2009). This interpretation is also consistent with research demonstrating that older adults display perceptual emotion recognition deficits (Isaacowitz, Lockenhoff, Wright, Sechrist, et al., 2007; Ortega & Phillips, 2008).

Another more theoretically intriguing possibility is that older individuals implement emotion regulation on a selective, emotion-by-emotion (a.k.a. discrete emotion) basis, especially demonstrated in the case of anger. This interpretation is consistent with eye-tracking research demonstrating that older adults may strategically suppress the processing of angry expressions by selectively withdrawing their gaze from those facial cues that communicate anger (Isaacowitz et al., 2006). In fact, research suggests that older adults are particularly effective at down-regulating anger and are motivated to prevent the experience of anger from ever occurring (Blanchard-Fields & Coats, 2008; Gross, Carstensen, Pasupathi, Tsai, et al., 1997). Furthermore, findings show that older adults are less likely to report the experience of anger, and, when they do report anger, it is rated lower in intensity (Birditt & Fingerman, 2005; Chipperfield, Perry, & Weiner, 2003; Gross et al., 1997; Schieman, 1999). The current study supports this idea in that this perceptual dampening effect may take place very early in the process of perceiving anger-related stimuli. Finally, it may be the case that, although older adults may choose to withdraw from highly arousing negative expressions like anger, they may choose not to withdraw from sorrowful emotional expressions because sadness shared between two individuals strengthens meaningful affiliative bonds (Blanchard-Fields & Coats, 2008). Consistent with this explanation, older adults withdrew attention from probes appearing over angry faces but not from probes appearing over sad faces. Again, however, it may be the case that older adults failed to withdraw their attention from sad faces like they did for angry faces because the emotional content of the expressions was not intense or clear enough to merit such a diversion. 
This interpretation is consistent with the finding that older adults in the current study had more difficulty accurately recognizing sad facial expressions.²

Besides the limitations already described, three additional points merit discussion. First, although older adults’ probe P1 amplitude was not significantly larger than that of young adults when the probe was presented over the neutral face, there was a trend in this direction. This pattern has been reported elsewhere in the literature and is thought to represent either an age-related decrement in neural specificity during visual processing or the recruitment of additional perceptual resources (Gao et al., 2009). Because this phenomenon can make it difficult to directly compare young and older adults’ P1 amplitude as a measure of attentional modulation, we dealt with this limitation by comparing pairs of within-subjects conditions, in much the same way that earlier research has relied on difference scores from baseline when comparing young and older adult performance (e.g., Isaacowitz et al., 2006; Mather & Carstensen, 2003). Second, although previous research has demonstrated that perception is boosted in both the left and right visual fields by emotional facial expressions (Phelps et al., 2006), emotion modulated the probe P1 component only in the left hemisphere in the current study. One possible explanation is that, given our small sample sizes, we did not have enough power to detect the impact of emotion in the right hemisphere, and that a larger sample would resolve this discrepancy. Another possibility is that the right hemisphere was occupied with face processing when the checkerboard appeared, so that emotion could modulate attentional resources only in the left hemisphere (Kanwisher, McDermott, & Chun, 1997; McCarthy, Puce, Gore, & Allison, 1997).³ Lastly, although the emotional expressions manipulated in the current study modulated a neurophysiological marker of visual processing, there was no corresponding modulation of the participants’ behavioral responses (i.e., reaction time and accuracy). Because young and older adults had near-ceiling accuracy and took very little time to respond to the presence of the probe in the go/no-go task, it was difficult to observe the impact that emotion had on the participants’ psychophysical responses.
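
The within-subjects comparison described above can be illustrated with a short sketch. It is only an illustration of the general difference-score idea, not the analysis code used in this study: the function name, the choice of the neutral condition as the baseline, and the amplitude values (in microvolts) are all hypothetical.

```python
def p1_difference_scores(p1_by_condition, baseline="neutral"):
    """Express each condition's P1 amplitude relative to a baseline condition.

    p1_by_condition maps a condition label to one participant's mean probe P1
    amplitude. A positive score means a larger P1 than baseline; a negative
    score means a smaller one.
    """
    base = p1_by_condition[baseline]
    return {
        condition: amplitude - base
        for condition, amplitude in p1_by_condition.items()
        if condition != baseline
    }


if __name__ == "__main__":
    # Hypothetical amplitudes for a single participant (not data from the study).
    participant = {"neutral": 2.0, "angry": 1.5, "sad": 2.0, "happy": 2.5}
    print(p1_difference_scores(participant))
```

Because each score is computed within a participant, an overall age difference in P1 amplitude at baseline drops out of the comparison, which is the motivation given in the paragraph above.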

In sum, emotion in facial expressions differentially modulated young and older adults’ visual systems’ reactivity to subsequently presented probes. For young adults, neurophysiological reactivity to the probes was enhanced by each of the emotional expressions. However, emotion did not enhance older adults’ neurophysiological reactivity to the probes. Rather, for angry expressions, older adults’ responses were actually weakened. When taken together, these findings suggest that older adults may selectively withdraw visual processing resources away from angry expressions, perhaps as a way to regulate the intake of emotional information from their surroundings.

Acknowledgments

This research was supported by funds awarded to Andrew Mienaltowski as a part of a fellowship funded through the National Institute on Aging (NIA) under Training Grant T32-AG00175. It was also supported by NIA Research Grant R01-AG015019 awarded to Fredda Blanchard-Fields. We would like to thank Anne Brauer and Katie McNulty for their assistance on this project.

Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

Footnotes

¹ Trials were trimmed from the probe RT data if the response fell more than 2.5 SD above or below that participant’s average RT (2.3% of trials).

² Sad expressions led to the weakest P1 amplitude enhancement for young adults. Two factors may be operating to attenuate the impact of sad expressions in this study. First, sad faces may not be arousing enough to evoke robust attentional effects even in young adults. Second, the sample in the current study is not large enough to provide the statistical power needed to draw definitive conclusions about the perceptual boost that sad expressions provide to young adults when presented with the probe.

³ The authors would like to thank a reviewer for providing this suggestion.
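
The RT trimming rule described in the first footnote can be sketched as follows. This is a minimal illustration under stated assumptions rather than the procedure as actually implemented: it assumes the cutoff is computed from each participant's own mean and sample standard deviation, and the function names and example values are hypothetical.

```python
from statistics import mean, stdev


def trim_rts(rts, n_sd=2.5):
    """Keep the RTs that lie within n_sd sample SDs of this participant's mean."""
    if len(rts) < 2:
        # A standard deviation is undefined for fewer than two trials.
        return list(rts)
    centre = mean(rts)
    spread = stdev(rts)
    lower = centre - n_sd * spread
    upper = centre + n_sd * spread
    return [rt for rt in rts if lower <= rt <= upper]


def trim_by_participant(rts_by_participant, n_sd=2.5):
    """Apply the trimming rule separately to each participant's trials."""
    return {
        participant: trim_rts(rts, n_sd)
        for participant, rts in rts_by_participant.items()
    }


if __name__ == "__main__":
    # Hypothetical RTs in milliseconds (not data from the study).
    data = {"p01": [400] * 9 + [2000], "p02": [500, 510, 490, 505]}
    trimmed = trim_by_participant(data)
    for participant, kept in trimmed.items():
        print(participant, len(data[participant]) - len(kept), "trial(s) removed")
```

Computing the cutoff per participant, rather than across the whole sample, keeps a generally slow responder from having most of their trials discarded.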

Publisher's Disclaimer: The following manuscript is the final accepted manuscript. It has not been subjected to the final copyediting, fact-checking, and proofreading required for formal publication. It is not the definitive, publisher-authenticated version. The American Psychological Association and its Council of Editors disclaim any responsibility or liabilities for errors or omissions of this manuscript version, any version derived from this manuscript by NIH, or other third parties. The published version is available at www.apa.org/pubs/journals/PAG

Contributor Information

Andrew Mienaltowski, Department of Psychology, Western Kentucky University, Bowling Green, Kentucky.

Paul M. Corballis, School of Psychology, Georgia Institute of Technology, Atlanta, Georgia.

Fredda Blanchard-Fields, School of Psychology, Georgia Institute of Technology, Atlanta, Georgia.

Nathan A. Parks, Beckman Institute, University of Illinois at Urbana-Champaign, Urbana, Illinois.

Matthew R. Hilimire, School of Psychology, Georgia Institute of Technology, Atlanta, Georgia.

References

- Birditt KS, Fingerman KL. Do we get better at picking our battles? Age group differences in descriptions of behavioral reactions to interpersonal tensions. Journals of Gerontology: Psychological Sciences. 2005;60B:P121–P128. doi: 10.1093/geronb/60.3.p121.

- Blanchard-Fields F. Everyday problem solving and emotion: An adult developmental perspective. Current Directions in Psychological Science. 2007;16:26–31.

- Blanchard-Fields F, Mienaltowski A, Seay RB. Age-differences in everyday problem-solving effectiveness: Older adults select more effective strategies for interpersonal problems. Journals of Gerontology: Psychological Sciences. 2007;62:P61–P64. doi: 10.1093/geronb/62.1.p61.

- Blanchard-Fields F, Coats AH. The experience of anger and sadness in everyday problems impacts age differences in emotion regulation. Developmental Psychology. 2008;44:1547–1556. doi: 10.1037/a0013915.

- Bledowski C, Prvulovic D, Hoechstetter K, Scherg M, Wibral M, Goebel R, Linden DEJ. Localizing P300 generators in visual target and distractor processing: A combined event-related potential and functional magnetic resonance imaging study. Journal of Neuroscience. 2004;24:9353–9360. doi: 10.1523/JNEUROSCI.1897-04.2004.

- Carstensen LL. The influence of a sense of time on human development. Science. 2006;312:1913–1915. doi: 10.1126/science.1127488.

- Carstensen LL, Mikels JA, Mather M. Aging and the intersection of cognition, motivation, and emotion. In: Birren JE, Schaie KW, editors. Handbook of the psychology of aging. 6th ed. Amsterdam, Netherlands: Elsevier; 2006. pp. 343–362.

- Carstensen LL, Pasupathi M, Mayr U, Nesselroade JR. Emotional experience in everyday life across the adult life span. Journal of Personality and Social Psychology. 2000;79:644–655.

- Chipperfield JG, Perry RP, Weiner B. Discrete emotions in later life. Journals of Gerontology: Series B: Psychological Sciences & Social Sciences. 2003;58B:P23–P34. doi: 10.1093/geronb/58.1.p23.

- Compton R. The interface between emotion and attention: A review of evidence from psychology and neuroscience. Behavioral and Cognitive Neuroscience Reviews. 2003;2:115–129. doi: 10.1177/1534582303255278.

- Curran T, Hills A, Patterson MB, Strauss ME. Effects of aging on visuospatial attention: An ERP study. Neuropsychologia. 2001;39:288–301. doi: 10.1016/s0028-3932(00)00112-3.

- Gao L, Xu J, Zhang B, Zhao L, Harel A, Bentin S. Aging effects on early-stage face perception: An ERP study. Psychophysiology. 2009;46:970–983. doi: 10.1111/j.1469-8986.2009.00853.x.

- Gratton G, Coles MGH, Donchin E. A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology. 1983;55:468–484. doi: 10.1016/0013-4694(83)90135-9.

- Gross JJ, Carstensen LL, Pasupathi M, Tsai J, Skorpen CG, Hsu AYC. Emotion and aging: Experience, expression, and control. Psychology and Aging. 1997;12:590–599. doi: 10.1037//0882-7974.12.4.590.

- Hillyard SA, Vogel EK, Luck SJ. Sensory gain control (amplification) as a mechanism of selective attention: Electrophysiological and neuroimaging evidence. Philosophical Transactions of the Royal Society: Biological Sciences. 1998;353:1257–1270. doi: 10.1098/rstb.1998.0281.

- Isaacowitz DM, Loeckenhoff C, Wright R, Sechrest L, Riedel R, Lane RA, Costa PT. Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging. 2007;22:147–159. doi: 10.1037/0882-7974.22.1.147.

- Isaacowitz DM, Wadlinger HA, Goren D, Wilson HR. Selective preference in visual fixation away from negative images in old age: An eye tracking study. Psychology and Aging. 2006;21:40–48. doi: 10.1037/0882-7974.21.1.40.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kisley MA, Wood S, Burrows CL. Looking at the sunny side of life: Age-related change in an event-related potential measure of the negativity bias. Psychological Science. 2007;18:838–843. doi: 10.1111/j.1467-9280.2007.01988.x.

- Lawton MP. Emotion in later life. Current Directions in Psychological Science. 2001;10:120–123.

- Luck SJ. An Introduction to the Event-Related Potential Technique. Cambridge: MIT Press; 2005. pp. 42–45.

- Mangun GR, Hillyard SA. Spatial gradients of visual attention: Behavioral and electrophysiological evidence. Electroencephalography and Clinical Neurophysiology. 1988;70:417–428. doi: 10.1016/0013-4694(88)90019-3.

- Mangun GR, Hillyard SA. Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. Journal of Experimental Psychology: Human Perception and Performance. 1991;17:1057–1074. doi: 10.1037//0096-1523.17.4.1057.

- Mather M, Canli T, English T, Whitfield S, Wais P, Ochsner K, Gabrieli JDE, Carstensen LL. Amygdala responses to emotionally valenced stimuli in older and younger adults. Psychological Science. 2004;15:259–263. doi: 10.1111/j.0956-7976.2004.00662.x.

- Mather M, Carstensen LL. Aging and attentional biases for emotional faces. Psychological Science. 2003;14:409–415. doi: 10.1111/1467-9280.01455.

- Mather M, Carstensen LL. Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences. 2005;9:496–502. doi: 10.1016/j.tics.2005.08.005.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 1997;9:605–610. doi: 10.1162/jocn.1997.9.5.605.

- Murphy NA, Isaacowitz DM. Preferences for emotional information in older and younger adults: A meta-analysis of memory and attention tasks. Psychology and Aging. 2008;23:263–286. doi: 10.1037/0882-7974.23.2.263.

- Nuwer MR, Comi G, Emerson R, Fuglsang-Frederiksen A, Guerit JM, Hinrichs H, Ikeda A, Luccas FJ, Rappelsburger P. IFCN standards for digital recording of clinical EEG. Electroencephalography and Clinical Neurophysiology. 1998;106:259–261. doi: 10.1016/s0013-4694(97)00106-5.

- Ochsner KN, Gross JJ. The cognitive control of emotion. Trends in Cognitive Sciences. 2005;9:242–249. doi: 10.1016/j.tics.2005.03.010.

- Ortega V, Phillips LH. Effects of age and emotional intensity on the recognition of facial emotion. Experimental Aging Research. 2008;34:63–79. doi: 10.1080/03610730701762047.

- Owsley C, Sekuler R, Boldt C. Aging and low-contrast vision: Face perception. Investigative Ophthalmology and Visual Science. 1981;21:362–365.

- Phelps EA, Ling S, Carrasco M. Emotion facilitates perception and potentiates the perceptual benefit of attention. Psychological Science. 2006;17:292–299. doi: 10.1111/j.1467-9280.2006.01701.x.

- Schieman S. Age and anger. Journal of Health and Social Behavior. 1999;40:273–289.

- Smith NK, Cacioppo JT, Larsen JT, Chartrand TL. May I have your attention, please: Electrocortical responses to positive and negative stimuli. Neuropsychologia. 2003;41:171–183. doi: 10.1016/s0028-3932(02)00147-1.

- St Jacques P, Dolcos F, Cabeza R. Effects of aging on functional connectivity of the amygdala during negative evaluation: A network analysis of fMRI data. Neurobiology of Aging. 2010;31:315–327. doi: 10.1016/j.neurobiolaging.2008.03.012.

- Talsma D, Woldorff MG. Methods for the estimation and removal of artifacts and overlap in ERP waveforms. In: Handy TC, editor. Event-Related Potentials: A Methods Handbook. Cambridge: MIT Press; 2005. pp. 115–148.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–1278. doi: 10.1038/nn1341.