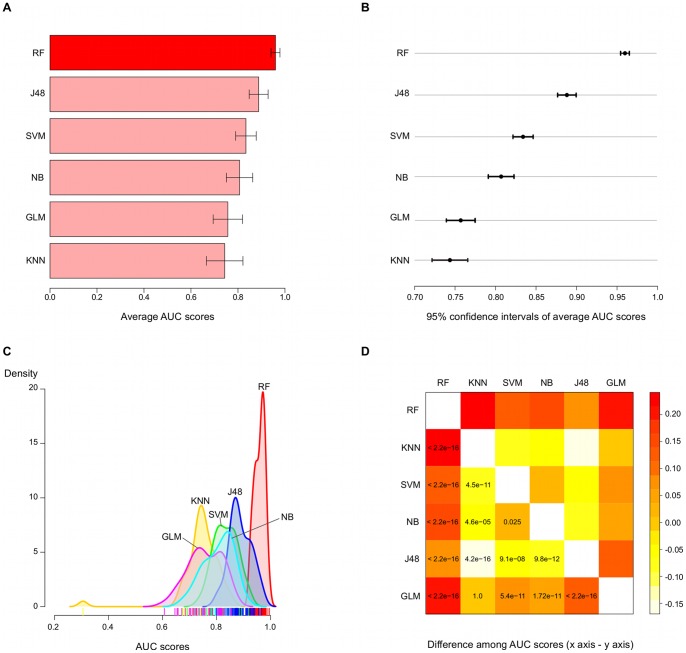

Figure 9. Comparison of our random forest model against several popular classifiers based on repeated cross-validation.

We compare the RF model built on the top 12 features with five other popular classifiers trained on the same feature set: J48, K-nearest neighbours (KNN), SVM, Naïve Bayes (NB) and a generalised linear model (GLM). A) The average AUC score, computed as the mean over five repetitions of 10-fold cross-validation, is highest for the RF model, which also has the smallest standard deviation among all classifiers. B) A comparison of average AUC scores in terms of 95% confidence intervals shows that the superiority of the RF model is statistically significant. J48 also differs significantly in performance from the remaining methods, but its score is still lower than RF's. C) Moreover, the performance of the classifiers over the resamples is summarised by a kernel density estimator, which indicates a tall and narrow distribution for our RF classifier. This gives a picture of the robustness of the RF model: our classifier is not only better (its distribution is shifted towards the upper limit of the x axis, i.e., the highest scores), but also more consistent (its distribution is narrower than the others). D) Finally, a t-test over pairwise differences in average AUC scores across all classifiers produces very small p-values ( ) for comparisons against the RF model, providing additional support for the superior performance of the proposed method.
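The summary statistics behind panels A, B and D can be sketched as follows. This is a minimal illustration using only the Python standard library, not the code used in the study: the function names `summarise` and `paired_t_statistic` are hypothetical, the confidence interval uses a normal approximation (an assumption; the interval type used for the figure is not stated), and the AUC values are made-up placeholders. In the setting described above, each list would hold 50 per-resample AUC scores (five repetitions of 10 folds).

```python
import math
from statistics import NormalDist, mean, stdev


def summarise(scores, level=0.95):
    """Mean, standard deviation and a normal-approximation CI for per-resample AUCs."""
    n = len(scores)
    m = mean(scores)
    sd = stdev(scores)  # sample standard deviation (n - 1 denominator)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z * sd / math.sqrt(n)
    return {"mean": m, "sd": sd, "ci": (m - half_width, m + half_width)}


def paired_t_statistic(scores_a, scores_b):
    """t statistic for the mean pairwise difference between two classifiers.

    Both lists must come from the same resamples, in the same order.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("score lists must be paired resample by resample")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


# Made-up placeholder AUCs for illustration only; not results from the study.
rf_auc = [0.91, 0.93, 0.92, 0.94, 0.90]
knn_auc = [0.85, 0.88, 0.84, 0.87, 0.86]

print(summarise(rf_auc))
print(paired_t_statistic(rf_auc, knn_auc))
```

The sketch stops at the t statistic. Turning it into a p-value requires the Student t distribution with n - 1 degrees of freedom, which is available in statistical packages but not in the standard library.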
