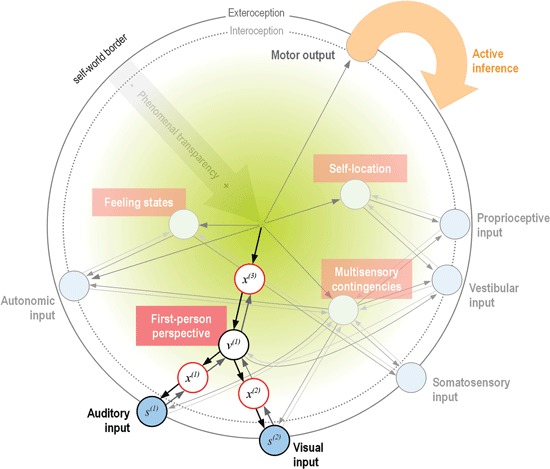

Figure 1.

Schematic proposal for a mapping of the phenomenal self-model onto a hierarchical generative model (format adapted from Bastos et al., 2012). Shown here is only the system’s model of itself, where representational nodes at each level generate descending predictions to increasingly specialized lower levels (symbolized by darker arrows). In this special case, the single modeled cause of sensations is the minimal phenomenal self (Metzinger, 2004a), which generates predictions about the state of one or many sensory modalities (blue circles). The inversion of this generative model (a predictive coding scheme, lighter arrows) infers hidden causes—and thus ultimately, the self as the single cause—of sensory input via minimization of prediction error (Friston, 2011). For simplicity, only one intermediate level of nodes within the hierarchy is displayed, consisting of the basic properties of minimal selfhood as reviewed (white circles). As a (simplified) illustration of the hierarchical generative processing, the case of the 1PP is highlighted. Here, descending predictions of the unified self-model (black arrows) generate sensory data s(i) in the respective modalities (auditory and visual). This happens via a hierarchy of hidden states x(i) and hidden causes v(i) (the 1PP), which generate predictions about data in the level below. The green gradient symbolizes increasing transparency of the accompanying phenomenal states with ascending hierarchy, where the final cause (the self) is completely transparent. Note that at this (highest) level, there is no further representational node; this acknowledges the fact that the perception of a unified minimal self is the result of a temporally extended predictive process, not a static representation (Metzinger, 2004a; Hohwy, 2007). The experience of “mineness” of the self (and of perception and action in general, Hohwy, 2007) is a result of the model’s successful predictions and thus implicitly symbolized by the arrows. 
Input into this system-model comes from intero- and exteroception (blue circles), while active inference is a means of changing predicted input in all modalities through interaction with the environment. As the model evidence is evidence for the agent’s existence (Friston, 2011; Friston, 2013b), the model will necessarily be a veridical model of the agent: if there were too much unexplained prediction error, the model would be abandoned in favor of a model with higher evidence, and the self in its present form would cease to exist (Hohwy, 2010; Friston, 2011; Friston, 2012b).
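The inversion described in this caption can be illustrated with a deliberately reduced toy example. The sketch below is not the scheme of Bastos et al. (2012) or Friston (2011). It assumes a single scalar hidden cause `v` (standing in for the top-level cause), a linear mapping `w` from that cause to two sensory channels, and Gaussian noise with fixed variances. All names (`infer_cause`, `exact_posterior_mean`, `w`, `var_s`, `var_p`) are hypothetical and chosen only for illustration. Under these assumptions, descending predictions are `w[i] * v`, ascending signals are precision-weighted prediction errors, and the estimate of the cause is updated by gradient descent on the summed squared errors.

```python
def infer_cause(s, w, v_prior=0.0, var_s=1.0, var_p=1.0, lr=0.05, steps=500):
    """Estimate one hidden cause v from sensory data s by reducing prediction error.

    Assumptions (illustrative only): linear predictions w[i] * v,
    Gaussian sensory noise with variance var_s, Gaussian prior on v
    with mean v_prior and variance var_p.
    """
    v = v_prior  # start from the prior expectation
    for _ in range(steps):
        # Ascending signals: precision-weighted sensory prediction errors.
        eps_s = [(s_i - w_i * v) / var_s for s_i, w_i in zip(s, w)]
        # Prediction error of the cause itself relative to its prior.
        eps_p = (v - v_prior) / var_p
        # Gradient step that lowers the summed squared prediction error.
        v += lr * (sum(w_i * e for w_i, e in zip(w, eps_s)) - eps_p)
    return v


def exact_posterior_mean(s, w, v_prior=0.0, var_s=1.0, var_p=1.0):
    """Closed-form posterior mean for the same linear-Gaussian toy model."""
    num = sum(w_i * s_i for w_i, s_i in zip(w, s)) / var_s + v_prior / var_p
    den = sum(w_i ** 2 for w_i in w) / var_s + 1.0 / var_p
    return num / den


if __name__ == "__main__":
    s = [2.0, 1.0]   # hypothetical visual and auditory samples
    w = [1.0, 0.5]   # hypothetical weights from the cause to each modality
    print(infer_cause(s, w), exact_posterior_mean(s, w))
```

In this linear-Gaussian case the iterative estimate should approach the closed-form posterior mean, which provides a simple check that the error-minimizing updates recover the cause that best explains both modalities at once. A full hierarchical scheme would stack several such levels and include hidden states with dynamics, which this sketch omits.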