Abstract

Purpose:

To evaluate three measures related to electronic health record (EHR) implementation: clinical volume, time requirements, and nature of clinical documentation. Comparison is made to baseline paper documentation.

Methods:

An academic ophthalmology department implemented an EHR in 2006. The study population was defined as faculty providers who worked in the department for the 5 months before and after implementation. Clinical volumes, as well as the length of time for each patient encounter, were collected from the EHR reporting system. To directly compare time requirements, two faculty providers who used both paper and EHR systems completed time-motion logs to record the number of patients, clinic time, and nonclinic time to complete documentation. Faculty providers and databases were queried to identify patient records containing both paper and EHR notes, from which three cases were identified to illustrate representative documentation differences.

Results:

Twenty-three faculty providers completed 120,490 clinical encounters during a 3-year study period. Compared to baseline clinical volume from 3 months pre-implementation, the post-implementation volume was 88% in quarter 1, 93% in year 1, 97% in year 2, and 97% in year 3. Among all encounters, 75% were completed within 1.7 days after beginning documentation. The mean total time per patient was 6.8 minutes longer with EHR than paper (P<.01). EHR documentation involved greater reliance on textual interpretation of clinical findings, whereas paper notes used more graphical representations, and EHR notes were longer and included automatically generated text.

Conclusion:

This EHR implementation was associated with increased documentation time, little or no increase in clinical volume, and changes in the nature of ophthalmic documentation.

INTRODUCTION

The traditional paper-based approach to clinical documentation has become overwhelmed by information exchange demands among health care providers, financial and legal complexities of the modern health care environment, the increasing rate of biomedical knowledge, growing chronic care needs from an aging population, and medical errors associated with handwritten notes.1–5 Meanwhile, advances in computer and communication technology have dramatically transformed the world during the past several decades. Applications of these technologies to clinical medicine through the design and implementation of electronic health record (EHR) systems are an emerging strategy for addressing these problems.6–8 The Institute of Medicine has characterized EHRs as an essential technology for improving the safety, quality, and efficiency of health care.9

Despite these potential benefits, EHR adoption in the United States has been relatively limited. One study found a 17% rate of adoption of basic or complete EHRs by ambulatory physicians across the country in 2008,10 and a survey involving American Academy of Ophthalmology members found a 12% adoption rate by ophthalmologists in 2008.11 In contrast, EHR adoption rates by primary care physicians in many other industrialized countries are well over 90%.12 To address these challenges, the federal Health Information Technology for Economic and Clinical Health (HITECH) Act of 2009 is providing financial incentives to physicians and hospitals for implementation and “meaningful use” of certified EHR systems.13–16 The intent of this federal program is to increase the physician adoption rate to 85% over 5 years, and recent smaller surveys have suggested that EHR adoption is in fact continuing to rise steadily.17–19

There are many important barriers to EHR adoption by ophthalmologists and other physicians.20–23 Several studies have found that electronic systems may contribute to medical errors, particularly if implementation is not performed carefully.24,25 In addition, many EHRs currently used by ophthalmologists are institution-wide systems that were originally built for other specialties, such as internal medicine, and therefore were not designed for the unique workflow requirements of ophthalmology.26 This is particularly challenging because ophthalmology is a visually oriented field in which paper charting methods have traditionally relied on drawings and annotations using examination templates. These functions are not often available in current EHR systems.26 Finally, ophthalmology is a high-volume outpatient specialty with a complex workflow involving multiple personnel, such as technicians, orthoptists, photographers, and physicians. Patients typically require dilation of the eyes and often undergo numerous tests using ophthalmic imaging and measurement devices at each visit. Therefore, to be cost-effective, EHRs must support rapid examination of patients and integration of data from multiple devices.

For these reasons, concerns have been raised that EHRs may cause difficulty with regard to patient volume, speed, learning curve, and effectiveness of clinical documentation.11,20,27 However, no published research to our knowledge has formally examined the effect of EHR adoption by ophthalmologists on clinical efficiency and documentation. Better understanding of these issues will provide information about the impact of EHRs on clinical practice, guide national programs regarding EHR adoption, and identify areas where current systems can be improved. The purpose of this thesis is to systematically address these gaps in knowledge and to test the hypothesis that there will be differences between paper and EHR regarding three key outcome measures: patient volume, time requirements, and nature of clinical documentation. The setting of this study is an ophthalmology department within an academic medical center, which transitioned from a traditional paper-based system to an institution-wide EHR system in 2006. These outcome measures will be analyzed during a 3-year study period after EHR implementation. Findings will also be compared among different providers and compared to baseline pre-implementation measurements using a traditional paper documentation system.

METHODS

This study was reviewed by the Institutional Review Board at Oregon Health & Science University (OHSU) and was granted an exemption because it involved collection of existing data recorded in such a manner that patients could not be identified. The study was conducted in adherence to the Declaration of Helsinki and all federal and state laws.

DESCRIPTION OF STUDY INSTITUTION AND ELECTRONIC HEALTH RECORD SYSTEM

Casey Eye Institute (CEI) is the ophthalmology department at OHSU, a large academic medical center in Portland, Oregon. Over 50 faculty providers at CEI perform over 90,000 annual outpatient examinations. The department provides primary eye care and serves as a major referral center for patients from the Pacific Northwest and nationally. It is organized into clinical divisions based on ophthalmic subspecialties: retina, cornea, pediatric ophthalmology, ocular genetics, glaucoma, neuro-ophthalmology, oculoplastics, uveitis, low vision, and comprehensive ophthalmology.

Over several years, an institution-wide EHR (EpicCare; Epic Systems, Madison, Wisconsin) has been implemented throughout OHSU. This vendor develops software for midsized and large medical practices; is a market share leader among large hospitals; has implemented its EHRs at over 200 hospital systems, including approximately 60 academic medical centers in the United States; and has won numerous awards from well-known independent rating organizations.28,29 In February 2006, all faculty providers, fellows, and residents in the ophthalmology department began using this EHR. All practice management, clinical documentation, order entry, medication prescribing, and billing tasks are performed using components of the electronic system. Ophthalmic images within the department are managed by a different vendor-based system maintained independently from the university picture archiving and communication system (PACS), and images may be copied and pasted into EHR notes. Only several outside satellite clinics, involving a small number of faculty providers, are continuing to use traditional paper documentation. Individual clinical EHR documentation templates were provided by the vendor and were customized within each division before initial system implementation.

All providers at OHSU are required to undergo 15 hours of training before using the EHR system. This includes three 1-hour online modules and three 4-hour classroom training sessions. There is supplemental online training available for advanced system features. A university clinical information systems group provides regular feedback and training to all faculty providers. Of note, OHSU recommended to all departments that clinical volume should be adjusted to 50% of baseline during the first 2 weeks after implementation, increased to 75% of baseline during the following 2 weeks, increased to 90% of baseline during the following 2 weeks, then returned to baseline.

EVALUATION OF CLINICAL VOLUME

The EHR enterprise reporting system was used to collect data on clinical volume by all faculty providers during a 3-year study period beginning after implementation. Baseline clinical volume data were collected from the practice management system for 3 months prior to EHR implementation. To minimize bias from including new providers with growing clinical practices or providers leaving the department with shrinking practices, a group of “stable faculty providers” was defined based on the inclusion criterion of having worked at the department for at least 5 months before and after the study period (February 1, 2006 to January 31, 2009).

Basic characteristics of stable faculty providers were gathered by using publicly available data sources30–32 and by asking individual providers when necessary. These characteristics included gender, age, years in practice, and subspecialty. Quarterly clinical volume was calculated for each stable provider and compared by subspecialty.

Finally, outpatient volume trends in the ophthalmology department were compared with those of other fields within the university. Comparison was made with two groups of fields: (a) General Internal Medicine and Family Medicine, based on the premise that this EHR system was originally designed to support primary care workflow at large medical centers; and (b) Dermatology, Otolaryngology, Plastic Surgery, and Orthopedic Surgery, based on the premise that those fields are comparable to ophthalmology with regard to practice style and scope. Each of these fields has a freestanding department at OHSU except General Internal Medicine, which is a division of the Department of Medicine. Clinical volume among all providers at the university in each field was collected from the EHR enterprise reporting system from the date of earliest available data until December 2010.

EVALUATION OF TIME REQUIREMENTS

During the 3-year study period beginning after implementation, the EHR enterprise reporting system was queried to identify the time each chart was initiated and completed for all 23 stable faculty providers. Two alternative definitions for the time of initiation of each chart were considered, both of which were recorded in the EHR system for every patient visit: (1) the scheduled appointment time and (2) the first time at which any documentation was saved in the computer system, which in a typical workflow occurred when an ophthalmic technician began to interview the patient. The monthly median completion times for these two different approaches were found to be highly correlated (Pearson correlation, 0.99). Therefore, initiation of the EHR chart was defined as the first time of documentation in the computer system, because this was felt to reflect office workflow more accurately. Completion of the chart was defined as the time at which the faculty provider finalized all clinical documentation, financial documentation, and correspondence (eg, letters or faxes to referring physicians and primary care physicians). Because ophthalmology residents and fellows often assisted faculty providers with clinical care and documentation, this involvement was tabulated for more detailed analysis. This was done by querying the EHR reporting system to identify whether an ophthalmology resident or fellow was involved with each encounter based on having viewed or documented in the electronic chart after initiation and before completion.
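The comparison of the two candidate definitions of chart initiation can be illustrated with a minimal sketch of a Pearson correlation. The function name `pearson_r` and the monthly values are hypothetical placeholders chosen for illustration; they are not study data, and the original analysis was not necessarily computed this way.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squared deviations from the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical monthly median completion times in hours (NOT study data),
# measured from (1) the scheduled appointment time and
# (2) the first time any documentation was saved.
from_scheduled_time = [5.0, 4.6, 4.1, 4.4, 3.9, 4.2]
from_first_saved_note = [4.7, 4.2, 3.8, 4.1, 3.7, 3.9]

r = pearson_r(from_scheduled_time, from_first_saved_note)
print(f"Pearson r = {r:.2f}")
```

A coefficient close to 1 indicates that the two definitions track each other closely from month to month, which is the rationale given above for choosing a single definition.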

Baseline data about when paper-based charts were completed before EHR implementation, or about the time required for completion of paper vs EHR charts, were not available. However, many providers anecdotally believed that they completed most paper-based charts during standard clinical time before patients left the office, that they often needed to complete EHR charts during nonstandard clinical time, and that EHR documentation required more time.23,33,34 To examine time requirements involved with the EHR system, the time of day for EHR chart completion by all stable faculty providers was tabulated. The proportion of charts completed during traditional weekday business hours (defined as between 8 AM and 5 PM from Mondays through Fridays), during weekday nonbusiness hours (defined as after 5 PM and before 8 AM from Mondays through Fridays), and on weekends (defined as later than 11:59 PM on Friday night and earlier than or at 11:59 PM on Sunday night) was calculated. Time required for completion of charts by each provider was calculated, and monthly trends were examined during the 3-year study period after EHR implementation. To examine the possibility that workflow and time requirements may be related to ophthalmic subspecialty, these analyses were also performed after grouping providers by division.
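The three time-of-day categories defined above can be expressed as a short classification rule. The following is a sketch only: the function name `classify_completion` is hypothetical, and treating exactly 8:00 AM and exactly 5:00 PM as business hours is an assumption based on the wording "after 5 PM and before 8 AM" for nonbusiness hours.

```python
from datetime import datetime, time

BUSINESS_START = time(8, 0)   # 8 AM
BUSINESS_END = time(17, 0)    # 5 PM

def classify_completion(completed_at: datetime) -> str:
    """Assign a chart completion timestamp to one of three categories."""
    # weekday(): Monday=0 ... Sunday=6, so 5 and 6 are Saturday and Sunday.
    # This matches "later than 11:59 PM on Friday through 11:59 PM on Sunday".
    if completed_at.weekday() >= 5:
        return "weekend"
    # Assumption: the 8 AM and 5 PM boundaries count as business hours.
    if BUSINESS_START <= completed_at.time() <= BUSINESS_END:
        return "weekday_business"
    return "weekday_nonbusiness"

# February 3, 2006 was a Friday; February 6, 2006 was a Monday.
print(classify_completion(datetime(2006, 2, 3, 23, 30)))  # late Friday evening
print(classify_completion(datetime(2006, 2, 4, 0, 0)))    # start of Saturday
print(classify_completion(datetime(2006, 2, 6, 9, 15)))   # Monday morning
```

Tabulating the returned labels over all charts gives the proportions for each category.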

To examine time requirements in paper vs EHR charting, two stable faculty providers were identified who examined patients using both the EHR (at the university medical center) and traditional paper methods (at a small satellite clinic). One faculty provider (S.B., “Provider A”) was a retina specialist, and the other (D.J.K., “Provider B”) was a pediatric ophthalmologist. Both providers completed time-motion logs to record the total number of patients seen, the amount of time spent in the clinic, and the amount of time spent outside standard clinic hours to complete all paper or EHR charting based on the definitions above. This was done for 3 full days using traditional paper charts for Provider A, 3 half-days using traditional paper charts for Provider B, and for 3 full days using the EHR system for both providers.

EVALUATION OF CLINICAL DOCUMENTATION

A case series analysis illustrating differences in paper vs EHR documentation of the same clinical findings was carried out by retrospective chart review. Faculty members and EHR system databases at OHSU were queried to identify individual clinical records that included paper notes, EHR notes, and images from the same patients. From these retrieved records, the authors reviewed 100 in detail to select final cases that included clinical examinations of the same patients on different dates using paper and EHR documentation by the same faculty provider.

The authors (M.F.C., D.S.S., D.C.T., S.R.B.) reviewed each case together to distinguish points that were illustrative of common and important qualitative differences between paper and EHR documentation. Three iterative cycles of case review were performed among groups of authors. Each case was then reviewed with the attending ophthalmologist who performed the examination (T.S.H., J.C.M., D.J.W.) during a semistructured written or verbal discussion, to gain additional insights on the differences between paper and EHR documentation of the relevant clinical findings.

EVALUATION OF OTHER OUTCOMES: CODING, BILLING, ACADEMIC PRODUCTIVITY

Three potential benefits of EHR systems relate to improved billing and charge capture, improved quality reporting, and improved clinical research opportunities.1–4 The impact of EHR implementation on these three outcome measures was evaluated at the study institution. First, the financial impact of EHR implementation was examined. This was done by analyzing all departmental billing records for 2 complete years before and 4 complete years after implementation (fiscal years 2004–2009). All outpatient encounters were tabulated that were coded as one of the following Current Procedural Terminology (CPT-4) codes: new eye codes (CPT 92002, 92004), established eye codes (CPT 92012, 92014), new evaluation and management codes (CPT 99201, 99202, 99203, 99204, 99205), established evaluation and management codes (99211, 99212, 99213, 99214, 99215), and office consultations (CPT 99241, 99242, 99243, 99244, 99245). These were converted to yearly work Relative Value Units (RVUs) for collections analysis, using the 2009 Medicare Resource–Based Relative Value Scale (RBRVS) and the Geographic Practice Cost Index (GPCI) for Portland, Oregon. The distributions of coding and collections were compared in years with paper vs EHR systems.

Second, the impact of EHR implementation on quality reporting was examined by reviewing participation in the Physician Quality Reporting System (PQRS) by faculty providers during the study period based on institutional records. Finally, the impact of implementation on clinical research was examined by querying Medline-indexed publications for each faculty provider using the PubMed interface (http://www.ncbi.nlm.nih.gov/pubmed). Study committee publications (eg, Diabetic Retinopathy Clinical Research Network [DRCRnet], Pediatric Eye Disease Investigator Group [PEDIG]) were included if the faculty provider was listed in the manuscript as a group member. These measures were compared with paper vs EHR systems.

STATISTICAL ANALYSIS

Descriptive analyses were performed for clinical volume and time requirement data, including time series plots. The Wilcoxon rank sum test was used to compare the means of two groups. For trend analyses, mixed-effects logistic regression models were used to account for the hierarchical structure (date nested within a provider, and providers nested within a subspecialty division) and to account for potential temporal correlations in the data. Autoregressive and moving average models were used to account for the potential temporal correlations as correlation structure in mixed-effects models.35 For analysis of coding, billing, PQRS, and academic productivity data, the chi-square and Student t tests were used as appropriate. Descriptive analyses were done in spreadsheet software (Excel 2007; Microsoft, Redmond, Washington), and trend analyses were performed using the R statistical language.36
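For readers unfamiliar with the Wilcoxon rank sum test, the following minimal sketch shows how the statistic can be computed for two independent groups. It is an illustration under stated assumptions, not the procedure used in this study: the function name `rank_sum_test` is hypothetical, the P value uses a normal approximation without tie or continuity corrections (which is crude for small samples), and the per-patient times are invented placeholders.

```python
import math

def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank sum (Mann-Whitney U) test.

    Returns (U for group x, z score, approximate two-sided P value).
    Assumption: normal approximation, no tie or continuity correction.
    """
    # Pool both groups, remembering group membership (0 = x, 1 = y).
    pooled = sorted((v, g) for g, sample in enumerate((x, y)) for v in sample)
    rank_sum_x = 0.0
    i, n = 0, len(pooled)
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of ranks i+1 .. j for tied values
        rank_sum_x += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n1, n2 = len(x), len(y)
    u = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))
    return u, z, p

# Hypothetical total minutes per patient (NOT study data).
paper_minutes = [12.0, 14.5, 11.0, 13.0, 15.5]
ehr_minutes = [18.0, 21.5, 19.0, 17.5, 22.0]
u, z, p = rank_sum_test(paper_minutes, ehr_minutes)
print(f"U = {u}, z = {z:.2f}, P = {p:.3f}")
```

Because the test operates on ranks rather than raw values, it is less sensitive to skewed timing data than a t test.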

RESULTS

SUMMARY OF FACULTY PROVIDER CHARACTERISTICS

Based on study inclusion criteria, 23 stable faculty providers (21 ophthalmologists and 2 optometrists) were identified (Table 1). These stable faculty providers performed a total of 120,490 outpatient clinical examinations during the 3-year study period. Overall, 74% of stable providers were male, and the mean time in practice was 16.3 years. The largest subspecialties were comprehensive ophthalmology (n=5), retina (n=4), and pediatric ophthalmology (n=4). Eleven (48%) of the 23 providers were considered “higher-volume” providers for study purposes (defined as seeing ≥100 patient visits per month on average), and 12 (52%) providers were considered “lower-volume” providers (defined as seeing <100 patient visits per month on average).

TABLE 1.

CHARACTERISTICS OF 23 STABLE OPHTHALMOLOGY FACULTY PROVIDERS USING ELECTRONIC HEALTH RECORD SYSTEM*

| CHARACTERISTIC | NUMBER |
|---|---|
| Male gender, n (%) | 17 (74%) |
| Age, mean ± SD (range)† | 50.4 ± 11.5 (34–75) |
| Years in practice† | |
| Mean ± SD (range) | 16.3 ± 11.2 (4–38) |
| <10 years, n | 9 |
| 10–19 years, n | 7 |
| >19 years, n | 7 |
| Subspecialty, n (%) | |
| Comprehensive | 5 (22%) |
| Pediatric ophthalmology | 4 (17%) |
| Retina | 4 (17%) |
| Cornea | 2 (9%) |
| Uveitis | 2 (9%) |
| Oculoplastics | 2 (9%) |
| Low vision | 1 (4%) |
| Neuro-ophthalmology | 1 (4%) |
| Genetics | 1 (4%) |
| Glaucoma | 1 (4%) |

*Stable providers (21 ophthalmologists, 2 optometrists) were identified based on having worked at the study institution for 5 months before and after the 3-year study period.

†Age and length of practice at beginning of study period in 2006.

Overall, resident or fellow trainees were involved in 30,932 (27%) of the 120,490 outpatient encounters during the study period based on EHR access logs. Although trainees assisted with care and documentation during these encounters, all of the encounters were scheduled with the faculty provider, who was ultimately responsible for delivering care.

CLINICAL VOLUME: OPHTHALMOLOGY DEPARTMENT

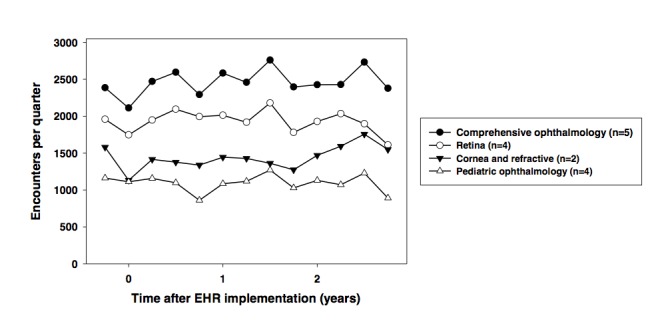

Figure 1 displays quarterly patient volume over time for 23 stable faculty providers after EHR implementation, organized by subspecialty. For all 23 faculty providers taken together, there was a decreasing trend of −2.5 patients per quarter over 3 years, which was not statistically significant. Analysis using a mixed-effects model showed that lower-volume faculty providers, defined as those seeing <100 patients per month on average, had a decreasing trend of −3.7 patients per quarter, which was not statistically significant. Higher-volume faculty providers, defined as those seeing ≥100 patients per month on average, had an increasing trend of 6.7 patients per quarter, which was statistically significant (P=.03). There were no statistically significant relationships between trend in quarterly patient volume and gender, provider age, years of practice, and ophthalmic subspecialty.

FIGURE 1.

Quarterly patient volume over time of 23 stable faculty providers within an academic ophthalmology department after electronic health record (EHR) system implementation. Data are displayed for highest-volume (top), intermediate-volume (middle), and lowest-volume (bottom) ophthalmology divisions. Baseline patient volume for one quarter, using the paper system before EHR implementation, is also shown.

CLINICAL VOLUME: COMPARISON WITH PRE-IMPLEMENTATION BASELINE

During the baseline quarter before EHR implementation, there were 10,468 total patient visits for the 23 stable providers. The total patient visits decreased to 9,209 (88% of baseline) during the first quarter of EHR implementation and increased to 10,170 (97% of baseline) during the second quarter after implementation. Compared to this baseline volume, the average quarterly clinical volume after implementation was 93% in year 1, 97% in year 2, and 97% in year 3.
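The percent-of-baseline figures follow directly from the visit counts reported above. As a worked check, the short sketch below reproduces the two quarterly percentages; the function name `percent_of_baseline` is a hypothetical helper introduced only for illustration.

```python
def percent_of_baseline(visits: int, baseline: int) -> int:
    """Express a quarterly visit count as a whole-number percent of baseline."""
    return round(100 * visits / baseline)

BASELINE_QUARTER_VISITS = 10_468  # baseline quarter before EHR implementation

first_quarter = percent_of_baseline(9_209, BASELINE_QUARTER_VISITS)
second_quarter = percent_of_baseline(10_170, BASELINE_QUARTER_VISITS)

print(first_quarter, second_quarter)  # 88 97
```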

CLINICAL VOLUME: COMPARISON WITH OTHER FIELDS

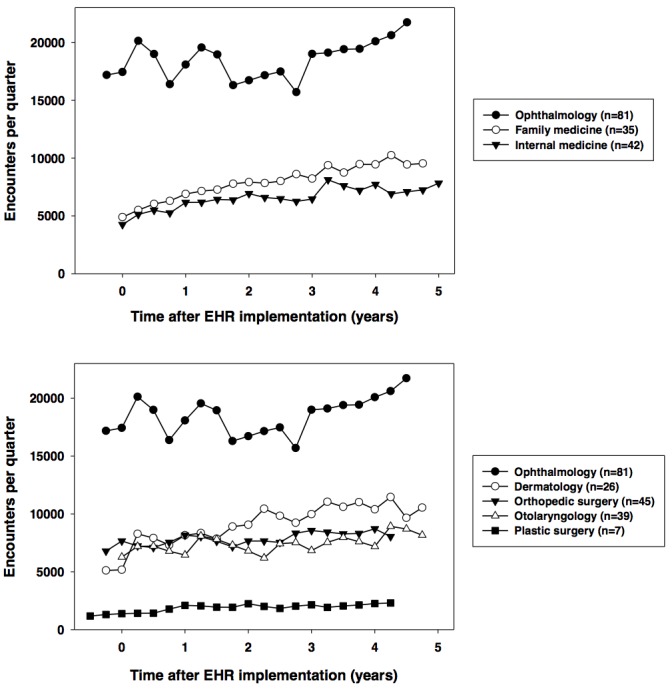

Outpatient volume trends in the ophthalmology department were compared with those of other fields in the university, as summarized in Figure 2, top (General Internal Medicine and Family Medicine) and Figure 2, bottom (Dermatology, Orthopedic Surgery, Otolaryngology, and Plastic Surgery). The Ophthalmology department had the highest clinical volume among all fields, but also had the largest total number of faculty providers. Analysis using a mixed-effects model showed that the Ophthalmology department and six other fields all had increasing clinical volume trends during the approximately 5-year period after EHR implementation (range, 95.7 patients per quarter for Plastic Surgery to 189 patients per quarter for Dermatology). Compared to Dermatology, which was the department with highest rate of volume increase over time, three departments had significantly lower increasing trends (Otolaryngology, P=.02; Orthopedic Surgery, P=.01; Plastic Surgery, P<.01), and three departments had no statistically significant differences (Ophthalmology, Family Medicine, General Internal Medicine).

FIGURE 2.

Quarterly patient volume over time of all faculty providers within an academic ophthalmology department after electronic health record (EHR) system implementation, compared to other fields. Data are displayed for family medicine and general internal medicine (top) and dermatology, orthopedic surgery, otolaryngology, and plastic surgery (bottom). Legends indicate total number of faculty providers who worked in each field at any time during entire period shown. Baseline patient volume is shown using paper system before EHR implementation when available.

We note that the overall clinical volume among all faculty providers in the Ophthalmology department increased during the 5 years since EHR implementation (Figure 2), and that this may be partly explained by growth in the Ophthalmology department during this time period (in comparison, Figure 1 displays data from only 23 stable faculty providers over 3 years).

TIME REQUIREMENTS: 23 STABLE FACULTY PROVIDERS

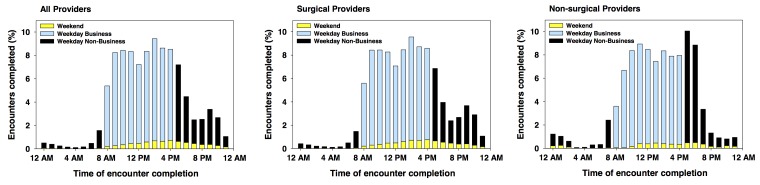

Figure 3, left, summarizes the time of day at which EHR charts were completed by the 23 stable faculty providers during 3 years after system implementation. Among all providers, 68% of EHR charts were completed during traditional weekday business hours, 24% were completed during weekday nonbusiness hours (after 5 PM and before 8 AM), and 8% were completed on weekends.

FIGURE 3.

Time of day for electronic health record (EHR) chart completion by 23 stable faculty providers within an academic ophthalmology department during 3 years after system implementation. Data are displayed for all providers (left), providers from surgical divisions (comprehensive ophthalmology, retina, cornea/refractive, pediatrics, oculoplastics, glaucoma) (center), and providers from nonsurgical divisions (uveitis, neuro-ophthalmology, low vision, genetics) (right). Among all providers, 68% of EHR charts were completed during traditional weekday business hours, 24% were completed during weekday nonbusiness hours (after 5 PM and before 8 AM), and 8% were completed on weekends.

To examine the possibility of a systematic relationship between time of EHR chart completion with ophthalmic subspecialty, these findings are displayed for ophthalmology surgical subspecialty divisions (comprehensive, retina, cornea/refractive, pediatric, oculoplastics, glaucoma) in Figure 3, center, and for ophthalmology nonsurgical subspecialty divisions (uveitis, neuro-ophthalmology, low vision, genetics) in Figure 3, right. Analysis using a mixed-effects Poisson regression model showed that lower-volume providers (P<.001) and encounters involving resident or fellow trainees (P<.001) had greater tendencies to be completed during weekday nonbusiness hours or weekends. There were no systematic statistical differences among the 10 subspecialty divisions regarding time of EHR chart closure.

To investigate other sources of variability, the time of day for completion of EHR charts by individual subspecialty divisions is summarized in Table 2. Among the 10 divisions, the proportion of charts completed during weekday business hours ranged from 50% to 87%, the proportion completed during weekday nonbusiness hours ranged from 10% to 39%, and the proportion completed during weekends ranged from 1% to 19%. There were highly statistically significant differences in distribution of EHR chart completion time of day among the 10 subspecialty divisions (P<.0001).

TABLE 2.

TIME OF DAY FOR ELECTRONIC HEALTH RECORD (EHR) CHART COMPLETION BY 23 FACULTY PROVIDERS WITHIN AN ACADEMIC OPHTHALMOLOGY DEPARTMENT DURING 3 YEARS AFTER SYSTEM IMPLEMENTATION*

| SUBSPECIALTY | WEEKDAY BUSINESS | WEEKDAY NONBUSINESS | WEEKEND |
|---|---|---|---|
| Comprehensive (n=2) | 22,126 (76%) | 6,737 (23%) | 354 (1%) |
| Cornea/refractive (n=2) | 13,820 (87%) | 1,661 (10%) | 424 (3%) |
| Genetics (n=1) | 1,378 (66%) | 581 (28%) | 128 (6%) |
| Glaucoma (n=1) | 5,463 (50%) | 4,310 (39%) | 1,163 (11%) |
| Low vision (n=1) | 1,918 (76%) | 492 (19%) | 127 (5%) |
| Neuro-ophthalmology (n=1) | 2,006 (65%) | 939 (30%) | 152 (5%) |
| Oculoplastics (n=2) | 11,239 (85%) | 1,841 (14%) | 217 (2%) |
| Pediatric ophthalmology (n=4) | 7,087 (56%) | 3,800 (30%) | 1,761 (14%) |
| Retina (n=4) | 11,882 (53%) | 6,184 (28%) | 4,285 (19%) |
| Uveitis (n=2) | 2,747 (59%) | 1,632 (35%) | 255 (6%) |
| Total | 79,666 (68%) | 28,177 (24%) | 8,866 (8%) |

*Data are displayed for 10 subspecialty divisions within the entire department, each with varying numbers of stable faculty providers during the study period. EHR charts were categorized as being completed during weekday business hours (8 AM to 5 PM), weekday nonbusiness hours (after 5 PM and before 8 AM), or weekends. There were highly statistically significant differences in distribution of EHR chart completion time of day among the 10 subspecialty divisions (P<.0001).

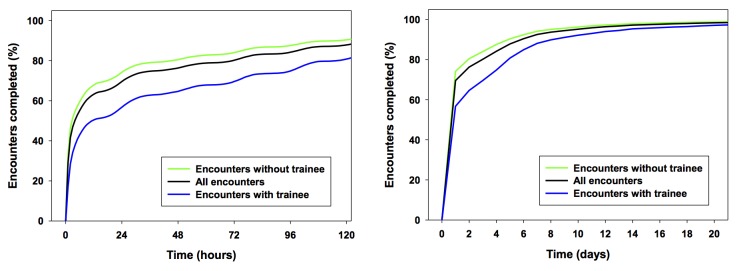

The overall length of time required for EHR chart completion by the 23 stable providers is shown in Figure 4. When considering all encounters seen by these providers over the 3-year study period, 25% of EHR charts were completed within 52 minutes, 50% were completed within 4 hours, and 75% were completed within 1.7 days after beginning EHR documentation. When considering the 23 providers individually, differences in the time required for EHR chart completion are summarized in Table 3. For example, 50% of EHR charts were completed within 7 minutes by one provider, whereas another provider required 6.2 days to complete 50% of EHR charts.

FIGURE 4.

Time required for electronic health record (EHR) chart completion by 23 stable faculty providers within an academic ophthalmology department during 3 years after system implementation. Scale is shown in hours (left) and days (right). Data are displayed for all encounters considered together, for encounters in which resident or fellow trainees were involved with clinical documentation, and for encounters in which trainees were not involved. Considering all providers together, 25% of EHR charts were completed within 52 minutes, 50% were completed within 3.9 hours, and 75% were completed within 1.7 days after beginning EHR documentation.
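The 25%, 50%, and 75% completion times reported here are quartiles of the interval between chart initiation and chart completion. A minimal sketch of that calculation follows. The function name `completion_quartiles` and the sample charts are hypothetical, and the use of the default "exclusive" interpolation method in Python's `statistics.quantiles` is an assumption; the source does not state which quantile method was used.

```python
from datetime import datetime, timedelta
from statistics import quantiles

def completion_quartiles(charts):
    """Return the 25th, 50th, and 75th percentile completion times in minutes.

    `charts` is an iterable of (initiated, completed) datetime pairs.
    """
    minutes = sorted(
        (completed - initiated).total_seconds() / 60
        for initiated, completed in charts
    )
    # n=4 yields three cut points: the quartiles.
    return quantiles(minutes, n=4)

# Hypothetical charts (NOT study data): seven encounters started at the
# same time and completed 10 to 70 minutes later.
start = datetime(2006, 2, 6, 9, 0)
charts = [(start, start + timedelta(minutes=m)) for m in (10, 20, 30, 40, 50, 60, 70)]

q25, q50, q75 = completion_quartiles(charts)
print(q25, q50, q75)  # 20.0 40.0 60.0
```

Reporting quartiles rather than means limits the influence of the small number of charts that remained open for many days.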

TABLE 3.

DIFFERENCES IN TIME REQUIRED TO COMPLETE 25%, 50%, AND 75% OF ELECTRONIC HEALTH RECORD (EHR) CHARTS BY 23 STABLE FACULTY PROVIDERS WITHIN AN ACADEMIC OPHTHALMOLOGY DEPARTMENT DURING 3 YEARS AFTER SYSTEM IMPLEMENTATION*

| VARIABLE | 25% OF EHR ENCOUNTERS COMPLETED | 50% OF EHR ENCOUNTERS COMPLETED | 75% OF EHR ENCOUNTERS COMPLETED |
|---|---|---|---|
| All encounters together | 51 minutes | 3.9 hours | 1.7 days |
| Encounters without trainee | 45 minutes | 2.7 hours | 1 day |
| Encounters with trainee | 1.6 hours | 11.3 hours | 4 days |
| Providers individually | | | |
| Minimum | 5 minutes | 7 minutes | 13 minutes |
| Maximum | 4.0 days | 6.2 days | 12.8 days |
| Median | 1.3 hours | 4.8 hours | 1.1 days |
| Mean | 8.8 hours | 1.1 days | 3.2 days |
| Standard deviation | 21.5 hours | 1.6 days | 3.8 days |

*Data are displayed for all encounters considered together, divided into encounters in which resident or fellow trainees were involved with clinical documentation, and displayed for providers individually.

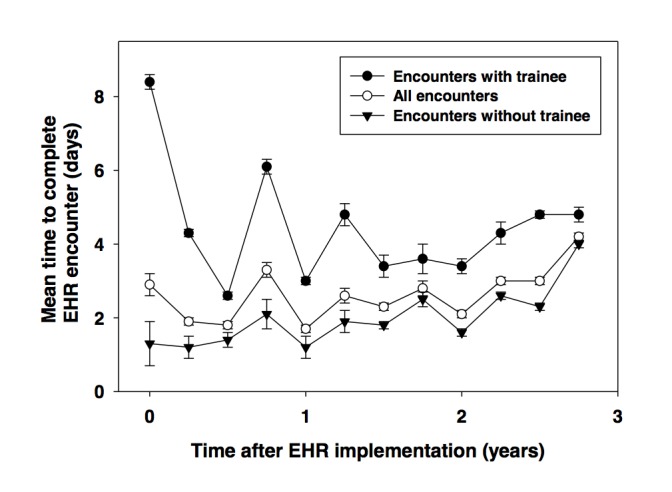

Encounters in which resident or fellow trainees were involved with clinical care required more time compared to encounters in which they were not involved (Figure 4 and Table 3). A mixed-effects model of the proportion of EHR charts completed within 72 hours of patient arrival showed that there were highly statistically significant differences among the 23 individual providers (P<.0001), among the 10 subspecialty divisions (P=.0006), and with resident or fellow involvement with the encounter (P=.04). There were no statistically significant relationships between proportion of EHR charts completed within 72 hours and gender, provider age, years of practice, or high-volume vs low-volume provider status.

Figure 5 displays the monthly trend in mean time required for EHR chart completion during the 3-year period after system implementation. Analysis using a mixed-effects model showed that there was an increasing trend of 9.6 minutes per month (P<.001) for EHR chart completion time among encounters with all providers during this overall study period. Again, encounters with resident or fellow trainee involvement required longer mean times than encounters without trainee involvement; this difference was most pronounced during the first year of the study period (Figure 5).

FIGURE 5.

Trends in mean time required for electronic health record (EHR) chart completion by 23 stable faculty providers within an academic ophthalmology department during 3 years after system implementation. Data are displayed for all encounters considered together, for encounters in which resident or fellow trainees were involved with clinical documentation, and for encounters in which trainees were not involved. Error bars represent standard error of the mean. Among all 23 providers, time series regression analysis showed that mean time increased by 9.6 minutes per month over this time period.

TIME REQUIREMENTS: PAPER VS ELECTRONIC HEALTH RECORD BY TWO FACULTY PROVIDERS

Table 4 summarizes findings from time-motion logs recorded by two faculty providers practicing with EHR documentation at the university medical center and with paper-based documentation at small satellite clinics. Data were collected for 344 outpatient examinations (240 by Provider A, 104 by Provider B) during 3 full clinical days for each faculty provider using the EHR system, 3 full clinical days for Provider A using a paper system, and 3 half-days for Provider B using a paper system. The mean clinic times per patient were slightly higher with EHR documentation than paper documentation for each provider (15 vs 12 minutes for Provider A, 20 vs 18 minutes for Provider B), but these differences were not statistically significant. The mean nonclinic documentation times per patient were significantly higher with EHR documentation than paper documentation for both providers (P=.04 for Provider A, P<.01 for Provider B), and the mean total time per patient for both providers was 6.8 minutes greater with EHR than with paper, which was significantly longer by the Wilcoxon rank sum test (P<.01).
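As a rough illustration of the Wilcoxon rank sum test named above, the following Python sketch compares two samples using midranks and a tie-corrected normal approximation without continuity correction. The per-patient times are hypothetical placeholders, not study data, and the function name `rank_sum_test` is illustrative; the source does not state which software or which variant of the test (eg, exact vs approximate P values) was used.

```python
import math
from itertools import groupby


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank sum (Mann-Whitney U) test, normal approximation.

    Returns (U statistic for sample x, z score, two-sided P value).
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Pool both samples, remembering which sample each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])

    rank_sum_x = 0.0   # sum of midranks belonging to sample x
    tie_term = 0.0     # sum of (t^3 - t) over groups of tied values
    position = 0       # number of pooled values already ranked
    for _, group in groupby(pooled, key=lambda t: t[0]):
        members = list(group)
        k = len(members)
        midrank = position + (k + 1) / 2  # average of ranks position+1 .. position+k
        rank_sum_x += midrank * sum(1 for _, label in members if label == 0)
        tie_term += k**3 - k
        position += k

    u = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # every pooled value identical: no evidence of a difference
        return u, 0.0, 1.0
    z = (u - mean_u) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u, z, p_two_sided


# Hypothetical total minutes per patient (NOT study data).
paper_minutes = [14, 15, 16, 17, 15, 16]
ehr_minutes = [21, 22, 23, 24, 22, 20]

u_stat, z_score, p_value = rank_sum_test(paper_minutes, ehr_minutes)
print(f"U = {u_stat:.1f}, z = {z_score:.2f}, P = {p_value:.4f}")
```

A rank-based test of this kind makes no normality assumption, which suits per-patient times that are typically right-skewed. For small samples an exact P value would usually be preferred over the normal approximation shown here.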

TABLE 4.

TIME REQUIRED FOR COMPLETE PAPER VS ELECTRONIC HEALTH RECORD (EHR) DOCUMENTATION OF CLINICAL ENCOUNTERS BY TWO FACULTY PROVIDERS*

| VARIABLE | PROVIDER A (RETINA) | | PROVIDER B (PEDIATRIC) | |
|---|---|---|---|---|
| | Paper | EHR | Paper | EHR |
| Number of new patients, n (%) | 6 (5%) | 14 (12%) | 5 (15%) | 10 (14%) |
| Number of follow-up patients, n (%) | 121 (95%) | 99 (88%) | 29 (85%) | 60 (86%) |
| Total number of patients, n | 127 | 113 | 34 | 70 |
| Total clinic time, hours:minutes | 26:50 | 28:30 | 10:24 | 23:47 |
| Total nonclinic time, hours:minutes | 6:05 | 13:30 | 0:00 | 5:57 |
| Total time, hours:minutes | 32:55 | 42:00 | 10:24 | 29:44 |
| Mean clinic time per patient, minutes | 12.7 | 15.1 | 18.4 | 20.4 |
| Mean nonclinic time per patient, minutes | 2.9† | 7.2† | 0.0† | 5.1† |
| Mean total time per patient, minutes | 15.6‡ | 22.3‡ | 18.4‡ | 25.5‡ |

*Two faculty providers completed time-motion logs for all clinic time (within the office including examination time) and nonclinic time (outside the office). Time-motion logs were completed by a retina specialist (Provider A) and a pediatric ophthalmologist (Provider B) while performing similar work using different clinical documentation methods for 3 full days at an academic center (EHR system) and for 3 full days at a satellite office (paper system).

†Mean nonclinic documentation times per patient were significantly higher with EHR documentation than paper documentation for both providers by the Wilcoxon rank sum test (P=.04 for Provider A, P<.01 for Provider B).

‡Mean total time per patient for both providers was significantly longer with EHR than with paper (P<.01).
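The per-patient means in Table 4 follow directly from the logged totals. The Python sketch below recomputes them from the hours:minutes totals and patient counts reported in the table; the helper names (`to_minutes`, `per_patient_means`) and the dictionary layout are illustrative assumptions and not part of the study's analysis.

```python
def to_minutes(hhmm: str) -> int:
    """Convert an 'hours:minutes' string such as '26:50' to total minutes."""
    hours, minutes = hhmm.split(":")
    return int(hours) * 60 + int(minutes)


# Totals and patient counts as reported in Table 4.
TABLE_4 = {
    ("A", "paper"): {"patients": 127, "clinic": "26:50", "nonclinic": "6:05"},
    ("A", "EHR"): {"patients": 113, "clinic": "28:30", "nonclinic": "13:30"},
    ("B", "paper"): {"patients": 34, "clinic": "10:24", "nonclinic": "0:00"},
    ("B", "EHR"): {"patients": 70, "clinic": "23:47", "nonclinic": "5:57"},
}


def per_patient_means(row: dict) -> dict:
    """Return mean clinic, nonclinic, and total minutes per patient."""
    clinic = to_minutes(row["clinic"])
    nonclinic = to_minutes(row["nonclinic"])
    n = row["patients"]
    return {
        "clinic": clinic / n,
        "nonclinic": nonclinic / n,
        "total": (clinic + nonclinic) / n,
    }


for (provider, method), row in TABLE_4.items():
    means = per_patient_means(row)
    print(
        f"Provider {provider}, {method}: "
        f"clinic {means['clinic']:.1f}, "
        f"nonclinic {means['nonclinic']:.1f}, "
        f"total {means['total']:.1f} minutes per patient"
    )
```

Computed this way, the EHR minus paper difference in mean total time is roughly 6.7 minutes for Provider A and 7.1 minutes for Provider B. How these were pooled into the single reported figure of 6.8 minutes is not specified in this section.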

CLINICAL DOCUMENTATION IN PAPER VS ELECTRONIC HEALTH RECORD

Case 1

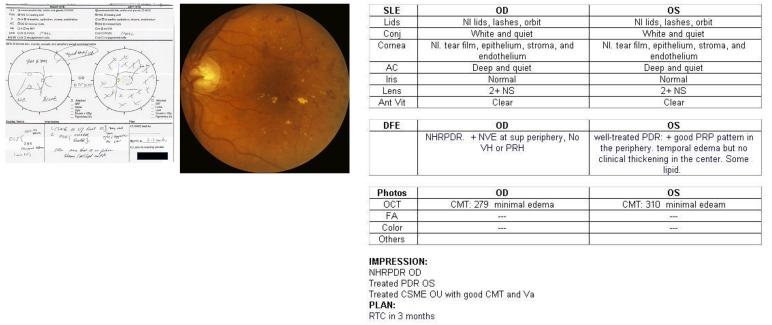

A 67-year-old man had proliferative diabetic retinopathy (PDR) of both eyes and clinically significant macular edema of the left eye. In the paper chart (Figure 6, left), a graphical template with checkboxes was marked to represent normal findings. Some text was not legible to the reviewing author (D.S.S.) and was clarified by other authors (M.F.C., D.C.T.) and by the faculty provider. Sketches with text annotations and symbols (eg, “X”) were used to represent retinal findings such as panretinal photocoagulation (PRP) surrounding the drawn-in optic nerve and arcades. In the EHR chart (Figure 6, right), typed findings were used to represent the same normal findings in a table template. Instead of drawings, typed text was used to describe and interpret retinal findings: “well-treated PDR: +good PRP pattern in the periphery,” “temporal edema but no clinical thickening in the center. Some lipid.” The EHR note contained two additional pages of computer-generated text after the diagnostic impression and plan, with headings such as “Orders and Results,” “Additional Visit Information,” “Level of Service,” and “Routing History.” Fundus photographs were present in the medical record and are shown in Figure 6, center, for comparison.

FIGURE 6.

Example of clinical documentation of posterior segment ocular disease in paper vs EHR systems. Patient with diabetic retinopathy was examined and documented by the same faculty provider on different dates using the two systems. Left, paper documentation emphasizing structured checkboxes and annotated drawings. Right, EHR documentation emphasizing structured textual descriptions and interpretations (eg, disease classification and treatment response). Center, photographic documentation of clinical findings.

Case 2

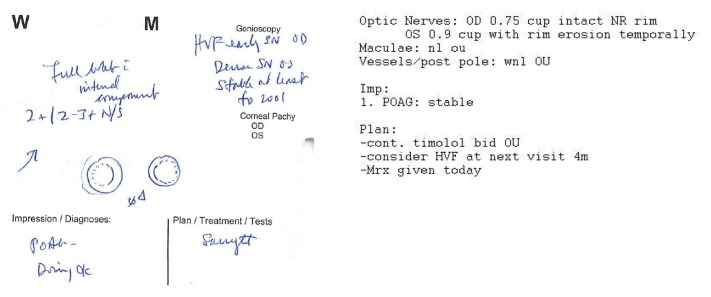

A 75-year-old woman was diagnosed with primary open-angle glaucoma 1 year previously. In the paper note, a graphical template with checkboxes was used to describe most findings. Free text descriptions and drawings (Figure 7, left) were used to represent the optic nerve heads using circular combinations of solid and dotted lines, with an indication that findings were stable (“ØΔ”). Some text was not legible to the reviewing author (D.S.S.) and was clarified by the faculty provider. In the EHR note (Figure 7, right), typed findings were used to describe and interpret examination findings: “OD 0.75 cup intact NR rim. OS 0.9 cup with rim erosion temporally.” No photographic documentation was present in the medical record.

FIGURE 7.

Example of clinical documentation of optic nerve disease in paper vs EHR systems. Patient with primary open-angle glaucoma was examined and documented by the same faculty provider on different dates using the two systems. Left, paper documentation emphasizing written descriptions and annotated drawings. Right, EHR documentation emphasizing structured textual descriptions and interpretations of optic nerve findings.

Case 3

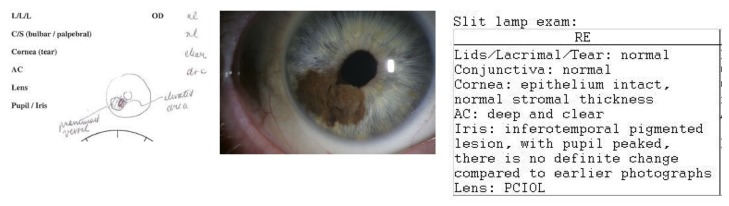

A 58-year-old man was being followed for iris melanoma of the right eye. In the paper chart (Figure 8, left), white space was used for text-based documentation of slit-lamp examination findings (eg, “nl,” “clear”). There was a freehand drawing of the iris lesion with written annotations (eg, “elevated area” and “prominent vessel”). In the EHR chart (Figure 8, right), documentation consisted purely of typed text, framed in tables. The lesion was described and interpreted: “inferotemporal pigmented lesion, with pupil peaked, there is no definite change compared to earlier photographs.” There were over two additional pages of computer-generated text following the assessment and plan. Slit-lamp photographs were present in the medical record and are shown in Figure 8, center, for comparison.

FIGURE 8.

Example of clinical documentation of anterior segment ocular disease in paper vs EHR systems. Patient with iris melanoma was examined and documented by the same faculty provider on different dates using the two systems. Top left, paper documentation emphasizing structured checkboxes and annotated drawings. Top right, EHR documentation emphasizing structured textual descriptions and interpretations (eg, comparison with previous examinations). Bottom, photographic documentation of clinical findings.

OTHER OUTCOMES: CODING, BILLING, AND ACADEMIC PRODUCTIVITY

Table 5 shows the distribution of coding and collections by the 19 of 23 stable faculty providers with complete data available throughout fiscal years 2004–2009. In each of the coding categories examined (new eye codes, established eye codes, new evaluation & management codes, established evaluation & management codes, office consultations), there were statistically significant differences shifting toward higher-level code distributions with EHR compared to paper (P<.001). The mean work RVUs per year charged were slightly higher with paper compared to EHR, but this difference was not statistically significant (P=.24).

TABLE 5.

COMPARISON OF CODING PATTERNS AMONG 23 STABLE FACULTY PROVIDERS BEFORE AND AFTER ELECTRONIC HEALTH RECORD (EHR) SYSTEM IMPLEMENTATION*

| CATEGORY | NUMBER OF CPT CODES/YEAR (MEAN [%] ± SD) | | | RVUS/YEAR (MEAN ± SD) | | |
|---|---|---|---|---|---|---|
| | Paper (2004–2005) | EHR (2006–2009) | P value | Paper (2004–2005) | EHR (2006–2009) | P value |
| Eye Codes: New | | | | | | |
| 92002 - Intermediate | 568 (16%) ± 28 | 555 (21%) ± 71 | P =.004 | 500 ± 25 | 488 ± 62 | NS |
| 92004 - Comprehensive | 2,996 (84%) ± 46 | 2,422 (79%) ± 235 | | 5,452 ± 84 | 4,408 ± 427 | P =.032 |
| Eye Codes: Established | | | | | | |
| 92012 - Intermediate | 5,390 (52%) ± 700 | 4,403 (41%) ± 539 | P <.001 | 4,959 ± 644 | 4,051 ± 496 | NS |
| 92014 - Comprehensive | 4,911 (48%) ± 952 | 6,398 (59%) ± 766 | | 6,973 ± 1,353 | 9,085 ± 1,088 | NS |
| E&M: New | | | | | | |
| 99201 - Level 1 | 112 (8%) ± 62 | 111 (14%) ± 12 | P <.001 | 50 ± 28 | 50 ± 5 | NS |
| 99202 - Level 2 | 432 (29%) ± 213 | 155 (19%) ± 20 | | 380 ± 187 | 137 ± 18 | P =.042 |
| 99203 - Level 3 | 594 (41%) ± 69 | 342 (41%) ± 72 | | 796 ± 93 | 458 ± 96 | P =.015 |
| 99204 - Level 4 | 257 (18%) ± 57 | 148 (17%) ± 57 | | 591 ± 130 | 341 ± 131 | NS |
| 99205 - Level 5 | 56 (4%) ± 13 | 75 (9%) ± 52 | | 167 ± 40 | 225 ± 157 | NS |
| E&M Codes: Established | | | | | | |
| 99211 - Level 1 | 368 (4%) ± 269 | 52 (1%) ± 39 | P <.001 | 62 ± 46 | 9 ± 7 | NS |
| 99212 - Level 2 | 4,149 (47%) ± 878 | 1,886 (25%) ± 328 | | 1,867 ± 395 | 849 ± 148 | P =.007 |
| 99213 - Level 3 | 2,768 (31%) ± 876 | 4,126 (54%) ± 239 | | 2,546 ± 806 | 3,796 ± 220 | P =.032 |
| 99214 - Level 4 | 1,168 (13%) ± 28 | 1,249 (16%) ± 224 | | 1,659 ± 40 | 1,774 ± 318 | NS |
| 99215 - Level 5 | 376 (4%) ± 38 | 356 (5%) ± 51 | | 752 ± 76 | 713 ± 102 | NS |
| Office Consults | | | | | | |
| 99241 - Level 1 | 102 (3%) ± 40 | 54 (2%) ± 41 | P <.001 | 65 ± 25 | 34 ± 27 | NS |
| 99242 - Level 2 | 188 (6%) ± 18 | 301 (12%) ± 68 | | 252 ± 25 | 403 ± 91 | NS |
| 99243 - Level 3 | 1,533 (46%) ± 356 | 1,083 (43%) ± 306 | | 2,881 ± 669 | 2,037 ± 575 | NS |
| 99244 - Level 4 | 1,199 (35%) ± 527 | 901 (36%) ± 276 | | 3,619 ± 1,591 | 2,720 ± 835 | NS |
| 99245 - Level 5 | 314 (9%) ± 25 | 172 (7%) ± 35 | | 1,184 ± 96 | 647 ± 133 | P =.008 |
| Overall Mean ± SD | 27,477 ± 1,513 | 24,788 ± 1,110 | | 34,755 ± 3,313 | 32,222 ± 1,476 | NS |

CPT, Current Procedural Terminology; NS, not significant; RVU, Relative Value Unit.

*Data are shown with paper system for 2 years (fiscal years 2004–2005) and EHR system for 4 years (fiscal years 2006–2009). Coding categories are eye codes (new and established), evaluation & management (E&M) codes (new and established), and office consultations. Yearly work RVUs are shown based on the Resource-Based Relative Value Scale and the Geographic Practice Cost Index for Portland, Oregon.

With regard to quality reporting, the ophthalmology department began participating in PQRS in 2008, which was 2 years after EHR implementation. During that year, 5 (22%) of 23 stable faculty providers were eligible for ≥3 PQRS measures, and 8 (35%) of 23 faculty providers were eligible for ≥1 measure. Among these faculty, 3 of 5 (60%) successfully reported ≥3 measures and 3 of 8 (38%) successfully reported ≥1 measure. In 2009, 9 (39%) of 23 stable faculty providers were eligible for ≥3 PQRS measures, and 23 of 23 (100%) were eligible for ≥1 measure. Among these faculty, 7 of 9 (78%) successfully reported ≥3 measures, and 21 of 23 (91%) successfully reported ≥1 measure.
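The eligibility and reporting percentages above can be reproduced from the stated counts. The short Python sketch below does so; the data structure and the helper `pct` are illustrative assumptions, and percentages are rounded half up to whole numbers to match the reporting style of the text.

```python
import math

N_STABLE_PROVIDERS = 23

# (year, threshold) -> (providers eligible, providers who successfully reported),
# using the counts stated in the paragraph above.
PQRS_COUNTS = {
    (2008, ">=3 measures"): (5, 3),
    (2008, ">=1 measure"): (8, 3),
    (2009, ">=3 measures"): (9, 7),
    (2009, ">=1 measure"): (23, 21),
}


def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded half up to the nearest whole number."""
    return math.floor(100 * numerator / denominator + 0.5)


for (year, threshold), (eligible, reported) in PQRS_COUNTS.items():
    print(
        f"{year}, {threshold}: "
        f"{eligible}/{N_STABLE_PROVIDERS} eligible ({pct(eligible, N_STABLE_PROVIDERS)}%), "
        f"{reported}/{eligible} reported ({pct(reported, eligible)}%)"
    )
```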

Table 6 summarizes the number of peer-reviewed academic journal publications by faculty providers in years with paper (during the 3 years prior to implementation) vs EHR (during the 3 years after implementation) systems. There were approximately 8% more academic publications by the 23 stable providers during the 3-year period after EHR implementation. However, there was no statistically significant difference in mean number of publications with paper vs EHR.

TABLE 6.

COMPARISON OF PEER-REVIEWED PUBLICATIONS AMONG 23 STABLE FACULTY PROVIDERS WITHIN AN ACADEMIC OPHTHALMOLOGY DEPARTMENT IN THE 3 YEARS BEFORE AND AFTER ELECTRONIC HEALTH RECORD (EHR) SYSTEM IMPLEMENTATION*

| CATEGORY | DOCUMENTATION METHOD | |
|---|---|---|
| | Paper (2003–2005) | EHR (2006–2008) |
| Total publications (23 providers) during period | 183 | 198 |
| Mean ± SD | 7.96 ± 10.9 | 8.61 ± 11.6 |
| Range | 0–43 | 0–53 |
| Publications per provider during period | | |
| 0 | 4 | 6 |
| 1–9 | 13 | 10 |
| ≥10 | 6 | 7 |

*Mean number of peer-reviewed publications per provider was not significantly different with EHR documentation than with paper documentation.
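The summary figures in Table 6 can be checked from the publication totals. The Python sketch below recomputes the mean publications per provider and the approximate 8% increase, and confirms that the per-provider categories account for all 23 providers; the variable names are illustrative assumptions.

```python
N_PROVIDERS = 23

# Totals and category counts as reported in Table 6.
TOTAL_PUBLICATIONS = {"paper": 183, "EHR": 198}
PROVIDERS_BY_OUTPUT = {
    "paper": {"0": 4, "1-9": 13, ">=10": 6},
    "EHR": {"0": 6, "1-9": 10, ">=10": 7},
}

mean_per_provider = {
    method: total / N_PROVIDERS for method, total in TOTAL_PUBLICATIONS.items()
}
relative_change = (
    TOTAL_PUBLICATIONS["EHR"] - TOTAL_PUBLICATIONS["paper"]
) / TOTAL_PUBLICATIONS["paper"]

for method, mean in mean_per_provider.items():
    counted = sum(PROVIDERS_BY_OUTPUT[method].values())
    print(f"{method}: mean {mean:.2f} publications per provider, {counted} providers counted")
print(f"Relative change in total publications: {relative_change:.1%}")
```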

DISCUSSION

SUMMARY OF KEY FINDINGS

This is the first study to our knowledge that has systematically evaluated the outcome from implementation of an EHR system in ophthalmology. The key findings from this study were that (1) EHR implementation and application are feasible within a large academic practice including a variety of ophthalmologists and practice types; (2) implementation was associated with an initial decrease and subsequent return to near baseline in clinical volume; (3) higher-volume ophthalmology faculty providers had growth in clinical volume compared with lower-volume faculty providers after EHR implementation; (4) EHR usage by ophthalmic providers was associated with increased time expenditure and documentation times compared to paper systems; (5) EHR documentation involved greater reliance on textual descriptions and interpretations of clinical findings compared to graphical representations and checkboxes in paper notes; and (6) EHR notes were longer than paper notes and included more automatically generated text.

IMPACT ON CLINICAL VOLUME

This study demonstrates that it is feasible for a high-volume academic ophthalmology practice to implement and use an EHR successfully. Some ophthalmologists throughout the country have been using EHRs for years.11 At the same time, there have been well-known reports of failed information technology system implementations in ophthalmology and other fields.37–39 We found that ophthalmologists within the department successfully transitioned to using the EHR system regardless of subspecialty, provider age, gender, or length of practice (Table 1 and Figure 1). Previous research has suggested that although younger clinicians might be expected to use information technology more fully, the culture of the practice may be a more critical factor affecting the level of adoption.40 There was strong commitment to EHR implementation at the study institution, both throughout the university and within the ophthalmology department, as well as clear statements of system objectives, an experienced vendor, a departmental project champion, and solid physician commitment to planning and deployment. These factors have consistently been shown to be strongly associated with successful outcomes in the project management and computer science literature, and we believe they must be considered by ophthalmologists before planning EHR adoption.37,38,41–46

There was a small decrease in clinical volume compared to baseline values with a paper system among the 23 stable providers in this study. In particular, there was a 12% overall decrease in volume during the first quarter after EHR implementation, followed by a subsequent slow return to near baseline over time (Figure 1). Of course, there are potential limitations of using clinical volume to assess impact of an EHR system. For example, the practices of younger providers may tend to grow over time, whereas the practices of older providers may tend to shrink over time. In this study, we normalized for these practice variations by defining a set of “stable faculty providers” who were practicing the 5 months before and after the 3-year study period. We believe this is a reasonable method for including the maximum number of study providers while excluding those with atypical practice situations, but acknowledge that there are other confounding factors unrelated to EHR implementation that may affect the practice volumes of the providers in this study. Finally, we note that clinical volume may demonstrate seasonal variation. Baseline clinical volumes using paper documentation before EHR system implementation were available only from November 2005 through January 2006. Although the number of clinic visits to family physicians has not been shown to decrease during winter months,47 providers in this study tended to have lower clinical volumes during the winter (Figure 1). This may bias the baseline pre-implementation values to be somewhat lower than typical pre-implementation clinical volume. Despite these limitations, we do not feel these findings demonstrate any significant change in clinical volume at the study institution during the several years after EHR implementation.

In the ophthalmology field, similar studies examining the impact of EHRs on clinical volume have been limited. A national survey of ophthalmologists working in practices with EHRs found that 34% felt that clinical productivity 6 months after implementation had increased, 30% felt that it was stable, 15% felt that it had decreased, and 21% were unsure.11 In 2010, an informal survey of 150 pediatric ophthalmologists at a national meeting estimated that 20% to 30% had implemented an EHR in their practice (approximately half within the past year). Among ophthalmologists using an EHR, none estimated that their clinical efficiency increased or remained stable, approximately one-third estimated that their efficiency had decreased by 10%, and approximately two-thirds estimated that their efficiency had decreased by 30% or more (Biglan AW, written communication, October 4, 2011). Within other fields, investigators have suggested that there may be an initial decrease in productivity after EHR implementation in primary care offices, with subsequent recovery.27,48 In contrast, a different study involving five ambulatory clinics at an academic medical center suggested that there was no obvious slowing of office workflow or productivity with EHRs.49 Finally, a study examining productivity before vs after implementation of the same EHR (EpicCare) as at our institution showed a small increase in clinical volume and charges after implementation.50 It is likely that the impact of EHRs on clinical volume varies based on differences in the specific system, implementation and utilization process, office workflow, and individual providers.

The system (EpicCare) implemented in this study was used throughout the university medical center. Although this provides advantages with regard to data exchange throughout the institution, there are concerns that large hospital-wide EHRs may not be optimally designed for the unique documentation and workflow requirements of ophthalmologists.26 Despite these concerns, this study found that there were no significant differences with regard to clinical volume after EHR implementation in the ophthalmology department compared to primary care specialties (Figure 2, top) and other similar medical and surgical specialties (Figure 2, bottom). Examining and understanding the differences among different medical specialties that affect ease of EHR adoption is beyond the scope of this study but is an important area for future research that will require detailed analysis of each individual field.

Higher-volume ophthalmology faculty providers in this study had an increasing clinical volume trend, whereas lower-volume providers had a decreasing clinical volume trend. Given that ophthalmology is a fast-paced specialty with many potential documentation challenges associated with new information systems, it might be expected that EHRs would hamper high-volume practices more than low-volume ones. For example, one study found that having more nonclinical work hours was associated with increased use of clinical information technology.51 On the other hand, EHRs may also provide opportunities for optimizing volume and efficiency through improved communication with other health care providers, rapid documentation of common findings through automated templates, electronic data exchange, access to computer-based practice guidelines and information resources, and improved practice management and charge submission.52–57 From this perspective, one possible explanation for this study finding is that higher-volume faculty providers may have been motivated to exploit these new strategies for leveraging EHRs to improve office workflow out of necessity to maintain their practice volumes. Another possibility is that providers who tend to become “higher-volume” do so because of underlying personality traits, and that efficient utilization of EHRs is simply another manifestation of those same traits. Finally, it is possible that higher-volume providers may have had more ancillary support and other clinical resources than lower-volume providers. Additional qualitative research studies may help elucidate the factors related to these differences.58 Interestingly, there were no statistically significant relationships between volume trend and provider age, gender, or subspecialty division. 
It is our anecdotal observation that individual variations among different providers at our institution may be larger than any differences that might be explained by these specific factors (eg, age, gender). Future studies involving more providers, perhaps from other institutions, would provide more insight into these issues.

IMPACT ON PROVIDER TIME REQUIREMENTS

Despite these findings suggesting that clinical volumes have been relatively stable after EHR implementation, it has been our anecdotal experience that many faculty providers feel that the transition toward electronic systems has been difficult. One possible explanation is that in many paper-based workflows, ophthalmologists often complete all clinical charting before the patient leaves the office. In contrast, a key study finding was that faculty providers using an EHR completed a significant proportion of clinical documentation outside typical business hours. Specifically, faculty providers using the EHR system in this study completed 32% of clinical documentation during weekday nonbusiness hours or on weekends (Figure 3). In fact, this analysis may underestimate the true burden of EHR documentation because much of this work completed during “weekday business hours” may have actually been done during scheduled academic, vacation, or administrative time during standard business hours from Monday through Friday.

Examination of the underlying reasons for these nonstandard documentation times was difficult because there were no baseline data available for chart completion time using paper systems. To address this issue, we performed a time-motion comparison59 of two faculty providers (one retinal specialist and one pediatric ophthalmologist) who continued to work in satellite clinics using paper documentation. This showed that the EHR system required significantly more nonclinic documentation time and significantly more total time compared to paper charting (Table 4). These latter findings support the notion that providers using paper-based methods are often able to complete clinical documentation, billing documentation, and dictations to referring physicians during standard clinical time. For example, “Provider B” (pediatric ophthalmology) required no nonclinic time during these study sessions. Although the mean difference of 6.8 minutes per patient between EHR and paper calculated in this study may seem short, this translates to over 2 hours of additional time during a typical half-day clinic session with 20 patients.
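The arithmetic behind the "over 2 hours" estimate can be written out as a brief Python sketch. It uses only the two figures stated above (6.8 additional minutes per patient, 20 patients per half-day session); the variable names are illustrative.

```python
EXTRA_MINUTES_PER_PATIENT = 6.8  # mean EHR minus paper difference reported above
PATIENTS_PER_HALF_DAY = 20       # typical half-day session size stated above

extra_minutes = EXTRA_MINUTES_PER_PATIENT * PATIENTS_PER_HALF_DAY
hours, minutes = divmod(round(extra_minutes), 60)

print(f"Additional time per half-day session: {hours} h {minutes} min")
```

This estimate assumes that the mean difference applies uniformly to every patient in the session, which is a simplification.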

Of course, findings from only two providers over 3 days may not be generalizable to other providers at our institution and elsewhere. Although the same two faculty providers were working at both the satellite clinics (using paper) and the academic medical center (using EHR), there were other differences between these sites that may have affected efficiency. Potential differences include the following: (a) Clinical staffing. For the pediatric ophthalmology provider, patients at the satellite clinic were seen by only an orthoptist and the faculty provider. In contrast, the academic center was more heavily staffed, and patients were often seen by technicians, orthoptists, and residents or fellows. For the retina provider, the satellite clinic was staffed by two technicians. In contrast, the academic center was staffed by three technicians. In fact, this additional technician was felt to be required because of slower data entry using EHR. Although it was the same staff members working at satellite and academic clinics with both the retina and pediatric ophthalmology providers, the availability of additional staff to assist at the academic center may have biased the time requirements in either direction. (b) Case mix. Although it was the anecdotal feeling of the two providers that there were not significant systematic differences in disease severity between patients at the satellite and academic clinics, additional data collection would provide more insight into this question. (c) Workflow. Other than the differences above, the workflow at satellite and academic clinics was similar for both providers. For example, neither clinic employed “scribes,” and letters were sent to referring physicians in both clinics when felt to be indicated. 
In fact, it was the anecdotal impression of one provider that he generated more letters to referring physicians at the satellite office, and the impression of the other provider that “most of my outside satellite-clinic work is the process of sending letters as I can do almost all billing and documentation on-the-fly in the satellite clinic—probably in about the amount of time it takes me to log in and select my patient’s chart with the EHR.” Overall, we note that there were no significant differences in time requirements for either provider among the 3 days examined (data not shown). We are not aware of any other studies that have systematically attempted to examine this issue, perhaps because time-motion data are challenging to collect. For all of these reasons, we feel that these study findings are consistent with our personal observation that ophthalmology EHR documentation requires more time than traditional paper-based documentation.

With regard to the lengths of time required to complete EHR documentation, we found that these were often significant, and that there were some striking variations. For example, EHR documentation was completed for 50% of all patient encounters within 4 hours and for 75% of all patient encounters within 2 days. However, documentation for the remainder of patient encounters required nearly 3 weeks to approach 100% completion (Figure 4). Among different providers, there were some who consistently completed all encounters relatively quickly (eg, we are aware of several providers who perform EHR documentation in the office for each patient), and other providers who consistently required much more time (eg, we are aware of several providers who wait until evenings or weekends to complete EHR documentation). It is difficult to determine with certainty whether faculty providers completed charting during off-hours because they found EHR charting too time-consuming to perform during the patient encounter, because they are less facile with the EHR system, because they preferred the flexibility of performing documentation during nonclinical time, because they felt documentation during the clinic visit would interfere with the patient-physician relationship, or because of other reasons.

Finally, the length of time required by study providers to complete EHR documentation had a statistically significant tendency to increase during the 3-year study period (Figure 5). We suspect that this increase was caused by a combination of factors, such as evolution in workflow patterns (eg, more providers completing EHR documentation during evenings and weekends) and a gradual increase in clinical volume over time. In addition, encounters involving resident or fellow trainees required significantly more time for completion than encounters without trainee involvement immediately after EHR implementation, although this difference narrowed quickly (Figure 5). The underlying reasons for this discrepancy are not clear, but it presumably reflects a learning curve involving faculty-trainee interaction while using the EHR together. Important motivating factors for EHR adoption include improving quality of care, decreasing the incidence of medical errors, and decreasing the cost of care.2,6,7,13,14,60,61 However, it is conceivable that documentation of examination findings, diagnostic impressions, and management plans long after the clinical encounter could affect the ability of providers to perform these tasks accurately for reasons such as memory and fatigue. These issues warrant future research and will be important for ensuring the timeliness and quality of care.

Taken together, these findings involving clinical volume and time requirements suggest that providers need to work longer to examine a similar patient volume using EHRs compared to paper systems. There are no previously published papers to our knowledge examining documentation speed with EHRs by ophthalmologists. Formal investigations involving time efficiency of ambulatory EHRs compared to paper documentation in other medical specialties have reached varying conclusions.62 Several studies examining clinical documentation times by nurses found that EHRs required more time than paper-based systems,63,64 whereas others have shown that EHRs required less time.65,66 Published studies in primary care settings have reported that EHRs were associated with increased documentation time,67–69 yet studies in intensive care unit, psychiatry, and anesthesia settings have found shorter documentation times with EHRs compared to paper systems.70–72 A time-motion study found no difference in the clinic time required for EHR documentation by primary care physicians compared to baseline times using paper documentation, although that study did not consider nonclinic time requirements.73 Kennebeck and colleagues74 found that patient length of stay in a pediatric emergency department increased by 6% to 22% after EHR implementation despite additional providers postimplementation, but those delays were noted to resolve after 3 months. A study utilizing survey reporting demonstrated that 66% of physicians perceived that EHR implementation increased their work amount, although RVUs per hour increased significantly compared to pre-EHR baseline values.75 In a different report utilizing survey methods, Bloom and Huntington76 showed that physicians spent 13 to 16 minutes documenting each patient encounter, and found that physicians and staff felt that the EHR was adversely affecting patient care and communication among clinic personnel. 
Our study builds upon this published literature by examining the ophthalmology domain, by including analysis of raw data involving clinical volume and encounter times with EHRs vs paper methods, by examining trends over several years involving multiple ophthalmic subspecialties and medical specialties, and by correlating with faculty provider characteristics.

IMPACT ON OPHTHALMIC DOCUMENTATION AND CODING

Although this study found that EHRs are associated with increased time requirements but little or no increase in clinical volume, it is worth noting that a potentially important benefit of electronic records is improved quality and completeness of documentation.77–80 For example, information entered into the medical record by physicians may be checked by clinical decision support algorithms to prevent potential medical errors, analyzed through retrospective research studies, used to find patients eligible for prospective clinical trials, and used to populate large-scale public health data repositories.6,81,82 Paper-based medical records are limited because they are organized temporally, whereas a fundamental difference with computer-based records is that they may be organized and displayed longitudinally to visualize trends and comparisons. To realize their full potential in these areas, EHRs must be designed to permit efficient and accurate data entry, along with options for display of examination findings to support optimal diagnosis and management by health care providers.

A large body of research has demonstrated that EHR use can affect physician cognition and clinical decision making, and thereby impact clinical care.70,83–85 One study showed that primary care physicians who transitioned from paper to EHR exhibited both qualitative and quantitative changes in the nature of their clinical documentation. Also, EHR users who were more experienced with the system had very different strategies for interacting with patients while using the computer compared to EHR users who were less experienced with the system.86 Other studies have identified situations in which electronic systems may contribute to medical errors.24,25 This may be particularly true when EHRs are designed or implemented poorly. For these reasons, it is essential to understand the differences in clinical documentation using current EHR systems compared to what ophthalmologists have traditionally performed using paper-based systems.

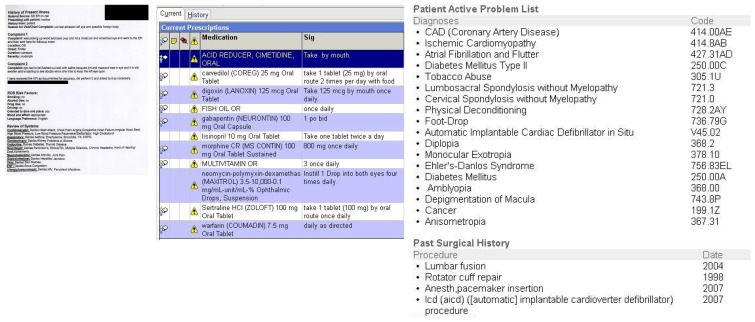

With regard to efficiency of data display in the EHR to support clinical care, we found that EHR notes in all three study cases were longer than the corresponding paper-based notes. This was largely due to additional pages of computer-generated text, which included institution-wide sections such as “Order and Results,” “Additional Visit Information,” “Level of Service,” and “Routing History” to maximize availability of information. While reviewing other records for this study, we found several EHR notes sent from outside institutions that included lengthy descriptions of chief complaint, history of present illness, and review of systems that included extensive automatically generated text (Figure 9, left). Finally, we identified numerous other instances in which ophthalmic problem lists and medication lists were combined within long lists of systemic problems and medications (Figure 9, center and right). Without optimal organization and display of information, the availability of excessive clinical data in EHR documents may inhibit the ability of ophthalmic providers to quickly recognize the most relevant ocular findings for clinical diagnosis.

FIGURE 9.

Examples of clinical documentation challenges using EHR systems. Left, extensive automatically generated text for chief complaint, history of present illness, and review of systems. Center and right, problem lists and medication lists that combine systemic and ophthalmic issues.

These problems may be exacerbated if automated features such as “copy-paste,” “copy-forward” (ie, repeating findings from previous examinations), and “all normal” (ie, prefill a normal examination template) are used indiscriminately. The intent of these features is to improve efficiency of documentation, increase completeness of documentation, and improve charge capture. However, this may create situations in which findings that were not seen during examination are overdocumented. This may impact quality of care and billing compliance.87–91 In fact, it is our feeling that many documentation features of current EHRs were designed to support billing and compliance rather than medical decision making, and that these factors may contribute to the decreased time efficiency associated with EHR documentation in this study. Ironically, there may be situations where excessive information presentation and poor system design could facilitate errors that expose ophthalmologists to medicolegal liability. Critical areas relevant to legal exposure include quality of electronic documentation, consistency between EHR notes and paper records maintained by the same office, documentation of differential diagnosis and decision making, and privacy and security of medical records.92–94 In this study, there was a tendency toward higher-level coding with EHR than with paper (Table 5). This may be because of undercoding with the paper system, overcoding with the EHR, a true shift in clinical complexity, or a combination of these factors. There are no data available at our institution regarding the association between EHR implementation and changes in adverse events or medicolegal risk. All of these areas will require further investigation.

In this study, we identified many other significant qualitative differences in the nature of clinical data representation using paper vs EHR documentation methods. Most paper charts examined in this study emphasized graphical representation of ocular features, as well as reliance on structured forms with checkboxes to summarize ocular findings. Virtually all currently practicing ophthalmologists were trained to document clinical findings using hand-drawn sketches.95 Common graphical representations include annotated drawings of posterior segment (Figure 6), optic nerve (Figure 7), and anterior segment (Figure 8) pathology using anatomic templates or freehand sketches. Standard symbols are typically used to represent examination findings (such as “X”’s for panretinal photocoagulation in Figure 6). Well-known annotated templates are used to organize and display standard ocular examination components such as extraocular motility, gonioscopy, strabismus measurements, and ophthalmoscopic findings in paper-based systems. In comparison, the EHR notes reviewed for this study contained text-based descriptions of findings, along with clinicians’ interpretations of those findings (eg, “well-treated PDR: +good PRP pattern in the periphery” and “NHRPDR” in Figure 6, “no definite change compared to earlier photographs” in Figure 8). Although the EHR system at our institution includes a drawing module with a mouse-based interface, none of the records reviewed for this study contained drawings generated by that tool. We believe that this existing mouse-based drawing tool is used so infrequently by providers at our institution because it is too cumbersome and provides insufficient resolution for clinical purposes.

That said, it is unclear whether drawings truly provide information beyond what is conveyed by textual descriptions of ophthalmic findings, and we realize that some trainees and young faculty at our institution have never had the experience of consistently documenting examination features using hand-drawn sketches. Representing the appearance of ocular structures may be inherently qualitative, although numerous classification systems have been developed to standardize the description of specific diseases for clinical care or research.96–99 From this perspective, paper-based documentation using drawings may be somewhat subjective and imprecise, and a large body of research has established that physicians often develop different diagnoses and management plans even when provided with the exact same clinical data.82,100–107 Objective documentation of clinical findings using photography and other imaging modalities in the medical record may be one mechanism for improving the accuracy and reproducibility of ophthalmic care using EHRs (Figure 6, bottom, and Figure 8, bottom). For example, photographic classification of diabetic retinopathy using images captured using standard protocols, with subsequent interpretation at a certified reading center, has been shown to be more accurate than traditional dilated ophthalmoscopy by ophthalmologists or optometrists.108,109 Similarly, it has been shown that review of wide-angle retinal photographs may be more accurate than dilated ophthalmoscopy for diagnosis of retinopathy of prematurity (ROP) in some situations, and that objective photographic documentation may help clinicians recognize disease progression in ROP.110,111 One challenge is that historical patient data, both image-based and text-based, are often archived on analog media such as paper, film, and slides. Comparisons with these existing historical data are often difficult after EHR implementation. 
At the study institution, and at many other institutions to our knowledge, such comparisons are performed using a combination of scanning to digital format and a parallel archive of traditional paper-based charts. Additional research examining the role and cost-benefit tradeoffs of routinely incorporating images into ophthalmic EHRs is warranted.

STANDARDS, INTEROPERABILITY, AND QUALITY REPORTING

As more ophthalmology practices implement EHR systems, we anticipate that methods of clinical ophthalmic documentation will gradually evolve, continuing the shifts described above. Thoughtful EHR system design to capture and represent ophthalmic findings can create critical infrastructure to improve clinical care, while supporting biomedical research and public health reporting.1–8,14–16 Electronic data exchange provides opportunities to improve communication among multiple specialized care providers, to increase efficiency as a growing volume of patient data are being generated, and to decrease redundancy of medical testing. However, data exchange is particularly challenging in ophthalmology because of the large number of electronic systems (eg, EHR, practice management system, image management system) and imaging devices (eg, fundus camera, optical coherence tomography, visual field machine) involved.26 To ensure that data may be exchanged freely among these electronic systems and imaging devices, vendor-neutral standards such as Health Level 7 (HL7), Digital Imaging and Communications in Medicine (DICOM), and Systematized Nomenclature of Medicine (SNOMED) must be adopted by the ophthalmology community.52,112–118

Standards are essential for ensuring interoperability, which represents the ability of electronic systems to exchange data regardless of the vendor. In particular, SNOMED is used for standard representation of clinical findings and concepts, HL7 for exchange of text-based and clinical data, and DICOM for representation and transmission of image-based and machine-derived measurement data.112,114,116,117 Integrating the Healthcare Enterprise (IHE) is a major initiative by health care professionals and private industry that has developed profiles to support coordinated implementation of these existing standards in real-world settings for interoperability of medical devices and systems.115 Although these standards have been well defined in ophthalmology, many devices and EHR systems continue to use proprietary formats defined by individual vendors.26 As a result, ophthalmologists are forced to purchase costly and difficult interfaces to integrate EHRs with new devices and systems. In contrast, DICOM-based image storage and communication have been universally adopted within radiology, which facilitated rapid PACS adoption and improved quality of care.119

Despite this promise, there has been no published literature to our knowledge demonstrating that EHRs are associated with broadly improved quality of ophthalmology care. Designing such studies is methodologically challenging because EHRs affect patient outcomes indirectly, through the interactions of the clinicians who use them, rather than directly through traditional medical or surgical interventions. It would be difficult to design rigorous randomized controlled studies with EHR vs without EHR, because implementation is typically performed within entire institutions, and comparing different institutions would introduce significant biases. Finally, clear outcomes of differences in “quality” are difficult to measure and often require lengthy time periods to establish.

In other medical fields, broad quality improvement with EHRs has also been difficult to demonstrate, for similar reasons. One recent cross-sectional study using discrete ambulatory quality-of-care measures suggested that EHRs were associated with improved outcomes,120 and another recent study involving diabetes care found that EHR implementation was associated with improvements in intermediate measures such as blood pressure and aspirin prescription.121 In a study of general diabetes care at 46 medical practices, Cebul and associates122 showed that practices using EHR tended to achieve higher composite standards for care than those using paper. However, a much larger number of studies have demonstrated benefits related to intermediate outcomes relevant to EHR implementation. For example, checklists and clinical decision support tools have been found to improve care through decreased medical errors.7,8,123–126