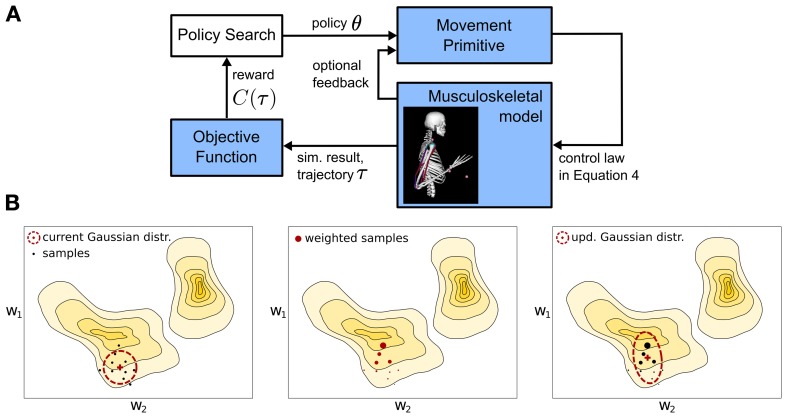

Figure 3.

Overview of the learning framework. (A) A parametrized policy θ modulates the output of a movement primitive, which generates a movement trajectory τ. The quality of the trajectory is indicated by a sparse reward signal C(τ), which policy search uses to improve the parameters of the movement primitive. (B) Sketch of a single iteration of the implemented policy search method, Covariance Matrix Adaptation (CMA) (Hansen et al., 2003). The parameter space is approximated by a multivariate Gaussian distribution, denoted by the ellipses, which is updated (from left to right) using second-order statistics of the roll-outs, or samples, denoted by the large dots (see text for details).
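
The update sketched in panel (B) can be illustrated with a minimal Python example. It is a simplified Gaussian search step in the spirit of CMA, not the full algorithm of Hansen et al. (2003): step-size control and evolution paths are omitted, and the best samples are weighted equally. The names `rollout_reward`, `gaussian_search_step`, and `run_search`, the toy quadratic reward, and all numeric settings are assumptions made for illustration only; they do not come from the paper.

```python
import numpy as np

# Hypothetical stand-in for the reward C(tau): in the framework, theta would
# parametrize a movement primitive and the reward would score the resulting
# trajectory. Here a toy quadratic reward with an assumed optimum is used.
TARGET = np.array([1.0, -2.0])


def rollout_reward(theta):
    """Toy reward: higher is better, maximal at TARGET (assumption)."""
    return -float(np.sum((theta - TARGET) ** 2))


def gaussian_search_step(mean, cov, reward_fn, rng, n_samples=20, n_elite=5):
    """One simplified iteration: sample, rank by reward, refit the Gaussian."""
    # Roll-outs: draw policy parameters from the current search distribution.
    samples = rng.multivariate_normal(mean, cov, size=n_samples)
    rewards = np.array([reward_fn(s) for s in samples])

    # Keep the n_elite samples with the highest reward.
    elite = samples[np.argsort(rewards)[::-1][:n_elite]]

    # Second-order statistics of the elite samples. The covariance is taken
    # around the OLD mean, which stretches the ellipse along the direction
    # of improvement.
    new_mean = elite.mean(axis=0)
    centered = elite - mean
    new_cov = centered.T @ centered / n_elite

    # Small jitter keeps the covariance positive definite (assumption).
    new_cov += 1e-8 * np.eye(len(mean))
    return new_mean, new_cov


def run_search(n_iters=30, seed=0):
    """Iterate the update from a unit Gaussian centred at the origin."""
    rng = np.random.default_rng(seed)
    mean, cov = np.zeros(2), np.eye(2)
    for _ in range(n_iters):
        mean, cov = gaussian_search_step(mean, cov, rollout_reward, rng)
    return mean, cov


if __name__ == "__main__":
    final_mean, final_cov = run_search()
    print("final mean:", final_mean)
    print("reward at final mean:", rollout_reward(final_mean))
```

Each call to `gaussian_search_step` corresponds to one left-to-right transition of the ellipse in panel (B): the samples play the role of the large dots, and the refitted mean and covariance define the next ellipse.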