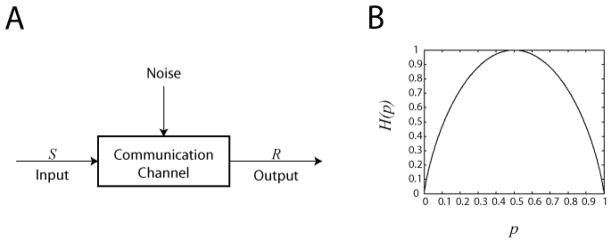

Figure 2. (A) Schematic of a communication channel. A basic communication channel can be described by an input random variable S connected through a channel to an output random variable R, such that the outcome of R depends on S but is subject to the distorting influence of noise. In information theory the channel can be treated as a “black box”, since, for information-theoretic purposes, it is fully characterized by the joint distribution of S and R. (B) Entropy of a Bernoulli random variable as a function of its probability p. The concave curve shows that entropy is at its maximum (1 bit with base-2 logarithms) when both outcomes are equally probable (p = 0.5) and at its minimum (0) when the outcome is predetermined (p = 0 or 1).
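The following is a minimal Python sketch of both panels. It is not from the source, and it assumes entropy is measured in bits (base-2 logarithms). For panel B, it evaluates the Bernoulli entropy H(p) = −p log₂ p − (1 − p) log₂(1 − p). For panel A, it computes the mutual information I(S; R) directly from a joint table P(S, R), which illustrates the “black box” view: nothing about the channel beyond the joint distribution is needed. The function names and the binary symmetric channel with flip probability 0.1 are hypothetical choices made for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; outcomes with probability 0 contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


def binary_entropy(p):
    """Entropy H(p), in bits, of a Bernoulli variable with success probability p."""
    return entropy([p, 1 - p])


def mutual_information(joint):
    """I(S; R) in bits from a joint table where joint[s][r] = P(S = s, R = r)."""
    p_s = [sum(row) for row in joint]            # marginal of the input S
    p_r = [sum(col) for col in zip(*joint)]      # marginal of the output R
    h_joint = entropy([p for row in joint for p in row])
    # I(S; R) = H(S) + H(R) - H(S, R)
    return entropy(p_s) + entropy(p_r) - h_joint


# Panel B: H(p) is largest at p = 0.5 and zero at p = 0 or p = 1.
for p in (0.0, 0.1, 0.25, 0.5, 0.75, 0.9, 1.0):
    print(f"p = {p:.2f}  H(p) = {binary_entropy(p):.3f} bits")

# Panel A (hypothetical example): a binary symmetric channel that flips the
# input with probability eps, driven by a uniform input S.
eps = 0.1
joint = [[0.5 * (1 - eps), 0.5 * eps],
         [0.5 * eps, 0.5 * (1 - eps)]]
print(f"I(S; R) = {mutual_information(joint):.3f} bits")
```

For this symmetric channel with a uniform input, the computed I(S; R) equals 1 − H(eps). Noise reduces the information that R carries about S by exactly the entropy of the flip.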