Abstract

Objective

To determine the effects of the adoption of ambulatory electronic health information exchange (HIE) on rates of laboratory and radiology testing and allowable charges.

Design

Claims data from the dominant health plan in Mesa County, Colorado, from 1 April 2005 to 31 December 2010 were matched to HIE adoption data at the provider level. Using mixed effects regression models with the provider quarter as the unit of analysis, we assessed the effect of HIE adoption on testing rates and associated charges.

Results

Claims submitted by 306 providers in 69 practices for 34 818 patients were analyzed. The rate of testing per provider was expressed as tests per 1000 patients per quarter. For primary care providers, the rate of laboratory testing increased over the time span (baseline 1041 tests/1000 patients/quarter, increasing by 13.9 each quarter) and shifted downward with HIE adoption (downward shift of 83, p<0.01). A similar effect was found for specialist providers (baseline 718 tests/1000 patients/quarter, increasing by 19.1 each quarter, with HIE adoption associated with a downward shift of 119, p<0.01). Even so, imputed charges for laboratory tests did not shift downward significantly in either provider group, possibly due to the skewed nature of these data. For radiology testing, HIE adoption was not associated with significant changes in rates or imputed charges in either provider group.

Conclusions

Ambulatory HIE adoption is unlikely to produce significant direct savings through reductions in rates of testing. The economic benefits of HIE may reside instead in other downstream outcomes of better informed, higher quality care.

Background and significance

The Office of the National Coordinator for Health Information Technology has sought to advance secure electronic health information exchange (HIE) to facilitate more ‘coordinated, effective, and efficient care’.1 Given the ever-rising costs of healthcare, policymakers have been interested in the potential for investments in HIE to pay off in efficiency-related savings. A central anticipated benefit of HIE implementation is its potential to reduce unnecessary testing by providing a consolidated, timely, and easily accessible summary of patient information across organizations. Models have suggested enormous potential savings,2 with a projection that payers could realize annual savings of US$3.76 billion in laboratory tests and US$8.04 billion in radiology tests under ideal conditions of interoperable electronic health record use.3 These estimates have been used as part of the justification for investment in HIE by health plans and federal institutions.

However, studies have not demonstrated a consistent beneficial effect of health information technology (including HIE) on rates of test ordering. There is good evidence (with some exceptions)4 that laboratory and radiology test utilization is reduced substantially within institutions (such as medical centers) that implement comprehensive electronic medical records.5–8 However, the evidence for effects of cross-institutional HIE on test utilization is limited and mixed. Studies in emergency departments in Indianapolis, Indiana and Memphis, Tennessee showed that HIE adoption generally did not result in lower overall rates of laboratory and radiology testing, although HIE adoption was associated with reductions in the use of unnecessary neuroimaging for headache9 and overall emergency department-associated charges.10 11 In the ambulatory setting, the adoption of an ‘internal HIE’ by two Boston, Massachusetts hospitals in 2000 was associated with reductions in some laboratory testing rates.12 While overall rates of laboratory testing increased from 1999 to 2004, rates declined for encounters in which the results of recent off-site tests were available through the internal HIE.

The wide-scale adoption of HIE in Mesa County, Colorado from 2005 to 2010 provided a unique opportunity to explore the effects of HIE adoption on ambulatory testing rates more broadly for a well-defined market area and dominant health plan. Data on HIE adoption by ambulatory practitioners and claims data from this insurer were used to determine the effects of HIE adoption on laboratory and radiology testing rates. We hypothesized that HIE adoption would be associated with lower rates of testing and lower associated costs.

Methods

Setting

Mesa County, Colorado is a metropolitan area on the western slope of Colorado composed of the city of Grand Junction and surrounding areas. Mesa County is noted for the collaborative approach members of its medical community have taken to improve the quality and efficiency of local care.13 14 In the 1970s, the county medical society formed an independent practice association (Mesa County Physicians IPA) and local medical providers and business leaders formed Rocky Mountain HMO. The latter, now called Rocky Mountain Health Plans, is the region's dominant insurer (approximately 40% local market share), providing commercial insurance (covering 35% of local commercial lives) as well as managed Medicaid and Medicare supplement programs (covering 72% of local Medicaid beneficiaries and 40% of local Medicare beneficiaries). In 2004, Mesa County Physicians IPA and Rocky Mountain Health Plans sponsored the development of Quality Health Network (QHN), a new, independent regional health information organization.

QHN launched the HIE in 2005. QHN collects, standardizes, and distributes nearly all of Mesa County's laboratory and radiology test results, the vast majority of which are handled by the region's two major hospitals and two large local practices with laboratories certified under the Clinical Laboratory Improvement Amendments of 1988 (CLIA). Practices adopting QHN received electronic access to the QHN system, allowing provider and non-provider users to retrieve test results ‘pushed’ to the practice; pushed results included those for tests ordered by the practice and those forwarded by other practices. Provider users of QHN could also ‘pull’ data by searching a consolidated repository of community test results. The QHN master patient index includes over 540 000 patients who have received care in Mesa County. By 2010, QHN had been adopted by 84 provider groups comprising 351 clinical providers, approximately 85% of Mesa County providers.

Data sources

For this project, Rocky Mountain Health Plans created enrollment and claims data files for patients residing in Mesa County (based on ZIP code) for calendar years 2005 to 2010. By special agreement, QHN linked the claims data to local HIE adoption data. HIE adoption at the practice level was defined by QHN as the date on which the practice began receiving electronic access to results ‘pushed’ to the practice by the QHN system. HIE adoption at the provider level was defined by QHN as the month in which the individual provider logged into the QHN system more than 20 times. In QHN's experience, this threshold distinguished actual clinical use from use associated with initial training. Practice, provider, and patient identifiers were transformed into unique unrelated numbers to create a limited dataset that was provided to the research team for analysis. The study was conducted with approval of the Colorado Multiple Institutional Review Board.
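As an illustration of the provider-level adoption rule, the sketch below derives an adoption month from a provider's login dates. It is a hypothetical reconstruction, not QHN's code: the input format is assumed, and because ‘more than 20 times’ could refer either to a running total of logins or to a single month's count, an assumed `cumulative` flag covers both readings.

```python
from collections import Counter
from datetime import date
from typing import Iterable, Optional, Tuple

# A (year, month) pair identifies a calendar month.
Month = Tuple[int, int]


def provider_adoption_month(
    login_dates: Iterable[date],
    threshold: int = 20,
    cumulative: bool = True,
) -> Optional[Month]:
    """Return the first month in which a provider's logins exceed `threshold`.

    cumulative=True: the month in which the running total of logins first
    exceeds the threshold (the month of login number threshold + 1).
    cumulative=False: the first month whose own login count exceeds it.
    Which reading applies is an assumption; the text says "more than 20 times".
    """
    per_month = Counter((d.year, d.month) for d in login_dates)
    running_total = 0
    for month in sorted(per_month):
        count = per_month[month]
        running_total += count
        reached = running_total if cumulative else count
        if reached > threshold:
            return month
    return None  # never crossed the threshold: treated as a non-adopter
```

Under the cumulative reading, a provider with 10 logins in January and 15 in February would be counted as adopting in February; under the per-month reading, neither month would qualify.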

Creation of analytical dataset, including outcome and independent variables

Each claim record included the date of service; identifiers for the patient receiving the service, the medical provider or organization making the claim, and the primary care provider; the Healthcare Common Procedure Coding System (HCPCS) procedure code; and the place of service code. Duplicate claim records (those with identical values in all fields) were removed. A supplemental dataset linked providers to practices.

Definition of ambulatory visits, laboratory orders, and radiology orders

Only ambulatory claims were included in the analytical dataset. These were defined as claims with place of service codes 11 (office), 22 (outpatient hospital), 71 (state or public health clinic), 72 (rural health clinic), and 81 (independent laboratory).15 Claim records were then categorized based on Berenson–Eggers type of service (BETOS) code categories.16 Claims for visits were defined as claims in BETOS category 1 (evaluation and management), subcategories M1A, M1B, M6, M5C, M5B, Y1, P5A, P5B, P6A, P6B, and Z2. Claims for laboratory tests were defined as claims in BETOS category 4 (tests), subcategories T1A–T1H, excluding claims for routine venipuncture (G0001) and for handling and/or conveyance of specimens for transfer from the physician's office to a laboratory (99000). Claims for radiology tests were defined as all claims in BETOS category 3 (imaging). When multiple claims with different Healthcare Common Procedure Coding System codes were associated with a single episode of screening mammography (ie, multiple screening mammography claims for the same patient on the same day), these were collapsed into a single claim. Following the analytical methods of a related study for electronic medical records,4 claims for advanced radiology were defined as the subset of imaging claims associated with CT, MRI, or positron emission tomography.
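The claim-selection rules can be expressed as a small classifier. This is a minimal sketch assuming a simplified, hypothetical `Claim` record with the place of service and BETOS subcategory already attached; it applies the place-of-service filter, the BETOS groupings, and the two laboratory exclusions listed above, together with the exact-duplicate removal described earlier. Recognizing imaging subcategories by a leading ‘I’ is an assumption about BETOS code layout, and the screening-mammography collapsing and the advanced radiology subset are omitted.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Place of service codes treated as ambulatory (from the text).
AMBULATORY_POS = {"11", "22", "71", "72", "81"}
# BETOS subcategories counted as visits (from the text).
VISIT_BETOS = {"M1A", "M1B", "M6", "M5C", "M5B", "Y1", "P5A", "P5B", "P6A", "P6B", "Z2"}
# BETOS subcategories T1A through T1H counted as laboratory tests.
LAB_BETOS = {f"T1{letter}" for letter in "ABCDEFGH"}
# Excluded from laboratory tests: routine venipuncture, specimen handling.
LAB_EXCLUDED_HCPCS = {"G0001", "99000"}


@dataclass(frozen=True)
class Claim:
    # Hypothetical minimal record; frozen so it is hashable for deduplication.
    patient_id: str
    provider_id: str
    service_date: str
    hcpcs: str
    pos: str
    betos: str


def classify(claim: Claim) -> Optional[str]:
    """Return 'visit', 'lab', 'radiology', or None if the claim is not analyzed."""
    if claim.pos not in AMBULATORY_POS:
        return None
    if claim.betos in VISIT_BETOS:
        return "visit"
    if claim.betos in LAB_BETOS and claim.hcpcs not in LAB_EXCLUDED_HCPCS:
        return "lab"
    if claim.betos.startswith("I"):  # assumption: imaging subcategories start with "I"
        return "radiology"
    return None


def deduplicate(claims: Iterable[Claim]) -> List[Claim]:
    """Drop exact duplicates (identical values in every field), keeping first occurrences."""
    return list(dict.fromkeys(claims))
```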

Attribution of laboratory and radiology claims to the ordering provider

Claims for laboratory and radiology tests indicated the provider making the claim (eg, the pathologist or radiologist) but not the ordering provider. For this analysis, the ordering provider was inferred as follows: (1) the test was attributed to the provider associated with the ambulatory visit closest in time to the test in the previous 0–60 days; (2) if no ambulatory visit claim was made in this interval, the test was attributed to the provider associated with the ambulatory visit closest in time to the test in the subsequent 1–30 days; (3) if no ambulatory visit claim was made in this interval, the test was attributed to the primary care provider (PCP) listed in the claim for the test; (4) if the PCP was not defined in the claims dataset, the test could not be attributed to an ordering provider. In the first and second steps, if more than one provider made an office visit claim on the same day, the test was attributed to the provider of the first such office visit claim listed in the dataset for that day. Only claims associated with ambulatory medical providers in Mesa County were included in the analytical dataset. Claims for chiropractors, ophthalmologists, optometrists, and physical therapists were excluded due to their limited use of laboratory and radiology tests.
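The four-step attribution rule maps naturally onto a function that scans one patient's visit claims. The sketch below is illustrative only; the `Visit` record and the assumption that visits arrive in dataset order are ours. Ties between visits the same number of days away are broken by dataset position, which mirrors the ‘first office visit claim in the dataset for that day’ rule.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Visit:
    # Hypothetical record for one ambulatory visit claim of the patient.
    provider_id: str
    service_date: date


def _closest_provider(candidates: List[Tuple[int, int, Visit]]) -> str:
    # Candidates are (days_apart, dataset_position, visit): pick the closest
    # visit, and on equal distance the one listed first in the dataset.
    _, _, visit = min(candidates, key=lambda c: (c[0], c[1]))
    return visit.provider_id


def attribute_test(test_date: date, visits: Sequence[Visit], pcp_id: Optional[str]) -> Optional[str]:
    """Infer the ordering provider for one test claim (steps 1-4 in the text)."""
    # Step 1: closest visit 0-60 days before (or on) the test date.
    before = [((test_date - v.service_date).days, i, v) for i, v in enumerate(visits)
              if 0 <= (test_date - v.service_date).days <= 60]
    if before:
        return _closest_provider(before)
    # Step 2: closest visit 1-30 days after the test date.
    after = [((v.service_date - test_date).days, i, v) for i, v in enumerate(visits)
             if 1 <= (v.service_date - test_date).days <= 30]
    if after:
        return _closest_provider(after)
    # Steps 3-4: fall back to the PCP on the test claim; None means unattributable.
    return pcp_id
```

For a test on 15 February preceded by visits on 1 January and two visits on 10 February, the test goes to whichever 10 February visit appears first in the dataset.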

Outcome and independent variables

Outcomes and independent variables were defined at the level of the provider quarter. Due to noted anomalies in claims data from the first quarter of 2005, the dataset included claims from the second quarter of 2005 to the fourth quarter of 2010.

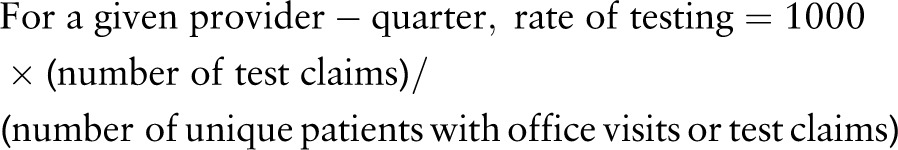

The first set of primary outcome measures were rates of claims for laboratory, radiology, and advanced radiology tests. For each provider quarter, rates were defined as the number of test claims per 1000 unique patients cared for. As the claims dataset did not indicate panels of patients for providers, the denominator (unique patients cared for) was defined as the number of unique patients with an ambulatory office visit, laboratory test, or radiology claim:

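$$\text{Testing rate}_{p,q} = 1000 \times \frac{\text{number of test claims attributed to provider } p \text{ in quarter } q}{\text{number of unique patients with an office visit, laboratory, or radiology claim for provider } p \text{ in quarter } q}$$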

Observations were censored for provider quarters in which the denominator was less than 15.

The second set of outcome measures were charges for laboratory, radiology, and advanced radiology tests per 1000 patients cared for per provider quarter. To determine charges, standard 2010 Medicare allowable charges were imputed for every laboratory and radiology test.17
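Imputing charges from a single fee schedule turns each provider quarter's list of tests into a dollar total that reflects what was ordered rather than what was paid. In this sketch the fee schedule is a hypothetical mapping from procedure code to allowable charge; the codes and prices in the test values are placeholders, not 2010 Medicare amounts.

```python
from typing import Iterable, Mapping


def charges_per_1000(
    test_codes: Iterable[str], fee_schedule: Mapping[str, float], n_patients: int
) -> float:
    """Sum imputed allowable charges for one provider quarter, per 1000 patients.

    A code missing from the schedule raises KeyError rather than being
    silently priced at zero.
    """
    if n_patients <= 0:
        raise ValueError("n_patients must be positive")
    total = sum(fee_schedule[code] for code in test_codes)
    return 1000 * total / n_patients
```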

The primary explanatory variable was a time-varying covariate indicating, for each quarter of data, whether the provider was pre- or post-adoption of HIE (with provider adoption of HIE as defined above). In the model, ‘provider adopter’ was coded ‘no’ for every quarter up to and including the quarter containing the month of provider adoption, and ‘yes’ for each subsequent quarter.
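The time-varying adoption indicator depends on the chronological quarter numbering used for the time covariate (0 for the second quarter of 2005 through 22 for the fourth quarter of 2010). A sketch of both, assuming provider adoption is recorded as a (year, month) pair:

```python
from typing import Optional, Tuple


def quarter_index(year: int, month: int) -> int:
    """Chronological quarter: 0 = second quarter 2005, 22 = fourth quarter 2010."""
    calendar_quarter = (month - 1) // 3  # 0..3 within the year
    return (year - 2005) * 4 + calendar_quarter - 1


def provider_adopter(quarter: int, adoption_month: Optional[Tuple[int, int]]) -> bool:
    """'No' through the quarter containing the adoption month, 'yes' afterwards.

    Providers who never adopted are 'no' in every quarter.
    """
    if adoption_month is None:
        return False
    return quarter > quarter_index(*adoption_month)
```

A provider who adopted in March 2008 (quarter 11) is therefore coded as a non-adopter in quarter 11 and as an adopter from quarter 12 onward.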

Five additional independent variables were included: (1) categorization of the age of patients seen in that provider quarter, based on whether the mean patient age was 0–19, 20–54, or 55 years and older; (2) percentage of female patients; (3) proportion of ‘transitional’ encounters (encounters in which the preceding encounter in the community was to a different provider);18 (4) primary care (general internal medicine, family medicine, or pediatrics) provider or not; (5) chronological quarter, ranging from 0 (second quarter 2005) to 22 (fourth quarter 2010). Practice adoption, defined as the quarter after the practice began participation in the QHN system (receiving test results from QHN and being able to review results obtained from other practices), was a candidate covariate but was removed from the final model because it caused over-adjustment. Results of the model using practice adoption as the primary explanatory variable rather than provider adoption are included in the supplementary appendix (available online only).
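Two of these covariates involve small derivations: the mean-age category and the proportion of transitional encounters. The sketch below assumes a chronologically sorted, community-wide list of encounters tagged with a quarter index, so that a patient's preceding encounter can fall in an earlier quarter. Treating a patient's first observed encounter as non-transitional and using half-open age cut points (below 20, below 55) are our assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def transitional_share(
    encounters: Sequence[Tuple[str, str, int]]
) -> Dict[Tuple[str, int], float]:
    """Proportion of transitional encounters per (provider, quarter).

    `encounters` are (patient_id, provider_id, quarter) triples for the whole
    community in chronological order. An encounter is transitional when the
    same patient's preceding encounter was with a different provider.
    """
    last_provider: Dict[str, str] = {}
    counts: Dict[Tuple[str, int], List[int]] = defaultdict(lambda: [0, 0])
    for patient, provider, quarter in encounters:
        previous = last_provider.get(patient)
        key = (provider, quarter)
        counts[key][0] += int(previous is not None and previous != provider)
        counts[key][1] += 1  # total encounters for this provider quarter
        last_provider[patient] = provider
    return {key: trans / total for key, (trans, total) in counts.items()}


def age_category(mean_age: float) -> str:
    """Bucket a provider quarter by the mean age of patients seen."""
    if mean_age < 20:
        return "0-19"
    if mean_age < 55:
        return "20-54"
    return "55+"
```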

Statistical analysis

Descriptive statistics including means, SD, proportions, and frequency distributions were generated for patient and practice characteristics. To assess the effect of provider adoption of HIE on testing rates, we employed a general linear mixed model with random coefficients. Costs were analyzed using generalized linear mixed effects models (gamma distribution with a log link function).19

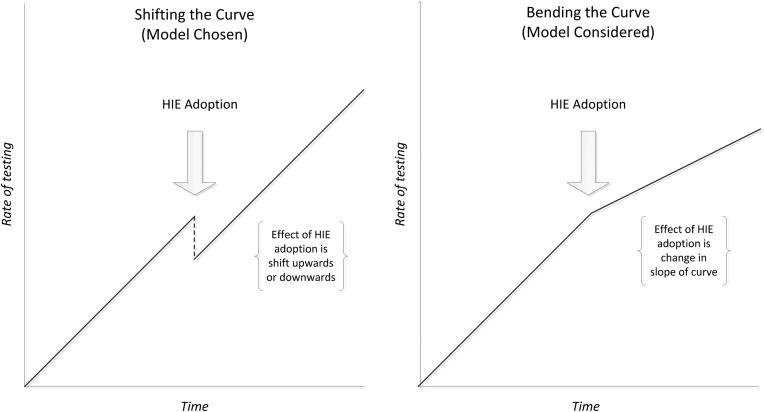

HIE adoption, the primary independent variable, was included as a time-varying covariate in all models. In each model, a ‘pre-adoption’ linear trend (slope) in the rate or cost of testing was established. We explored two variants of the models: one in which provider adoption raised or lowered rates or costs post-adoption without changing the slope of the linear trend (‘shifting the curve’), and another in which provider adoption changed the slope of the linear trend (‘bending the curve’) (figure 1). Using the Akaike information criterion (AIC), the former approach (shifting the curve) was found to fit the data better, and this model was therefore employed for our analyses. In addition, two interaction terms (time×provider type, HIE adoption×provider type) were included in all models to obtain separate estimates for primary care and specialty care providers. Estimates were obtained for slopes (change per quarter) and for the shift that occurred at the time of HIE adoption for both primary care and specialty care providers. For rates, these estimates do not depend on calendar time because the model is linear (general linear mixed model). For costs, because we employed a generalized linear mixed model (gamma distribution with a log link), the back-transformed dollar values of slope and shift vary slightly with calendar time. As the midpoint of adoption was approximately the 10th to 11th quarter, we report slope and shift at that time point in actual dollars rather than log costs. Data were analyzed using PROC MIXED or PROC GLIMMIX in SAS 9.3.
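To make the ‘shifting’ versus ‘bending’ distinction concrete, the sketch below writes out the fixed-effect part of each variant and the AIC used to choose between them. It ignores random effects and the other covariates, and treats the Table 3 ‘baseline’ as the intercept at quarter 0 (an assumption); the `slope_change` of the bending variant would be an estimated parameter, not a value reported in this study.

```python
from typing import Optional


def shift_model(t: int, adopted: bool, intercept: float, slope: float, shift: float) -> float:
    """'Shifting the curve': adoption moves the line up or down; slope unchanged."""
    return intercept + slope * t + (shift if adopted else 0.0)


def bend_model(t: int, adoption_quarter: Optional[int], intercept: float,
               slope: float, slope_change: float) -> float:
    """'Bending the curve': after adoption the slope changes by `slope_change`."""
    quarters_since = 0 if adoption_quarter is None else max(0, t - adoption_quarter)
    return intercept + slope * t + slope_change * quarters_since


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion; the model with the lower value is preferred."""
    return 2 * n_params - 2 * log_likelihood


# Primary care laboratory estimates from Table 3 (fixed effects only).
before = shift_model(10, False, 1040.5, 13.1, -83.4)
after = shift_model(10, True, 1040.5, 13.1, -83.4)
print(f"{before:.1f} vs {after:.1f} tests/1000 patients at quarter 10")
# 1171.5 vs 1088.1 tests/1000 patients at quarter 10
```

In the shift model the 83.4-test gap between adopters and non-adopters is the same at every quarter; in the bending model the gap would instead widen with each quarter after adoption.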

Figure 1.

Models chosen and considered for evaluation of the effect of health information exchange adoption on rates of testing.

Results

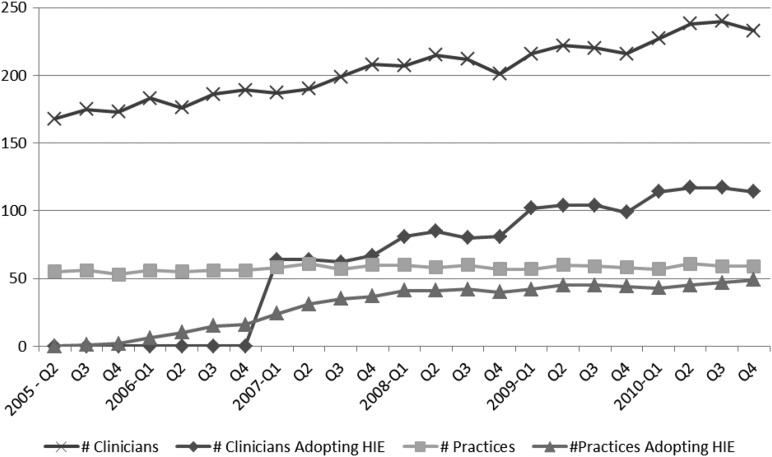

The final analytical dataset included claims from 306 ambulatory providers in 69 practices submitting 1 013 962 ambulatory claims (528 118 office visits, 358 324 laboratory claims, and 127 520 radiology claims) for 34 818 unique patients from the second quarter of 2005 to the fourth quarter of 2010. Characteristics of patients in the dataset are shown in table 1 and characteristics of providers are shown in table 2. The relatively high proportion of pediatric patients in this dataset is notable in comparison to analyses that are limited to Medicare data. HIE adoption rose steadily from 2005 to 2010, with 46% of providers adopting HIE by the end of 2010 (figure 2).

Table 1.

Characteristics of patients and claims in analytical dataset

| Characteristic | N, % |
|---|---|
| Patients | 34818 |
| Female (N, %) | 19747, 56.7% |
| Age range, years (N, %) | |
| 0–18 | 11464, 32.9% |
| 19–34 | 5639, 16.2% |
| 35–49 | 5184, 14.9% |
| 50–64 | 5836, 16.8% |
| 65–80 | 4438, 12.8% |
| 80+ | 2257, 6.5% |
| Claims | 1013962 |
| Line of business (N, %) | |
| Commercial | 415148, 40.9% |
| Medicaid | 271121, 26.7% |
| Medicare | 321814, 31.7% |
| Dual-eligible | 5879, 0.6% |

Table 2.

Characteristics of providers in analytical dataset

| Characteristic | Primary care | Specialists |
|---|---|---|
| No of providers | 146 | 160 |
| No of practices | 26 | 43 |
| No of providers/practice (mean, range) | 5.6, 1–49 | 3.7, 1–20 |
| Patient load (unique patients seen over the entire course of dataset) per provider (mean, range) | 595, 39–2504 | 347, 18–1432 |
| Ever adopted QHN (N, %) | 95, 65% | 46, 29% |

QHN, Quality Health Network.
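The adoption figures in Table 2 and the claim counts reported in the Results text can be cross-checked with a few lines of arithmetic (all counts copied from this article):

```python
# Counts from Table 2 (ever adopted QHN, all providers).
adopters = {"primary care": 95, "specialists": 46}
providers = {"primary care": 146, "specialists": 160}

for group in providers:
    print(f"{group}: {adopters[group] / providers[group]:.0%}")
# primary care: 65%
# specialists: 29%

overall = sum(adopters.values()) / sum(providers.values())
print(f"overall: {sum(adopters.values())}/{sum(providers.values())} = {overall:.0%}")
# overall: 141/306 = 46%

# Claim counts reported in the Results text.
claims = {"office visits": 528118, "laboratory": 358324, "radiology": 127520}
print(sum(claims.values()))  # 1013962
```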

Figure 2.

Adoption of health information exchange in Mesa County, CO, 2005-2010.

In our primary models, secular trends (slopes) were distinguished from shifts related to HIE adoption (shift in y-intercept) for the quantity of tests ordered (table 3) and the costs of (allowable charges for) those tests (table 4). Overall, the only significant effect of HIE adoption was a shift downward in the quantity of laboratory tests:

Laboratory tests: For both primary care and specialty care providers the secular trend was an increase in both quantity and cost. With HIE adoption there was a significant shift downward in quantity but no significant shift in cost.

Radiology tests: For both primary care and specialty care providers there was no significant secular trend in either quantity or cost. With HIE adoption there was no significant shift in either quantity or cost.

Advanced radiology tests: For primary care providers there was no significant secular trend in quantity or cost, while for specialty care providers the secular trend was an increase in both quantity and cost. For both primary and specialty care providers, with HIE adoption, there was no significant shift in quantity or cost.

Table 3.

Primary models of testing rates

| Outcome | Specialty | Baseline rates | Change per quarter | p Value | Shift with HIE adoption | p Value |
|---|---|---|---|---|---|---|
| Laboratory testing rate* | Primary care | 1040.5 | 13.1 | <0.0001 | −83.4 | 0.0089 |
| | Specialty care | 717.9 | 19.1 | <0.0001 | −119.0 | 0.0072 |
| Radiology testing rate* | Primary care | 290.4 | −1.3 | 0.3601 | −1.0 | 0.9412 |
| | Specialty care | 600.7 | −2.3 | 0.0906 | −26.5 | 0.1740 |
| Advanced radiology testing rate* | Primary care | 32.8 | −0.05 | 0.8657 | 4.3 | 0.4081 |
| | Specialty care | 81.4 | 1.0 | 0.0007 | −2.4 | 0.7219 |

*Testing rate is defined as the number of tests ordered per provider per quarter per 1000 patients cared for.

HIE, health information exchange.

Table 4.

Primary models of overall testing costs

| Outcome | Specialty | Baseline costs | Range of change in cost per quarter (Q7–8, Q20–21)* | Change per quarter at midpoint (Q10–11) | p Value | Range of shift with HIE adoption (Q7, Q20)† | Shift with HIE adoption at midpoint (Q10) | p Value |
|---|---|---|---|---|---|---|---|---|
| Laboratory costs* | Primary care | $16769 | $121, $138 | $125.0 | <0.0001 | $318, $364 | $327.7 | 0.4586 |
| | Specialty care | $16486 | $178, $216 | $185.6 | <0.0001 | $54.9, $67.8 | $57.3 | 0.9176 |
| Radiology costs* | Primary care | $36282 | −$19, −$19 | −$19 | 0.6990 | $1036, $1024 | $1033 | 0.2118 |
| | Specialty care | $75893 | $136, $142 | $138 | 0.1022 | −$250, −$266 | −$254 | 0.9019 |
| Advanced radiology costs* | Primary care | $16205 | −$29, −$29 | −$29 | 0.4393 | $948, $920 | $941 | 0.1536 |
| | Specialty care | $49573 | $162, $171 | $163 | 0.0429 | −$366, −$397 | −$374 | 0.8409 |

*Costs are defined as the sum of allowable charges for the tests ordered per provider per quarter per 1000 patients cared for.

†For costs, coefficients are invariant but the relationship is non-linear so back-transformed estimates (ie, US dollars) differ slightly across quarters.

HIE, health information exchange.
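The footnote on back-transformed estimates follows from the log link: a constant coefficient multiplies expected cost by a fixed factor, so its dollar equivalent grows or shrinks with the cost level at a given quarter. The sketch below uses hypothetical coefficients (not the study's estimates) to show dollar slopes and shifts drifting across quarters while the ratio of adopter to non-adopter cost stays fixed at exp(b_adopt).

```python
import math


def expected_cost(t: int, adopted: bool, b0: float, b_time: float, b_adopt: float) -> float:
    """Mean cost under a log link: exp(b0 + b_time * t + b_adopt * adopted)."""
    return math.exp(b0 + b_time * t + b_adopt * (1 if adopted else 0))


def dollar_slope(t: int, b0: float, b_time: float, b_adopt: float, adopted: bool = False) -> float:
    """Dollar change from quarter t to t + 1 on the original (US$) scale."""
    return (expected_cost(t + 1, adopted, b0, b_time, b_adopt)
            - expected_cost(t, adopted, b0, b_time, b_adopt))


def dollar_shift(t: int, b0: float, b_time: float, b_adopt: float) -> float:
    """Dollar difference between adopters and non-adopters at quarter t."""
    return (expected_cost(t, True, b0, b_time, b_adopt)
            - expected_cost(t, False, b0, b_time, b_adopt))


# Hypothetical coefficients on the log scale (illustration only).
B0, B_TIME, B_ADOPT = math.log(16000.0), 0.007, 0.02
for q in (7, 10, 20):
    print(q, round(dollar_slope(q, B0, B_TIME, B_ADOPT), 1),
          round(dollar_shift(q, B0, B_TIME, B_ADOPT), 1))
```

With a positive time coefficient, both the dollar slope and the dollar shift are larger at quarter 20 than at quarter 7, which is why Tables 4 and 5 report ranges alongside the midpoint values.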

The observation that HIE adoption was associated with a downward shift in the quantity of laboratory tests ordered but no change in costs raised the possibility that HIE adoption was associated with ordering fewer but more expensive laboratory tests. To assess this, we performed a secondary analysis. Using the same analytical approach as the primary cost models, new models defined the dependent variable as the mean unit cost of testing (derived by dividing the total cost of testing by the number of tests ordered). For both primary care and specialty care providers, HIE adoption was not associated with a significant shift in the mean unit cost of laboratory tests (table 5). Incidentally, for primary care providers the mean unit cost of advanced radiology tests shifted upward after HIE adoption (US$226.4, p<0.0001).
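The reasoning behind this secondary analysis is simple arithmetic: if total charges stay flat while the number of tests falls, the mean unit cost must rise. A minimal sketch with hypothetical numbers for one provider quarter:

```python
from typing import Optional


def mean_unit_cost(total_cost: float, n_tests: int) -> Optional[float]:
    """Mean allowable charge per test for one provider quarter (None if no tests)."""
    return total_cost / n_tests if n_tests else None


# Hypothetical provider quarter: fewer tests after adoption, same total charges.
before = mean_unit_cost(1600.0, 100)  # 16.0 per test
after = mean_unit_cost(1600.0, 90)    # about 17.8 per test
```

Table 5 shows no significant upward shift of this kind for laboratory tests, which argues against the fewer-but-pricier explanation.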

Table 5.

Secondary models of mean unit testing costs

| Outcome | Specialty | Baseline unit cost | Change per quarter at midpoint (Q10–11)* | p Value | Shift with HIE adoption at midpoint (Q10)* | p Value |
|---|---|---|---|---|---|---|
| Mean unit cost of laboratory tests | Primary care | $16.1 | −$0.1 | 0.3167 | $0.3 | 0.6184 |
| | Specialty care | $25.1 | −$0.15 | <0.0001 | $0.9 | 0.1979 |
| Mean unit cost of radiology tests | Primary care | $118.2 | $0.4 | 0.0326 | $3.4 | 0.2077 |
| | Specialty care | $137.6 | $0.6 | <0.0001 | $1.3 | 0.7019 |
| Mean unit cost of advanced radiology tests | Primary care | $1082.4 | $0.4 | 0.8978 | $226.4 | <0.0001 |
| | Specialty care | $1167.1 | $16.2 | <0.0001 | $63 | 0.4500 |

*Differences in slope and shift estimates were less than US$3 at lower (Q7–8, Q7) and upper (Q20–21, Q20) ranges.

HIE, health information exchange.

Discussion

In the ambulatory setting, provider adoption of HIE in Mesa County, Colorado was associated with a significant downward shift in laboratory testing rates but no significant shifts in radiology testing rates or imputed costs for either laboratory or radiology tests. At first glance, it may seem inconsistent that the laboratory testing rate could shift downward significantly with HIE adoption without a corresponding significant shift in either the unit or total costs of laboratory testing. However, the analytical models used for testing rates differed from those used for testing costs. Testing rates could be analyzed with a general linear mixed model assuming normally distributed errors, whereas the greater skew and variability of testing costs required a generalized linear mixed model with a gamma distribution and log link. Because these distributional assumptions affect the calculation of statistical significance, it is not incongruous for the statistical significance of shifts in testing rates to differ from that of shifts in testing costs.

While the reduction in laboratory testing rates confirms that HIE adoption can result in more efficient care, the observed magnitude of benefit appears to be far lower than that projected in early economic models.2 3 The observed reduction in laboratory testing rates is consistent with the reduction observed by Hebel et al12 when off-site laboratory tests were available through HIE. More generally, our results are similar to those of other studies that did not show consistent reductions in overall rates of testing with HIE10 11 or of costs of testing.9 In contrast to the cross-sectional analysis of National Ambulatory Medical Care Survey (NAMCS) data by McCormick and colleagues,4 which found that ambulatory access to computerized results was associated with an increase in testing rates, in our longitudinal analysis HIE adoption by providers was not associated with a significant increase in testing rates.

Our analytical methods differed from those of previous studies but were rigorous and appropriate for the analysis of ambulatory claims data. An important distinction between this analysis and others that have focused on the emergency department setting10 11 is the use of the provider quarter as the unit of analysis, rather than the patient encounter. While tests can reasonably be attributed to emergency department encounters, this is more difficult in the outpatient setting, where follow-up or standing orders may be placed outside of a face-to-face encounter. Aggregation to the provider level was therefore necessary in the ambulatory setting. Defining ‘adoption of HIE’ rather than ‘use of HIE’ as the primary explanatory variable was another distinction of the present study. This choice was made partly because of the difficulty of linking tests to encounters, and partly because of the inability to distinguish active (pull) from passive (push) HIE use in the QHN system. It also avoids analytical problems related to confounding by indication observed in other studies (eg, HIE use being associated with higher rates of testing because HIE tends to be used in more complicated patients).20 Using ‘adoption’ as the primary explanatory variable could, however, mask differential effects of varying intensity of HIE use among diverse adopters.

The limitations of this analysis include a restricted ability of the model to differentiate effects of HIE adoption from other secular trends and possible selection bias related to the timing of HIE adoption. Strengths include the study of an HIE during a period of wide-scale adoption, the ability to combine claims and HIE administrative data for analysis at the broad community level, and careful methods to account for the effects of different patient panel characteristics and the proportion of ‘transitional’ encounters.

Overall, this analysis suggests that reductions in the rates of testing in the ambulatory setting are unlikely to result in substantial short-term cost savings. While these results do not rule out a significant association between select instances of ambulatory HIE use and reductions in ambulatory test ordering, they do address the key policy questions, ‘What magnitude of changes in ambulatory testing rates can be expected with robust community-wide HIE adoption?’ and, ‘How likely are health plans to enjoy overall cost savings through reductions in ambulatory testing rates?’ Even so, it is important to note that HIE adoption may result in efficiencies that transcend effects on testing rates. Other studies, for instance, have observed cost savings associated with HIE adoption that may be related to improved care coordination.10 11 21 These benefits may be the most promising avenue for future research on the effects of HIE adoption.

Footnotes

Acknowledgments: The Quality Health Network team provided invaluable assistance in obtaining, linking, de-identifying, and clarifying data, including claims data provided by Rocky Mountain Health Plans.

Contributors: SER and TAR developed the initial conception and design of the project. WGL was responsible for the acquisition of data. All listed authors (SER, TAR, WGL, JMD, AML and DEN) made substantial contributions to the subsequent analysis and interpretation of data. SER drafted the initial manuscript. All listed authors made substantial revisions and have approved the version that has been submitted for publication.

Funding: This work was supported by the Agency for Healthcare Research and Quality (AHRQ), grant number 5R21HS018749. AHRQ reviewed design and conduct of the study, including analysis and interpretation of the data and manuscript preparation. Previous approval of the manuscript was not required by AHRQ.

Competing interests: In addition to his ongoing appointment with University of Colorado, SER is now employed by Health Language Incorporated (HLI), which provides medical terminology services. The reported analysis was developed, implemented, and largely completed before SER joined HLI, and HLI has no direct financial interest in the outcomes of the analysis. TAR, WGL, JMD, AML, and DEN have no competing interests to report.

Ethics approval: The study was conducted with approval of the Colorado Multiple Institutional Review Board.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: The data used for this analysis will be maintained as per federal requirements.

References

1. Williams C, Mostashari F, Mertz K, et al. From the Office of the National Coordinator: the strategy for advancing the exchange of health information. Health Aff (Millwood) 2012;31:527–36.
2. Hillestad R, Bigelow J, Bower A, et al. Can electronic medical record systems transform health care? Potential health benefits, savings, and costs. Health Aff (Millwood) 2005;24:1103–17.
3. Walker J, Pan E, Johnston D, et al. The value of health care information exchange and interoperability. Health Aff (Millwood) 2005;Suppl Web Exclusives:W5–10 to W5–18.
4. McCormick D, Bor DH, Woolhandler S, et al. Giving office-based physicians electronic access to patients’ prior imaging and lab results did not deter ordering of tests. Health Aff (Millwood) 2012;31:488–96.
5. Stair TO. Reduction of redundant laboratory orders by access to computerized patient records. J Emerg Med 1998;16:895–7.
6. Wilson GA, McDonald CJ, McCabe GP Jr. The effect of immediate access to a computerized medical record on physician test ordering: a controlled clinical trial in the emergency room. Am J Public Health 1982;72:698–702.
7. Tierney WM, McDonald CJ, Martin DK, et al. Computerized display of past test results. Effect on outpatient testing. Ann Intern Med 1987;107:569–74.
8. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med 1999;106:144–50.
9. Bailey JE, Wan JY, Mabry LM, et al. Does health information exchange reduce unnecessary neuroimaging and improve quality of headache care in the emergency department? J Gen Intern Med 2012;28:176–83.
10. Overhage JM, Dexter PR, Perkins SM, et al. A randomized, controlled trial of clinical information shared from another institution. Ann Emerg Med 2002;39:14–23.
11. Frisse ME, Johnson KB, Nian H, et al. The financial impact of health information exchange on emergency department care. J Am Med Inform Assoc 2012;19:328–33.
12. Hebel E, Middleton B, Shubina M, et al. Bridging the chasm: effect of health information exchange on volume of laboratory testing. Arch Intern Med 2012;172:517–19.
13. Thorson M, Brock J, Mitchell J, et al. Grand Junction, Colorado: how a community drew on its values to shape a superior health system. Health Aff (Millwood) 2010;29:1678–86.
14. Gawande A. The cost conundrum. New Yorker 2009:36–44. PMID: 19663044.
15. Centers for Medicare & Medicaid Services. Place of Service Code Set. [cited 6/3/2012]; http://www.cms.gov/Medicare/Coding/place-of-service-codes/Place_of_Service_Code_Set.html
16. Centers for Medicare & Medicaid Services. Berenson–Eggers Type of Service (BETOS) Codes. [cited 6/3/2012]; http://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/MedicareFeeforSvcPartsAB/downloads/BETOSDescCodes.pdf
17. Trailblazer Health Enterprises LLC. Medicare Fee Schedule. [cited 6/2/2012]; Available from: http://www.trailblazerhealth.com/Tools/Fee%20Schedule/MedicareFeeSchedule.aspx?
18. Rudin RS, Salzberg CA, Szolovits P, et al. Care transitions as opportunities for clinicians to use data exchange services: how often do they occur? J Am Med Inform Assoc 2011;18:853–8.
19. Kilian R, Matschinger H, Loeffler W, et al. A comparison of methods to handle skew distributed cost variables in the analysis of the resource consumption in schizophrenia treatment. J Ment Health Policy Econ 2002;5:21–31.
20. Vest JR. Health information exchange and healthcare utilization. J Med Syst 2009;33:223–31.
21. Sridhar S, Brennan PF, Wright SJ, et al. Optimizing financial effects of HIE: a multi-party linear programming approach. J Am Med Inform Assoc 2012;19:1082–8.