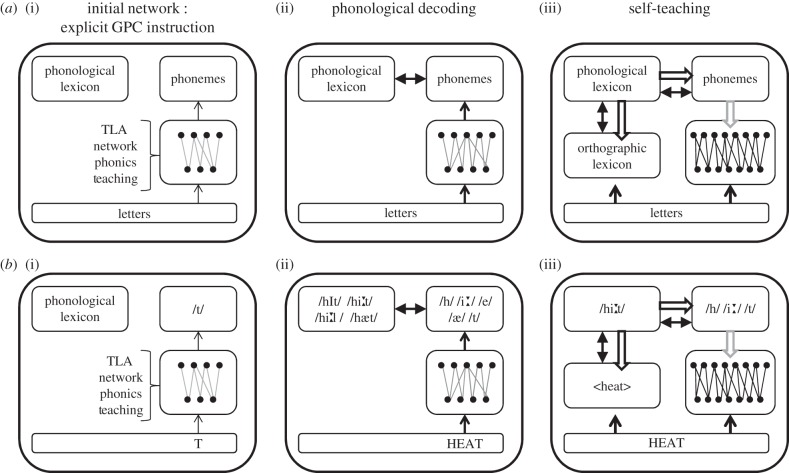

Figure 1.

(a,b) Illustration of the phonological decoding and self-teaching mechanisms in the context of the CDP model [13]. After initial explicit teaching on a small set of grapheme–phoneme correspondences (GPCs), for example T→/t/ (i), the network can decode novel words, for example HEAT (ii), which already has a representation in the phonological lexicon. If decoding activates a word in the phonological lexicon (here, the correct word /hi:t/ is more active than its competitors), an orthographic entry (<heat>) is created, and the phonology of the ‘winner’ (/hi:t/) serves as an internally generated teaching signal (grey arrows) that strengthens the weights of the TLA network (iii).
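The self-teaching loop in panels (i) to (iii) can be illustrated with a toy sketch. It is not the CDP implementation. It assumes a single grapheme→phoneme weight matrix shared across slots and trained with the delta rule, pre-segmented graphemes aligned one-to-one with phonemes, and lexical competition scored as the summed activation of each candidate's phonemes. It also requires a unique winner before learning occurs. The class and method names, the phoneme codes (e.g. "I" for /ɪ/), the learning rate and the toy lexicon are all hypothetical.

```python
import numpy as np

# Hypothetical toy inventories; "I" stands in for /ɪ/.
GRAPHEMES = ["h", "ea", "t", "b"]
PHONEMES = ["h", "i:", "I", "t", "b"]


def one_hot(unit, inventory):
    """Localist code for a single grapheme or phoneme."""
    v = np.zeros(len(inventory))
    v[inventory.index(unit)] = 1.0
    return v


class SelfTeachingReader:
    """Toy stand-in for a sublexical network plus self-teaching (not the CDP code)."""

    def __init__(self, lr=0.3):
        # One grapheme->phoneme weight matrix reused for every slot (simplification).
        self.W = np.zeros((len(PHONEMES), len(GRAPHEMES)))
        self.lr = lr
        self.orthographic_lexicon = {}  # spelling -> word it was linked to

    def decode(self, graphemes):
        """Phonological decoding: phoneme activations for each grapheme slot."""
        return [self.W @ one_hot(g, GRAPHEMES) for g in graphemes]

    def train(self, graphemes, phonemes):
        """Delta-rule update of the weights toward a target phonology."""
        for g, p in zip(graphemes, phonemes):
            x = one_hot(g, GRAPHEMES)
            error = one_hot(p, PHONEMES) - self.W @ x
            self.W += self.lr * np.outer(error, x)

    def read_novel(self, spelling, graphemes, phonological_lexicon):
        """Decode, let lexical candidates compete, then self-teach on the winner."""
        acts = self.decode(graphemes)
        scores = {
            word: sum(a[PHONEMES.index(p)] for a, p in zip(acts, phon))
            for word, phon in phonological_lexicon.items()
            if len(phon) == len(graphemes)
        }
        ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
        # No learning if nothing matches or the top two candidates are tied.
        if not ranked or (len(ranked) > 1 and np.isclose(ranked[0][1], ranked[1][1])):
            return None
        winner = ranked[0][0]
        self.orthographic_lexicon[spelling] = winner  # create orthographic entry
        self.train(graphemes, phonological_lexicon[winner])  # internal teaching signal
        return winner


# (i) explicit teaching on a few GPCs
reader = SelfTeachingReader()
for g, p in [("h", "h"), ("ea", "i:"), ("t", "t"), ("b", "b")]:
    reader.train([g], [p])

# (ii) decode a novel spelling against a pre-existing phonological lexicon
lexicon = {"heat": ["h", "i:", "t"], "hit": ["h", "I", "t"], "beat": ["b", "i:", "t"]}
print(reader.read_novel("heat", ["h", "ea", "t"], lexicon))  # prints heat
```

In this sketch HEAT scores higher than its competitors HIT and BEAT because every one of its phonemes receives some activation. Step (iii) then raises each of the three GPC weights from 0.3 to 0.51. Requiring a unique winner is one simple way to keep the learner from training on an ambiguous decoding.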