Abstract

Missing data are a common problem in longitudinal data analysis. Two types of missing patterns are generally considered in the statistical literature: monotone and non-monotone missing data. Non-monotone missing data occur when study participants intermittently miss scheduled visits, while monotone missing data can arise from discontinued participation, loss to follow-up, and mortality. Although many novel statistical approaches have been developed to handle missing data in recent years, few methods provide inference that handles both types of missing data simultaneously. In this article, a latent random effects model is proposed to analyze longitudinal outcomes with both monotone and non-monotone missingness in the context of missing not at random (MNAR). Another significant contribution of this paper is a new computational algorithm for latent random effects models. To reduce the computational burden of the high dimensional integration in latent random effects models, the algorithm uses a new adaptive quadrature approach in conjunction with a Taylor series approximation of the likelihood function to simplify the E-step computation in the EM algorithm. A simulation study is performed, and data from the Scleroderma lung study are used to demonstrate the effectiveness of this method.

Keywords: Adaptive quadrature, Missing not at random, Joint model, Scleroderma study

1 Introduction

Longitudinal study designs are frequently used in clinical studies to monitor disease progression and treatment efficacy over time. One of the most common problems in longitudinal data analysis is missing data. During the course of a study, the outcomes of interest can be missing due to subjects’ non-response, missed visits, dropout, death and other reasons. There is a rich statistical literature on the analysis of missing data1. If the missingness is independent of both the observed and the unobserved data, the missing mechanism is missing completely at random (MCAR). If, given the observed data, the missingness is independent of the unobserved data, the mechanism is missing at random (MAR). Under MCAR and MAR, the missingness is considered ‘ignorable’, and likelihood methods provide valid inference without modeling the missing mechanism. However, in practice it may be difficult to justify the independence assumptions of MCAR or MAR, and we need to consider missing not at random (MNAR), where the missing probability depends on unobserved data. For example, in our motivating example of the Scleroderma lung study, about 60% of patient dropouts are due to death and treatment failure, which are likely related to the lack of treatment efficacy.

In the statistical literature, two types of missing data patterns are generally considered2. The first type is called ‘intermittently missing’ or ‘non-monotone missing’, where a subject may miss particular visits during the course of study follow-up and return at later scheduled visits. In contrast, ‘monotone missing’ data arise when a subject leaves the study at some point and never returns. Most statistical models focus on one type of missing data pattern. For example, to handle non-monotone and non-ignorable missing data3, a full likelihood approach has been used to specify the joint likelihood of the outcome and the missing indicator. Others4,5 suggested the use of the pseudo likelihood approach for the analysis of non-monotone missing data. Separately, different methods6 have been developed to handle monotone missing data. In reality, we often observe both types of missing patterns simultaneously in longitudinal studies.

In this paper we are interested in making statistical inference with both monotone and non-monotone missing data in longitudinal studies, assuming MNAR. We use the joint model approach to model longitudinal outcomes, intermittent missing data and patient death or treatment failure simultaneously. For example, in the Scleroderma lung study, we use a linear mixed effects model to characterize the longitudinal forced vital capacity (FVC) outcome, a logistic mixed effects model for the intermittent missing data, and a proportional hazards frailty model for the time to dropout due to death or treatment failure. The correlations of the random effects in the three models represent the unobserved dependence among the longitudinal outcome, intermittent missingness, and time to death or treatment failure. This approach allows us to assess the dependence between missingness and longitudinal outcomes, to correct the bias in estimation due to missed visits, patient death or treatment failure, and to improve the efficiency of parameter estimation by using all available information.

Despite the advantages of the joint model approach, the computation of the joint model remains challenging due to the large number of parameters included in the models and the high dimensional latent variables needed to capture the dependence among all observations. Many authors7,8 employed the Bayesian approach and used Markov chain Monte Carlo (MCMC) to estimate the posterior distribution of the parameters. MCMC provides a straightforward approach to parameter estimation. However, MCMC is computationally expensive, its convergence is difficult to assess, and it requires the specification of prior distributions. On the other hand, the EM algorithm is a popular frequentist approach in joint model analysis9. However, the computation in the E step may involve high dimensional integration if the number of random effects is large. Many computational strategies have been proposed to improve the efficiency of estimation in joint models, or of high dimensional integration in general. For example, the Laplace method10-13 has been used to approximate the likelihood function to avoid high dimensional integration. Although many found satisfactory results using the Laplace approximation, others14,15 reported poor performance. Additional drawbacks of the Laplace approximation are that it demands a larger number of repeated measurements for good approximations, and that there is no feasible way to control the approximation accuracy13. Alternatively, the adaptive Gaussian quadrature16,17 and the sparse quadrature18 provide means to reduce the computational burden and to improve the computational efficiency of high dimensional integration.

In this paper, we develop a new computational strategy for the high dimension integration in the E step of EM algorithm. This new approach seeks to reduce the number of evaluations in two ways. First, the adaptive Gaussian quadrature integration makes use of the moment estimates of random effects in the previous EM iteration to construct the importance distribution. Second, we use the Taylor series expansion to approximate the integration in the E steps based on the current moment estimates of random effects. These two techniques effectively reduce the computational burden in the EM steps.

1.1 Relationship to current literature

Our proposed model is an extension of Elashoff et al.19, which used the latent random effects model to handle non-ignorable monotone missing data. Most of the missing data literature is devoted solely to one type of missing data pattern3-6. However, both monotone and non-monotone missing data are often observed simultaneously in longitudinal studies. Our model is also similar to that of Wu et al.20, who considered a nonlinear mixed-effects model for the longitudinal outcome, the proportional hazards model for the time-to-event outcome, and a logistic regression model for missing data. The interpretation of our model emphasizes handling different types of non-ignorable missing data, whereas Wu et al.20 highlighted the simultaneous modeling of a longitudinal outcome and a time-to-event outcome. To characterize the dependence among the outcome and the different types of missing data, we assume that each outcome model and missing data model has its own random effects and that jointly these random effects follow a multivariate normal distribution. Wu et al.20 introduced a single random effect that is shared by the longitudinal, time-to-event, and missing data models, with a scalar multiplying the random effect in each model to account for the fact that the linear predictors in each model are measured on different scales and it is not reasonable to assume they have the same variance. The shared random effect model is more restrictive in that it only allows positive correlations between two outcomes21. It also has some unexpected consequences, such as a marginal distribution that is always overdispersed. Our multivariate random effects represent a more flexible correlated random effects model, which allows a full range of covariance and is a generalization of the shared parameter model in Wu et al.20.

In this paper, we present a new computational strategy to reduce the computational burden of high dimensional integration in the EM algorithm. An efficient method achieves the same accuracy in integration with fewer numerical evaluations. The adaptive quadrature method16,17 can be viewed as a deterministic version of importance sampling, and the Laplace method is generally used to find a Gaussian density with the same mode and curvature as the posterior distribution to serve as the importance distribution. However, the Laplace method may not be feasible in a multiple level random effects model, while the moment estimates are always available. Naylor et al.22 and Rabe-Hesketh et al.23 estimated the mean and variance of the importance distribution in the context of Newton-Raphson iterations in searching for maximum likelihood estimates. We use a Gaussian distribution with the same first two moments as the random effects as the importance distribution for adaptive quadrature. To further reduce the number of evaluations in integration, the Taylor series approximation is used for the integrals of complex functions. Others13,24 have proposed to approximate integrals through the moment generating function in the context of Laplace approximations. Because of the Taylor series approximation, we only need to evaluate the first two moments of the random effects, and we use the same importance distribution to obtain all of the first and second moment estimates, which reduces the effort of searching for importance distributions.

The rest of the paper is organized as follows. In section 2, we present the model specification, the inference procedure and the description of adaptive Gaussian quadrature in the EM steps. Section 3 demonstrates the method with a real example from a Scleroderma clinical trial. In section 4, we conduct simulation studies to examine the effectiveness of the proposed method. We provide the conclusions in section 5.

2 The Joint Model

In this section, we formulate the joint model for non-ignorable missing data with both monotone and non-monotone missingness. Let Yij ∈ ℝ be the repeated measurement outcome of the ith subject at visit j, and Mij be the intermittent missingness indicator of the ith subject at visit j, where Mij = 1 if Yij is missing and Mij = 0 if Yij is observed, for i = 1, …, N and j = 1, …, K; N is the number of subjects and K is the total number of visits. Let Si = (Ti, δi) denote the dropout data on the ith subject. Ti = min(TFi, TCi) is the minimum of the failure time (TFi) and the censoring time (TCi), and δi takes values in {0, 1}, with δi = 0 being a censored event and δi = 1 indicating that subject i drops out for reasons associated with unfavourable outcomes, such as death or treatment failure.

In this model Yij is missing when Mij=1 or the subject drops out before the jth visit. Both Yij and Mij are censored by failure/censoring time Ti.
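The observation rule above can be illustrated with a minimal Python sketch. The function name `observed_mask` and the array of scheduled visit times are hypothetical and are not part of the model specification; the sketch only encodes the statement that Yij is unobserved when Mij = 1 or when visit j falls after Ti.

```python
import numpy as np

def observed_mask(M, T, visit_times):
    """Return a boolean N x K array that is True where Y_ij is observed.

    M           : N x K array of intermittent-missingness indicators (1 = missed visit)
    T           : length-N array of dropout/censoring times T_i
    visit_times : length-K array of scheduled visit times (hypothetical input)
    """
    M = np.asarray(M)
    T = np.asarray(T, dtype=float)
    visit_times = np.asarray(visit_times, dtype=float)
    # A visit can only be observed if it occurs no later than T_i.
    before_dropout = visit_times[None, :] <= T[:, None]
    return (M == 0) & before_dropout

# Two subjects, four visits at months 0, 3, 6, 9.
M = [[0, 1, 0, 0],   # subject 1 misses the month-3 visit but returns
     [0, 0, 0, 0]]   # subject 2 never misses a visit while in the study
T = [9.0, 4.5]       # subject 2 leaves the study between months 3 and 6
mask = observed_mask(M, T, [0, 3, 6, 9])
```

In this toy example the first subject shows a non-monotone pattern and the second a monotone pattern, so both mechanisms contribute to the missing entries of Y.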

We use a linear mixed effects model for the repeated measurement Yij,

$$Y_{ij} = X_{1ij}^{\top}\beta_1 + Z_{1i}^{\top} b_{1i} + \sigma\,\epsilon_{ij}, \qquad (1)$$

where X1ij ∈ ℝp1 is a vector of fixed effect covariates, Z1i ∈ ℝq1 is a vector of random effect covariates, σ is the dispersion parameter, β1 ∈ ℝp1 is a vector of regression coefficients and b1i ∈ ℝq1 is a vector of random effects. In the Scleroderma example, we assume that the residuals ∊ij follow a t distribution with 3 degrees of freedom, to reduce the impact of outlying observations and achieve robust inference. There are various ways to incorporate the t distribution model for robust inference25. For example, given sufficient data, one can estimate the degrees of freedom by the likelihood method. In this paper, we use a fixed v = 3 degrees of freedom throughout for robust inference. This simplifies the model and the computational effort, as recommended in the literature25,26. The t distribution with 3 degrees of freedom has sufficiently long tails and provides a considerable degree of down-weighting for extreme outliers25. If outlying observations are not of concern, one can use a normal distribution model for the residuals, ∊ij ~ N(0, 1).
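To illustrate the down-weighting mentioned above, the following Python sketch evaluates the weight that a t model assigns to a standardized residual. The paper does not spell out this formula; the expression (v + 1)/(v + r²) is the standard conditional weight from the normal scale-mixture representation of the t distribution, and it is used here as an assumption. The function name `t_weight` is hypothetical.

```python
import numpy as np

def t_weight(r, nu=3.0):
    """Weight given to a standardized residual r under a t model with nu d.f.

    Uses the normal scale-mixture representation of the t distribution,
    in which the conditional expected precision multiplier is (nu + 1) / (nu + r^2).
    """
    r = np.asarray(r, dtype=float)
    return (nu + 1.0) / (nu + r ** 2)

residuals = np.array([0.0, 1.0, 3.0, 6.0])
weights = t_weight(residuals)   # nu = 3, the fixed degrees of freedom used in the paper
```

The weights decrease monotonically in |r|, so a residual six scale units from the fit contributes far less than one close to zero, whereas a normal model would weight all residuals equally.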

To model the pattern of intermittent missingness, we use a mixed effects logistic regression model. Let πij = Prob(Mij = 1) be the probability of intermittent missingness for subject i at visit j,

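$$\operatorname{logit}(\pi_{ij}) = \log\frac{\pi_{ij}}{1-\pi_{ij}} = X_{2ij}^{\top}\beta_2 + Z_{2i}^{\top} b_{2i}, \qquad (2)$$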

where X2ij ∈ ℝp2 is a vector of fixed effect covariates, Z2i ∈ ℝq2 is a vector of random effect covariates, β2 ∈ ℝp2 is a vector of regression coefficients and b2i ∈ ℝq2 is a vector of random effects.

The hazard of patient dropout due to death or treatment failure is characterized by a proportional hazards frailty model,

$$\lambda_i(t) = \lambda_0(t)\exp\left(X_{3i}^{\top}\beta_3 + Z_{3i}^{\top} b_{3i}\right), \qquad (3)$$

where λ0(t) is the baseline hazard function, X3i ∈ ℝp3 is a vector of fixed effect covariates, Z3i ∈ ℝq3 is a vector of random effect covariates, β3 ∈ ℝp3 is a vector of regression coefficients and b3i ∈ ℝq3 is a vector of random effects.

The latent random effects of the above three models, bi = (b1i; b2i; b3i), form a (q1+q2+q3)-dimensional vector that captures the unobserved dependence among all observations. We assume that the random effects follow a multivariate normal distribution,

$$b_i = (b_{1i}^{\top}, b_{2i}^{\top}, b_{3i}^{\top})^{\top} \sim N(0, \Sigma(\theta)). \qquad (4)$$

Combining equations (1), (2), (3), and (4), this joint model implies that missing data from missed visits and study dropouts are non-ignorable. If the missed visits are considered ignorable, then equation (2) can be removed. Similarly, if missing data due to study dropouts are ignorable, then equation (3) can be removed. We also note that the fixed effects covariates (X1ij, X2ij, X3i) and the random effects covariates (Z1i, Z2i, Z3i) can be disjoint or overlapping.

2.1 Likelihood and estimation

Let Yobs and Ymis be the observed and missing outcome measurements, and Mobs and Mmis be the observed and missing indicators for intermittent missingness. The death and treatment failure information Sobs is observed for all subjects. Xi = (X1i1, …, X1iK, X2i1, …, X2iK, X3i) and Zi = (Z1i, Z2i, Z3i) are the fixed and random effects covariates of the ith subject. The likelihood function for the ith subject is

$$L_i = \int\!\!\int\!\!\int f(Y_{i,obs}, Y_{i,mis}, M_{i,obs}, M_{i,mis}, S_{i,obs} \mid b_i, X_i, Z_i)\, f(b_i)\, dY_{i,mis}\, dM_{i,mis}\, db_i.$$

The latent random effects model assumes that the observed data (Yi,obs, Mi,obs) and the unobserved data (Yi,mis, Mi,mis) are conditionally independent given the random effects bi. Furthermore, because ∫ f(Yi,mis)dYi,mis = 1 and ∫ f(Mi,mis)dMi,mis = 1, the likelihood function is the likelihood of the observed data:

$$L_i = \int f(Y_{i,obs}, M_{i,obs}, S_{i,obs} \mid b_i, X_i, Z_i)\, f(b_i)\, db_i.$$

Let ϕ = (β1, β2, β3, σ, θ) and let $\Lambda_0(t) = \int_0^t \lambda_0(s)\,ds$ be the cumulative baseline hazard. Our goal is to calculate the maximum likelihood estimates for Θ = (ϕ, Λ0), where ϕ lies in a bounded set and Λ0 lies in the space of all increasing functions of t with Λ0(0) = 0. Note that the random effects bi, i = 1, …, N, are unobserved and considered as missing data.

Because the maximization is straightforward with complete data, we use the EM algorithm to calculate the maximum likelihood estimates. In the E-step, we calculate the expectation of the complete-data log-likelihood given the observed data and the current values of the parameters. More specifically, at the (k+1)th iteration, the E-step consists of evaluating the expected value of functions of bi with respect to its conditional distribution with parameters estimated in the kth iteration,

$$f(b_i \mid D_i; \phi^{(k)}, \Lambda^{(k)}),$$

where Di = (Yi,obs, Mi,obs, Si,obs) denotes the observed data of the ith subject, and Λ(k) and ϕ(k) are the estimates from the kth iteration. For example, the conditional expectation of a function A(bi) is

$$E\{A(b_i) \mid D_i; \phi^{(k)}, \Lambda^{(k)}\} = \frac{\int A(b_i)\, f(D_i \mid b_i; \phi^{(k)}, \Lambda^{(k)})\, f(b_i; \theta^{(k)})\, db_i}{\int f(D_i \mid b_i; \phi^{(k)}, \Lambda^{(k)})\, f(b_i; \theta^{(k)})\, db_i}. \qquad (5)$$

The E step involves integrals of at least three dimensions, which demand intensive computation. We use several techniques to reduce the computational burden and to achieve better accuracy in evaluating equation (5) in the E step. First, we approximate the numerator and denominator separately by Gauss-Hermite quadrature. It has been suggested in the literature13,27,28 that the two integrands in the numerator and denominator of equation (5) are similar in shape. By taking the ratio of the numerator and denominator, the leading error terms of the two approximations may cancel out, resulting in better approximation accuracy. However, the computational burden of evaluating the numerator and denominator in equation (5) can be large even with a moderate number of random effects bi, especially if many functions A(bi) are evaluated in the E step.
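The ratio form of the E-step expectation can be sketched in one dimension. The Python example below is a simplified illustration, not the authors' implementation: it assumes a single subject, a scalar random intercept b ~ N(0, s²), and a logistic model only, and the function name `posterior_mean_ratio` is hypothetical. Both integrals are evaluated on the same Gauss-Hermite nodes, so common constants cancel in the ratio.

```python
import numpy as np

def posterior_mean_ratio(y, eta, s, m=10):
    """Approximate E(b | y) for a random-intercept logistic model.

    y   : array of 0/1 responses for one subject
    eta : array of fixed-effect linear predictors (same length as y)
    s   : standard deviation of the random intercept b ~ N(0, s^2)
    m   : number of Gauss-Hermite nodes
    """
    y = np.asarray(y, dtype=float)
    eta = np.asarray(eta, dtype=float)
    x, w = np.polynomial.hermite.hermgauss(m)   # nodes/weights for the kernel exp(-x^2)
    b = np.sqrt(2.0) * s * x                    # change of variable for the N(0, s^2) kernel
    # Conditional likelihood f(y | b) evaluated at every node.
    p = 1.0 / (1.0 + np.exp(-(eta[None, :] + b[:, None])))
    lik = np.prod(p ** y * (1.0 - p) ** (1.0 - y), axis=1)
    numerator = np.sum(w * b * lik)             # approximates the integral of b f(y|b) f(b)
    denominator = np.sum(w * lik)               # approximates the integral of f(y|b) f(b)
    return numerator / denominator              # the common factor 1/sqrt(pi) cancels

balanced = posterior_mean_ratio([1, 0], [0.0, 0.0], s=1.0)
mostly_ones = posterior_mean_ratio([1, 1, 1], [0.0, 0.0, 0.0], s=1.0)
```

With one success and one failure the conditional likelihood is symmetric in b, so the posterior mean is zero; with three successes it shifts to a positive value.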

Here we use two strategies to reduce the computational burden: a Taylor series expansion to approximate E(A(bi)), and adaptive quadrature to increase the computational efficiency. For the first strategy, we use a second-order Taylor series expansion to approximate the expectation in the numerator for all functions A(bi) by the first two moments of bi,

$$E\{A(b_i)\} \approx A(Eb_i) + \frac{1}{2}\operatorname{tr}\{A''(Eb_i)\, Vb_i\},$$

where Ebi = E(bi) is the expectation of bi, Vbi = E(bi − Ebi)(bi − Ebi)ᵀ is the variance of bi, and A''(b) = ∂²A(b)/∂b∂bᵀ is the second partial derivative of A(b). With the Taylor series approximation, the only integrals that need to be evaluated in the E step are the first two moments of bi.
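As a small numerical illustration of the second-order Taylor approximation E{A(b)} ≈ A(Eb) + ½ A''(Eb) Vb, the Python sketch below takes a scalar b ~ N(0.3, 0.04) and A(b) = exp(b), for which the exact expectation exp(Eb + Vb/2) is available. The distribution, the choice of A, and the function name `taylor_expectation` are illustrative assumptions and do not come from the paper.

```python
import numpy as np

def taylor_expectation(A, d2A, mean, var):
    """Second-order Taylor approximation of E[A(b)] for a scalar b."""
    return A(mean) + 0.5 * d2A(mean) * var

mean, var = 0.3, 0.04
approx = taylor_expectation(np.exp, np.exp, mean, var)   # A = A'' = exp
exact = np.exp(mean + 0.5 * var)                         # lognormal mean when b ~ N(mean, var)
```

Only the mean and variance of b enter the approximation, which is why the E step reduces to computing the first two moments. The approximation is accurate when the conditional variance is small relative to the curvature of A.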

We use Gauss-Hermite quadrature to evaluate the first two moments of bi. Gaussian quadrature approximates the integral of a function with respect to a given kernel by a weighted average of the integrand evaluated at predetermined abscissas. For a multi-dimensional integral, the number of abscissas required to achieve the same accuracy rises exponentially with the number of dimensions. Adaptive quadrature applies a linear transformation to bi such that the integrand is sampled in a suitable range. Following Liu et al.16, we use Gaussian Hermite quadrature for integrals of the form

$$\int g(b_i)\, db_i = \int \frac{g(b_i)}{\psi(b_i; \hat\mu, \hat\sigma)}\, \psi(b_i; \hat\mu, \hat\sigma)\, db_i \approx \sqrt{2}\,\hat\sigma \sum_{k=1}^{m} w_k \exp(x_k^2)\, g(\hat\mu + \sqrt{2}\,\hat\sigma\, x_k),$$

where ψ(·; μ̂, σ̂) is the Gaussian importance density with mean μ̂ and standard deviation σ̂, m is the number of nodes, and xk and wk are the kth node and weight. The critical step for the success of adaptive quadrature is the choice of the importance distribution ψ and its parameters μ̂ and σ̂. It has been suggested16,17 to use the Laplace method to estimate the mode and dispersion of g(bi) for μ̂ and σ̂. This choice of importance distribution is near optimal; however, it requires finding an importance distribution for every function g. As described above, with the Taylor series approximation, we only need to compute the first two moments of bi to approximate E(A(bi)).

We propose to use a single importance distribution to compute the first two moments of bi. Specifically, we recycle the mean and variance calculated in the previous E step as the importance distribution parameters (the mean μ̂ and the standard deviation σ̂) for the evaluation in the current E step. This choice of importance distribution parameters allows us to sample the integrand g(bi) in a suitable range at minimal computational cost. Table 1 compares the estimates obtained by Gaussian Hermite (GH) quadrature and by the proposed adaptive Gaussian Hermite (AGH) quadrature. In this comparison, we assume that μ̂ and σ̂ are known. Two random variables are considered in this experiment: a Normal(1, 2) variable and a standard exponential, Exponential(1), variable. The first two moments of each random variable are evaluated by both quadratures with the number of nodes ranging from 2 to 30. The results suggest that the AGH quadrature has no bias in estimating the first two moments of the normal random variable even with 2 nodes, while the regular GH quadrature needs about 20 nodes for its bias to be close to zero. For the standard exponential random variable, the AGH quadrature achieves the same accuracy with fewer nodes. For example, the AGH quadrature with 3 nodes has accuracy similar to that of the regular GH quadrature with 10 nodes in evaluating the first two moments of the standard exponential variable. In general, the bias diminishes as the number of nodes increases for both the regular GH quadrature and the AGH quadrature. However, we also note that the bias in estimating E(X) for the Exponential(1) variable is not reduced when the number of nodes increases from 10 to 20 with the AGH quadrature.
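The comparison for the Normal(1, 2) variable can be sketched in Python as follows. This is a minimal illustration rather than the authors' code: the function names are hypothetical, and the non-adaptive rule shown here (nodes used directly, without recentering or rescaling) is an assumption about how the plain GH column was computed, chosen because it reproduces the first rows of Table 1.

```python
import numpy as np

def normal_pdf(b, mu, sigma):
    return np.exp(-0.5 * ((b - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def gh_integral(g, m):
    """Plain Gauss-Hermite: integral of g(b) db ~ sum_k w_k exp(x_k^2) g(x_k)."""
    x, w = np.polynomial.hermite.hermgauss(m)
    return np.sum(w * np.exp(x ** 2) * g(x))

def agh_integral(g, m, mu_hat, sigma_hat):
    """Adaptive Gauss-Hermite with a N(mu_hat, sigma_hat^2) importance density."""
    x, w = np.polynomial.hermite.hermgauss(m)
    b = mu_hat + np.sqrt(2.0) * sigma_hat * x          # recentred and rescaled nodes
    return np.sqrt(2.0) * sigma_hat * np.sum(w * np.exp(x ** 2) * g(b))

mu, sigma = 1.0, np.sqrt(2.0)                          # X ~ N(1, 2): mean 1, variance 2
first = lambda b: b * normal_pdf(b, mu, sigma)         # integrand for E(X)
second = lambda b: b ** 2 * normal_pdf(b, mu, sigma)   # integrand for E(X^2)

for m in (2, 3, 5, 10, 20, 30):
    print(m,
          round(gh_integral(first, m), 2), round(gh_integral(second, m), 2),
          round(agh_integral(first, m, mu, sigma), 2),
          round(agh_integral(second, m, mu, sigma), 2))
```

With two nodes, the plain rule gives approximately 0.14 and 0.30 for the two moments, while the adaptive rule returns 1.00 and 3.00, in agreement with the first row of Table 1. The adaptive rule is exact here because, after dividing by the importance density, the integrand is a polynomial of degree at most two.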

Table 1.

Comparison between Gaussian Hermite (GH) quadrature and the proposed adaptive Gaussian Hermite (AGH) quadrature. The first four result columns are for X ~ N(1, 2) and the last four are for X ~ Exp(1).

| No. of nodes | N(1, 2), GH: E(X) | N(1, 2), GH: E(X²) | N(1, 2), AGH: E(X) | N(1, 2), AGH: E(X²) | Exp(1), GH: E(X) | Exp(1), GH: E(X²) | Exp(1), AGH: E(X) | Exp(1), AGH: E(X²) |
|---|---|---|---|---|---|---|---|---|
| 2 | 0.14 | 0.30 | 1.00 | 3.00 | 0.51 | 0.36 | 0.56 | 1.12 |
| 3 | 0.32 | 0.72 | 1.00 | 3.00 | 0.48 | 0.58 | 0.95 | 1.50 |
| 5 | 0.61 | 1.54 | 1.00 | 3.00 | 0.68 | 0.99 | 0.94 | 1.75 |
| 10 | 0.93 | 2.67 | 1.00 | 3.00 | 0.93 | 1.53 | 1.02 | 1.93 |
| 20 | 1.00 | 2.99 | 1.00 | 3.00 | 0.99 | 1.86 | 0.97 | 1.99 |
| 30 | 1.00 | 3.00 | 1.00 | 3.00 | 1.00 | 1.95 | 1.01 | 2.00 |
| True value | 1.00 | 3.00 | 1.00 | 3.00 | 1.00 | 2.00 | 1.00 | 2.00 |

In the M-step, we solve the conditional score equations of the complete data given the observed data. If the longitudinal model is a linear mixed effects model with normal random effects and residuals, the score equations for the regression coefficients have a closed form solution in the M step. However, for the regression coefficients in the mixed effects logistic model, the proportional hazards frailty model and the t-distribution longitudinal model, there is no closed form solution, and one step of Newton-Raphson is carried out in the M step. We iterate between the E- and M-steps until the estimates converge. Appendix A provides the details of our computational algorithm.
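A single Newton-Raphson update of the kind used in the M step can be sketched as follows. For brevity, this Python example uses an ordinary logistic regression without random effects, which is a simplification of the mixed effects logistic model; in the actual algorithm the score and information would be conditional expectations given the observed data. The data, true coefficients, and function names are hypothetical.

```python
import numpy as np

def logistic_loglik(beta, X, y):
    eta = X @ beta
    return np.sum(y * eta - np.log1p(np.exp(eta)))

def newton_step(beta, X, y):
    """One Newton-Raphson update for logistic regression coefficients."""
    p = 1.0 / (1.0 + np.exp(-(X @ beta)))
    score = X.T @ (y - p)                          # gradient of the log-likelihood
    info = X.T @ (X * (p * (1.0 - p))[:, None])    # negative Hessian (information matrix)
    return beta + np.linalg.solve(info, score)

# Hypothetical data: intercept plus one standard normal covariate.
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(200), rng.standard_normal(200)])
true_beta = np.array([-1.0, 0.5])
y = (rng.random(200) < 1.0 / (1.0 + np.exp(-(X @ true_beta)))).astype(float)

beta0 = np.zeros(2)
beta1 = newton_step(beta0, X, y)   # one update, as carried out within each M step
```

Taking only one step per EM iteration avoids fully solving the score equations at parameter values that will change in the next iteration anyway.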

To estimate the standard errors, a direct calculation and inversion of the Fisher information matrix29 is not feasible due to the infinite dimensional parameter λ0. Standard errors are approximated by the inverse of empirical Fisher information of the log profile likelihood with the nonparametric baseline hazard λ0 being profiled out. It has been suggested30 that the inverse of empirical Fisher information underestimates standard errors and the bootstrap method is recommended to obtain standard error estimates. The bootstrap method requires intensive computation. In our simulation study it appears that this approximation has satisfactory performance on the inference of longitudinal outcomes. However, under-estimation in the standard error is observed for the parameters in the logistic model and proportional hazard model.

3 Scleroderma lung study data analysis

In this section, we demonstrate the use of our proposed model to handle non-monotone and monotone non-ignorable missingness in the analysis of the Scleroderma lung study31. The Scleroderma lung study is a multi-center, placebo-controlled, double-blind randomized study to evaluate the effects of oral cyclophosphamide (CYC) on lung function and other health-related symptoms in patients with evidence of active alveolitis and scleroderma-related interstitial lung disease. In this study, eligible participants received either daily oral cyclophosphamide or matching placebo for 12 months, followed by another year of follow-up without study medication. The primary endpoint of the study is the forced vital capacity (FVC, expressed as a percentage of the predicted value), which is measured at baseline and at three-month intervals throughout the study. One hundred and fifty eight eligible patients underwent randomization, and about 15% of them dropped out of the study before 12 months. About 30% of dropouts are due to death and treatment failures. Intermittent missed visits also occurred during the course of the study. It is likely that the missing data are due to the ineffectiveness of treatment and related to the outcome of interest.

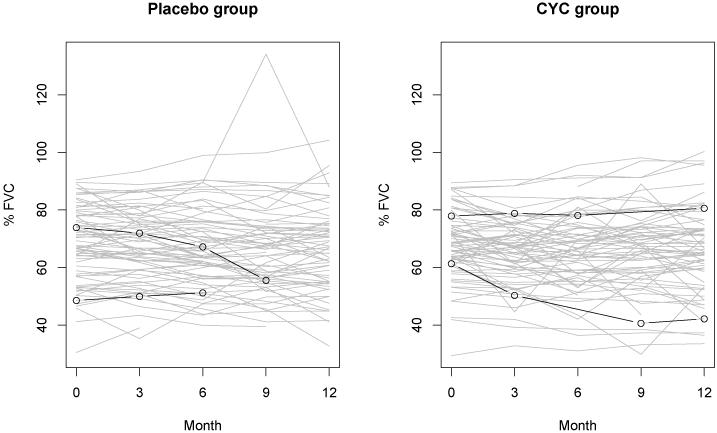

Here we present the analysis of the primary endpoint of FVC. Figure 1 gives the plot of FVC over time for each study group during the first year of the trial. In this figure, two subjects (01-ALS-013 and 04-JWW-010) in the placebo group are highlighted as examples of study dropouts at 6 and 9 months, and two subjects (08-S-L-018 and 09-NEP-005) in the CYC group are examples with missed visits at 6 and 9 months. Most subjects have small variation in FVC over time. However, a few observations show considerable changes between follow-ups. It suggests the possibility of outlying observations. For this analysis, the fixed effects model includes covariates of time, baseline FVC (FVC0), baseline maximum fibrosis (MAXFIB0), cyclophosphamide (CYC), and the interactions between treatment and baseline FVC, baseline maximal fibrosis and time. The random effects model includes a random intercept (b1) which represents the unobserved subject level factor associated with FVC, and residuals (∊) with a t-distribution with 3 degrees of freedom to reduce the impact of outlying observations. σ is related to the variance of FVC.

For the intermittent missing data model, the mixed effects logistic model is used to model the probability (p) of missed visits. The fixed effect includes time effect and the random effect includes a random intercept (b2).

For time to death or treatment failure, the proportional hazards frailty model includes covariates of baseline FVC (FVC0), baseline maximum fibrosis (MAXFIB0), and cyclophosphamide (CYC). b3 is the random effect for time to death or treatment failure.

The random effects (b1, b2, b3) in the above three models are assumed to follow a three-dimensional multivariate normal distribution to capture the unobserved dependence among the FVC measurements, the missed visit events and the time to death or treatment failure. Because there are three random effects in the joint model, the E steps involve three-dimensional integration. With three Gaussian Hermite nodes in each dimension, a 3³ = 27 node Gaussian Hermite quadrature is used for the integrations in the E steps.
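The node count for the three-dimensional integration can be illustrated with a tensor-product construction. The Python sketch below is an illustrative assumption about how such a grid may be built (the function name `tensor_gauss_hermite` is hypothetical); it shows that 3 nodes per dimension give 27 nodes in three dimensions.

```python
import itertools
import numpy as np

def tensor_gauss_hermite(m, dim):
    """Tensor-product Gauss-Hermite rule with m nodes per dimension."""
    x, w = np.polynomial.hermite.hermgauss(m)
    # Every combination of one-dimensional nodes is a node of the product rule.
    nodes = np.array(list(itertools.product(x, repeat=dim)))              # shape (m**dim, dim)
    # The weight of a product node is the product of the one-dimensional weights.
    weights = np.array([np.prod(c) for c in itertools.product(w, repeat=dim)])
    return nodes, weights

nodes, weights = tensor_gauss_hermite(3, 3)
```

The number of nodes grows as m to the power of the dimension, which is why keeping m small through a well-chosen importance distribution matters for the cost of each E step.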

Figure 1.

Longitudinal FVC in the placebo and CYC groups during the first 12 months. In the Scleroderma lung study, the forced vital capacity (FVC, expressed as a percentage of the predicted value) is measured at baseline and at three-month intervals throughout the study. In this figure, two subjects (01-ALS-013 and 04-JWW-010) in the placebo group are highlighted as examples of study dropouts at 6 and 9 months, and two subjects (08-S-L-018 and 09-NEP-005) in the CYC group are examples of missed visits at 6 and 9 months.

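As this is a randomized trial, the assignment of CYC is independent of baseline characteristics. We can derive the overall treatment effect at time m:

$$\mathrm{Eff}(m) = \frac{1}{N}\sum_{i=1}^{N} \mathrm{Eff}_i(m),$$

where Effi(m) is the treatment (CYC) effect for the ith subject at time m,

$$\mathrm{Eff}_i(m) = \beta_4 + \beta_5\,\mathrm{FVC0}_i + \beta_6\,\mathrm{MAXFIB0}_i + \beta_7\, m,$$

with β4 to β7 denoting the coefficients of CYC, CYC×FVC0, CYC×MAXFIB0 and CYC×Time in the longitudinal model.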

Table 2 gives parameter estimates in the longitudinal model for the Scleroderma lung study, based on 6 to 18 months data. We observed that the FVC outcome is associated with baseline FVC measurement and maximum fibrosis score. In addition, the treatment effect appears to be modified by baseline FVC measurement and maximum fibrosis. Roth et al.32 also reported similar findings of the treatment-maximum fibrosis interaction effect using a different analysis. The estimated overall treatment effect is also reported in Table 2.
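As a quick arithmetic check on the reported overall effects, the Python sketch below assumes that the subject-level treatment effect is linear in time with slope equal to the CYC×Time coefficient (0.24 per month in Table 2). Under that assumption, consecutive overall effects three months apart should differ by about 3 × 0.24 = 0.72, up to rounding of the published values.

```python
beta7 = 0.24                                                  # CYC x Time coefficient from Table 2
overall = {6: 0.36, 9: 1.08, 12: 1.79, 15: 2.50, 18: 3.21}   # overall effects from Table 2

months = sorted(overall)
# Change in the overall effect between consecutive three-month visits.
increments = [overall[b] - overall[a] for a, b in zip(months, months[1:])]
expected = 3 * beta7                                          # three months between visits
```

The increments computed from Table 2 are 0.72, 0.71, 0.71 and 0.71, which agree with the expected value of 0.72 to within rounding error.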

Table 2.

Analysis of FVC in the Scleroderma study, using data over 6 to 18 months

| β (t model) | Est. | Std | p-value |
|---|---|---|---|
| β1 (Time) | −0.07 | 0.06 | 0.297 |
| β2 (FVC0) | 0.92 | 0.013 | <0.001 |
| β3 (MAXFIB0) | −1.62 | 0.16 | <0.001 |
| β4 (CYC) | −1.08 | 1.15 | 0.416 |
| β5 (CYC×FVC0) | 0.081 | 0.019 | <0.001 |
| β6 (CYC×MAXFIB0) | 1.50 | 0.25 | <0.001 |
| β7 (CYC×Time) | 0.24 | 0.09 | 0.009 |
| Overall effect at 6 months | 0.36 | 0.71 | 0.609 |
| Overall effect at 9 months | 1.08 | 0.51 | 0.036 |
| Overall effect at 12 months | 1.79 | 0.41 | <0.001 |
| Overall effect at 15 months | 2.50 | 0.47 | <0.001 |
| Overall effect at 18 months | 3.21 | 0.69 | <0.001 |
| Test of β4 = β5 = β6 = β7 = 0 | | | <0.001 |

4 Simulation study

In this section, we carry out simulation studies to evaluate the effectiveness of the proposed method. The goal is to examine the amount of bias generated from the likelihood approximation by the Gaussian Hermite quadrature and Taylor series approximation. The simulation set-up includes a linear mixed model to generate longitudinal outcome data, a logistic mixed model for intermittent missing data, and a proportional hazard frailty model for time to dropout due to treatment failure or death.

Here are the specifications of 3 models:

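$$Y_{ij} = \beta_{10} + \beta_{11} X_{11ij} + b_{1i} + \epsilon_{ij}, \qquad (6)$$

$$\operatorname{logit}(\pi_{ij}) = \beta_{20} + \beta_{21} X_{21ij} + b_{2i}, \qquad (7)$$

$$\lambda_i(t) = \lambda_0(t)\exp\left(\beta_{31} X_{31i} + b_{3i}\right). \qquad (8)$$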

Yij is the longitudinal outcome value of the ith subject at the jth time, i = 1, …, N, j = 1, …, K. The sample size is N, and each subject has at most K = 6 visits including the baseline visit. X11ij is the longitudinal covariate for the longitudinal outcome of the ith subject at the jth time. β11 represents the strength of the association between the outcome Yij and X11ij. For example, if X11ij is the indicator of treatment assignment, then β11 quantifies the treatment effect. Mij is the intermittent missing indicator; Mij = 1 if Yij is missing due to a missed visit, and Mij = 0 if Yij is observed. X21ij is the longitudinal covariate for the intermittent missingness of the ith subject at the jth time. We assume that, for intermittent missed visits, X21ij is always observed until it is censored by the dropout time or the end of the study. β21 indicates the association between the missed visit indicator Mij and X21ij, and β20 determines the prevalence of intermittent missingness. About 10% of visits are missing. Time to death or treatment failure is generated from a proportional hazards model with a constant baseline hazard λ0(t). The censoring time and the baseline hazard are simulated with constant hazard functions, such that about 15% of subjects are censored (loss to follow-up for other reasons) and ps = 20% or 50% of subjects drop out due to treatment failure or death before finishing the 6 follow-up visits. All covariates in the models, X11ij, X21ij and X31i, are generated from the standard normal distribution.

The true values of the regression coefficients are (β10, β11, β21, β31) = (2, 2, 2, 2). The random effects (b1, b2, b3) follow a multivariate normal distribution with mean zero, common variance 2, and correlation 0.5 among the random effects. The residuals of the longitudinal outcome are generated from a mixture of normal distributions to represent possible outlying observations.

where pe corresponds to the percentage of outlying observations, and fe > 1 represents the degree to which the outliers deviate from the rest of the observations. A large fe generates large variability in the outliers. The special case pe = 0 gives normally distributed residuals without outliers. In the simulation we vary pe among 0%, 10% and 25%, set fe = 4, and vary the sample size between N = 200 and 400. Five hundred simulations are carried out for each parameter combination. With 3 random effects in the joint model, the E steps involve 3 dimensional integrations. We use a 3 node Gaussian Hermite quadrature for each dimension, which results in a 3³ = 27 node Gaussian Hermite quadrature for the 3 dimensional integrations in the E steps.
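The data-generating process described in this section can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' simulation code: the values of `beta20`, `lambda0` and `censor_rate`, the integer visit times 0, …, K−1, and the reading of fe as a multiplier of the residual standard deviation are all hypothetical choices that are not given in the text, and they are not tuned to reproduce the reported missingness and dropout rates.

```python
import numpy as np

def simulate_joint(N=200, K=6, p_e=0.10, f_e=4.0, beta20=-3.0,
                   lambda0=0.05, censor_rate=0.02, seed=0):
    """Simulate one data set from a joint model of the kind described in Section 4.

    beta20, lambda0, censor_rate and the visit times 0..K-1 are hypothetical
    choices, not values taken from the paper.
    """
    rng = np.random.default_rng(seed)
    beta10 = beta11 = beta21 = beta31 = 2.0
    # Random effects: common variance 2 and pairwise correlation 0.5.
    Sigma = 2.0 * (0.5 * np.ones((3, 3)) + 0.5 * np.eye(3))
    b = rng.multivariate_normal(np.zeros(3), Sigma, size=N)
    X11 = rng.standard_normal((N, K))
    X21 = rng.standard_normal((N, K))
    X31 = rng.standard_normal(N)
    # Residuals: N(0, 1) with probability 1 - p_e, N(0, f_e^2) otherwise (assumed scaling).
    outlier = rng.random((N, K)) < p_e
    eps = rng.standard_normal((N, K)) * np.where(outlier, f_e, 1.0)
    Y = beta10 + beta11 * X11 + b[:, [0]] + eps
    # Intermittent missingness from the logistic mixed model.
    pi = 1.0 / (1.0 + np.exp(-(beta20 + beta21 * X21 + b[:, [1]])))
    M = (rng.random((N, K)) < pi).astype(int)
    # Exponential failure and censoring times (constant hazards).
    TF = rng.exponential(1.0 / (lambda0 * np.exp(beta31 * X31 + b[:, 2])))
    TC = rng.exponential(1.0 / censor_rate, size=N)
    T = np.minimum(TF, TC)
    delta = (TF <= TC).astype(int)
    # Y_ij is observed only if the visit is attended and occurs no later than T_i.
    visits = np.arange(K)
    observed = (M == 0) & (visits[None, :] <= T[:, None])
    return {"Y": np.where(observed, Y, np.nan), "M": M, "T": T, "delta": delta}

data = simulate_joint()
```

The three submodels share no parameters and are linked only through the correlated random effects, so setting the off-diagonal entries of `Sigma` to zero in this sketch would make both missingness mechanisms ignorable.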

Table 3 provides the simulation results. The upper table gives the summary for the estimated regression coefficients in the longitudinal t-distribution model. It suggests that the estimates of β10 and β11 have minimal bias, the estimated standard errors are close to the empirical standard deviations of the estimates, and the coverage probability of the 95% confidence interval is satisfactory. These results also suggest good performance of the t-distribution model in the presence of outlying observations.

Table 3.

Simulation results with sample sizes N = 200 and 400. ps is the proportion of non-ignorable dropouts due to death and treatment failure. pe is the proportion of outlying observations in the longitudinal model. The columns β10, β11, β21, and β31 give the average estimates in 500 simulations, with the empirical standard deviation in parentheses. SD is the average of the estimated standard errors. CP is the coverage probability of the 95% confidence interval. The true values of the regression coefficients are (β10, β11, β21, β31) = (2, 2, 2, 2).

| N | ps | pe | β10 | SD(β10) | CP | β11 | SD(β11) | CP |
|---|---|---|---|---|---|---|---|---|
| 200 | 0.2 | 0 | 2.01 (0.14) | 0.13 | 92% | 2.00 (0.09) | 0.08 | 94% |
| 200 | 0.2 | 0.10 | 2.00 (0.14) | 0.13 | 93% | 2.00 (0.09) | 0.10 | 96% |
| 200 | 0.2 | 0.25 | 1.99 (0.17) | 0.15 | 92% | 2.00 (0.12) | 0.13 | 95% |
| 200 | 0.5 | 0 | 2.01 (0.15) | 0.14 | 91% | 2.00 (0.09) | 0.09 | 95% |
| 200 | 0.5 | 0.10 | 2.02 (0.17) | 0.15 | 93% | 2.01 (0.11) | 0.10 | 92% |
| 200 | 0.5 | 0.25 | 2.00 (0.19) | 0.17 | 92% | 2.02 (0.12) | 0.13 | 96% |
| 400 | 0.2 | 0 | 2.01 (0.10) | 0.10 | 94% | 2.00 (0.06) | 0.06 | 92% |
| 400 | 0.2 | 0.10 | 2.01 (0.11) | 0.10 | 94% | 2.00 (0.07) | 0.07 | 93% |
| 400 | 0.2 | 0.25 | 2.01 (0.12) | 0.11 | 93% | 2.00 (0.08) | 0.08 | 96% |
| 400 | 0.5 | 0 | 2.02 (0.11) | 0.10 | 93% | 2.00 (0.07) | 0.06 | 93% |
| 400 | 0.5 | 0.10 | 2.02 (0.12) | 0.11 | 93% | 2.00 (0.08) | 0.07 | 94% |
| 400 | 0.5 | 0.25 | 2.02 (0.13) | 0.12 | 93% | 2.00 (0.09) | 0.09 | 96% |

| N | ps | pe | β21 | SD(β21) | CP | β31 | SD(β31) | CP |
|---|---|---|---|---|---|---|---|---|
| 200 | 0.2 | 0 | 2.00 (0.20) | 0.16 | 92% | 1.93 (0.23) | 0.17 | 82% |
| 200 | 0.2 | 0.10 | 2.01 (0.20) | 0.17 | 90% | 1.92 (0.24) | 0.17 | 81% |
| 200 | 0.2 | 0.25 | 2.03 (0.20) | 0.17 | 89% | 1.95 (0.26) | 0.17 | 79% |
| 200 | 0.5 | 0 | 2.02 (0.21) | 0.18 | 92% | 1.90 (0.22) | 0.14 | 73% |
| 200 | 0.5 | 0.10 | 2.03 (0.21) | 0.18 | 92% | 1.90 (0.23) | 0.14 | 73% |
| 200 | 0.5 | 0.25 | 2.03 (0.22) | 0.18 | 91% | 1.89 (0.22) | 0.14 | 73% |
| 400 | 0.2 | 0 | 2.00 (0.14) | 0.11 | 91% | 1.98 (0.18) | 0.12 | 80% |
| 400 | 0.2 | 0.10 | 2.01 (0.14) | 0.12 | 91% | 1.98 (0.18) | 0.12 | 80% |
| 400 | 0.2 | 0.25 | 2.01 (0.14) | 0.12 | 91% | 1.97 (0.18) | 0.12 | 79% |
| 400 | 0.5 | 0 | 2.01 (0.15) | 0.13 | 89% | 1.96 (0.17) | 0.10 | 71% |
| 400 | 0.5 | 0.10 | 2.02 (0.15) | 0.13 | 90% | 1.95 (0.17) | 0.10 | 73% |
| 400 | 0.5 | 0.25 | 2.02 (0.15) | 0.13 | 90% | 1.95 (0.17) | 0.10 | 72% |

The lower table summarizes the estimated regression coefficients in the intermittent missing logistic model (β21) and the time to death or treatment failure model (β31). We observe that β21 is generally unbiased or minimally overestimated, and a larger sample size (N = 400) appears to further diminish the biases. The standard errors are underestimated, which results in a slightly lower coverage probability, ranging from 89% to 92%. Similar to results reported for the Laplace approximation11,12, we observe a relatively large bias in the estimates of β31. A larger sample size (N = 400) appears to reduce the biases. However, the standard errors are also underestimated, and the 95% coverage probability ranges from 71% to 82%. The underestimation may be due to the approximation of the integration with Gaussian Hermite quadrature and the Taylor series approximation of the score function. In contrast, the longitudinal outcome in the simulation has a symmetric distribution, which may result in a better approximation using Gaussian Hermite quadrature.

Our simulation suggests that the proposed model and our computational method can provide reliable inference for the parameters in the longitudinal model. In some applications, for example the Scleroderma lung study, the primary research interest is the treatment effect on the longitudinal outcome, and the underestimation of the parameters in the time-to-event model may not be a big concern.

5 Conclusion

This paper presents a joint model approach to analyze longitudinal outcomes in the presence of non-ignorable missing data due to both intermittent and monotone missingness. In the SLS clinical trial example, the intermittent missing data are due to patients' missed visits, and the monotone missing data are from patient dropout due to death or treatment failure. We use a logistic model to describe the intermittent missingness pattern and a Cox proportional hazards model for the dropout due to death or treatment failure. These probabilistic missing models reflect the fact that a subject may come back after missed visits, but will drop out of the study if death or treatment failure occurs. Our proposed latent random effects model can easily be extended to models with multiple longitudinal outcomes, multiple intermittent missing outcome processes, and a competing risks model for multiple causes of dropout. Alternatively, multi-state models are a viable approach to handle both intermittent and monotone missingness simultaneously33. In a multi-state model, one can specify observed visits, missed visits, death and treatment failure as different states, where missed visits are transient states and death and treatment failure are absorbing states.

We use a t-distribution model to reduce the impact of outlying observations25,34 in the longitudinal model. It is possible to treat the degrees of freedom as a model parameter and estimate it from the data. One can also extend our model to use a multivariate t distribution to model the outcome and random effects simultaneously35. However, this may involve intensive computation and require a larger sample size for stable estimation, and the improvement in efficiency might be limited. The Huber function36,37 has also been used for robust likelihood inference in longitudinal data analysis, and our joint model can easily accommodate it.

We develop approximation methods, based on adaptive Gaussian Hermite quadrature and a Taylor expansion, to calculate the maximum likelihood estimates while reducing the computational burden and improving the approximation accuracy. It will be interesting to compare our approach with other numerical integration methods, such as the Laplace approximation12,13, other adaptive Gaussian Hermite quadrature methods16,17, and Monte Carlo integration20. We observe satisfactory performance for the estimates in the longitudinal model and the logistic regression model. Methods to improve the estimation in the time-to-event model warrant further research.

In this paper, standard errors are approximated by the inverse of the empirical Fisher information of the log profile likelihood, with the nonparametric baseline hazard λ0 being profiled out. In our simulation study, this approximation appears to perform satisfactorily for the standard error estimation in the longitudinal model. However, underestimation of the standard errors is observed for the parameters in the logistic regression model and the proportional hazards model, as suggested in the literature30. Because the outcome of interest in the Scleroderma study is the longitudinal FVC, our method can provide valid inference; the logistic model and proportional hazards model are incorporated to correct the potential bias due to non-ignorable missing data. Other examples of joint models with the primary interest mostly on longitudinal outcomes include the NINDS rt-PA stroke trial, with the main outcome of modified Rankin Scale38, and a breast cancer clinical trial with a quality of life outcome3. On the other hand, when the focus is on the survival outcome, the validity of the standard errors approximated by the inverse of the empirical Fisher information may be questionable; examples include an HIV clinical trial with patient survival as the outcome9 and the NYU Women's Health Study with the onset of breast cancer as the outcome39. The bootstrap method has been recommended to obtain standard error estimates30. However, the bootstrap method increases the computational complexity. Other less computationally intensive standard error estimation procedures for semi-parametric models40,41 may warrant further investigation.

In contrast to the Laplace approximation, adaptive quadrature allows the error of the integration approximation to be controlled through the number of nodes. We use a fixed 3-node adaptive quadrature for each dimension of integration in this paper. It is possible to determine the number of nodes based on some integration precision criterion. For example, the GLIMMIX procedure in SAS software determines the number of nodes by evaluating the log likelihood at the starting values at a successively larger number of nodes until a tolerance is met. However, with high dimensional integration, different integrands and different dimensions (random effects) may require different numbers of nodes to reach the same precision. In our example, the integration related to the random effect of the Cox proportional hazards model may require more nodes than the integration related to the random effect of the longitudinal model to achieve better efficiency in computation. Further research is needed to determine the optimal number of nodes for adaptive quadrature and to investigate the relationship between the number of nodes and the bias in parameter estimation.
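The idea of increasing the number of nodes until a tolerance is met can be sketched for a single scalar random effect as follows. This is only an illustration of the general strategy and is not the GLIMMIX implementation; the function names, the stopping rule (relative change between successive rules) and the two test integrands are assumptions. The integrand exp(−exp(b)) is a hypothetical stand-in for a survival-type term, and b² for a smooth polynomial term.

```python
import numpy as np

def gh_expectation(f, mean, sd, n_nodes):
    """Gauss-Hermite approximation of E[f(b)] for scalar b ~ N(mean, sd^2)."""
    x, w = np.polynomial.hermite.hermgauss(n_nodes)
    return np.sum(w * f(mean + np.sqrt(2.0) * sd * x)) / np.sqrt(np.pi)

def choose_n_nodes(f, mean, sd, rel_tol=1e-3, start=3, max_nodes=61, step=2):
    """Grow the rule until two successive approximations agree to rel_tol."""
    previous = gh_expectation(f, mean, sd, start)
    for n_nodes in range(start + step, max_nodes + 1, step):
        current = gh_expectation(f, mean, sd, n_nodes)
        if abs(current - previous) <= rel_tol * abs(previous):
            return n_nodes, current
        previous = current
    raise RuntimeError("tolerance not reached; increase max_nodes")

sd = np.sqrt(2.0)  # random-effect standard deviation used in the simulation

# Polynomial integrand: already exact with 3 nodes, so the search stops early.
n_poly, val_poly = choose_n_nodes(lambda b: b ** 2, 0.0, sd)

# Survival-type integrand (hypothetical): needs a finer rule for the same tolerance.
n_surv, val_surv = choose_n_nodes(lambda b: np.exp(-np.exp(b)), 0.0, sd)
```

In a multi-dimensional rule the same search could be run separately per dimension, which is one way to allow the time-to-event component to use more nodes than the longitudinal component.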

Finally, the latent random effects model provides inference for non-ignorable missingness with correlated random effects. However, the non-ignorable assumption is untestable, so non-ignorable missing data analysis must be carried out with great care. Fortunately, in real-life data analysis, one can often bring in knowledge and assumptions that are external to the data to provide evidence for the missing mechanism. This also underscores the importance of collecting information on the causes of missing data in order to determine the possible missing mechanism. In our Scleroderma example, many missing data are due to lack of treatment efficacy (for example, death or treatment failure), and non-ignorability seems to be a reasonable assumption. In general, local sensitivity analysis can be performed to evaluate the effects of non-ignorability42,43.

Acknowledgements

This work is partially supported by NIH grants UL1TR000124 and UL1RR033176.

Appendix

Here we provide the details of the proposed computational algorithm for the EM steps used to calculate the maximum likelihood estimates. Let bi be the (vector of) random effects, and θ(k) be the (vector of) estimated parameters in the kth EM step.

1. Start with an initial value θ(0).

2. For the kth EM step:

   – E step 1: calculate E(bi|θ(k)) and Var(bi|θ(k)) with Gaussian Hermite quadrature. The importance distribution is set to be the normal distribution with mean E(bi|θ(k−1)) and variance Var(bi|θ(k−1)), which are readily available from the (k−1)th EM step.

   – E step 2: approximate the expectation E(A(bi)|θ(k)) using the second-order Taylor series expansion around Ebi,

   $$E\{A(b_i)\} \approx A(Eb_i) + \tfrac{1}{2}\, A''(Eb_i)\, Vb_i ,$$

   where Ebi = E(bi) is the expectation of bi, V bi = E(bi − Ebi)² is the variance of bi, and A″(b) is the second partial derivative of A(b).

   – M step: use the approximate E(A(bi)) to solve the conditional score equation and obtain θ(k+1).

3. Repeat step 2 until convergence.

Remarks:

'E step 1' reduces the computational effort of finding the importance function in the adaptive Gaussian Hermite quadrature; we use the same importance function for all integrations, and the parameters of the importance function are readily available from the previous iteration.

'E step 2' uses the Taylor series approximation to reduce the integrations needed to those for the first two moments of the random effects. The moment estimates are also re-used in the next iteration to construct the importance distribution in 'E step 1'.
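The two E steps can be sketched for a single scalar random effect as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the unnormalized conditional density of bi is a toy normal kernel, A(b) = exp(b) is a hypothetical stand-in for a likelihood term, and the function names are invented for this example.

```python
import numpy as np

def adaptive_gh_moments(unnorm_density, prev_mean, prev_var, n_nodes=3):
    """E step 1 (sketch): conditional mean and variance of a scalar random effect.

    The Gauss-Hermite nodes are centered and scaled with the moments from the
    previous EM iteration, i.e. the importance distribution N(prev_mean, prev_var).
    """
    x, w = np.polynomial.hermite.hermgauss(n_nodes)
    scale = np.sqrt(2.0 * prev_var)
    b = prev_mean + scale * x
    # Quadrature weights for integrating unnorm_density over b:
    # w * exp(x^2) undoes the Hermite weight, `scale` is the Jacobian.
    iw = w * np.exp(x ** 2) * scale * unnorm_density(b)
    iw = iw / iw.sum()                  # the normalizing constant cancels
    mean = np.sum(iw * b)
    var = np.sum(iw * (b - mean) ** 2)
    return mean, var

def taylor_expectation(A, d2A, mean, var):
    """E step 2 (sketch): E[A(b)] ~ A(Eb) + 0.5 * A''(Eb) * Vb."""
    return A(mean) + 0.5 * d2A(mean) * var

# Toy conditional density of b, proportional to N(1, 0.25) (an assumption).
def toy_density(b):
    return np.exp(-2.0 * (b - 1.0) ** 2)

# Moments carried over from a hypothetical previous EM iteration.
mean_k, var_k = adaptive_gh_moments(toy_density, prev_mean=0.9, prev_var=0.3)

# Hypothetical likelihood term A(b) = exp(b); its second derivative is also exp(b).
approx_EA = taylor_expectation(np.exp, np.exp, mean_k, var_k)
```

Only the first two moments require quadrature; every other expectation in the E step is then obtained from `taylor_expectation` without further integration, and `(mean_k, var_k)` would be passed on as `prev_mean` and `prev_var` in the next iteration.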

Contributor Information

Chi-hong Tseng, Department of Medicine, School of Medicine, University of California at Los Angeles, ctseng@mednet.ucla.edu.

Robert Elashoff, Department of Biomathematics and Biostatistics, School of Medicine and Public Health, University of California at Los Angeles, relashoff@biomath.ucla.edu.

Ning Li, Biostatistics Core, Samuel Oschin Comprehensive Cancer Institute, Cedars-Sinai Medical Center, Los Angeles, CA 90048, USA, ning.li@cshs.org.

Gang Li, Department of Biostatistics, School of Public Health, University of California at Los Angeles, vli@ucla.edu.

References

- 1. Little RJA, Rubin DB. Statistical Analysis with Missing Data. 2nd ed. Wiley; New York: 2002.

- 2. Ibrahim J, Molenberghs G. Missing data methods in longitudinal studies: a review. TEST. 2009;18:1–43. doi: 10.1007/s11749-009-0138-x.

- 3. Ibrahim JG, Chen MH, Lipsitz SR. Missing responses in generalized linear mixed models when the missing data mechanism is nonignorable. Biometrika. 2001;88:551–564.

- 4. Troxel AB, Lipsitz S, Harrington D. Marginal models for the analysis of longitudinal measurements with non-ignorable non-monotone missing data. Biometrika. 1998;85:661–672.

- 5. Parzen M, Lipsitz S, Fitzmaurice G, Ibrahim J, Troxel A. Pseudo-likelihood methods for longitudinal binary data with non-ignorable missing responses and covariates. Statistics in Medicine. 2006;25:2784–2796. doi: 10.1002/sim.2435.

- 6. Hogan JW, Roy J, Korkontzelou C. Biostatistics tutorial: Handling dropout in longitudinal data. Statistics in Medicine. 2004;23:1455–1497. doi: 10.1002/sim.1728.

- 7. Faucett CL, Thomas DC. Simultaneously modelling censored survival data and repeatedly measured covariates: A Gibbs sampling approach. Statistics in Medicine. 1996;15:1663–1685. doi: 10.1002/(SICI)1097-0258(19960815)15:15<1663::AID-SIM294>3.0.CO;2-1.

- 8. Brown ER, Ibrahim JG. A Bayesian semiparametric joint hierarchical model for longitudinal and survival data. Biometrics. 2003;59:221–228. doi: 10.1111/1541-0420.00028.

- 9. Wulfsohn MS, Tsiatis AA. A joint model for survival and longitudinal data measured with error. Biometrics. 1997;53:330–339.

- 10. Breslow NE, Clayton DG. Approximate inference in generalized linear mixed models. Journal of the American Statistical Association. 1993;88:9–25.

- 11. Ripatti S, Palmgren J. Estimation of multivariate frailty models using penalized partial likelihood. Biometrics. 2000;56:1016–1022. doi: 10.1111/j.0006-341x.2000.01016.x.

- 12. Ye W, Lin X, Taylor JMG. A penalized likelihood approach to joint modeling of longitudinal measurements and time-to-event data. Statistics and Its Interface. 2008;1:33–45.

- 13. Rizopoulos D, Verbeke G, Lesaffre E. Fully exponential Laplace approximations for the joint modeling of survival and longitudinal data. Journal of the Royal Statistical Society, Series B. 2009;71:637–654.

- 14. Rodríguez G, Goldman N. An assessment of estimation procedures for multilevel models with binary responses. Journal of the Royal Statistical Society, Series A. 1995;158:73–89.

- 15. Raudenbush SW, Yang M, Yosef M. Maximum likelihood for generalized linear models with nested random effects via high-order, multivariate Laplace approximation. Journal of Computational and Graphical Statistics. 2000;9:141–157.

- 16. Liu Q, Pierce DA. A note on Gauss-Hermite quadrature. Biometrika. 1994;81:624–629.

- 17. Pinheiro J, Bates DM. Approximations to the log-likelihood function in the nonlinear mixed-effects model. Journal of Computational and Graphical Statistics. 1995;4:12–35.

- 18. Heiss F, Winschel V. Likelihood approximation by numerical integration on sparse grids. Journal of Econometrics. 2008;144:62–80.

- 19. Elashoff RM, Li G, Li N. A joint model for longitudinal measurements and survival data in the presence of multiple failure types. Biometrics. 2008;64:762–771. doi: 10.1111/j.1541-0420.2007.00952.x.

- 20. Wu L, Hu XJ, Wu H. Joint inference for nonlinear mixed-effects models and time to event at the presence of missing data. Biostatistics. 2008;9:308–320. doi: 10.1093/biostatistics/kxm029.

- 21. McCulloch C. Joint modeling of mixed outcome types using latent variables. Statistical Methods in Medical Research. 2008;17:53–73. doi: 10.1177/0962280207081240.

- 22. Naylor JC, Smith AFM. Econometric illustrations of novel numerical integration strategies for Bayesian inference. Journal of Econometrics. 1988;38:103–125.

- 23. Rabe-Hesketh S, Skrondal A, Pickles A. Maximum likelihood estimation of limited and discrete dependent variable models with nested random effects. Journal of Econometrics. 2005;128:301–323.

- 24. Tierney L, Kadane JB. Accurate approximations for posterior moments and marginal densities. Journal of the American Statistical Association. 1986;81:82–86.

- 25. Lange KL, Little RJA, Taylor JMG. Robust statistical modeling using the t-distribution. Journal of the American Statistical Association. 1989;84:881–896.

- 26. Tukey J. One degree of freedom for non-additivity. Biometrics. 1949;5:232–242.

- 27. Tierney L, Kass RE, Kadane JB. Fully exponential Laplace approximations to expectations and variances of nonpositive functions. Journal of the American Statistical Association. 1989;84:710–716.

- 28. Lin H, Liu D, Zhou X. A correlated random-effects model for normal longitudinal data with nonignorable missingness. Statistics in Medicine. 2010;29:236–247. doi: 10.1002/sim.3760.

- 29. Louis TA. Finding the observed information matrix when using the EM algorithm. Journal of the Royal Statistical Society, Series B. 1982;44:226–233.

- 30. Hsieh F, Tseng YK, Wang JL. Joint modeling of survival and longitudinal data: likelihood approach revisited. Biometrics. 2006;62:1037–1043. doi: 10.1111/j.1541-0420.2006.00570.x.

- 31. Tashkin DP, Elashoff R, Clements PJ, Goldin J, Roth MD, Furst DE, et al. Cyclophosphamide versus placebo in scleroderma lung disease. N Engl J Med. 2006;354:2655–2666. doi: 10.1056/NEJMoa055120.

- 32. Roth MD, Tseng CH, Clements PJ, Furst DE, Tashkin DP, Goldin JG, et al. Predicting treatment outcomes and responder subsets in scleroderma-related interstitial lung disease. Arthritis & Rheumatism. 2011. doi: 10.1002/art.30438. http://dx.doi.org/10.1002/art.30438.

- 33. Albert PS. A transitional model for longitudinal binary data subject to nonignorable missing data. Biometrics. 2000;56:602–608. doi: 10.1111/j.0006-341x.2000.00602.x.

- 34. Li N, Elashoff RM, Li G. Robust joint modeling of longitudinal measurements and competing risks failure time data. Biometrical Journal. 2009;51:19–30. doi: 10.1002/bimj.200810491.

- 35. Pinheiro J, Liu C, Wu YN. Efficient algorithms for robust estimation in linear mixed-effects models using the multivariate t-distribution. Journal of Computational and Graphical Statistics. 2001;10:249–276.

- 36. Huggins R. A robust approach to the analysis of repeated measures. Biometrics. 1993;49:715–720.

- 37. Gill P. A robust mixed linear model analysis for longitudinal data. Statistics in Medicine. 2000;19:975–987. doi: 10.1002/(sici)1097-0258(20000415)19:7<975::aid-sim381>3.0.co;2-9.

- 38. Li N, Elashoff RM, Li G, Saver J. Joint modeling of longitudinal ordinal data and competing risks survival times and analysis of the NINDS rt-PA stroke trial. Statistics in Medicine. 2010;29:546–557. doi: 10.1002/sim.3798.

- 39. Tseng CH, Liu M. Joint modeling of survival data and longitudinal measurements under nested case-control sampling. Statistics in Biopharmaceutical Research. 2009;1:415–423.

- 40. Chen HY, Little RJA. Proportional hazards regression with missing covariates. Journal of the American Statistical Association. 1999;94:896–908.

- 41. Jin Z, Ying Z, Wei LJ. A simple resampling method by perturbing the minimand. Biometrika. 2001;88:381–390.

- 42. Verbeke G, Molenberghs G, Thijs H, Lesaffre E, Kenward M. Sensitivity analysis for nonrandom dropout: A local influence approach. Biometrics. 2001;57:7–14. doi: 10.1111/j.0006-341x.2001.00007.x.

- 43. Ma G, Troxel A, Heitjan D. An index of local sensitivity to non-ignorable drop-out in longitudinal modeling. Statistics in Medicine. 2005;24:2129–2150. doi: 10.1002/sim.2107.