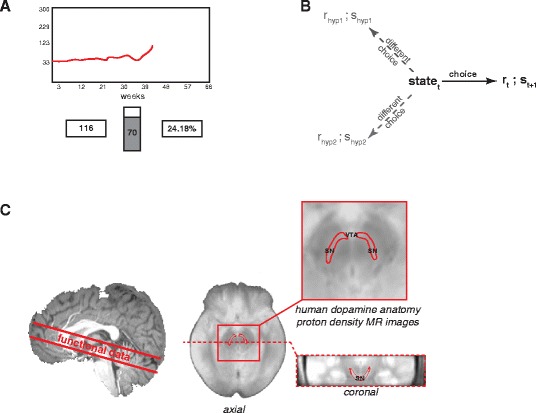

Fig. 1.

a Participants began the task with $100 (running total, left box). On each trial, they had unlimited time to place a bet (slider bar) into a market (graph). After a variable delay of 4–10 s, the change in market value (trace), the amount of money gained or lost (right box), and the overall earnings (left box) were updated. Trials were separated by an intertrial interval of 4–10 s. b Information from the trial outcomes enabled computation of experiential and counterfactual learning signals. A learner’s actual experience is indicated in black. The actual choice transitions the learner from a given state, sₜ, to a new state, sₜ₊₁, while the participant receives a reward, rₜ. Hypothetical experience is indicated in gray. Information can also be gained from this hypothetical experience, that is, from what would have happened if different choices had been made. Experiential learning signals (black) correspond to TD errors, which reflect the difference between rewards received and expected. Counterfactual learning signals (gray) correspond to fictive errors, operationalized as the difference between the best possible outcome and the actual outcome. c Functional data were restricted to a slab centered on the mesencephalon and tilted to cover the substantia nigra and ventral tegmental area.
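The two learning signals in panel b can be illustrated with a minimal Python sketch. It is not the authors' implementation. The function names, the standard TD(0) form with a discount factor `gamma`, the treatment of the market change as a fractional return, and the assumption that bets range from 0% to 100% of the running total are all illustrative choices not stated in the caption.

```python
def td_error(reward, value_current, value_next, gamma=1.0):
    """Experiential signal, assuming the standard TD(0) form:
    reward received plus discounted next-state value, minus the current estimate."""
    return reward + gamma * value_next - value_current


def fictive_error(bet_fraction, market_return, total):
    """Counterfactual signal: best possible outcome minus the actual outcome."""
    # Actual gain or loss from the bet that was placed.
    actual = bet_fraction * market_return * total
    # Assumption: bets span 0% to 100%, so the best bet in hindsight is
    # everything when the market rises and nothing when it falls.
    best_fraction = 1.0 if market_return > 0 else 0.0
    best = best_fraction * market_return * total
    return best - actual


if __name__ == "__main__":
    # Hypothetical trial: half of a $100 total is bet and the market rises by 25%.
    print(td_error(reward=12.5, value_current=5.0, value_next=0.0))
    print(fictive_error(bet_fraction=0.5, market_return=0.25, total=100.0))
```

Under these assumptions the fictive error is zero only when the bet was already the best one available, and it grows with the money left on the table after a rise or lost after a fall.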