Abstract

Auditory spatial attention serves important functions in auditory source separation and selection. Although auditory spatial attention mechanisms have been generally investigated, the neural substrates encoding spatial information acted on by attention have not been identified in the human neocortex. We performed functional magnetic resonance imaging experiments to identify cortical regions that support auditory spatial attention and to test 2 hypotheses regarding the coding of auditory spatial attention: 1) auditory spatial attention might recruit the visuospatial maps of the intraparietal sulcus (IPS) to create multimodal spatial attention maps; 2) auditory spatial information might be encoded without explicit cortical maps. We mapped visuotopic IPS regions in individual subjects and measured auditory spatial attention effects within these regions of interest. Contrary to the multimodal map hypothesis, we observed that auditory spatial attentional modulations spared the visuotopic maps of IPS; the parietal regions activated by auditory attention lacked map structure. However, multivoxel pattern analysis revealed that the superior temporal gyrus and the supramarginal gyrus contained significant information about the direction of spatial attention. These findings support the hypothesis that auditory spatial information is coded without a cortical map representation. Our findings suggest that audiospatial and visuospatial attention utilize distinctly different spatial coding schemes.

Keywords: audition, intraparietal sulcus, multivoxel pattern analysis, spatial maps, visuotopy

Introduction

In real world listening environments, sound sources combine acoustically before reaching our ears. Spatial attention plays a critical role in helping us to segregate and select the acoustic target of interest while ignoring interference in the mixture of sound (Best et al. 2006; Kidd et al. 2005; Shinn-Cunningham 2008). It is well established that visual spatial attention is supported by a dorsal attention network involving multiple frontal and parietal cortical areas (e.g. Corbetta 1998; Colby and Goldberg 1999; Kastner and Ungerleider 2000). Human and nonhuman primate studies of audition have revealed a spatial processing or “where” subsystem in posterior temporal, posterior parietal, and lateral prefrontal cortex (e.g. Bushara et al. 1999; Rauschecker and Tian 2000; Alain et al. 2001; Maeder et al. 2001; Tian et al. 2001; Arnott et al. 2004; Rämä et al. 2004; Murray et al. 2006; De Santis et al. 2007). This parallels the well documented where pathway in the visual cortex, and the parietal and frontal cortical areas appear similar between modalities. Several studies have suggested that the dorsal fronto-parietal network may function as a supramodal spatial processing system (Macaluso et al. 2003; Krumbholz et al. 2009; Tark and Curtis 2009; Smith et al. 2010; but see Bushara et al. 1999). However, there are important differences between audition and vision that raise issues for this supramodal account. In vision, spatial information is extracted directly from the spatial layout of the retina and visual spatial cortical maps have been observed in no fewer than 20 distinct visual cortical areas (e.g. Engel et al. 1994; Sereno et al. 1995, 2001; Tootell et al. 1998; Schluppeck et al. 2005; Silver et al. 2005; Swisher et al. 2007; Wandell et al. 2007; Arcaro et al. 2009; Silver and Kastner 2009). 
In contrast to visual coding of space, auditory spatial information must be computed from signal differences between the 2 cochleae, largely from interaural time differences (ITDs) and interaural level differences (Rayleigh 1907). Although auditory spatial maps are well documented in the owl (e.g. Carr and Konishi 1990), it is not clear that mammalian cortex contains any auditory spatial map representations. Single neuron electrophysiology in the auditory cortex reveals broad spatial tuning; however, spatial tuning is narrower when the animal is engaged in a spatial task than that in nonspatial tasks (Lee and Middlebrooks 2011). Moreover, although neural encoding of source identity is weakly modulated by source location for a source presented alone, spatial modulation is enhanced by the presence of a competing source (Maddox et al. 2012). These findings raise the possibility that auditory spatial tuning is stronger during tasks that demand spatial attention due to the presence of a competing sound source, and motivated us to investigate auditory cortical responses during such an auditory task.

If auditory spatial tuning is coarse in early auditory cortex, then how are auditory spatial representations selected by attention or supported in working memory? We consider 2 hypotheses. First, that auditory spatial information merges with visual spatial information within the visual cortical maps of the where pathway; that is, the visual maps provide the spatial backbone for supramodal spatial maps. Prior work has suggested that posterior parietal cortex plays a central role in multisensory integration (e.g. Stein et al. 1989; Andersen et al. 1997; Macaluso et al. 2003; Molholm et al. 2006) and specifically in auditory spatial attention (Wu et al. 2007; Hill and Miller 2009; Smith et al. 2010). The medial bank of the intraparietal sulcus (IPS) contains 5 distinct visuotopic maps, IPS0, IPS1, IPS2, IPS3, and IPS4, that can be driven directly by visual stimulation (Swisher et al. 2007) and are recruited during visual spatial attention and visual short-term memory (Sereno et al. 2001; Schluppeck et al. 2005; Silver et al. 2005; Konen and Kastner 2008; Silver and Kastner 2009; Sheremata et al. 2010). These regions are key to dorsal stream or where pathway processing in vision. An alternate hypothesis is that auditory spatial information is coded only coarsely via an opponent-process mechanism without explicit maps (von Bekesy 1930; van Bergeijk 1962; Colburn and Latimer 1978; McAlpine 2005; Magezi and Krumbholz 2010). These 2 hypotheses need not be mutually exclusive, as auditory spatial attention could be supported differently within different brain regions.

We tested the hypothesis that auditory spatial attention recruits the visuospatial maps of the IPS to create multimodal spatial attention maps by first mapping visuotopic IPS regions in individual subjects and then measuring auditory spatial attention effects within these regions of interest when listeners were attending to different spatial locations. Contrary to the multimodal map hypothesis, we observed that auditory spatial attentional modulations spared the visuotopic maps (IPS0–4) of IPS. Instead, auditory attention drove parietal regions (lateral IPS, latIPS and anterior IPS, antIPS) that lacked map structure. To test the “no map” hypothesis, we performed univariate and multivariate analyses of auditory spatial attention activation. Although the standard univariate analysis failed to reveal any structures that encoded auditory spatial information, multivariate or multivoxel pattern analyses (MVPA) revealed that the superior temporal gyrus (STG) and the supramarginal gyrus (SMG) contained significant information about the direction of spatial attention. These findings support the hypothesis that auditory spatial information is coded without a cortical map representation. Our findings suggest that audiospatial and visuospatial attention utilize distinctly different spatial coding schemes.

Materials and Methods

Subjects

Nine healthy right-handed subjects (5 females), ages 18–30 years old, were recruited from the BU community. Before participating in the experiments, subjects gave written informed consent, as overseen by the Charles River Campus Institutional Review Board and Massachusetts General Hospital, Partners Community Healthcare. All subjects reported normal or corrected-to-normal vision and normal hearing thresholds. Handedness was evaluated using the Edinburgh Handedness Inventory (Oldfield 1971).

MRI Scans

Each subject participated in a minimum of 3 sets of scans across multiple sessions and separate behavior sessions. First, high-resolution structural scans were collected to support anatomical reconstruction of the cortical hemispheric surfaces. Secondly, polar-angle visuotopic mapping functional magnetic resonance imaging (fMRI) scans were performed to identify visuotopic areas in the parietal and occipital areas (Swisher et al. 2007). Finally, auditory spatial attention fMRI scans were conducted in which subjects performed a 1-back digit memory task, varying the direction of the attended stream from block to block.

Imaging was performed at the Martinos Center for Biomedical Imaging at Massachusetts General Hospital on a 3-T Siemens Tim Trio scanner with 12-channel (auditory scans only) or 32-channel (all other scans) matrix coils. A high-resolution (1.0 × 1.0 × 1.3 mm) magnetization-prepared rapid gradient-echo sampling structural scan was acquired for each subject. The cortical surface of each hemisphere was computationally reconstructed from this anatomical volume using FreeSurfer software (Dale et al. 1999; Fischl, Sereno, Dale 1999; Fischl, Sereno, Tootell, et al. 1999; Fischl et al. 2001). To register functional data to the 3-dimensional reconstruction, T1-weighted echo-planar images were acquired using the same slice prescription as in the T2*-weighted functional scans. For functional studies, T2*-weighted gradient-echo, echo-planar images were collected using 34 3-mm slices, oriented axially (echo time [TE] 30 ms, repetition time [TR] 2100 ms, in-plane resolution 3.125 × 3.125 mm) for auditory functional scans and using 42 3-mm axial slices with a 2600-ms TR for visual functional scans. Functional images were collected using prospective acquisition correction to automatically correct for subject head motion (Thesen et al. 2000).

Analysis of fMRI Data

Functional data were analyzed using Freesurfer/FS-FAST (CorTech, Inc.) with an emphasis on localizing distinct cortical areas on individual subjects' cortical surfaces. All subject data were intensity normalized (Cox and Hyde 1997) and spatially smoothed with a 3-mm full-width half-maximum Gaussian kernel.

Analysis of the auditory spatial attention task scans used standard procedures and Freesurfer FS-FAST software. Scan time series were analyzed voxel by voxel using a general linear model (GLM) whose regressors matched the time course of the experimental conditions. The regressors were convolved with a canonical hemodynamic response function (Cohen 1997) before fitting; this canonical response was modeled by a γ function with a delay of δ = 2.25 s and a decay time constant of τ = 1.25 s. A contrast between different conditions produced t-statistics for each voxel for each subject, which were converted into significance values and projected onto the subject's reconstructed cortical surface. For region of interest (ROI) analysis, the percentage signal change data were extracted (from all time points of a block) and averaged across all runs for each condition. Since attentional cueing and/or switching of the attentional focus can induce activation specific to the reorienting (e.g. Shomstein and Yantis 2006), the time points of the cue period were excluded by assigning them to a regressor of no interest. The percent signal change measure was defined relative to the average activation level during the fixation period. Random effects group analysis was performed on these ROI data extracted for each subject. In addition, random effects group analysis was also performed using surface-based averaging techniques. In this group analysis, regressor beta weights produced (by individual subject GLMs) at the first level were projected onto the individual subjects' reconstructed cortical surfaces. These individual surfaces were then morphed onto a common spherical coordinate system (Fischl, Sereno, Dale 1999; Fischl et al. 2001) and were coregistered based on sulci and gyri structures. Parameter estimates were then combined at the second level (across all subjects) via t-tests (Buchel et al. 1998).
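As a rough illustration of how a task regressor of this kind can be built, the sketch below convolves a block boxcar with a gamma-shaped response using the delay and time constant given above. The functional form `((t - δ)/τ)^α · exp(-(t - δ)/τ)` with exponent α = 2 is an assumption made for illustration, as are the function names and the toy block layout; none of this is taken from the analysis software itself.

```python
import math

def gamma_hrf(t, delta=2.25, tau=1.25, alpha=2.0):
    """Gamma-shaped response at time t (s); zero until the delay delta has passed.
    alpha = 2 is an assumed exponent, not a value stated in the text."""
    if t <= delta:
        return 0.0
    x = (t - delta) / tau
    return (x ** alpha) * math.exp(-x)

def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k in range(min(n + 1, len(kernel))):
            acc += signal[n - k] * kernel[k]
        out.append(acc)
    return out

TR = 2.1  # seconds per acquisition in the auditory scans

# Toy layout: 2 TRs of cue (4.2 s, excluded from the task regressor),
# 8 TRs of task (16.8 s), then 10 TRs of padding for illustration.
boxcar = [0, 0] + [1] * 8 + [0] * 10
kernel = [gamma_hrf(i * TR) for i in range(12)]
regressor = convolve(boxcar, kernel)
```

With this assumed form the response peaks at δ + ατ = 4.75 s after onset, so the modeled signal lags the block by roughly 2 TRs.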

Visuotopic Mapping and ROI Definitions

Phase-encoded retinotopic mapping (Engel et al. 1994; Sereno et al. 1995; DeYoe et al. 1996) used a temporally periodic stimulus to induce changes in neural activity at the same frequency in voxels biased to respond to stimulation of a particular region of the visual field. Subjects viewed a multicolored flashing checkerboard background with either a wedge rotating around fixation on polar angle mapping scans, or with an expanding or contracting annulus on eccentricity mapping scans. The periodicity for both types of stimuli was 55.47 s (12 cycles/665.6 s). We alternated between clockwise and counterclockwise rotation (or expansion and contraction) on all runs. Subjects were instructed to fixate a small (8 arc min) dot in the center of the screen and to respond with a button press whenever the fixation point dimmed. Dimming events occurred at random intervals throughout the scan, every 4.5 s, on average (for further details, see Swisher et al. 2007). The purpose of this task was to help subjects maintain central fixation and alertness. These mapping methods routinely identify more than a dozen visual field representations in occipital, posterior parietal, and posterior temporal cortex. For the purpose of the current project, visuotopic mapping was used to identify early visual cortices V1, V2, and V3, and the visuotopically mapped areas on the medial bank of the IPS: IPS0 (previously known as V7), IPS1, IPS2, IPS3, and IPS4. These methods did not consistently yield visuotopic maps in the frontal cortex. A separate scan session was dedicated to retinotopic mapping in each subject. Four polar angle scans were performed (256 TRs or 11 min 5.6 s, per run), using clockwise and counterclockwise stimuli. It has been reported that combining attention and retinotopy, relative to retinotopy alone, can enhance the reliability of the maps in IPS0–2, but does not alter the location of those maps (Bressler and Silver 2010).
Here, we obtained robust maps in IPS0–4 using retinotopy alone, as previously reported (Swisher et al. 2007).
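One common way to read out a phase-encoded response is to take the Fourier component of each voxel's time series at the stimulus frequency; its phase then indexes the preferred polar angle. The sketch below shows that step for a single synthetic voxel, using the run length (256 TRs) and cycle count (12) given above. The function name and the synthetic signal are hypothetical, and the actual pipeline (including how clockwise and counterclockwise runs were combined) is not specified here.

```python
import cmath
import math

N_TR = 256     # time points per polar-angle run (from the text)
N_CYCLES = 12  # stimulus cycles per run (from the text)

def response_at_stimulus_frequency(ts, n_cycles=N_CYCLES):
    """Return (amplitude, phase) of the Fourier component at n_cycles per run.
    Phase is reported as the lag phi of a response cos(2*pi*n_cycles*i/N - phi)."""
    n = len(ts)
    mean = sum(ts) / n
    coef = sum((x - mean) * cmath.exp(-2j * math.pi * n_cycles * i / n)
               for i, x in enumerate(ts))
    amplitude = 2 * abs(coef) / n
    phase = (-cmath.phase(coef)) % (2 * math.pi)
    return amplitude, phase

# Synthetic voxel: unit-amplitude response lagging the stimulus by 1 radian.
true_lag = 1.0
voxel = [100.0 + math.cos(2 * math.pi * N_CYCLES * i / N_TR - true_lag)
         for i in range(N_TR)]
amp, lag = response_at_stimulus_frequency(voxel)
```

Because the run contains a whole number of cycles, the recovered amplitude and lag match the synthetic values up to floating-point error.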

Two sets of parietal ROIs were defined for each hemisphere of each subject on their reconstructed cortical mesh. The first set of ROIs was defined on retinotopic criteria corresponding to the underlying visual maps. These ROIs mapped each quadrant of early retinotopic visual cortex (V1–V3). Visuotopic maps in the parietal cortex, IPS0–4, were defined by phase reversals of each hemifield map in areas constrained to show significant angular response (P < 0.05). A second set of parietal ROIs were defined by excluding the visuotopic IPS0–4 regions from the larger anatomically defined parcellation of IPS that is automatically generated for each subject's hemisphere by the Freesurfer cortical reconstruction tools (Desikan et al. 2006). Two nonvisuotopic IPS regions were defined: latIPS, which lies laterally adjacent to the IPS0–4 regions that lie along the medial bank of IPS, and antIPS, which extends from the anterior border of IPS4. These ROIs were used in the comparison of percent signal change and percent voxel overlap in the auditory spatial attention task.

Multivoxel Pattern Analysis

Functional data (previously used in the individual GLM analyses) from selected ROIs were analyzed using MVPA (Cox and Savoy 2003; Greenberg et al. 2010; Haynes and Rees 2005; Kamitani and Tong 2005; Kriegeskorte et al. 2006; Norman et al. 2006; Swisher et al. 2010). MVPA has proven to be a sensitive method for fMRI data analysis, able to detect information encoded in the local patterns of brain activity that is too weak to be detectable by standard univariate analysis. In MVPA, activity patterns extracted in all voxels in each ROI are used as input to a linear support vector machine (SVM) classifier (Cortes and Vapnik 1995). A leave-one-run-out (LORO) approach was employed for cross-validation. Specifically, in each SVM realization, the classifier was trained on data from all but one of the functional runs. The resulting classifier was then tested on the independent run that was left out when building the classifier. This method was repeated for each run. Classifier accuracies across all the testing runs were pooled to compute an average classification rate when training and testing data were constrained to differ.
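The leave-one-run-out loop described above can be sketched as follows. To keep the example self-contained, the linear SVM is replaced by a simple nearest-class-mean classifier; this is a stand-in, not the classifier used in the study, and only the cross-validation structure is meant to carry over. All function names and the toy data are hypothetical.

```python
def nearest_mean_fit(X, y):
    """Stand-in classifier: store the mean pattern of each class."""
    means = {}
    for label in set(y):
        rows = [x for x, lab in zip(X, y) if lab == label]
        means[label] = [sum(col) / len(rows) for col in zip(*rows)]
    return means

def nearest_mean_predict(means, x):
    """Assign x to the class whose mean pattern is closest (squared distance)."""
    def dist2(m):
        return sum((a - b) ** 2 for a, b in zip(x, m))
    return min(means, key=lambda label: dist2(means[label]))

def loro_accuracy(patterns, labels, runs):
    """Train on all runs but one, test on the held-out run, pool over all folds."""
    correct = 0
    for held_out in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != held_out]
        test = [i for i, r in enumerate(runs) if r == held_out]
        model = nearest_mean_fit([patterns[i] for i in train],
                                 [labels[i] for i in train])
        correct += sum(nearest_mean_predict(model, patterns[i]) == labels[i]
                       for i in test)
    return correct / len(labels)

# Toy ROI with 2 voxels, 3 runs, one attend-left and one attend-right pattern per run.
patterns = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.2, 1.1], [1.1, -0.1], [0.1, 0.8]]
labels = ["left", "right", "left", "right", "left", "right"]
runs = [0, 0, 1, 1, 2, 2]
accuracy = loro_accuracy(patterns, labels, runs)
```

Splitting by run rather than by individual sample keeps training and test data from sharing a scan, which is the point of the procedure.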

To assess whether the prediction accuracy is statistically significant, we first arcsine transformed the classification accuracy for each subject ROI. As the individual subject classification accuracies are binomially distributed, this procedure leads to nearly-normal and homoskedastic scores in the transformed space (Freeman and Tukey 1950). Then for each ROI we performed a 1-sample t-test between the arcsine-transformed classifier predictions and the arcsine transform of the assumed chance level (50%) to see if the means were significantly different from chance. These analyses were repeated for each of the ROIs.
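A minimal sketch of this test is given below. It assumes the plain variance-stabilizing form arcsin(√p) for the transform; the exact variant used is not stated above, so treat that choice, the function names, and the example accuracies as illustrative only.

```python
import math
from statistics import mean, stdev

def arcsine_transform(p):
    """Assumed form of the transform: arcsin of the square root of a proportion."""
    return math.asin(math.sqrt(p))

def one_sample_t(accuracies, chance=0.5):
    """t statistic and degrees of freedom for transformed accuracies vs. transformed chance."""
    scores = [arcsine_transform(a) for a in accuracies]
    mu0 = arcsine_transform(chance)
    n = len(scores)
    t = (mean(scores) - mu0) / (stdev(scores) / math.sqrt(n))
    return t, n - 1

# Hypothetical per-subject accuracies for one ROI (9 subjects); not data from the study.
example = [0.55, 0.60, 0.58, 0.62, 0.57, 0.61, 0.59, 0.56, 0.60]
t_stat, df = one_sample_t(example)
```

Under this form, the 50% chance level maps to π/4 in the transformed space, and the resulting t statistic is compared against a t distribution with n − 1 degrees of freedom.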

Support Vector Machine

SVMs (Cortes and Vapnik 1995) are maximum margin binary linear classifiers that determine to which of 2 classes a given input belongs. Specifically, an SVM treats each input sample as a vector in a high-dimensional space and constructs a hyperplane that separates the input samples such that samples from the 2 classes fall on opposite sides of the hyperplane. While there may be an infinite number of hyperplanes that linearly separate the 2 classes, the SVM selects the hyperplane that has the largest margin (distance to the nearest training sample of either class), and is thus optimal in its “robustness” for separating novel samples that are similar to, but distinct from, the samples used to train the classifier. We implemented a 2-class, linear SVM classifier using libsvm libraries (see http://www.csie.ntu.edu.tw/~cjlin/libsvm/) in Matlab (Mathworks, Natick, MA, USA).
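The notion of margin can be made concrete with a small calculation. The sketch below does not train an SVM; it only measures the margin of two hand-picked separating hyperplanes on toy data, to show why one would be preferred over the other. All names and values are hypothetical.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

def margin(w, b, X, y):
    """Smallest distance from any sample to the hyperplane, counted as
    negative if that sample falls on the wrong side (labels are +1 / -1)."""
    return min(label * signed_distance(w, b, x) for x, label in zip(X, y))

# Toy 2-D samples: class -1 near x = 0, class +1 near x = 3.
X = [[0.0, 0.0], [0.0, 1.0], [3.0, 0.0], [3.0, 1.0]]
y = [-1, -1, 1, 1]

margin_centered = margin([1.0, 0.0], -1.5, X, y)  # boundary at x = 1.5
margin_offset = margin([1.0, 0.0], -0.5, X, y)    # boundary at x = 0.5
```

Both hyperplanes separate the toy classes, but the centered one leaves three times the distance to the nearest sample, which is the property the maximum margin criterion selects for.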

Retinotopic Stimulus Presentation and Behavioral Response Data Collection

A MacBook Pro laptop running Python (www.python.org) with VisionEgg (www.visionegg.org) software libraries (Straw 2008) was used to drive visual stimulus presentation and to collect subject responses. Visual stimuli were projected (via liquid crystal display) onto a rear projection screen (Da-Plex, Da-Lite Screen) viewed via an adjustable mirror placed in the magnet bore. The screen lies at a viewing distance of approximately 90 cm and the projected images extend across a visual angle of roughly 15° radius horizontally and 12° radius vertically. Auditory stimuli were generated and presented using Matlab software (Mathworks, Inc., Natick, MA, USA) with Psychophysics Toolbox (www.psychtoolbox.org) through an audio system (MR-confon, www.mr-confon.de) that included a control unit, audio amplifier, DA converter, and MR-compatible headphones/earmuffs. Inside the MR scanner, subject responses were collected using an MR-compatible button box.

Auditory Stimuli and Procedures

Two simultaneous, but spatially separated auditory streams (see below), were presented to the subjects during fMRI scanning. Subjects were instructed to attend to either the left or right stream (depending on the block) and to perform a 1-back task. Stimuli were spoken digits in both the attended and distractor stream. To investigate whether auditory spatial attention engages parietal visuotopically defined maps, no visual stimulus was provided during the auditory task except for a central fixation point. Subjects practiced each task 1 day prior to the scan until reaching 80% performance in the baseline condition (see below).

Auditory streams were generated from monaural recordings of 8 digits (1–9, excluding the 2-syllable digit 7) spoken by a single male talker. Each digit was sampled at 44.1 kHz and had a duration of 500 ms, windowed with cosine-squared onset and offset ramps to reduce spectral splatter and other artifacts (30-ms ramp time). The digits were then monotonized to 84 Hz (using Praat software; see http://www.praat.org/) and normalized to have equal root mean square energy. Each monaural digit recording was used to generate a binaural, lateralized signal in which the signals at the 2 ears were identical, except for a delay between the ears (delay = 700 μs, leading either right or left to produce ITDs of ±700 μs, respectively, with no interaural level difference). This manipulation resulted in lateralized percepts, with the digits perceived as coming from either right or left of the median plane, depending on the sign of the ITD.
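The ITD manipulation can be sketched as below, using the sampling rate, duration, ramp time, and delay given above. Two simplifications are assumptions of this sketch: the delay is rounded to a whole number of samples (31 samples, about 703 μs, rather than exactly 700 μs), and an 84-Hz tone stands in for a recorded digit. Function names are hypothetical.

```python
import math

FS = 44100      # sampling rate in Hz (from the text)
ITD_S = 700e-6  # interaural time difference in seconds (from the text)
DUR_S = 0.500   # digit duration in seconds
RAMP_S = 0.030  # onset/offset ramp time in seconds

def cos2_window(n_samples, n_ramp):
    """Flat window with squared-sinusoid onset and offset ramps."""
    w = [1.0] * n_samples
    for i in range(n_ramp):
        gain = math.sin(0.5 * math.pi * i / n_ramp) ** 2
        w[i] = gain
        w[n_samples - 1 - i] = gain
    return w

def lateralize(mono, itd_samples, lead):
    """Return (left, right) channels; the leading ear gets the undelayed copy.
    Levels are identical at the two ears, so there is no interaural level difference."""
    pad = [0.0] * itd_samples
    undelayed = list(mono) + pad
    delayed = pad + list(mono)
    return (undelayed, delayed) if lead == "left" else (delayed, undelayed)

n = round(DUR_S * FS)
n_ramp = round(RAMP_S * FS)
itd_samples = round(ITD_S * FS)  # whole-sample approximation of the 700-us delay
window = cos2_window(n, n_ramp)
mono = [window[i] * math.sin(2 * math.pi * 84 * i / FS) for i in range(n)]  # stand-in for a digit
left, right = lateralize(mono, itd_samples, lead="left")
```

An exact 700-μs delay would need fractional-delay interpolation or a frequency-domain phase shift, which this sketch omits.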

The choice of using ITDs only, rather than including all spatial cues that arise in anechoic settings, is likely to have reduced the realism and the “externalization” of the perceived streams. However, ITDs alone are very effective in allowing listeners to direct spatial auditory attention; realism of the simulated scene does not have a large impact on task performance (e.g. see Shinn-Cunningham et al. 2005). Moreover, when listeners are told to ignore spatial cues and to divide attention between 2 streams coming from different directions, they cannot; instead, the more distinct the 2 streams' spatial attributes (including simple ITDs alone), the more strongly listeners are forced to attend to 1 stream at the expense of the other (Best et al. 2007; Ihlefeld and Shinn-Cunningham 2008a, 2008b, 2008c; Best et al. 2010; see review in Shinn-Cunningham 2008). Thus, in cases like those used in the current experiment, where there is a relatively large separation of streams, spatially directed attention is necessary to separate the 2 streams perceptually and to perform the task, and is very effective at suppressing responses to whatever stream is not in the attentional foreground at a given moment.

Auditory stimuli were delivered through MR-compatible headphones (MR-Confon GmbH, Magdeburg, Germany). The earmuffs from the headphones, together with insert earplugs, provided 50 dB attenuation of external noise, including scanner noise.

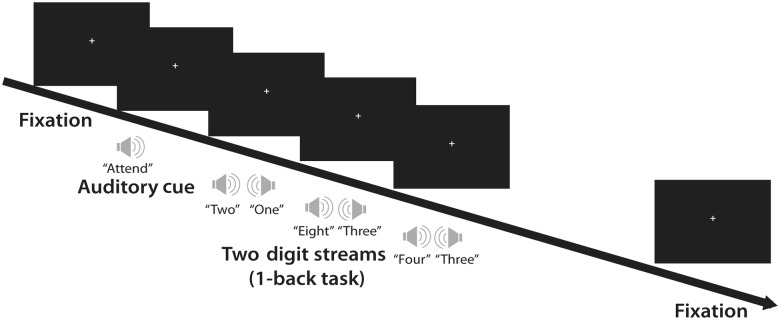

Subjects performed a “1-back” memory judgment as part of an auditory spatial attention task (Fig. 1). Two competing digit streams, lateralized by ITD, were presented simultaneously through headphones. Subjects were instructed to maintain fixation on a center cross throughout the experiment. During attend conditions, subjects attended to either the left or the right digit stream (target stream) and performed a 1-back task. Prior to the start of each given block, an auditory cue came on during a 4.2-s cue period. The cue was a spoken word “attend,” spatially localized to either the left or the right in the same way described above. During the 16.8-s trial period that followed, the 2 streams of digits completely overlapped temporally. The subjects were instructed to press a button with their right index fingers each time they heard a digit repeat in the attended target stream. In the baseline condition, subjects were instructed to listen to the identical stimuli without directing their attention to either stream. The auditory cue was a spoken word “passive” presented diotically (with zero ITD, identical at the 2 ears, so that it appeared to come from the center). Note that this baseline control task was not simply passive listening; in order to match the motor responses in the attend condition, subjects were instructed to press a button when they detected the presence of a 500-Hz pure tone. The tone was diotic and came on at random intervals. During the experiment, each digit was presented for 500 ms, followed by 200 ms of silent interval, resulting in a digit presentation rate of 700 ms per digit. In each run, 8 attend blocks alternated with 8 baseline blocks, giving rise to a total scan time of 5 min and 36 s or 160 acquisitions (TR = 2.1 s). Each subject performed between 6 and 8 runs on the scan day. Behavioral data were summarized by reaction times (RT) and d'. 
RTs were calculated from the time elapsed between the end of the auditory presentation of a target digit and the time of the button response indicating that subjects detected a repeated target. Only hit trials were included in calculating RTs. d' was defined by d' = Z(hit rate) − Z(false alarm rate), where the function Z(p) is the inverse of the Gaussian cumulative distribution function. Hits represent trials with 1-back matches that the subject correctly reported within 700 ms; misses represent the 1-back match trials that the subject failed to report. False alarms represent the times that the subject reported a match when one did not occur in the stimuli. The sensitivity index measures the distance between the means of the target and the noise distributions in units of standard deviation, factoring out any decision bias in the response patterns.
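The sensitivity index defined above can be computed directly from trial counts. The sketch below uses the standard normal inverse CDF from the Python standard library; the function name, the way the false alarm rate is normalized by non-match trials, and the example counts are assumptions for illustration, not values from the study.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = Z(hit rate) - Z(false alarm rate), with Z the inverse Gaussian CDF.
    Rates of exactly 0 or 1 are not handled here (inv_cdf raises an error);
    a correction for such cases would be an additional assumption."""
    z = NormalDist().inv_cdf
    hit_rate = hits / (hits + misses)
    fa_rate = false_alarms / (false_alarms + correct_rejections)
    return z(hit_rate) - z(fa_rate)

# Hypothetical counts: 40 hits, 10 misses, 5 false alarms, 95 correct rejections.
example_d = d_prime(40, 10, 5, 95)
```

Because both rates pass through the same Z transform, a uniform shift in response criterion moves both terms together and largely cancels, which is why d' is described as factoring out decision bias.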


Figure 1.

Trial structure in the auditory spatial attention task. Each block started with an auditory cue word: Attend, spatially localized to the right or the left, for the attend conditions and passive, lacking spatial cues (zero ITD), for the baseline condition. The spatial location of the cue words indicated the target location. The subjects then attended to only the target stream and performed a 1-back task. Baseline condition was a tone detection task with a nonspatial tone embedded in the same stimuli.

Results

Behavioral Performance

During the task, subjects performed well when attending to either spatial location (d' = 2.19 for attend left and d' = 2.43 for attend right). Although prior studies have reported small hemispheric asymmetries (e.g. Krumbholz et al. 2007; Teshiba et al. 2012), performance in the attend-left and attend-right conditions was not statistically different either in sensitivity index (t(8) = 1.96, P = 0.07) or in RTs (t(8) = 0.16, P > 0.1). These results confirmed that the task was attentionally demanding, yet within the subjects' abilities, independent of the direction of attention.

Effects of Sustained Auditory Attention

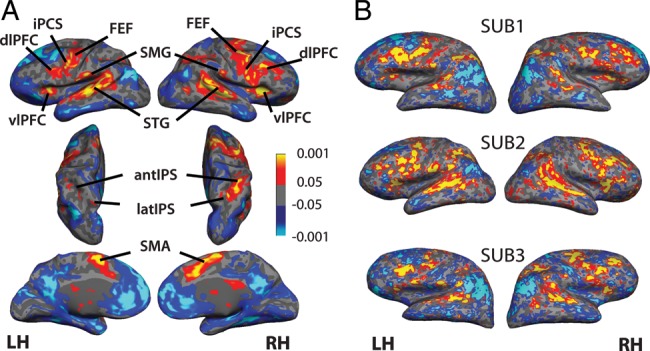

To reveal modulatory effects of sustained auditory spatial attention, we excluded time points during the auditory cue period and analyzed only the ongoing times during which subjects spatially directed attention. We combined the regressors from attend-left and attend-right conditions and contrasted them with that from the baseline condition. Activation of attention modulation in the “attend versus baseline” contrast was summarized in statistical maps on the group-average level (Fig. 2A) and in individual subjects (Fig. 2B). For illustrative purposes, the significance maps are displayed with a lenient threshold of P < 0.05 (uncorrected) in order to detect weak activation.

Figure 2.

Statistical maps reflecting activation in the auditory spatial attention task. (A) The lateral, dorsal, and medial view of group-averaged (N = 9) auditory spatial attention task activation. Areas more activated during attend trials compared with baseline condition (P < 0.05) include FEF, inferior precentral sulcus (iPCS), dorsal and ventral lateral prefrontal cortex (dlPFC and vlPFC), SMG, STG, the lateral and anterior intraparietal sulcus (latIPS and antIPS), and the SMAs. (B) Activation from the auditory spatial attention task with the same contrast on 3 individual subjects.

While expected effects of sustained spatial attention were observed in the dorsal attention network mediating voluntary orientation of spatial attention in the visual system, several areas outside the dorsal attention network were also recruited during the auditory spatial attention task. The largest and most robust activation was observed in the bilateral STG, where the primary and secondary auditory cortex is located. Activation was strongest in the STG, but also extended into posterior portions of superior temporal sulcus in many subjects. In the parietal cortex, we observed a swath of activation along the lateral bank of IPS that extended anteriorly to the point where IPS merges with the postcentral sulcus. Visuotopic maps in IPS primarily lie along the medial bank of IPS (Swisher et al. 2007). Visual examination revealed that the IPS activation present during the auditory spatial attention task lies lateral and inferior to the visuotopic maps (Fig. 3).

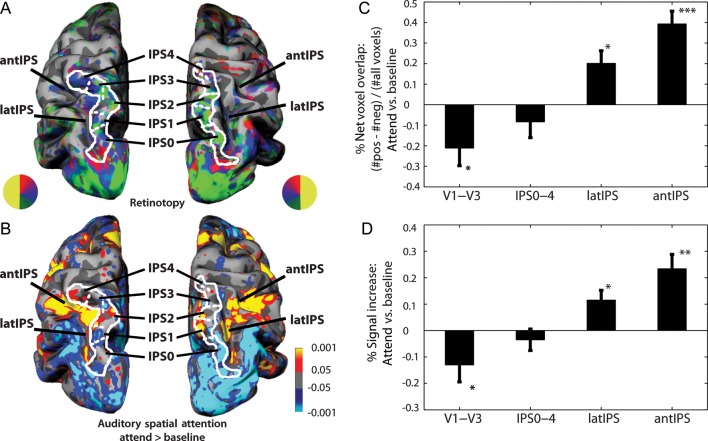

Figure 3.

Visuospatial mapping and auditory spatial attention activation in the parietal lobe in an individual subject (A and B) and in all subjects (C and D). (A) Retinotopic (polar angle) mapping reveals areas IPS0, IPS1, IPS2, IPS3, and IPS4 along the medial bank of IPS. Nonvisuotopic IPS areas (latIPS and antIPS) are anatomically identified. (B) Auditory spatial attention task engaged nonvisuotopic IPS areas, but not visuotopic mapping areas. (C) Proportion of net voxels activated ((# of voxels positively activated − # of voxels deactivated)/# of ROI voxels) during attend trials. (D) Percent signal increase relative to baseline for each ROI.

Another locus of activation in the parietal lobe lies in the SMG. Although one must be cautious when comparing activity across ROIs, we note that the activity of SMG was more robust and consistent in the left hemisphere than in the right hemisphere in individual subjects (Fig. 2B): 5 of 9 subjects showed greater activation in the left SMG, and no subjects exhibited greater SMG activity in the right hemisphere. The functional role of this portion of the SMG was not explored here; however, other studies have reported a key substrate of verbal processing and the phonological loop within the SMG (Jacquemot et al. 2003; Nieto-Castanon et al. 2003). The hemispheric asymmetry observed was consistent with this hypothesis and likely reflected the verbal nature of the stimuli used. Nevertheless, our results support the view that phonological segregation in our task relied on auditory spatial attention. In the frontal lobe, we identified 5 distinct regions of activation, 2 of which are key components of the visual dorsal attention network. These areas included the frontal eye field (FEF), located at the intersection between the posterior end of the caudal superior frontal sulcus and the precentral sulcus; and the inferior precentral sulcus (iPCS), located at the junction of the precentral sulcus and the inferior frontal sulcus. Activation was also observed in the dorsolateral prefrontal cortex (dlPFC), anterior to iPCS, and ventrolateral prefrontal cortex (vlPFC). On the medial wall of the prefrontal cortex, we observed bilateral activation in the supplementary motor area (SMA). The auditory spatial attention task activation in the parietal and frontal lobes spread into larger areas on the right hemisphere than on the left hemisphere, consistent with a general right hemisphere bias in top-down attention (Mesulam 1981). The attend versus baseline contrast revealed consistent deactivation across the angular gyrus, dorsomedial prefrontal cortex, posterior cingulate cortex, and the temporal pole.
These areas collectively form the default mode network (e.g. Shulman et al. 1997; Raichle et al. 2001), which routinely exhibits greater activity during passive rest than during task performance. In addition to the above-mentioned areas, we also observed unilateral activation from superior FEF and lateral occipital complex on the right hemisphere. As activation from these areas was less robust, they were not included in the subsequent ROI analyses.

Overlap Between Auditory Attention and Visuotopic IPS

To test the hypothesis that auditory spatial attention may recruit parietal visuotopically mapped areas (IPS0–4) to create multimodal spatial attention maps, we mapped visuotopic IPS regions and contrasted them with the observed auditory spatial attention modulation within our ROIs in individual subjects. Figure 3A,B summarizes this comparison for an individual subject. In Figure 3A, the contralateral visuotopic maps are evident in the occipital lobe extending dorsally along the medial bank of IPS. The solid white lines mark the boundaries of 5 distinct parietal maps (IPS0–4) and the dashed white lines mark the reversals separating each individual map that correspond to the vertical meridians. We defined 2 additional regions of interest on the lateral bank of IPS that were not visuotopically mapped, latIPS and antIPS. We employed these ROIs to analyze fMRI activation during the auditory spatial attention task in individual subject hemispheres (Fig. 3B). Auditory attention largely spared IPS0–4, which contained visuotopic maps of the contralateral hemifield. In contrast, lateral and anterior to these areas, activation from the auditory task ran along the fundus of IPS, merging with the postcentral sulcus at the lateral/inferior bank of the anterior branch of IPS. Furthermore, early visual cortices (V1–V3) in the occipital lobe were deactivated during auditory attention. The finding that auditory spatial attention activation spared IPS0–4 contradicts the hypothesis that the visuotopically defined maps in IPS are multimodal, as auditory attention clearly did not modulate activity in IPS0–4. Conversely, areas that did show significant response to auditory spatial attention (latIPS and antIPS) lacked visuotopic maps.

To quantitatively assess the extent of auditory attention modulation in the parietal areas, we performed ROI analyses for: 1) a single ROI combining IPS0–4, 2) latIPS, and 3) antIPS. We also included a combined V1–V3 ROI. For each of these 4 ROIs, we calculated 2 measures of attentional effects: The net voxel overlap and the percent signal change.

Net voxel overlap was calculated in each ROI in 2 stages: First, by counting the number of voxels within the ROI that were significantly activated (P < 0.05, uncorrected) during auditory attention and subtracting the number of voxels significantly deactivated within the same ROI; then, this net voxel count was divided by the total number of voxels within the ROI to yield the net voxel overlap fraction. Figure 3C summarizes the net voxel overlap measure in each of the 4 ROIs in all subjects. Of the 4 areas, V1–V3 and IPS0–4 contain visuotopic maps, while latIPS and antIPS are nonvisuotopic areas. No significant differences in activity between the 2 hemispheres were observed (F1,8 = 0.35, P = 0.57), nor was there a significant interaction between hemisphere and ROIs (F3,24 = 1.91, P = 0.15). We therefore combined ROIs from the 2 hemispheres. Consistent with the pattern observed qualitatively in individual subjects (Fig. 2A,B), the net voxel overlap in visuotopic IPS0–4 exhibited a trend toward a larger number of deactivated than activated voxels (t(8) = 2.06, P = 0.07). The nonvisuotopic latIPS and antIPS, on the other hand, were significantly recruited by auditory attention (t(8) = 3.29, P < 0.05 and t(8) = 6.34, P < 0.001, respectively). The occipital visual cortex V1–V3 had a significantly greater number of deactivated than activated voxels (t(8) = 2.79, P < 0.05).
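The two-stage calculation described above can be sketched in a few lines. This is a minimal illustration, not the analysis code used in the study: the function name `net_voxel_overlap`, its arguments, and the use of the sign of a per-voxel t-value to separate activation from deactivation are all assumptions made for the example.

```python
def net_voxel_overlap(t_values, p_values, alpha=0.05):
    """Net voxel overlap fraction for one ROI (illustrative sketch).

    t_values: per-voxel statistic for attend > baseline; its sign is assumed
              to indicate activation (positive) or deactivation (negative).
    p_values: per-voxel uncorrected P values, same order as t_values.
    alpha:    significance threshold (0.05, uncorrected, as in the text).
    """
    if len(t_values) != len(p_values):
        raise ValueError("t_values and p_values must have the same length")
    if not t_values:
        raise ValueError("the ROI must contain at least one voxel")

    # Stage 1: count significantly activated and deactivated voxels.
    activated = sum(1 for t, p in zip(t_values, p_values) if p < alpha and t > 0)
    deactivated = sum(1 for t, p in zip(t_values, p_values) if p < alpha and t < 0)

    # Stage 2: divide the net count by the total number of voxels in the ROI.
    return (activated - deactivated) / len(t_values)


# Hypothetical 4-voxel ROI: 2 activated, 1 deactivated, 1 not significant.
example = net_voxel_overlap([2.5, -3.0, 0.1, 2.2], [0.01, 0.004, 0.9, 0.03])
```

A value near +1 means that almost every voxel in the ROI was significantly activated, a value near −1 means that almost every voxel was deactivated, and a value near 0 means that activation and deactivation roughly balance or that few voxels passed threshold.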

The second measure of attention effects in these ROIs, percent signal change, measures the averaged strength of modulation, contributed from all voxels within an ROI. No significant differences in activity between the 2 hemispheres were observed (F1,8 = 2.39, P > 0.1), although there was a significant interaction between hemisphere and ROIs (F3,24 = 3.17, P = 0.04). Post hoc t-tests revealed no hemispheric asymmetries within the ROIs (V1–V3: t(16) = 0.657, P = 0.52; IPS0–4: t(16) = 0.104, P = 0.92; latIPS: t(16) = 0.030, P = 0.98; antIPS: t(16) = 1.160, P = 0.263). Figure 3D, which summarizes the percent signal change in all 4 ROIs, reveals a pattern similar to the net voxel overlap. A significantly greater proportion of the voxels in the occipital visual cortex (V1–V3) were deactivated by auditory attention than activated (t(8) = 2.38, P < 0.05); the visuotopic IPS0–4 showed a trend toward more deactivated voxels (t(8) = 1.91, P = 0.09); and nonvisuotopic latIPS and antIPS had significantly more activated than deactivated voxels (t(8) = 3.14, P < 0.05 and t(8) = 4.49, P < 0.01, respectively). To further investigate visuotopic IPS, we repeated this analysis with ROIs for each visuotopic IPS ROI, IPS0–4. Similar to the results for the combined visuotopic IPS0–4 ROI, none of these visuotopically mapped areas exhibited significant activation. IPS0 trended toward deactivation (t(8) = 2.07, P = 0.072); IPS1, IPS3, and IPS4 had nonsignificant negative mean activation (P > 0.4) and IPS2 had nonsignificant positive activation (t(8) = 0.34, P = 0.74).

Together, these findings indicate, contrary to the multimodal map hypothesis, that auditory spatial attention does not utilize parietal visuotopic maps. In addition, areas that were recruited did not contain map-like organizations. This suggests that auditory space encoding is maintained independently of the parietal visual maps. These results also suggest a functional distinction between the visuotopically organized IPS0–4 and its neighboring areas lacking map structure.

Effects of the Direction of Attention on BOLD Amplitude

To test sensitivity to the direction of auditory attention (left vs. right) in the areas modulated by auditory attention, we contrasted the 2 attention conditions: Attending the left stream versus attending the right stream. Group-averaged maps (not shown) failed to reveal, even at the lenient threshold of 0.05 uncorrected, any clusters of activation for either attend-right > attend-left or the opposite contrast. We took 2 steps to analyze the directional data in greater detail: Univariate ROI and multivariate ROI analyses. In addition to the occipital (V1–V3) and intraparietal (IPS0–4, latIPS, antIPS) ROIs defined in the prior section, we also defined ROIs for the regions that exhibited auditory spatial attentional modulation (P < 0.05) in individual subjects, using the contrast attend > baseline. Note that the contrast used to define the ROIs is orthogonal to the contrast of attend left versus attend right that is analyzed using these ROIs. Specifically, we identified STG (where the primary and secondary auditory cortex is located), FEF, iPCS, dorso- and ventrolateral prefrontal cortex (dlPFC and vlPFC), SMA, and SMG.

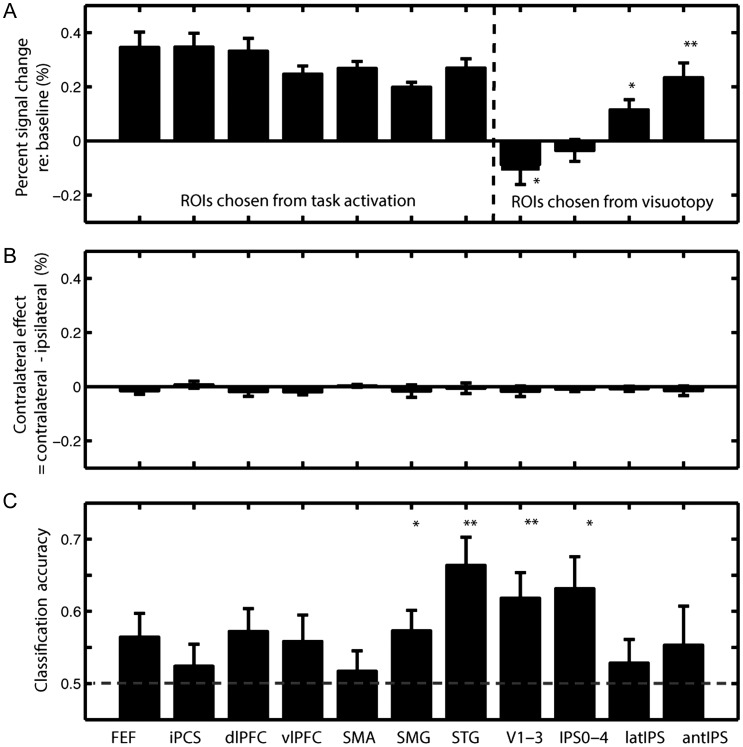

Figure 4A summarizes the overall percent signal change in all the ROIs analyzed (overall signal change for visuotopically defined areas is replotted from Fig. 3D). Significance of the attention modulation in the attentionally defined ROIs was not assessed since, by definition (attend > baseline), these ROIs would be significant; such analysis would be statistically circular. For each ROI in each hemisphere, we calculated the percent signal changes when subjects were attending to the ipsilateral stream and when they were attending to the contralateral stream (both with respect to the baseline condition). The contralateral effect in each ROI in each hemisphere is defined as the contralateral signal change minus the ipsilateral signal change. We then combined and averaged the contralateral effect for the same ROI across the 2 hemispheres. Figure 4B reveals that although auditory spatial attention modulates activity, none of the areas we examined showed a significant contralateral effect (P > 0.5, for all ROIs). This result was obtained both for each ROI in each hemisphere and for the combined hemisphere ROIs. This observation suggests that, unlike what occurs for visual processing, there is no strong map-like topography of spatial receptive fields in these areas.
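As a concrete reading of the contralateral effect defined above, the following sketch computes it for one ROI from 4 percent-signal-change values. The function and argument names are hypothetical and the numbers are invented for illustration; only the definition (contralateral minus ipsilateral, then averaged across hemispheres) comes from the text.

```python
def contralateral_effect(lh_attend_left, lh_attend_right,
                         rh_attend_left, rh_attend_right):
    """Hemisphere-averaged contralateral effect for one ROI (illustrative).

    Each argument is a percent signal change relative to baseline.
    For the left hemisphere (LH), the right stream is contralateral;
    for the right hemisphere (RH), the left stream is contralateral.
    """
    lh_effect = lh_attend_right - lh_attend_left   # contra minus ipsi, LH
    rh_effect = rh_attend_left - rh_attend_right   # contra minus ipsi, RH
    return (lh_effect + rh_effect) / 2.0


# Invented values: both hemispheres respond slightly more to the
# contralateral stream, giving a positive effect of about 0.2.
effect = contralateral_effect(0.4, 0.6, 0.5, 0.3)
```

A positive value indicates stronger modulation when attention is directed to the contralateral stream; the result reported above corresponds to values statistically indistinguishable from zero in every ROI.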

Figure 4.

Overall signal change modulated by spatial attention and attended hemifield, and multivoxel pattern classification predicting the direction of attention. (A) Percent signal increase in attend blocks compared with baseline in selected ROIs. The activation from frontal and temporal ROIs is shown for illustration, not statistical purposes, since such analysis would be circular. (B) Contralateral effect (attend contralateral hemifield − attend ipsilateral hemifield). (C) Multivoxel pattern classification accuracy in predicting left versus right attend blocks in the same ROIs.

These univariate results may suggest that the direction of auditory spatial attention does not produce any significant change in blood oxygen level-dependent (BOLD) activity in these areas. However, some areas may still be sensitive to the direction of attention: The direction that the subject is attending may be encoded on a finer spatial scale in the auditory system that does not cause large-scale changes in BOLD amplitude averaged over the whole ROI. Univariate ROI analysis alone cannot reveal differences in fine-scale patterns of activation. To further investigate if any spatial information is encoded in these selected ROIs in finer detail, we performed MVPA.

Decoding Direction of Attention Using Multivoxel Pattern Classification

Although univariate GLM analysis did not reveal left versus right sensitivity in the average magnitude of BOLD activation in any of the selected ROIs (Fig. 4B), MVPA revealed that a subset of these areas do encode information about the direction of auditory attention. The dashed horizontal line in Figure 4C indicates the 50% chance level of correct classification, given the 2-class (left vs. right) classification problem. In all of the selected ROIs, MVPA predicted the direction of auditory attention of the blocks >50% of the time (Fig. 4C). T-tests (see Materials and Methods for arcsine transformation details) revealed that classification accuracy for 4 of the ROIs (SMG, P < 0.05; STG, P < 0.01; IPS0–4, P < 0.01; and V1–V3, P < 0.05) was significantly above chance level, indicating that the classifier was able to use the patterns of activity in multiple voxels to determine the direction of attention. In addition, 2 frontal areas, FEF and dlPFC, trended toward providing classification accuracies better than chance (P(FEF) = 0.09; P(dlPFC) = 0.05). These 2 areas may also contain information about auditory spatial attention since both areas have been reported to contain visual maps for attention, working memory, or saccade tasks (Saygin and Sereno 2008; Silver and Kastner 2009) and show persistent activity in spatial working memory tasks (Bruce and Goldberg 1985; Chafee and Goldman-Rakic 1998; Curtis and D'Esposito 2003).
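To make the logic of ROI-based decoding concrete, the sketch below runs a cross-validated 2-class classification on toy voxel patterns. It is not the pipeline used in the study: the analysis reported here used an SVM classifier, whereas this example substitutes a much simpler nearest-centroid classifier so that it needs only the Python standard library. The leave-one-run-out scheme, the function names, and the toy data are likewise assumptions for illustration.

```python
from collections import defaultdict
from math import dist


def centroid(patterns):
    """Voxel-wise mean of a list of equal-length activation patterns."""
    n = len(patterns)
    return [sum(column) / n for column in zip(*patterns)]


def leave_one_run_out_accuracy(samples):
    """Cross-validated accuracy of a nearest-centroid decoder.

    samples: list of (run_id, label, pattern) tuples, where pattern is a
             list of voxel responses for one block within the ROI.
    Each run is held out once; class centroids are estimated from the
    remaining runs and used to label the held-out blocks.
    """
    runs = sorted({run for run, _, _ in samples})
    correct = 0
    for held_out in runs:
        # Training set: all blocks from the other runs, grouped by label.
        by_label = defaultdict(list)
        for run, label, pattern in samples:
            if run != held_out:
                by_label[label].append(pattern)
        centroids = {label: centroid(p) for label, p in by_label.items()}

        # Test set: assign each held-out block to the nearest centroid.
        for run, label, pattern in samples:
            if run == held_out:
                predicted = min(centroids,
                                key=lambda c: dist(pattern, centroids[c]))
                correct += predicted == label
    return correct / len(samples)


# Toy data: 3 runs, one "left" and one "right" block each, 3 voxels.
toy_samples = [
    (1, "left", [1.0, 0.0, 0.2]), (1, "right", [0.0, 1.0, 0.1]),
    (2, "left", [0.9, 0.1, 0.3]), (2, "right", [0.1, 0.9, 0.0]),
    (3, "left", [1.1, -0.1, 0.2]), (3, "right", [-0.1, 1.2, 0.1]),
]
accuracy = leave_one_run_out_accuracy(toy_samples)
```

In this toy case the mean response across voxels is similar for the two conditions, yet the pattern across voxels separates them, which is the situation MVPA is designed to detect. With 2 balanced classes, chance accuracy is 0.5, matching the dashed line in Figure 4C.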

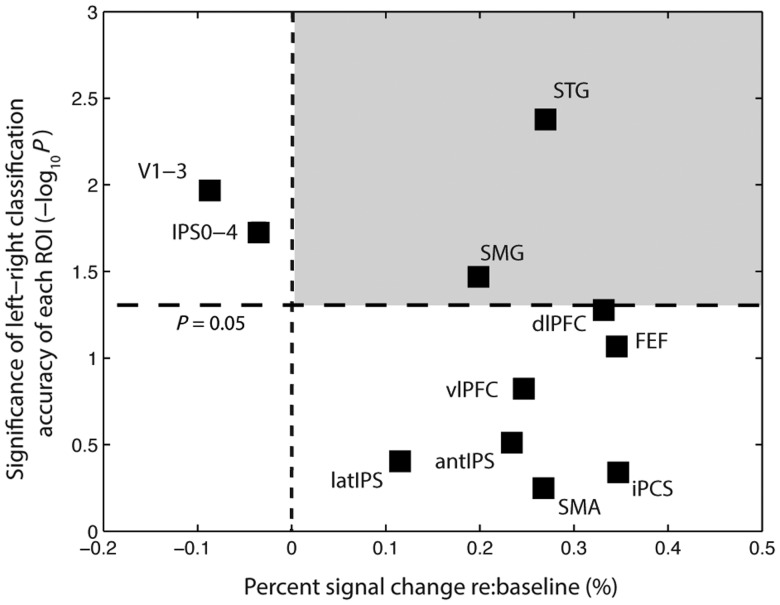

Figure 5 plots the change in average BOLD signal during the auditory spatial attention task (attention > baseline) in a given ROI against the performance of an SVM MVPA classifier (left vs. right) trained on the activation patterns in that ROI. By definition, areas with increased activity during the auditory spatial attention task are displayed to the right of the vertical dashed line, while areas to the left of the line exhibited a decrease in BOLD magnitude. Along the y-axis, areas above the horizontal dashed line are those whose MVPA analysis predicted the direction of spatial auditory attention significantly better than chance. Areas of greatest interest are those that both exhibit net activation during the task and exhibit information about the direction of auditory attention. Only 2 areas, STG and SMG, both had greater activity during spatial auditory attention and predicted the direction of auditory spatial attention.

Figure 5.

Comparison of auditory task activation (spatial attention vs. baseline) and classification accuracy (left vs. right) across ROIs. Activity in voxels from STG and SMG is enhanced by auditory spatial attention in a direction-specific manner. In contrast, activity in V1–V3 and IPS0–4 is suppressed in a direction-specific manner.

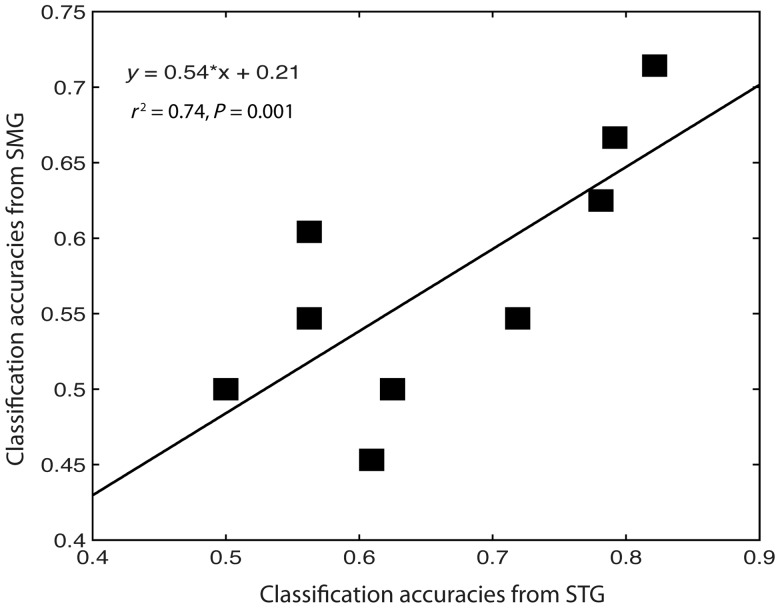

To analyze whether the spatial information in STG and SMG is related, we calculated the correlation of the decoding accuracies in these 2 areas for individual subjects. We hypothesized that if the spatial information in STG and SMG was shared between the 2 areas or came from a common source, then subjects who showed high classification accuracy using voxels in STG would also show high classification accuracy using voxels within SMG. We found that, looking across individual subjects, the classification accuracy for the direction of attention using voxels in STG and the accuracy using voxels in SMG are significantly correlated (Fig. 6, r² = 0.74, t(7) = 4.459, P = 0.001). This result suggests that the spatial information in the 2 auditory areas STG and SMG was either shared directly or came from a common source, since subjects whose STG strongly encodes the direction of auditory attention tend to be those subjects whose SMG also encodes the direction of auditory attention strongly. Similarly, we tested the correlation of the classification accuracy based on MVPA analysis of the 2 visuotopically mapped areas V1–V3 and IPS0–4. As when comparing auditory processing areas, we found that classification accuracies based on the pattern of activation in V1–V3 and in IPS0–4 were significantly correlated across subjects (r² = 0.63, t(7) = 3.437, P = 0.005), suggesting either that these 2 areas derive their sensitivities to the direction of auditory attention from a common source or that one area provides strong input to the other. It is possible that some of this correlation strength is due to global signal change differences across subjects. However, when we computed the correlation between classification accuracies of classifiers operating on information in the early auditory sensory cortex (STG) and on information in the early visual sensory cortex (V1–V3), we found no significant correlation (r² = 0.08, t(7) = 0.785, P = 0.229). In other words, those subjects whose STG activity enabled a classifier to determine the direction of auditory attention well were not the same subjects whose V1–V3 activity enabled accurate classification of the direction of attention. Together, these results suggest that information about the direction of auditory attention is derived from a common underlying computation for areas responding to a particular sensory input, but this information is derived from different computations in the primary auditory areas and in the primary visual areas.
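The across-subject correlation test can be sketched as follows, assuming the standard relation between a Pearson correlation and a t statistic with n − 2 degrees of freedom (the reported t(7) values imply n = 9 subjects). The function names are hypothetical, and the per-subject accuracies are not reproduced here; the example only checks the reported r² against the reported t.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic (df = n - 2) for testing a correlation against zero."""
    return r * sqrt(n - 2) / sqrt(1.0 - r * r)


# Consistency check on the reported STG-SMG result: r^2 = 0.74 with
# 9 subjects gives t(7) of roughly 4.46, close to the reported 4.459.
t_stg_smg = t_from_r(sqrt(0.74), 9)
```

In practice, `pearson_r` would be applied to the 9 per-subject decoding accuracies from each pair of ROIs, and the resulting t value compared against a t distribution with 7 degrees of freedom.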

Figure 6.

Classification accuracy for the direction of attention in STG versus classification accuracy for the direction of attention in SMG. Each data point represents an individual subject.

Primate studies have indicated that neurons in the caudal portion of auditory cortex have sharper spatial tuning than neurons in the rostral portion of auditory cortex (Hart et al. 2004; Woods et al. 2006). In the MVPA analyses, we therefore investigated the classifiers' weight distribution in the STG ROI to see if the caudal voxels played a stronger role in determining the attended direction. We found no evidence for any clusters that stood out as dominant. In fact, each subject showed a different distribution of classifier weights. This finding is consistent with the univariate analysis: if clusters of voxels preferring the left/right location were organized topographically, the univariate GLM analysis would likely have shown a contralateral bias in these voxels.

Discussion

Prior fMRI investigations of auditory spatial attention have revealed a network of cortical areas that closely resembles the areas that support visual spatial attention; the IPS and/or superior parietal lobule (SPL) have been specifically implicated (Shomstein and Yantis 2004; Mayer et al. 2006; Wu et al. 2007; Hill and Miller 2009; Smith et al. 2010). One primary focus of the present study was to investigate the hypothesis that auditory spatial attention utilizes IPS0, IPS1, IPS2, IPS3, and/or IPS4, regions of SPL that contain visuospatial maps (e.g. Swisher et al. 2007), to support the representation of auditory space. To test this multimodal map hypothesis, we performed retinotopic mapping using fMRI in individual subjects to define regions of interest for analyzing data from an auditory spatial attention experiment performed in the same subjects. We failed to find any evidence to support the multimodal map hypothesis for any of the IPS0–4 areas; these regions trended toward deactivation. However, neighboring nonvisuotopic regions in the lateral and anterior portions of IPS were significantly activated in the auditory spatial attention task. The functionality of these nonvisuotopic IPS regions is not well characterized, although it has been suggested that they support nonspatiotopic control functions for attention and cognition (e.g. Vincent et al. 2008). Our findings suggest that while auditory and visual spatial attention may share some control mechanisms, they do not share spatial map structures in the posterior parietal lobe. This is a significant observation given the conventional wisdom that posterior parietal cortex is a key site of multisensory integration.

More broadly, this study investigated the cortical substrates that were modulated by auditory spatial attention and that contained specific information about the location of the attended stimulus. A prior fMRI study had observed that the left STG and surrounding cortex were more responsive to moving auditory stimuli in the contralateral spatial field than in the ipsilateral field; a similar, but weaker trend was observed in the right hemisphere (Krumbholz et al. 2005). Other prior fMRI studies have not reported spatial specificity for auditory stimuli (e.g. Mayer et al. 2006; Hill and Miller 2009; Smith et al. 2010) or reported laterality effects only for monaural, not binaural stimuli (Woldorff et al. 1999). Here, we designed our stimuli and task to keep equal the stimulus drive for both left and right auditory space at all times (using only ITDs, without any interaural level differences) in order to investigate the top-down influences of auditory spatial attention. This is an important distinction from previous auditory attention studies that commonly employed sound localization, detection, or spatial memory of lateralized stimuli presented in isolation.

We observed that a network of frontal, parietal, and temporal cortical areas was activated by the auditory spatial attention task (task vs. baseline). A wide band of activation was observed in the STG, the cortical area that hosts the auditory cortex; a region of the SMG along with latIPS and antIPS was activated in the parietal lobe, as were a collection of frontal regions, FEF, iPCS, dlPFC, vlPFC, SMA, that appear similar to those reported in prior auditory or visual spatial attention studies (e.g. Hagler and Sereno 2006; Wu et al. 2007; Hill and Miller 2009; Smith et al. 2010). Univariate analysis of the contrast of “attend contralateral” versus “attend ipsilateral” failed to reveal significant activation in the cortex, consistent with prior studies that failed to observe a spatially specific signal in fMRI studies of sustained auditory attention. This result differs dramatically from the strong contralateral modulations typically observed with visual spatial attention (e.g. Silver and Kastner 2009). However, our data do not reflect a null result; application of MVPA revealed that 2 of the regions activated in the task versus baseline contrast, STG and SMG also contained significant information about the direction of auditory spatial attention. The observation in STG extends the prior Krumbholz et al. (2005) finding of spatial coding of auditory stimuli in STG to spatial coding of auditory spatial attention. SMG is implicated in the phonological loop (Jacquemot et al. 2003; Nieto-Castanon et al. 2003) and may have been recruited by the verbal nature of our task. These findings are the first fMRI report of directional information for sustained auditory spatial attention and the first to report spatial specificity of auditory spatial attention coding in the SMG. This latter finding may have important implications for understanding the neural mechanisms of auditory source separation and the “cocktail party effect” for phonological stimuli (Cherry 1953).

We found no evidence to support the view that sustained auditory spatial attention is encoded in maps, as is observed for visual spatial attention; instead, the auditory spatial attentional information appears to be sparsely coded without an apparent map structure. A recent electroencephalography study (Magezi and Krumbholz 2010) found evidence to support the view that auditory spatial information is coded via an opponent process model, in which neurons are broadly tuned to ITDs (left or right) rather than narrowly tuned to a parametric range of ITDs. Our results are consistent with that view. However, 2 caveats deserve mention. First, we cannot rule out the existence of a subpopulation of neurons within the visuotopic IPS regions that code auditory spatial information; small populations of auditory–visual neurons have been reported in IPS of nonhuman primates (Cohen et al. 2005; Gifford and Cohen 2005; Mullette-Gillman et al. 2005). MVPA analysis revealed that significant information about the direction of auditory spatial attention was encoded in the combined IPS0–4 ROI. However, since IPS0–4 was deactivated by the task, we suggest that this reflects weak spatially specific suppression of contralateral visual-space representations. Cross-modal deactivations are a signature of modality-specific attention (Merabet et al. 2007; Mozolic et al. 2008). Consistent with this interpretation, early visual cortical areas V1–V3 also are both deactivated during the auditory task and contain information about the direction of auditory spatial attention. The second caveat is that one or more areas that failed to reach significance in the MVPA analysis might reach significance in a study with substantially increased statistical power. The individual subject ROI approach employed here typically yields high statistical power per subject; nevertheless, it is worth noting that the dlPFC and FEF approached significance in the MVPA analysis of coding of the direction of spatial attention. Although dlPFC and FEF do not typically yield visuotopic maps using retinotopic mapping, other methods using working memory and saccade approaches have revealed coarse vision-related maps in both of these regions (Hagler and Sereno 2006; Kastner et al. 2007; Saygin and Sereno 2008). Therefore, we cannot rule out the possibility that auditory spatial attention utilizes visuotopic maps in these prefrontal regions. Notably, Tark and Curtis (2009) reported that spatial working memory for auditory stimuli recruited FEF in a spatially specific manner, consistent with coding of auditory space; however, that study did not demonstrate that those voxels were part of a visuotopic map, so it remains unclear whether or not auditory spatial attention utilizes cortical map structures within lateral frontal cortex or utilizes nonspatiotopic regions near the map structures, as we observed for the parietal lobe. We also note that while the auditory spatial attention components of our task were demanding, the spatial short-term memory components were not; further investigations of the differences between auditory spatial attention and auditory spatial short-term memory are needed to address this issue.

The current study also distinguishes itself from previous studies that investigated cueing or switching mechanisms of spatial attention. In auditory attention switching studies, medial SPL and precuneus revealed stronger activity during switching than nonswitching trials (Shomstein and Yantis 2004, 2006; Wu et al. 2007; Krumbholz et al. 2009). Although the MNI coordinates for IPS2 and IPS3 are in the vicinity of those reported for auditory spatial attention in some studies, we emphasize that IPS2/3 lie along the medial bank of IPS on the lateral surface of SPL, not on the medial surface of SPL or in the precuneus; we did not observe attention modulation in medial SPL or precuneus. In our analysis, we assigned the cue period to a regressor of no interest to be conservative; although, in principle, some effects of the cue period could have elevated activity during the sustained attention periods, we failed to observe any activation patterns consistent with prior attentional switching effects. Considered together with prior findings, our results highlight the substantial differences between sustained attention and attentional switching mechanisms.

Previously, we reported that a tactile attention task produced fMRI activation that abutted, but did not overlap, the visuotopic parietal areas IPS0–4 (Swisher et al. 2007). In the present study, we find that auditory attention task activation also abuts and does not overlap with IPS0–4. Taken together, these studies suggest that these visuotopic IPS regions are strongly unimodal. A key to both studies was that we employed retinotopic mapping of the IPS regions within individual subjects. In our tactile studies (Merabet et al. 2007; Swisher et al. 2007), group analysis of the data revealed a swath of parietal tactile activation that appeared to intersect with the swath of parietal visual activation in IPS; however, analysis at the individual subject hemisphere level revealed patterns of activation that fit together like interlocking puzzle pieces with minimal overlap. There are many small cortical areas within the posterior parietal lobe; the spatial blurring induced by group averaging has the potential to obscure important functional distinctions that are visible in within-subject analyses. We believe that our use of these individual subject methods explains why we found a parietal lobe difference between audition and vision that prior studies did not identify (Table 1).

Table 1.

Mean Montreal Neurological Institute (MNI) coordinates of IPS0–4, latIPS, and antIPS areas and the standard deviation of centroids (in mm)

| Region | Mean MNI X | Mean MNI Y | Mean MNI Z | SD X | SD Y | SD Z |
|---|---|---|---|---|---|---|
| LH | | | | | | |
| IPS0 | −25 | −81 | 22 | 7 | 4 | 7 |
| IPS1 | −20 | −78 | 41 | 9 | 5 | 8 |
| IPS2 | −15 | −66 | 48 | 7 | 9 | 9 |
| IPS3 | −19 | −62 | 55 | 11 | 9 | 8 |
| IPS4 | −22 | −54 | 55 | 11 | 10 | 7 |
| latIPS | −26 | −65 | 36 | 7 | 6 | 3 |
| antIPS | −34 | −47 | 43 | 5 | 5 | 7 |
| RH | | | | | | |
| IPS0 | 27 | −81 | 29 | 5 | 3 | 8 |
| IPS1 | 21 | −73 | 42 | 5 | 8 | 8 |
| IPS2 | 20 | −65 | 55 | 6 | 6 | 5 |
| IPS3 | 21 | −57 | 59 | 6 | 5 | 5 |
| IPS4 | 25 | −52 | 58 | 8 | 6 | 8 |
| latIPS | 28 | −65 | 39 | 2 | 5 | 6 |
| antIPS | 34 | −46 | 43 | 6 | 6 | 5 |

IPS0–4 were defined by visuotopic mapping. LatIPS and antIPS areas were identified by excluding the visuotopically mapped areas from the anatomical IPS.

In summary, we identified a fronto-parietal network that supported auditory spatial attention. This network included multiple regions from the dorsal and ventral attention networks and auditory areas STG and SMG. Notably, we found little or no overlap between the auditory spatial attention task activation and visuotopic IPS areas, suggesting that auditory spatial attention does not utilize visuotopic maps. MVPA revealed that voxels from STG and SMG showed sensitivity to the direction of auditory attention. Taken together, these findings support the hypothesis that auditory source location is not encoded in neurons that are topographically organized. Instead, spatial information may be encoded in patterns of activation on a finer scale. Furthermore, they suggest that the integration of spatial information across multiple sensory modalities may be implemented primarily between networks of cortical areas rather than by convergence onto distinct cortical areas containing robust multisensory maps (Pouget and Sejnowski 1997).

Notes

This work was supported by the National Science Foundation (grant numbers SBE-0354378, BCS-0726061); the National Institutes of Health (grant numbers R01EY022229, R01DC009477, F32EY019448); the National Center for Research Resources (grant P41RR14075); and the Mental Illness and Neuroscience Discovery Institute. Conflict of Interest: None declared.

References

- Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci U S A. 2001;98(21):12301–12306. doi: 10.1073/pnas.211209098. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Andersen RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303. [DOI] [PubMed] [Google Scholar]

- Arcaro MJ, McMains SA, Singer BD, Kastner S. Retinotopic organization of human ventral visual cortex. J Neurosci. 2009;29(34):10638–10652. doi: 10.1523/JNEUROSCI.2807-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. Neuroimage. 2004;22(1):401–408. doi: 10.1016/j.neuroimage.2004.01.014. [DOI] [PubMed] [Google Scholar]

- Best V, Gallun FJ, Ihlefeld A, Shinn-Cunningham BG. The influence of spatial separation on divided listening. J Acoust Soc Am. 2006;120:1506–1516. doi: 10.1121/1.2234849. [DOI] [PubMed] [Google Scholar]

- Best V, Gallun FJ, Mason CR, Kidd G, Jr, Shinn-Cunningham BG. The impact of noise and hearing loss on the processing of simultaneous sentences. Ear Hear. 2010;31(2):213–220. doi: 10.1097/AUD.0b013e3181c34ba6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Best V, Ozmeral EJ, Shinn-Cunningham BG. Visually-guided attention enhances target identification in a complex auditory scene. J Assoc Res Otolaryngol. 2007;8(2):294–304. doi: 10.1007/s10162-007-0073-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bressler DW, Silver MA. Spatial attention improves reliability of fMRI retinotopic mapping signals in occipital and parietal cortex. Neuroimage. 2010;53:526–533. doi: 10.1016/j.neuroimage.2010.06.063. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bruce CJ, Goldberg ME. Primate frontal eye fields. I. Single neurons discharging before saccades. J Neurophysiol. 1985;53(3):603–635. doi: 10.1152/jn.1985.53.3.603. [DOI] [PubMed] [Google Scholar]

- Buchel C, Holmes AP, Rees G, Friston KJ. Characterizing stimulus-response functions using nonlinear regressors in parametric fMRI experiments. Neuroimage. 1998;8(2):140–148. doi: 10.1006/nimg.1998.0351. [DOI] [PubMed] [Google Scholar]

- Bushara KO, Weeks RA, Ishii K, Catalan MJ, Tian B, Rauschecker JP, Hallett M. Modality-specific frontal and parietal areas for auditory and visual spatial localization in humans. Nat Neurosci. 1999;2(8):759–766. doi: 10.1038/11239. [DOI] [PubMed] [Google Scholar]

- Carr CE, Konishi M. A circuit for detection of interaural time differences in the brain stem of the barn owl. J Neurosci. 1990;10(10):3227–3246. doi: 10.1523/JNEUROSCI.10-10-03227.1990. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chafee MV, Goldman-Rakic PS. Matching patterns of activity in primate prefrontal area 8a and parietal area 7ip neurons during a spatial working memory task. J Neurophysiol. 1998;79(6):2919–2940. doi: 10.1152/jn.1998.79.6.2919. [DOI] [PubMed] [Google Scholar]

- Cherry EC. Some experiments on the recognition of speech, with one and with two ears. J Acoust Soc Am. 1953;25(5):975–979. [Google Scholar]

- Cohen MS. Parametric analysis of fMRI data using linear systems methods. Neuroimage. 1997;6(2):93–103. doi: 10.1006/nimg.1997.0278. [DOI] [PubMed] [Google Scholar]

- Cohen YE, Russ BE, Gifford GW. Auditory processing in the posterior parietal cortex. Behav Cogn Neurosci Rev. 2005;4(3):218–231. doi: 10.1177/1534582305285861. [DOI] [PubMed] [Google Scholar]

- Colburn HS, Latimer JS. Theory of binaural interaction based on auditory-nerve data. III. Joint dependence on interaural time and amplitude differences in discrimination and detection. J Acoust Soc Am. 1978;64(1):95–106. doi: 10.1121/1.381960. [DOI] [PubMed] [Google Scholar]

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci. 1999;22:319–49. doi: 10.1146/annurev.neuro.22.1.319. [DOI] [PubMed] [Google Scholar]

- Corbetta M. Frontoparietal cortical networks for directing attention and the eye to visual locations: identical, independent, or overlapping neural systems? Proc Natl Acad Sci U S A. 1998;95(3):831–838. doi: 10.1073/pnas.95.3.831. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cortes C, Vapnik V. Support-vector networks. Mach Learning. 1995:273–297. [Google Scholar]

- Cox DD, Savoy RL. Functional magnetic resonance imaging (fMRI) "brain reading”: detecting and classifying distributed patterns of fMRI activity in human visual cortex. Neuroimage. 2003;19(2 Pt 1):261–270. doi: 10.1016/s1053-8119(03)00049-1. [DOI] [PubMed] [Google Scholar]

- Cox RW, Hyde JS. Software tools for analysis and visualization of fMRI data. NMR Biomed. 1997;10(4–5):171–178. doi: 10.1002/(sici)1099-1492(199706/08)10:4/5<171::aid-nbm453>3.0.co;2-l. [DOI] [PubMed] [Google Scholar]

- Curtis CE, D'Esposito M. Persistent activity in the prefrontal cortex during working memory. Trends Cogn Sci. 2003;7(9):415–423. doi: 10.1016/s1364-6613(03)00197-9. [DOI] [PubMed] [Google Scholar]

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage. 1999;9(2):179–194. doi: 10.1006/nimg.1998.0395.

- De Santis L, Clarke S, Murray MM. Automatic and intrinsic auditory “what” and “where” processing in humans revealed by electrical neuroimaging. Cereb Cortex. 2007;17(1):9–17. doi: 10.1093/cercor/bhj119.

- Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, Blacker D, Buckner RL, Dale AM, Maguire RP, Hyman BT, et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 2006;31(3):968–980. doi: 10.1016/j.neuroimage.2006.01.021.

- DeYoe EA, Carman GJ, Bandettini P, Glickman S, Wieser J, Cox R, Miller D, Neitz J. Mapping striate and extrastriate visual areas in human cerebral cortex. Proc Natl Acad Sci U S A. 1996;93(6):2382–2386. doi: 10.1073/pnas.93.6.2382.

- Engel SA, Rumelhart DE, Wandell BA, Lee AT, Glover GH, Chichilnisky EJ, Shadlen MN. fMRI of human visual cortex. Nature. 1994;369(6481):525. doi: 10.1038/369525a0.

- Fischl B, Liu A, Dale AM. Automated manifold surgery: constructing geometrically accurate and topologically correct models of the human cerebral cortex. IEEE Trans Med Imaging. 2001;20(1):70–80. doi: 10.1109/42.906426.

- Fischl B, Sereno MI, Dale AM. Cortical surface-based analysis. II: inflation, flattening, and a surface-based coordinate system. Neuroimage. 1999;9(2):195–207. doi: 10.1006/nimg.1998.0396.

- Fischl B, Sereno MI, Tootell RB, Dale AM. High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum Brain Mapp. 1999;8(4):272–284. doi: 10.1002/(SICI)1097-0193(1999)8:4<272::AID-HBM10>3.0.CO;2-4.

- Freeman MF, Tukey JW. Transformations related to the angular and the square root. Ann Math Statist. 1950;21:607–611.

- Gifford GW, Cohen YE. Spatial and non-spatial auditory processing in the lateral intraparietal area. Exp Brain Res. 2005;162(4):509–512. doi: 10.1007/s00221-005-2220-2.

- Greenberg AS, Esterman M, Wilson D, Serences JT, Yantis S. Control of spatial and feature-based attention in frontoparietal cortex. J Neurosci. 2010;30(43):14330–14339. doi: 10.1523/JNEUROSCI.4248-09.2010.

- Hagler DJ, Sereno MI. Spatial maps in frontal and prefrontal cortex. Neuroimage. 2006;29(2):567–577. doi: 10.1016/j.neuroimage.2005.08.058.

- Hart HC, Palmer AR, Hall DA. Different areas of human non-primary auditory cortex are activated by sounds with spatial and nonspatial properties. Hum Brain Mapp. 2004;21(3):178–190. doi: 10.1002/hbm.10156.

- Haynes JD, Rees G. Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat Neurosci. 2005;8(5):686–691. doi: 10.1038/nn1445.

- Hill K, Miller L. Auditory attentional control and selection during cocktail party listening. Cereb Cortex. 2009;20(3):583–590. doi: 10.1093/cercor/bhp124.

- Ihlefeld A, Shinn-Cunningham BG. Disentangling the effects of spatial cues on selection and formation of auditory objects. J Acoust Soc Am. 2008a;124:2224–2235. doi: 10.1121/1.2973185.

- Ihlefeld A, Shinn-Cunningham BG. Spatial release from energetic and informational masking in a divided speech identification task. J Acoust Soc Am. 2008b;123:4380–4392. doi: 10.1121/1.2904825.

- Ihlefeld A, Shinn-Cunningham BG. Spatial release from energetic and informational masking in a selective speech identification task. J Acoust Soc Am. 2008c;123:4369–4379. doi: 10.1121/1.2904826.

- Jacquemot C, Pallier C, LeBihan D, Dehaene S, Dupoux E. Phonological grammar shapes the auditory cortex: a functional magnetic resonance imaging study. J Neurosci. 2003;23(29):9541–9546. doi: 10.1523/JNEUROSCI.23-29-09541.2003.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8(5):679–685. doi: 10.1038/nn1444.

- Kastner S, DeSimone K, Konen CS, Szczepanski SM, Weiner KS, Schneider KA. Topographic maps in human frontal cortex revealed in memory-guided saccade and spatial working-memory tasks. J Neurophysiol. 2007;97(5):3494–3507. doi: 10.1152/jn.00010.2007.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annu Rev Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Kidd G, Arbogast TL, Mason CR, Gallun FJ. The advantage of knowing where to listen. J Acoust Soc Am. 2005;118(6):3804–3815. doi: 10.1121/1.2109187.

- Konen CS, Kastner S. Two hierarchically organized neural systems for object information in human visual cortex. Nat Neurosci. 2008;11(2):224–231. doi: 10.1038/nn2036.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103(10):3863–3868. doi: 10.1073/pnas.0600244103.

- Krumbholz K, Hewson-Stoate N, Schönwiesner M. Cortical response to auditory motion suggests an asymmetry in the reliance on inter-hemispheric connections between the left and right auditory cortices. J Neurophysiol. 2007;97(2):1649–1655. doi: 10.1152/jn.00560.2006.

- Krumbholz K, Nobis EA, Weatheritt RJ, Fink GR. Executive control of spatial attention shifts in the auditory compared to the visual modality. Hum Brain Mapp. 2009;30(5):1457–1469. doi: 10.1002/hbm.20615.

- Krumbholz K, Schönwiesner M, von Cramon DY, Rübsamen R, Shah NJ, Zilles K, Fink GR. Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cereb Cortex. 2005;15(3):317–324. doi: 10.1093/cercor/bhh133.

- Lee CC, Middlebrooks JC. Auditory cortex spatial sensitivity sharpens during task performance. Nat Neurosci. 2011;14(1):108–114. doi: 10.1038/nn.2713.

- Macaluso E, Driver J, Frith CD. Multimodal spatial representations engaged in human parietal cortex during both saccadic and manual spatial orienting. Curr Biol. 2003;13(12):990–999. doi: 10.1016/s0960-9822(03)00377-4.

- Maddox RK, Billimoria CP, Perrone BP, Shinn-Cunningham BG, Sen K. Competing sound sources reveal spatial effects in cortical processing. PLoS Biol. 2012;10(5):e1001319. doi: 10.1371/journal.pbio.1001319.

- Maeder PP, Meuli RA, Adriani M, Bellmann A, Fornari E, Thiran JP, Pittet A, Clarke S. Distinct pathways involved in sound recognition and localization: a human fMRI study. Neuroimage. 2001;14(4):802–816. doi: 10.1006/nimg.2001.0888.

- Magezi DA, Krumbholz K. Evidence for opponent-channel coding of interaural time differences in human auditory cortex. J Neurophysiol. 2010;104(4):1997–2007. doi: 10.1152/jn.00424.2009.

- Mayer AR, Harrington D, Adair JC, Lee R. The neural networks underlying endogenous auditory covert orienting and reorienting. Neuroimage. 2006;30(3):938–949. doi: 10.1016/j.neuroimage.2005.10.050.

- McAlpine D. Creating a sense of auditory space. J Physiol. 2005;566(Pt 1):21–28. doi: 10.1113/jphysiol.2005.083113.

- Merabet LB, Swisher JD, McMains SA, Halko MA, Amedi A, Pascual-Leone A, Somers DC. Combined activation and deactivation of visual cortex during tactile sensory processing. J Neurophysiol. 2007;97(2):1633–1641. doi: 10.1152/jn.00806.2006.

- Mesulam MM. A cortical network for directed attention and unilateral neglect. Ann Neurol. 1981;10(4):309–325. doi: 10.1002/ana.410100402.

- Molholm S, Sehatpour P, Mehta AD, Shpaner M, Gomez-Ramirez M, Ortigue S, Dyke JP, Schwartz TH, Foxe JJ. Audio-visual multisensory integration in superior parietal lobule revealed by human intracranial recordings. J Neurophysiol. 2006;96(2):721–729. doi: 10.1152/jn.00285.2006.

- Mozolic JL, Joyner D, Hugenschmidt CE, Peiffer AM, Kraft RA, Maldjian JA, Laurienti PJ. Cross-modal deactivations during modality-specific selective attention. BMC Neurol. 2008;8:35. doi: 10.1186/1471-2377-8-35.

- Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94(4):2331–2352. doi: 10.1152/jn.00021.2005.

- Murray MM, Camen C, Gonzalez Andino SL, Bovet P, Clarke S. Rapid brain discrimination of sounds of objects. J Neurosci. 2006;26(4):1293–1302. doi: 10.1523/JNEUROSCI.4511-05.2006.

- Nieto-Castanon A, Ghosh SS, Tourville JA, Guenther FH. Region of interest based analysis of functional imaging data. Neuroimage. 2003;19(4):1303–1316. doi: 10.1016/s1053-8119(03)00188-5.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10(9):424–430. doi: 10.1016/j.tics.2006.07.005.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pouget A, Sejnowski TJ. A new view of hemineglect based on the response properties of parietal neurones. Philos Trans R Soc Lond B Biol Sci. 1997;352(1360):1449–1459. doi: 10.1098/rstb.1997.0131.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. Proc Natl Acad Sci U S A. 2001;98(2):676–682. doi: 10.1073/pnas.98.2.676.

- Rämä P, Poremba A, Sala JB, Yee L, Malloy M, Mishkin M, Courtney SM. Dissociable functional cortical topographies for working memory maintenance of voice identity and location. Cereb Cortex. 2004;14(7):768–780. doi: 10.1093/cercor/bhh037.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci U S A. 2000;97(22):11800–11806. doi: 10.1073/pnas.97.22.11800.

- Rayleigh L. On our perception of sound direction. Philos Mag. 1907;13:214–232.

- Saygin AP, Sereno MI. Retinotopy and attention in human occipital, temporal, parietal, and frontal cortex. Cereb Cortex. 2008;18(9):2158–2168. doi: 10.1093/cercor/bhm242.

- Schluppeck D, Glimcher P, Heeger DJ. Topographic organization for delayed saccades in human posterior parietal cortex. J Neurophysiol. 2005;94(2):1372–1384. doi: 10.1152/jn.01290.2004.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268(5212):889–893. doi: 10.1126/science.7754376.

- Sereno MI, Pitzalis S, Martinez A. Mapping of contralateral space in retinotopic coordinates by a parietal cortical area in humans. Science. 2001;294(5545):1350–1354. doi: 10.1126/science.1063695.

- Sheremata SL, Bettencourt KC, Somers DC. Hemispheric asymmetry in visuotopic posterior parietal cortex emerges with visual short-term memory load. J Neurosci. 2010;30(38):12581–12588. doi: 10.1523/JNEUROSCI.2689-10.2010.

- Shinn-Cunningham BG. Object-based auditory and visual attention. Trends Cogn Sci. 2008;12(5):182–186. doi: 10.1016/j.tics.2008.02.003.

- Shinn-Cunningham BG, Satyavarta IA, Larson E. Bottom-up and top-down influences on spatial unmasking. Acta Acust United Ac. 2005;91:967–979.

- Shomstein S, Yantis S. Control of attention shifts between vision and audition in human cortex. J Neurosci. 2004;24(47):10702–10706. doi: 10.1523/JNEUROSCI.2939-04.2004.

- Shomstein S, Yantis S. Parietal cortex mediates voluntary control of spatial and nonspatial auditory attention. J Neurosci. 2006;26(2):435–439. doi: 10.1523/JNEUROSCI.4408-05.2006.