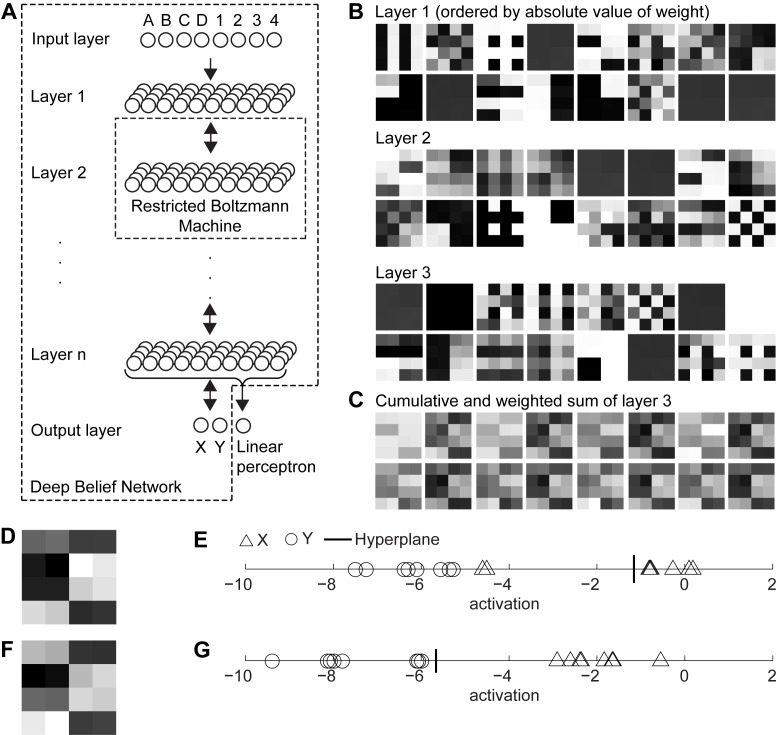

Figure 2. Network architecture of the Deep Belief Network (DBN) combined with the Linear Perceptron (LP), together with the internal representations of these networks.

(A) Network architecture of an N-layer DBN. (B) Internal representation of a 3-layer DBN when probed with the 16 stimulus-context combinations (data points). (C) Cumulative, weighted sum of the 16 strongest weights for output node X of the DBN. (D) A rescaled version of the 16th tile, showing that all data points that map to class X have high values (brighter) and all data points that map to class Y have low values (darker). (E) Inputs to the LP. The classification threshold is denoted by a bold vertical line, (F) when trained without A1 and B1 and (G) when trained with all data.
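To make the thresholding step concrete, the following is a minimal sketch of a linear perceptron that learns a threshold on scalar inputs. It assumes that each data point reaches the LP as a single value, so that the decision boundary reduces to the bold vertical line described in the caption, and that it uses the classic perceptron learning rule. The function names `train_perceptron` and `threshold`, the learning rate, and the toy data are hypothetical illustrations, not the authors' implementation or data.

```python
def train_perceptron(x, y, lr=0.1, epochs=100):
    """Classic perceptron learning rule on scalar inputs (hypothetical sketch).

    x: list of scalar inputs to the LP.
    y: labels, +1 for class X and -1 for class Y.
    Returns the weight w and bias b of the decision rule w*x + b > 0.
    """
    w, b = 0.0, 0.0
    for _ in range(epochs):
        errors = 0
        for xi, yi in zip(x, y):
            # Predict class X (+1) if the weighted input exceeds zero, else class Y (-1)
            pred = 1 if w * xi + b > 0 else -1
            if pred != yi:
                # Shift the boundary toward the misclassified point
                w += lr * yi * xi
                b += lr * yi
                errors += 1
        if errors == 0:
            # Every training point is classified correctly, so stop early
            break
    return w, b


def threshold(w, b):
    # The boundary w*x + b = 0 is the vertical line x = -b / w
    return -b / w


if __name__ == "__main__":
    # Hypothetical toy inputs: high values map to class X, low values to class Y
    x = [0.9, 0.8, 0.7, 0.2, 0.1, 0.3]
    y = [1, 1, 1, -1, -1, -1]
    w, b = train_perceptron(x, y)
    print(f"w={w:.3f}, b={b:.3f}, threshold={threshold(w, b):.3f}")
```

Retraining the same sketch on a subset of the points (for example, with two points held out) can move the learned threshold, which is the kind of comparison the caption draws between training with and without A1 and B1.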