Abstract

To understand social interactions, we must decode dynamic social cues from seen faces. Here, we used magnetoencephalography (MEG) to study the neural responses underlying the perception of emotional expressions and gaze direction changes as depicted in an interaction between two agents. Subjects viewed displays of paired faces that first established a social scenario of gazing at each other (mutual attention) or gazing laterally together (deviated group attention) and then dynamically displayed either an angry or happy facial expression. The initial gaze change elicited a significantly larger M170 under the deviated than the mutual attention scenario. At around 400 ms after the dynamic emotion onset, responses at posterior MEG sensors differentiated between emotions, and between 1000 and 2200 ms, left posterior sensors were additionally modulated by social scenario. Moreover, activity on right anterior sensors showed both an early and prolonged interaction between emotion and social scenario. These results suggest that activity in right anterior sensors reflects an early integration of emotion and social attention, while posterior activity first differentiated between emotions only, supporting the view of a dual route for emotion processing. Altogether, our data demonstrate that both transient and sustained neurophysiological responses underlie social processing when observing interactions between others.

Keywords: dyadic interaction, social attention, gaze, dynamic emotional expression, MEG

INTRODUCTION

We live in a social world. In our daily life, we make sense of encountered social scenarios by extracting relevant visual cues which include determining the focus of another’s attention from their gaze direction, recognizing directed emotions and interpreting socially directed behaviors. These processes take place when we are directly involved in a social interaction (thus under a second-person viewpoint, SPV) as well as when we watch interactions between others without being directly involved in the interaction (thus under a third-person viewpoint, TPV). Here, we used magnetoencephalography (MEG) to investigate the neural dynamics of the perception of social attention scenarios and of the integration of gaze and emotional facial expression cues when perceived from a TPV.

To date, studies on gaze and emotion perception have mostly relied on SPV approaches. Thus, with regard to the perception of social attention scenarios, these studies compared conditions of direct and averted gaze in order to map brain responses to mutual vs deviated social attention conditions. They showed modulation of early event-related potential components such as the N170 (in electroencephalography, or EEG) and the M170 (in MEG) by direct vs averted gaze (e.g. Puce et al., 2000; Watanabe et al., 2001, 2006; Conty et al., 2007; see also Senju et al., 2005). However, it is still not known whether such modulations reflect early neural responses to distinct gaze directions or to the associated social attention scenarios. Furthermore, direct gaze enhances face processing (George et al., 2001; Senju and Hasegawa, 2005; Vuilleumier, 2005) and may trigger specific cognitive operations related to the self as a result of being the focus of another’s attention. Investigating the perception of social attention scenarios under TPV might be able to disentangle the effects of social attention from gaze direction and eliminate confounding effects of any personal involvement resulting from being looked at. In this vein, Carrick et al. (2007) examined the perception of three-face displays where an eye gaze interaction between adjacent faces generated distinct social attention conditions. Carrick and colleagues showed only late event-related potential (ERP) modulations as a function of social attention scenario. However, early neurophysiological responses (N170) previously associated with social attention processing (Puce et al., 2000; Conty et al., 2007) were not modulated in this paradigm. This lack of modulation was interpreted as being consistent with a gaze aversion in the central face relative to the viewer that was the only stimulus change during each experimental trial (Puce et al., 2000). 
However, due to a complex viewing situation in each trial, which changed from an SPV to a TPV perspective, the lack of N170 modulation could alternatively be interpreted as arising from mixed effects of viewed direct and deviated gazes on multiple faces. To avoid this problem, here, we used a paradigm where social attention scenarios, consisting of either mutual or deviated group attention, emerged from the interaction of two avatar faces who never gazed at the subject and displayed similar eye movements under every attention condition. Our first aim was to test if the early MEG activity (M170) may be modulated by social attention scenario in this paradigm. This would provide evidence for early neural encoding of social attention.

In addition, relatively little is known about the neural dynamics underlying the evaluation of social and emotional information and how this information might be integrated to generate a gestalt of the social situation. The existing literature in this area has been in neuroimaging studies that have shown that gaze direction and facial expression perception engage both distinct and overlapping brain regions, the latter including in particular the amygdala and the superior temporal sulcus (STS) regions (e.g. George et al., 2001; Puce et al., 2003; Sato et al., 2004b; Hardee et al., 2008). Furthermore, these regions seem to be involved in the integrated processing of these cues. In particular, amygdala responses are enhanced when gaze direction and emotional expressions jointly signal tendencies to approach or to avoid (Sato et al., 2004b, 2010a; Hadjikhani et al., 2008; N’Diaye et al., 2009; Ewbank et al., 2010; but see also Adams et al., 2003). Similarly, the STS is sensitive to the combination of gaze direction and emotional expression (Wicker et al., 2003; Hadjikhani et al., 2008; N’Diaye et al., 2009). However, while there are well-established neuroanatomical models of socio-emotional cue processing from faces (e.g. Haxby et al., 2000, 2002; Ishai, 2008), the temporal dynamics of the combined processing of these cues is largely unknown. Neuroanatomical models postulate that a posterior core system would be involved in eye gaze and facial expression perceptual processing whereas a more anterior, extended system would integrate this information to extract meaning from faces (Haxby et al., 2000). This may suggest a temporal sequence of early, independent perceptual processing of eye gaze and emotional expression followed by later stages of information integration. 
In line with this view, some recent studies suggested that eye gaze and emotional expression are computed separately during early visual processing, while integrated processing of these cues was observed in later stages (Klucharev and Sams, 2004; Pourtois et al., 2004; Rigato et al., 2009; see Graham and Labar, 2012 for a review). However, as there are multiple, largely parallel routes to face processing (Bruce and Young, 1986; Pessoa and Adolphs, 2010), it is also possible that there is an early integrated processing of eye gaze and emotional expression in some brain regions.

A second aim of this study was to investigate the neural dynamics underlying the integration of social attentional and emotional information when observing two interacting agents. We studied MEG activity while subjects viewed a pair of avatar faces displaying dynamic angry or happy expressions under two different social scenarios seen from a TPV. The initial stimulus gaze change allowed us to evaluate M170 and set up a social scenario of either mutual or group deviated attention where the pair of avatar faces subsequently displayed dynamic emotions (angry or happy) that waxed and waned under these social attention scenarios. Specifically, this design allowed the temporal separation of neural activity related to: (i) face onset, (ii) the gaze change and (iii) the evolving emotional expression, while conforming to the type of gaze transitions followed by emotional expression which is typically seen in everyday life (see Conty et al., 2007; Carrick et al., 2007 for a similar approach). Since both angry and happy expressions signal approach-related behavioral tendency but of opposite valence, we expected greater differentiation of MEG responses to emotion under the mutual relative to the group deviated attention scenario. An important, exploratory question concerned the dynamics of this effect: would the interaction between emotion and social attention arise early on or would initial MEG responses to emotion be independent from social attention scenario?

MATERIALS AND METHODS

Subjects

Fourteen paid volunteers (18–27 years; 10 female and 1 left-handed) participated in the study. The protocol was approved by the local Ethics Committee (CPP Ile-de-France VI, nb. 07024). All subjects had normal or corrected-to-normal vision and had no previous history of neurological or psychiatric illness.

Stimuli

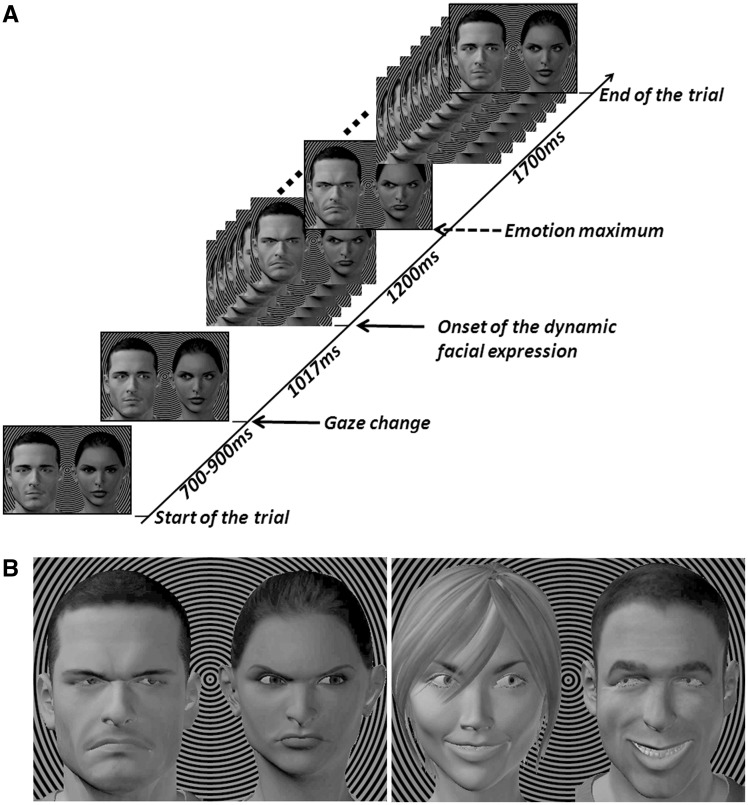

We created videos displaying 12 different pairs of avatar faces with initial downcast eyes, followed by a gaze change, and then subsequent dynamic facial expressions (happiness or anger) that grew and waned (Figure 1; see Supplementary Material for stimulus creation details). The gaze change generated two conditions: either the protagonists looked at each other (mutual attention) or they deviated their gaze toward the same side of the screen (deviated group attention, from now on referred to as deviated attention). Avatar pairs were placed on a background of black and gray concentric circles, so that the center of these circles was placed in between the two faces, at their eye level, and served as the fixation point. This point was perceived as slightly behind the avatar pair (Figure 1B), so that when the two avatars looked at each other, a mutual gaze exchange was seen. We also verified that the happy and angry expressions depicted by the avatars were recognized accurately and with similar perceived intensity, as described in Supplementary Material.

Fig. 1.

Time course of a trial. (A) The trial began showing the avatar pair with downcast eyes. After 700–900 ms, a gaze change occurred. Here, we show a mutual attention condition. After a further 1017 ms, the dynamic facial expression commenced, peaking at 1200 ms and then waning over the next 1700 ms to end the trial. Total trial duration was ∼4700 ms. (B) Examples of single frames at emotion peak for angry faces with deviated attention (left image) and happy faces with mutual attention (right image).

Procedure and MEG data acquisition

The subject was comfortably seated in a dimly lit electromagnetically shielded MEG room in front of a translucent screen (viewing distance 82 cm). Stimuli were back projected onto the screen through an arranged video projector system (visual angle: 5 × 3 degrees). Neuromagnetic signals were continuously recorded on a whole-head MEG system (CTF Systems, Canada) with 151 radial gradiometers (band-pass: DC-200 Hz; sampling rate: 1250 Hz; see Supplementary Material for details). Vertical and horizontal eye movements were monitored through bipolar Ag/AgCl leads above and below the right eye, and at the outer right eye canthi, respectively.

Each trial began with an avatar face pair with neutral expression and downward gaze lasting between 700 and 900 ms (Figure 1A). Then, a gaze change occurred on one frame and was maintained until the trial end, resulting in a mutual or deviated attention scenario. This was followed after 1017 ms by facial emotional expressions that grew and waned as depicted in Figure 1A. The inter-trial interval varied randomly between 1.5 and 2.5 s. Subjects were instructed to blink during inter-trial intervals and to maintain central fixation throughout the blocks, avoiding explorative eye movements during stimulus presentation.

The recording session comprised eight blocks, with short pauses given to the subject between blocks. In each block, each avatar face pair was presented once for each experimental condition of social attention (mutual/deviated) and emotion (happy/angry). For the deviated condition, half of the blocks displayed gazes deviated to the right and the other half gazes deviated to the left, producing 48 trials per block (12 avatar pairs × 2 emotions × 2 social attention conditions). In addition, four to seven target trials were added in each block. During these trials, the fixation point was colored blue in a randomly selected frame during the occurrence of the emotional expression (Figure 1B). Subjects pressed a button as rapidly as possible when they detected this blue target circle. This task helped subjects maintain central fixation and attention on the visual stimulation. The order of trial presentation was randomized within each block and across subjects, but with no immediate repetition of any given avatar pair. Before the proper experiment began, subjects performed a training block of seven trials, where every avatar character and every experimental condition were presented including one target trial.
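The block structure described above can be illustrated with a minimal sketch. It builds the 48 non-target trials of one block and reshuffles them until no avatar pair appears twice in a row. The function name `make_block`, the reshuffle-and-check strategy and the omission of target trials are assumptions made for illustration; the presentation software actually used is not described here.

```python
import itertools
import random

N_PAIRS = 12                       # avatar pairs
EMOTIONS = ("happy", "angry")
ATTENTION = ("mutual", "deviated")


def make_block(rng, max_tries=10_000):
    """Return one block of 48 trials with no avatar pair shown twice in a row."""
    trials = list(itertools.product(range(N_PAIRS), EMOTIONS, ATTENTION))
    for _ in range(max_tries):
        rng.shuffle(trials)
        # Accept the order only if neighbouring trials use different avatar pairs.
        if all(a[0] != b[0] for a, b in zip(trials, trials[1:])):
            return list(trials)
    raise RuntimeError("no valid order found; increase max_tries")


block = make_block(random.Random(0))
print(len(block))  # 48 = 12 avatar pairs x 2 emotions x 2 attention scenarios
```

Reshuffling until the constraint holds is simple but not the only option; a constructive ordering would also satisfy the same rule.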

MEG data analysis

Event-related magnetic fields

Trials with eye blinks or muscle artifacts within 200 ms before to 3600 ms after the gaze change (encompassing almost the entire unfolding of the facial expression) were rejected by visual inspection. Two types of averaged event-related magnetic fields (ERFs) were computed. First, to identify brain responses to changed social attention, we averaged neuromagnetic signals time-locked to the gaze change from the initial downward position to the left or rightward gaze position, using a 200 ms baseline before the gaze change and a digital low-pass filter at 40 Hz (IIR fourth-order Butterworth filter). Averages were computed separately for the mutual and deviated attention conditions. Second, to identify brain responses to the dynamic emotional expression, we averaged neuromagnetic signals time-locked to the onset of the emotional expression, using a 200 ms baseline before emotional expression onset. These averages were computed separately for each emotional expression under each social attention condition. Target trials and false positives were excluded from analysis. Marked and prolonged oscillatory activity was evident in the ERFs to dynamic emotional expressions with no clear peak of activity. To analyze these data further, we smoothed these ERFs with a digital low-pass filter at 8 Hz. A mean of 75 ± 11 trials was averaged for every condition and subject.
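As an illustration of the baseline and averaging steps, the following sketch subtracts the mean of the 200 ms pre-event interval from each single-sensor epoch and then averages the epochs sample by sample. The function names and the toy data are hypothetical, the epoch is assumed to start exactly 200 ms before the event, and the low-pass filtering step is deliberately left out.

```python
SFREQ_HZ = 1250      # sampling rate reported in the acquisition section
BASELINE_MS = 200    # pre-event baseline length


def baseline_correct(epoch, sfreq_hz=SFREQ_HZ, baseline_ms=BASELINE_MS):
    """Subtract the mean of the pre-event samples from a single-sensor epoch.

    Assumption: `epoch` starts exactly `baseline_ms` before the event.
    """
    n_base = round(baseline_ms * sfreq_hz / 1000)   # 250 samples at 1250 Hz
    base_mean = sum(epoch[:n_base]) / n_base
    return [x - base_mean for x in epoch]


def average_trials(epochs):
    """Average baseline-corrected epochs sample by sample to form an ERF."""
    corrected = [baseline_correct(e) for e in epochs]
    return [sum(samples) / len(samples) for samples in zip(*corrected)]


# Toy data (made up): two epochs with different offsets and a post-event step.
epoch_1 = [5.0] * 250 + [15.0] * 10
epoch_2 = [-3.0] * 250 + [-1.0] * 10
erf = average_trials([epoch_1, epoch_2])
```

In the toy data the offsets vanish after correction, so the averaged trace is flat during the baseline and reflects only the post-event change.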

ERF mean amplitude analyses

ERF activity elicited to the social attention (gaze) change

We measured the mean amplitude of the M170 response to gaze change between 170 and 200 ms from seven sensors in the left (MLO12, MLP31, MLP32, MLP33, MLT15, MLT16 and MLT26) and right (MRO12, MRO22, MRT15, MRT16, MRT25, MRT26 and MRT35) hemispheres for every subject.
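The window measurement can be sketched as follows, assuming that each sensor's ERF is stored as a list of samples starting 200 ms before the gaze change and that the per-sensor window means are then averaged across the seven sensors of a hemisphere. Both the data layout and the averaging across sensors are assumptions for illustration, and the toy ERF is invented.

```python
SFREQ_HZ = 1250        # sampling rate reported in the acquisition section
EPOCH_START_MS = -200  # assumed epoch start relative to the gaze change

LEFT_SENSORS = ["MLO12", "MLP31", "MLP32", "MLP33", "MLT15", "MLT16", "MLT26"]


def window_mean(erf, t_min_ms, t_max_ms, sfreq_hz=SFREQ_HZ, start_ms=EPOCH_START_MS):
    """Mean of one sensor's ERF over samples with t_min_ms <= t <= t_max_ms."""
    selected = [
        value
        for i, value in enumerate(erf)
        if t_min_ms <= start_ms + i * 1000 / sfreq_hz <= t_max_ms
    ]
    return sum(selected) / len(selected)


def m170_amplitude(erfs_by_sensor, sensors):
    """Average the 170-200 ms window mean across the listed sensors."""
    return sum(window_mean(erfs_by_sensor[s], 170, 200) for s in sensors) / len(sensors)


# Toy ERF (made up): 100 fT inside the 170-200 ms window, 0 fT elsewhere.
toy = [100.0 if 170 <= -200 + i * 1000 / SFREQ_HZ <= 200 else 0.0 for i in range(626)]
amplitude = m170_amplitude({s: toy for s in LEFT_SENSORS}, LEFT_SENSORS)
```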

ERF activity elicited to the dynamic emotional expression

The mean ERF amplitude was measured in consecutive 300 ms time windows from 100 to 2500 ms after the onset of dynamic emotional expression over two scalp regions. First, a circumscribed, bilateral posterior region was considered including five left-side (MLO12, MLO21, MLO22, MLO32 and MLO33) and five right-side (MRO22, MRO33, MRT16, MRT26 and MRT35) sensors. Second, an extended right anterior region was considered, with 15 right-side (MRF12, MRF23, MRF34, MRT11, MRT12, MRT13, MRT21, MRT22, MRT23, MRT24, MRT31, MRT32, MRT33, MRT41 and MRT42) sensors.
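For concreteness, the eight analysis windows and their sample ranges at the 1250 Hz sampling rate can be enumerated as in the sketch below. The helper names are hypothetical, the epoch is assumed to start 200 ms before expression onset, and the half-open sample ranges are an illustrative choice that keeps a boundary sample (for example the one at 400 ms) from being counted in two windows; how boundary samples were actually handled is not stated.

```python
SFREQ_HZ = 1250      # sampling rate reported in the acquisition section
BASELINE_MS = 200    # assumed: the epoch starts 200 ms before expression onset


def consecutive_windows(start_ms=100, stop_ms=2500, width_ms=300):
    """Return back-to-back (start, end) analysis windows in ms."""
    return [(t, t + width_ms) for t in range(start_ms, stop_ms, width_ms)]


def to_sample_range(window, sfreq_hz=SFREQ_HZ, baseline_ms=BASELINE_MS):
    """Convert a window in ms to a half-open [first, last) sample index range."""
    first, last = ((t + baseline_ms) * sfreq_hz // 1000 for t in window)
    return first, last


windows = consecutive_windows()
sample_ranges = [to_sample_range(w) for w in windows]
print(windows[0], windows[-1])  # (100, 400) (2200, 2500)
```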

Statistical analyses

All statistical analyses were performed using Statistica 8 (StatSoft, Inc.). Prior to these analyses, MEG data were multiplied by −1 in the hemisphere where in-flow fields were observed. Mean ERF amplitude for the gaze change was analyzed using a two-way repeated-measures analysis of variance (ANOVA) with social attention (mutual and deviated) and hemisphere (right and left) as within-subject factors. For mean ERF amplitudes to dynamic emotional expressions over posterior and anterior regions, an initial overall ANOVA including time window of measurement as a within-subject factor showed interactions between time window and our experimental factors of emotion and/or social attention on both sensor sets. Thus, to get some insight into the temporal unfolding of the effects of emotion and social attention, we ran ANOVAs in each time window on each selected scalp region, with social attention (mutual and deviated), emotion (angry and happy) and—for the bilateral posterior MEG sensor sets—hemisphere (right and left) as within-subjects factors.
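The analyses themselves were run in Statistica. As a rough illustration of the underlying arithmetic only, the sketch below uses the fact that, for a within-subject factor with two levels, the repeated-measures F(1, n−1) equals the square of the paired t statistic computed on per-subject values (for a main effect in a balanced design, after averaging each subject's data over the other factors). The function names and the simulated amplitudes are invented for the example.

```python
import math
import random
import statistics


def flip_inflow(amplitudes):
    """Multiply amplitudes by -1 so that both hemispheres share one polarity."""
    return [-a for a in amplitudes]


def paired_f(cond_a, cond_b):
    """F(1, n-1) for a two-level within-subject factor (square of the paired t)."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)


# Simulated per-subject mean amplitudes in fT (made up, 14 subjects).
rng = random.Random(0)
mutual_raw = [-rng.gauss(50, 10) for _ in range(14)]   # in-flow field: negative values
mutual = flip_inflow(mutual_raw)
deviated = [m + rng.gauss(8, 5) for m in mutual]

f_value, df = paired_f(deviated, mutual)
print(f"F({df[0]},{df[1]}) = {f_value:.2f}")
```

With 14 subjects the degrees of freedom are (1, 13), which matches the form of the F values reported for the two-level factors in this study.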

RESULTS

Behavior

Subjects responded accurately to the infrequent blue central circle targets, with a mean correct detection rate of 96.5 ± 1.1% and a mean response time of 632 ± 45 ms.

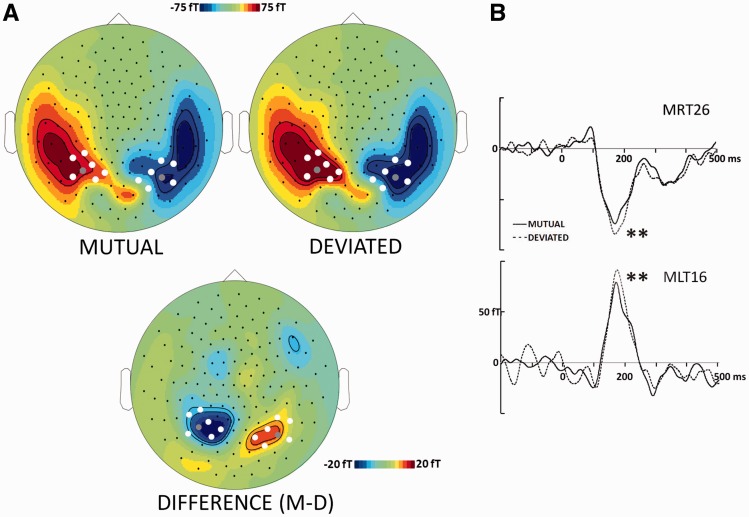

ERFs elicited to the gaze change

The gaze change elicited a prominent M170 response that peaked around 185 ms over bilateral occipito-temporal MEG sensors in all conditions (Figure 2). The bilateral pattern of MEG activity, with a flowing-in field over the right hemisphere and a flowing-out field over the left hemisphere, represented the typical M170 pattern to faces and eyes (Figure 3A) (Taylor et al., 2001; Watanabe et al., 2001, 2006). We performed mean amplitude analysis between 170 and 200 ms on left and right occipito-temporal sensors centered on the posterior maximum of the M170 component where the response to the gaze change was maximally differentiated. This showed a main effect of social attention with greater M170 amplitude for deviated relative to mutual attention (F(1,13) = 10.09, P < 0.01; Figure 3B). There was no significant lateralization effect or interaction between hemisphere and social attention.

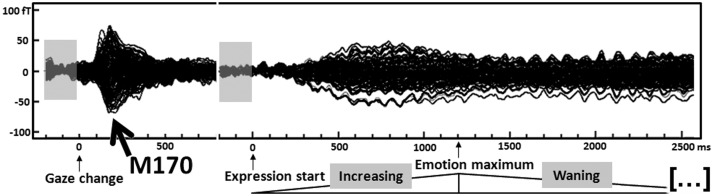

Fig. 2.

ERFs to the gaze change and subsequent dynamic emotional expression. Time course overlays of grand-averaged ERFs from 151 MEG sensors (group averages across all experimental conditions). On the timeline, the first zero corresponds to the gaze change. The arrow identifies the M170 to the gaze change. The light gray box depicts the pre-stimulus baseline for the M170 beginning 200 ms before the gaze change. The second zero in the timeline corresponds to the facial expression onset. Grand-averaged ERFs for dynamic emotional expression are depicted and appear to peak before 1000 ms after facial expression onset. The corresponding 200-ms pre-stimulus baseline for these responses is shown as a preceding light gray box. The evolution and subsequent wane of the emotional expression are indicated as a schematic triangle below the time scale. The vertical scale depicts ERF strength in femtoTesla (fT). The horizontal scale depicts time relative to the gaze change or facial expression onset in milliseconds (ms).

Fig. 3.

Effect of social attention on the M170. (A) Group-averaged topographic maps of mean ERF amplitude between 170 and 200 ms post-gaze change for MUTUAL (top left) and DEVIATED (top right) conditions, and the DIFFERENCE between these conditions (bottom), with corresponding magnitude calibration scales in femtoTesla (fT). Black dots depict MEG sensor positions, white dots depict sensors whose activity was sampled and analyzed statistically and gray dots indicate the illustrated sensors (which were also included in the statistical analysis). (B) Time course of ERFs for the representative sensors in right (MRT26) and left (MLT26) hemispheres shown in (A). The deviated condition elicited the largest ERF amplitudes. The difference in ERF amplitude across deviated and mutual conditions showed a main effect that was significant at the P < 0.01 level (dual asterisks). In the ERF waveforms, the solid lines represent the MUTUAL condition and the dashed lines represent the DEVIATED condition.

ERFs elicited to the dynamic emotional expression

Discernible MEG activity was observed from ∼300 ms after the onset of the emotional expression and persisted for the entire emotional expression display (Figure 2). This activity reached a maximum strength just prior to the maximal expression of the emotion. The activity appeared to differentiate happy vs angry expressions over a circumscribed bilateral posterior region and an extended right anterior region (Figure 4A). We performed mean amplitude analyses on bilateral posterior and right anterior sensors that covered both regions, including eight consecutive 300-ms time windows from 100–400 to 2200–2500 ms (Table 1; Figure 4B and C).

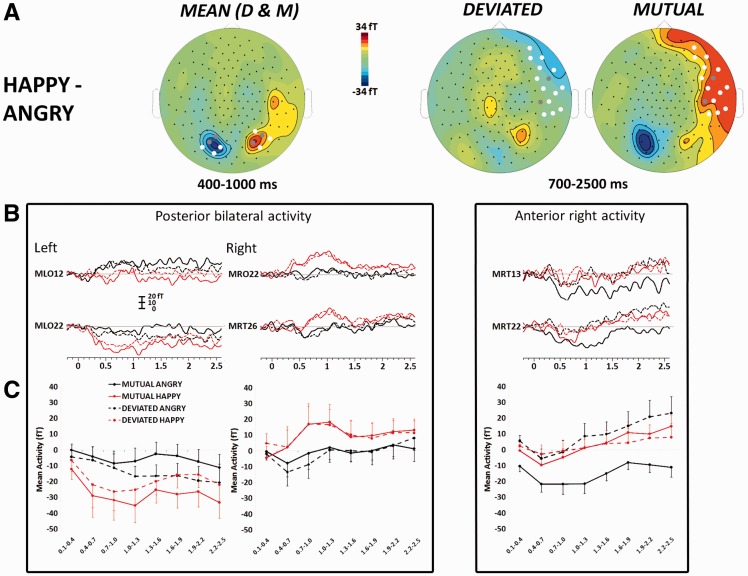

Fig. 4.

Group-averaged ERF amplitudes to dynamic emotional expressions. (A) Topographical ERF difference maps across happy and angry expressions (HAPPY–ANGRY) for the MEAN of mutual and deviated (D and M) conditions between 400 and 1000 ms, and for MUTUAL and DEVIATED social attention conditions between 700 and 2500 ms. A color calibration scale is shown in femtoTesla (fT). (B) Overall time course of ERFs to the evolution and waning of dynamic expressions, for the four experimental conditions. Data from two representative posterior and two representative anterior right sensors are illustrated. ERF amplitude (in fT) appears on the ordinate, and time (in seconds; relative to dynamic expression onset) is plotted on the abscissa. (C) Mean ERF amplitude (in fT) at the posterior and anterior sensor arrays as a function of emotion and social scenario. The grand mean amplitude (±SEM) of ERFs was computed over eight consecutive 300 ms time windows, between 100–400 and 2200–2500 ms after the start of the dynamic emotional expression. For parts (B) and (C), the four different line types correspond to the four different experimental conditions.

Table 1.

Statistical analysis of ERF activity to the emotional expression as a function of condition and post-expression onset time interval

| Effect / window (ms) | 100–400 | 400–700 | 700–1000 | 1000–1300 | 1300–1600 | 1600–1900 | 1900–2200 | 2200–2500 |
|---|---|---|---|---|---|---|---|---|
| Bilateral posterior | | | | | | | | |
| Emotion (Emo) | n.s. | F = 6.62, P < 0.05 | F = 8.72, P < 0.05 | F = 5.69, P < 0.05 | n.s. | n.s. | n.s. | n.s. |
| Social attention (Soc) | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. |
| Soc × Emo | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. |
| Soc × Emo × Hem | F = 4.93, P < 0.05 | n.s. | n.s. | F = 5.17, P < 0.05 | F = 5.35, P < 0.05 | F = 5.79, P < 0.05 | F = 5.11, P < 0.05 | n.s. |
| Left posterior | | | | | | | | |
| Emo | n.s. | F = 6.24, P < 0.05 | F = 4.9, P < 0.05 | n.s. | n.s. | n.s. | n.s. | – |
| Soc × Emo | n.s. | n.s. | n.s. | F = 8.32, P < 0.05 | F = 8.24, P < 0.05 | F = 8.97, P < 0.05 | F = 6.23, P < 0.05 | – |
| Emo (mutual) | n.s. | – | – | F = 6.41, P < 0.05 | F = 5.74, P < 0.05 | F = 8.62, P < 0.05 | F = 4.69, P < 0.05 | – |
| Emo (deviated) | n.s. | – | – | n.s. | n.s. | n.s. | n.s. | – |
| Right posterior | | | | | | | | |
| Emo | n.s. | F = 4.35, P = 0.057 | F = 10.72, P < 0.01 | F = 6.24, P < 0.05 | n.s. | n.s. | n.s. | – |
| Soc × Emo | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | – |
| Right anterior | | | | | | | | |
| Emotion (Emo) | n.s. | F = 4.4, P = 0.056 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. |
| Social attention (Soc) | F = 9.08, P < 0.01 | F = 13.9, P < 0.01 | F = 11.86, P < 0.01 | F = 21.61, P < 0.001 | F = 10.76, P < 0.01 | n.s. | F = 6.09, P < 0.05 | F = 7.09, P < 0.05 |
| Soc × Emo | n.s. | n.s. | F = 5.11, P < 0.05 | F = 15.68, P < 0.01 | F = 13.65, P < 0.01 | F = 12.66, P < 0.01 | F = 21.58, P < 0.001 | F = 38.03, P < 0.001 |
| Emo (mutual) | n.s. | F = 5.24, P < 0.05 | F = 12.51, P < 0.01 | F = 14.50, P < 0.01 | F = 19.99, P < 0.001 | F = 16.40, P < 0.01 | F = 14.85, P < 0.01 | F = 24.43, P < 0.001 |
| Emo (deviated) | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | F = 5.38, P < 0.05 |
| Soc (angry) | n.s. | F = 21.2, P < 0.001 | F = 29.79, P < 0.001 | F = 28.70, P < 0.001 | F = 19.5, P < 0.001 | F = 13.6, P < 0.01 | F = 20.08, P < 0.001 | F = 39.49, P < 0.001 |
| Soc (happy) | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. |

Notes: Repeated measures ANOVAs were performed over bilateral posterior and right anterior sensors in each of the eight 300-ms time windows. The bilateral posterior analysis had three within-subjects factors: social attention (Soc), emotion (Emo) and hemisphere (Hem). The right anterior analysis had two within-subjects factors: social attention (Soc) and emotion (Emo). F- and P-values are reported when significant. Planned comparisons were performed when significant main effects or interactions were observed. Emo (mutual) and Emo (deviated) correspond to the effects of emotion under mutual and deviated attention conditions, respectively. Soc (angry) and Soc (happy) correspond to the effects of social attention for the angry and happy emotions, respectively. n.s. = not significant.

The analysis of bilateral posterior responses showed a sustained main effect of emotion independent of social attention between 400 and 1300 ms (Table 1; see also Figure 4C, left panels). A significant three-way interaction between emotion, social attention and hemisphere was seen between 1000 and 1300 ms. This reflected a main effect of emotion at right posterior sensors, whereas the emotion effect was dependent on social attention, reaching significance under mutual attention only, over the left posterior sensors. The differentiated response to emotions under mutual attention persisted between 1300 and 2200 ms on left posterior sensors (Table 1).

In contrast, analysis of the right anterior response demonstrated a sustained main effect of social attention over most time windows from 100–400 to 2200–2500 ms post-expression onset, the 1600–1900 ms window being the only exception (Table 1; see also Figure 4B and C, right panels). A trend for a main effect of emotion was observed only in the 400–700 ms time window. Importantly, there was a prolonged and significant interaction between emotion and social attention from 700–1000 to 2200–2500 ms; this interaction was driven by a differential response to angry vs happy expressions only under mutual attention, as well as by a marked effect of the social attention condition only for anger (Figure 4 and Table 1). We note that these effects surfaced between 400 and 700 ms but without a significant interaction between emotion and social attention.

DISCUSSION

In this study, we aimed to investigate the temporal dynamics of ERFs associated with the perception of dynamic dyadic social interactions under a TPV. The main findings were (i) larger M170s to the gaze change in deviated when compared with mutual attention scenarios and (ii) sustained ERF activity to the subsequent dynamic expression. This latter activity was modulated by both displayed emotion and social attention scenario in right anterior sensors, with angry vs happy faces under mutual attention being distinguished from 400 ms after emotion onset. In contrast, activity in posterior sensors was initially modulated by emotional expression only; then, from 1000 ms onwards, activity on left posterior sensors was further modulated by social attention, with greater differentiation to angry vs happy faces under mutual attention. Our data demonstrate complex spatiotemporal effects to fairly simple displays of dynamic facial expressions (relative to a real-life social interaction). We discuss the separable neural effects due to the change in gaze and in the facial expression separately below.

Social attention modulates M170 amplitude

In our paradigm, mutual vs deviated attention conditions were generated from a gaze change of two avatar faces that never gazed at the viewer. This TPV approach was intended to create distinct social scenarios that were not based on a direct interaction of the stimuli with the subject, that is, no direct gaze was involved. Direct gaze sends important mutual attention signals to the viewer, but also elicits a feeling of personal involvement (Conty et al., 2010), thus potentially evoking brain activity related to both social attention and self-involvement processing, which are indistinguishable in this type of situation. It is however likely that these processes involve dissociable brain responses as shown by some recent fMRI and brain-lesion studies (Schilbach et al., 2006, 2007). Furthermore, under SPV, social attention scenarios of mutual vs deviated attention are directly mapped onto direct vs averted gaze directions. Hence, while several studies have shown N170 (in EEG) and M170 (in MEG) modulation for direct vs averted gaze directions (e.g. Puce et al., 2000; Watanabe et al., 2001, 2006; Conty et al., 2007), it is unclear whether this modulation reflects an early neural encoding of social attention, rather than processes related to self-involvement or to the coding of different gaze directions. Here, we show that under a situation where no self-involvement process was implicated and only averted gaze was seen, social content information—in the form of mutual vs deviated attention seen under TPV—was extracted early by the brain, as indicated by the modulation of the M170.

Our neurophysiological finding converges with a previous fMRI study that showed an influence of social context on the neural responses to gaze changes (Pelphrey et al., 2003). This latter effect was observed in the STS as well as in the intraparietal sulcus and fusiform gyrus. Source localization was beyond the scope of this study as we were concerned with the neurophysiological dynamics underlying the perception of changing social attention. Previously, it has been proposed that M170 neural sources sensitive to eyes and gaze direction are located in the posterior STS region (Itier and Taylor, 2004; Conty et al., 2007; Henson et al., 2009). Our M170 distribution is consistent with the involvement of these regions, and adjacent inferior parietal regions that belong to the attentional brain system (Hoffman and Haxby, 2000; Lamm et al., 2007). This would be consistent with the observation of a larger M170 for deviated relative to mutual attention, which suggests that this effect may also be related to the changes in visuospatial attention induced by seeing the gaze of others turning toward the periphery.

Our data contrast with a previous study of social attention perception where only late effects of social scenarios were found (from 300 ms post-gaze change; Carrick et al., 2007). However, these authors created social scenarios with gaze aversions in a central face flanked by two faces with (unchanging) deviated gaze: the central face’s gaze changed from direct gaze with the viewer (mutual attention under SPV) to one of three social attention scenarios under TPV (mutual attention with one flanker, group deviated attention with all faces looking to one side, and a control with upward gaze and no interaction with either flanker face). Thus, gaze aversion in the central face always produced a social attention change relative to the viewer. This social attention ‘away’ change may have masked any early differentiation between the ensuing social scenarios. Taken together with the results of Carrick et al. (2007), our finding suggests that the social modulation of the N/M170 represents the first of a set of neural processes that evaluate the social significance of an incoming stimulus.

We note that the N/M170s elicited to dynamic gaze changes here and in other studies (Puce et al., 2000; Conty et al., 2007) appear to be later in latency than those elicited to static face onset. Yet, the scalp distributions are identical for static and dynamic stimuli when compared directly in the same experiment (Puce et al., 2007). The latency difference is likely to be caused by the magnitude of the stimulus change: static face onset alters a large part of the visual field, whereas for a dynamic stimulus (e.g. a gaze change), only a very small visual change is apparent (see Puce et al., 2007; Puce and Schroeder, 2010).

There is an additional consideration in our design with respect to the basic movement direction in our visual stimuli. In deviated attention trials, gaze directions were either both rightward or both leftward, whereas in mutual attention trials, one face gazed rightward and the other leftward. It could be argued that the M170 effect reflects coding of homogeneous vs heterogeneous gaze direction, related to the activation of different neuronal populations under each condition (Perrett et al., 1985). At an even lower level, there could be neural differences in response to a congruous or incongruous local movement direction, or alternatively to visual contrast changes, across the two conditions. In all of the above cases, the heterogeneous condition could theoretically yield a net cancellation of overall summed electromagnetic activity. However, we believe that this is unlikely given that in Carrick et al. (2007), no differences in N170 amplitude occurred across heterogeneous and homogeneous gaze conditions. Additionally, comparisons between leftward vs rightward gaze movements have not shown directional differences in posteriorly distributed N170 or M170 amplitudes (Puce et al., 2000; Watanabe et al., 2001).

Social attention and facial expression interactions in sustained brain responses to dynamic emotional expressions

With regard to the emotional expressions themselves, we were interested in examining the temporal deployment of the neural responses to the emotional expressions under the different social attention scenarios. Given that the neural response profile of a social attention change has been previously described (Puce et al., 2000), we separated the social attention stimulus from the emotional expression to allow the neural activity associated with the social attention change to be elicited and die away prior to delivering the second stimulus consisting of the emotional expression. As we used naturalistic visual displays of prolonged dynamic emotional expressions, we believed it unlikely that discrete, well-formed ERP components would be detectable. Accordingly, discernible neural activity differentiating between the emotional expressions occurred over a prolonged period of time, as the facial expressions were seen to evolve. Brain responses appeared to peak just prior to the apex of the facial expression and persisted as the facial emotion waned, in agreement with the idea that motion is an important part of a social stimulus (Kilts et al., 2003; Sato et al., 2004a; Lee et al., 2010; see also Sato et al., 2010b and Puce et al., 2007).

Our main question concerned integration of social attention and emotion signals from seen faces. Classical neuroanatomical models of face processing suggest an early independent processing of gaze and facial expression cues followed by later stages of information integration to extract meaning from faces (e.g. Haxby et al., 2000). This view is supported by electrophysiological studies that have shown early independent effects of gaze direction and facial expression during the perception of static faces (Klucharev and Sams, 2004; Pourtois et al., 2004; Rigato et al., 2009). However, behavioral studies indicate that eye gaze and emotion are inevitably computed together as shown by the mutual influence of eye gaze and emotion in various tasks (e.g. Adams and Kleck, 2003, 2005; Sander et al., 2007; see Graham and Labar, 2012 for a review). Furthermore, recent brain imaging studies supported the view of an intrinsically integrated processing of eye gaze and emotion (N’Diaye et al., 2009; Cristinzio et al., 2010). Here, using MEG, our main result was that there were different effects of emotion and social attention over different scalp regions and different points in time. An initial main effect of emotion was not modulated by social attention over posterior sensors; this effect started around 400 ms post-expression onset and was then followed by an interaction between emotion and social attention from 1000 to 2200 ms, over left posterior sensors. In contrast, there was an early sustained interaction between emotion and social attention on right anterior sensors, emerging from 400 to 700 ms. Thus, in line with recent models of face processing (Haxby et al., 2000; Pessoa and Adolphs, 2010), these findings support the view of multiple routes for face processing: emotion is initially coded separately from gaze signals over bilateral posterior sensors, with (parallel) early integrated processing of emotion and social attention in right anterior sensors, and subsequent integrated processing of both attributes over left posterior sensors.

These findings complement those of previous studies using static faces (Klucharev and Sams, 2004; Rigato et al., 2009). The early interaction between emotion and social attention on anterior sensors obtained here shows that the neural operations reflected over these sensors are attuned to respond to combined socio-emotional information. Although we do not know the neural sources of this effect, it is tempting to relate this result to the involvement of the amygdala in the combination of information from gaze and emotional expression (Adams et al., 2003; Sato et al., 2004b; Hadjikhani et al., 2008; N’Diaye et al., 2009), as well as in the processing of dynamic stimuli (Sato et al., 2010a). Furthermore, the lateralization of this effect is consistent with the known importance of the right hemisphere in emotional communication, as shown by the aberrant rating of emotional expression intensity in patients with right (but not left) temporal lobectomy (Cristinzio et al., 2010). However, any interpretation of the lateralization of the effects obtained here should be made with caution, especially as we also found a left lateralized effect with regard to the interaction between emotion and social attention over posterior sensors. These topographical distributions are likely to reflect the contribution of the sources of the different effects that we obtained, which were activated concomitantly and overlapped at the scalp surface.

Mutual attention in angry faces increases sustained brain responses to dynamic emotional expressions

Happy and angry expressions both signal an approach-related behavioral tendency, but with opposite valence. As expected, we found more differentiated responses to these expressions under the congruent, approach-related condition of mutual attention than under the group deviated attention condition. This is in agreement with data that have shown enhanced emotion processing when gaze and emotional expressions signal congruent behavioral tendencies (Adams and Franklin, 2009; Rigato et al., 2009; see also Harmon-Jones, 2004 and Hietanen et al., 2008). In our paradigm, the compatible approach-related tendencies signaled by the avatars’ expressions (anger and happiness) and mutual attention might have enhanced the emotional expression salience, resulting in more differentiated brain responses to these opposite emotions under the mutual relative to the deviated attention condition. Interestingly, this effect contrasts with the larger MEG response seen under the deviated relative to mutual attention when the emotion was not yet displayed. This underlines the interdependence of social attention and emotion processing: the social attention change gives the emotion a context. Moreover, the differential effect obtained seemed to take the form of a dissociated response to angry avatars with mutual gaze when compared with the other conditions, over the right anterior sensors. Note that flowing-in (seen as negative) and flowing-out (seen as positive) magnetic fields cannot be easily interpreted in terms of the activation strength of the underlying sources, as they reflect the spatial arrangement of these sources as well as their strength. This is why we prefer to refer to differential responses between conditions, or to a dissociated response in one condition, rather than to a heightened or lowered response in one condition relative to the others. Thus, our results suggest the involvement of selective neural resources when observing an angry interaction between two individuals. This is reminiscent of Klucharev and Sams’ (2004) results showing larger ERPs for angry than happy static expressions with direct gaze, while the opposite pattern was obtained under the averted gaze condition. It may reflect the importance of the detection of angry expressions, which evoke hostile intentions and threat, not only for oneself but also when observing two individuals in close proximity who are engaged in a mutual interaction.

Limitations

Finally, it is important to note that our study did not include any explicit task related to the perceived emotion and social attention situations. Thus, it is difficult to explicitly relate the effects obtained to either a perceptual stage of information processing or some higher-level stage of meaning extraction from faces. This question may be an interesting topic for future studies, given that this study shows that neurophysiological activity can be reliably recorded to prolonged dynamic facial expressions. The bigger question here is how sustained neural activity from one neural population is relayed to other brain regions in the social network. Source localization, using a realistic head model generated from high-resolution structural MRIs of the subjects, may also contribute to disentangling these complex interactions in the social network of the brain. This may be challenging to implement, given the temporally overlapping effects seen in this study with respect to isolated effects of emotion, and integration of social attention and emotion information.

The separation of the social attention stimulus and the dynamic emotional expression could potentially be seen as a design limitation in this study. However, the design allows the neural activity to each of these important social stimuli to play out separately in its own time and be detected reliably. In a design where social attention and emotional expression change simultaneously, there is a risk that the complex neural activity profiles associated with these two potentially separate brain processes might superimpose or cancel at MEG sensors.
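The superposition concern can be illustrated with a toy simulation. The sketch below is not the analysis used in this study: the Gaussian response shape, the amplitudes, the latencies and the opposite polarities are all hypothetical values, chosen only to show how two responses that sum linearly at one sensor can cancel when they overlap in time and yet remain detectable when they are separated.

```python
import math

def gaussian_response(t_ms, peak_ms, amplitude, width_ms=50.0):
    """Toy evoked response: a Gaussian bump (all parameters are hypothetical)."""
    return amplitude * math.exp(-0.5 * ((t_ms - peak_ms) / width_ms) ** 2)

def sensor_signal(times_ms, attention_peak_ms, emotion_peak_ms):
    """Linear sum, at a single sensor, of two responses with opposite polarity."""
    return [
        gaussian_response(t, attention_peak_ms, amplitude=+1.0)
        + gaussian_response(t, emotion_peak_ms, amplitude=-1.0)
        for t in times_ms
    ]

times_ms = list(range(0, 2001, 10))

# Simultaneous change: both responses peak at the same latency and cancel.
simultaneous = sensor_signal(times_ms, attention_peak_ms=170, emotion_peak_ms=170)

# Temporally separated changes: each response occupies its own time window.
separated = sensor_signal(times_ms, attention_peak_ms=170, emotion_peak_ms=1170)

peak_simultaneous = max(abs(v) for v in simultaneous)
peak_separated = max(abs(v) for v in separated)
print(f"peak |signal|, simultaneous: {peak_simultaneous:.3f}")
print(f"peak |signal|, separated:    {peak_separated:.3f}")
```

Complete cancellation is the extreme case; real sources differ in orientation, strength and time course, so partial superposition would be the more typical outcome.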

CONCLUSION

The neural dynamics underlying the perception of an emotional expression generated in a social interaction are complex. Here, we disentangled neural effects of social attention from emotion by temporally separating these elements: social attention changes were indexed by M170, whereas the prolonged emotional expressions presented subsequently elicited clear evoked neural activity that was sustained effectively for the duration of the emotion. The modulation of this sustained activity by social attention context underscores the integrated processing of attention and expression cues by the human brain. These data further suggest that as we view social interactions in real life, our brains continually process, and perhaps anticipate, the unfolding social scene before our eyes. How these prolonged neural signals influence brain regions remote from the local neural generators remains an unanswered question that goes to the core of understanding information processing in the social brain.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Acknowledgments

We wish to thank Jean-Didier Lemaréchal and Lydia Yahia Cherif for their technical support in data analysis, and all the staff of CENIR for their help in the experimental procedures. J.L.U. was supported by the Comisión Nacional de Investigación Científica y Tecnológica (CONICYT), Chile (PCHA, Master 2 y Doctorado) and by Fondation de France (Comité Berthe Fouassier) grant no. 2012-00029844. A.P. was supported by NIH (USA) grant NS-049436. N.G. was supported by ANR (France) grant SENSO.

REFERENCES

- Adams RB, Franklin RG. Influence of emotional expression on the processing of gaze direction. Motivation and Emotion. 2009;33:106–12.

- Adams RB, Gordon HL, Baird AA, Ambady N, Kleck RE. Effects of gaze on amygdala sensitivity to anger and fear faces. Science. 2003;300:1536. doi: 10.1126/science.1082244.

- Adams RB, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14:644–7. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Adams RB, Jr, Kleck RE. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion. 2005;5:3–11. doi: 10.1037/1528-3542.5.1.3.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–27. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Carrick OK, Thompson JC, Epling JA, Puce A. It’s all in the eyes: neural responses to socially significant gaze shifts. NeuroReport. 2007;18:763–6. doi: 10.1097/WNR.0b013e3280ebb44b.

- Conty L, N’Diaye K, Tijus C, George N. When eye creates the contact! ERP evidence for early dissociation between direct and averted gaze motion processing. Neuropsychologia. 2007;45:3024–37. doi: 10.1016/j.neuropsychologia.2007.05.017.

- Conty L, Russo M, Loehr V, et al. The mere perception of eye contact increases arousal during a word-spelling task. Social Neuroscience. 2010;5:171–86. doi: 10.1080/17470910903227507.

- Cristinzio C, N’Diaye K, Seeck M, Vuilleumier P, Sander D. Integration of gaze direction and facial expression in patients with unilateral amygdala damage. Brain. 2010;133:248–61. doi: 10.1093/brain/awp255.

- Ewbank MP, Fox E, Calder AJ. The interaction between gaze and facial expression in the amygdala and extended amygdala is modulated by anxiety. Frontiers in Human Neuroscience. 2010;4:56. doi: 10.3389/fnhum.2010.00056.

- George N, Driver J, Dolan RJ. Seen gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. NeuroImage. 2001;13:1102–12. doi: 10.1006/nimg.2001.0769.

- Graham R, Labar KS. Neurocognitive mechanisms of gaze-expression interactions in face processing and social attention. Neuropsychologia. 2012;50:553–66. doi: 10.1016/j.neuropsychologia.2012.01.019.

- Hadjikhani N, Hoge R, Snyder J, de Gelder B. Pointing with the eyes: the role of gaze in communicating danger. Brain and Cognition. 2008;68:1–8. doi: 10.1016/j.bandc.2008.01.008.

- Hardee JE, Thompson JC, Puce A. The left amygdala knows fear: laterality in the amygdala response to fearful eyes. Social Cognitive and Affective Neuroscience. 2008;3:47–54. doi: 10.1093/scan/nsn001.

- Harmon-Jones E. Contributions from research on anger and cognitive dissonance to understanding the motivational functions of asymmetrical frontal brain activity. Biological Psychology. 2004;67:51–76. doi: 10.1016/j.biopsycho.2004.03.003.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–33. doi: 10.1016/s1364-6613(00)01482-0.

- Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biological Psychiatry. 2002;51:59–67. doi: 10.1016/s0006-3223(01)01330-0.

- Henson RN, Mouchlianitis E, Friston KJ. MEG and EEG data fusion: simultaneous localisation of face-evoked responses. NeuroImage. 2009;47:581–9. doi: 10.1016/j.neuroimage.2009.04.063.

- Hietanen JK, Leppänen JM, Peltola MJ, Linna-aho K, Ruuhiala HJ. Seeing direct and averted gaze activates the approach–avoidance motivational brain systems. Neuropsychologia. 2008;46:2423–30. doi: 10.1016/j.neuropsychologia.2008.02.029.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience. 2000;3:80–4. doi: 10.1038/71152.

- Ishai A. Let’s face it: it’s a cortical network. NeuroImage. 2008;40:415–9. doi: 10.1016/j.neuroimage.2007.10.040.

- Itier RJ, Taylor MJ. Source analysis of the N170 to faces and objects. NeuroReport. 2004;15:1261–5. doi: 10.1097/01.wnr.0000127827.73576.d8.

- Kilts CD, Egan G, Gideon DA, Ely TD, Hoffman JM. Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. NeuroImage. 2003;18:156–68. doi: 10.1006/nimg.2002.1323.

- Klucharev V, Sams M. Interaction of gaze direction and facial expressions processing: ERP study. NeuroReport. 2004;15:621–5. doi: 10.1097/00001756-200403220-00010.

- Lamm C, Batson CD, Decety J. The neural substrate of human empathy: effects of perspective-taking and cognitive appraisal. Journal of Cognitive Neuroscience. 2007;19:42–58. doi: 10.1162/jocn.2007.19.1.42.

- Lee LC, Andrews TJ, Johnson SJ, et al. Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia. 2010;48:477–90. doi: 10.1016/j.neuropsychologia.2009.10.005.

- N’Diaye K, Sander D, Vuilleumier P. Self-relevance processing in the human amygdala: gaze direction, facial expression, and emotion intensity. Emotion. 2009;9:798–806. doi: 10.1037/a0017845.

- Pelphrey KA, Singerman JD, Allison T, McCarthy G. Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia. 2003;41:156–70. doi: 10.1016/s0028-3932(02)00146-x.

- Perrett DI, Smith PA, Potter DD, et al. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proceedings of the Royal Society of London. Series B. 1985;223:293–317. doi: 10.1098/rspb.1985.0003.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nature Reviews. Neuroscience. 2010;11:773–83. doi: 10.1038/nrn2920.

- Pourtois G, Sander D, Andres M, et al. Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. European Journal of Neuroscience. 2004;20:3507–15. doi: 10.1111/j.1460-9568.2004.03794.x.

- Puce A, Schroeder CE. Multimodal studies using dynamic faces, Ch. 9. In: Giese MA, Curio C, Bülthoff HH, editors. Dynamic Faces: Insights from Experiments and Computation. Cambridge: MIT Press Books; 2010. pp. 123–40.

- Puce A, Epling JA, Thompson JC, Carrick OK. Neural responses elicited to face motion and vocalization pairings. Neuropsychologia. 2007;45:93–106. doi: 10.1016/j.neuropsychologia.2006.04.017.

- Puce A, Smith A, Allison T. ERPs evoked by viewing facial movements. Cognitive Neuropsychology. 2000;17:221–39. doi: 10.1080/026432900380580.

- Puce A, Syngeniotis A, Thompson JC, Abbott DF, Wheaton KJ, Castiello U. The human temporal lobe integrates facial form and motion: evidence from fMRI and ERP studies. NeuroImage. 2003;19:861–9. doi: 10.1016/s1053-8119(03)00189-7.

- Rigato S, Farroni T, Johnson MH. The shared signal hypothesis and neural responses to expressions and gaze in infants and adults. Social Cognitive and Affective Neuroscience. 2009;5:88–97. doi: 10.1093/scan/nsp037.

- Sander D, Grandjean D, Kaiser S, Wehrle T, Scherer KR. Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. European Journal of Cognitive Psychology. 2007;19:470–80.

- Sato W, Kochiyama T, Uono S, Yoshikawa S. Amygdala integrates emotional expression and gaze direction in response to dynamic facial expressions. NeuroImage. 2010a;50:1658–65. doi: 10.1016/j.neuroimage.2010.01.049.

- Sato W, Kochiyama T, Yoshikawa S. Amygdala activity in response to forward versus backward dynamic facial expressions. Brain Research. 2010b;1315:92–9. doi: 10.1016/j.brainres.2009.12.003.

- Sato W, Kochiyama T, Yoshikawa S, Naito E, Matsumura M. Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Cognitive Brain Research. 2004a;20:81–91. doi: 10.1016/j.cogbrainres.2004.01.008.

- Sato W, Yoshikawa S, Kochiyama T, Matsumura M. The amygdala processes the emotional significance of facial expressions: an fMRI investigation using the interaction between expression and face direction. NeuroImage. 2004b;22:1006–13. doi: 10.1016/j.neuroimage.2004.02.030.

- Schilbach L, Koubeissi MZ, David N, Vogeley K, Ritzl EK. Being with virtual others: studying social cognition in temporal lobe epilepsy. Epilepsy and Behavior. 2007;11:316–23. doi: 10.1016/j.yebeh.2007.06.006.

- Schilbach L, Wohlschlaeger AM, Kraemer NC, et al. Being with virtual others: neural correlates of social interaction. Neuropsychologia. 2006;44:718–30. doi: 10.1016/j.neuropsychologia.2005.07.017.

- Senju A, Hasegawa T. Direct gaze captures visuospatial attention. Visual Cognition. 2005;12:127–44.

- Senju A, Tojo Y, Yaguchi K, Hasegawa T. Deviant gaze processing in children with autism: an ERP study. Neuropsychologia. 2005;43:1297–306. doi: 10.1016/j.neuropsychologia.2004.12.002.

- Taylor MJ, George N, Ducorps A. Magnetoencephalographic evidence of early processing of direction of gaze in humans. Neuroscience Letters. 2001;316:173–7. doi: 10.1016/s0304-3940(01)02378-3.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–94. doi: 10.1016/j.tics.2005.10.011.

- Watanabe S, Kakigi R, Miki K, Puce A. Human MT/V5 activity on viewing eye gaze changes in others: a magnetoencephalographic study. Brain Research. 2006;1092:152–60. doi: 10.1016/j.brainres.2006.03.091.

- Watanabe S, Kakigi R, Puce A. Occipitotemporal activity elicited by viewing eye movements: a magnetoencephalographic study. NeuroImage. 2001;13:351–63. doi: 10.1006/nimg.2000.0682.

- Wicker B, Perrett DI, Baron-Cohen S, Decety J. Being the target of another’s emotion: a PET study. Neuropsychologia. 2003;41:139–46. doi: 10.1016/s0028-3932(02)00144-6.
