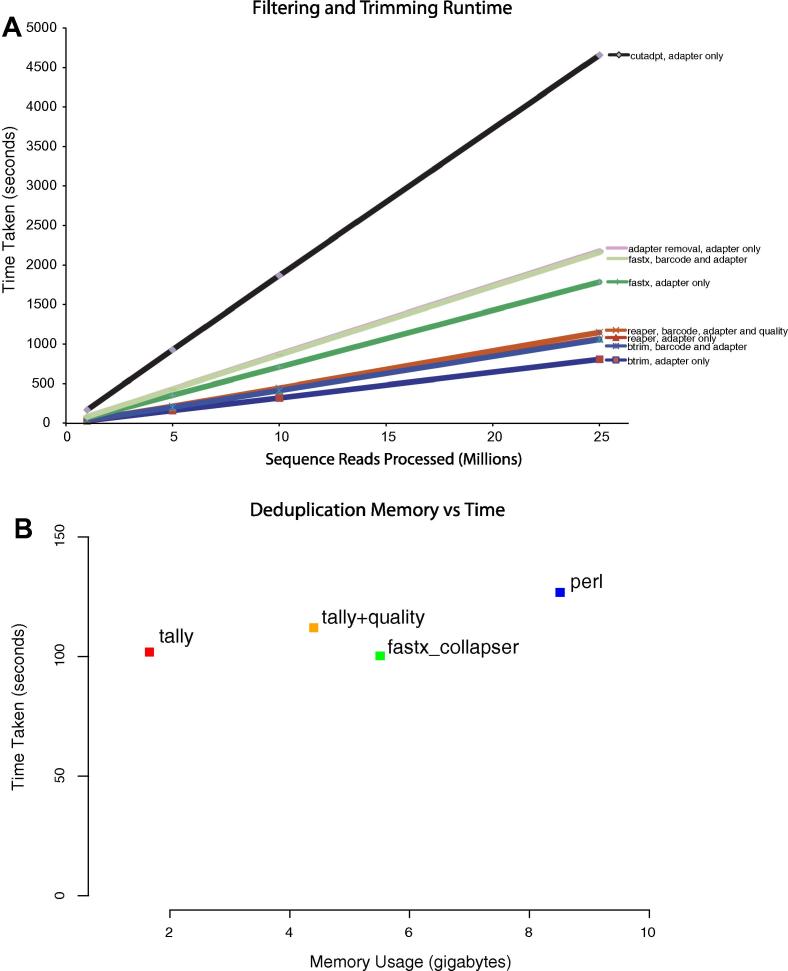

Fig. 5.

Benchmarking of read trimming and filtering. (A) Run time on benchmark datasets of 1, 5, 10 and 25 million reads for Reaper, Btrim, Cutadapt, FASTX and AdapterRemoval. For each dataset size, the total run time in seconds is given for each method. In all cases input was provided as compressed FASTQ and output was compressed on the fly. The same adapter and barcode sequences were provided to each method. (B) Memory usage and run time for a deduplication task on a FASTQ file with 65 M reads and 2.5 G bases. Results are shown for Tally, Fastx_collapser and a simple custom Perl program employing an associative array, together with an additional Tally run in which quality data were tracked for each deduplicated read (using the per-base maximum quality score across all duplicate reads).
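To make the deduplication task in panel (B) concrete, the following is a minimal Python sketch of the associative-array approach, extended to keep the per-base maximum quality across duplicates. It is not the Perl program or the Tally implementation used in the benchmark; the function name `dedup_reads` and the in-memory `(sequence, quality)` input are assumptions made for illustration, and quality strings are assumed to use a single fixed-offset Phred encoding so that character order matches score order.

```python
from typing import Dict, Iterable, List, Tuple


def dedup_reads(records: Iterable[Tuple[str, str]]) -> List[Tuple[str, int, str]]:
    """Collapse identical read sequences (hypothetical helper, for illustration).

    records: iterable of (sequence, quality_string) pairs of equal length.
    Returns (sequence, copy_count, merged_quality) in first-seen order.
    """
    # Associative array keyed by read sequence: [copy count, best quality so far].
    table: Dict[str, list] = {}
    for seq, qual in records:
        entry = table.get(seq)
        if entry is None:
            table[seq] = [1, qual]
        else:
            entry[0] += 1
            # Per-base maximum: with a fixed ASCII offset, the larger
            # character is the larger Phred score.
            entry[1] = "".join(max(a, b) for a, b in zip(entry[1], qual))
    return [(seq, count, qual) for seq, (count, qual) in table.items()]


if __name__ == "__main__":
    reads = [("ACGT", "IIII"), ("ACGT", "!J!J"), ("TTGA", "####")]
    for seq, count, qual in dedup_reads(reads):
        print(seq, count, qual)
```

Because every distinct sequence is held in memory, the memory footprint of such an approach grows with the number of unique reads, which is the quantity panel (B) compares across tools.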