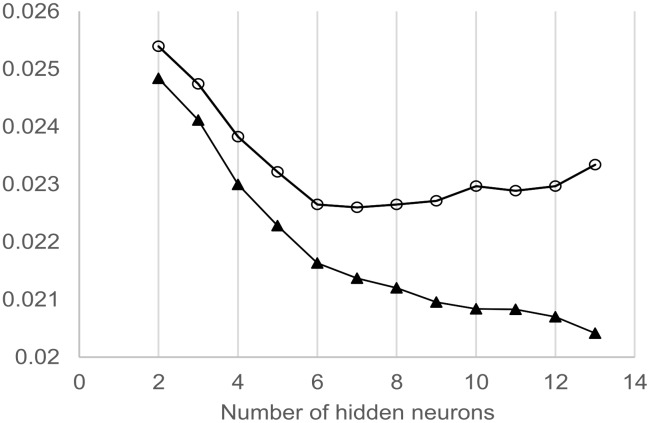

Figure 2. Determination of the best multilayer perceptron (MLP) architecture (i.e., the optimal number of hidden neurons).

The curves show the mean squared error as a function of the number of hidden neurons for the training phase (MSEt, black triangles) and the validation phase (MSEv, open circles) of the modelling process. MSEt and MSEv were averaged over the 1000 simulations carried out for each number of hidden neurons, with the following predictor variables: year, temperature, daylight length, temperature × daylight length, and body weight. As the number of neurons increased, training performance improved (decreasing MSEt), whilst validation performance first improved (decreasing MSEv) but then worsened (increasing MSEv) at 8 hidden neurons. The optimal architecture was therefore reached at 7 hidden neurons.
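The selection rule described in this legend can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the study: the function name `select_hidden_neurons`, the candidate range of 1 to 12 hidden neurons, and the synthetic MSE values are all assumptions made for the example. It averages the validation error over the simulations at each candidate size and keeps the size with the lowest mean MSEv.

```python
import numpy as np


def select_hidden_neurons(neuron_counts, mse_val_runs):
    """Return the neuron count with the lowest mean validation MSE.

    mse_val_runs has shape (n_levels, n_simulations): one row per
    candidate number of hidden neurons, one column per simulation.
    (Hypothetical helper, written for this illustration only.)
    """
    mean_mse_val = np.asarray(mse_val_runs, dtype=float).mean(axis=1)
    best_index = int(np.argmin(mean_mse_val))
    return int(neuron_counts[best_index]), mean_mse_val


# Synthetic placeholder data, NOT the study's results: a U-shaped
# validation trend plus simulation-to-simulation noise.
rng = np.random.default_rng(0)
neuron_counts = np.arange(1, 13)      # assumed candidate range
n_simulations = 1000                  # as in the legend
val_trend = 0.5 + 0.01 * (neuron_counts - 7) ** 2
noise = rng.normal(0.0, 0.02, size=(neuron_counts.size, n_simulations))
mse_val_runs = val_trend[:, None] + noise

best_n, mean_mse_val = select_hidden_neurons(neuron_counts, mse_val_runs)
print("Selected number of hidden neurons:", best_n)
```

Only MSEv enters the selection here, because MSEt keeps decreasing as neurons are added and so cannot identify the point at which validation performance starts to degrade.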