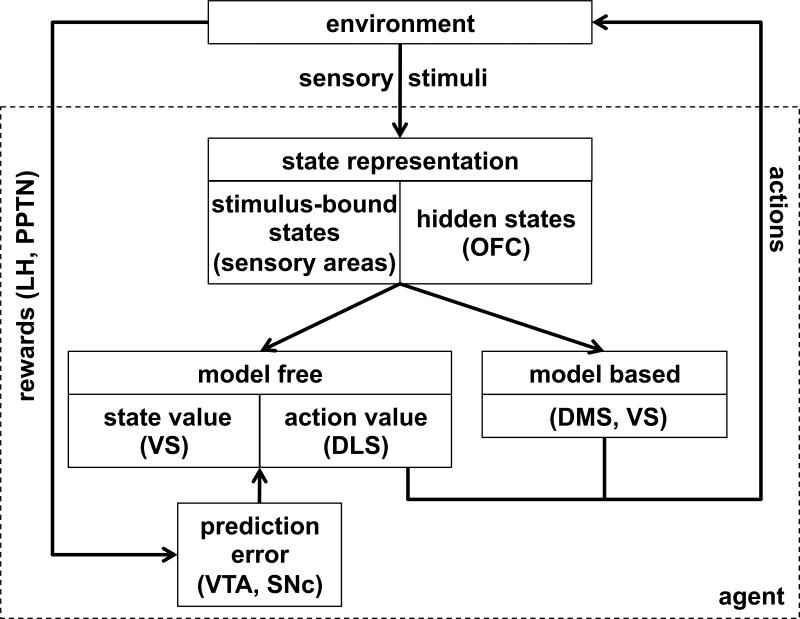

Figure 7.

Schematic of neural RL with a hypothesized mapping of functions to brain areas. The environment provides rewards and sensory stimuli to the brain. Rewards, represented in areas such as the lateral habenula (LH) and the pedunculopontine nucleus (PPTN), are used to compute prediction error signals in the ventral tegmental area (VTA) and substantia nigra pars compacta (SNc). Sensory stimuli are used to define the animal's state within the current task. The state representation might involve both a stimulus-bound (externally observable) component, which we propose is encoded in both orbitofrontal cortex (OFC) and sensory areas, and a hidden (unobservable) component, which we hypothesize is uniquely encoded in OFC. These state representations then serve as scaffolding for both model-free and model-based RL. Model-free learning of state values and action values occurs in ventral striatum (VS) and dorsolateral striatum (DLS), respectively, while model-based learning occurs in dorsomedial striatum (DMS) as well as VS.