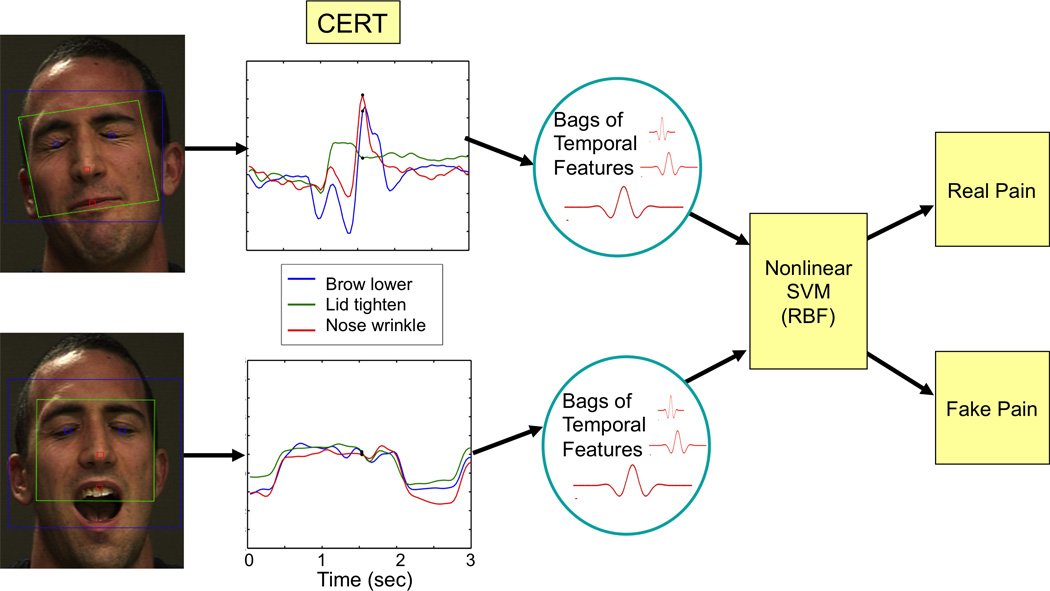

Figure 2. System Overview.

Face video is processed by the computer vision system CERT to measure the magnitude of 20 facial actions over time. The CERT output at the top is a sample of real pain, while the sample at the bottom shows the same three actions for faked pain from the same subject. Note that these facial actions are present in both real and faked pain, but their dynamics differ. Expression dynamics were measured with a bank of 8 temporal Gabor filters and expressed in terms of ‘bags of temporal features.’ These measures were passed to a machine learning system (a nonlinear support vector machine) to classify real versus faked pain. The classification parameters were learned from the 24 one-minute examples of real and faked pain.
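The temporal filtering step can be illustrated with a minimal sketch. It is not the authors' implementation: the filter frequencies in `FREQS_HZ`, the one-cycle bandwidth, the frame rate, and the histogram-based summary in `bag_of_temporal_features` are all assumptions chosen for illustration, and the function names are hypothetical. The sketch applies a bank of 8 one-dimensional Gabor filters to a single facial-action time series and summarizes each filter's energy with an order-free histogram.

```python
import math

# Hypothetical center frequencies (Hz) for a bank of 8 temporal filters.
FREQS_HZ = [0.25, 0.5, 0.75, 1.0, 1.5, 2.0, 3.0, 4.0]


def gabor_kernel(freq_hz, fps, cycles=1.0):
    """Return the even (cosine) and odd (sine) parts of a 1-D Gabor kernel.

    The Gaussian envelope's standard deviation spans `cycles` periods of
    the carrier, measured in frames (an assumed bandwidth choice).
    """
    sigma = cycles * fps / freq_hz
    half = int(math.ceil(3 * sigma))
    even, odd = [], []
    for t in range(-half, half + 1):
        envelope = math.exp(-(t * t) / (2.0 * sigma * sigma))
        phase = 2.0 * math.pi * freq_hz * t / fps
        even.append(envelope * math.cos(phase))
        odd.append(envelope * math.sin(phase))
    return even, odd


def convolve_same(signal, kernel):
    """Zero-padded convolution returning an output as long as `signal`."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + half - k
            if 0 <= j < n:
                acc += signal[j] * w
        out.append(acc)
    return out


def gabor_energy(signal, freq_hz, fps):
    """Magnitude of the complex Gabor response at each frame."""
    even, odd = gabor_kernel(freq_hz, fps)
    re = convolve_same(signal, even)
    im = convolve_same(signal, odd)
    return [math.hypot(a, b) for a, b in zip(re, im)]


def bag_of_temporal_features(signal, freqs, fps, n_bins=4):
    """Concatenate one normalized energy histogram per filter.

    The histogram discards temporal order, which is the sense in which
    this sketch treats the features as a 'bag' (an assumed design).
    """
    features = []
    for f in freqs:
        energy = gabor_energy(signal, f, fps)
        top = max(energy)
        counts = [0] * n_bins
        for v in energy:
            idx = min(int(v / top * n_bins), n_bins - 1) if top > 0 else 0
            counts[idx] += 1
        features.extend(c / len(energy) for c in counts)
    return features
```

Under these assumptions, each facial-action channel yields a fixed-length vector (8 filters times `n_bins` values). Vectors from all channels could be concatenated and passed to a classifier such as a nonlinear support vector machine.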