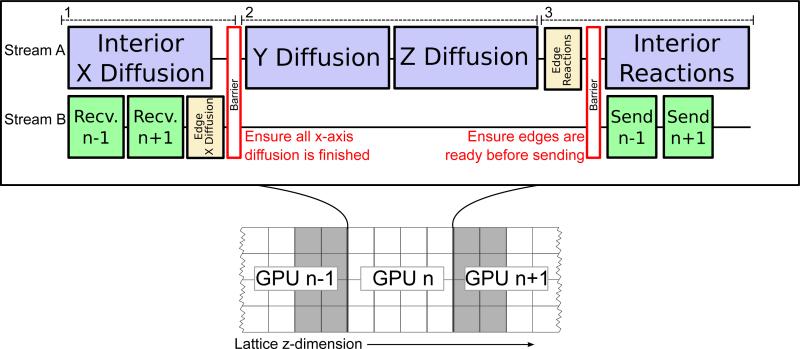

Figure 2.

Execution of a timestep on each GPU is a three-phase process. On GPU n, the first phase processes x-axis diffusion on the interior (white area) of its sublattice while receiving the updated lattice state along the sublattice boundaries (gray) from the neighboring GPUs (n − 1 and n + 1). These operations are executed asynchronously on two CUDA streams so that the peer-to-peer GPU memory copies overlap with the computational kernels. X-axis diffusion is run on the sublattice edges once the receive operations complete. A stream/event barrier (red) then synchronizes the two streams before continuing, ensuring that x-axis diffusion has finished across the entire sublattice and that all memory transfers have finished. The second phase evaluates the y- and z-axis diffusion on the entire sublattice as sequential operations; no communication with other GPUs is necessary. The third phase begins with the computation of reactions on the sublattice edges. The remaining sublattice interior is then evaluated for reactions while the updated states of the two sublattice edges are asynchronously sent to the neighboring GPUs.
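The ordering described in the caption can be illustrated with a small serial model. This is only a sketch under stated assumptions, not the implementation behind the figure: each "GPU" is a Python list of x-columns padded with one halo column per side, the lattice is 2D (so only y-axis diffusion appears in phase two), the boundaries are periodic, and the diffusion and reaction operators are deterministic stand-ins with hypothetical coefficients `D` and `K`. All function names are illustrative. The point is that updating the interior before the halos arrive, and the edges afterwards, gives the same result as a single-lattice update.

```python
# Serial model of the three-phase timestep. No real GPU, stream, or copy is
# involved; comments mark where the asynchronous operations would occur.

D, K = 0.1, 0.05  # hypothetical diffusion and decay coefficients


def diffuse_x(old, xs):
    """Return {x: new column} for the requested x indices of a padded lattice."""
    return {x: [old[x][y] + D * (old[x - 1][y] - 2 * old[x][y] + old[x + 1][y])
                for y in range(len(old[x]))] for x in xs}


def diffuse_y(col):
    """Periodic 1D stencil along y for a single column."""
    n = len(col)
    return [col[y] + D * (col[y - 1] - 2 * col[y] + col[(y + 1) % n])
            for y in range(n)]


def react(col):
    """Stand-in pointwise reaction: first-order decay."""
    return [v * (1 - K) for v in col]


def split(lattice, gpus):
    """Cut the lattice along x; owned columns are 1..w, halos are 0 and w + 1."""
    w = len(lattice) // gpus
    subs = [[None] + [c[:] for c in lattice[n * w:(n + 1) * w]] + [None]
            for n in range(gpus)]
    outbox = [(s[1], s[w]) for s in subs]  # edge columns "sent" to neighbors
    return subs, outbox


def timestep(subs, outbox):
    """Advance every sublattice one step; return the edges sent this step."""
    g, new_outbox = len(subs), []
    for n, sub in enumerate(subs):
        w = len(sub) - 2
        # Phase 1a: interior x-diffusion needs no halo data, so on a GPU it
        # could run while the halo copies are still in flight.
        new = diffuse_x(sub, range(2, w))
        # Phase 1b: halos have arrived; update the two edge columns.
        sub[0] = outbox[(n - 1) % g][1]
        sub[w + 1] = outbox[(n + 1) % g][0]
        new.update(diffuse_x(sub, (1, w)))
        # (stream/event barrier would go here)
        # Phase 2: y-axis diffusion on every owned column, no communication.
        for x in range(1, w + 1):
            new[x] = diffuse_y(new[x])
        # Phase 3: react on the edges first so they can be sent early...
        for x in (1, w):
            sub[x] = react(new[x])
        new_outbox.append((sub[1], sub[w]))
        # ...then react on the interior while the send would be in flight.
        for x in range(2, w):
            sub[x] = react(new[x])
    return new_outbox


def reference_step(lat):
    """Same operators applied to the undivided periodic lattice."""
    nx = len(lat)
    padded = [lat[-1]] + lat + [lat[0]]
    new = diffuse_x(padded, range(1, nx + 1))
    return [react(diffuse_y(new[x])) for x in range(1, nx + 1)]


def run(nx=12, ny=4, gpus=3, steps=5):
    """Return True if the decomposed and undivided updates agree exactly."""
    lattice = [[float((3 * x + 7 * y) % 11) for y in range(ny)]
               for x in range(nx)]
    subs, outbox = split(lattice, gpus)
    ref = lattice
    for _ in range(steps):
        outbox = timestep(subs, outbox)
        ref = reference_step(ref)
    merged = [col for s in subs for col in s[1:-1]]
    return merged == ref
```

Calling `run()` compares the stitched sublattices against the undivided lattice after several steps. The model assumes `nx` is divisible by `gpus` and that each sublattice owns at least two columns, so that the two edges are distinct.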