Abstract

This paper discusses the design, development, features, and clinical evaluation of a personal digital assistant (PDA)-based platform for cochlear implant research. This highly versatile and portable research platform allows researchers to design and perform complex experiments with cochlear implants manufactured by Cochlear Corporation with great ease and flexibility. The research platform includes a portable processor for implementing and evaluating novel speech processing algorithms, a stimulator unit which can be used for electrical stimulation and neurophysiologic studies with animals, and a recording unit for collecting electroencephalogram/evoked potentials from human subjects. The design of the platform for real-time and offline stimulation modes is discussed for electric-only and electric plus acoustic stimulation, followed by results from an acute study with implant users for speech intelligibility in quiet and noisy conditions. The results are comparable with those of the users’ clinical processors and are very promising for undertaking chronic studies.

Keywords: Cochlear implant (CI), electrical stimulation, speech processing

I. INTRODUCTION

Cochlear implants (CI) serve as a benchmark technology in neural prostheses for their high success rate in restoring hearing to the deaf and their growing and widespread use. According to the U.S. Food and Drug Administration (FDA), as of December 2010, approximately 219 000 people worldwide have received CI [1]. Comparison of these statistics to the year 2005, when there were about 110 000 implant recipients [2], and the year 1995, when there were only 12 000 implant recipients [3], indicates the growing demand for and satisfaction with implant performance. The cochlear implant system has been continuously improved, from front-end sound processing and fitting software to internal stimulator and electrode design [4], [5]. In particular, sound processor technology has played a significant role in the growth of CI uptake in the community.

The present work focuses on the development of a general purpose sound processing research platform which could be used to design new experiments and evaluate user performance over time. Implant manufacturers usually provide research speech processors for use with human subjects that allow researchers to develop and test new signal processing algorithms. However, most labs are unable to use them due to limited technical resources or due to the constrained framework of the interface provided by the manufacturer. These limitations concern flexibility, portability, wearability, ease of programmability, long-term evaluation, and features to design intricate experiments. One of the important factors hindering their use for speech processing research is that a skilled programmer is required to implement the algorithms in a high-level or low-level language [6]. A summary of features and limitations of the existing research interfaces is provided in Table I.

TABLE I.

Major Features and Limitations of Popular Research Interfaces for Cochlear Implants

| Research Interface | Implant type | Suitable for real-time¹ processing | Suitable for offline² streaming | Portable / wearable | Bilateral stimulation | Electric plus acoustic stimulation | Programmability in high-level language | Suitable for complex algorithms | Suitable for take-home trials |
|---|---|---|---|---|---|---|---|---|---|
| Nucleus Implant Communicator (NIC) [8], NIC version 2 [9] | Nucleus devices: CI22 / CI24 | ✓ | ✓ | ✓ | | | | | |
| Sound Processor for Electrical and Acoustic Research, revision 3 (SPEAR3) System [10]–[12] | Nucleus devices: CI22 / CI24 | ✓ | ✓ | ✓ | ✓ | † | ✓ | | |
| Clarion Research Interface (CRI) [13], CRI-2 [14] | Clarion implant by Advanced Bionics | ✓ | ✓ | ✓ | ✓ | | | | |
| Research Interface Box (RIB) [15] | MED-EL devices | ✓ | ✓ | ✓ | | | | | |

¹ Real-time: In real-time configuration, signal acquisition, sound processing, and stimulation are all done in real time, similar to a clinical processor.

² Offline: In offline configuration, the processor transfers preprocessed stimuli to the implant. Thus, stimuli creation and stimuli delivery are not necessarily done in real time. A computer is typically used to create the stimuli and then stream them to the implant at a later time.

To address these needs, we proposed a PDA-based research platform for cochlear implant research for both human and animal studies which overcomes these limitations and provides a flexible software-driven solution for both clinicians and researchers without requiring advanced programming skills or major hardware investment [7]. The proposed platform works only with Nucleus Freedom devices, although the approach can be applied to other devices. The main reason to choose Freedom devices was access to the communication protocols between the processor and the implant. However, the platform can easily be upgraded to support new devices.

The prominent features of the PDA-based platform are as follows.

It supports real-time (RT) and offline modes of operation.

It supports unilateral and bilateral CI.

It supports electric-only and electric plus acoustic (bimodal) stimulation.

Flexibility in programmability: It supports development in C, C++, C#, MATLAB, LabVIEW, and assembly language or a combination of the above.

Portability and wearability make it suitable for take-home field trials for realistic assessment of new algorithms after long-term use.

Flexible feature space to define stimulation parameters (rate, pulse width, interphase gap, stimulation modes etc.), shape, and timing of stimuli pulses of individual electrodes to deliver complex stimuli e.g., in psychophysics experiments,

Flexibility to allow quick development and evaluation of new research ideas (e.g., by using MATLAB in offline mode or LabVIEW in RT mode),

Touch-screen with stylus user interface allows user interaction and custom fitting of stimulation parameters on the go,

Centralized processor enables perfectly synchronous stimulation for both left and right implants. This makes it an ideal platform to investigate channel (left/right) interaction, spatial hearing, interaural time differences and squelch effects in binaural studies,

Platform independence: It may easily be interfaced with the new generation of smart phones that support the secure digital I/O (SDIO) interface,

Computational Power: The PDA runs at 625 MHz on an ARM processor and supports a multithreaded programming environment. This offers unparalleled computational power for RT processing of complex algorithms. The newer generations of dual-core/quad-core processor-based smart phones will significantly improve the processing capability,

The software framework provides a versatile set of example applications to address a multitude of research needs,

Portable bipolar stimulator can be used for animal studies,

Capability to record electroencephalogram (EEG) and cortical auditory evoked potentials (CAEPs), and

Scalable design: The software architecture is flexible and scalable to accommodate new features and research ideas.

These features have enabled the PDA platform to emerge as a successful tool in the research arena with many researchers utilizing it to implement new and existing algorithms [16]–[19] and establishing its use in clinical studies [20], [21]. In [7], our group demonstrated RT implementation of a 16-channel noise-band vocoder algorithm in C and LabVIEW. In the same paper, recording of EEG signals on the PDA acquired through a compact flash data acquisition card was also reported. Peddigari et al. presented RT implementation of a CI signal processing system on the PDA platform in LabVIEW using dynamic link libraries in [16]. A recursive RT-DFT-based advanced combinational encoder (ACE) implementation for high analysis rate was reported in [17]. Gopalakrishna et al. presented RT implementation of a wavelet-based ACE strategy in [18] and a novel wavelet packet-based speech coding strategy in [19] on the PDA platform. The offline capability of the PDA platform to process and stream stimuli from a PC within the MATLAB environment was presented in [22]. In the same paper, the ability to perform psychophysics experiments with the platform was also shown. The use of the platform for electric plus acoustic stimulation, and evaluation with bimodal listeners was presented in [23]. The platform was used in clinical evaluation of a novel blind time-frequency masking technique for suppressing reverberation with human subjects in [20] and [21]. The successful implementation of algorithms of varying complexity, together with its versatile features, demonstrates the potential of the PDA-based research platform for undertaking a wide variety of experiments for various research needs. The platform is currently undergoing an FDA trial.

This paper is organized as follows. Section II presents the design of the platform from the hardware perspective. Software architecture of the platform for real time, offline, and electric plus acoustic stimulation (EAS) modes is discussed in Section III. Clinical evaluation of the platform with unilateral, bilateral and bimodal CI subjects, and statistical analysis of the results are presented in Section IV. Finally, the conclusions are given in Section V.

II. HARDWARE OVERVIEW

Current CI users either carry a body-worn speech processor or use a behind-the-ear (BTE) processor. In the case of a BTE processor, the microphone, processor, and battery are contained within the BTE package, and the transmitting coil is connected to the BTE via a custom cable. For a body-worn processor, the microphone headset and transmitting coil are connected via a custom cable to the processor. Sound is picked up by the microphone and sent to the processor, which processes the signal in a way that mimics the auditory signal processing performed by the inner ear. The processor sends coded and radio frequency (RF)-modulated electrical stimulation information (e.g., stimulation parameters, current amplitude levels, etc.) for transmission via a headpiece coil [24]. The headpiece coil transmits the RF transcutaneously through the skin to the implanted RF receiver, which in turn decodes the information and sends electrical stimulation to the electrode array implanted in the inner ear.

The main difference between what is currently available in the market and the speech processor presently described is the replacement of the body-worn or BTE processor with a PDA and an interface board. The FDA-approved headpiece coil and the internal implant are the same in both cases.

The research platform as a whole comprises

a portable processor in the form of a smart-phone or a PDA for implementing and evaluating novel speech processing algorithms [7];

an interface board to connect the PDA with the Freedom [25] headpiece coil through the SDIO port of the PDA [26], [27];

a bench-top and a portable stimulator (monopolar and bipolar) designed for electrical stimulation and neurophysiologic studies with animals [28]; and

a recording unit for collecting evoked potentials from the human subjects [7].

The following sections provide details on each of these hardware components and their usage.

A. PDA

The PDA is used as a portable processor for implementing signal processing algorithms. There are a number of reasons for choosing the PDA as the computing platform. First, it is lightweight (164 g), small in size (11.9 × 7.6 × 1.6 cm) and therefore portable. Second, it uses a powerful processor (Intel's XScale PXA27x). The majority of the PDAs run at high clock speeds and the ARM-based processors allow very efficient programming. Third, the software running on the PDA operates under the Windows Mobile environment, which allows researchers to program novel sound processing strategies in assembly language and/or high-level languages such as C, C++, and C#, or a combination of these. LabVIEW also provides a toolbox to program using its conventional graphical environment of virtual instruments (VI). We have developed libraries which allow the PDA platform to be interfaced with MATLAB for offline stimulation tasks [22]. This offers immense flexibility and ease in implementing and testing new algorithms for CI over a relatively short period of time, as opposed to implementation in assembly language. Fourth, the PDA platform is easily “adaptable” to new and emerging technologies as they become available. That is, the platform is easily portable to newer generations of PDAs and smart phones as more powerful and more energy efficient chips become available in the market.

The PDA used in the current study is the HP iPAQ, model hx2790b, which houses the XScale processor based on the ARM9 processor core and supports the ARMv4 Thumb instruction set architecture. The PDA has 64 MB of SDRAM and 256 MB of persistent storage memory (to store files, etc.).

B. SDIO Interface Board

The SDIO board is a custom developed board used to interface the PDA with the Cochlear Corporation's CI. The board plugs into the SDIO port of the PDA and enables the PDA to stimulate the Cochlear Corporation's CI24 or CI22 implants. Very briefly, the PDA sends stimulus amplitude/electrode packets to the SDIO card using the SDIO 4-bit communication protocol. The amplitudes are converted by the FPGA to the embedded protocol [29] for the CI24 implant or the expanded protocol [30] for the CI22 implant. The encoded stimulus is finally sent to stimulate the implant via the Freedom coil. Fig. 1 shows the functional diagram of the SDIO board. It has two Cochlear headset sockets which connect with the right and left ear BTEs via custom cables, thus allowing bilateral stimulation. To ensure that patients will not plug a commercially available cable into the SDIO board, we use a different size socket that does not mate with the cable used in the commercial body-worn processors.

Fig. 1.

Functional diagram of the SDIO board.

The board is equipped with a Xilinx Spartan 3 FPGA, an Arasan SDIO interface controller, a configuration PROM, and power management circuitry to implement the communication interface between the PDA and the implant. The board is powered using a 5-V battery source. An overview of the primary board components is given as follows.

The Arasan ASIC AC2200ie is an SDIO card controller and implements the SDIO standard 1.2 and SD physical layer specification 1.10. It communicates with the SDIO host controller on the PXA270 processor in the PDA via a command response interface.

The 24LC08B EEPROM stores the initialization parameters for the ASIC to start up in “CPU-like interface” mode. The EEPROM communicates with the ASIC via an I2C bus.

The Xilinx FPGA (XC3S1500) receives the amplitude packets from the Arasan ASIC and encodes them to the embedded [29] or expanded protocol [30] depending on the device type. The encoded bit stream is sent to the Freedom coil using a 5 MHz data signaling clock. The FPGA is clocked by a 50 MHz crystal for communication with the implant and a 48 MHz crystal for communication with the ASIC. The FPGA logic implements a receive and transmit state machine and can support the 0.94 Mbps data link to the Freedom coil in the low rate stimulation mode using 5 cycles per cell. The peak stimulation rate is 15 151 pulses/s. Using 4 cycles per cell, the peak stimulation rate can be increased to 19 608 pulses/s. The SDIO board can be used for bilateral or unilateral cochlear implant studies.

The Xilinx XCF08P is an FPGA configuration PROM, which stores the synthesized logic from which the FPGA boots up during power on.

A preamplifier from Linear Technology (LTC6912) provides two independent inverting amplifiers with programmable gain. The signal in each channel can be amplified with one of 8 gain settings: 0, 1, 2, 5, 10, 20, 50, and 100, corresponding to –120, 0, 6, 14, 20, 26, 34, and 40 dB, respectively. The LTC6912 is programmed by the FPGA using the serial peripheral interface (SPI) control bus.
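The correspondence between the linear gain settings and the decibel values quoted above follows from the usual voltage-gain relation, 20·log10(gain). The short sketch below reproduces the listed values; the function and constant names are illustrative only, and the –120 dB entry for the zero-gain setting is taken from the text rather than computed, since a gain of zero has no finite decibel value.

```python
import math

# Gain settings listed in the text for the LTC6912 preamplifier.
GAIN_SETTINGS = [0, 1, 2, 5, 10, 20, 50, 100]

# Value quoted in the text for the zero-gain setting (not computable
# from 20*log10, so it is used as given).
MUTE_DB = -120.0


def gain_to_db(gain):
    """Convert a linear voltage gain magnitude to decibels."""
    if gain == 0:
        return MUTE_DB
    return 20.0 * math.log10(gain)


def gain_table_db():
    """Decibel value for every gain setting, rounded to the nearest dB."""
    return [round(gain_to_db(g)) for g in GAIN_SETTINGS]
```

Rounding to the nearest decibel matches the precision used in the text (for example, a gain of 5 is about 13.98 dB and is listed as 14 dB).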

The output of the preamplifier is filtered by the LT1568 antialiasing filter IC configured as a dual second-order Bessel filter with a cutoff frequency of 11 025 Hz.

The LTC1407 is a stereo A/D converter (ADC) which samples the microphone outputs from the bilateral BTEs connected to their respective Freedom coils. The ADC has 1.5 MSps throughput per channel, operates at a sampling rate of 22 050 Hz, and presents a 14-bit two's complement digital output (interleaved left and right channels) to the FPGA. The samples are received by the FPGA over the SPI data bus.
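As an illustration of what a 14-bit two's complement, channel-interleaved stream implies for downstream software, the following sketch sign-extends each sample and separates the two channels. It rests on two assumptions that are not stated in the text: that each sample arrives in the low 14 bits of an integer word, and that the left channel comes first in each pair. The function names are hypothetical.

```python
def sign_extend_14(word):
    """Interpret the low 14 bits of `word` as a two's complement integer."""
    value = word & 0x3FFF      # keep only the 14 data bits
    if value & 0x2000:         # bit 13 is the sign bit
        value -= 0x4000        # map 0x2000..0x3FFF to -8192..-1
    return value


def deinterleave(words):
    """Split an interleaved L, R, L, R, ... stream into two channels.

    Assumption: the left sample precedes the right sample in each pair.
    """
    left = [sign_extend_14(w) for w in words[0::2]]
    right = [sign_extend_14(w) for w in words[1::2]]
    return left, right
```

With 14 bits, the representable range is –8192 to 8191, so `sign_extend_14(0x3FFF)` yields –1 and `sign_extend_14(0x1FFF)` yields 8191.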

A Texas Instruments triple-supply power management IC (TPS75003) supplies power to the FPGA and configuration PROM. The TPS75003 takes a 5 V input from the external battery pack and generates 1.2 V for VCCINT (core voltage), 3.3 V for VCCO (I/O voltage), and 2.5 V for VCCAUX (JTAG, Digital Clock Manager and other circuitry). The TPS75003 is a switching regulator (pulse width modulation control) type and is “on” only when the power is needed. An Intersil ISL8014 regulator supplies 1.8 V for the FPGA configuration PROM core supply.

The SDIO board also has a Lemo mini-coax connector providing access to a trigger out signal. A 5 V trigger signal can be generated from the FPGA through a Texas Instruments SN74LVC1T45 voltage translator. This trigger signal can be used for synchronization purposes in external evoked potentials recording systems.

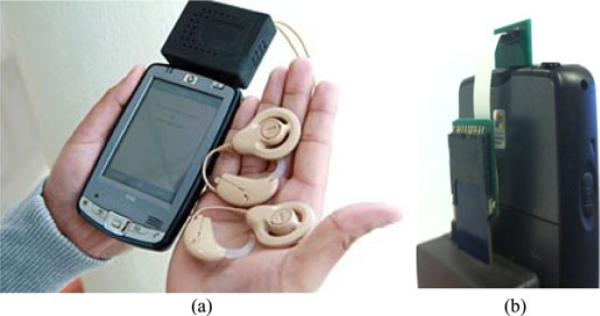

The SDIO board is housed in an enclosure as shown in Fig. 2(a) for safety purposes. An SDIO extender cable as shown in Fig. 2(b) may also be used for ease of use or for accessing other PDA ports, e.g., the headphone port used for acoustic stimulation.

Fig. 2.

(a) PDA-based processor with PDA, BTE, RF coil, and SDIO interface board (plugged in the SDIO port) and (b) SDIO board connected via an extender flat flex cable.

C. BTE and Coil

Freedom coils with modified (flex circuit) HS8 BTEs from Cochlear Corporation [14] are used for this study. Each BTE houses a directional microphone.

D. Stimulator Unit

The bipolar stimulator or BiSTM is a multichannel bipolar current source designed for acute experiments on percutaneous, animal cochlear implant systems. The BiSTM offers researchers the ability to study the effects of channel interactions with speech stimuli, particularly, as a function of electrode array configuration. While the BiSTM is intended to be used primarily for bipolar, BP+1, eight-channel stimulation, it is also capable of generating up to 16 independent, time-interleaved monopolar signals. Therefore, the effects of time-interleaved monopolar stimulation with speech stimuli can also be investigated with this device.

The BiSTM can generate a wide array of excitation patterns including both pulsatile and analog-like, or combinations of both. At the core of the board is the 9-bit configurable current source chip, simply referred to as the BiSTM chip designed in our lab [28], [31]. The BiSTM chip is designed to provide programmable anodic and cathodic current pulses for stimulation.

The BiSTM chip possesses the following specifications.

8 independently controlled bipolar channels or up to 16 independently controlled time-interleaved monopolar channels, each electrically isolated and charge balanced.

5 V compliance voltage.

1 mA maximum current amplitude per channel.

9-bit current amplitude resolution per channel (1.95 μA).

4 μs minimum pulse width per channel (1 s maximum pulse width).

0 μs minimum interphase gap per channel (maximum allowed interphase gap depends on maximum pulse width).

4 μs minimum interstimulus interval per channel (maximum depends on desired pulse rate).

83.3 kHz maximum pulse rate per channel.

>50 MΩ output resistance per channel.
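Two of the figures in this list can be cross-checked from the others. The sketch below assumes that the 9-bit resolution spans the full 1 mA range in 2^9 equal steps, and that the shortest stimulation period consists of a biphasic pulse (two phases at the minimum pulse width) plus the minimum interphase gap and the minimum interstimulus interval. This is one reading that is consistent with the listed numbers, not a description of the chip's internal design; all names are illustrative.

```python
FULL_SCALE_UA = 1000.0         # 1 mA maximum current amplitude per channel
RESOLUTION_BITS = 9            # current amplitude resolution
MIN_PULSE_WIDTH_US = 4.0       # minimum pulse width (per phase, assumed)
MIN_INTERPHASE_GAP_US = 0.0    # minimum interphase gap
MIN_INTERSTIMULUS_US = 4.0     # minimum interstimulus interval


def current_step_ua():
    """Smallest current increment, assuming 2**bits equal steps."""
    return FULL_SCALE_UA / (2 ** RESOLUTION_BITS)


def max_pulse_rate_khz(phases=2):
    """Highest pulse rate for back-to-back minimum-duration pulses."""
    period_us = (phases * MIN_PULSE_WIDTH_US
                 + MIN_INTERPHASE_GAP_US
                 + MIN_INTERSTIMULUS_US)
    return 1000.0 / period_us  # a period in microseconds gives kHz
```

Under these assumptions the step size is 1000/512 ≈ 1.95 μA and the shortest period is 12 μs, which corresponds to the 83.3 kHz maximum pulse rate listed above.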

With these features, a wide array of stimulation techniques for CI can be tested on animals. By varying parameters such as current amplitude, pulse width, interphase gap, interstimulus interval, and pulse rate, a multitude of stimulation patterns can be created, either in phase (simultaneous) or interleaved across multiple channels.

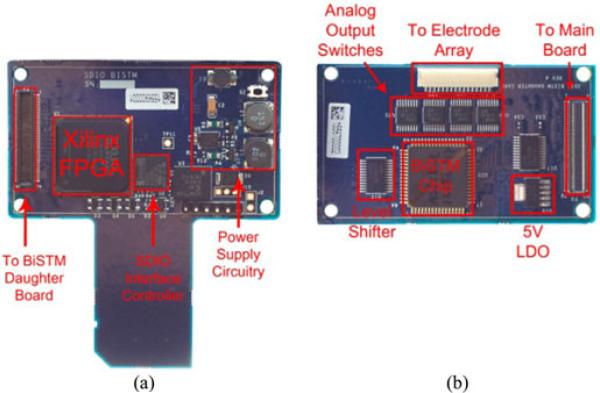

From the hardware perspective, the portable BiSTM board is divided into two separate boards, a main board and a daughter board (see Fig. 3) in order to minimize the overall size of the package. A single board would have been many times larger and hence impractical. The two boards overlaid in a sandwich-like (stacked) configuration helped to reduce the form factor and make it suitable for chronic animal studies. The main board houses a Xilinx Spartan 3 FPGA which controls the BiSTM chip, Arasan SDIO interface, power circuitry, and an 80-pin board-to-board connector to route control, power, and data signals to the BiSTM chip located on the daughter board. The daughter board houses the BiSTM chip, analog output switches, a 5-V regulator, and voltage level shifters. The overall dimensions of the PDA with the portable stimulator unit are 15.2 cm × 7.6 cm × 1.6 cm with an overall weight of 300 g. For more details on the stimulator design, please refer to [28] and [31].

Fig. 3.

(a) Portable SDIO-BiSTM main board and (b) SDIO BiSTM daughter board.

E. Recording Unit

The PDA platform has the capability to record EEG and CAEPs via data acquisition cards. Off-the-shelf data acquisition cards (such as DATAQ-CF2 and CF-6004) plug into the compact flash slot of the PDA and can be programmed in C or in LabVIEW. A major limitation of the commercial data acquisition cards is the limited number of recording channels. In order to compensate for this, the SDIO board was equipped with a Lemo mini-coax connector which provides access to an output trigger signal. This trigger signal can be used for synchronization purposes in external recording systems of evoked potentials. The trigger output signal is not connected directly to the patient; hence, it poses no safety concerns. Rather, it is used as an input to external neural-recording systems (e.g., Neuroscan, Compumedics Ltd.), which have been approved by FDA for use with human subjects. For more details on the design and recordings, please refer to [26], [32] and [33].

III. SOFTWARE OVERVIEW

The PDA-based speech processor has two modes of operation:

the RT speech processor, which allows both electric-only and EAS in real time, and

the offline speech processor, which transfers preprocessed stimuli to the implant in offline mode through MATLAB running on a PC. In addition to the EAS capability, offline mode also supports psychophysics.

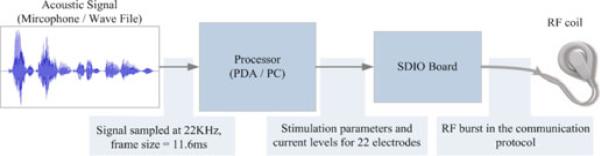

A simplified generic signal flow for both modes is depicted in Fig. 4. For the RT mode, the acoustic signal is acquired from the microphone and processed within the PDA. Alternatively, for offline mode, an audio file is read and processed by the software running on the PC. The processing results in a set of n current levels for n active electrodes. The maximum value of n is 22, which corresponds to the total number of electrodes available in the Cochlear Corporation's implants. The set of current level and electrode number pairs is sent to the interface board via the SD slot. The FPGA on the interface board receives the stimulus data and prepares it for transmission using an RF data communication protocol. The transmission is done via the cable and is a stream of 5 MHz RF bursts containing information about the current levels, active electrodes, and mode of stimulation (bipolar versus monopolar). The timing information of the RF bursts is used by the implanted RF decoder for constructing biphasic pulses.

Fig. 4.

Generic signal flow of sound processing using PDA-based processor in real-time and offline modes.

The following sections describe both RT and offline software modes in greater detail.

A. RT Speech Processor

The RT speech processor mimics a clinical processor such that all speech processing and stimulation is done in real time. Fig. 5 provides a general overview of the signal flow involved in the PDA-based speech processor in the RT mode. The acoustic signal is picked up by the microphone located in the BTE and sent to the FPGA interface board via the headset cable. The interface board samples the signal binaurally at a rate of 22 050 Hz/channel and sends the sampled (digital) signal to the PDA via the SD slot. The PDA processes the digital signal via a speech coding algorithm (e.g., continuous interleaved sampling (CIS) [34], ACE, etc.) which produces stimulus data comprising stimulation parameters and pairs of current levels of active electrodes. The stimulus data are sent to the SDIO board which transmits it to the implant using RF protocols specific to the implant.

Fig. 5.

Signal flow in the PDA-based speech processor in real-time mode. The acoustic signal is picked up by the microphone (A), sent (via the headset cable) to the SDIO interface board (D), which is then sent to the PDA (B). The PDA processes the signal and generates a set (one for each channel of stimulation) of amplitude electrode pairs (C). The stimulus data are sent to the SDIO interface board (D), which is then coded for transmission to the cochlear implant as RF bursts (E). For bimodal (EAS) stimulation, the acoustic signal is processed through an audio processing routine simultaneously with electric processing. The processed audio buffer is sent to the transducer (F) which presents the acoustic signal to the ear via the insert ear-tips. Both electric and acoustic stimuli are synchronized in time and provided to the user without any delay. A sample interactive user interface is shown on the PDA screen in the figure above.

In a nutshell, the PDA functions as a body-worn processor with additional features such as programming flexibility and a touch-screen user interface for custom fitting of stimulation parameters on the go. The following section describes the software architecture for the RT mode.

1) Software Architecture

The software running on the PDA performs the signal processing (i.e., implements the sound coding strategy), while the FPGA logic implements the communication protocols needed for proper communication with the implant receiver/stimulator. Fig. 6 shows the organization of the software running on the PDA and FPGA logic. There are two inputs to the PDA software: 1) acoustic signals from the microphones and 2) the patient's MAP. The patient's MAP file contains the most comfortable loudness (MCL) and threshold (THR) levels in addition to parameters such as stimulation mode (BP/MP/CG), electrode array type, unilateral/bilateral stimulation, etc., in an ASCII text file in a custom format stored locally on the PDA. Routine Read_Patient_MAP_File reads the contents of the MAP file and returns a MAP structure containing stimulation parameters specific to the patient. Some of these parameters are shown in Table II.

Fig. 6.

Software organization on the PDA and FPGA logic.

TABLE II.

Patient MAP Parameters

| Parameter | Option |
|---|---|
| Implant/Electrode type | CI24RE(CS/CA) / CI24M / CI24R (CS/CA) / CI22M / ST |
| Left/Right Ear Implant Strategy | ACE/CIS, etc. |
| Number of Implants | Unilateral / Bilateral |
| Electrode Configuration¹ | MP1 / MP2 / MP1+2 |
| Number of Active electrodes or Nmaxima | (1 to 22) depending upon strategy |
| Left/Right Stimulation Rate | 250 Hz – 3500 Hz |
| Left/Right Pulse Width | 9.6 μs – 400 μs depending upon rate |
| Left/Right THRs | 0 – 255 clinical units |
| Left/Right MCLs | 0 – 255 clinical units |
| Left/Right Band gains | band gains in dB |
| Compression function Q value | Q value of LGF function – typically 20 |

¹ Electrode configuration: monopolar modes MP1, MP2, MP1+2 [14]. For CI22M: common ground mode CG(1), bipolar modes BP(2), BP+1(3), BP+2(4).

The Get_Input_Data block (see Fig. 6) captures and buffers the acoustic signal from the microphones at 22 050 Hz sampling rate in frames of 11.6 ms binaurally. The Process_Data block takes as input the acquired signal buffer and MAP parameters and returns the set of amplitude–electrode pairs. The amplitudes and corresponding electrodes can be obtained by any sound coding algorithm, e.g., by band-pass filtering the signal into a finite number of bands (e.g., 12, 22) and detecting the envelope in each band, or by computing the FFT spectrum of the signal and estimating the power in each band. Electrical amplitudes are passed through the Error_Checking block (described later), and finally, the Send_Data block transmits the data (current levels, electrode numbers, and stimulation parameters) to the SDIO board. The logic running on the FPGA encodes the received data for RF transmission using the expanded protocol by Crosby et al. for the CI22 system [30] or the embedded protocol by Daly and McDermott for the CI24 system [29]. For safety purposes, the source code of error checking routines and that of the logic running on the FPGA are not distributed to the researchers.
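To make the FFT-based option for the Process_Data step more concrete, the sketch below estimates the power in each band of one frame and then picks the bands with the largest power, which is how n-of-m strategies such as ACE choose the electrodes to stimulate in a frame. It is a minimal illustration, not the platform's code: the 256-sample frame length is an assumption (256 samples at 22 050 Hz last about 11.6 ms), the band edges passed in by the caller are hypothetical, and a naive DFT is used in place of an optimized FFT to keep the example self-contained.

```python
import cmath
import math

FS_HZ = 22050      # sampling rate stated in the text
FRAME_LEN = 256    # assumed frame length; 256 / 22050 s is about 11.6 ms


def band_powers(frame, band_edges_hz):
    """Sum the DFT power of `frame` inside each [low, high) band."""
    n = len(frame)
    # Power spectrum for bins 0 .. n/2 (naive DFT, for illustration only).
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        spectrum.append(abs(acc) ** 2)
    bin_hz = FS_HZ / n
    powers = []
    for low, high in zip(band_edges_hz[:-1], band_edges_hz[1:]):
        powers.append(sum(p for k, p in enumerate(spectrum)
                          if low <= k * bin_hz < high))
    return powers


def select_maxima(powers, n_maxima):
    """Indices of the n bands with the largest power, in band order."""
    ranked = sorted(range(len(powers)), key=lambda i: powers[i], reverse=True)
    return sorted(ranked[:n_maxima])
```

In a full strategy, the selected band powers would still have to be compressed and mapped between the T and M levels of each electrode before being passed to the error checking stage; those steps are omitted here.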

The researchers have the freedom to implement custom sound processing strategies. In order to demonstrate this concept, we implemented the ACE strategy as reported by Vandali et al. in [35] as an example in this paper. Programming was done using a combination of C, C++, and assembly language. Intel Integrated Performance Primitive (IPP) [36] APIs and various signal processing optimizations were used wherever possible to keep the computation cost low.

2) Error Checking

A number of mechanisms have been set in place to ensure the safety of the patients. Foremost among these mechanisms is to keep the logic running on the SDIO interface board unreadable and unmodifiable. This is done to ensure that the CI users will not be stimulated by, for instance, charge-unbalanced pulses due to a Hardware Description Language programming error, which could be harmful to them. Second, software safety checks are set in place on the PDA side for checking 1) the range of amplitude levels and 2) the range of stimulation parameters (e.g., pulse width) to ensure that they fall within the permissible and safe range. All safety checking routines are embedded as object files, i.e., their source code is not modifiable by the user. Only stimulation parameters that are approved by the FDA and currently in use by patients are allowed.

The error checking software routine as shown in Fig. 6 is the gateway routine to the electrical stimulation. This routine takes as input the stimulation parameters from the MAP along with the amplitude levels. There is a limit on the range of the parameters set by the manufacturer for safe operations. For instance, the biphasic pulse width cannot exceed 400 μs/phase (in general, the maximum allowable pulse width depends on the stimulation rate). This 400 μs/phase upper limit is based on evidence from physiological studies published by McCreery et al. [37], [38] and Shannon [39]. The stimulation parameters are checked in each frame to ensure that they fall within the acceptable and safe limits. The permissible range of stimulation parameters was taken from Cochlear Corporation's documents. Validation tests were conducted to verify this.

a) Checking for the valid range of current levels

The current level of each electrode needs to be limited within the range of threshold (THR or T) to the most comfortable loudness (MCL or M) levels (the T and M levels are expressed in clinical units and can range from 0 to 255). Most importantly, the current level of each active electrode is checked to ensure that it is smaller than the specified M level of that electrode. If any of the amplitudes fall outside this range, the program saturates the amplitude to the corresponding M level. This is done to avoid overstimulation. The M levels can be obtained using the clinical fitting software, e.g., Custom Sound (v.2) for Cochlear devices, and are subsequently entered into the patient's MAP file. The verification of stimulus amplitudes in software is performed as an additional measure to ensure patient safety.
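The upper-bound check described above can be sketched as follows. The platform's own routine is distributed only as an object file, so this is an illustration of the idea rather than the actual implementation, and the function name is hypothetical. Only saturation at the M level is shown; how levels below the T level are treated is not modeled here.

```python
CLINICAL_UNIT_MIN = 0
CLINICAL_UNIT_MAX = 255


def limit_current_levels(levels, m_levels):
    """Saturate each current level at the M level of its electrode.

    `levels` and `m_levels` are parallel lists in clinical units,
    one entry per active electrode.
    """
    if len(levels) != len(m_levels):
        raise ValueError("one M level is required per active electrode")
    limited = []
    for level, m_level in zip(levels, m_levels):
        if not CLINICAL_UNIT_MIN <= m_level <= CLINICAL_UNIT_MAX:
            raise ValueError("M level outside 0..255 clinical units")
        # Never exceed the M level, and never go below zero.
        limited.append(max(CLINICAL_UNIT_MIN, min(level, m_level)))
    return limited
```

For example, with M levels of 150, 190, and 180, the requested levels 100, 200, and 180 become 100, 190, and 180: only the second value exceeded its M level and was saturated.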

b) Checking for the valid range of stimulation parameters

The relationship between the various permissible stimulation parameters is complex and depends, among other things, on 1) the generation of the Nucleus device (e.g., CI22, CI24); 2) the electrode array; and 3) the stimulation strategy. For instance, the allowable pulse width depends on both the stimulation strategy and the generation of the Nucleus device. These relationships and dependences among the stimulation parameters were taken into account when writing and testing the error checking routines. Provisions were made for the aforementioned dependences and more specifically for:

valid stimulation modes;

pulse-rate and pulse-width dependence; and

charge density limitations for different electrode arrays.

In addition to this, rate-centric and pulse-width-centric parameter checking routines were also hardcoded. In a rate-centric routine, for example, if a user specifies a stimulation rate and pulse width combination that is not realizable, the pulse width will be adjusted to fit the pulse rate. The user is informed (via a warning message box on the PDA screen) of any modification of stimulation parameters by these routines. Exhaustive validation tests were conducted to verify that the stimulation parameters and stimulus pulses are always within safe limits.
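A rate-centric adjustment might look like the sketch below. It assumes a simple timing model in which pulses on the selected channels are interleaved, so each biphasic pulse must fit in a slot of 1/(rate × number of maxima). The phase gap and overhead values are placeholders rather than device constants, and the function name is hypothetical; only the 400 μs/phase ceiling comes from the text.

```python
MAX_PULSE_WIDTH_US = 400.0   # upper limit per phase quoted in the text


def rate_centric_pulse_width(rate_pps, n_maxima, pulse_width_us,
                             phase_gap_us=8.0, overhead_us=12.0):
    """Return (pulse_width_us, adjusted) for a fixed per-channel stimulation rate.

    Each biphasic pulse gets a slot of 1e6 / (rate_pps * n_maxima) microseconds.
    phase_gap_us and overhead_us are placeholder values, not device constants.
    """
    slot_us = 1e6 / (rate_pps * n_maxima)
    widest_us = (slot_us - phase_gap_us - overhead_us) / 2.0  # two phases per pulse
    if widest_us <= 0:
        raise ValueError("rate is not realizable with this number of maxima")
    limit_us = min(widest_us, MAX_PULSE_WIDTH_US)
    if pulse_width_us > limit_us:
        return limit_us, True    # the caller should warn the user
    return pulse_width_us, False


ok_width, ok_adjusted = rate_centric_pulse_width(900, 8, 25.0)
new_width, new_adjusted = rate_centric_pulse_width(1800, 8, 25.0)
print(ok_width, ok_adjusted, round(new_width, 2), new_adjusted)
```

A pulse-width-centric routine would do the reverse: hold the pulse width fixed and lower the rate until the pulse fits its slot.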

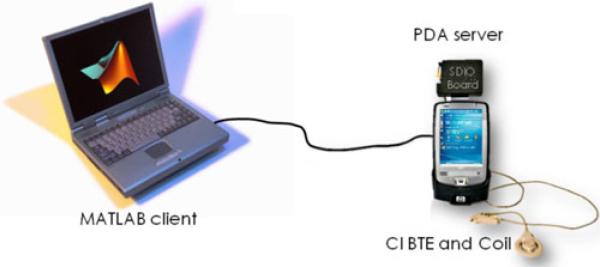

B. Offline Speech Processor

The offline version of the PDA processor is based on a PC running MATLAB, where all processing takes place while the PDA acts as an interface to the implant. The software architecture is designed such that the PDA acts as a server which accepts the incoming connections and the PC acts as a client with MATLAB as a front-end as shown in Fig. 7. Therefore, the overall design can be broken down into three main software components.

Server running on the PDA.

MATLAB client (.mexw32 or .mexw64 dll) called from the MATLAB front-end.

MATLAB front-end running on the PC.

Fig. 7.

High-level diagram for offline-mode setup.

The server client interface is based on Windows Sockets (Winsock) API which is a technical specification that defines how Windows network software should access network services, especially TCP/IP [40]. Fig. 8 shows the transfer of parameters and amplitudes from MATLAB to the PDA and the status returned from the PDA to MATLAB.

Fig. 8.

Device connectivity and data exchange in an offline mode.

1) MATLAB Front-end

The MATLAB front-end, as the name suggests, is the application layer of the system around which most researchers work. It can be a simple command script to create synthetic stimuli or an elaborate GUI or application which implements speech coding algorithms. In either case, the stimuli are streamed to the PDA by calling the client dynamic link library (dll), which is responsible for invoking the client-server communication protocol. A variety of applications suitable for different experiments can be created at the front-end using the same client dll.

The MATLAB-to-PDA interface is designed with the goal of being simple, user friendly, and flexible so that it provides researchers with enough feature space to design experiments they would not otherwise be able to do with conventional researcher interfaces. This is achieved by limiting all the overhead code and communication protocols to the PDA component (server), hence allowing the researchers to focus more on experiments than on coding. The MATLAB front-end goes through the following program cycle.

1) Load Patient MAP

First, the patient MAP is loaded or created either from an existing MAP or an application to load stimulation parameters specific to the patient. The file format remains the same as the one used in the RT version, with some parameters shown in Table II.

2) Check Stimulation Parameters

Parameters loaded from the MAP file are checked to verify that they are within the implant limits for safe operation. These safety checking routines are similar to the ones used in the RT version and are embedded as MATLAB pcodes in the offline version for additional safety.

3) Create Stimulation Data

Stimulation data essentially comprise stimulation parameters, stimulation sequence of the active electrodes, and their respective current levels. Stimulation data may be loaded from a preprocessed file or they may be created by implementing any speech coding strategy. Alternatively, a waveform of synthetic stimuli may be created for psychophysical experiments.

4) Error Checking

Before streaming stimuli to the implant, a final check on the stimulus current levels is performed to ensure that the stimulation levels fall within the safe ranges specified by the patient's MAP. This is done by comparing the amplitude (current) levels of each band with the MCL value of the corresponding electrode.

5) Call to Client

Finally, the client dll is invoked which transfers stimulation parameters and data to the implant via the PDA. The client dll initializes Winsock, creates a socket, connects to the server, and transmits stimulation data created in MATLAB to the PDA. It does this in two steps to match the receive function on the server: first the number of 11 ms frames, nframes, and the number of pulses per frame are transmitted. Second, nframes frames are sent continuously to the PDA. The dll is compiled from the C source using the MATLAB MEX compiler.
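The two-step transfer can be illustrated with a small serialization sketch. The actual client is a C MEX dll built on Winsock; the Python below only mirrors the message order. The wire format (two little-endian 32-bit header fields, then one byte per electrode and per current level) and the function name are assumptions made for illustration.

```python
import struct


def pack_stimulus(frames):
    """Serialize frames for a two-step transfer: header first, then frame data.

    frames is a list of frames; each frame is a list of (electrode, level)
    pulses, and every frame holds the same number of pulses.
    Assumed wire format: a header of two little-endian uint32 values
    (nframes, pulses per frame), then one byte per electrode and per level.
    """
    nframes = len(frames)
    pulses_per_frame = len(frames[0]) if frames else 0
    header = struct.pack("<II", nframes, pulses_per_frame)
    body = bytearray()
    for frame in frames:
        if len(frame) != pulses_per_frame:
            raise ValueError("all frames must carry the same number of pulses")
        for electrode, level in frame:
            body += struct.pack("<BB", electrode, level)
    return header, bytes(body)


frames = [[(22, 120), (21, 135)], [(22, 118), (20, 140)]]
header, body = pack_stimulus(frames)
# Step 1 would be sock.sendall(header); step 2 would be sock.sendall(body).
```

Sending the frame count first lets the receiver size its receive loop before any stimulus data arrive.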

2) PDA Component—Server

The PDA component initializes Winsock, creates a socket, binds the socket, listens on the socket, accepts incoming connections, and performs blocking receives on the stimulation data from the client. The receive is performed within a thread in two steps. In the first step, information about the total number of frames (nframes) is received. The server then performs nframes receives, each time buffering the data. Once all the data are received, the server closes the socket and sends the frames to the SDIO board after verification by the error-checking routine. The 11 ms interval between frames in the buffer is implemented by waiting for a successful readback of a “sent token” from the FPGA. The “sent token” indicates that the FPGA has completed transmission of the frame to the Freedom coil.
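The server-side receive sequence can be sketched as follows. The real component is a Windows Mobile C++ executable using Winsock; this Python version only shows the same pattern of a header receive followed by nframes blocking receives. The byte layout is the same assumed format (two little-endian 32-bit header fields, two bytes per pulse), and a local socket pair stands in for the MATLAB client.

```python
import socket
import struct


def recv_exact(conn, nbytes):
    """Blocking receive that returns exactly nbytes or raises."""
    chunks = bytearray()
    while len(chunks) < nbytes:
        chunk = conn.recv(nbytes - len(chunks))
        if not chunk:
            raise ConnectionError("client closed the connection early")
        chunks += chunk
    return bytes(chunks)


def receive_stimulus(conn):
    """Receive the header, then nframes frames, buffering each one."""
    nframes, pulses_per_frame = struct.unpack("<II", recv_exact(conn, 8))
    frame_bytes = 2 * pulses_per_frame   # assumed: electrode byte + level byte
    return [recv_exact(conn, frame_bytes) for _ in range(nframes)]


# Loopback demonstration with a connected socket pair instead of a real client.
client, server = socket.socketpair()
client.sendall(struct.pack("<II", 2, 2))                      # step 1: header
client.sendall(bytes([22, 120, 21, 135, 22, 118, 20, 140]))   # step 2: frames
buffered = receive_stimulus(server)
client.close()
server.close()
```

In the platform, the buffered frames would then pass through the error-checking routine before being forwarded to the SDIO board.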

The PDA server which runs continuously in a Windows thread automatically initializes a new connection for the next incoming stimuli and waits for the client to transfer the corresponding next set of frames. In this way, the transfer of amplitudes takes place on demand, i.e., the transfer is made under the control of the user.

The PDA component is built as a Windows Mobile 5.0 executable using Visual Studio 2005/2008 Professional in C++. The executable is deployed on the PDA and is run from the desktop remotely using the Windows Remote API (RAPI) application prun provided in the Windows Mobile SDK.

3) Applications

Flexibility to program in MATLAB in offline mode allowed us to design applications targeted to various behavioral, psychoacoustical, and psychophysical experiments which were not easily possible with conventional research interfaces. The offline software package comes with a versatile suite of applications to conduct a variety of experiments. Some examples are scripts for sound coding algorithms (e.g., CIS, ACE etc.), applications to conduct listening experiments with CI users, experiments for modulation detection, electrode pitch matching, finding interaural time differences (ITDs), and interaural intensity level differences (ILDs). This framework of routines, scripts, and applications acts as a reference for the researchers to develop custom experiments according to their own research needs.

4) Psychophysics

The PDA platform has the ability to control stimulation at each individual electrode along with its stimulation parameters. The user can stimulate a single electrode, all 22 electrodes, or a specified subset of electrodes in any timing sequence (as long as the combinations of stimulation parameters are within the allowable ranges). This makes the platform an ideal tool to conduct psychophysical experiments with great ease and flexibility. The software package has various applications to perform psychophysical experiments by controlling stimulation parameters, timing, and stimulus shape at individual electrodes.

The platform was also successfully integrated with Percept [41], which is a software package developed by Sensimetrics Corporation to facilitate the design and assessment of sound processing strategies. Percept offers a wide range of psychophysics experiments which can easily be performed on human subjects in the lab environment.

C. Electric Plus Acoustic Stimulation

A number of recent studies have focused on combined EAS as a rehabilitative strategy for sensorineural hearing loss (HL) [42]–[49]. It is well established that patients fitted with a CI who have residual hearing in one or both ears, and who combine the use of a hearing aid with their implant, receive a larger benefit in speech understanding compared to electric-alone or acoustic-alone stimulation. EAS has a strong synergistic effect [42] both when acoustic information is delivered ipsilaterally to the implant (e.g., hybrid implants with short electrode arrays) and when delivered contralaterally (implant in one ear and hearing aid in the other), defined as bimodal stimulation in the current study. Improvement is more evident in noisy conditions and music perception [50]–[52] and is primarily attributed to greater access to more reliable fundamental frequency (F0) cues in the acoustic portion [53].

The functionality of the platform includes acoustic stimulation, in addition to electrical stimulation, for researchers interested in experimenting with bimodal CI users. Similar to electric-only stimulation, the platform is operated in two modes for EAS experiments: 1) RT mode and 2) offline mode. In the RT mode, acoustic and electric stimuli are delivered to the user in real time similar to their own clinical processor or hearing aid. Sound is acquired from the microphone(s) and all the processing is carried out in the PDA in RT. The offline mode, on the other hand, is based on a PC running MATLAB. The user selects an audio file from the PC which is processed by a speech processing strategy in MATLAB, and the electric and acoustic stimuli are streamed to the implant and earphones synchronously.

In addition to the basic hardware requirements, EAS involves one additional hardware module, a high fidelity earphone system. We used commercially available insert earphones from E.A.R. Tone Auditory System. Insert phones are based on a transducer connected to the PDA via an audio cable. The other end of the transducer connects with an ear tip through a tube which transmits acoustic stimuli to the ear as shown in Fig. 5. A portable audio amplifier may be used for additional acoustic gain.

In RT mode, a copy of the original sound buffer is processed simultaneously through the audio processing routine and the electric processing routine. The processed acoustic buffer is streamed to the audio port of the PDA while the electric stimuli are streamed to the implant concurrently on a frame-by-frame basis. In this way, both electric and acoustic stimulations are synchronized in time.

In offline mode, on the other hand, a copy of the complete processed audio signal (e.g., a .wav file) is streamed to the PDA before stimulation using Windows RAPI libraries. Once the server at the PDA has received all stimulus data from the MATLAB client, it performs error checking on the received data and buffers the electrical amplitudes and acoustic waveform in frames of 11 ms. These frames are then continuously and synchronously transmitted to the implant and the earphones, respectively.
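One way to picture the synchronous buffering is to pair each electric frame with the audio samples that cover the same time slot. The sketch below is a simplified illustration under that assumption; the function name and the zero-padding of the final chunk are hypothetical choices, not details taken from the PDA server.

```python
def pair_eas_frames(audio, electric_frames, samples_per_frame):
    """Pair each electric frame with the audio samples of the same time slot.

    The last audio chunk is zero-padded so every pair spans one full frame.
    """
    pairs = []
    for i, electric in enumerate(electric_frames):
        chunk = list(audio[i * samples_per_frame:(i + 1) * samples_per_frame])
        chunk += [0.0] * (samples_per_frame - len(chunk))
        pairs.append((electric, chunk))
    return pairs


audio = [0.1, 0.2, 0.3, 0.4, 0.5]
electric = ["frame0", "frame1", "frame2"]   # stand-ins for electric frame data
pairs = pair_eas_frames(audio, electric, samples_per_frame=2)
```

Emitting one pair per frame period keeps the acoustic and electric streams aligned without needing a separate clock for each.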

The PDA platform is capable of EAS both for contralateral and ipsilateral CI. The underlying software architecture for both configurations remains the same.

D. Performance

The PDA used in this study runs at 625 MHz with 64 MB RAM on an ARM processor and supports the multithreaded programming environment. This configuration offers unparalleled computational power for RT processing of complex algorithms. In order to demonstrate the processing power of the PDA, we implemented CIS, ACE, and SPEAK [54] strategies to exemplify the existing level of clinical processing demands.

Table III shows the sequence of processes in the ACE algorithm along with the computation cost associated with each process. The signal is buffered in frames of 11.6 ms duration and subsequent processing is done on overlapping analysis windows of 5.8 ms duration. The RT ACE implementation on the PDA required no more than 2.03 ms of processing time per frame in the bilateral (electric-only) mode and 1.23 ms per frame in the unilateral configuration. Statistics for the EAS mode are not significantly different from those for the electric-only mode. This implies that the PDA processor computes ACE about 5.7 times faster than RT in the bilateral configuration (i.e., more than 80% of the processing time is still available for further processing). The total memory footprint of the ACE algorithm (including the code and data sections) is 478 kB.
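The real-time margin follows directly from the frame duration and the measured processing time. The short calculation below reproduces it for both configurations; the function name is introduced here for illustration only.

```python
FRAME_MS = 11.6   # frame duration from the text


def realtime_margin(processing_ms, frame_ms=FRAME_MS):
    """Return (speed-up over real time, fraction of the frame left idle)."""
    return frame_ms / processing_ms, 1.0 - processing_ms / frame_ms


for mode, cost_ms in (("bilateral", 2.03), ("unilateral", 1.23)):
    factor, headroom = realtime_margin(cost_ms)
    print(f"{mode}: {factor:.1f}x real time, {headroom:.1%} of the frame free")
```

For the bilateral case this gives a factor of about 5.7 and a headroom slightly above 82%, in line with the figures quoted above.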

TABLE III.

Sequence of Processes for ACE Algorithm Along With the PDA Processor's Time Profiling for Each Process

| Process | Time (μs) per analysis window | Time (μs) per frame |
|---|---|---|
| 1. Apply a Blackman window. | 4.43 | 124.1 |
| 2. Perform a 128-point FFT of the windowed sub-frame using the IPP FFT routine [36]. | 9.09 | 254.5 |
| 3. Compute the square of the FFT magnitudes. | 0.67 | 18.7 |
| 4. Compute the weighted sum of bin powers for 22 channels. The resulting output vector contains the signal power for 22 channels. | 15.36 | 430.1 |
| 5. Compute the square root of the weighted sum of bins. | 2.63 | 73.6 |
| 6. Sort the 22 channel amplitudes obtained in step 5 using the shell sorting algorithm. Select the n (of 22) maximum channel amplitudes, and scale the selected amplitudes appropriately. | 9.41 | 263.4 |
| 7. Compress the n selected amplitudes using a loudness growth function given by y = log(1 + bx)/log(1 + b), where x denotes the input signal from step 6 and b is a constant dependent on clinical parameters: base level, saturation level, and Q value. | 0.86 | 24.0 |
| 8. Convert the compressed amplitudes y (step 7) to current levels: I = (C − T)y + T, where I denotes the current level, T denotes the threshold level, and C denotes the most comfortable loudness level. | 0.82 | 22.9 |
| Total | 43.26 | 1.21 × 10³ |

Analysis window: 128-sample (5.8 ms) analysis window at the sampling rate of 22050 Hz.

Frame: The signal is initially buffered in 255-sample (11.6 ms) frames. Subsequent processing is done on 128-sample analysis windows. Profiling statistics reported in this table were obtained at a fixed stimulation rate of 2400 pulses per second per channel, with a block shift of 9 samples on each frame. The total number of analysis windows processed per frame is 28. Steps 1–8 are carried out for each of the 28 analysis windows per frame.
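Steps 6 to 8 of Table III can be sketched compactly. This is a minimal illustration, not the profiled implementation: it uses Python's built-in sort in place of the shell sort, assumes the channel amplitudes are already scaled to the range 0 to 1, and uses an arbitrary value of b and arbitrary T and C levels. The function name is hypothetical.

```python
import math


def ace_map(channel_amps, n_maxima, b, t_levels, c_levels):
    """Select maxima, compress them, and map them to current levels.

    channel_amps holds one amplitude per channel, already scaled to [0, 1].
    Returns {channel index: current level} for the selected channels.
    """
    # Step 6: keep the n largest channel amplitudes.
    ranked = sorted(range(len(channel_amps)),
                    key=lambda ch: channel_amps[ch], reverse=True)
    selected = sorted(ranked[:n_maxima])
    levels = {}
    for ch in selected:
        x = min(max(channel_amps[ch], 0.0), 1.0)
        y = math.log(1.0 + b * x) / math.log(1.0 + b)          # step 7
        levels[ch] = round((c_levels[ch] - t_levels[ch]) * y   # step 8
                           + t_levels[ch])
    return levels


amps = [0.0, 1.0, 0.5, 0.2]            # illustrative 4-channel example
t_levels = [100, 100, 100, 100]
c_levels = [200, 200, 200, 200]
levels = ace_map(amps, n_maxima=2, b=400.0, t_levels=t_levels, c_levels=c_levels)
print(levels)
```

An input of x = 1 maps to the C level and x = 0 maps to the T level, so the compressed output always stays within the electrode's dynamic range.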

As far as the battery performance is concerned, the standard PDA battery (1400 mAh) lasts about 8 h with continuous use in RT bilateral mode. Any commercially available off-the-shelf external battery pack with a standard USB charging port and 5 V rating may be used to power the SDIO board, which draws 200 mA. We used a compact battery pack (dimensions: 8.4 cm × 5.8 cm × 1.3 cm) from Energizer (model number: XPAL XP2000). This lithium polymer battery is rated at 2000 mAh and lasts at least 8 h with continuous use in RT bilateral mode. Both batteries are rechargeable and replaceable.

E. Limitations

The platform is currently limited to CI22 and CI24 implants from Cochlear Corporation. The software is built on a Windows Mobile 5 platform and currently supports Windows-based devices only. However, the software may easily be ported to other mobile operating systems such as Android and iOS. Stimulation timing parameters, namely stimulation rate and pulse width, can be changed only once every 11.6 ms (i.e., the frame size) in the current implementation. The FPGA logic as well as PDA code, however, may be modified to specify stimulation parameters (e.g., time period, pulse width, electrode, amplitude, and mode) for individual pulses to support multirate strategies in which analysis/stimulation rates vary independently across channels.

From the hardware perspective, the PDA-based processor is somewhat bigger and bulkier than a body-worn processor. The interconnect between the PDA and the SDIO board could be a potential source of mechanical failure. The SDIO interconnect cable shown in Fig. 2(b) helps to minimize mechanical motion and vibration. In addition, the current generation of the board requires an external battery pack, which impacts the usability of the system.

IV. EVALUATION AND RESULTS

The PDA platform has undergone rigorous testing to ensure that it meets all safety criteria of a clinical processor. The output levels at the electrodes were verified extensively using 1) an implant emulator and 2) a decoder and implant emulator tool box, from Cochlear Corporation. Electrodograms produced by each processor were compared to ensure similarity for the same input.

The platform is FCC and IEC compliant. An IDE application for the PDA-based research processor was approved by the FDA in May 2011 for evaluation with human subjects. Since then, the platform has been tested with unilateral, bilateral, and bimodal CI users. The results presented in this paper are from an acute study (i.e., users were allowed to wear the processor for a few hours in the lab environment) on ten CI users. The aim of the current study was to evaluate the performance of the PDA-based speech processors on a speech intelligibility task in quiet and noisy conditions and to compare the performance against the users’ own clinical processor.

A. Subjects

A total of ten CI users participated in this acute study. All participants were adults and native speakers of American English with postlingual deafness and a minimum of 1 year of experience with CI(s) from Cochlear Corporation. Five subjects were bimodal CI users, i.e., wearing a cochlear implant in one ear and a hearing aid in the other. One of the five bimodal subjects was not available for some test conditions. Of the remaining five subjects, one was unilateral (implant in one ear only) and the remaining four were bilateral (implants in both ears) CI users.

B. Method

All subjects were tested with three processor conditions: clinical, PDA-based offline (PDA_Offline) and PDA-based RT (PDA_RT) processors. The intelligibility scores from their clinical processor were used as benchmark scores. Tests with the subjects’ clinical and the PDA_RT processors were conducted in free field in a sound booth at an average presentation level of 65 dB SPL. Speech stimuli for the PDA_Offline were presented via audio files on the PC. In all cases, sensitivity level and volume adjustments were completed on the respective processors. The subjects’ everyday MAP was used for all the test conditions. Environmental settings (such as autosensitivity, ADRO, and BEAM), if active, were not disabled on the subjects’ clinical processors. These settings were not implemented in the PDA-based processors. A short training with the PDA-based processor was carried out before each test.

In addition to electric alone, bimodal subjects were tested for acoustic alone and EAS with both types of processors. In this study, no audio processing for acoustic stimulation was done.

C. Stimuli

The speech stimuli used for testing were recorded sentences from the Institute of Electrical and Electronics Engineers (IEEE) database [55]. Each sentence is composed of approximately 7–12 words, and each list comprises 10 sentences with an average of 80 words per list. All the words in the sentences were scored for correctness. Two lists for each test condition were used and the scores were averaged. Three conditions were tested for each test: speech in quiet, speech in 10 dB signal to noise ratio (SNR), and speech in 5 dB SNR. Noise type used in all tests was a stationary speech shaped noise created from an average long-term speech spectrum of IEEE sentences.
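The scoring and SNR conditions can be illustrated with a short sketch. The paper does not describe how the noise was scaled, so the power-based gain below is an assumption, and all function names and sample values are hypothetical.

```python
import math


def noise_gain_for_snr(speech, noise, snr_db):
    """Gain to apply to noise so the speech-to-noise power ratio equals snr_db."""
    p_speech = sum(s * s for s in speech) / len(speech)
    p_noise = sum(n * n for n in noise) / len(noise)
    return math.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))


def percent_correct(words_correct, words_total):
    """Word-level score for one sentence list."""
    return 100.0 * words_correct / words_total


def condition_score(list_scores):
    """Average the percent-correct scores of the lists used for one condition."""
    return sum(list_scores) / len(list_scores)


speech = [1.0, -1.0, 1.0, -1.0]     # toy signals, not real recordings
noise = [0.5, -0.5, 0.5, -0.5]
gain = noise_gain_for_snr(speech, noise, snr_db=10.0)
mixture = [s + gain * n for s, n in zip(speech, noise)]

# Two 80-word lists per condition, averaged as described in the text.
score = condition_score([percent_correct(60, 80), percent_correct(64, 80)])
```

With 60 and 64 of 80 words correct, the two list scores are 75% and 80%, and the condition score is their mean, 77.5%.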

D. Results

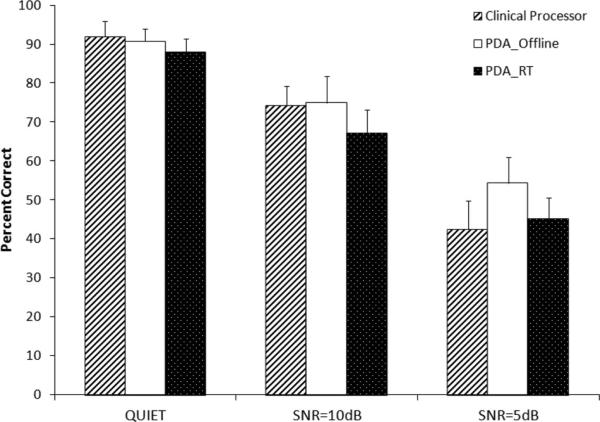

1) Electric-Only

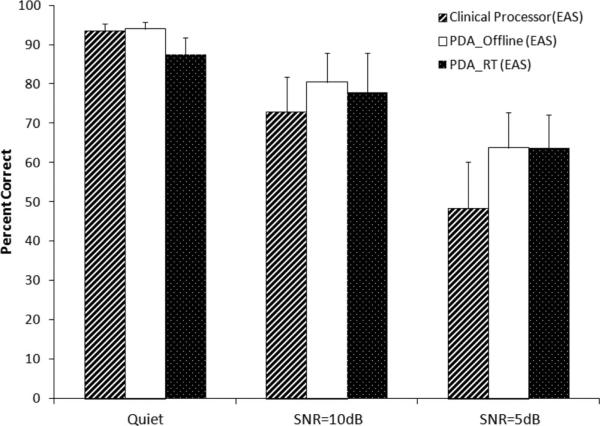

Nine subjects were tested for the electric-only condition. Four subjects were tested in the bilateral mode whereas five subjects were tested in the unilateral mode. Fig. 9 presents the percentage correct mean scores of nine subjects as a function of SNR (quiet, SNR = 10 dB, SNR = 5 dB) and processor type (clinical, PDA_Offline, PDA_RT). The error bars in Fig. 9 represent standard error of the mean (SEM). Repeated-measures analysis of variance (ANOVA) was performed to assess the effect of processor and SNR on the intelligibility scores with α set at 0.05. Subjects were considered a random (blocked) factor while processor types and SNR values were used as main analysis factors. No statistically significant difference in speech intelligibility was found between the three processor types (F(2, 16) = 1.810, p = 0.1955). The interaction between the processor types and SNR was not significant (F(4, 32) = 1.891, p = 0.1361). There was a significant main effect of SNR on speech intelligibility (F(2, 16) = 85.017, p < 0.0001). The post hoc Bonferroni test for pairwise comparisons between the three SNRs indicated that speech intelligibility at 10 dB was higher than at 5 dB and that speech intelligibility was highest in the quiet presentation condition.

Fig. 9.

Percentage correct mean speech intelligibility of nine subjects as a function of SNR and processor type. Error bars represent SEM.

2) Electric Plus Acoustic Stimulation

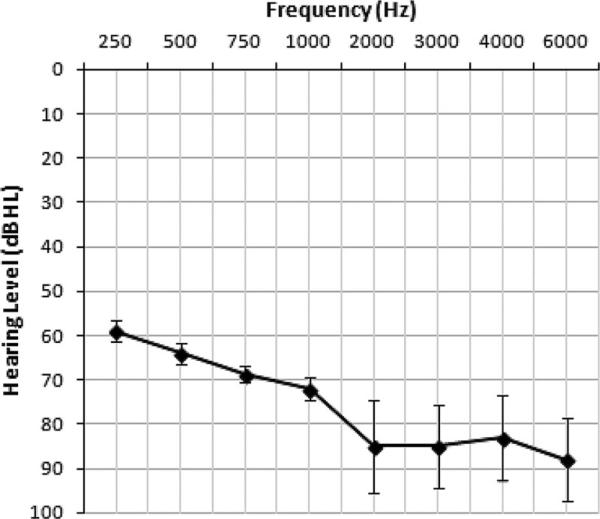

Fig. 10 displays the mean audiometric thresholds in the nonimplanted ear of five bimodal subjects. The mean thresholds at 0.25, 0.5, 0.75, 1.0, 2.0, 3.0, 4.0 and 6.0 kHz were 59, 64, 70, 76, 85, 85, 83, and 87 dB HL, respectively.

Fig. 10.

Mean audiogram of five bimodal subjects for the hearing-aid ear. Error bars represent SEM.

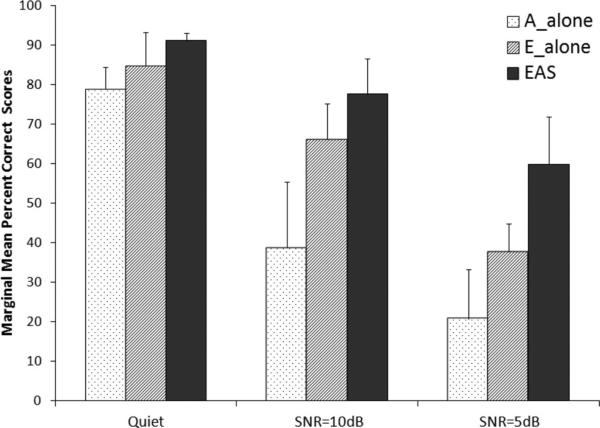

Mean speech intelligibility scores for the EAS condition as a function of SNR and processor type are presented in Fig. 11. Repeated-measures ANOVA was performed with hearing modalities (acoustic alone, electric alone, and combined EAS), SNRs, and processor types as analysis factors. No statistically significant difference was found between the three processor types (F(2, 6) = 1.968, p = 0.2201). Estimated marginal mean speech intelligibility for acoustic alone (A_alone), electric alone (E_alone), and EAS for the three processor types is shown in Fig. 12. There was a significant effect of hearing modality (F(2, 6) = 6.492, p = 0.0316) on speech intelligibility. The post hoc Bonferroni test for pairwise comparisons between the three hearing modalities indicated that E_alone speech intelligibility was higher than A_alone, and EAS speech intelligibility was significantly higher than both E_alone and A_alone speech intelligibility.

Fig. 11.

Percentage correct mean speech intelligibility for EAS condition of five bimodal subjects as a function of SNR and processor type. Error bars represent SEM.

Fig. 12.

Estimated marginal mean intelligibility scores for acoustic alone (A_alone), electric alone (E_alone), and EAS for the three processor types. Error bars represent SEM.

A significant interaction between hearing modalities and SNRs was found (F(4, 12) = 5.259, p = 0.0111). The post hoc Bonferroni test for pairwise comparisons (α = 0.0055) indicated that speech intelligibility in quiet and at 5 dB SNR was significantly different for all three hearing modalities (A_alone: p = 0.0012; E_alone: p = 0.0004; EAS: p = 0.0054). Also, speech intelligibility at 10 and 5 dB SNRs was significantly different for the E_alone and EAS hearing modalities (E_alone: p = 0.0013; EAS: p = 0.0034).

There was a significant interaction between the processor types and SNR (F(4, 12) = 4.170, p = 0.0241). The post hoc Bonferroni test for pairwise comparisons (α = 0.0055) indicated significant intelligibility differences between quiet and 5 dB SNR and between 10 and 5 dB SNRs (quiet and 5 dB SNR: clinical, p = 0.0008; PDA_RT, p = 0.0036; PDA_Offline, p = 0.0003; 10 and 5 dB SNRs: clinical, p = 0.0007; PDA_RT, p = 0.0054; PDA_Offline, p = 0.0005).
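The adjusted significance level is consistent with a Bonferroni correction over nine pairwise comparisons (three SNR pairs within each of three levels of the other factor), since 0.05/9 ≈ 0.0056. The count of nine is an inference from the reported α and is not stated in the text. The sketch below applies that threshold to the reported p values for quiet versus 5 dB SNR across hearing modalities.

```python
def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison significance level under a Bonferroni correction."""
    return family_alpha / n_comparisons


n_comparisons = 3 * 3   # assumed: 3 SNR pairs within each of 3 factor levels
alpha = bonferroni_alpha(0.05, n_comparisons)

# Reported p values, quiet versus 5 dB SNR, per hearing modality.
p_values = {"A_alone": 0.0012, "E_alone": 0.0004, "EAS": 0.0054}
significant = {name: p < alpha for name, p in p_values.items()}
print(round(alpha, 4), significant)
```

All three comparisons fall below the adjusted threshold, although the EAS value does so only narrowly.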

V. CONCLUSION

This paper presents the design of a PDA-based research platform which can be used to explore new paradigms in CI research. The flexible design of the platform both in terms of hardware and software infrastructure allows easy development and quick evaluation of new research ideas without learning advanced programming skills. The focus of this study was toward developing a versatile set of tools to design and conduct simple to complex experiments for studies involving CIs with greater ease and flexibility.

The research processor works in two modes: RT mode and offline mode. The RT mode, similar to the clinical processor, allows practical assessment of new algorithms in the free field and provides real-time (RT) feedback from the users. The programming environment for the RT mode is C/C++ which is easy to program and saves considerable development time. The portability and wearability of the platform makes it possible for the RT processor to be used outside the lab environment akin to a body-worn processor. This capability opens up opportunities for long-term evaluation of novel algorithms and strategies for chronic studies with human subjects.

For experiments which do not necessarily require RT processing and stimulation, the offline mode provides an alternative by offering programmability in the MATLAB environment. The MATLAB development environment is preferred by most researchers as it simplifies numerical calculations and graphing of results without complicated programming. It also reduces development time.

The extension of the platform to include EAS capabilities allows researchers to conduct experiments with users with bimodal hearing. The EAS mode can be utilized in RT or offline configurations, thus giving researchers the same features and flexibility as an E_alone speech processor. The EAS capability will help foster research in emerging hybrid implants for patients with residual hearing in one or both ears. Both electric and acoustic stimulations can be delivered binaurally and synchronously, allowing the platform to be used for spatial localization tasks.

The platform also supports psychophysics experiments in the offline mode. The ability to control stimulation parameters, timing, and stimulation patterns of each individual electrode gives researchers a flexible way to design and conduct a broad spectrum of experiments.

The researchers are provided with a complete software framework and documentation (technical manuals, user guides, etc.) to assist in developing new or transitioning existing algorithms in the platform. This includes libraries and source code for several sound processing strategies along with a suite of applications to address a multitude of research needs.

The platform only operates within the safe limits established by the FDA and Cochlear Corporation. The evaluation of the platform with unilateral, bilateral, and bimodal CI users suggests that the platform provides stimulations that result in comparable sentence recognition to the users’ own clinical processor. It should be pointed out that the results reported here are from an acute study. Given the differences in microphones, hardware, and the implementation of the ACE coding strategy, between the PDA and the clinical speech processors, it is reasonable to expect that the users would need to adapt to the use of the PDA-based processors.

The presented results would encourage researchers to use the platform for their future work with E_alone and EAS. Our next step is to conduct take-home trials of the PDA platform. Portability and wearability of the platform makes it possible for the users to wear the platform on a daily basis until they adapt to the new processor. Long-term chronic studies to evaluate novel coding strategies are thus possible.

The PDA-based platform is the first of its kind research interface built on a commercially available PDA/smartphone device. It sets an example of the bridging of medical devices with consumer electronics. The platform is being tested in several laboratories in the U.S. and could potentially benefit hundreds of thousands of hearing-impaired people worldwide.

ACKNOWLEDGMENT

The authors would like to thank the anonymous reviewers and the Associate Editor for their constructive comments during the review process for this paper. Their feedback has helped to improve the quality of the paper along with the clarity and organization.

The authors would also like to thank Dr. E. A. Tobey, Dr. J. Hansen, and Dr. N. Srinivasan for providing valuable feedback during the review process, and the researchers who are participating in the FDA clinical trial to test the PDA platform at their research centers.

This work was supported in part by the National Institutes of Health under Contract N01-DC-6-0002 from the National Institute on Deafness and Other Communication Disorders and Contract R01DC010494-01A (E. A. Tobey, current PI).

Biography

Hussnain Ali (S’09) received the B.E. degree in electrical engineering from the National University of Science and Technology, Islamabad, Pakistan, in 2008. He received the M.S. degree in electrical engineering from the University of Texas at Dallas (UTD), Richardson, TX, USA, in 2012, where he is currently working toward the Ph.D. degree.


He was a Design Engineer in Center for Advanced Research in Engineering, Islamabad, Pakistan from 2008–2009. Since then, he has been a Research Assistant in the Cochlear Implant Laboratory, the Department of Electrical Engineering, UTD. His research interests include biomedical signal processing, implantable and wearable medical devices, cochlear implants, and emerging healthcare technologies.

Arthur P. Lobo (M’91–SM’08) received the Bachelor of Technology in electronics and communication engineering from the Karnataka Regional Engineering College, Surathkal, India in 1984, and the Ph.D. degree in communication and neuroscience from the University of Keele, U. K., in 1990.


From 1990 to 1992, he was a Postdoctoral Fellow working on microphone array beamforming at INRS-Telecommunications, University of Quebec, Montreal, Canada. From 1992 to 1996, he was a Senior Research Associate working on speech synthesis at Berkeley Speech Technologies, Inc., Berkeley, CA, USA. From 1996 to 1998, he was with DSP Software Engineering, Bedford, MA, USA as a DSP Engineer. From 1999 to 2001, he was a Consultant DSP Engineer for Ericsson Mobile Communications (U.K.) Ltd., Basingstoke, U. K. From 2001 to 2003 and from 2006 to 2008, he was a Research Associate with cochlear implants, the University of Texas at Dallas, Richardson, TX, USA. From 2003 to 2004, he was a Software Engineer on an audio surveillance wireless speech transmitter for law enforcement with L.S. Research, Inc., Cedarburg, WI, USA. From 2004 to 2005, he was a DSP Systems Engineer in the Department of Homeland Security project on gunshot detection for KSI Corp., Ontario, CA, USA. From 2005 to 2006, he was a DSP Engineer on the Zounds hearing aid for Acoustic Technologies, Inc., Mesa, AZ, USA. From 2009 to 2013, he was a Chief Scientific Officer for Signals and Sensors Research, Inc., McKinney, TX, USA, working on projects for the National Institutes of Health and was a visiting scholar at The University of Texas at Dallas. Since 2013, he has been an Electronics Engineer for the U.S. Department of Defense. His research interests include acoustic and radar signal processing, cochlear implants, digital logic design and laser chemistry.

Dr. Lobo has served as Reviewer for the IEEE EMBS conferences, IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING and IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS and is currently a Reviewer for IET Electronics Letters.

Philipos C. Loizou (S’90–M’91–SM’04) received the B.S., M.S., and Ph.D. degrees from Arizona State University (ASU), Tempe, AZ, USA, in 1989, 1991, and 1995, respectively, all in electrical engineering.

From 1995 to 1996, he was a Postdoctoral Fellow in the Department of Speech and Hearing Science, ASU, where he was involved in research related to cochlear implants. From 1996 to 1999, he was an Assistant Professor at the University of Arkansas at Little Rock, Little Rock, AR, USA. He held the position of Professor as well as the Cecil and Ida Green Endowed Chair in the Department of Electrical Engineering, University of Texas at Dallas, Richardson, TX, USA, until July 2012. In his career, he inspired many students and researchers to follow their passion for research and education in the field of speech and hearing science and technology. He was the author of the book Speech Enhancement: Theory and Practice (Boca Raton, FL, USA: CRC, 2007; 2nd ed. 2013) and the coauthor of the textbooks An Interactive Approach to Signals and Systems Laboratory (Austin, TX, USA: National Instruments, 2008) and Advances in Modern Blind Signal Separation Algorithms: Theory and Applications (San Rafael, CA, USA: Morgan and Claypool Publishers, 2010). His research interests included the areas of signal processing, speech processing, and cochlear implants.

Dr. Loizou was a Fellow of the Acoustical Society of America. He also served as an Associate Editor of the IEEE TRANSACTIONS ON BIOMEDICAL ENGINEERING and International Journal of Audiology. He was previously an Associate Editor of the IEEE TRANSACTIONS ON SPEECH AND AUDIO PROCESSING, from 1999 to 2002 and of the IEEE Signal Processing Letters, from 2006 to 2008. From 2007 to 2009, he was an elected member of the Speech Technical Committee of the IEEE Signal Processing Society.

Footnotes

Nucleus Freedom™ is an implant system developed by Cochlear Corporation, which is the largest manufacturer of CIs.

Contributor Information

Hussnain Ali, Department of Electrical Engineering, University of Texas at Dallas, Richardson, TX 75080 USA (hussnain@ieee.org).

Arthur P. Lobo, Signals and Sensors Research, Inc., McKinney, TX 75071 USA. He is now with the U.S. Department of Defense, Alexandria, VA 22350 USA (aplobo@ieee.org).

Philipos C. Loizou, Department of Electrical Engineering, University of Texas at Dallas, Richardson, TX 75080 USA (loizou@utdallas.edu).

References

- 1.National Institute on Deafness and Other Communication Disorders. Cochlear implants. National Institutes of Health; Bethesda, MD, USA: Mar. 2011. NIH Publication No. 11-4798.

- 2.National Institute on Deafness and Other Communication Disorders. Cochlear implants. National Institutes of Health; Bethesda, MD, USA: 2005.

- 3.Cochlear implants in adults and children. National Institutes of Health; Bethesda, MD, USA: 1995. NIH Consensus Statement Online.

- 4.Loizou PC. Introduction to cochlear implants. IEEE Eng. Med. Biol. Mag. 1999 Jan-Feb;18(1):32–42. doi: 10.1109/51.740962.

- 5.Zeng FG, Rebscher S, Harrison WV, Sun X, Feng H. Cochlear implants: System design, integration, and evaluation. IEEE Rev. Biomed. Eng. 2008 Jan.1:115–142. doi: 10.1109/RBME.2008.2008250.

- 6.Ahmad TJ, Ali H, Ajaz MA, Khan SA. A DSK-based simplified speech processing module for cochlear implant research. Proc. IEEE Int. Conf. Acoustics, Speech Signal Process.; April 19–24, 2009.pp. 369–372.

- 7.Lobo AP, Loizou PC, Kehtarnavaz N, Torlak M, Lee H, Sharma A, Gilley P, Peddigari V, Ramanna L. A PDA-based research platform for cochlear implants. Proc. 3rd Int. IEEE/EMBS Conf. Neural Eng.; May 5, 2007.pp. 28–312.

- 8.Goorevich M, Irwin C, Swanson AB. Cochlear implant communicator. 2002 Jul. International Patent WO 02/045991.

- 9.NIC v2 software interface specification E11318RD. Cochlear Corp.; Sydney, Australia: Aug. 2006.

- 10.Stohl JS, Throckmorton CS, Collins LM. Technical Rep. Duke University; Durham, NC, USA: 2008. Developing a flexible SPEAR3-based psychophysical research platform for testing cochlear implant users.

- 11.Product Brief, HearWorks. The University of Melbourne, Vic.; Australia: 2003. SPEAR3 3rd generation speech processor for electrical and acoustic research.

- 12.Product Brief, CRC and HearWorks. The University of Melbourne, Vic.; Australia: 2003. SPEAR3 speech processing system.

- 13.Shannon RV, Zeng FG, Fu QJ, Chatterjee M, Wygonski J, Galvin J, III, Robert M, Wang X. NIH Quarterly Progress Report, QPR #1. House Ear Institute; Los Angeles, CA, USA: 1999. Speech processors for auditory prostheses.

- 14.Shannon RV, Fu QJ, Chatterjee M, Galvin JJ, III, Friesen L, Cruz R, Wygonski J, Robert ME. NIH Quarterly Progress Report, QPR #12—Final Report. House Ear Institute; Los Angeles, CA, USA: 2002. Speech processors for auditory prostheses.

- 15.RIB Research Interface Box System: Manual V1.0. The University of Innsbruck; Innsbruck, Austria: 2001.

- 16.Peddigari V, Kehtarnavaz N, Loizou PC. Real-time LabVIEW implementation of cochlear implant signal processing on PDA platforms. Proc. IEEE Int. Conf. Acoustics, Speech Signal Process.; Apr. 15–20, 2007.pp. 357–360.

- 17.Gopalakrishna V, Kehtarnavaz N, Loizou P. Real-time PDA-based recursive Fourier transform implementation for cochlear implant applications. Proc. IEEE Int. Conf. Acoustics, Speech Signal Process.; Taipei, Taiwan. Apr. 19–24, 2009.pp. 1333–1336.

- 18.Gopalakrishna V, Kehtarnavaz N, Loizou P. Real-time implementation of wavelet-based Advanced Combination Encoder on PDA platforms for cochlear implant studies. IEEE Int. Conf. Acoustics Speech Signal Process.; Dallas, TX, USA. March 14–19, 2010.pp. 1670–1673.

- 19.Gopalakrishna V, Kehtarnavaz N, Loizou PC. A recursive wavelet-based strategy for real-time cochlear implant speech processing on PDA platforms. IEEE Trans. Biomed. Eng. 2010 Aug.57(8):2053–2063. doi: 10.1109/TBME.2010.2047644.

- 20.Hazrati O, Lee J, Loizou PC. Binary mask estimation for improved speech intelligibility in reverberant environments. presented at 11th Annual Conference of the International Speech Communication Association, Interspeech 2012; Portland, OR, USA. Sep. 2012.

- 21.Hazrati O. Ph.D. dissertation. Dept. of Elect. Eng., The Univ. Texas Dallas; Richardson, TX, USA: 2012. Development of dereverberation algorithms for improved speech intelligibility by cochlear implant users.

- 22.Ali H, Lobo AP, Loizou PC. A PDA platform for offline processing and streaming of stimuli for cochlear implant research. Proc. 33rd Annu. Int. Conf. Eng. Med. Biol. Soc.; Boston, MA, USA. Sep. 2011; pp. 1045–1048.

- 23.Ali H, Lobo AP, Loizou PC. On the design and evaluation of the PDA-based research platform for electric and acoustic stimulation. Proc. 34th Annu. Int. Conf. Eng. Med. Biol. Soc.; San Diego, CA, USA. Aug.–Sep. 2012; pp. 2493–2496.

- 24.Hsiao S. SP12 active coil (electrical design description) Cochlear Corporation; Sydney, Australia: Mar. 2004. Document # S50656DD.

- 25.Patrick JF, Busby PA, Gibson PJ. The development of the nucleus freedom cochlear implant system. Trends Amplif. 2006;10(4):175–200. doi: 10.1177/1084713806296386.

- 26.Lobo AP, Lee H, Guo S, Kehtarnavaz N, Peddigari V, Torlak M, Kim D, Ramanna L, Ciftci S, Gilley P, Sharma A, Loizou PC. Open architecture research interface for cochlear implants, Fifth quarterly progress report NIHN01DC60002. [Online] 2007 Jun. Available: http://utdallas.edu/~loizou/cimplants/

- 27.Kim D, Lobo AP, Ramachandran R, Gunupudi NR, Gopalakrishna V, Kehtarnavaz N, Loizou PC. Open architecture research interface for cochlear implants, 11th quarterly progress report NIH N01DC60002. [Online] 2008 Dec. Available: http://utdallas.edu/~loizou/cimplants/

- 28.Kim D, Gopalakrishna V, Guo S, Lee H, Torlak M, Kehtarnavaz N, Lobo AP, Loizou PC. On the design of a flexible stimulator for animal studies in auditory prostheses. presented at the 2nd Int. Symp. App. Sci. Biomed. Comm. Tech.; Bratislava, Slovakia. Nov. 24–27, 2009; pp. 1–5.

- 29.Daly C, McDermott H. Embedded data link and protocol. 1998 Apr. U.S. Patent 5 741 314.

- 30.Crosby P, Daly C, Money D, Patrick J, Seligman P, Kuzma J. Cochlear implant system for an auditory prosthesis. 1985 Jun. U.S. Patent 4 532 930.

- 31.Loizou PC, Lobo AP, Kim D, Gunupudi NR, Gopalakrishna V, Kehtarnavaz N, Lee H, Guo S, Ali H. Final Report - Open architecture research interface for cochlear implants. NIH final progress report NIH-NO1-DC-6-0002. University of Texas at Dallas; Richardson, TX: 2011. [Online]. Available: http://utdallas.edu/~loizou/cimplants/

- 32.Loizou PC, Lobo AP, Kehtarnavaz N, Peddigari V, Lee H, Torlak M, Ramanna L, Ozyurt S, Ciftci S. Open architecture research interface for cochlear implants, 2nd quarterly progress report NIH N01DC60002. [Online] 2006 Sep. Available: http://utdallas.edu/~loizou/cimplants/

- 33.Kim D, Lobo AP, Kehtarnavaz N, Peddigari V, Torlak M, Lee H, Ramanna L, Ciftci S, Gilley P, Sharma A, Loizou PC. Open architecture research interface for cochlear implants, 4th quarterly progress report NIH N01DC60002. [Online] 2007 Mar. Available: http://utdallas.edu/~loizou/cimplants/

- 34.Wilson B, Finley C, Lawson DT, Wolford RD, Eddington DK, Rabinowitz WM. Better speech recognition with cochlear implants. Nature. 1991 Jul.352:236–238. doi: 10.1038/352236a0.

- 35.Vandali AE, Whitford LA, Plant KL, Clark GM. Speech perception as a function of electrical stimulation rate using the Nucleus 24 cochlear implant system. Ear Hear. 2000;21(6):608–624. doi: 10.1097/00003446-200012000-00008.

- 36.Intel integrated performance primitives on Intel personal internet client architecture processors, Reference Manual, version 5. Intel Corporation; Milford, PA, USA: 2005.

- 37.McCreery D, Agnew WF, Yuen TG, Bullara LA. Comparison of neural damage induced by electrical stimulation with faradaic and capacitor electrodes. Ann. Biomed. Eng. 1988;16(5):463–481. doi: 10.1007/BF02368010.

- 38.McCreery DB, Agnew WF, Yuen TG, Bullara L. Charge density and charge per phase as cofactors in neural injury induced by electrical stimulation. IEEE Trans. Biomed. Eng. 1990 Oct.37(10):996–1001. doi: 10.1109/10.102812.

- 39.Shannon R. A model of safe levels for electrical stimulation. IEEE Trans. Biomed. Eng. 1992 Apr.39(4):424–426. doi: 10.1109/10.126616.

- 40.Makofsky S. Pocket PC Network Programming. Addison-Wesley; Reading, MA, USA: 2003. ch. 1.

- 41.Goldsworthy R. Percept. [Online] Available: http://www.sens.com/percept/

- 42.Turner CW, Reiss LA, Gantz BJ. Combined acoustic and electric hearing: Preserving residual acoustic hearing. Hearing Res. 2008;242(1–2):164–171. doi: 10.1016/j.heares.2007.11.008.

- 43.Wilson BS, Dorman MF. Cochlear implants: Current designs and future possibilities. J. Rehabil. Res. Dev. 2008;45(5):695–730. doi: 10.1682/jrrd.2007.10.0173.

- 44.Gantz BJ, Turner C, Gfeller KE. Acoustic plus electric speech processing: Preliminary results of a multicenter clinical trial of the Iowa/Nucleus hybrid implant. Audiol. Neurotol. 2006 Oct.11(1):63–68. doi: 10.1159/000095616.

- 45.Gantz BJ, Turner C, Gfeller KE, Lowder MW. Preservation of hearing in cochlear implant surgery: Advantages of combined electrical and acoustical speech processing. Laryngoscope. 2005;115(5):796–802. doi: 10.1097/01.MLG.0000157695.07536.D2.

- 46.Kiefer J, Pok M, Adunka O, Sturzebecher E, Baumgartner W, Schmidt M, Tillein J, Ye Q, Gstoettner W. Combined electric and acoustic stimulation of the auditory system: Results of a clinical study. Audiol. Neurotol. 2005;10(3):134–144. doi: 10.1159/000084023.

- 47.Gifford RH, Dorman MF, McKarns SA, Spahr AJ. Combined electric and contralateral acoustic hearing: Word and sentence recognition with bimodal hearing. J. Speech, Lang., Hear. Res. 2007 Aug.50(4):835–843. doi: 10.1044/1092-4388(2007/058).

- 48.Ching T, Psarros C, Hill M, Dillon H. Should children who use cochlear implants wear hearing aids in the opposite ear? Ear Hear. 2001;22:365–380. doi: 10.1097/00003446-200110000-00002.

- 49.Ching TY, Incerti P, Hill M, Wanrooy E. An overview of binaural advantages for children and adults who use binaural/bimodal hearing devices. Audiol. Neurotol. 2006;11(1):6–11. doi: 10.1159/000095607.

- 50.Turner CW, Gantz BJ, Vidal C, Behrens A, Henry BA. Speech recognition in noise for cochlear implant listeners: benefits of residual acoustic hearing. J. Acoust. Soc. Amer. 2004;115(4):1729–1735. doi: 10.1121/1.1687425.