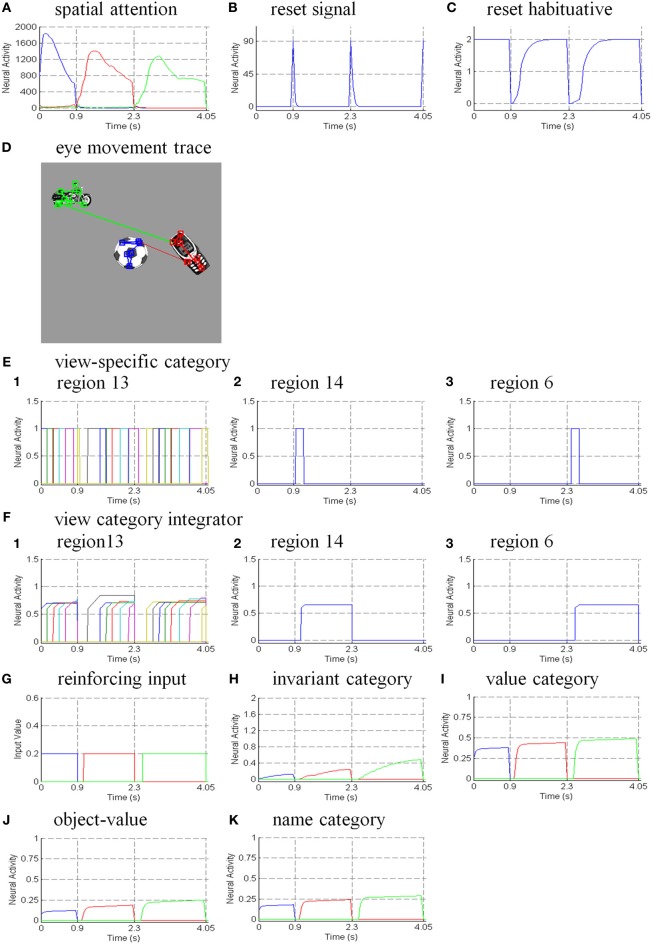

Figure 10.

Model simulations of view-invariant object category learning, after ten reinforcement simulation trials. Figure 8B presents the scenic input for the simulation. The attentional shrouds form competitively around objects in the Where stream, and the winning shroud carries out view-invariant object category learning in the What stream. The persistence of a shroud controls the eye movements to salient features on the object surface, thereby generating a sequence of views that are encoded by view-specific categories, which are, in turn, associated with the view-invariant object category. The collapse of an active shroud triggers a reset signal that shuts off the corresponding layers, including the spatial attention map, object surface, view category integrator, and view-invariant object category, to enable an attentional shift to another object. (A) Sum of the neural activities of each shroud. Each line indicates the total activity of the shroud that is activated by the corresponding object. Blue line: soccer ball; red line: cellphone; green line: motorcycle. (B) Object category reset signals. A reset is triggered at times 1.25, 2.6, and 3.95, when the collapse of the shroud reaches the threshold ε for triggering a reset signal in Equation (A55). (C) Habituative gate of the reset signal. The depletion of the habituative neurotransmitter in Equation (A57) causes the reset signal in Equation (A42) to collapse after its transient burst and then to replenish through time, enabling future resets to occur. (D) Eye movement traces of the simulated scene. The figures show only the central regions of the simulated scene. The initial eye fixation is located at the center of the scene, and each square indicates an eye fixation on the object surfaces. (E) View-specific category activities in the corresponding regions. Each colored line shows a category that is active for a short time and is reset after a saccadic eye movement occurs. (1) Region 13 activation corresponding to the foveal views. (2) Region 14 activation corresponding to the extra-foveal view after the first object is learned. (3) Region 6 activation corresponding to the extra-foveal view after the second object is learned. (F) View category integrator activities in the corresponding regions. Each colored line shows an integrator's persistent activity, which is inhibited when the integrator receives a reset signal. (G) Reinforcing inputs are presented to value categories when the view-invariant object categories are active. (H) Invariant object category activities. The activation of the first object category corresponds to learning the cellphone; activation of the third object category corresponds to learning the motorcycle. (I) Value category activities corresponding to the activations of invariant object categories. (J) Object-value category activities driven by activations of invariant object categories. (K) Name category activities.