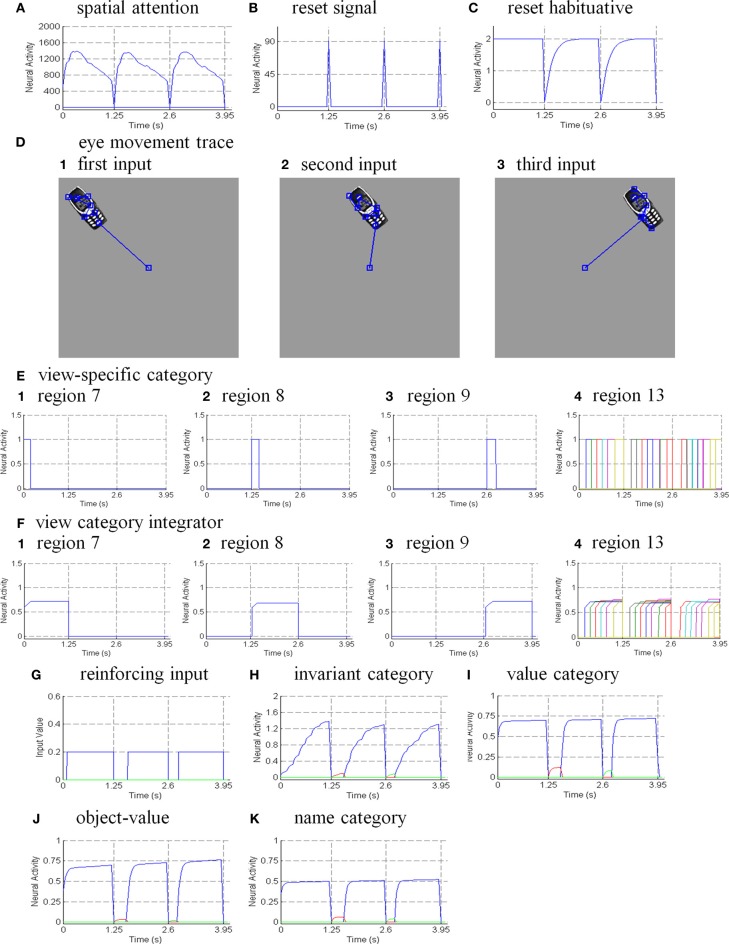

Figure 12.

Model simulations of positionally- and view-invariant cellphone object category learning after 10 reinforcement simulation trials. The model receives a sequence of three simulated scenes. Each scene contains a single cellphone placed at a different position (see Figures 8E–G), and the initial eye fixation is located at the center of the scene. Before the object is brought to the foveal region by a saccadic eye movement, a view from the retinal periphery is generated to activate the view-specific category in the What stream and the subsequent categorical stages. An attentional shroud forms around the cellphone in the Where stream and controls eye movements that visit several salient features on the cellphone surface. These fixations generate a sequence of views to the What stream while the shroud persists. When the attentional shroud collapses, it triggers a reset that inhibits the spatial attention map, object surface map, view category integrator neurons, and view-invariant object category neurons. Another simulated scene is then fed to the model, and category learning repeats until all the scenes are learned. (A) Sum of the neural activities in the three attentional shrouds, which are active at times 0–1.25, 1.25–2.6, and 2.6–3.95 s. (B) Object category reset signals, which occur at times 1.25, 2.6, and 3.95 s when shroud collapse reaches the reset threshold. (C) Habituative gate of the reset signal. (D) Eye movement traces scanning the cellphone presented in three positions. (E) View-specific category activities of the corresponding regions. Different colored lines indicate that each category is active for the duration of an eye fixation and is reset after the saccadic eye movement occurs. (1) Activation corresponding to the extra-foveal view of the first cellphone input at region 7. (2) Activation corresponding to the extra-foveal view of the second input at region 8. (3) Activation corresponding to the extra-foveal view of the third input at region 9. (4) Activation corresponding to the foveal views of all the scenes at foveal region 13. (F) View category integrator neuron activities in the corresponding regions. (G) Reinforcing inputs. (H) Invariant object category neuron activities. From t = 0–1.25 s, the invariant category is activated via a series of activations from view category integrators until it receives a reset signal. Another invariant category neuron (red line) is activated at the beginning of the second scene's category learning and is then inhibited by the previously learned invariant category, which is activated by a previous view-specific category when a feature on the cellphone is selected again. The activation of a third invariant category (green line) corresponds to the beginning of the third scene's category learning, and it is inhibited by the first learned invariant object category when a previously learned view-specific category is activated. (I) Value category activities corresponding to the activations of invariant object categories. (J) Object-value category activities corresponding to the activations of invariant object categories. (K) Name activities corresponding to the activations of object-value categories.
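Panels (A) through (C) link shroud collapse, a thresholded reset signal, and a habituative gate on that signal. The toy sketch below illustrates that relationship only qualitatively. It is not the model's actual equations. The reset cell here is a hypothetical tonically active unit that the shroud inhibits, so its output rises when the shroud collapses. The gate uses a generic habituative-transmitter form, dz/dt = eps(1 - z) - lam·S·z. The function name `simulate_reset` and all parameter values (`tonic`, `threshold`, `eps`, `lam`, `dt`) are illustrative assumptions.

```python
def simulate_reset(shroud, dt=0.01, tonic=1.0, threshold=0.5, eps=0.2, lam=5.0):
    """Toy reset signal driven by shroud collapse and gated by a habituative transmitter.

    Hypothetical sketch: the equations and parameters are assumptions, not the
    model's published ones.
    shroud: summed shroud activity sampled every dt seconds.
    Returns (reset, gate): lists with the same length as shroud.
    """
    z = 1.0  # habituative gate starts fully accumulated
    reset, gate = [], []
    for s in shroud:
        # Hypothetical reset cell: tonically active and inhibited by the shroud,
        # so its drive grows as the shroud collapses.
        drive = max(0.0, tonic - s)
        # The signal passes only once the drive reaches the reset threshold.
        signal = drive if drive >= threshold else 0.0
        reset.append(signal * z)  # reset signal multiplied by the gate
        gate.append(z)
        # Gate recovers toward 1 at rate eps and is depleted in proportion to use.
        z += dt * (eps * (1.0 - z) - lam * signal * z)
    return reset, gate


# One shroud is active, collapses for 20 steps, and then a new shroud forms.
reset, gate = simulate_reset([1.0] * 100 + [0.0] * 20 + [1.0] * 100)
print(reset[100], reset[119], gate[120])
```

In this sketch, the reset signal is largest at the moment of collapse and weakens while the gate habituates. The gate then slowly recovers once a new shroud suppresses the reset cell.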