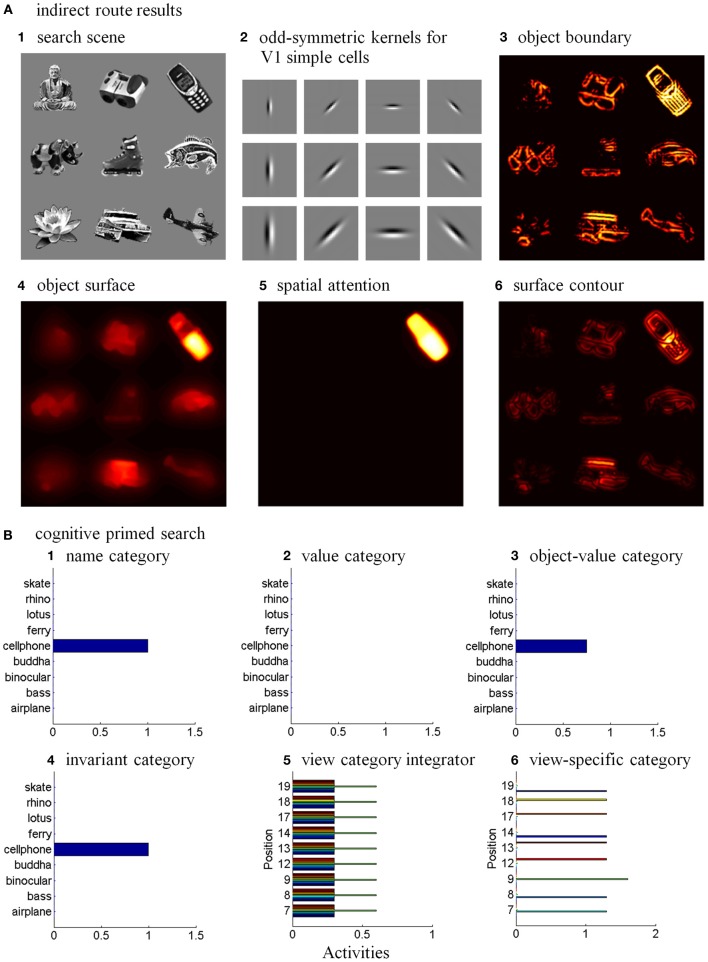

Figure 13.

Where’s Waldo cognitive primed search results. Search is based on positionally- and view-invariant object category learning of 24 objects, as illustrated in (A). In (B), a cognitive primed search is illustrated. (A) In the indirect route, the amplified view-specific category selectively primes the target boundary to make it stronger than other object boundaries in the search scene. (1) A typical input for the search task with the cellphone denoted as the Waldo target. (2) Odd-symmetric kernels for V1 polarity-sensitive oriented simple cells. The kernels have four orientations and three scales. (3) The boundary representation gates the filling-in process of the object surface stage. Priming from the cellphone’s view-specific category increases the contrast of its target surface (4). The enhanced cellphone surface representation competitively forms the cellphone’s attentional shroud (5) within the spatial attention map. This shroud draws spatial attention to the primed cellphone object. The hot spots on the cellphone’s enhanced surface contour (6) determine eye movements to salient features on the cellphone. (B) Cognitive primed search. The category representations in a top-down cognitive primed search are consistent with the interactions in Figures 6A,B. The bars represent category activities at the time when the view-specific category is selectively amplified through the matching process. (1) Name category. Only the cellphone category receives a cognitive priming signal. (2) Value category. The value category remains at rest because no reinforcement signals are received. (3) Object-value category. The object-value category corresponding to the cellphone is primed by the cellphone name category. The object-value category also receives a volitional signal (Figure 1B), which enables its top-down prime to activate suprathreshold output signals.
A volitional signal also reaches the invariant object category and view category integrator stages to enable them to also fire in response to their top-down primes, as now discussed: (4) Invariant object category. The cellphone invariant object category fires in response to its object-value category and volitional inputs. (5) View category integrator. The view category integrators corresponding to the cellphone also fire in response to their invariant object category and volitional inputs. Colored bars in each position index activations corresponding to the different objects. View category integrators at each position that learn to be associated with the cellphone’s invariant object category have enhanced representations. (6) View-specific category. The view-specific category at position 9 receives a top-down priming input from its view category integrator and a bottom-up input from the cellphone stimulus. It is thereby selectively amplified.
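The odd-symmetric kernel bank in panel (A2) can be illustrated with a minimal sketch. The caption states only that the kernels are odd-symmetric and span four orientations and three scales. Everything else here is an assumption made for illustration and may differ from the model's actual equations: the sine-phase Gabor form, the scale values in `SCALES`, the wavelength-to-sigma ratio, and the half-wave rectified `simple_cell_response`.

```python
import math

# Assumed values: the caption gives only the counts (four orientations, three scales).
ORIENTATIONS = [k * math.pi / 4 for k in range(4)]  # 0, 45, 90, 135 degrees
SCALES = [1.0, 2.0, 4.0]                            # hypothetical Gaussian sigmas


def odd_gabor_kernel(size, sigma, theta):
    """Return a size x size odd-symmetric (sine-phase) Gabor kernel as nested lists."""
    half = size // 2
    wavelength = 4.0 * sigma  # assumed ratio, not taken from the source
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Signed distance along the direction in which the kernel changes sign.
            u = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            row.append(envelope * math.sin(2.0 * math.pi * u / wavelength))
        kernel.append(row)
    return kernel


def build_kernel_bank():
    """Build one kernel per (scale index, orientation index) pair."""
    bank = {}
    for s_idx, sigma in enumerate(SCALES):
        size = 2 * int(math.ceil(3 * sigma)) + 1  # odd width covering about 3 sigma
        for o_idx, theta in enumerate(ORIENTATIONS):
            bank[(s_idx, o_idx)] = odd_gabor_kernel(size, sigma, theta)
    return bank


def simple_cell_response(patch, kernel):
    """Half-wave rectified filter output: positive for one contrast polarity only."""
    total = sum(k * p for k_row, p_row in zip(kernel, patch)
                for k, p in zip(k_row, p_row))
    return max(0.0, total)


bank = build_kernel_bank()
```

Because each kernel satisfies k(-x, -y) = -k(x, y), it sums to zero over a uniform region and changes sign when the contrast polarity of an edge reverses. Rectifying the output is what makes the sketched cell polarity-sensitive.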