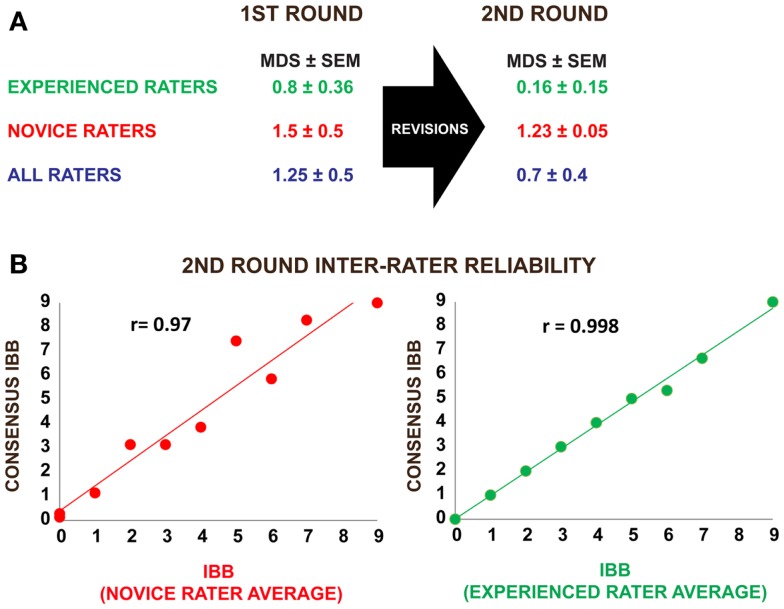

Figure 3.

Results of inter-rater reliability testing using a standardized set of rat behavioral videos, before and after revision of the IBB operational definitions and score sheet. (A) Three experienced raters and six novice raters participated in the first round of inter-rater reliability testing. Mean difference scores (MDS) from a “gold-standard” consensus score were calculated as described in the Methods. Following the score-sheet revisions, a second round of inter-rater reliability testing was performed by three experienced and seven novice raters. Note that both the MDS values and their standard errors (SE) were smaller after the revisions, indicating an increase in inter-rater reliability. (B) Pearson correlations between the mean IBB score and the consensus score show a high degree of agreement with consensus for both novice and experienced raters, providing strong evidence that the IBB has high inter-rater reliability that improves with practice.
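As an illustration of the quantities named in this caption, the following is a minimal sketch of how an MDS, its SE, and a Pearson correlation with the consensus score could be computed. It assumes that the MDS is the mean absolute difference between a rater's scores and the consensus scores across videos; the exact definition is given in the Methods and may differ. The function names and all score values are hypothetical and are not data from the study.

```python
import math
from statistics import mean, stdev


def mean_difference_score(rater_scores, consensus_scores):
    """Mean absolute difference from consensus (assumed MDS definition)."""
    diffs = [abs(r - c) for r, c in zip(rater_scores, consensus_scores)]
    return mean(diffs)


def standard_error(values):
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    return stdev(values) / math.sqrt(len(values))


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Hypothetical consensus scores for five videos (illustrative only).
    consensus = [0, 2, 4, 6, 9]
    # Hypothetical scores from three raters for the same five videos.
    raters = {
        "rater_1": [0, 3, 4, 6, 9],
        "rater_2": [1, 2, 5, 6, 8],
        "rater_3": [0, 2, 4, 7, 9],
    }

    # Panel (A): one MDS per rater, then the group mean and its SE.
    mds = [mean_difference_score(s, consensus) for s in raters.values()]
    print("group MDS:", mean(mds), "SE:", standard_error(mds))

    # Panel (B): correlate the mean score per video with the consensus.
    mean_per_video = [mean(col) for col in zip(*raters.values())]
    print("Pearson r:", pearson_r(mean_per_video, consensus))
```

Under this assumed definition, a lower group MDS with a smaller SE corresponds to raters who agree more closely with the consensus, and a Pearson r near 1 corresponds to mean scores that track the consensus across videos.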