Abstract

In this review we synthesize the existing literature demonstrating the dynamic interplay between conceptual knowledge and visual perceptual processing. We consider two theoretical frameworks that demonstrate interactions between processes and brain areas traditionally considered perceptual or conceptual. Specifically, we discuss categorical perception, in which visual objects are represented according to category membership, and highlight studies showing that category knowledge can penetrate early stages of visual analysis. We next discuss the embodied account of conceptual knowledge, which holds that concepts are instantiated in the same neural regions required for specific types of perception and action, and consider the limitations of this framework. We additionally review studies showing that acquiring abstract semantic knowledge about objects and faces leads to behavioral and electrophysiological changes indicative of more efficient stimulus processing. Finally, we consider the role that perceiver goals and motivation may play in shaping the interaction between conceptual and perceptual processing. We aim to show how pervasive interactions among motivation, conceptual knowledge, and perceptual processing are in our understanding of the visual environment, and to highlight the need for future research on how such interactions arise in the brain.

Keywords: semantic memory, conceptual knowledge, perception, visual processing

The penetrability of visual perception to influences from higher-order cognition has been the subject of great controversy in psychology over the past century. Proponents of the “New Look” movement, spanning the 1940s and 1950s, argued that motivational states influence perceptual decisions about the world. For example, it was shown that poor children overestimate the size of coins (Bruner & Goodman, 1947) and that hungry people overrate the brightness of images of food (Gilchrist & Nesberg, 1952). More recent work has shown that higher-order cognitive factors, such as learned stimulus prediction value (O’Brien & Raymond, 2012), cognitive reappraisal (Blechert, Sheppes, Di Tella, Williams, & Gross, 2012), and motivation (Radel & Clément-Guillotin, 2012), can exert top-down influences on the early stages of visual perception. Moreover, a large body of research on perceptual learning has shown that perceptual systems can be trained to act more efficiently in accordance with task demands, and that sensitivity to perceptual dimensions can be strategically tuned (for a review see Goldstone, Landy, & Brunel, 2011).

These findings are not without controversy. Other researchers have argued that perceptual processes are highly modular and impervious to influences from cognitive states (Pylyshyn, 1999; Riesenhuber & Poggio, 2000). Proponents of this view have attributed apparent instances of cognition influencing perception to post-perceptual decision processes or to pre-perceptual attention-allocation processes (Pylyshyn, 1999). Pylyshyn has specifically argued that early vision is cognitively impenetrable. However, theories of cognitive impenetrability have difficulty accounting for several findings, such as demonstrations that prior knowledge influences the perception of color (Levin & Banaji, 2006; Macpherson, 2012), which cannot easily be attributed to the influence of attention.

The goal of this review is to synthesize the existing literature demonstrating the dynamic interplay between conceptual knowledge and visual perceptual processing. The semantic memory, category learning, and visual discrimination literatures have remained relatively dissociated within psychology; however, object identification and categorization both involve comparing an incoming visual representation with some representation of stored knowledge. Thus, a full characterization of visual object understanding requires research into the nature of object representations, knowledge representations, and the processes that operate on these representations. A comprehensive account of the cognitive penetration of perceptual processes is beyond the scope of this review and has been addressed in other venues (Macpherson, 2012; Siegel, 2012; Stokes, 2012). Instead, we consider the bodies of literature that we believe best demonstrate interactions between processes and brain areas traditionally considered perceptual or conceptual, and highlight ways in which these bodies of research can be integrated to gain a fuller understanding of high-level vision. The fundamental questions we seek to address are: How are objects represented by the visual system? Where in the brain is object-related conceptual knowledge represented, and how does the activation of conceptual information about an object unfold? What are the consequences of accessing conceptual knowledge for perception and for perceptual decision-making about the visual world? In addressing these questions, we first consider the two major theoretical frameworks in which interactions between perceptual and conceptual processing systems have been studied: Categorical Perception and Embodied Cognition. We then discuss findings that are not easily assimilated within these frameworks.

Theoretical Foundations: Categorical Perception

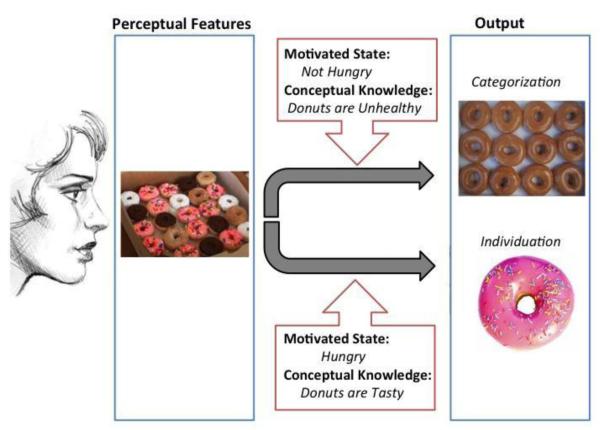

One of the most robust sources of evidence for conceptual-perceptual interactions comes from research on categorical perception. Categorical perception refers to our tendency to perceive the environment in terms of the categories we have formed, such that continuous perceptual changes are perceived as a series of discrete qualitative changes separated by category boundaries (Harnad, 1987). Thus, category knowledge is used to abstract over perceptual differences between objects from the same class and to highlight differences between objects from different classes (see Figure 1).

Figure 1.

Schematic illustration of how motivational state and conceptual knowledge can influence visual processing. The top portion is an illustration of categorical perception: the donuts are perceived in terms of their category membership, with individual differences between each donut being abstracted over. If the perceiver is motivated to individuate each of the donuts, then categorical perception will be overridden and the characteristics that distinguish each donut will be highlighted.

One example of categorical perception at work is the way in which we perceive a rainbow. Although a rainbow is composed of a continuous range of light frequencies, changing smoothly from top to bottom, we perceive seven distinct bands of color. Even in tightly controlled laboratory experiments that use psychophysically balanced color spaces, thereby controlling for low-level nonlinearities between colors, color perception is categorical. This categorical structure reflects higher-order conceptual representations (in this case, color category labels) shaping the output of color perception. Color is just one example of this phenomenon: categorical perception has been observed for various other stimuli, including faces and trained novel objects (Goldstone, Steyvers, & Rogosky, 2003; Goldstone, 1994; Goldstone, Lippa, & Shiffrin, 2001; Levin & Beale, 2000; Livingston, Andrews, & Harnad, 1998; Lupyan, Thompson-Schill, & Swingley, 2010; Newell & Bulthoff, 2002; Sigala, Gabbiani, & Logothetis, 2002).

Why would we want to see our environment categorically? Given the complexity of the visual environment and the variation in visual features among objects from the same category, categorical perception is a useful information-compression mechanism. It allows us to carve up the world into the categories that are relevant to our behavior, and thus to process the visual features relevant to these categories more efficiently. For example, when encountering a poisonous snake, it is more useful to quickly process snake-relevant features for fast categorization than to attend to the visual features that discriminate this snake from other snakes. Note that we are not arguing that individuals are incapable of perceiving within-category differences; indeed, individuals are sensitive to within-category phonetic differences (McMurray, Aslin, Tanenhaus, Spivey, & Subik, 2008), even though speech perception constitutes one of the strongest cases of categorical perception. Rather, we are arguing that within-category differences are attenuated relative to those distinguishing exemplars from different categories.
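To make the logic of within- versus between-category attenuation concrete, the following toy sketch (our own illustration, not a model proposed in any of the studies reviewed here) assumes that a physical stimulus continuum running from 0 to 1 is mapped onto an internal representation by a logistic function centered on a hypothetical category boundary at 0.5. The function names `perceived` and `perceived_difference`, the boundary location, and the steepness value are all illustrative assumptions.

```python
import math

def perceived(x, boundary=0.5, steepness=10.0):
    """Toy warped representation: logistic squashing of a 0-1 stimulus continuum.

    `boundary` is a hypothetical category boundary; `steepness` controls how
    sharply the representation changes near it. Both values are assumptions.
    """
    return 1.0 / (1.0 + math.exp(-steepness * (x - boundary)))

def perceived_difference(a, b, **kwargs):
    """Distance between two stimuli in the warped (toy) representational space."""
    return abs(perceived(a, **kwargs) - perceived(b, **kwargs))

# Two pairs separated by the same physical distance (0.2 units):
within = perceived_difference(0.1, 0.3)   # both fall on the same side of the boundary
between = perceived_difference(0.4, 0.6)  # the pair straddles the boundary at 0.5
print(f"within-category: {within:.3f}, between-category: {between:.3f}")
```

With these assumed parameters, the pair straddling the boundary yields a perceived difference several times larger than the within-category pair, even though both pairs are physically equidistant. The within-category difference is attenuated but not eliminated, in line with the point that perceivers remain sensitive to within-category variation.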

Conceptual influences on perceptual decision-making can operate by modifying perception, attention, or decision processes, and recent research has aimed to determine when during visual stimulus processing categorical perception effects emerge. Behavioral studies have suggested that categorical perception modifies the discriminability of category-relevant features for faces (Goldstone et al., 2001) and for oriented lines (Notman, Sowden, & Ozgen, 2005) through a process of perceptual learning. These findings suggest an early, perceptual locus for categorical perception effects, in which category learning modifies perceptual representations of learned objects. Electrophysiological research has supported these findings, with category differences reflected in early markers of pre-attentive visual processing originating in visual cortex, including the N1 and P1 components (Holmes, Franklin, Clifford, & Davies, 2009), as well as the visual mismatch negativity (vMMN) component (Clifford, Holmes, Davies, & Franklin, 2010; Mo, Xu, Kay, & Tan, 2011).

Is Categorical Perception Verbally Mediated?

Language is often used to convey categorical knowledge, and ample research has shown that language and conceptual knowledge interact (Casasola, 2005; Gentner & Goldin-Meadow, 2003; Gumperz & Levinson, 1996; Levinson, 1997; Lupyan, Rakison, & McClelland, 2007; Snedeker & Gleitman, 2004; Spelke, 2003; Waxman & Markow, 1995; Yoshida & Smith, 2005). Indeed, recent findings demonstrate that even non-informative, redundant labels can influence visual processing in striking ways (Lupyan & Spivey, 2010; Lupyan & Thompson-Schill, 2012). Lupyan and Thompson-Schill (2012) found that performance on an orientation discrimination task was facilitated when the image was preceded by the auditory presentation of a verbal label, but not by a sound that was equally associated with the object. For instance, participants more quickly and accurately indicated which side of a display contained an upright cow following the spoken word “cow” but not following an auditory “moo”. The authors also found that the priming effect of labels was greater for objects rated as typical, which presumably have a stronger relationship with the category’s conceptual representation (Lupyan & Spivey, 2010; Lupyan & Thompson-Schill, 2012). These findings suggest that labels may have a special status in their ability to influence visual processing. Because category membership is typically demarcated by the presence of a label, it has been difficult to dissociate the influence of categorical relatedness from that of shared verbal labels. An important question, then, is how critical verbal labels are in modulating the influence of conceptual knowledge on perception.

Several studies have suggested that object-to-label mapping may be necessary for categorical perception to occur. Research has shown that occupying verbal working memory interferes with the categorical perception of color patches (Roberson & Davidoff, 2000), and that this interference effect is consistent across languages (Winawer et al., 2007). Furthermore, categorical perception for faces emerges only when those faces are familiar or associated with names (Angeli, Davidoff, & Valentine, 2008; Kikutani, Roberson, & Hanley, 2008). It has also been shown that the categorical perception of color is strongest when stimuli are presented in the right visual field, and thus projected to left-hemisphere language areas (Gilbert, Regier, Kay, & Ivry, 2006; Roberson, Pak, & Hanley, 2008), and that these effects are contingent upon the acquisition of color labels, whether in childhood (Franklin, Drivonikou, Bevis, et al., 2008; Franklin, Drivonikou, Clifford, et al., 2008) or in adulthood (Zhou et al., 2010).

The preceding findings raise an interesting paradox: how can categorical perception be both robust, in that it alters early perceptual processing (Holmes et al., 2009) and warps perceptual representations (Goldstone, 1994; Goldstone et al., 2001), and fragile, in that it can be mitigated by manipulations of verbal working memory (Roberson & Davidoff, 2000) and can appear after small amounts of training (Zhou et al., 2010)? Lupyan (2012) has proposed the label-feedback hypothesis as a potential resolution of this paradox. He argues that the distinction between verbal and nonverbal processes should be replaced by a view in which language acts as a modulator of a distributed and interactive process. According to this hypothesis, category-diagnostic perceptual features may automatically trigger the activation of labels, which then feed back to dynamically amplify the category-diagnostic features that were activated by the label (Lupyan, 2012). Such a mechanism would enable perceptual representations to be modulated quickly and transiently, and would presumably be up- or down-regulated by linguistic manipulations that modify the availability of labels, such as verbal working memory load.

It should be noted that a recent study demonstrated that categorical perception effects can emerge for non-linguistic categories and, interestingly, that these effects were stronger in the left hemisphere, as is typical for categories with verbal labels (Holmes & Wolff, 2012). These findings suggest that the frequently observed left lateralization of categorical perception may not be due to the recruitment of language processing areas per se, but rather to a left-hemisphere propensity for category-level perceptual discriminations (Marsolek & Burgund, 2008; Marsolek, 1999). Alternatively, participants may automatically label categories during training, even in the absence of explicit instructions, and these implicitly formed labels may be recruited during subsequent visual processing. The latter possibility seems more consistent with work demonstrating that verbal interference attenuates categorical perception effects; however, future work applying verbal interference paradigms during training could help distinguish between these two possibilities. To gain further traction on the contribution of verbal labels to categorical perception, researchers could use non-invasive brain stimulation techniques, such as transcranial magnetic stimulation (TMS), to create a temporary lesion in language areas such as Wernicke’s and Broca’s areas. If categorical perception relies on the recruitment of language processing resources, one would expect that decreasing activity in a region such as Wernicke’s area would diminish categorical perception.

How is Categorical Perception Instantiated in the Brain?

As discussed in the preceding section, behavioral research has suggested that categorical perception can operate by modifying perceptual representations such that dimensions relevant to category membership are sensitized (Goldstone et al., 2001). If this is true, one would expect to see category-specific perceptual enhancements within inferior temporal (IT) cortex, where object processing takes place (Grill-Spector, 2003; Mishkin, Ungerleider, & Macko, 1983). Interestingly, it has been shown that category learning for shapes can make monkey IT neurons more sensitive to variations along a category-relevant dimension than to variations along a category-irrelevant dimension (De Baene, Ons, Wagemans, & Vogels, 2008). In this study, monkeys learned to categorize novel objects into 4 sets of 16 objects each based on either the curvature or the aspect ratio of the shapes. Importantly, the authors controlled for pre-training stimulus selectivity by counterbalancing the dimension relevant for categorization across animals and by recording neuronal sensitivity to the objects before and after learning. The effect of category learning was small, however, with only 55% of the recorded neurons demonstrating the effect.

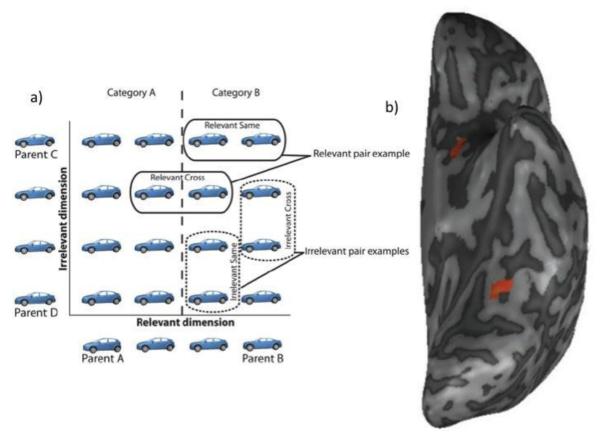

In humans, fMRI adaptation paradigms have been used to probe whether category learning can similarly alter object representations in visual and IT cortex. fMRI adaptation refers to the reduction in the BOLD response that is observed when a population of neurons is stimulated twice, such as when two identical objects are presented in succession (Grill-Spector, Henson, & Martin, 2006). The more similar two visual stimuli are, the more the BOLD response to the second stimulus is reduced (Grill-Spector & Malach, 2001), making fMRI adaptation a useful tool for probing the dimensions along which neural populations gauge similarity. Using this technique, Folstein and colleagues found that category learning increased the discriminability of neural representations along category-relevant dimensions in the ventral visual processing stream (Folstein, Palmeri, & Gauthier, 2012). Participants learned to categorize morphed car stimuli into two categories based on their resemblance to two parent cars (see Figure 2). After category learning, fMRI adaptation was assessed during a location-matching task for which category information was irrelevant. Adaptation was reduced along object dimensions relevant to categorization within object-selective cortex of the mid-fusiform gyrus, suggesting that neurons in this area had become more sensitive to perceptual variations relevant to the learned categories.

Figure 2.

Folstein and colleagues trained participants to categorize morphed car stimuli into two categories based on their resemblance to two parent cars. Later, participants performed an orthogonal task on these stimuli while being scanned. fMRI adaptation was reduced along object dimensions relevant to categorization within object-selective cortex of the mid-fusiform gyrus, suggesting that neurons in this area had become more sensitive to perceptual variations relevant to the learned categories. The image in (b) depicts a whole-brain comparison of all relevant stimulus pairs compared with all irrelevant stimulus pairs. Adapted from Folstein, J. R., Palmeri, T. J., & Gauthier, I. (2012). Category learning increases discriminability of relevant object dimensions in visual cortex. Cerebral Cortex.
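The inferential logic of fMRI adaptation can be illustrated with a deliberately simplified simulation. The sketch below is a hypothetical toy model, not the analysis used by Folstein and colleagues: it assumes that the response to the second of two stimuli is suppressed in proportion to their similarity, and that similarity decays with a weighted distance in a two-dimensional stimulus space. Category learning is modeled, purely by assumption, as an increase in the weight on the category-relevant dimension; the function names, weights, and suppression parameter are all illustrative.

```python
import math

def similarity(a, b, weights):
    """Toy similarity: exponential decay with weighted Euclidean distance."""
    dist = math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))
    return math.exp(-dist)

def second_response(a, b, weights, baseline=1.0, max_adaptation=0.5):
    """Toy response to the second stimulus: more similar pairs are more suppressed."""
    return baseline * (1.0 - max_adaptation * similarity(a, b, weights))

first = (0.0, 0.0)
relevant_change = (0.3, 0.0)    # differs only on dimension 0 (category-relevant)
irrelevant_change = (0.0, 0.3)  # differs only on dimension 1 (category-irrelevant)

before = (1.0, 1.0)  # assumed: equal sensitivity to both dimensions before training
after = (4.0, 1.0)   # assumed: training up-weights the category-relevant dimension

for label, weights in (("before", before), ("after", after)):
    rel = second_response(first, relevant_change, weights)
    irr = second_response(first, irrelevant_change, weights)
    print(f"{label}: relevant={rel:.3f}, irrelevant={irr:.3f}")
```

Before the assumed reweighting, both pairs produce identical adaptation. Afterward, the pair differing along the relevant dimension yields a larger response to the second stimulus (reduced adaptation), which is the pattern Folstein and colleagues interpreted as increased neural discriminability along that dimension.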

Other studies that failed to find similar enhancements of relevant dimensions in visual cortex after category learning (Gillebert, Op de Beeck, Panis, & Wagemans, 2008; Jiang et al., 2007; van der Linden, van Turennout, & Indefrey, 2009) either did not test for behavioral effects of category learning (Gillebert et al., 2008; van der Linden et al., 2009) or failed to find such behavioral effects (Jiang et al., 2007), leaving open the possibility that their training manipulations were not sufficient to engender the changes to object representations that are necessary for categorical perception to occur. This may be partially attributable to the stimuli used in these studies, which were created using a blended rather than a factorial morph-space, as used by Folstein and colleagues (2012). Essentially, a factorial morph-space allows participants to make category distinctions on the basis of one dimension (although this dimension may be complex, as is the case with morphed car or face stimuli), whereas with a blended morph-space category decisions are made on the basis of four dimensions (for an illustration of the distinction between blended and factorial morph-spaces, see Folstein, Gauthier, & Palmeri, 2012). Thus, category learning may change object representations only when a single perceptual dimension can be used to infer category membership.

There is some evidence that category learning can lead to long-term structural changes in the perceptual system (Kwok et al., 2011). In this study, participants learned subcategory shades of green and blue and associated these subcategories with meaningless names over a 2-hour training period. Training resulted in an increase in gray matter volume in area V2/V3 of the left visual cortex, suggesting that the frequently observed left-lateralized categorical perception of color may be due to structural changes in early visual cortex. Such findings also seem to contradict the flexible nature of categorical perception, which can be up- or down-regulated by linguistic manipulations. It is important to note that the authors did not include a control group without category training, and thus did not control for factors that could have artificially inflated the structural differences seen between the two scanning sessions, such as scanner drift. Additionally, structural analysis of visual cortex was performed only once, immediately after training, so it is unclear whether the observed effects truly reflect long-term structural changes or were more transient in nature. Some researchers have recently called into question the validity of studies reporting that structural changes in gray matter density can be induced in adults by training paradigms (Thomas & Baker, 2012), and thus the results of Kwok and colleagues should be interpreted with caution. This is not to say that discrimination training with colors cannot lead to long-term structural changes in early visual cortex; indeed, developmental research suggests that structural plasticity during childhood shapes our visual and auditory perception of the environment (Wiesel & Hubel, 1965). Rather, much more research is needed before we accept findings demonstrating training-induced structural plasticity in adults.

Categorical Perception: Summary and Conclusions

Categorical perception is a pervasive demonstration of the dynamic interplay between conceptual category knowledge and perceptual processes. Behavioral, electrophysiological, and neuroimaging work has suggested that category knowledge can penetrate the early stages of visual analysis, and engender changes to object representations. Additional work has suggested that these effects may be highly reliant on the recruitment of left-hemisphere language processing resources, which allow labels to modify perceptual representations according to category distinctions automatically and online, giving rise to categorical perception effects that are both flexible and robust.

Neuroimaging work has shown that categorical perception may arise through the restructuring of perceptual representations within the ventral visual processing stream, but only when the stimulus classes used allow participants to attend to a single dimension for categorical distinctions (Folstein, Gauthier et al., 2012). Given the paucity of studies addressing the neural underpinnings of categorical perception for visual stimuli, it will be important for additional work to delineate the types and amounts of training sufficient for category knowledge to alter perceptual representations, and the constraints that variations in stimulus complexity may place on category learning.

Theoretical Foundations: Embodied Cognition

When considering how conceptual knowledge should affect the neural processing of visual stimuli, it is important to contemplate predictions made by current theories about semantic representation in the brain. One of the most successful neural-based accounts of semantic memory is the embodied account, which holds that concepts are instantiated in the same neural regions required for specific types of perception and action (Barsalou, Simmons, Barbey, & Wilson, 2003; Barsalou, 1999; Goldberg, Perfetti, & Schneider, 2006; Martin, 2007). This hypothesis has been supported by behavioral work showing that perceptual and conceptual representations utilize shared resources (for a review see Barsalou, 2008).

Most of the research supporting the embodied cognition hypothesis has come from studies showing that motion perception and the comprehension of motion-related language interact. Response times for semantic judgments about sentences containing motion-related words are influenced by the motion used to make a response (i.e., whether it is congruent or incongruent with the implied motion) and by simultaneously viewing a rotating cross turning in a motion-congruent or incongruent direction (Zwaan & Taylor, 2006). However, the degree of temporal overlap and integrability determines whether concurrent motion perception interferes with, or facilitates, motion-language comprehension. When visually salient, attention-grabbing stimuli are used, motion perception can impair the comprehension of verbs implying motion in a congruent direction (Kaschak, Madden, & Therriault, 2005), whereas the inverse is true for stimuli that are non-salient and easily integrated with the context of the sentence (Zwaan & Taylor, 2006). This dependence of the effect on the visual saliency of concurrent motion has been supported by a study of lexical decisions for motion-related words (Meteyard, Zokaei, Bahrami, & Vigliocco, 2008). When participants simultaneously viewed moving dots presented above the threshold for conscious awareness, their lexical decisions for motion-related words were less accurate regardless of congruence; when the dots were presented just below threshold, however, participants were slower to respond to motion-incongruent words relative to congruent or non-motion-related words.

Additional studies have shown that the perceptual processing of motion-related stimuli can be influenced by the conceptual processing of motion-related words. For example, participants more quickly identified shapes presented along the vertical axis of a screen when these were preceded by verbs implying horizontal, relative to vertical, motion (Richardson, 2003). The author also found that participants responded more quickly to pictures in a vertical orientation that they had previously seen paired with a sentence associated with vertical (e.g., “the girl hoped for a horse”), relative to horizontal (e.g., “the girl rushes to school”), motion, suggesting that abstract reference to motion can influence perceptual processing. Furthermore, it has been shown that the early stages of motion perception are penetrable to the semantic processing of motion: perceptual sensitivity (measured by d’) for motion detection is impaired when participants simultaneously process motion-related words implying an incongruent direction (Meteyard, Bahrami, & Vigliocco, 2007).
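Because sensitivity in studies such as Meteyard et al. (2007) is expressed as d’, it may help to recall how this signal detection measure is computed: d’ is the difference between the z-transformed hit rate and the z-transformed false-alarm rate, which, under the equal-variance Gaussian model, separates perceptual sensitivity from response bias. The sketch below uses Python’s standard-library `statistics.NormalDist`. The log-linear correction (adding 0.5 to each count and 1 to each trial total), which keeps rates of exactly 0 or 1 from producing infinite z-scores, is one common convention and an assumption on our part, not necessarily the procedure used in that study. The function name `d_prime` and the counts below are hypothetical, invented purely for illustration.

```python
from statistics import NormalDist

def d_prime(hits, signal_trials, false_alarms, noise_trials):
    """Signal detection sensitivity: d' = z(hit rate) - z(false-alarm rate).

    Applies a log-linear correction (an assumption; other corrections exist)
    so that hit or false-alarm rates of exactly 0 or 1 remain finite.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (signal_trials + 1)
    fa_rate = (false_alarms + 0.5) / (noise_trials + 1)
    return z(hit_rate) - z(fa_rate)

# Hypothetical counts, invented for illustration (not data from any study):
congruent = d_prime(hits=80, signal_trials=100, false_alarms=20, noise_trials=100)
incongruent = d_prime(hits=70, signal_trials=100, false_alarms=25, noise_trials=100)
print(f"d' congruent: {congruent:.2f}, incongruent: {incongruent:.2f}")
```

A lower d’ in the incongruent condition, as in these invented numbers, would indicate reduced ability to discriminate motion from noise rather than a mere shift in willingness to respond “motion present”.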

How is Embodied Cognition Instantiated in the Brain?

The strongest support for the embodied account has come from neuroimaging research showing that sensory and motor brain areas are recruited when performing semantic tasks that involve a sensory or motor modality (Barsalou, 2008). For example, the retrieval of tactile information is associated with activation of somatosensory, motor, and premotor brain areas (Goldberg, Perfetti, & Schneider, 2006; Oliver, Geiger, Lewandowski, & Thompson-Schill, 2009). Additionally, the retrieval of color knowledge is associated with activation of brain areas involved in color perception, namely the left fusiform gyrus (Hsu, Frankland, & Thompson-Schill, 2012; Simmons et al., 2007) and the left lingual gyrus (Hsu et al., 2012). TMS research has further implicated sensory-motor brain areas as playing a causal role in conceptual processing by showing that stimulation of motor cortex can facilitate the processing of motion-related words (Pulvermüller, Hauk, & Nikulin, 2005; Willems, Labruna, D’Esposito, Ivry, & Casasanto, 2011). When repetitive TMS (rTMS) is used to induce a temporary lesion in primary motor cortex (M1), processing of motion-related words is impaired (Lo Gerfo et al., 2008), and these effects are specific to hand-related words when rTMS is directed to the hand area of M1 (Repetto, Colombo, Cipresso, & Riva, 2013).

The above-mentioned work suggests that perceptually grounded conceptual knowledge is recruited automatically during stimulus processing; however, it remains unclear whether this knowledge constitutes part of the object representation itself or instead reflects downstream activation of embodied semantic representations once identification has taken place. It has been shown that tools elicit greater activity in motor and premotor cortex than non-manipulable objects during passive viewing, suggesting that these embodied representations may be engaged even in the absence of semantic processing demands (Chao, Haxby, & Martin, 1999). Furthermore, Kiefer and colleagues found that the posterior superior temporal gyrus (pSTG) and middle temporal gyrus (MTG), both of which were activated during the perception of real sounds, were also activated quickly (within 150 ms) and automatically by the visual presentation of names of objects for which acoustic features are diagnostic (e.g., “telephone”), and that this activation increased linearly with the relevance of auditory features to the object concept (Kiefer, Sim, Herrnberger, Grothe, & Hoenig, 2008). The authors argued that the early latency of auditory-related activity precludes an explanation based on post-perceptual imagery and suggests that activity in auditory cortex partly constitutes the object concepts themselves (however, for evidence that the pSTG and MTG may play a role in object naming, see Acheson, Hamidi, Binder, & Postle, 2011).

Recent findings suggest that the action-related features of tools, stored in motor and premotor areas of the brain, may contribute to the restructuring of perceptual representations within the medial fusiform gyrus (Mahon et al., 2007), a part of the ventral stream that has been implicated in the processing of manipulable objects (Beauchamp, Lee, Haxby, & Martin, 2002, 2003; Chao et al., 1999; Chao, Weisberg, & Martin, 2002; Noppeney, Price, Penny, & Friston, 2006). Using fMRI repetition suppression, Mahon and colleagues found that neurons in the medial fusiform gyrus exhibited neural specificity for tools, but not for arbitrarily manipulable objects (such as books or envelopes) or non-manipulable large objects. Stimulus-specific repetition suppression in dorsal motor areas was similarly restricted to tools, and was functionally related to the neural specificity for tools in the ventral stream. Similar effects have been found for novel objects after extensive training in using those objects for tool-like tasks (Weisberg, Van Turennout, & Martin, 2007). These results raise the question of what types of experience are sufficient and/or necessary for an object to be represented as a tool in the ventral stream. A compelling area for future research would be to use fMRI repetition suppression or multivoxel pattern analysis (MVPA) to examine whether, and if so how, different types of learning and experience engender stimulus-specific changes to perceptual representations within visual cortex. Taken together, the results of Mahon et al. (2007) and Weisberg et al. (2007) suggest that associating function-related motor experience with objects increases neural specificity for those objects within the ventral visual stream; however, it remains unclear to what extent direct motor experience is necessary for such changes in neural tuning to arise.

Is direct sensory or motor experience necessary for embodied conceptual representations to emerge? One study tested this by having participants learn associations between novel objects and words describing features of these stimuli, such as being “loud” (James & Gauthier, 2003). Subsequent fMRI scanning showed that a portion of auditory cortex, the superior temporal gyrus, was activated for objects associated with sound descriptors, and that a region near motion-sensitive cortex (MT/V5), the posterior superior temporal sulcus, was activated for objects associated with motion descriptors. These findings suggest that knowledge about an object’s sensory features derived through abstract semantic learning can engage neural mechanisms similar to those engaged by sensory knowledge acquired through direct experience. In other words, being told that an object is loud and hearing a loud object may influence subsequent identification by engaging similar neural processing regions.

Arguments Against Embodied Cognition

The crux of the embodied account of conceptual knowledge is that concepts are embodied, or instantiated, in the same neural regions required for specific types of perception and action. This idea has been largely supported by behavioral and neuroimaging findings demonstrating that sensory and motor features of concepts are activated quickly and automatically, and that motor-relevant properties of objects can shape perceptual representations formed in ventral visual cortex. These findings, however, do not provide unequivocal evidence that embodied representations are necessary for conceptual understanding. Opponents of the embodied cognition hypothesis have argued that the behavioral influences of perceptual processing on conceptual processing that have been cited in support of embodiment (Kaschak et al., 2005; Pecher, Zeelenberg, & Barsalou, 2003, 2004; van Dantzig, Pecher, Zeelenberg, & Barsalou, 2008; Zwaan & Taylor, 2006) may arise at the level of response selection, rather than playing a necessary role in conceptual understanding (Mahon & Caramazza, 2008). Similarly, the neuroimaging findings cited in support of embodiment are also consistent with theories that allow activation to spread from disembodied conceptual representations to the sensory and motor systems that guide behavior (Chatterjee, 2011; Mahon & Caramazza, 2008). Sensory-motor areas may be activated because they are necessary for conceptual processing, or, alternatively, their activation may reflect a spread of activation from amodal areas (Mahon & Caramazza, 2008). This interpretation of the relevant neuroimaging work is consistent with a recent study showing that activity in an amodal association area (the left inferior frontal gyrus, IFG) correlated with behavioral performance on a semantic property verification task (Smith et al., 2012).
TMS research has offered the most compelling evidence in favor of the embodied cognition hypothesis, but even these findings are subject to criticism because TMS effects can be distributed away from the stimulated site via cerebrospinal fluid (Wagner, Valero-Cabre, & Pascual-Leone, 2007), potentially influencing brain activity in functionally connected regions.

Moreover, neuropsychological findings are largely inconsistent with embodied accounts. For instance, patients with focal lesions causing apraxia, a deficit in using objects, retain conceptual knowledge of object names and of how objects should be used (Johnson-Frey, 2004; Mahon & Caramazza, 2005; Negri et al., 2007). Many other lesion studies that appear to support embodied accounts lack the anatomical specificity needed to support strong versions of embodiment. For example, one study showed that patients with damage to frontal motor areas had a lexical decision impairment for action-related words (Neininger & Pulvermüller, 2003); however, most of these patients also had extensive damage to the parietal and temporal cortices, so their semantic deficit could not be attributed to the frontal damage per se. Furthermore, a recent study of a large cohort of individuals with left hemisphere lesions to sensorimotor areas found no relationship between the site of the cortical lesion and conceptual processing of motor verbs (Arévalo, Baldo, & Dronkers, 2012). Additional large-cohort lesion studies of this kind could shed light on the roles of other sensory processing areas in object cognition and perception.

Embodied Cognition: Summary and Conclusions

The current body of literature does not provide strong evidence that embodiment is necessary for semantic understanding. Moving forward, it is probably more useful to ask to what degree concepts are embodied (Hauk & Tschentscher, 2013), under what circumstances they are embodied, and how this varies between individuals. The degree to which concepts are embodied has been shown to vary from person to person depending on object-related experience (Beilock, Lyons, Mattarella-Micke, Nusbaum, & Small, 2008; Calvo-Merino, Glaser, Grèzes, Passingham, & Haggard, 2005; Hoenig et al., 2011). Thus, future work should aim to understand the mechanisms through which individual differences in embodied cognition emerge, and how these embodied representations contribute to cognition. Additionally, conceptual processing may engage embodied representations to different extents depending on the task at hand. One possibility is that perceptual and motor areas mediate visual imagery, which may be needed to verify complex perceptual properties that are not immediately accessible to the observer, but not simple perceptual characteristics that are strongly associated with a concept (Thompson-Schill, 2003). Finally, much current neuroimaging work aims to understand the dynamic interactions between distributed brain networks that give rise to cognition and perception. fMRI methods that assess functional and effective connectivity between brain areas will likely contribute to our understanding of how sensory-motor areas interact with amodal association areas to give rise to semantic understanding (Valdés-Sosa et al., 2011).

Semantic Knowledge Affects the Visual Processing of Objects and Faces

In the preceding sections we discussed the two major frameworks in which interactions between conceptual and perceptual processing systems have been studied. However, these frameworks offer little insight into how conceptual knowledge that is unrelated to the sensory properties of a stimulus affects its subsequent processing. For example, we frequently acquire emotionally laden information about the people around us (that they are silly, curious, lazy, or extraverted), and this information can bias perceptual processing (Anderson, Siegel, Bliss-Moreau, & Barrett, 2011).

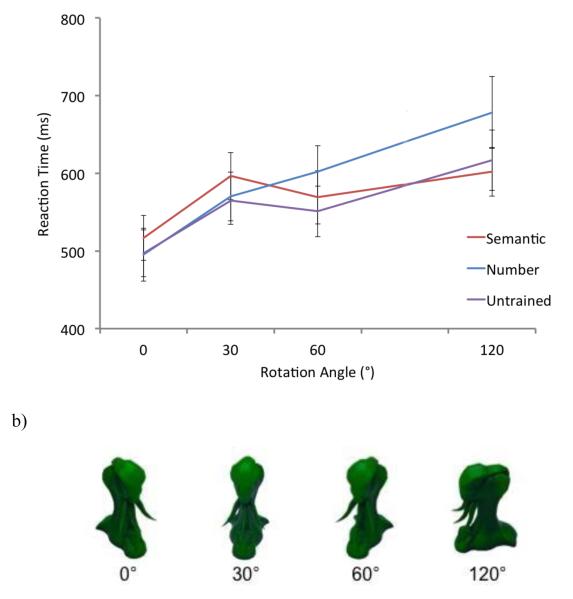

In the laboratory, this issue is studied by training participants to associate semantic features with previously unfamiliar stimuli. For example, associating meaningful verbal labels with perceptually novel stimuli has been shown to improve visual search efficiency (Lupyan & Spivey, 2008); however, this benefit appears only when participants adopt a passive search strategy (Smilek, Dixon, & Merikle, 2006). Three additional behavioral studies have used training paradigms in which participants learned to associate in-depth semantic knowledge with novel visual objects, in order to identify conceptual influences on visual processing that occur independently of visual object features and familiarity. Findings from this literature show that conceptual knowledge can facilitate the recognition of novel objects and attenuate the viewpoint dependency of object recognition (Collins & Curby, 2013; Curby, Hayward, & Gauthier, 2004; Gauthier, James, Curby, & Tarr, 2003). In these studies, participants learned to associate clusters of three semantic features with each of four novel objects (see Figure 3). These stimuli later appeared in a perceptual matching task in which participants indicated whether two sequentially presented stimuli were the same or different. The first object was always presented in its canonical orientation, whereas the second object could be presented at one of four viewpoints (0°, 30°, 60°, or 120°). Gauthier and colleagues found that the discrimination of novel objects was facilitated across all viewpoints when these objects were associated with a cluster of distinctive (non-overlapping) semantic features (Gauthier et al., 2003). Furthermore, Curby and colleagues found that the viewpoint dependency of object recognition was attenuated for objects that had been associated with in-depth semantic knowledge (Curby et al., 2004), but not for objects associated with non-semantic verbal labels (Collins & Curby, 2013).
These findings indicate that changes in visual recognition performance can be attributed to conceptual attributes, and are consistent with findings that learning to associate in-depth semantic knowledge with novel objects reduced the recognition deficits of a patient with category-specific visual agnosia (CSVA) to near-normal levels (Arguin, Bub, & Dudek, 1996). Similar results have been obtained for face recognition in a patient with prosopagnosia (Dixon, Bub, & Arguin, 1998).

Figure 3.

Participants learned to associate semantic features or number words with a set of novel objects (yufos). Afterwards, participants performed a speeded sequential matching task with the trained and untrained yufos. The first yufo was always presented in its canonical orientation (0°), whereas the second yufo could be presented at one of four viewpoints (0°, 30°, 60°, or 120°). (a) Lines represent mean reaction times for perceptual matching of yufos in the untrained, number, and semantic learning conditions at each orientation. Error bars represent the standard error of the mean. (b) The yufo stimuli used by Collins & Curby (2013).
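Viewpoint dependency of the kind plotted in Figure 3a can be summarized as the slope of matching reaction time against rotation angle: the steeper the slope, the more recognition depends on viewpoint, so "attenuated viewpoint dependency" corresponds to a shallower slope. The sketch below assumes per-condition mean RTs at each of the four viewpoints; the function name and the RT values in the usage block are hypothetical and are not data from the studies discussed above.

```python
ANGLES_DEG = (0, 30, 60, 120)  # viewpoints of the second object in the matching task


def rt_slope(angles_deg, mean_rts_ms):
    """Ordinary least-squares slope of mean RT (ms) on rotation angle (deg).

    Returns ms per degree; a smaller slope indicates weaker viewpoint dependency.
    """
    n = len(angles_deg)
    if n != len(mean_rts_ms) or n < 2:
        raise ValueError("need matching angle and RT lists with at least two points")
    mean_x = sum(angles_deg) / n
    mean_y = sum(mean_rts_ms) / n
    sxx = sum((x - mean_x) ** 2 for x in angles_deg)
    if sxx == 0:
        raise ValueError("angles must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(angles_deg, mean_rts_ms))
    return sxy / sxx


if __name__ == "__main__":
    # Hypothetical condition means (ms), for illustration only.
    untrained = [520, 560, 600, 680]
    semantic = [500, 515, 530, 560]
    print(f"untrained: {rt_slope(ANGLES_DEG, untrained):.2f} ms/deg")
    print(f"semantic:  {rt_slope(ANGLES_DEG, semantic):.2f} ms/deg")
```

A linear fit is a simplification; RT-by-angle functions need not be linear, so comparing condition-by-angle interactions directly is a common alternative.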

What remains unclear is when, during perceptual decision-making tasks, such influences of in-depth semantic knowledge occur. In the perceptual matching task used in the three studies discussed above, the first object was presented for 1500 ms, followed immediately by a second object for 180 ms. Semantic knowledge could have improved perceptual matching performance by facilitating the consolidation of the first stimulus into a durable representation for perceptual comparison, by enabling participants to more efficiently process features of the second stimulus that were diagnostic across changes of viewpoint, or by facilitating the integration of the visual features of the second object into a durable form for comparison. These possibilities are by no means mutually exclusive, and semantic knowledge likely contributes to visual object processing through multiple mechanisms. Because electrophysiological measures have high temporal resolution, they are useful for elucidating when during stimulus processing such influences of in-depth semantic knowledge emerge.

When does Semantic Knowledge Influence Perception? Insights from Electrophysiological Studies

Because of the N170’s role in the holistic processing of faces (Sagiv & Bentin, 2001), recent research has examined how familiarity affects this component. The N170 is a negative-going component that is larger for faces than for other objects over lateral occipital electrode sites, peaking at approximately 170 ms (Sagiv & Bentin, 2001). Because the N170 is sensitive to orientation manipulations (i.e., turning a stimulus upside down), it has been related to the structural encoding of stimuli (i.e., recognition that is driven by the configuration of a stimulus’s parts), as well as to the initial categorization of faces (Itier & Taylor, 2002; Sagiv & Bentin, 2001). Although some studies have revealed modulations of the N170 by face familiarity (Caharel et al., 2002; Heisz & Shedden, 2009; Herzmann, Schweinberger, Sommer, & Jentzsch, 2004; Jemel, Pisani, Calabria, Crommelinck, & Bruyer, 2003), others have not (Bentin & Deouell, 2000; Eimer, 2000; Schweinberger, Pickering, Burton, & Kaufmann, 2002), suggesting that influences of familiarity on the structural encoding of faces are tenuous at best. Studies that have used training procedures to assess the independent contribution of conceptual knowledge to the N170 response, while controlling for visual familiarity, are also inconsistent. Two studies have shown that faces associated with in-depth biographical information elicit larger N170 components than faces without such information (Galli, Feurra, & Viggiano, 2006; Herzmann & Sommer, 2010). However, two studies that used similar training procedures found N170 responses of similar amplitude to faces with and without learned associations (Kaufmann & Schweinberger, 2008; Paller, Gonsalves, Grabowecky, Bozic, & Yamada, 2000).

Further insight into the contribution of conceptual knowledge to the structural encoding of faces can be gained through studies of the N170 repetition effect. The N170 repetition effect is thought to reflect the identification of a stimulus based on its perceptual features, and several studies have shown that it is restricted to unfamiliar faces (Caharel et al., 2002; Henson et al., 2003). In two studies (Heisz & Shedden, 2009; Herzmann & Sommer, 2010), faces that were associated with in-depth social knowledge elicited a reduced N170 repetition effect relative to faces learned without such associations. These results suggest that conceptual knowledge modulates the perceptual processing of faces, as reflected by the N170 repetition effect, possibly by allowing semantic representations to contribute to face identification and thus reducing its perceptual demands.
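An N170 repetition effect can be quantified by comparing the component's amplitude on the first and the immediately repeated presentation of a face. The sketch below assumes baseline-corrected, trial-averaged waveforms for each condition at a single electrode; the 150–190 ms window and the function names are illustrative assumptions, not the parameters used in the studies cited above. Because the N170 is negative-going, a positive difference means the component shrank on repetition, and a reduced repetition effect for faces learned with social knowledge would appear as a smaller difference.

```python
def mean_amplitude(times_ms, voltages_uv, start_ms, end_ms):
    """Mean voltage (µV) of the samples whose time falls within [start_ms, end_ms]."""
    window = [v for t, v in zip(times_ms, voltages_uv) if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples fall inside the analysis window")
    return sum(window) / len(window)


def n170_repetition_effect(times_ms, first_uv, repeat_uv, window_ms=(150, 190)):
    """Repeated minus first-presentation mean amplitude in an assumed N170 window.

    The N170 is negative-going, so a positive value means the component was
    less negative (smaller) on the immediate repetition.
    """
    start, end = window_ms
    repeat_amp = mean_amplitude(times_ms, repeat_uv, start, end)
    first_amp = mean_amplitude(times_ms, first_uv, start, end)
    return repeat_amp - first_amp
```

Mean amplitude in a fixed window is less sensitive to noise than a single peak sample, which is one reason it is often preferred for repetition contrasts.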

Two recent studies have shown that conceptual knowledge can penetrate even earlier stages of visual recognition, as revealed by modulations of the P100 component (Abdel-Rahman & Sommer, 2008; Abdel-Rahman & Sommer, 2012). The P100 typically begins 60–90 ms after stimulus onset and peaks between 100 and 130 ms. It is elicited by any visual object, is sensitive to stimulus parameters such as contrast and spatial frequency, and is often considered an index of early visual processing (Itier & Taylor, 2004). Abdel-Rahman & Sommer (2008) used a two-part training paradigm in which participants learned semantic information about a class of complex novel objects. In the first part, all stimuli were associated with names and minimal semantic information (whether the item was real or fictitious). In the second part, participants listened to in-depth stories detailing an object’s function while viewing some stimuli, and to irrelevant stories while viewing the other stimuli. Thus, stimuli in both conditions were matched for naming, visual exposure, and amount of verbal information, differing only in whether the verbal information was informative about the object. The in-depth stories facilitated recognition of these objects when they were blurred in an identification task, and this effect was associated with an attenuated P100. Using a similar training paradigm, Abdel-Rahman & Sommer (2012) investigated the influence of in-depth semantic knowledge on the perception of faces. Consistent with their previous findings, faces associated with in-depth semantic knowledge elicited a reduced P100 relative to faces associated with only minimal semantic knowledge. Taken together, these studies suggest that the earliest stages of visual analysis are penetrable to influences from higher-order conceptual knowledge.
Interestingly, in-depth semantic learning had no influence on the N170 component for the faces in the Abdel-Rahman & Sommer (2012) study. This finding is consistent with the findings of Paller and colleagues (2000) and Kaufmann and Schweinberger (2008), and supports the suggestion that conceptual knowledge may influence the processes underlying the N170 only when stimuli are presented twice in rapid succession (Heisz & Shedden, 2009; Herzmann & Sommer, 2010).
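The P100 and N170 findings above rest on measuring a component's peak amplitude and latency within a post-stimulus window. The sketch below assumes a baseline-corrected, averaged waveform sampled at known time points; the function name and the simple maximum-sample rule are illustrative assumptions, and published studies may instead use mean amplitudes or other peak-picking procedures. Under this measure, an attenuated P100 would correspond to a smaller peak amplitude in the 100–130 ms range for the in-depth knowledge condition.

```python
def peak_in_window(times_ms, voltages_uv, start_ms, end_ms, polarity="positive"):
    """Return (latency_ms, amplitude_uv) of the extreme sample inside a window.

    polarity="positive" picks the maximum (e.g., P100);
    polarity="negative" picks the minimum (e.g., N170).
    """
    samples = [(t, v) for t, v in zip(times_ms, voltages_uv) if start_ms <= t <= end_ms]
    if not samples:
        raise ValueError("no samples fall inside the analysis window")
    if polarity == "positive":
        return max(samples, key=lambda tv: tv[1])
    if polarity == "negative":
        return min(samples, key=lambda tv: tv[1])
    raise ValueError("polarity must be 'positive' or 'negative'")
```

For example, `peak_in_window(times, wave, 100, 130)` would return the P100 latency and amplitude, and `peak_in_window(times, wave, 150, 200, polarity="negative")` an N170 estimate, given hypothetical `times` and `wave` lists.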

To summarize, although influences of conceptual knowledge on the magnitude of the N170 for faces are tenuous at best, the N170 repetition effect is reduced for faces with learned semantic knowledge. These results suggest that semantic knowledge may modulate facial representations such that the perceptual demands of identification are reduced. Additional work has shown that in-depth semantic knowledge can facilitate the early evaluation of stimulus features, as reflected by the P100 component. One possibility is that semantic knowledge modulates electrophysiological correlates of visual processing by attracting additional attention to faces or objects that have been associated with knowledge. However, training-induced increases in attention would be expected to increase the P100 (Hillyard & Anllo-Vento, 1998; Hopfinger, Luck, & Hillyard, 2004), not attenuate it as observed in these studies (Abdel-Rahman & Sommer, 2008; Abdel-Rahman & Sommer, 2012). Alternatively, conceptual knowledge may make the visual processes underlying the P100 more efficient. Such influences of conceptual knowledge on perception may operate by altering the perceptual representations formed for novel objects and faces during training, or by recruiting top-down feedback from higher-order semantic areas to visual cortical areas, thus offsetting the perceptual demands of visual recognition (Bar et al., 2006). The latter possibility is consistent with research showing that information propagates through the visual stream to parietal and prefrontal cortices extremely quickly (within 30 ms), allowing ample time for higher-level brain areas to feed back and modulate activity in visual cortex. The P100 thus likely reflects coordinated activity among multiple cortical areas extending beyond V1 (Foxe & Simpson, 2002).

Where in the Brain is Semantic Knowledge Represented?

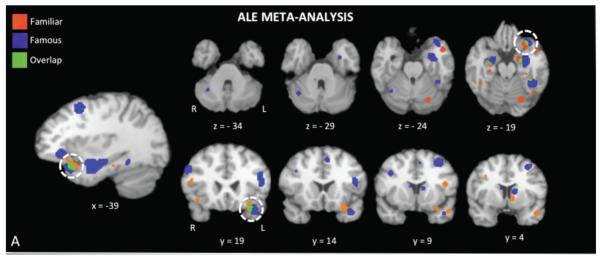

There is mounting evidence that a face patch in the ventral anterior temporal lobe (ATL) has the computational property of integrating complex perceptual representations of faces with socially important semantic knowledge (Olson et al., 2013). Neural responses in this face patch are sensitive to small perceptual differences that distinguish the identity of one novel face from another (Anzellotti et al., 2013; Kriegeskorte et al., 2007; Nestor et al., 2011). This region is up-regulated when a face is accompanied by certain types of conceptual knowledge, such as knowledge that makes the face conceptually unique (e.g., “This person invented television”; Ross & Olson, 2012), socially unique (a friend), or famous. This sensitivity to fame and friendship is depicted in Figure 4, which shows the results of a recent meta-analysis and empirical study of these attributes (Von Der Heide, Skipper, & Olson, 2013). These findings are consistent with a recent single-unit study in macaques demonstrating that neurons in the ventral ATL represent paired associations between facial identity and abstract semantic knowledge (Eifuku et al., 2010).

Figure 4.

Activations to famous and familiar faces from a random effects ALE meta-analysis. The white circle highlights activations to famous and familiar faces in the left anterior temporal lobe (Von Der Heide et al., 2013).

The ATL is also sensitive to non-face stimuli, albeit ones associated with social-emotional conceptual information. Skipper, Ross, and Olson (2011) trained participants to associate social or non-social concepts (e.g., ‘friendly’ or ‘bumpy’) with novel objects and later scanned participants while they viewed the objects alone (see Figure 4). Objects previously associated with social concepts, compared with non-social concepts, activated brain regions commonly engaged in social tasks, such as the amygdala, the temporal pole, and medial prefrontal cortex (see also Todorov, Gobbini, Evans, & Haxby, 2007). An additional study has shown that activity patterns in the ventral ATL carry information about non-social conceptual properties of everyday objects, such as where an object is typically found and how it is typically used (Peelen & Caramazza, 2012). The relative sensitivity of the ventral ATL to other conceptual object properties remains unexplored and warrants future research.
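Claims that activity patterns "carry information" about a property are typically tested with multivoxel pattern analysis. One simple, widely used variant is a correlation-based split-half analysis: if a region encodes a property, patterns for the same condition should correlate more strongly across independent halves of the data than patterns for different conditions. The sketch below illustrates only that logic; it is not a reconstruction of the analysis used by Peelen and Caramazza (2012), and the condition labels and voxel values in any usage are hypothetical.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length voxel patterns."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("patterns must have equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("patterns must not be constant")
    return sxy / (sxx * syy) ** 0.5


def split_half_information(half1, half2):
    """Mean within-condition r minus mean between-condition r across data halves.

    `half1` and `half2` map condition labels (e.g., hypothetical "kitchen" vs
    "garage" for typical location) to voxel patterns from independent halves
    of the data. A positive score suggests the patterns distinguish conditions.
    """
    if set(half1) != set(half2) or len(half1) < 2:
        raise ValueError("both halves need the same two or more conditions")
    conditions = sorted(half1)
    within = [pearson(half1[c], half2[c]) for c in conditions]
    between = [pearson(half1[a], half2[b]) for a in conditions for b in conditions if a != b]
    return mean(within) - mean(between)
```

In practice the score would be computed per participant and tested against zero; classifier-based decoding is a common alternative to the correlation approach shown here.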

Influences of Semantic Knowledge on Perception: Summary and Conclusions

To summarize, gaining semantic knowledge about objects and faces leads to behavioral and electrophysiological changes that are indicative of more efficient stimulus processing. The neural instantiation of these changes depends on several factors, including the particular stimuli being trained and the particular associations being formed. Because of this, there is no single region or network for this general process. Instead, investigators should carefully consider the types of associations being created and derive hypotheses about neural processing from the relevant literature.

It is common knowledge but worth reiterating that fMRI activations during a task do not imply a causal role in the visual or conceptual processing of the object at hand. Findings vary considerably across the literature, and only some activations appear consistently across studies that train participants to associate semantic knowledge with objects or faces. Consistent activations have been observed in the left IFG (James & Gauthier, 2004; Ross & Olson, 2012), which has been implicated in semantic retrieval and language production; the perirhinal cortex (Barense et al., 2011), which may aid in the recognition of meaningful objects characterized by multiple overlapping features; and the ventral ATL, which may integrate facial identity with person-specific conceptual knowledge (Olson et al., 2013; Von Der Heide et al., 2013). It is plausible that activity in these regions feeds back and biases processing in visual areas of the occipital lobe and the posterior temporal lobe. Plausible conduits of rapid feedback include two long-range white matter association tracts, the inferior fronto-occipital fasciculus and the inferior longitudinal fasciculus. The former runs between the frontal lobe and posterior occipital/anterior fusiform cortex, the latter from the amygdala and ventral anterior temporal lobe to ventral extrastriate cortex.

Perceiver Goals and Motivational Salience Modulate Conceptual Influences on Perception

One way that conceptual knowledge can influence visual processing is by making perceptual representations more motivationally relevant. Here we argue that the current state and goals of the perceiver are critical in determining what stimuli are considered motivationally relevant, and thus selected for visual prioritization (see Figure 1 for an illustration). Motivational relevance can be conferred by visual properties that are intrinsic to a stimulus, or may be derived through conceptual learning about the properties of a stimulus. For example, while an angry face coming towards us is always motivationally relevant, regardless of the individual, the retrieval of conceptual knowledge may be required for us to prioritize the visual processing of someone we recently learned is the CEO of our company. A comprehensive understanding of how conceptual knowledge influences visual processing will require a careful consideration of the current goals of the perceiver, and the motivational relevance of the stimulus at hand, as both of these factors have been shown to influence the allocation of perceptual resources.

Studies demonstrating that novel faces and objects associated with distinctive, emotional, or high-status associations are more easily recognized and dominate perceptual processing resources share a common feature: these associations likely made the perceptual representations motivationally relevant (Anderson et al., 2011; Collins, Blacker, & Curby, submitted; Ratcliff, Hugenberg, Shriver, & Bernstein, 2011). Similarly, the increased BOLD response in the fusiform face area (FFA; a bilateral region in the posterior fusiform gyrus) to novel objects and faces that have been associated with characteristics or names in training procedures (Gauthier, Tarr, Anderson, Skudlarski, & Gore, 1999; Gauthier & Tarr, 2002; Van Bavel, Packer, & Cunningham, 2008; Van Bavel, Packer, & Cunningham, 2011) may be partially attributable to the increased motivational relevance of these stimuli following training. For instance, Van Bavel and colleagues (2011) demonstrated that FFA activity was increased for faces arbitrarily assigned to an in-group, relative to out-group or unaffiliated faces, and that FFA activity for in-group faces predicted subsequent memory for those faces. These findings are consistent with the idea that motivationally relevant stimuli (in this case due to group membership) receive preferential processing resources, and that these effects can occur through top-down mechanisms in the absence of perceptual cues signifying emotional salience.

One compelling demonstration of how perceptual and conceptual features can interact with visual processing goals to influence the allocation of visual processing resources is the other-race effect (ORE). It has been argued that the ORE is a type of categorical perception, whereby other-race faces are perceived as more similar to one another because of their shared category membership. Some research has suggested that the ORE is partially due to the out-group status of other-race faces, which leaves perceivers less motivated to individuate them (Sporer, 2001). Consistent with this possibility, the racial category of other-race faces has been shown to be activated very rapidly during face perception (Cloutier, Mason, & Macrae, 2005; Ito & Urland, 2003; Mouchetant-Rostaing & Girard, 2003). Additionally, the ORE is attenuated for faces that are made more motivationally relevant through a shared university affiliation (Hehman, Mania, & Gaertner, 2010), and the encoding of same-race faces is reduced to the level of other-race faces when they are made less motivationally relevant by being presented on an impoverished background (Shriver, Young, Hugenberg, Bernstein, & Lanter, 2008).

When during perception do influences of racial category on face recognition arise? Behavioral findings suggest that other-race faces are encoded less configurally than same-race faces (Fallshore & Schooler, 1995; Hancock & Rhodes, 2008; Michel, Corneille, & Rossion, 2007; Michel, Rossion, Han, Chung, & Caldara, 2006; Sangrigoli & de Schonen, 2004). This suggestion has been corroborated by electrophysiological work showing that the N170 component is reduced for other-race relative to same-race faces (Balas & Nelson, 2010; Brebner, Krigolson, Handy, Quadflieg, & Turk, 2011; Caharel et al., 2011; He, Johnson, Dovidio, & McCarthy, 2009; Herrmann et al., 2007; Stahl, Wiese, & Schweinberger, 2008, 2010; Walker, Silvert, Hewstone, & Nobre, 2008). Importantly, processing goals shape the influence of race on the N170, with one study showing that, relative to same-race faces, the N170 is attenuated for other-race faces when participants attend to race, and enhanced when participants attend to identity (Senholzi & Ito, 2012).

Influences of race on configural processing and the N170 component can occur in the absence of perceptual cues signifying racial category. Using the composite task, it has been shown that racially ambiguous faces (faces that have neither stereotypically Black nor stereotypically White features) are perceived more holistically when categorized as belonging to the same race as the participant rather than to another race (Michel et al., 2007). Additionally, Caucasian participants showed an earlier N170 component when viewing Caucasian faces that they were told shared their nationality or university affiliation (Zheng & Segalowitz, 2013). Social-categorical knowledge has been shown to influence even earlier perceptual processes, such as luminance perception. In a particularly elegant study, Levin and Banaji (2006) demonstrated that people consistently misperceive the lightness of faces, such that faces with stereotypically Black features are perceived as darker than faces with stereotypically White features, even when luminance is tightly controlled. Furthermore, racially ambiguous faces are perceived as darker when they are paired with the label Black rather than White. Together, these findings indicate that social knowledge about the racial category of a face can bias the visual encoding of that face at early stages of perception.

General Discussion

The goal of this review was to synthesize the existing literature demonstrating the dynamic interplay between conceptual knowledge and visual perceptual processing. In doing so, we sought to address three questions that we consider fundamental to understanding higher-level vision. We consider each of these in turn.

How are objects represented by the visual system?

We have reviewed studies demonstrating that category knowledge, which is inherently conceptual, can penetrate early stages of visual analysis and engender changes to object representations such that category-relevant features are sensitized within the ventral visual stream. There may be fundamental information-processing constraints on the stimulus dimensions that can be used to infer category membership and bias perceptual representations. Future work should aim to understand the types and amounts of training that are sufficient for category knowledge to alter perceptual representations, and the constraints that variations in stimulus complexity may place on category learning.

Where in the brain is object-related conceptual knowledge represented, and how does the activation of conceptual information about an object unfold?

In answering this question, we first considered the embodied account of conceptual knowledge, which holds that concepts are embodied or instantiated in the same neural regions required for specific types of perception and action. This idea has been largely supported by behavioral and neuroimaging findings demonstrating that sensory and motor features of concepts are activated quickly and automatically. Although these findings are interesting in their own right, in that they support a tight coupling between conceptual and perceptual processing systems, it is not clear what role these embodied representations play in cognition. The degree to which concepts are embodied likely varies from person to person with sensory-motor experience of a given object, and with semantic processing demands. Future research should aim to understand the mechanisms through which individual differences in embodied cognition emerge, and how these embodied representations contribute to cognition.

Moreover, the embodied account of conceptual knowledge provides little insight into the neural representation of the in-depth and abstract conceptual knowledge that we frequently have for faces and objects. The few studies that have used training paradigms in which novel objects or faces are associated with in-depth knowledge have produced highly variable findings, with only some activations appearing consistently. Two areas that are consistently activated by faces accompanied by in-depth knowledge are portions of the ATL and the left IFG, which may reflect the automatic retrieval of concepts, although superior-polar ATL activations appear to be most closely associated with the processing of socially important concepts (Skipper, Ross, & Olson, 2011). It is worth reiterating that the neural regions subserving the recognition of meaningful stimuli will depend on (a) the particular stimuli (faces? objects? tools?) and (b) the particular associations linked to these stimuli. Thus, investigators should carefully consider the types of associations being created and derive hypotheses about neural processing from the relevant literature.

What are the consequences of accessing conceptual knowledge for perceptual decision-making about the visual world?

Modular, impenetrable views of perception have difficulty accounting for many of the findings reviewed in this paper. For example, findings that category learning can modify perceptual representations (De Baene et al., 2008; Folstein et al., 2012; Goldstone, 1994; Goldstone et al., 2001; Notman et al., 2005), that perceptual sensitivity (d’) is influenced by language (Meteyard et al., 2007), that social-categorical knowledge can influence luminance perception (Levin & Banaji, 2006), and that semantic knowledge can bias electrophysiological markers of pre-attentive visual processing (Abdel-Rahman & Sommer, 2008; Abdel-Rahman & Sommer, 2012; Holmes et al., 2009) are all incompatible with modular views in which perception is completely encapsulated from cognition. It remains unclear whether conceptual knowledge influences perceptual processing by modifying perceptual representations within visual cortex, or through top-down feedback from higher-order to sensory areas of the brain (Bar et al., 2006). One training study has demonstrated experience-dependent plasticity for tools within ventral visual cortex (Weisberg et al., 2007); however, it is unclear whether similar effects would generalize to non-tool objects, or to objects with which people have no motor experience.
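Because perceptual sensitivity (d’) carries part of the argument above, a worked version may help. In equal-variance signal detection theory, d’ = z(H) - z(FA), where H is the hit rate, FA is the false-alarm rate, and z is the inverse of the standard normal cumulative distribution function; unlike raw accuracy, d’ separates discriminability from response bias. The sketch below assumes raw trial counts and applies a log-linear correction (adding 0.5 to each count) so that rates of 0 or 1 do not yield infinite z-scores; that correction is one common convention, not necessarily the one used by Meteyard et al. (2007).

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Equal-variance SDT sensitivity: d' = z(hit rate) - z(false-alarm rate).

    Applies a log-linear correction (0.5 added to each cell) so that perfect
    hit or false-alarm rates still yield finite z-scores.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    return z(hit_rate) - z(fa_rate)


if __name__ == "__main__":
    # Hypothetical counts: 50 signal trials and 50 noise trials.
    print(round(d_prime(40, 10, 10, 40), 2))
```

A d’ of zero means signal and noise trials are indistinguishable; a shift in d’ between conditions, rather than a shift in response criterion, is what licenses the claim that sensitivity itself changed.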

Conclusions

Each of the bodies of literature reviewed above supports a tight coupling between conceptual and perceptual processing that is incompatible with strong modular views of perception (Pylyshyn, 1999). Conscious perception results from reverberation between feed-forward and top-down flows of information in the brain (Gilbert & Sigman, 2007). Early visual cortex (V1–V4) has been shown to respond to associative learning (Damaraju, Huang, Barrett, & Pessoa, 2009), and brain areas implicated in learning and memory have been shown to have perceptual capacities (see Graham, Barense, & Lee, 2010, for a review). A more fruitful approach to understanding visual cognition may be to investigate the representations housed in different cortical areas, rather than the putative specialized tasks performed by those areas (Cowell, Bussey, & Saksida, 2010). If one abandons the assumption that perception and cognition are encapsulated in functionally discrete processing regions, then it is not clear that cognition influencing perception is any more controversial than cognition influencing cognition. The dynamic interactions between processes considered conceptual and those considered perceptual remain a relatively underexplored area of psychology. We hope that future research will further our understanding of the dynamic interplay between conceptual knowledge and visual object processing.

Acknowledgements

We would like to thank Kim Curby for her thoughtful suggestions and feedback on a preliminary version of this paper. We would also like to thank Laura Skipper for her comments on embodied cognition. This work was supported by a National Institutes of Health grant to I. Olson [R01 MH091113]. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health or the National Institutes of Health.

References

- Abdel Rahman R, Sommer W. Seeing what we know and understand: how knowledge shapes perception. Psychonomic Bulletin & Review. 2008;15:1055–63. doi: 10.3758/PBR.15.6.1055.

- Abdel-Rahman R, Sommer W. Knowledge scale effects in face recognition: An electrophysiological investigation. Cognitive, Affective, & Behavioral Neuroscience. 2012;12:161–174. doi: 10.3758/s13415-011-0063-9.

- Acheson DJ, Hamidi M, Binder JR, Postle BR. A common neural substrate for language production and verbal working memory. Journal of Cognitive Neuroscience. 2011;23:1358–67. doi: 10.1162/jocn.2010.21519.

- Althoff RR, Cohen NJ. Eye-movement-based memory effect: A reprocessing effect in face perception. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25:997–1010. doi: 10.1037//0278-7393.25.4.997.

- Anderson E, Siegel EH, Bliss-Moreau E, Barrett LF. The visual impact of gossip. Science. 2011;332:1446–1448. doi: 10.1126/science.1201574.

- Angeli A, Davidoff J, Valentine T. Face familiarity, distinctiveness, and categorical perception. Quarterly Journal of Experimental Psychology. 2008;61:690–707. doi: 10.1080/17470210701399305.

- Anzellotti S, Fairhall SL, Caramazza A. Decoding Representations of Face Identity That are Tolerant to Rotation. Cerebral Cortex. 2013:1–8. doi: 10.1093/cercor/bht046.

- Arévalo AL, Baldo JV, Dronkers NF. What do brain lesions tell us about theories of embodied semantics and the human mirror neuron system? Cortex. 2012;48:242–54. doi: 10.1016/j.cortex.2010.06.001.

- Arguin M, Bub D, Dudek G. Shape integration for visual object recognition and its implication in category-specific visual agnosia. Visual Cognition. 1996;3:221–275.

- Balas B, Nelson CA. The role of face shape and pigmentation in other-race face perception: an electrophysiological study. Neuropsychologia. 2010;48:498–506. doi: 10.1016/j.neuropsychologia.2009.10.007.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmidt AM, Dale AM, Hamalainen MS, et al. Top-down facilitation of visual recognition. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:449–454. doi: 10.1073/pnas.0507062103.

- Barense MD, Henson RNA, Graham KS. Perception and conception: temporal lobe activity during complex discriminations of familiar and novel faces and objects. Journal of Cognitive Neuroscience. 2011;23:3052–67. doi: 10.1162/jocn_a_00010.

- Barense MD, Rogers TT, Bussey TJ, Saksida LM, Graham KS. Influence of conceptual knowledge on visual object discrimination: Insights from semantic dementia and MTL patients. Cerebral Cortex. 2010;20:2568–2582. doi: 10.1093/cercor/bhq004.

- Barsalou LW. Perceptual symbol systems. Behavioral and Brain Sciences. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- Barsalou LW. Grounded Cognition. Annual Review of Psychology. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- Barsalou LW, Simmons WK, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends in Cognitive Sciences. 2003;7(2):84–91. doi: 10.1016/s1364-6613(02)00029-3.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI responses to video and point-light displays of moving humans and manipulable objects. Journal of Cognitive Neuroscience. 2003;15:991–1001. doi: 10.1162/089892903770007380.

- Beilock SL, Lyons IM, Mattarella-Micke A, Nusbaum HC, Small SL. Sports experience changes the neural processing of action language. Proceedings of the National Academy of Sciences of the United States of America. 2008;105:13269–73. doi: 10.1073/pnas.0803424105.

- Bentin S, Deouell LY. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology. 2000;17:35–54. doi: 10.1080/026432900380472.

- Blechert J, Sheppes G, Di Tella C, Williams H, Gross JJ. See what you think: Reappraisal modulates behavioral and neural responses to social stimuli. Psychological Science. 2012;23:346–53. doi: 10.1177/0956797612438559.

- Brebner JL, Krigolson O, Handy TC, Quadflieg S, Turk DJ. The importance of skin color and facial structure in perceiving and remembering others: An electrophysiological study. Brain Research. 2011;1388:123–33. doi: 10.1016/j.brainres.2011.02.090.

- Bruce V. Influences of familiarity on the processing of faces. Perception. 1986;15:387–397. doi: 10.1068/p150387.

- Bruner J, Goodman CC. Value and need as organizing factors in perception. Journal of Abnormal and Social Psychology. 1947;42:33–44. doi: 10.1037/h0058484.

- Burton AM, Bruce V, Hancock PJB. From pixels to people: A model of familiar face recognition. Cognitive Science. 1990;23:1–31.

- Buttle HM, Raymond JE. High familiarity enhances change detection for face stimuli. Perception and Psychophysics. 2003;65:1296–1306. doi: 10.3758/bf03194853.

- Caharel S, Poiroux S, Bernard C, Thibaut F, Lalonde R, Rebai M. ERPs associated with familiarity and degree of familiarity during face recognition. International Journal of Neuroscience. 2002;112:1499–1512. doi: 10.1080/00207450290158368.

- Caharel S, Montalan B, Fromager E, Bernard C, Lalonde R, Mohamed R. Other-race and inversion effects during the structural encoding stage of face processing in a race categorization task: an event-related brain potential study. International Journal of Psychophysiology. 2011;79:266–71. doi: 10.1016/j.ijpsycho.2010.10.018.

- Calvo-Merino B, Glaser DE, Grèzes J, Passingham RE, Haggard P. Action observation and acquired motor skills: An fMRI study with expert dancers. Cerebral Cortex. 2005;15:1243–1249. doi: 10.1093/cercor/bhi007.

- Casasola M. Can language do the driving? The effect of linguistic input on infants’ categorization of support spatial relations. Developmental Psychology. 2005;41:183–192. doi: 10.1037/0012-1649.41.1.183.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nature Neuroscience. 1999;2:913–919. doi: 10.1038/13217.

- Chao LL, Weisberg J, Martin A. Experience dependent modulation of category-related cortical activity. Cerebral Cortex. 2002;12:545–551. doi: 10.1093/cercor/12.5.545.

- Chatterjee A. Disembodying cognition. Language and Cognition. 2011;2:1–27. doi: 10.1515/LANGCOG.2010.004.

- Clifford A, Holmes A, Davies IRL, Franklin A. Color categories affect pre-attentive color perception. Biological Psychology. 2010;85:275–282. doi: 10.1016/j.biopsycho.2010.07.014.

- Cloutier J, Mason MF, Macrae CN. The perceptual determinants of person construal: Reopening the social-cognitive toolbox. Journal of Personality and Social Psychology. 2005;88:885–894. doi: 10.1037/0022-3514.88.6.885.

- Collins JA, Blacker KJ, Curby KM. Learned emotional associations (eventually) influence visual processing. (submitted)

- Collins JA, Curby K. Conceptual knowledge attenuates viewpoint dependency in visual object recognition. Visual Cognition. 2013. doi: 10.1080/13506285.2013.836138.

- Cowell RA, Bussey TJ, Saksida LM. Functional dissociations within the ventral object processing pathway: Cognitive modules or a hierarchical continuum? Journal of Cognitive Neuroscience. 2010;22:2460–2479. doi: 10.1162/jocn.2009.21373.

- Curby KM, Hayward G, Gauthier I. Laterality effects in the recognition of depth-rotated novel objects. Cognitive, Affective & Behavioral Neuroscience. 2004;4(1):100–11. doi: 10.3758/cabn.4.1.100. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/15259892.

- Damaraju E, Huang Y-M, Barrett LF, Pessoa L. Affective learning enhances activity and functional connectivity in early visual cortex. Neuropsychologia. 2009;47:2480–7. doi: 10.1016/j.neuropsychologia.2009.04.023.

- De Baene W, Ons B, Wagemans J, Vogels R. Effects of category learning on the stimulus selectivity of macaque inferior temporal neurons. Learning and Memory. 2008;15:717–727. doi: 10.1101/lm.1040508.

- Dixon MJ, Bub DN, Arguin M. Semantic and visual determinants of face recognition in a prosopagnosic patient. Journal of Cognitive Neuroscience. 1998;10:362–376. doi: 10.1162/089892998562799.

- Eger E, Schweinberger SR, Dolan RJ, Henson RN. Familiarity enhances invariance of face representations in human ventral visual cortex: fMRI evidence. NeuroImage. 2005;26(4):1128–39. doi: 10.1016/j.neuroimage.2005.03.010.

- Eifuku S, Nakata R, Sugimori M, Ono T, Tamura R. Neural correlates of associative face memory in the anterior inferior temporal cortex of monkeys. The Journal of Neuroscience. 2010;30(45):15085–96. doi: 10.1523/JNEUROSCI.0471-10.2010.

- Eimer M. Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clinical Neurophysiology. 2000;111:694–705. doi: 10.1016/s1388-2457(99)00285-0.

- Fallshore M, Schooler JW. Verbal vulnerability of perceptual expertise. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1995;21:1608–23. doi: 10.1037//0278-7393.21.6.1608. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/7490581.

- Folstein J, Gauthier I, Palmeri TJ. How category learning affects object representations: Not all morphspaces stretch alike. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2012;38:807–820. doi: 10.1037/a0025836.