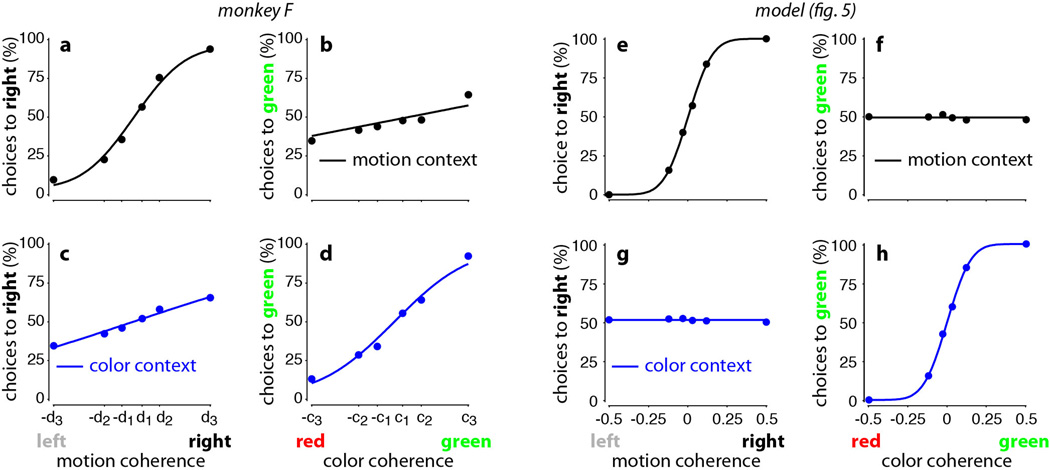

Extended Data Figure 2. Psychophysical performance for monkey F and for the model.

a-d, Psychophysical performance for monkey F, for motion (top) and color contexts (bottom), averaged over 60 recording sessions (123,550 trials). Performance is shown as a function of motion (left) or color (right) coherence in each behavioral context. As in Fig. 1c-f, coherence values along the horizontal axis correspond to the average low, intermediate and high motion coherence (a,c) and color coherence (b,d) computed over all behavioral trials. The curves are fits of a behavioral model (see Suppl. Information, section 4). e-h, ‘Psychophysical’ performance for the trained neural-network model (Figs. 4–6), averaged over a total of 14,400 trials (200 repetitions per condition). Choices were generated based on the output of the model at the end of the stimulus presentation: an output larger than zero corresponds to a choice to the left target (choice 1), and an output smaller than zero corresponds to a choice to the right target (choice 2). We simulated model responses to inputs with motion and color coherences of 0.03, 0.12, and 0.50. The variability in the input (i.e. the variance of the underlying Gaussian distribution) was chosen such that the performance of the model for the relevant sensory signal qualitatively matches the performance of the monkeys. As in Fig. 1c-f, performance is shown as a function of motion (left) or color (right) coherence in the motion (top) and color contexts (bottom). Curves are fits of a behavioral model (as in a-d and in Fig. 1c-f). In each behavioral context, the relevant sensory input affects the model’s choices (e, h), but the irrelevant input does not (f, g), reflecting successful context-dependent integration. In fact, the model output essentially corresponds to the bounded temporal integral of the relevant input (not shown) and is completely unaffected by the irrelevant input.
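
The choice rule and trial counts described for panels e-h can be illustrated with a minimal sketch. This is not the trained recurrent network of Figs. 4–6; it is a toy stand-in that integrates only the relevant input, clips the running sum to a bound, and reads out the choice from the sign of the final output. The signed coherence levels (three magnitudes in each direction), the step count `n_steps`, the noise level `noise_sd`, the bound `bound`, and all function names are assumptions made for illustration. With six signed levels per modality and two contexts, 72 conditions at 200 repetitions each give the 14,400 trials stated above.

```python
import random
from itertools import product

COHERENCES = (0.03, 0.12, 0.50)   # magnitudes given in the caption
# Assumption: each magnitude occurs in both directions (positive favors choice 1).
SIGNED = tuple(s * c for c in COHERENCES for s in (-1, 1))
CONTEXTS = ("motion", "color")
REPS = 200                        # repetitions per condition, as in the caption


def simulate_choice(motion, color, context, rng,
                    n_steps=50, noise_sd=0.5, bound=1.0):
    """Toy model: bounded temporal integral of the relevant input only.

    n_steps, noise_sd and bound are hypothetical values, not taken from the paper.
    """
    relevant = motion if context == "motion" else color
    dt = 1.0 / n_steps
    x = 0.0
    for _ in range(n_steps):
        # Drift from the relevant coherence plus Gaussian input noise.
        x += relevant * dt + noise_sd * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        # Keep the integrated value within [-bound, bound].
        x = max(-bound, min(bound, x))
    # Output > 0 -> choice 1 (left target); output < 0 -> choice 2 (right target).
    return 1 if x > 0 else 2


def run_experiment(seed=0):
    """Simulate every (context, motion, color) condition REPS times."""
    rng = random.Random(seed)
    trials = []
    for context, motion, color in product(CONTEXTS, SIGNED, SIGNED):
        for _ in range(REPS):
            choice = simulate_choice(motion, color, context, rng)
            trials.append((context, motion, color, choice))
    return trials


def fraction_choice1(trials, context, axis):
    """Fraction of choice-1 trials per signed coherence of `axis` in `context`."""
    idx = 1 if axis == "motion" else 2
    counts = {}
    for trial in trials:
        if trial[0] != context:
            continue
        n, k = counts.get(trial[idx], (0, 0))
        counts[trial[idx]] = (n + 1, k + (trial[3] == 1))
    return {coh: k / n for coh, (n, k) in sorted(counts.items())}


if __name__ == "__main__":
    trials = run_experiment()
    print(len(trials))                                   # number of simulated trials
    print(fraction_choice1(trials, "motion", "motion"))  # relevant input (cf. panel e)
    print(fraction_choice1(trials, "motion", "color"))   # irrelevant input (cf. panel f)
```

In this sketch the irrelevant input has no effect by construction, whereas in the trained network the same insensitivity emerges from the learned dynamics.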